The State of Enterprise Data & AI

Executive Summary

Picture a star athlete. Everyone wants to recruit them. Teams fight to sign them. They make the roster, get the jersey, show up to practice.

But when game time comes? They’re benched. That’s the current state of enterprise AI.

What explains the gap between expectations and reality? And if AI keeps failing to deliver on its promise, how long will it be until the investments stop pouring in?

There’s no doubt that the enthusiasm is real: nearly 84% of organizations are fully bought into AI or actively experimenting with it. Yet only 17% report that AI is operationalized and driving business value.

That 67-point gap (84% minus 17%) is what we call the AI Value Chasm: the growing distance between how fast we’re building with AI and how little value we’re actually seeing from it at scale.

And as we enter 2026, investors, executives, and finance teams alike will be scrutinizing that gap more closely. IDC predicts that 70% of Forbes Global 2000 companies will soon make ROI analysis a prerequisite for any new AI investment.

The question will shift from “Should we do AI?” to “Why can’t we get AI to do meaningful work?” and eventually, “Is it even worth the investment?”

This will be the year that shift begins. And the difference between those who continue to lean into AI and those who abandon it will come down to one thing: context.

Context is the shared definitions, data quality, governance bridges, and semantic understanding that let AI understand your business. Without context infrastructure, AI is just expensive pattern matching that can’t drive real outcomes. But with it, AI becomes a business driver, partner, and innovator.

This report is designed to dig into the factors that contribute to context readiness. We’ll identify the top challenges with scaling AI, the hesitations that keep data leaders up at night, and the opportunities for improvement that are right under our noses.

Our hope is that you walk away feeling confident about how to harness context to make AI actually work, so you can stop fielding questions about AI and start showing its value – and your own.

Methodology

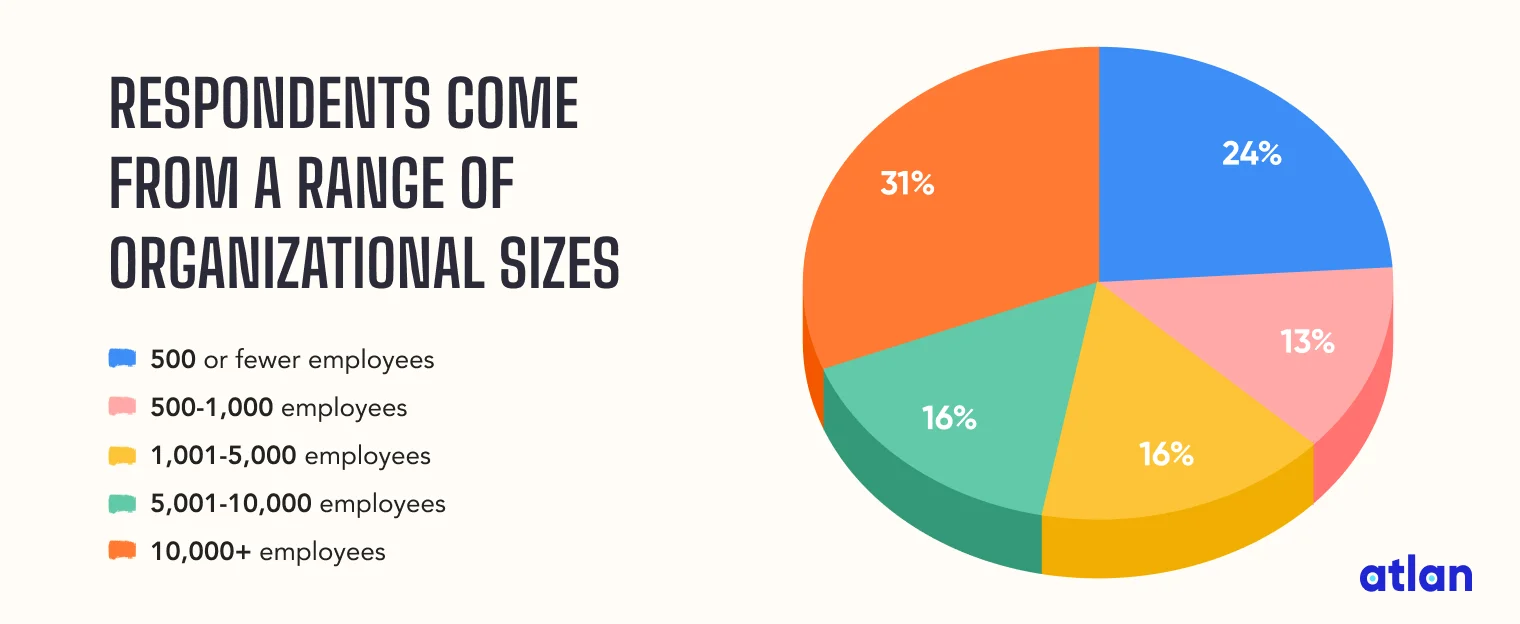

This report is based on a survey of 561 data professionals from 50+ industries and various countries. Roughly three in five respondents (61%) are data leaders or executives, while 35% are practitioners. The remaining 4% are consultants.

The State of Enterprise Data & AI respondents come from a range of organizational sizes. Image by Atlan.

The most represented industries are technology (34%), financial services (24%), healthcare and life sciences (9.5%), and media and entertainment (5%).

Finding #1: AI Is Everywhere, Value Is Nowhere

You don’t need to look far back in history to see the trend line of new technologies. It begins with early adopters who become enthusiastic evangelists, followed by the cautious optimism of the early majority, which gives way to full-blown hype and promise.

But the ambition of a technological wave often outruns the foundations beneath it.

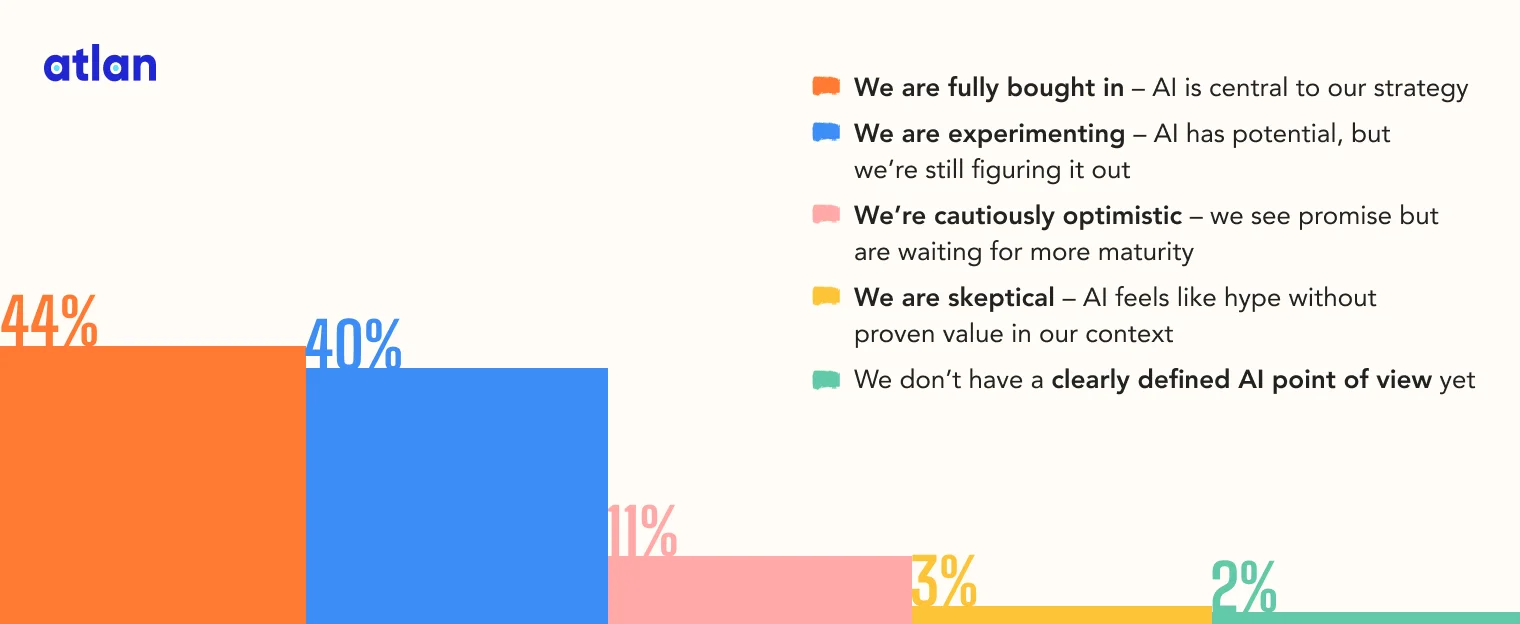

When asked about their organization’s mindset towards AI, respondents said:

The State of Enterprise Data & AI: organizations’ mindset towards AI. Image by Atlan.

Yet despite all the enthusiasm for AI as a strategic pillar, adoption is lagging behind:

- 24% of respondents say they’re still in the pilot or planning stage

- 36% say they’ve built a few pilots, with limited traction

- 24% are scaling across functions with traction

- 17% have operationalized it and are driving business value

As for AI deployment, 21% of respondents’ organizations have deployed nothing, 33% have launched only a few small-scale projects, and just 5% have deployed “nearly all” of what they’ve built.

Clearly, there’s a mismatch between what companies deploy and what they get out of it: while 79% of respondents say their organization has deployed at least a few AI projects, only 17% are seeing business impact.

In other words, nearly 4 in 5 organizations have AI on the bench, but fewer than 1 in 5 are getting it to actually play. And if AI isn’t playing, it’s not putting points on the board. This is where most organizations sit today: multiple AI initiatives running without anything to show for it.

What’s Blocking AI Operationalization?

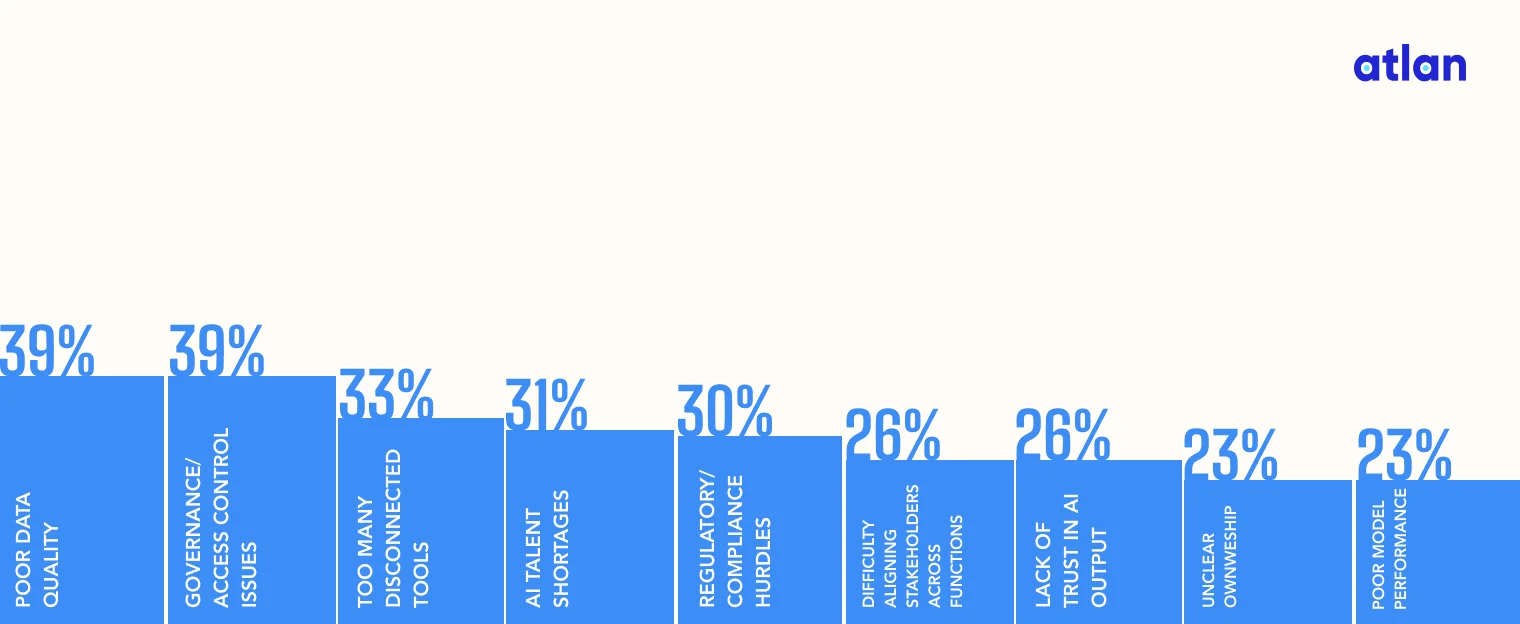

If so many companies are invested in AI, what’s stopping them from scaling it?

The State of Enterprise Data & AI: what’s stopping organizations from scaling AI. Image by Atlan.

These responses show that the models are often not what blocks AI from scaling. Instead, the people and the data itself – or the way it’s managed – present bigger barriers.

Why It Matters

AI pilots aren’t a win anymore – most organizations have reached that point and consider it table stakes.

The gap lies in the transition from “we built it” to “it drives business outcomes.”

While 83% struggle with the basics, the 17% who’ve moved AI into production are pulling further ahead every quarter. The gap isn’t closing – it’s widening. And the faster AI develops, the harder it will be to catch up.

Finding #2: Context Gaps Are Multifaceted

The growing gap between scalable, production-ready AI and continued piloting comes down to context. For those who struggle to get AI off the bench and into the game, missing context – not the models, the developers, or even the level of investment – is often to blame.

And it bears out in the data: 97% of organizations have context gap issues.

The AI context gap is the space between what AI is taught and what humans just know – the edge cases, the unwritten rules, the judgment calls that can only be made by drawing on years of experience. That nearly every organization struggles with it is a serious problem for AI initiatives.

If humans are the original enterprise “agents,” then adding AI agents alongside them means onboarding and training those agents the way you would a new hire: with context. That is more intensive and multifaceted than simply feeding AI a list of business terms. The average organization faces 2.69 context gaps, and 56% report having three or more. What do those gaps actually look like?

The Three Types of Context Gaps

Definition Gaps

Definition gaps concern alignment and accountability. They include:

- Lack of shared definitions across teams: “We can’t even agree on what ‘customer churn’ means.”

- Unclear context ownership: “Nobody is accountable for managing business context.”

Quality Gaps

Quality gaps relate directly to the data and AI models. These include:

- Poor data quality: “We can’t trust our training data or AI inputs.”

- Lack of business context: “AI understands language, but not our specific business.”

Trust Gaps

Trust gaps show up not just in AI outputs, but also in AI’s perceived value and impact within the organization. They include:

- Hallucinations making outputs unreliable: “I end up constantly checking AI’s work.”

- Hard to align stakeholders: “Our leadership team can’t agree on success metrics and use cases, which is delaying our projects.”

- Unclear ROI: “We’re rolling out AI initiatives, but can’t tell if they’re really making a difference to the business.”

Of these, survey respondents most frequently cited trust gaps, indicating anxiety about the downstream impacts of AI without context. Nearly half (45%) say hallucinations undermine their confidence in LLMs, and more than a quarter (26%) are concerned about inaccurate AI responses stemming from a lack of business context.

Why It Matters

You wouldn’t put a player into the game if you didn’t trust their competence on the field. The same goes for AI – if you can’t trust it, you won’t use it. And that trust is built through context.

Context is the invisible infrastructure AI needs to function accurately and reliably. Without it:

- AI outputs are untrustworthy

- Teams can’t align on basic definitions

- Stakeholders can’t agree on priorities

- Nobody can measure success

But with it:

- AI is able to act autonomously without requiring constant quality checks

- Teams can make fast, informed decisions, and proactively address issues

- Stakeholders can align and act with greater clarity

- Success metrics are clearly defined, making ROI easier to understand

The first step is identifying, prioritizing, and making a plan to close context gaps, so that context becomes the driver of AI, not the failure point.

Finding #3: Valid Security Concerns Hide Execution Blind Spots

The proliferation of data regulations that exist across industries and jurisdictions means that security is always top of mind for data teams – it has to be. Not only can companies’ bottom lines take a hit when they incur a fine, but the reputational damage from mishandling data could have even more lasting effects.

But the stakes are even higher with AI. Organizations need a way to ensure AI agents and systems are accessing and using data compliantly, which is much more difficult when AI can consume massive volumes of data in milliseconds. So it’s easy to understand why 52% of respondents cite security and access to sensitive data as a top concern for AI implementation.

As Andrew Reiskind, Chief Data Officer at Mastercard, said during Atlan Re:Govern, “As we move to AI agents, my job is getting ever more complex. The speed of transactions is increasing, the speed of your life is increasing, but that also means the speed of fraudsters is increasing. And in order to fight that fraud, I need the right context.”

Still, while governance and access control loom large in the AI conversation, just 39% report that they’re actually blocking AI scalability. And only 30% say the same about regulations and compliance. Is the imagined threat to data security greater than the reality? Or is it masking other executional tensions?

Security vs. Execution

Security concerns are valid. But they may also be standing in for harder cross-functional issues that need attention in their own right. For instance:

- Why do AI outputs feel unreliable? → Context gaps

- Why can’t teams trust the data and AI outputs? → Data quality problems

- Why can’t stakeholders align? → Definition misalignment

- Why can’t anyone measure success? → ROI criteria are unclear

It’s true that security underpins all data operations. But having the tough conversations to address reliability, accuracy, alignment, and measurement may naturally help solve security issues. After all, you can’t secure what you can’t define.

Why It Matters

Many organizations treat security as the defining factor for AI readiness – you can’t scale until you’ve figured it out. But that thinking is one-dimensional. Failing to address the other issues surrounding context and readiness will be the greatest limiting factor in AI implementation.

To revisit our sports analogy, a coach may choose to bench a player because they missed practice – only to find that when they’re put in the game, the biggest issue is not their lack of practice time, but rather their lack of communication skills. It turns out the most obvious issue is not necessarily the most limiting one.

Security is also a context problem. You can’t protect data and AI inputs without knowing their contents. You can’t implement access controls without understanding data semantics. And you can’t govern data at enterprise scale without shared definitions. Starting with context helps clarify the unknowns, so the rest can fall into place.

Finding #4: Context Experts are Sidelined

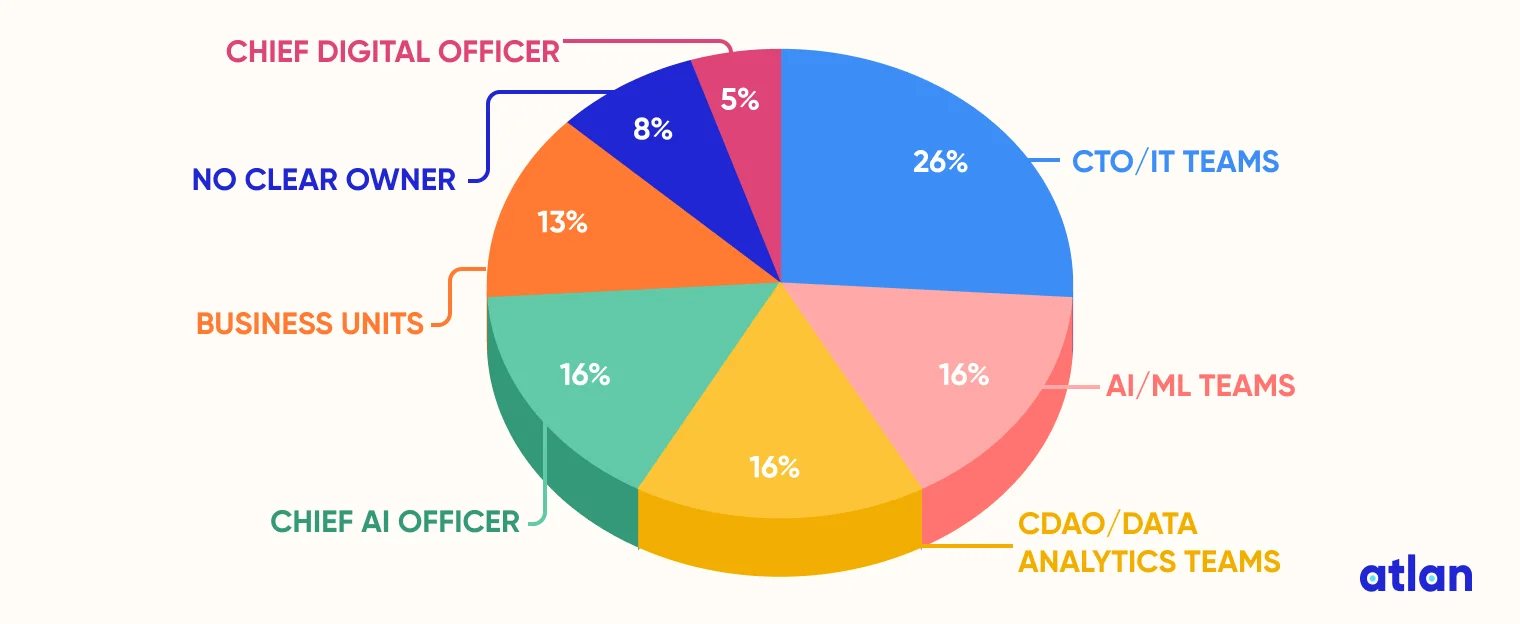

You wouldn’t implement a governance framework without the Governance team, or have the Analytics team spearhead an engineering project. But respondents told us that’s exactly what’s happening with AI.

Only 16% of organizations have AI/ML teams leading AI initiatives – and 8% have no clear owner at all.

When asked who the primary drivers of AI initiatives are in their organizations, respondents report:

The State of Enterprise Data & AI: the primary drivers of AI initiatives in respondents’ organizations. Image by Atlan.

Who Should Lead AI?

While IT teams have a firm grasp of how data flows through their organization and the infrastructure that supports it, AI/ML teams focus primarily on building and deploying models, running experiments, and managing training pipelines, work that sits apart from IT’s day-to-day operations. Tasking IT with driving AI initiatives risks spreading the team too thin and limiting AI momentum.

This isn’t to say deploying production-ready AI is a one-team effort. But ownership should rest primarily with the teams that have a business-oriented vision and the context to execute it.

The Leadership Vision

Of the 16% of respondents who report having deployed most or nearly all of their AI projects, 52% say an executive sponsor (CxO) is leading the initiative. And of that executive-led group, 87% report that they’ve operationalized AI and are seeing value, or are scaling it across functions with traction.

Having a company leader driving AI initiatives forward helps build the business case and vision for implementation. They can amplify wins, identify emerging areas of opportunity, and advocate for budget and training resources. But the foundational layer must be effective as well.

The Context-Driven Execution

Nearly a third (30%) of the survey respondents who have deployed most or nearly all of their AI projects say those projects are driven by AI/ML teams or business unit leaders – and 89% have operationalized or are scaling AI.

What do these stakeholders have that IT teams may not? Context. AI or domain-based teams often either have a view into or outright own the foundational context AI needs, including:

- Data quality and availability

- Shared definitions and semantic understanding

- Governance and access control

- Cross-functional stakeholder alignment

These inputs are critical for ensuring that AI outputs are accurate, reliable, and valuable to the business, even as AI agents and systems scale.

Why It Matters

When the people who own context don’t lead AI, context gaps are inevitable. Companies that bypass data teams are likely to deal with the problems that stem from lack of context: confusion, inconsistency, and frustration when AI projects get stuck in pilot.

Starting an AI project with IT may neglect the context-gathering needed to make it effective at scale, not to mention the leadership vision that helps steer the initiative and align the right stakeholders. Like a coach with a game plan, starting with a vision and context helps close context gaps so that AI gets off the bench and into production faster.

Finding #5: Investments Are Shifting from Foundation to Intelligence

While all organizations recognize the need for foundational capabilities, the difference between those stuck in the pilot stage and those in production comes down to how they distribute investments across three distinct layers.

The foundation layer provides secure infrastructure, governance, quality, and metadata context for all models and applications. It comprises:

- Data governance, access control, and metadata management

- Data quality and observability

- Cloud data lakehouse/warehouse infrastructure

- Unstructured metadata management (e.g. PDFs, images, audio)

- AI governance (risk, ethics, policy)

The translation layer maps human intent to data queries and model calls. It includes:

- LLM orchestration and RAG pipelines

- AI observability (drift, accuracy, feedback loops)

- Use of foundation models (e.g., OpenAI’s GPT models, Claude, Mistral)

The application layer is where users interact with data that’s powered by the underlying foundation and translation layers. It’s composed of:

- AI agents or copilots in business apps (e.g., Glean, SAP Joule)

- Talk-to-data interfaces (e.g. Snowflake Cortex, Databricks Genie)

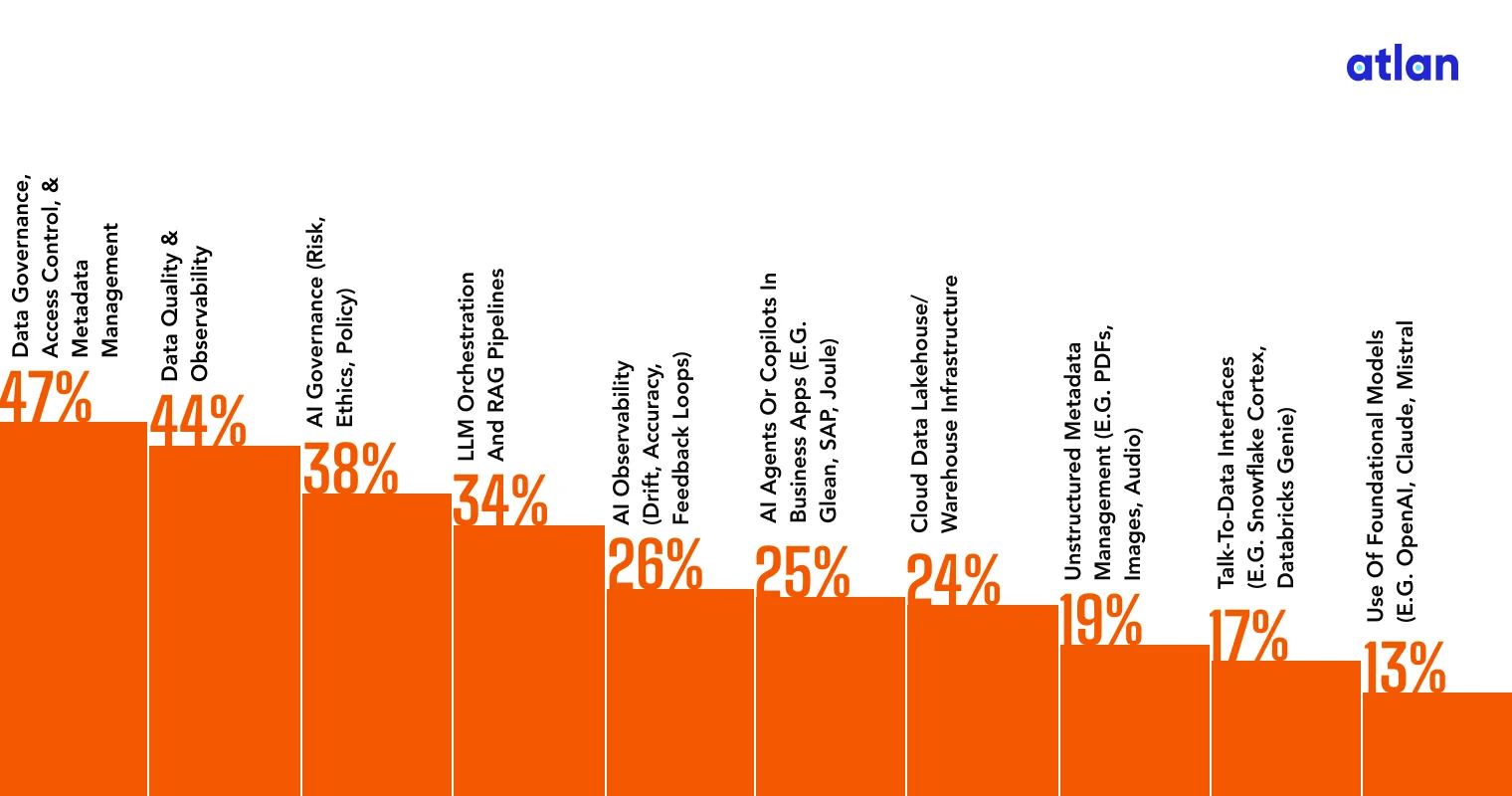

Organizations’ top investment categories tell a clear story of evolution:

The State of Enterprise Data & AI: organizations’ top investment categories. Image by Atlan.

This shows a clear shift from investing in the foundation layer for AI toward the translational and application layers. As organizations begin to move AI initiatives into production, they need to focus less on laying the groundwork, and more on building the infrastructure that will allow them to scale. Forward-looking investments in AI governance, orchestration, agents, and conversational interfaces are how they’ll get there.

Why It Matters

Your 2026 budget allocation will determine whether you’re still in the pilot stage in 2027 or are showcasing production wins. Data leaders face a critical decision: continue perfecting the foundation, or accept that governance with strong AI activation capabilities beats perfect governance that blocks AI access.

Shifting from AI foundations to translation and application is a necessity for the future of AI initiatives. Organizations still concentrating the majority of their budget on data governance and quality are training a legacy team at the expense of the next generation of players.

Meanwhile, forward-looking teams are investing in LLM orchestration, AI agents, and talk-to-data interfaces that deliver immediate value to business users and build the scaffolding for scalability. The gap between these two approaches compounds every quarter, creating a competitive disadvantage for those who wait too long to shift.

The most successful AI-driven organizations know that reducing the percentage (not the absolute dollars) spent on foundation allows them to fund the capabilities that actually deliver AI value. These teams recognize that unstructured data management and AI observability can be the difference between AI that works on spreadsheets and AI that understands the contracts, emails, and documents where real business knowledge lives.

What Context-Ready Teams Do Differently

There’s a lot of talk about AI readiness, and with good reason – the right people, systems, and processes must be in place in order to be successful. But the real key to AI readiness is context readiness – because without context there is no scalable AI.

But what does it really mean to be context-ready? Here’s what companies that are doing it right have in common:

1. They Own Their Context Infrastructure

The enterprise context layer may not yet be ubiquitous, but it will be what separates AI-native companies from those stuck in the pilot stage. Organizations that get this right have:

- Unified definitions across all teams that reflect how the organization actually thinks and operates

- Data quality checks built into pipelines, not bolted on afterward, to ensure reliability and accuracy

- Clear ownership with data teams at the center and an executive sponsor to support

- Governance that extends seamlessly to AI and allows for local ownership
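To make “built into pipelines, not bolted on afterward” concrete, here is a minimal Python sketch of a quality gate that runs as a pipeline step and stops bad records before any AI system consumes them. The check names, the record fields (`customer_id`, `revenue`), and the `quality_gate` and `run_pipeline` functions are hypothetical illustrations, not part of any Atlan product or specific library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Check:
    """A named rule that a single record must satisfy."""
    name: str
    passes: Callable[[dict], bool]


# Hypothetical rules; real checks would come from your own data contracts.
DEFAULT_CHECKS = (
    Check("customer_id is present", lambda r: bool(r.get("customer_id"))),
    Check("revenue is non-negative", lambda r: r.get("revenue", 0) >= 0),
)


class QualityGateError(Exception):
    """Raised to stop the pipeline when any record fails a check."""


def quality_gate(records: list[dict], checks=DEFAULT_CHECKS) -> list[dict]:
    """Pass records through unchanged if all checks hold; otherwise fail fast."""
    failures = [
        (i, check.name)
        for i, record in enumerate(records)
        for check in checks
        if not check.passes(record)
    ]
    if failures:
        raise QualityGateError(f"{len(failures)} failed check(s): {failures}")
    return records


def run_pipeline(records: list[dict]) -> list[dict]:
    # The gate is a pipeline step, so bad data never reaches downstream AI consumers.
    validated = quality_gate(records)
    return validated  # e.g., hand off to an embedding or RAG step here (not shown)
```

The design choice is to fail loudly inside the pipeline rather than flag issues in a report afterward, so unreliable inputs never reach a model in the first place.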

2. They Invest in the Full Stack

As AI becomes more advanced, so will the tooling it needs to work. Already, organizations that invest in capabilities like AI agents and talk-to-data interfaces are pulling ahead of those solely focused on data governance. Here’s what they focus on:

- A three-tiered approach that encompasses the foundation, translation, and application layers

- Spreading budget across the three layers, instead of prioritizing just the foundation layer

- LLM orchestration that connects AI to governed data

- Semantic layers with machine-readable context that make data understandable by and accessible to AI
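One way to picture a semantic layer with machine-readable context is a governed glossary that a translation step consults before an AI system answers. The Python sketch below rests on stated assumptions: the `MetricDefinition` structure, the `SEMANTIC_LAYER` dictionary, the `analytics.customers` table and its columns, and the churn definition itself are all hypothetical, chosen to echo the “customer churn” example from the definition gaps earlier in this report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """A single agreed business definition, with an accountable owner."""
    name: str
    description: str
    owner: str
    sql: str


# Hypothetical governed glossary: one shared definition per business term.
SEMANTIC_LAYER = {
    "customer churn": MetricDefinition(
        name="customer churn",
        description="Share of customers active last quarter who were inactive this quarter.",
        owner="customer-analytics",
        sql=(
            "SELECT AVG(CASE WHEN active_this_quarter THEN 0.0 ELSE 1.0 END) "
            "AS churn_rate FROM analytics.customers WHERE active_last_quarter"
        ),
    ),
}


class UndefinedTermError(LookupError):
    """Raised when a business term has no governed definition."""


def resolve(term: str) -> MetricDefinition:
    """Look up a term case-insensitively; refuse to guess if it is undefined."""
    key = term.strip().lower()
    if key not in SEMANTIC_LAYER:
        raise UndefinedTermError(f"No governed definition for {term!r}")
    return SEMANTIC_LAYER[key]


def build_context(question: str, term: str) -> str:
    """Attach the governed definition to a question before it reaches an LLM."""
    d = resolve(term)
    return (
        f"Definition of '{d.name}' (owner: {d.owner}): {d.description}\n"
        f"Approved SQL: {d.sql}\n"
        f"Question: {question}"
    )


print(build_context("How did churn change last quarter?", "Customer Churn"))
```

Refusing to answer when a term has no governed definition, rather than letting a model guess, is one way to keep definition gaps from turning into hallucinations.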

3. They Ship and Iterate

“Don’t let perfect be the enemy of good,” said Vivek Radhakrishnan, SVP of Data Governance Engineering at Mastercard, during Atlan Re:Govern. “We spent a bunch of time trying to design essentially the perfect playbook that would catch every edge case imaginable, and that was just not going to work.”

Being context-ready starts with context awareness. When you’re getting started:

- Don’t wait for perfect foundations, but ensure context is embedded by design

- Launch with the context you have, quickly identify your gaps and inconsistencies, and train models on that knowledge with human-in-the-loop feedback

- Measure actual business outcomes, such as time to resolve customer support tickets or conversion rates on personalized ads

- Iterate based on what drives value (which an executive sponsor can help identify)

How to Become Context-Ready

In the internet era, Bill Gates famously said that “content is king.” Today, in the AI era, context is king. So the question isn’t whether you need context infrastructure – it’s how soon you’ll join the minority of companies taking the steps to implement it and start seeing value from AI.

These are the best practices for moving from context chaos to context-ready, and eventually, context-native:

- Accept the context crisis: You’re not alone in facing this – the question is how you approach it. Identify and prioritize the context gaps facing your team so you can make a plan to tackle them.

- Look beyond security concerns: Governance and access control are real concerns – but they may not be why you’re stuck. Look at the other factors that are making your AI unreliable and align security concerns to missing context.

- Put data teams at the center: You can’t get to scalable AI without the context experts. Enlist an executive sponsor and rally the right teams to own context and drive AI initiatives toward a business-aligned vision.

- Invest in the full stack: AI can’t exist solely on the foundation of data governance – to scale and have an impact, it needs specific capabilities that make meaning from data. Budget for those capabilities.

- Don’t let perfect get in the way of operational: The beauty of testing and iteration is your ability to give AI the feedback it needs to get context right. Once you start, you can quickly learn and optimize.

Your Next Step

Where do you stand on the Context Maturity Scale?

- Context Chaos: Multiple gaps, no ownership, security excuses

- Context-Aware: See the problem, investing in foundations only

- Context-Ready: Building translation layers, data teams engaged

- Context-Native: Full stack operational, driving measurable value

Before you build more models, you need to understand where you stand today and what context infrastructure you need to get AI off the bench and into the game. Because the organizations that win won’t have better models – they’ll have better context.


Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.