"Approved" Is the Most Dangerous Word in AI

Atlan helps teams define and maintain shared data and AI context across an organization. Artie keeps operational data continuously fresh as it moves between systems. Together, we work with teams running AI and analytics in production, where correctness depends not only on what data means but also on whether it still reflects reality right now.

Most production AI failures don’t happen because the model is misconfigured or underperforming. They happen because systems either lack the context they need, or keep operating on context that quietly stops being true.

Production AI Rarely Breaks — It Quietly Drifts Out of Reality

AI systems rarely fail all at once. They drift, starting with ordinary, reasonable change: a new product flow launches, an upstream definition changes, or a source system shifts how it records state. Each change makes sense on its own. Pipelines keep running, dashboards stay green, and from the outside everything looks fine.

But underneath, the assumptions that made the system trustworthy begin to expire. Nothing breaks, and the system keeps making decisions. This is often misdiagnosed as model drift. It isn’t. The model is behaving exactly as designed. What’s drifting is the context the model relies on, and once that happens, correctness quietly erodes.

When Context Drifts, Models Behave Correctly and Still Cause Damage

An AI system is only as reliable as the context it uses at runtime. Depending on the use case, that context might include:

- Recent account behavior or risk signals

- Entity state like plan status, entitlements, or permissions

- Documents and policies retrieved for RAG systems

Many context layers today are built by replicating operational data into a warehouse and converting it into features or search indexes that AI models can use. For analytics, micro-batch pipelines that update every 5–15 minutes are usually fine. For production AI, that same architecture can be dangerously stale, even when everything is governed and approved.

When context lags behind reality, models don’t fail noisily. They produce confident, explainable decisions based on assumptions that are no longer true.

Governance Proves Data Was Approved — Not That It’s Still Safe to Use

Strong governance shows up in every serious AI initiative. Teams invest in lineage, ownership, access controls, and certification workflows to make data compliant and auditable. These programs are necessary. But they’re designed to answer a specific question: Was this data approved to exist and be used?

That’s not the question production AI needs answered. Production systems need to know:

- Does this data reflect the current state of the business?

- Is it fresh enough for this decision right now?

- Is it contextually accurate for this specific query?

- Are downstream features and indexes still in sync with the source?

In short, governance signals are largely static. They prove defensibility at a single moment in time, whereas production AI requires continuous safety guarantees. When teams assume those are the same thing, systems fail.

The Real Failure Mode Is Approved Context That’s Now Stale

The real risk isn’t that data arrives late once. It’s that staleness accumulates.

Micro-batch replication. Scheduled transformations. Retries. Multi-system joins. Each stage waits on the one before it, so the delays stack: even when raw data lands every 10 minutes, derived context is often 30–60 minutes behind reality. That delay is acceptable for reporting. For AI systems acting on current state, it can be fatal.
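A rough way to see how these hops add up: if a source change just misses each stage's scheduled run, it waits a full interval at every hop before it is processed. The minimal Python sketch below sums that worst case across chained stages. The stage names, intervals, and run times are hypothetical, and the sketch ignores retries and joins that wait on their slowest input, both of which only add delay.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One scheduled hop between the source system and the AI's context (hypothetical values)."""
    name: str
    interval_min: float  # how often the stage is scheduled to run
    runtime_min: float   # how long one run takes to finish


def staleness_bounds(stages: list[Stage]) -> tuple[float, float]:
    """Return (best_case, worst_case) minutes between a source change and its arrival in context.

    Best case: every stage starts right as its input lands, so only run times add up.
    Worst case: a change just misses each stage's run, so it waits roughly a full
    interval at every hop before being processed.
    """
    best = sum(s.runtime_min for s in stages)
    worst = sum(s.interval_min + s.runtime_min for s in stages)
    return best, worst


pipeline = [
    Stage("micro-batch replication", interval_min=10, runtime_min=2),
    Stage("scheduled transformation", interval_min=15, runtime_min=5),
    Stage("feature / index refresh", interval_min=15, runtime_min=5),
]

best, worst = staleness_bounds(pipeline)
print(f"best case: {best:.0f} min, worst case: {worst:.0f} min")
```

With these illustrative numbers, raw data lands every 10 minutes, yet the derived context can trail the source by up to 52 minutes, inside the 30–60 minute range described above.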

The system keeps running. Decisions keep getting made. By the time anyone notices, damage has already occurred.

A Customer Support AI That Fails Without Hallucinating

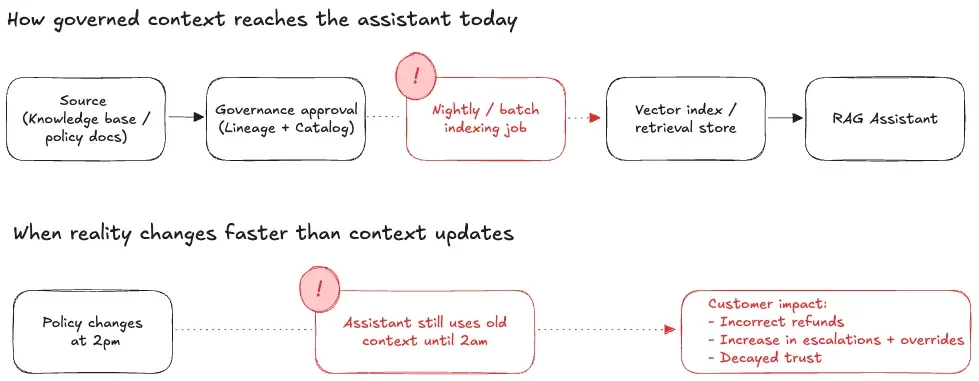

Consider a company deploying an AI-powered customer support assistant using RAG.

The context layer pulls from a governed knowledge base: pricing policies, refund rules, escalation paths. Governance is strong. Documents are owned, versioned, access-controlled, and approved. The assistant launches with confidence.

The knowledge base is indexed nightly.

At 2:00 PM, the refund policy changes. Legal updates the document and Customer Support leadership rolls out new instructions. The change is correct and approved.

But the AI assistant now lives in two worlds:

- The approved world in the catalog

- The current world customers experience

Until the next nightly re-index, roughly 12 hours later, the assistant confidently gives incorrect answers. It doesn’t hallucinate. It behaves exactly as designed, using stale context.

The result is incorrect refunds, broken trust, escalations, and eventually a rollback of the AI system because “we can’t trust it.” Governance didn’t fail here. Freshness was never treated as a first-class requirement.
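One way to make freshness a first-class requirement in this scenario is to check, at retrieval time, whether the source document changed after its chunk was indexed, and to escalate instead of answering when it did. The Python sketch below assumes each indexed chunk carries an `indexed_at` timestamp and that the system of record can report each document's last-modified time. `RetrievedChunk`, `stale_chunks`, and `answer_or_escalate` are hypothetical names, not part of any specific RAG framework, and the timestamps are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class RetrievedChunk:
    doc_id: str
    text: str
    indexed_at: datetime  # when this chunk was embedded into the search index


def stale_chunks(chunks, source_last_modified):
    """Return doc_ids whose source changed (or disappeared) after the chunk was indexed.

    source_last_modified maps doc_id -> last-modified time in the system of record.
    """
    stale = []
    for chunk in chunks:
        modified = source_last_modified.get(chunk.doc_id)
        if modified is None or modified > chunk.indexed_at:
            stale.append(chunk.doc_id)
    return stale


def answer_or_escalate(chunks, source_last_modified):
    stale = stale_chunks(chunks, source_last_modified)
    if stale:
        # Do not answer from outdated policy text; hand the conversation to a human.
        return "escalate: source changed since indexing for " + ", ".join(stale)
    return "answer from retrieved context"


# Hypothetical timestamps for the scenario above (index time assumed to be 2:00 AM).
indexed = datetime(2025, 1, 6, 2, 0, tzinfo=timezone.utc)
changed = datetime(2025, 1, 6, 14, 0, tzinfo=timezone.utc)
chunks = [RetrievedChunk("refund-policy", "(old refund policy text)", indexed)]
print(answer_or_escalate(chunks, {"refund-policy": changed}))
```

Treating a document that no longer exists in the source as stale is a deliberate choice here: a deleted policy should not keep answering customer questions.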

How context rot causes AI systems to fail silently. Source: Atlan.

Trusting Old Approvals at Scale Becomes a Leadership Decision

Early on, this looks like a technical problem. Teams try to debug pipelines or retrain models. At small scale, those fixes can work. But at scale, they don’t.

You can’t patch your way out of expired assumptions. Continuing to rely on old governance approvals without freshness guarantees stops being an engineering choice. It becomes a leadership decision.

Because when leaders sense rising risk, they’re rarely told the system should stop. Instead, they’re presented with a familiar tradeoff:

- Move fast and manage issues as they appear

- Or govern heavily and slow everything down

Both paths fail. Speed pushes teams to keep data moving even as assumptions change underneath them. Heavy governance pushes teams to optimize reviews rather than runtime reliability. Responsibility fragments. No one owns whether the system’s assumptions are still valid after it’s live.

Freshness without governance spreads mistakes faster. Governance without freshness creates false confidence. If your governance programs can’t detect stale context, scaling AI doesn’t reduce risk. It compounds it — quietly.

Teams That Win Treat Context as Production Infrastructure

Teams that operate AI reliably treat context as production infrastructure, not documentation. That means context updates continuously, freshness is a defined reliability guarantee, and governance is enforced where data moves, not months after decisions are made.

Practically, this requires three shifts:

1. Treat freshness as a guarantee

Not “this table updates every 5 minutes,” but:

- What is the maximum tolerated staleness for this decision?

- What happens when that guarantee is violated?

- Does the model degrade, fall back, or refuse to act?

If operational systems and context layers aren’t continuously in sync, no amount of governance can make real-time AI reliable.
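A freshness guarantee can be expressed per decision rather than per table. The sketch below is a minimal illustration, assuming the context layer records when it last synced with the source. The thresholds and the three-way act, degrade, or refuse policy are hypothetical choices that each team would set for its own use case.

```python
from datetime import datetime, timedelta, timezone
from enum import Enum


class Action(Enum):
    ACT = "act"          # context is within its freshness guarantee
    DEGRADE = "degrade"  # guarantee missed by a little: fall back to a conservative path
    REFUSE = "refuse"    # guarantee badly missed: do not act, escalate to a human


def freshness_action(last_synced, max_staleness, grace, now=None):
    """Decide what a model may do, given how old its context is.

    max_staleness: maximum tolerated staleness for this decision (the guarantee).
    grace: extra window in which the system degrades instead of refusing outright.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    age = now - last_synced
    if age <= max_staleness:
        return Action.ACT
    if age <= max_staleness + grace:
        return Action.DEGRADE
    return Action.REFUSE


# Hypothetical guarantee for a refund decision: at most 2 minutes stale, 3 minutes of grace.
now = datetime(2025, 1, 6, 12, 0, tzinfo=timezone.utc)
limit, grace = timedelta(minutes=2), timedelta(minutes=3)
for age in (timedelta(seconds=90), timedelta(minutes=4), timedelta(minutes=10)):
    print(age, freshness_action(now - age, limit, grace, now))
```

The design point is that violating the guarantee changes the system's behavior automatically, rather than only raising an alert someone may or may not read.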

2. Make context drift observable

Teams need to see:

- When upstream definitions change

- When pipelines are “healthy” but delayed

- When assumptions expire before customers notice

This requires lineage and quality checks that track where context comes from, what it means, and when it changed, across teams and systems.
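Two of these signals are straightforward to check mechanically. The sketch below flags an asset whose last run reported success but whose newest reflected event is older than a lag threshold (healthy but delayed), and an asset whose upstream definition no longer matches the fingerprint recorded when it was certified. `ContextAsset`, its fields, the example SQL, and the 15-minute threshold are all hypothetical; in practice these values would come from your orchestrator and catalog metadata.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


def definition_fingerprint(definition: str) -> str:
    """Hash an upstream definition with whitespace normalized, so reformatting alone is not a change."""
    normalized = " ".join(definition.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


@dataclass
class ContextAsset:
    name: str
    last_run_status: str        # what the orchestrator reports, e.g. "success"
    max_event_time: datetime    # newest source event reflected in this asset
    approved_fingerprint: str   # fingerprint recorded when the asset was certified
    current_definition: str     # the upstream definition as it exists today


def drift_signals(asset: ContextAsset, max_lag: timedelta, now: datetime) -> list[str]:
    """Return human-readable drift signals for one context asset."""
    signals = []
    lag = now - asset.max_event_time
    if asset.last_run_status == "success" and lag > max_lag:
        signals.append(f"healthy but delayed: {asset.name} is {lag} behind")
    if definition_fingerprint(asset.current_definition) != asset.approved_fingerprint:
        signals.append(f"definition changed since approval: {asset.name}")
    return signals


now = datetime(2025, 1, 6, 12, 0, tzinfo=timezone.utc)
approved_sql = "select id, plan from accounts where status = 'active'"
asset = ContextAsset(
    name="account_features",
    last_run_status="success",
    max_event_time=now - timedelta(minutes=45),
    approved_fingerprint=definition_fingerprint(approved_sql),
    current_definition="select id, plan from accounts where status in ('active', 'trial')",
)
for signal in drift_signals(asset, max_lag=timedelta(minutes=15), now=now):
    print(signal)
```

Both checks fire here even though the pipeline itself reports success, which is exactly the gap between "healthy" and "still true".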

3. Make dependency impact instant

When context becomes unsafe, teams must be able to answer immediately:

- Which models and use cases depend on it?

- What changed?

- Who is accountable?

Without a live impact graph connecting data to decisions, risk stays invisible until damage occurs.
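At its core, a live impact graph answers a reachability question: starting from the asset that became unsafe, which downstream assets depend on it, and who owns them? The sketch below runs a breadth-first search over a hand-written lineage dictionary. The asset names and owners are hypothetical stand-ins for lineage and ownership metadata, and this is not Atlan's API.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> assets that consume it directly.
LINEAGE = {
    "postgres.accounts": ["warehouse.accounts_raw"],
    "warehouse.accounts_raw": ["features.account_risk", "features.plan_status"],
    "features.account_risk": ["model.fraud_scoring"],
    "features.plan_status": ["app.support_assistant"],
}

# Hypothetical ownership metadata for the assets that make decisions.
OWNERS = {
    "model.fraud_scoring": "risk-ml team",
    "app.support_assistant": "support-ai team",
}


def downstream_impact(source, lineage):
    """Breadth-first walk of the lineage graph; returns every asset reachable from source."""
    seen = set()
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


impacted = downstream_impact("warehouse.accounts_raw", LINEAGE)
for asset in sorted(impacted):
    print(asset, "->", OWNERS.get(asset, "no owner recorded"))
```

Because the walk follows every path, indirect dependents such as `model.fraud_scoring`, two hops from the stale table, show up alongside direct ones, and any asset without a recorded owner is surfaced as an accountability gap.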

How Atlan and Artie Eliminate Context Rot at Runtime

This is what Atlan and Artie are designed to solve together.

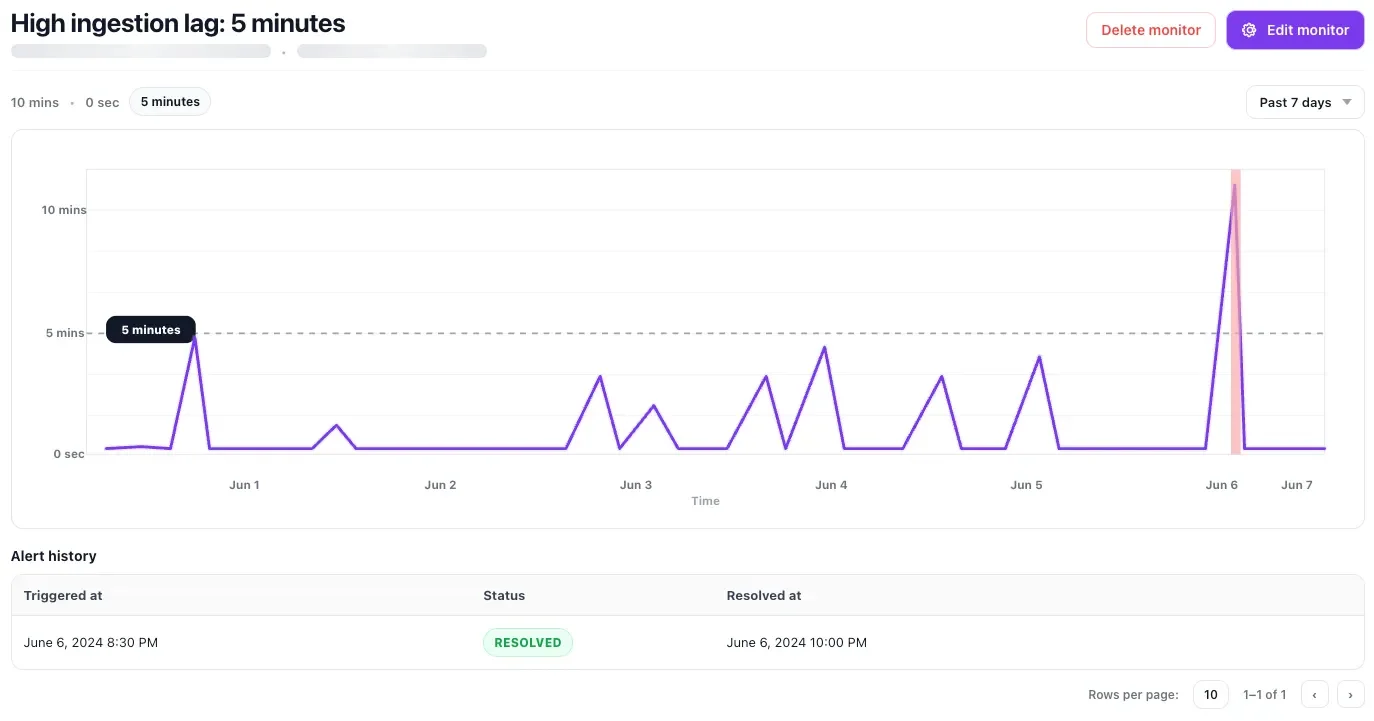

Artie keeps operational data continuously fresh as it moves between systems, so context layers aren’t built on snapshots that lag reality. It gives teams the foundation to define and enforce freshness guarantees for AI use cases.

Prevent stale context in production AI with ingestion lag alerts and freshness SLAs. Source: Artie.

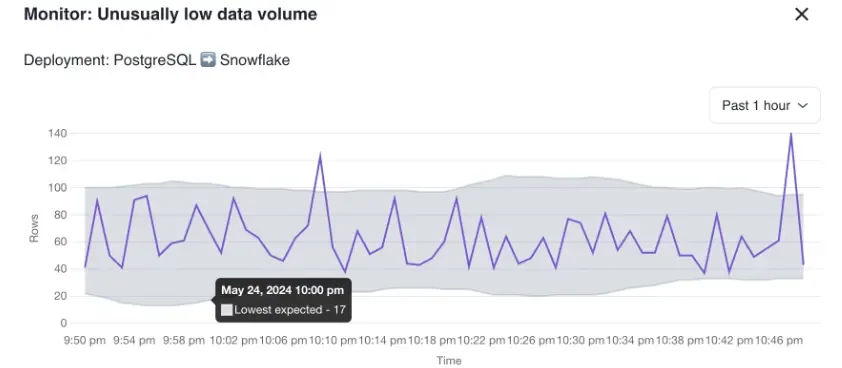

Catch silent pipeline failures early with volume anomaly alerts, before they impact production. Source: Artie.

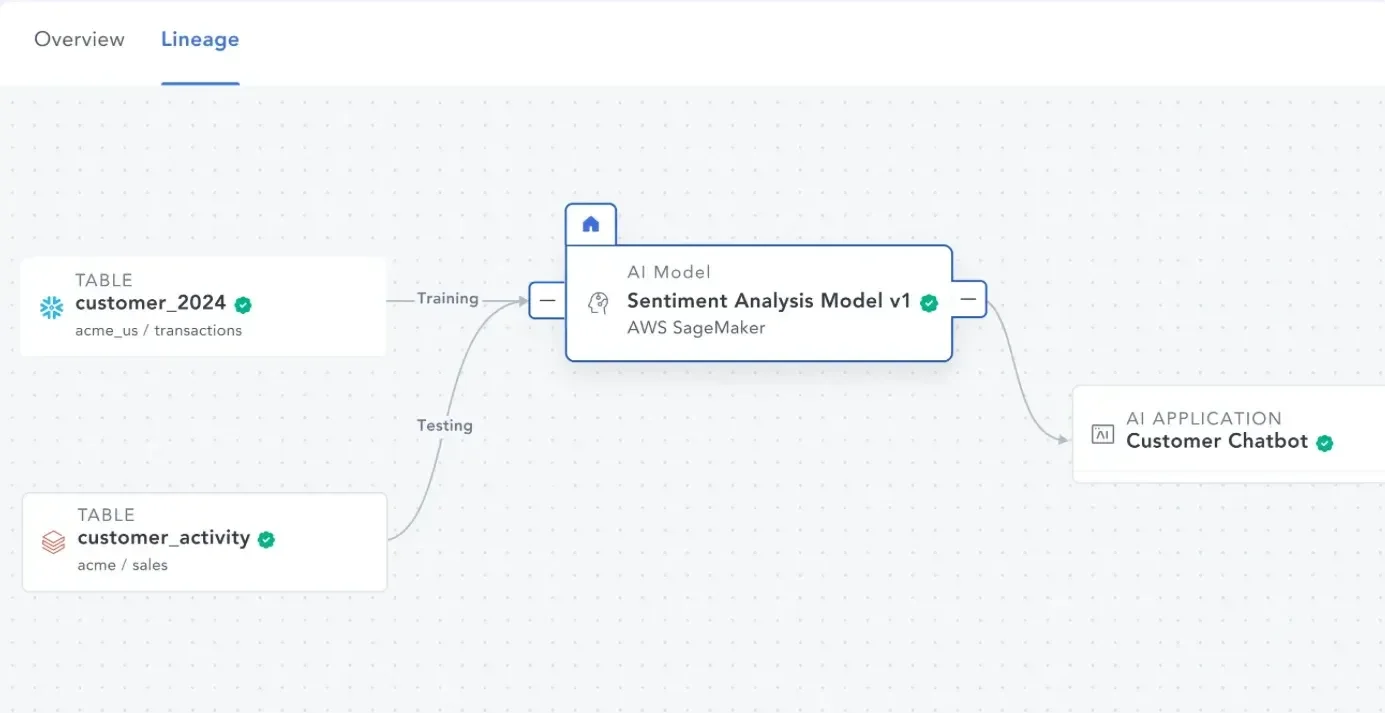

Atlan provides the governance and shared context layer that makes freshness actionable, with lineage, ownership, certification, and impact analysis that show exactly which features, data products, and AI systems depend on which sources.

Get end-to-end visibility into how your data and AI are used, enabling data and AI governance. Source: Atlan.

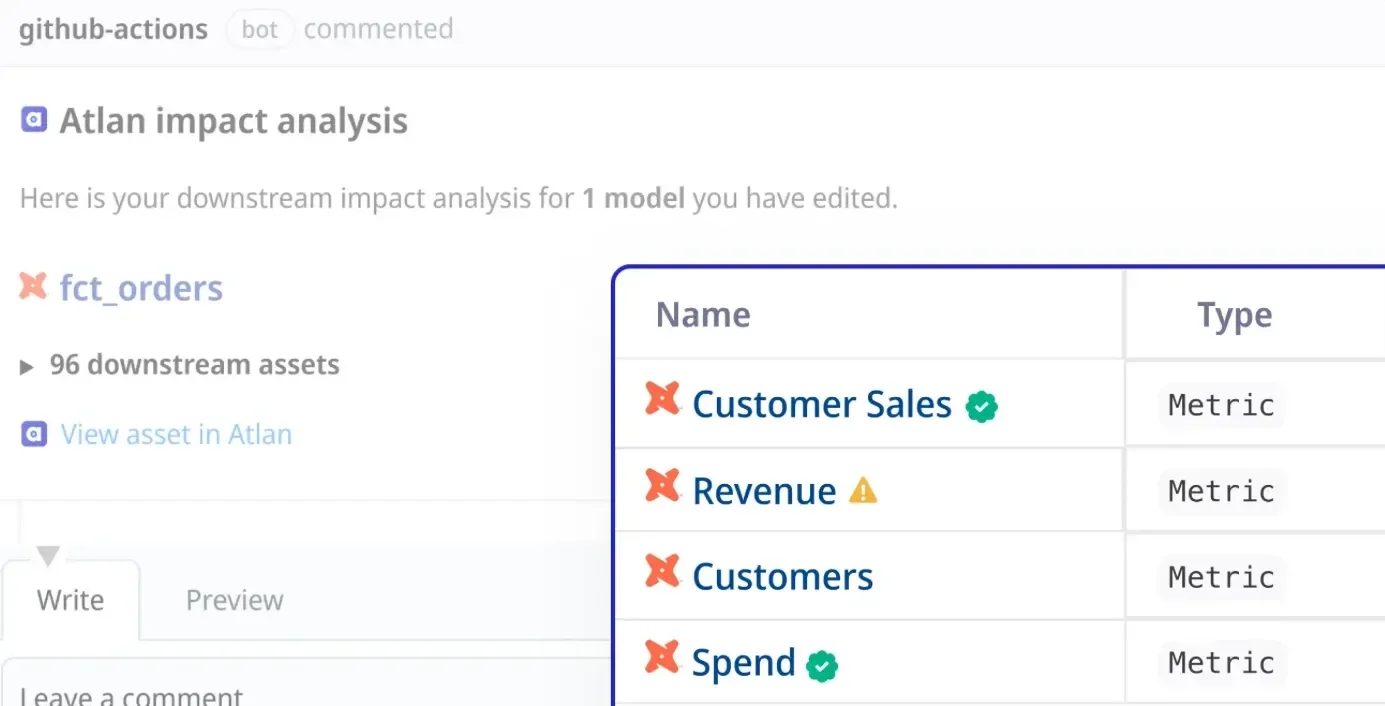

Proactive impact analysis in Atlan. Source: Atlan.

Together, Atlan + Artie make freshness a first-class part of data and AI governance:

- Freshness guarantees (Artie)

- Observability signals and context-aware impact analysis (Atlan)

- Runtime trust you can defend (both)

In AI, Approval Is a Snapshot — Freshness Is the Guarantee

In AI, “approved” is not a synonym for “safe.” It’s a snapshot. And when the world changes faster than your context updates, approvals don’t prevent failure. They create false confidence.

The teams that win won’t just govern data. They’ll govern freshness, validity windows, and lineage-driven trust signals at runtime — so the context layer stays aligned with the world their AI systems operate in.

Because if you can’t explain — plainly — why an AI system is trustworthy right now, then what you’re scaling isn’t intelligence. It’s risk.

