Bringing Data & AI Context to Market: Atlan's Use Case Framework

Most enterprises don’t have a data problem. They have a context problem.

Teams have petabytes of data, modern clouds, and ambitious AI roadmaps. But when the board asks a simple question — “How many customers did we add last quarter?” — five teams give five different answers. AI pilots stall, dashboards lose credibility, and audits turn into all‑hands fire drills.

The fix isn’t bigger models. It’s better context. But many organizations – even those with sophisticated or well-funded AI programs – still struggle to close the context gap.

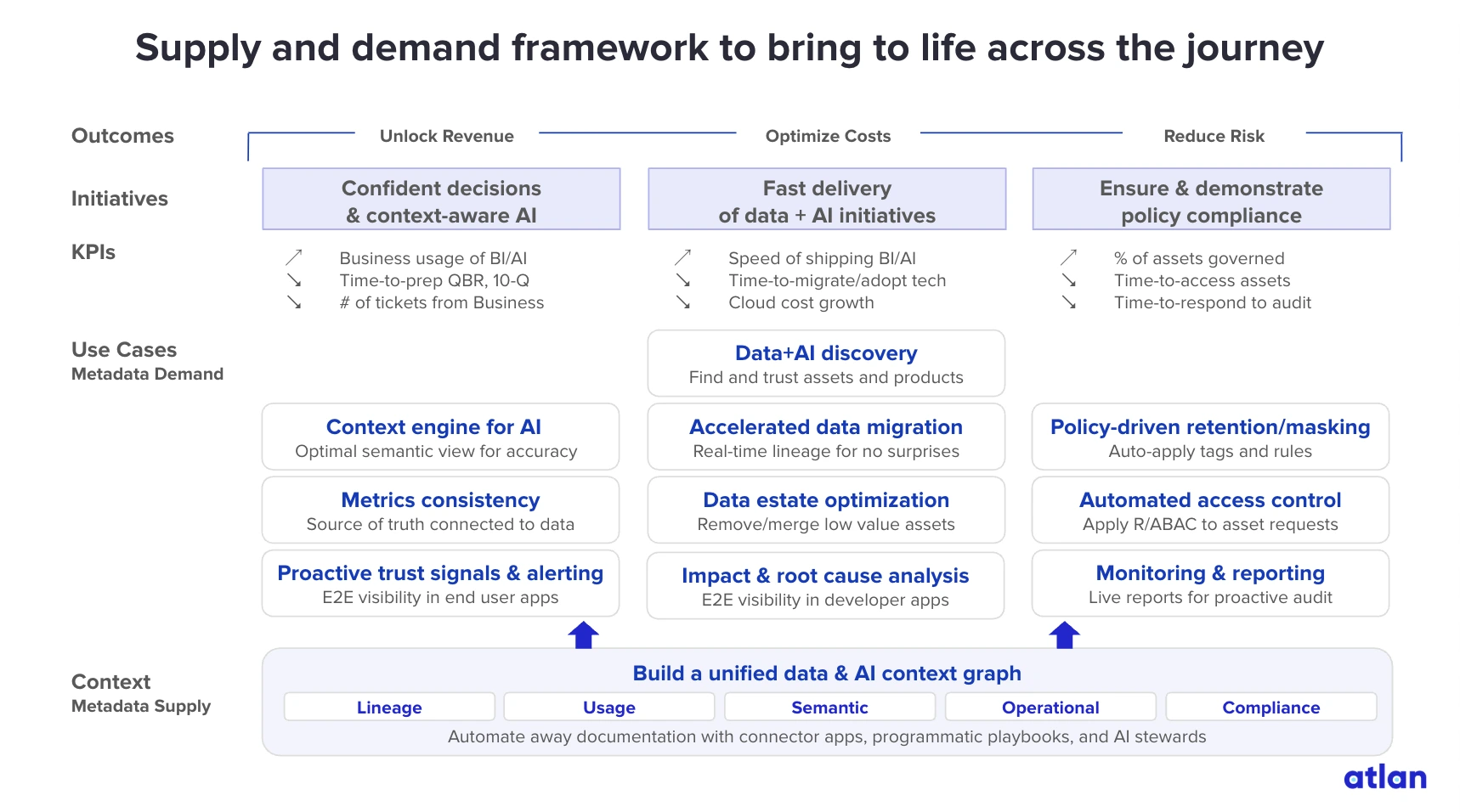

That’s why Atlan architected an outcome-focused use case framework that provides a practical path for data and AI leaders to harness context for AI. It’s a two-pronged approach that treats context like a market:

- The supply side produces and distributes context via a context graph.

- The demand side consists of business and AI use cases that consume context.

Used well, this framework gives CIOs, CDOs, and CDAOs a repeatable path from:

Pain → Use case → Business value → Atlan capability → Measurable outcome

TL;DR for executives

- The problem: AI and analytics fail not because of missing data, but because teams don’t share context: consistent definitions, lineage, quality, policies, and usage patterns.

- The shift: Leaders are moving from static catalogs and one‑off projects to a context layer that serves both humans and AI across the data estate.

- The framework: Atlan’s supply–demand model is organized into three outcome pillars:

  - Unlock revenue with confident decisions and context‑aware AI.

  - Optimize costs by shipping more data and AI initiatives faster.

  - Reduce risk by ensuring and proving data and AI compliance.

- The engine: Underneath is a unified context graph — powered by Atlan’s Active Metadata Platform and Metadata Lakehouse — that continuously captures, enriches, and activates context where work happens.

- The deliverable: A practical way to prioritize 3–5 high‑ROI use cases, align teams around a single framework, and show measurable value in weeks, not quarters.

The context gap: Why data and AI programs stall

If you sit in a C‑level data or technology seat today, you may recognize some of the issues data leaders have shared with us:

- “We can’t trust our KPIs — Finance and Product report different ‘revenue’ numbers.”

- “Our AI pilots look great in demos, but no one is willing to put them in front of customers.”

- “Audit prep hijacks six weeks of our year, every year.”

These are not tooling failures; they’re context failures. And they often become major roadblocks to AI momentum and value.

The problem: Systems evolved, context did not

Over the last decade, enterprises have modernized the systems side of their tech stacks. They integrated cloud data platforms, lakehouses, and real‑time pipelines, along with modern BI, notebooks, MLOps, and more recently, agentic AI.

But most organizations still manage context the old way: defining metrics in slide decks and wikis that quickly get stale; reverse‑engineering lineage in spreadsheets when something breaks; and burying policies in PDFs, detached from the pipelines they’re meant to govern.

The result is predictable:

- AI models hallucinate because they don’t understand what “revenue” or “churn” mean in your business.

- Analysts spend 80% of their time hunting for data and validating it, not analyzing it.

- Governance is perceived as a burden, not an operating system.

To fix this, leaders are shifting from projects to a context operating model.

From projects to a context market: Supply and demand

The most effective data and AI leaders treat context like a market, with a supply side and a demand side.

- On the demand side, there are concrete use cases — revenue dashboards, AI assistants, migration programs, compliance reporting — each with a clear outcome and set of personas.

- On the supply side, there’s a context graph, a living map of how data is defined, produced, used, and governed across your estate. It shows how data flows, who owns it, and why decisions were made.

Atlan’s framework connects these two sides so you can invest in context once, then reuse it across dozens of initiatives as it continuously evolves.

Figure 1: Atlan's supply–demand framework ties business outcomes and use cases on the demand side to a unified data & AI context graph on the supply side. Source: Atlan.

For executive buyers, this framework turns “governance and catalog” into something far more strategic: A single operating model to prioritize investments, align teams, and measure value across your entire data and AI estate.

Let’s walk the framework from the demand side first, then look at how the context graph makes it possible.

Outcome pillar 1: Unlock revenue

Few companies have achieved true data democratization, and even fewer are getting value from AI (research from MIT puts the rate at 5%). Without accurate, fresh, and trustworthy data, revenue-generating analytics and AI will continue to elude even the most tech-savvy teams.

But context-aware AI can power confident decision-making, unlocking new revenue streams or maximizing existing ones. “Talk to Data” initiatives, consistent metrics, and proactive trust signals help teams get there.

Primary value driver: Trusted data for self‑service analytics and AI.

Core personas: CDO/CIO, business leaders, analytics leaders, AI teams.

Use case 1: “Talk to Data” — a context engine for AI apps

The scenario

A global enterprise launches an AI assistant so executives can talk to their data, asking natural‑language questions and getting straightforward responses. In the first demo, someone asks: “What was Q4 revenue in North America?”

The assistant confidently replies with a number — and Finance disagrees. The pilot stalls. Leaders lose trust before the AI ever reaches production.

The problem? The assistant never asks for clarification: Q4 of which year? How is revenue defined? Which countries count as North America? When it acts on the first definitions it can find, it answers incorrectly or, worse, confidently hallucinates.

Atlan in action

Atlan mitigates this risk by implementing a context layer that sits between your AI tools and your data. This layer encodes business logic, including metric definitions, glossary terms, policies, and ownership. To make that context AI-ready, Atlan keeps it machine-readable, universal, and embedded, and incorporates human-in-the-loop feedback for continuous improvement.

Atlan’s Metadata Lakehouse plays a key role in context readiness by exporting governed context into LLM ecosystems. Whether you’re using Snowflake Cortex, Databricks Genie, or custom agents, the context stays consistent and trustworthy. And column‑level lineage maps where every figure in a dashboard or AI answer came from, so questions or inconsistencies can be traced back to their source.

This way, AI is pointed at context-rich, certified assets – not raw tables.
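To make the idea concrete, here is a minimal Python sketch of what a context layer does before an AI assistant answers: it resolves a business term to a certified definition and returns clarifying questions instead of guessing. The `MetricDefinition` class, the `GLOSSARY` contents, and the `resolve` function are hypothetical illustrations, not Atlan’s API.

```python
from dataclasses import dataclass, field


@dataclass
class MetricDefinition:
    """A hypothetical governed metric definition held in the context layer."""
    name: str
    sql_expression: str
    certified: bool
    # Parameters a question must pin down before the metric can be computed.
    required_params: tuple[str, ...] = ()
    # Governed meaning of business terms, e.g. which countries "North America" covers.
    term_mappings: dict[str, list[str]] = field(default_factory=dict)


# Hypothetical glossary contents, made up for this sketch.
GLOSSARY = {
    "revenue": MetricDefinition(
        name="net_revenue",
        sql_expression="SUM(amount) - SUM(refunds)",
        certified=True,
        required_params=("fiscal_year", "quarter", "region"),
        term_mappings={"North America": ["US", "CA", "MX"]},
    ),
}


def resolve(term: str, params: dict[str, str]) -> dict:
    """Map a business term to its certified metric, or list what the assistant must ask first."""
    metric = GLOSSARY.get(term.lower())
    if metric is None or not metric.certified:
        # No governed definition: refuse rather than let the model improvise one.
        return {"status": "refuse", "reason": f"no certified definition for '{term}'"}
    missing = [p for p in metric.required_params if p not in params]
    if missing:
        return {"status": "clarify", "missing": missing}
    region = params.get("region")
    # Expand the region with the governed mapping; fall back to the literal value.
    countries = metric.term_mappings.get(region, [region]) if region else []
    return {
        "status": "ok",
        "metric": metric.name,
        "expression": metric.sql_expression,
        "countries": countries,
    }
```

With the question from the scenario, `resolve("revenue", {"quarter": "Q4", "region": "North America"})` returns `{"status": "clarify", "missing": ["fiscal_year"]}`, so the assistant asks which year is meant rather than inventing an answer.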

What changes

- Natural‑language questions map to the right metrics and datasets, not whatever the model retrieves first.

- Business users can see why an answer is trustworthy — or why it isn’t — with built‑in lineage and quality signals.

- AI moves from uncertain pilot to trusted, scalable decision partner.

Use case 2: Metrics consistency — one source of truth for KPIs

The scenario

At the end of the quarter, the board reviews “customer acquisition cost.” Marketing and Finance each show carefully prepared decks – but the numbers don’t match. The meeting derails into a debate about whose pipeline is correct, followed by weeks of reconciliation. By the time teams agree on the number, it’s no longer relevant.

Atlan in action

Atlan gives you one governed, shared source of truth for critical metrics, so every team is speaking the same language – no ambiguity, no disagreements.

In Atlan’s business glossary, KPI logic, metrics, and taxonomies are defined once, then linked to tables, dashboards, and AI features. The definitions include certification, ownership, and versioning, so there’s a recognizable “gold” version of each metric. And since the glossary is built on a knowledge graph, you can connect the dots across data, definitions, and domains to mirror how your business actually operates.

Usage analytics show who is using which metric, where, and how, and users can suggest updates as context evolves – avoiding the tribal knowledge problem.
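A minimal sketch of the “gold version” idea, assuming each metric is stored as a list of versioned, owned, certified definitions: the newest certified version wins, and any tool whose locally rebuilt formula differs is flagged. The classes, functions, and formulas below are hypothetical, and comparing formula strings is a deliberate simplification of real semantic comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricVersion:
    """One hypothetical version of a glossary metric, with ownership and certification."""
    version: int
    formula: str
    owner: str
    certified: bool


def gold_version(versions: list[MetricVersion]) -> MetricVersion:
    """The newest certified version: the single definition every tool should use."""
    certified = [v for v in versions if v.certified]
    if not certified:
        raise ValueError("metric has no certified version")
    return max(certified, key=lambda v: v.version)


def find_drift(gold: MetricVersion, local_formulas: dict[str, str]) -> list[str]:
    """Tools whose locally rebuilt formula differs from the gold one (naive string comparison)."""
    return sorted(tool for tool, formula in local_formulas.items() if formula != gold.formula)


# Hypothetical history of "customer acquisition cost" definitions.
cac_versions = [
    MetricVersion(1, "marketing_spend / new_customers", "marketing", certified=True),
    MetricVersion(2, "(marketing_spend + sales_spend) / new_customers", "finance", certified=True),
    MetricVersion(3, "(marketing_spend + sales_spend) / new_paying_customers", "finance", certified=False),
]
gold = gold_version(cac_versions)
drift = find_drift(gold, {
    "finance_deck": "(marketing_spend + sales_spend) / new_customers",
    "marketing_deck": "marketing_spend / new_customers",
})
```

In this made-up history the gold definition is version 2 (version 3 is not yet certified), and `drift` comes back as `["marketing_deck"]`: exactly the kind of mismatch that derails a board meeting.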

What changes

- Executive meetings focus on making decisions, not reconciling numbers.

- Teams stop rebuilding the same metric logic in different tools.

- You can measure concrete outcomes, like 50% fewer conflicting reports and faster QBR/10‑Q prep cycles.

Use case 3: Proactive trust signals & alerting

The scenario

On Monday morning, a CDAO discovers that the revenue dashboard feeding the CEO’s weekly update ran on stale data all weekend. Pipelines “passed” the last run, but the data was incomplete. The incident started Thursday night, but no one noticed until Monday. Now, there’s a scramble to clean up and remediate the incorrect data – taking teams away from other high-priority focus areas.

Atlan in action

Through its Data Quality Studio, Atlan brings trust signals out of back‑office tools and into the places where decisions are made. Freshness and quality scores are embedded directly into BI dashboards, contracts, AI guardrails, and 360° reporting, so users know whether they can trust data before they act on it.

Atlan’s end-to-end lineage and proactive alerts further support data quality by highlighting upstream changes that impact specific downstream metrics, and notifying users in Slack or Teams when data breaks or recovers. Together, they help ensure issues are caught before executives walk into a meeting.
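The core logic behind a freshness trust signal can be sketched in a few lines, assuming each upstream table has a freshness SLA, lineage tells you which tables feed a dashboard, and `last_loaded` records the last load that passed completeness checks. The table names, SLAs, and timestamps are hypothetical, and the Slack or Teams notification step is left out.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness SLAs per upstream table.
FRESHNESS_SLA = {
    "orders": timedelta(hours=6),
    "fx_rates": timedelta(hours=24),
}

# Hypothetical lineage: the upstream tables each dashboard reads from.
DASHBOARD_SOURCES = {"ceo_weekly_revenue": ["orders", "fx_rates"]}


def stale_sources(last_loaded: dict[str, datetime], now: datetime) -> set[str]:
    """Tables whose last complete load is older than their SLA allows."""
    return {table for table, ts in last_loaded.items() if now - ts > FRESHNESS_SLA[table]}


def trust_signal(dashboard: str, last_loaded: dict[str, datetime], now: datetime) -> dict:
    """The badge a viewer would see on the dashboard, derived from upstream freshness."""
    stale = sorted(set(DASHBOARD_SOURCES[dashboard]) & stale_sources(last_loaded, now))
    return {"dashboard": dashboard, "trusted": not stale, "stale_sources": stale}


# Thursday-night failure, checked on Monday morning (all times UTC).
now = datetime(2024, 6, 3, 8, 0, tzinfo=timezone.utc)
loads = {
    "orders": datetime(2024, 5, 30, 22, 0, tzinfo=timezone.utc),
    "fx_rates": datetime(2024, 6, 3, 1, 0, tzinfo=timezone.utc),
}
signal = trust_signal("ceo_weekly_revenue", loads, now)
```

Here `signal` marks the dashboard as untrusted because `orders` last loaded completely on Thursday night. Run on an hourly schedule, the same check would have flipped the badge shortly after 04:00 on Friday, six hours after that load, instead of on Monday.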

What changes

- Fewer decisions are made on bad data.

- Data teams shift from reactive firefighting to proactive reliability.

- Concrete improvements can be easily tracked, like reduced incident frequency and shorter time‑to‑detect and resolve data issues.

Outcome pillar 2: Optimize costs

Cost optimization is one of the leading investment rationales for AI, with 93% of business leaders citing it as a driver. But to actually see an impact in the budget, teams need easy discovery, efficient data migrations, streamlined systems, and real-time impact analysis.

Primary value driver: Accelerate productivity for data and AI initiatives while controlling platform spend.

Core personas: VP of Data, data engineering leaders, architects, analytics leaders.

Use case 4: Data+AI discovery — self‑service data asset 360°

The scenario

A VP of Data is asked to stand up a new pricing optimization initiative in six weeks. The blockers aren’t models or infrastructure. They’re questions like:

- “Which tables actually contain pricing history?”

- “Is this dataset still used, and by whom?”

- “Can we safely join these sources without violating policy?”

Analysts spend most of the project budget just finding and validating data, not putting it to use.

Atlan in action

Atlan provides a single place to search, filter, and explore every asset with full context. Its data discovery capabilities provide a Google‑like search across warehouses, lakes, BI tools, and ML artifacts. Asset profiles are enriched with schema, descriptions, owners, quality signals, and lineage, reducing reliance on IT to find and understand data.

With natural-language search and personalized views for analysts, data scientists, and business users, teams can go from spending most of their time wrangling and validating data to actually using it.

What changes

- Teams get to first insight faster, with far less SME dependency.

- Reuse becomes the default — analysts can see prior work, not start from scratch.

- Time‑to‑value decreases for new analytics and AI projects.

Use case 5: Accelerated data migration

The scenario

You’re midway through a strategic migration from a legacy warehouse to the cloud, or from cloud to lakehouse. But timelines keep slipping because no one can answer three simple questions:

- What do we move first?

- What can we retire?

- Which dashboards and models will break when we flip the switch?

Projects slow to avoid risk, or move fast but encounter unnecessary detours. Either path raises questions from leadership and frustration from team members.

Atlan in action

Atlan takes a metadata‑first approach to migration, starting with usage analytics. The insights quickly highlight which assets are actively used, and by which teams. Then Atlan’s lineage maps dependencies from sources through transformations to dashboards and AI systems. Plug‑and‑play reports help you plan “waves” of migration with clear impact analysis.
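One way to turn usage and lineage metadata into migration waves is a topological sort: retire assets that nobody queries or depends on, then migrate everything else in dependency order so each wave’s inputs have already moved. This is a hypothetical sketch using Python’s standard `graphlib` module with made-up asset names; it is not how Atlan’s reports compute waves.

```python
from graphlib import TopologicalSorter

# Hypothetical lineage: each asset maps to the upstream assets it reads from.
UPSTREAM = {
    "raw_orders": [],
    "raw_customers": [],
    "stg_orders": ["raw_orders"],
    "dim_customers": ["raw_customers"],
    "fct_revenue": ["stg_orders", "dim_customers"],
    "revenue_dashboard": ["fct_revenue"],
    "legacy_report": ["stg_orders"],
}

# Hypothetical usage analytics: query counts over the last 90 days.
QUERIES_90D = {"revenue_dashboard": 412, "legacy_report": 0}


def plan_migration(upstream: dict[str, list[str]], usage: dict[str, int]):
    """Return (retire candidates, migration waves in dependency order)."""
    # Retire candidates: nothing reads from them and usage data shows zero recent queries.
    consumed = {dep for deps in upstream.values() for dep in deps}
    retire = {a for a in upstream if a not in consumed and usage.get(a) == 0}

    # Each wave holds assets whose upstream dependencies all moved in earlier waves.
    sorter = TopologicalSorter({a: deps for a, deps in upstream.items() if a not in retire})
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return sorted(retire), waves


retire, waves = plan_migration(UPSTREAM, QUERIES_90D)
```

For this lineage, `legacy_report` is retired and the rest moves in four waves: the two raw tables, then `dim_customers` and `stg_orders`, then `fct_revenue`, then `revenue_dashboard`. A single pass only retires unused leaf assets; repeating it until nothing changes would also catch upstream tables that fed only retired assets.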

What changes

- You minimize the risk of “lift everything and hope nothing breaks.”

- Migration risks, surprises, and rework drop, while confidence and speed go up.

- Leaders can track hard outcomes, like reduced migration cost and fewer regression incidents.

Use case 6: Data estate optimization

The scenario

Cloud bills take up a significant share of the budget. And while most line items are familiar (Snowflake, Databricks, BigQuery), no one can tie spend to actual business value.

In reality, some datasets are never queried. Others are used by one forgotten dashboard. But without a clear view into usage and dependencies, leaders hesitate to delete anything. So they keep signing off on spending that doesn’t move the needle.

Atlan in action

Atlan turns your data estate into a cost‑aware context graph. Usage and performance metadata highlight orphaned, stale, or low‑value assets. Meanwhile, leaders can deprecate dashboards with peace of mind, thanks to lineage that shows which reports, models, and teams would be affected. And automated workflows help archive or delete assets safely, with clear approvals.
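A minimal sketch of the cleanup logic, assuming per-asset metadata that joins last-query date, monthly cost, and downstream lineage. The field names, the 90-day idle threshold, and the costs are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AssetStats:
    """Hypothetical per-asset metadata joining usage, cost, and lineage."""
    name: str
    last_queried: Optional[date]  # None means the asset has never been queried
    monthly_cost_usd: float
    downstream: list[str]         # assets, dashboards, or models that read from this one


def cleanup_candidates(assets: list[AssetStats], today: date, max_idle_days: int = 90):
    """Idle assets with no downstream dependents, most expensive first, plus total monthly cost."""
    candidates = [
        a for a in assets
        if (a.last_queried is None or (today - a.last_queried).days > max_idle_days)
        and not a.downstream  # lineage guard: skip anything that still feeds other assets
    ]
    candidates.sort(key=lambda a: a.monthly_cost_usd, reverse=True)
    return [a.name for a in candidates], sum(a.monthly_cost_usd for a in candidates)


assets = [
    AssetStats("tmp_backfill_2022", None, 1200.0, []),
    AssetStats("orders_snapshot_old", date(2023, 11, 2), 300.0, []),
    AssetStats("stg_orders", date(2024, 1, 15), 800.0, ["fct_revenue"]),
    AssetStats("fct_revenue", date(2024, 6, 2), 950.0, ["revenue_dashboard"]),
]
names, savings = cleanup_candidates(assets, date(2024, 6, 3))
```

In this made-up data, `stg_orders` has been idle for months but is kept, because lineage shows `fct_revenue` still reads from it. The two unused, dependency-free assets come back with a combined 1,500 USD in monthly spend, which is the evidence a leader needs before approving the cleanup.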

What changes

- Teams confidently clean up low‑value assets and expensive queries.

- Reductions in compute and storage spend are measurable and backed by evidence.

- The remaining estate is smaller, more trustworthy, and easier to govern.

Use case 7: Impact & root cause analysis

The scenario

A customer‑facing KPI suddenly drops on a key dashboard. Is it a real signal — or a broken pipeline? Teams scramble across Slack threads, SQL snippets, and old diagrams to figure out what changed and where.

Hours or days later, someone discovers a well‑meaning schema change upstream. In the meantime, decisions were delayed and trust took a hit.

Atlan in action

Atlan’s data lineage and impact analysis help teams understand how data moves, transforms, and influences downstream reports, pipelines, and AI models. By providing visual graphs from raw sources through transformations to every downstream dashboard, dataset, and model, Atlan shows where data comes from and what depends on it.

When a column is updated, Atlan’s change history shows who made the change and which downstream assets might be affected as a result. Engineers can also run “what if” analyses before shipping a change, so they can preemptively know what will be impacted.
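Impact analysis is, at its core, a traversal of the lineage graph. The sketch below runs a breadth-first search over a hypothetical column-level lineage map to list every downstream column, dashboard, model, and AI answer affected by a change, along with its distance in hops. Node names are illustrative only.

```python
from collections import deque

# Hypothetical column-level lineage: each node maps to the nodes that read from it.
DOWNSTREAM = {
    "raw.orders.amount": ["stg.orders.amount_usd"],
    "stg.orders.amount_usd": ["fct.revenue.net_revenue", "ml.features.avg_order_value"],
    "fct.revenue.net_revenue": ["dash.exec_kpis.revenue", "ai.assistant.revenue_answer"],
    "ml.features.avg_order_value": ["ml.models.churn_score"],
}


def impact_of(changed: str) -> dict[str, int]:
    """Breadth-first 'what if' analysis: every downstream node and its distance in hops."""
    distance: dict[str, int] = {}
    seen = {changed}
    queue = deque([(changed, 0)])
    while queue:
        node, hops = queue.popleft()
        for child in DOWNSTREAM.get(node, []):
            if child not in seen:  # report each node once, at its shortest distance
                seen.add(child)
                distance[child] = hops + 1
                queue.append((child, hops + 1))
    return distance
```

Calling `impact_of("raw.orders.amount")` returns six affected nodes, including the executive dashboard and the AI assistant’s revenue answer three hops away. Root-cause analysis is the same search run over reversed edges, walking upstream from a broken KPI instead of downstream from a change.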

What changes

- Root‑cause investigations shrink from days to minutes.

- Teams can prevent breakages before they occur, not just react once dashboards go red.

- Engineers spend more time creating value and less time debugging.

Outcome pillar 3: Reduce risk

Speed versus security has always created tension in data operations. The flip side of enabling self-service for humans and AI agents is ensuring that data stays secure and governed – and the penalties for noncompliance are steep. But when done correctly – through reporting, obfuscation, and access controls – risk decreases while utility increases.

Primary value driver: Safeguard data and AI security and compliance while enabling governed self‑service.

Core personas: CDO, CISO, privacy and compliance leaders, data governance teams.

Use case 8: Compliance monitoring & reporting

The scenario

Audits still look like they did 10 years ago: Governance teams pull CSV exports from multiple systems, stitch them together in spreadsheets, and chase down owners to answer basic questions:

- “Where does personal data live?”

- “Who accessed it in the last 90 days?”

- “Can you prove lineage for this regulated metric?”

Preparation consumes weeks of high‑value time and still leaves blind spots. And with more users than ever accessing data, those blind spots are far more numerous and dangerous.

Atlan in action

Atlan turns compliance into a set of continuous, queryable reports, simplifying audit processes without additional overhead. It starts with automated discovery and classification of sensitive fields across warehouses, lakes, and BI, so you know where to enforce the right policies. Atlan’s lineage then shows how regulated data flows through the ecosystem and where those policies are applied.

To keep track of it all, Atlan’s Metadata Lakehouse powers plug‑and‑play compliance dashboards for data stewards and auditors.

What changes

- Audit prep time drops significantly as teams spend less time manually pulling reports.

- You move from one‑off evidence gathering to always‑on compliance visibility.

- Regulators and internal risk teams see a coherent, end‑to‑end picture of how data is controlled.

Use case 9: Policy‑driven retention, masking, and hashing

The scenario

New products and tools come online faster than security teams can keep track of them. Sensitive data is copied into sandboxes, BI extracts, and AI feature stores. Policies exist on paper, but enforcement is inconsistent.

A single misconfigured dataset exposes customer information in the wrong environment. If auditors detect the misstep, your organization could face not only fines but also the reputational damage that comes with mishandling customer data.

Atlan in action

Atlan provides centralized, policy‑as‑code control over how sensitive data is handled. Security and compliance teams define retention, masking, and hashing rules once, aligned to regulations and internal policies, and apply them automatically across platforms via connectors and automation workflows.

To remain in compliance, teams can monitor coverage and exceptions through metadata analytics. This helps proactively identify risks and gaps before they can show up in an audit report and incur a penalty.
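A minimal policy-as-code sketch, assuming sensitive columns have already been classified: each rule is defined once per tag and applied to any row, wherever it lands. The tags, rules, and retention period are hypothetical; the keyed hash uses Python’s standard `hmac` and `hashlib` modules.

```python
import hashlib
import hmac
from datetime import date, timedelta

# Hypothetical policy-as-code: one rule per classification tag, defined once.
POLICIES = {
    "PII.email": {"action": "hash"},
    "PII.phone": {"action": "mask", "keep_last": 4},
    "PII.ssn": {"action": "redact"},
}
RETENTION_DAYS = 365  # hypothetical retention period for customer records

# Hypothetical output of automated classification: column name -> sensitivity tag.
COLUMN_TAGS = {"email": "PII.email", "phone": "PII.phone", "ssn": "PII.ssn"}


def apply_rule(value: str, rule: dict, key: bytes) -> str:
    """Transform a single value according to the rule attached to its tag."""
    action = rule["action"]
    if action == "hash":
        # Keyed HMAC-SHA256: equal inputs give equal tokens, so joins still work.
        return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    if action == "mask":
        keep = rule["keep_last"]
        if len(value) <= keep:
            return "*" * len(value)  # too short to reveal anything safely
        return "*" * (len(value) - keep) + value[-keep:]
    if action == "redact":
        return "[REDACTED]"
    raise ValueError(f"unknown action: {action}")


def protect_row(row: dict, key: bytes) -> dict:
    """Apply the matching policy to every tagged column; untagged columns pass through."""
    return {
        col: apply_rule(val, POLICIES[COLUMN_TAGS[col]], key) if col in COLUMN_TAGS else val
        for col, val in row.items()
    }


def within_retention(created: date, today: date) -> bool:
    """True if a record is still inside its retention window and may be kept."""
    return today - created <= timedelta(days=RETENTION_DAYS)


key = b"demo-key"  # in practice this secret would come from a key manager
protected = protect_row(
    {"id": "42", "email": "ana@example.com", "phone": "555-867-5309", "ssn": "123-45-6789"},
    key,
)
```

Using a keyed hash rather than a plain one is a design choice: equal emails still produce equal tokens, but someone without the key cannot rebuild the mapping by hashing a list of known addresses.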

What changes

- Security and compliance teams get consistent, automated enforcement instead of manual policing.

- The organization reduces both the probability and impact of data exposure.

- Business teams can still move quickly, because controls travel with the data.

Use case 10: Automated data access control

The scenario

Ask any business user, and you’ll hear a familiar story: “It took three weeks to get access to that data, so we just downloaded a CSV from somewhere else.”

Shadow IT grows not because people dislike governance, but because they can’t wait. Governance is seen as a blocker, so teams work around it — increasing risk for the entire organization.

Atlan in action

Atlan makes governed access the fastest path, not the slowest. With policy‑based access controls embedded in the tools people already use, and approval workflows that route to the right owners with clear context on why access is needed, governance is embedded and seamless.

Once access is granted, policies are automatically applied (row-, column-, and attribute‑based) with full audit trails, so security and compliance teams have a clear view of who has accessed what data. And all of this happens in real time – no waiting weeks for access or finding risky workarounds.
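To show how row-, column-, and attribute-based rules can combine, here is a hypothetical sketch in which a user’s department and region decide which rows they see, their clearance decides which columns they see, and every read is appended to an audit log. The `User` attributes, policy rules, and data are illustrative assumptions, not Atlan’s policy model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """Hypothetical requester; its attributes drive the policy decisions below."""
    name: str
    department: str
    region: str
    clearance: str  # hypothetical levels: "standard" or "pii"


PII_COLUMNS = {"email", "phone"}  # hypothetical columns classified as PII
AUDIT_LOG: list[tuple[str, str, int]] = []  # (user, table, rows returned)


def authorized_view(user: User, table: str, rows: list[dict]) -> list[dict]:
    """Return only the rows and columns this user may see, and record the read."""
    # Row-level rule: Finance sees every region; everyone else sees only their own.
    if user.department == "Finance":
        visible = rows
    else:
        visible = [r for r in rows if r["region"] == user.region]
    # Attribute-based column rule: without PII clearance, PII columns are dropped.
    hidden = set() if user.clearance == "pii" else PII_COLUMNS
    result = [{k: v for k, v in r.items() if k not in hidden} for r in visible]
    # Audit trail: who read which table and how many rows came back.
    AUDIT_LOG.append((user.name, table, len(result)))
    return result


rows = [
    {"customer_id": 1, "region": "EMEA", "email": "a@example.com", "phone": "111", "ltv": 900},
    {"customer_id": 2, "region": "AMER", "email": "b@example.com", "phone": "222", "ltv": 400},
]
view = authorized_view(User("dana", "Marketing", "EMEA", "standard"), "customers", rows)
```

In this example, a Marketing analyst in EMEA with standard clearance sees only the EMEA row, without the `email` and `phone` columns, and the read is logged as `("dana", "customers", 1)`.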

What changes

- Time‑to‑access reduces from weeks to minutes.

- Users stop bypassing controls because the safe path is also the easiest.

- Governance stops being a gate and starts operating as an enabler.

The supply side: Building the unified data & AI context graph

Everything we’ve covered so far falls on the demand side of context: the problems your stakeholders feel and the use cases they’ll fund. The supply side is the data and AI context graph that makes those use cases repeatable.

What is a context graph?

Most catalogs stop at “what exists where.” Traditional knowledge graphs stop at “what things are.” A context graph goes further than both.

The context graph unifies technical, business, operational, and governance metadata – including definitions, lineage, usage, and policies – into a single active layer. It is a living system that encodes how a company thinks, decides, and acts, including:

- How data is defined (business terms, metrics, policies)

- How it is produced (pipelines, models, transformations)

- How it is used (dashboards, queries, AI applications, users)

- How it is governed (classifications, access policies, quality checks)

In Atlan, this graph is powered by three core elements:

- Active Metadata Platform – The engine that continuously ingests, unifies, and enriches metadata from your stack.

- Metadata Lakehouse – An Iceberg‑native, queryable context store that separates storage and compute for metadata analytics and AI‑scale workloads.

- Context Layer – The outcome the previous two pieces deliver: a unified layer of business and governance context surfaced directly in human and AI workflows.
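As a rough illustration of the four dimensions above, a context graph can be modeled as typed edges from an asset to its definitions, producers, consumers, and policies. This is a hypothetical in-memory sketch with made-up relation and node names; a production context graph lives in a persistent, queryable store rather than a Python dictionary.

```python
from collections import defaultdict

# Hypothetical relation names, one per dimension: defined_by, produced_by, used_by, governed_by.


class ContextGraph:
    """Minimal in-memory context graph: asset -> list of (relation, target) edges."""

    def __init__(self) -> None:
        self.edges = defaultdict(list)  # asset -> [(relation, target), ...]

    def add(self, source: str, relation: str, target: str) -> None:
        self.edges[source].append((relation, target))

    def context_for(self, asset: str) -> dict[str, list[str]]:
        """Group everything directly connected to an asset by relation type."""
        grouped = defaultdict(list)
        for relation, target in self.edges.get(asset, []):
            grouped[relation].append(target)
        return dict(grouped)


g = ContextGraph()
g.add("fct_revenue", "defined_by", "glossary:Net Revenue")
g.add("fct_revenue", "produced_by", "pipeline:dbt fct_revenue")
g.add("fct_revenue", "used_by", "dashboard:CEO Weekly")
g.add("fct_revenue", "used_by", "agent:Talk to Data")
g.add("fct_revenue", "governed_by", "policy:Finance Confidential")
```

An AI agent asking about `fct_revenue` can then retrieve its definition, producer, consumers, and governing policy in one lookup with `g.context_for("fct_revenue")`, instead of inferring them from raw tables.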

Two foundational supply‑side use cases

Unlike the demand side, where context powers outcomes, the supply side is where context is produced and distributed. Here, Atlan focuses on two foundational use cases: building trustworthy business context and delivering compliance context.

To build trustworthy business context, Atlan combines AI‑driven suggestions with human stewardship to define, certify, and connect the core elements of meaning in your organization — glossary terms, metrics, data products, and the documentation that surrounds them.

Over time, this creates a single, up-to-date context layer that every user can rely on. Executives see fewer conflicting metrics, analysts stop rebuilding definitions from scratch, and AI systems hallucinate less because they’re grounded in shared, governed semantics instead of raw tables.

On the compliance side, the same graph becomes the delivery system for control. Atlan automatically discovers sensitive data, helps teams generate and refine policies with AI assistance, and uses no‑code workflows to push those signals everywhere they’re needed.

The result is continuous enforcement of the right controls — across warehouses, BI tools, and AI platforms — without governance teams having to manually chase every copy of a dataset or every new AI use case.

In practice, this automates away documentation and reactive governance so teams focus on the 10–20% of work that truly requires human judgment.

Executing on the framework

The value of any framework is in how you use it. Here’s how we see executive teams operationalizing Atlan’s supply–demand model.

Step 1: Anchor on value drivers by persona

Start with what the key players in your organization stand to gain from context:

- Executives: Trusted AI and metrics, provable governance ROI.

- Engineering leaders: Fewer incidents, faster shipping, lower cloud spend.

- Governance leaders: Reliable audits, clear policy enforcement, less manual toil.

- Analytics and AI leaders: Less time hunting for data, more time building products.

Use the Pain → Use case → Value driver mapping to identify 3–5 high‑value, low‑friction use cases across revenue, cost, and risk. Those become your first “demand‑side” bets.

Step 2: Map demand to the context supply chain

For each prioritized use case, ask:

- What context does it need? (definitions, lineage, sensitivity, usage, owners)

- Where does that context live today — and where is it missing?

- Which systems must produce or consume this context?

Then design a context supply chain:

- Which connectors do we light up first?

- Which automations and playbooks will keep context fresh?

- Where do we embed context (BI, AI tools, collaboration tools) so people and agents can actually use it?

Step 3: Launch with measurable success metrics

Before you execute, establish baselines and define success metrics for each use case. Examples include:

- Reduction in conflicting KPI reports

- Reduction in frequency of data incidents and resolution time

- Reduction in audit prep time and number of ad‑hoc evidence requests

- Reduction in cloud/platform spend from estate optimization

- Increase in self‑service adoption and AI accuracy for key use cases

Align these metrics with your broader AI and data strategy so you can show progress to the board and peers.

Step 4: Mature into an AI‑ready data estate

As your context graph matures, you unlock second‑order use cases, such as:

- Conversational access to governed data for every role

- Agentic workflows where AI agents act with the same context humans rely on

- Advanced metadata analytics that optimize not just cost and risk, but AI performance and reliability

At that point, the question shifts from “Can we ship this AI project?” to “Which new business problems can we tackle now that context is in place?”

What “good” looks like with Atlan’s use case framework

In organizations that embrace this framework, a few patterns emerge:

- Every major initiative is backed by clear business outcomes and KPIs, named demand‑side use cases, and a visible context supply chain that explains how the work gets done.

- Business users self‑serve trusted data and AI experiences instead of waiting in ticket queues.

- Engineers and data scientists spend more time building value and less time reverse‑engineering lineage or answering “where did this number come from?”

- Governance teams can prove compliance and AI responsibility on demand, without pausing the business.

Most importantly, data and AI stop being a leap of faith, and instead become a disciplined, measurable capability, powered by context.

If you’re a CIO, CDO, or CDAO looking to move AI from pilots to production, the invitation is simple:

- Identify 2–3 strategic initiatives

- Map them onto this supply–demand framework

- Stand up a context graph pilot with Atlan that proves value in weeks, not quarters

From there, the rest of your roadmap starts to look a lot clearer — because the missing piece, context, is finally in place.


Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.
