Atlan vs DataHub

Choose the Metadata Platform That Operationalizes Context Across the Enterprise

Trusted by companies with more than $10T in enterprise value

Affirm chose Atlan as a partner to power an extensible governance platform

Atlan vs DataHub: Key differences that deliver better outcomes

Platform architecture & extensibility


| What Teams Need | Atlan | DataHub |
|---|---|---|
| Metadata lakehouse (core differentiator) | Iceberg-Native Lakehouse - Separated storage and compute. Optimized for multi-cloud and AI workloads | OSS Platform - Built on GraphQL/OpenLineage. Scale and infrastructure are self-managed |
| App framework (core differentiator) | App Exchange - Developer-first tools for AI apps and connectors. 20+ external partners already building | No Native Framework - Relies on OSS scripts. Extensions require manual engineering/PRs |
| MetaModel | Flexible, No-Code - UI for admins to define custom metadata attributes | Structured, Schema-First - Requires developers to define new "Aspects" using PDL files |
| Event-driven architecture | CDC and webhooks with Kafka for dynamic metadata capture | Runs on a Kafka-based Metadata Change Log (MCL) |

Why data and AI-forward enterprises choose Atlan over DataHub

Data leaders chose Atlan for tangible business outcomes and superior product fit.

Build or buy? With Atlan, you don't have to choose.

Open-source tools like DataHub offer flexibility but require heavy engineering resources to secure, maintain, and scale.

Managed platforms provide reliability but limit customization and control.

Atlan brings the best of both worlds

- The hybrid advantage: Atlan's platform is open by design, with robust APIs and a developer SDK, an Iceberg-native metadata lakehouse, and a developer-first App Exchange for easy extensibility.

- Focus on outcomes: Atlan manages the burden of infrastructure, upgrades, and uptime. This frees your team to concentrate on more data projects and AI innovation.

- Proven at scale: Atlan is trusted by enterprises with more than $10 trillion in enterprise value, including Affirm, GM, and Mastercard, which shows that openness and enterprise scale can coexist.

- Industry recognition: Validated by leading analysts and partners including Forrester, Gartner, and Snowflake for innovation and customer impact.

Frequently asked questions: Atlan vs DataHub

Does Atlan support Bring Your Own Cloud (BYOC) or on-prem deployments?

DataHub requires teams to host and maintain their own infrastructure or rely on managed OSS offerings, which increases operational overhead.

How does Atlan's Metadata Lakehouse differ from DataHub's backend architecture?

DataHub operates as an OSS metadata platform built on open standards like GraphQL and OpenLineage; scale and extensions are self-managed rather than lakehouse-based.

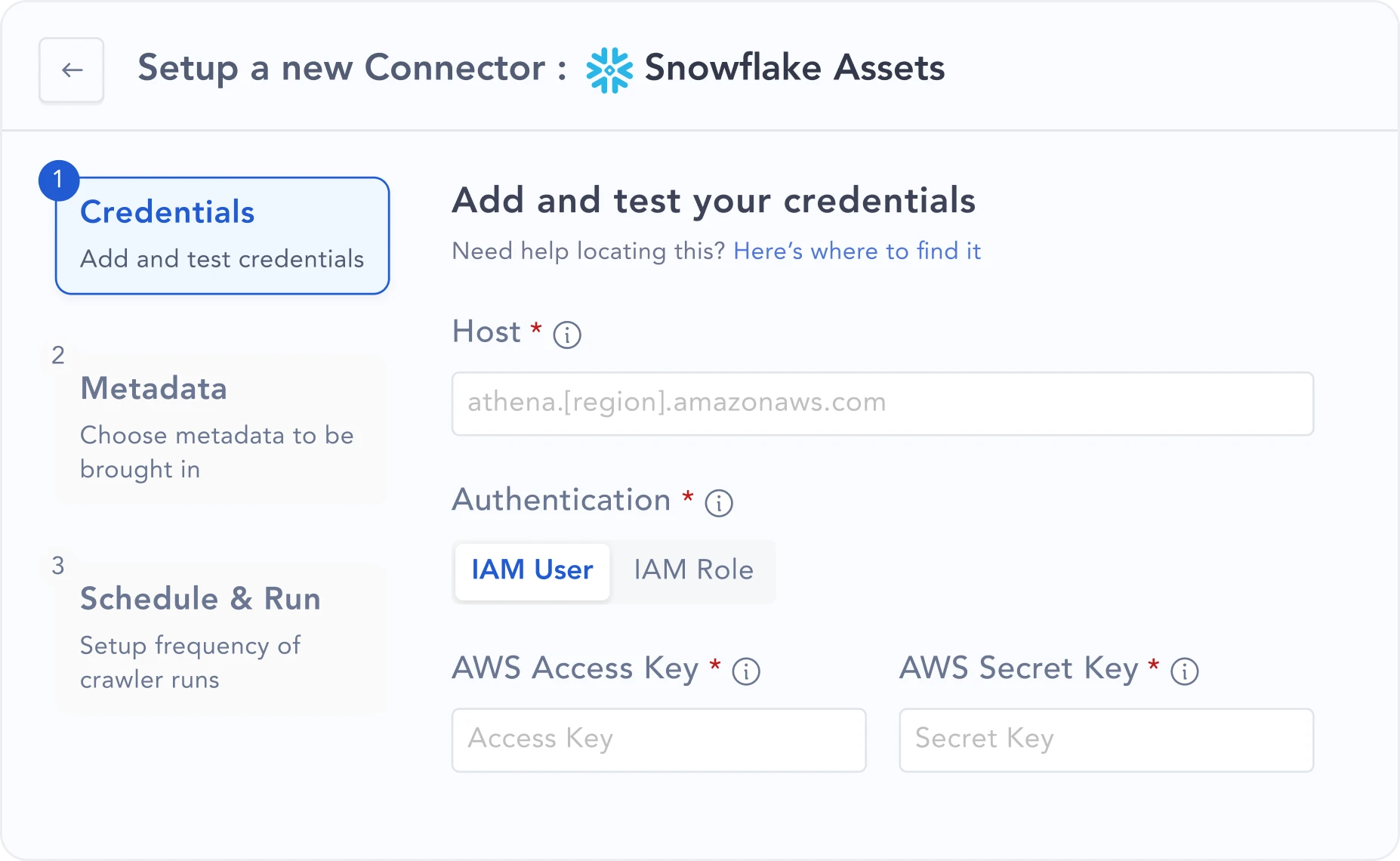

Can Atlan integrate directly with Databricks, Snowflake, and dbt?

How extensible is Atlan's API and SDK framework?

Does Atlan provide developer tooling similar to OSS frameworks?

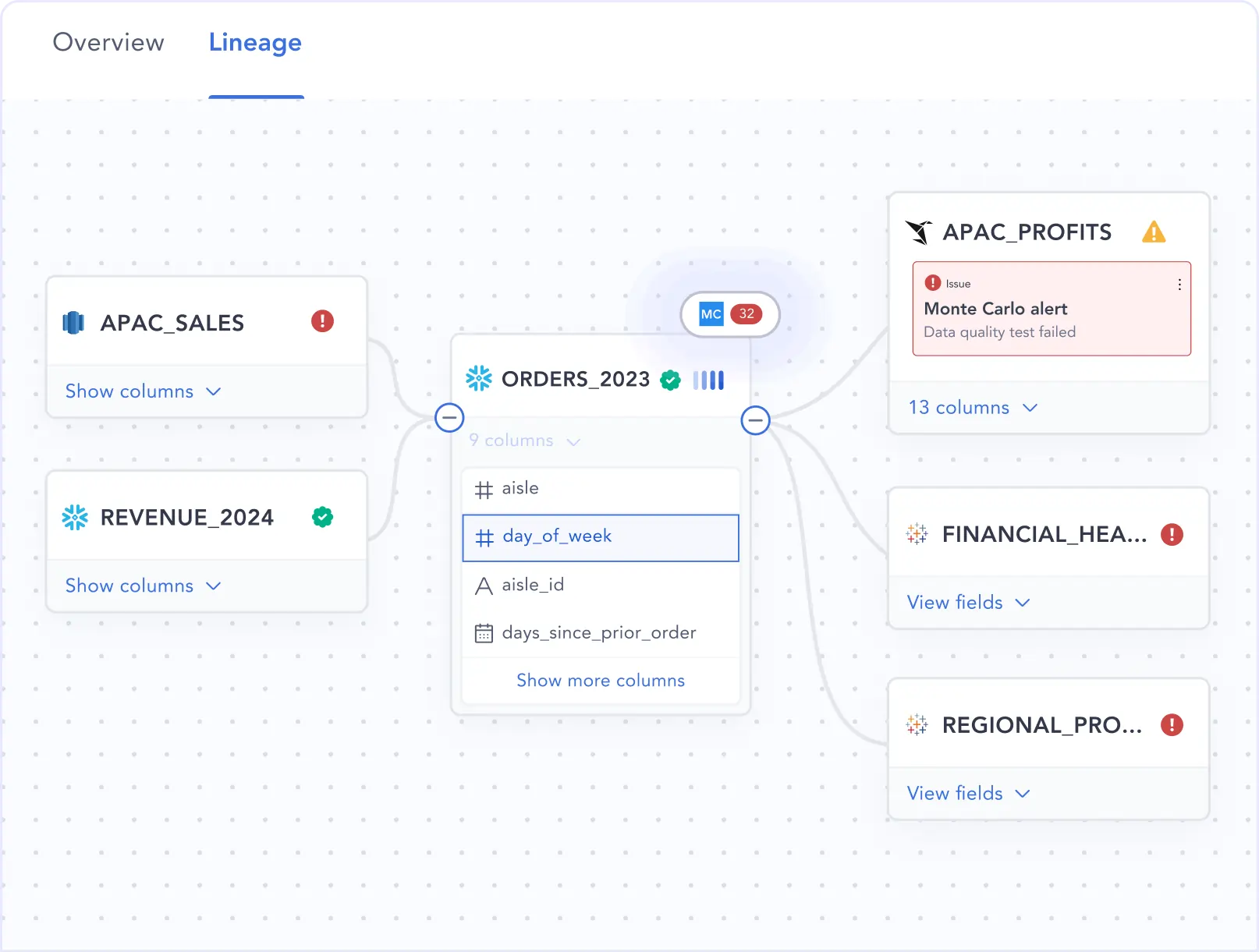

How does Atlan handle automation and lineage at scale?

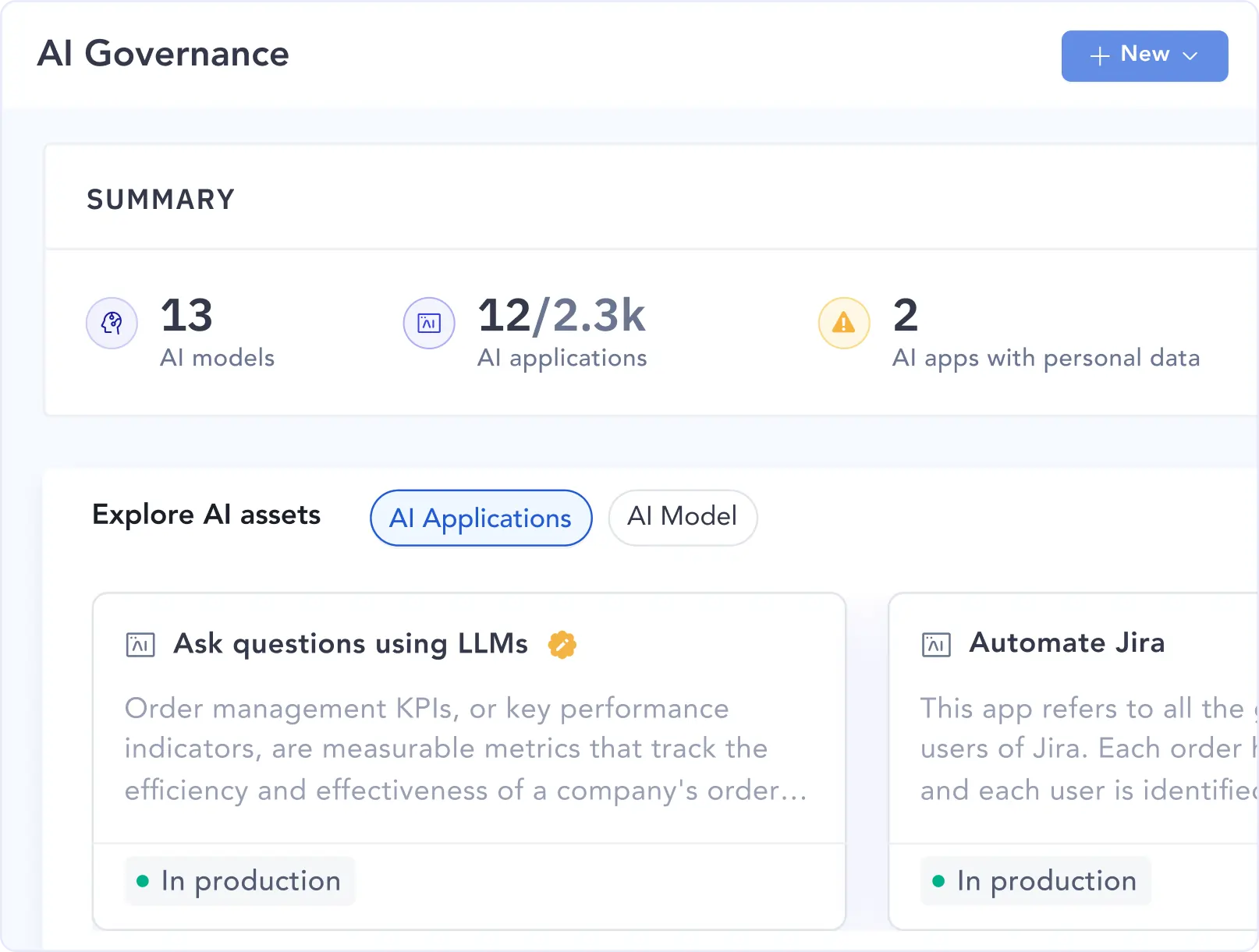

How is Atlan optimized for AI and LLM use cases?

Can Atlan manage unstructured data or logs?

How does Atlan support metadata versioning and change tracking?

What kind of scalability can Atlan handle?

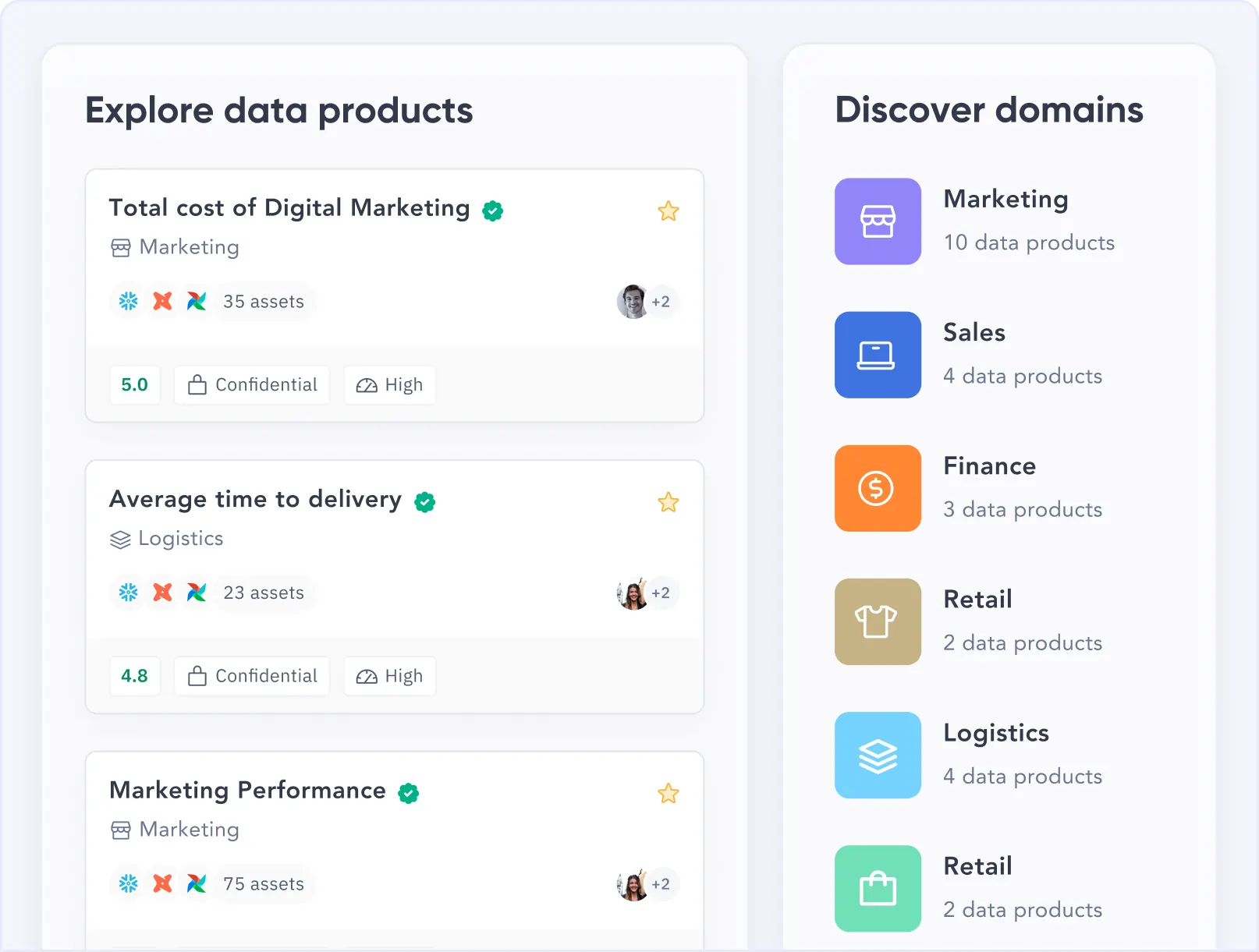

Does Atlan offer support for multi-tenant or domain-first data architectures?

Is Atlan secure enough for regulated industries?

DataHub's OSS model requires manual setup for comparable controls.