Everyone’s Building with AI. But How Many Are Actually Seeing Value?

The AI Value Chasm Beneath the Curve

Last Updated on: May 29th, 2025 | 11 min read

A Personal Origin Story: When Data Changed Everything

I’ve seen data unlock progress that once felt out of reach. At 21, I co-founded a data intelligence company that worked with governments and nonprofits, places where decisions were often made from instinct, not information. Even a clean spreadsheet felt like a breakthrough. But when we could map what we had, layer it, and make it visible, something shifted.

That’s when I saw how the right data, in the right hands, could change everything. We rerouted resources to impact 50 million women living below the poverty line. We worked with the UN to accelerate the SDGs. I was hooked.

The second time I felt that kind of awe? In 2016, I witnessed firsthand the rise of the modern data stack: from adopting Airflow early, to the open-source waves from Airbnb and Facebook (Knowledge Repo, Presto, and beyond), to the rise of Snowflake and its surrounding ecosystem. That awe led to founding Atlan.

But what’s happening with AI is… something else.

AI Feels Like Magic. But There’s a Catch.

Bob Muglia, the former CEO of Snowflake, told me, “I’ve never seen anything like it in my career.” I agree. This isn’t just another trend; it’s the closest thing to electricity we’ve seen. But amidst the buzz, I keep hearing mixed signals. I speak with leaders across startups and Fortune 100s, and the pattern is consistent: momentum on the surface, molasses underneath.

AI is everywhere — boardrooms, conferences, bake-offs, demos. In 2023, most AI strategies were still on paper. In 2025, they’re real. Every company has a copilot. And yet…

Under all that motion, I keep seeing something strange — the current’s running, but the gears don’t turn.

If this sounds familiar, you’re not alone. The pilots are slick. The demos are exciting. But when I ask what’s in production — what’s actually delivering value — the rooms get quiet.

This isn’t an isolated experience. The numbers back it up: 49% of organizations say generative AI hasn’t delivered its full potential. While most are still stuck in experimentation, only 26% have developed the capabilities to move beyond proofs of concept — and just 1% have embedded AI into workflows in a meaningful way.

The AI Value Chasm: The Gap Between Hype and Value

I’ve come to think of this as the AI Value Chasm.

The growing gap between how fast we’re building with AI and how little value we’re actually seeing at scale.

So… why? What’s blocking us from translating promise into production? That’s the real question.

Over the past six months, my team and I have spoken to 100+ data and AI leaders trying to understand why. Three themes come up again and again:

Problem 1: Data Without Context

“We have a thousand AI use cases on the roadmap, but we don’t even know what data we have.” (CDO, Fortune 500 Tech Company)

In a pilot, you can work with what you already know. A clean dataset, a narrow scope, a well-understood system.

But in production, that illusion breaks. You’re dealing with distributed systems, undocumented sources, orphaned pipelines, and dependencies no one tracks. The context isn’t stored in one place. It’s scattered across people, tools, and memory. You can’t govern what you can’t inventory.

Problem 2: AI Doesn’t Understand Your Business

“When someone says TAM here, it means Total Addressable Market. But online, it might mean something else entirely. How do I train my AI on that?” (CIO, Investment Management Firm)

Inside most organizations, meaning lives in silos. “Customer” means one thing in Product, another in Finance, and something else entirely in GTM dashboards. “Revenue” shifts depending on context. Even “active user” can mean five different things. Everyone assumes their meaning is the default. No one owns alignment.

That might be manageable in reporting. But AI doesn’t pause to ask what you meant. It acts on what it sees. And when meaning is fractured, the output sounds confident and lands wrong, which ultimately breaks trust.

Problem 3: Governance That’s Too Slow for AI

“I’m okay with a chatbot using payroll data for HR, but I don’t want anything else touching it. How do I control that?” (Chief Data & AI Officer, Public Software Company)

Governance wasn’t built for real-time systems that act. Agents don’t just look at data. They take action, trigger workflows, and generate outputs that move downstream.

Yet, most organizations still rely on static policies and manual reviews. There’s no real-time layer to enforce intent or adapt to how data is actually being used.

That’s not just a slowdown. It’s a risk — one that gets harder to spot the faster you move.

Crossing the AI Value Chasm

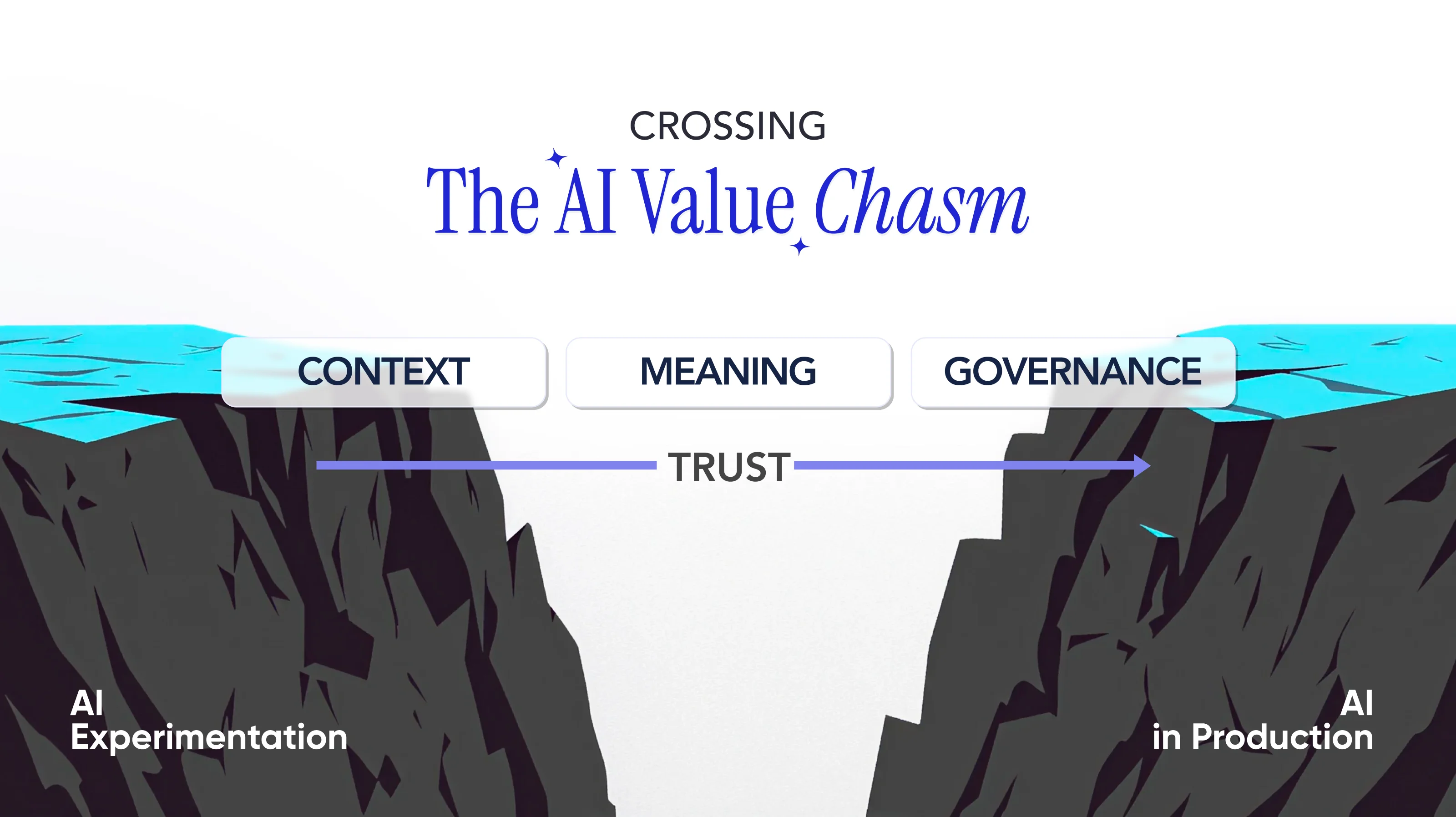

So how do we actually cross the AI Value Chasm? Not with more pilots, demos, or LLM integrations.

We cross it by building a bridge that addresses the three structural hurdles preventing AI from scaling in the enterprise: data context, business meaning, and governance.

I like to think of this as the Trust Bridge — the foundational layer required to move from AI experimentation to AI at scale.

1. Data Context: From Fragmentation to Foundation

Most enterprises still operate in a fragmented, siloed, and poorly documented data and AI landscape. In pilots, teams can handpick clean, well-understood datasets. In production, that luxury disappears.

To operationalize AI, teams need consistent, reliable answers to questions like:

- What data is available for this use case?

- Where did it come from?

- Who owns it?

- What does this table or column actually mean?

- Can I trust this data? Has it been validated?

- What’s off-limits — and why?

Getting to these answers isn’t trivial. It requires two foundational investments:

- First, enabling AI to discover a heterogeneous data estate — across warehouses, lakes, APIs, files, and internal tools.

- Second, helping it understand that estate — via metadata, ownership, usage patterns, semantics, and end-to-end lineage.

Companies are beginning to recognize this. In fact, Gartner reported that metadata management was the fastest-growing submarket in the data management software (DMS) market, a clear signal that metadata is no longer peripheral. It’s becoming critical infrastructure for AI readiness.
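To make the questions above a little more concrete, here is a minimal sketch of what a catalog record that answers them might look like. Everything in it is an assumption for illustration: `AssetRecord`, its field names, and `readiness_gaps` are hypothetical and do not describe any particular product's schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AssetRecord:
    """Hypothetical catalog entry; field names are illustrative only."""
    name: str
    source_system: str                        # where did it come from?
    owner: Optional[str] = None               # who owns it?
    description: Optional[str] = None         # what does it actually mean?
    validated: bool = False                   # can I trust it?
    restricted_reason: Optional[str] = None   # what's off-limits, and why?
    upstream: List[str] = field(default_factory=list)  # lineage


def readiness_gaps(asset: AssetRecord) -> List[str]:
    """Return the context questions this record cannot answer yet."""
    gaps = []
    if not asset.owner:
        gaps.append("owner")
    if not asset.description:
        gaps.append("description")
    if not asset.validated:
        gaps.append("validation")
    if not asset.upstream:
        gaps.append("lineage")
    return gaps


orders = AssetRecord(
    name="analytics.orders",
    source_system="warehouse",
    owner="sales-data-team",
    description="One row per confirmed customer order.",
)
print(readiness_gaps(orders))  # ['validation', 'lineage']
```

The point of the sketch is the gap list, not the schema: an AI use case can be gated on whether the assets it needs can answer these questions at all.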

2. Business Meaning: The Hidden Alignment Problem

The second hurdle is semantic alignment.

AI systems today may understand language — but they don’t understand your business.

Terms like “Total Addressable Market,” “customer,” or “revenue” sound simple, but they often mean very different things across functions and teams:

- When Product says “active user,” do they mean logins, engagement, or adoption?

- Does Finance include sales comp in “customer acquisition cost”?

- Are freemium signups still classified as “customers” in reporting?

This isn’t just a technical issue. It’s a shared meaning problem. Most companies today have data brawls playing out in executive rooms — where two teams pull up the same dashboard and argue over what the number actually means.

If we as humans can’t agree on what a metric means, how do we expect AI to understand it?
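One way to picture the problem is a glossary that refuses to guess. The sketch below is a hypothetical illustration, not a real semantic layer: the `GLOSSARY` entries, domain names, and definitions are invented, and `resolve` simply raises an error when a term has several meanings and no domain was supplied.

```python
class AmbiguousTermError(Exception):
    """Raised when a term has several definitions and no domain was given."""


# Hypothetical glossary keyed by (term, domain); all entries are invented.
GLOSSARY = {
    ("active user", "product"): "Logged in at least once in the last 30 days.",
    ("active user", "finance"): "Holds a paid seat in the current billing period.",
    ("tam", "strategy"): "Total Addressable Market.",
}


def resolve(term, domain=None):
    """Look up a business term, failing loudly instead of picking a meaning."""
    key = term.strip().lower()
    matches = {d: text for (t, d), text in GLOSSARY.items() if t == key}
    if not matches:
        raise KeyError(f"No definition recorded for {term!r}")
    if domain is not None:
        # Raises KeyError if this domain has not defined the term.
        return matches[domain.strip().lower()]
    if len(matches) == 1:
        return next(iter(matches.values()))
    raise AmbiguousTermError(
        f"{term!r} has {len(matches)} definitions; specify one of {sorted(matches)}"
    )


print(resolve("TAM"))                             # Total Addressable Market.
print(resolve("active user", domain="finance"))   # Holds a paid seat in the current billing period.
```

Calling `resolve("active user")` with no domain raises `AmbiguousTermError`. The code can flag the disagreement, but someone still has to decide which definition wins.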

I’ve been excited about semantic layers since the concept became mainstream (read: hyped) in 2022. Now, with ecosystems like Snowflake building their own, I’m genuinely optimistic. But here’s the catch: no semantic layer can fix a coordination problem on its own.

Solving this requires a human collaboration layer — a way to bring business stakeholders into the process early and define shared meaning from the outset.

One VP of Data recently shared how they’re reframing their entire data operating model — making reusability and business meaning foundational to how their teams define, build, and ship data. At the center of this shift is the data product: a reusable, AI-ready asset that captures not just data, but the context, ownership, and business logic behind it.

They’ve moved beyond scattered definitions and reactive governance. They’ve adopted what we call a Data Product Operating Model — one that embeds meaning across the full lifecycle: from design, to build, to publish.

- In the design phase, they’ve introduced collaborative canvases that bring business stakeholders and data teams together at the very beginning — to define intent, context, and shared semantics.

- In development, they’ve adopted data contracts to embed context, meaning, and governance directly into engineering workflows — shifting left and treating this metadata as a first-class part of how data is created.

- At publish, they’re launching data and AI products with embedded trust signals — quality scores, certification status, and clear metadata — so consumers can immediately understand what’s ready, what’s reliable, and how it should be used.

It’s a full-stack approach to business meaning — one that treats clarity and alignment as design problems, not downstream fixes.
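As a rough illustration of the build and publish steps described above, the sketch below checks a data product against a contract and emits trust signals. It is a simplified assumption of how such a gate could work: `DataContract`, `publish_check`, the field names, and the quality threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DataContract:
    """Hypothetical contract agreed at design time; fields are illustrative."""
    product: str
    owner: str
    column_meanings: Dict[str, str]   # business definition per column
    min_quality_score: float          # threshold the teams agreed on


def publish_check(contract: DataContract, actual_columns: List[str],
                  quality_score: float) -> dict:
    """Compute trust signals for a data product; does not publish anything."""
    undocumented = sorted(set(actual_columns) - set(contract.column_meanings))
    meets_quality = quality_score >= contract.min_quality_score
    return {
        "product": contract.product,
        "owner": contract.owner,
        "quality_score": quality_score,
        "undocumented_columns": undocumented,
        "certified": meets_quality and not undocumented,
    }


contract = DataContract(
    product="customer_360",
    owner="growth-data",
    column_meanings={
        "customer_id": "Stable identifier for a paying account.",
        "is_active": "True if the account logged in during the last 30 days.",
    },
    min_quality_score=0.95,
)
signals = publish_check(contract, ["customer_id", "is_active", "segment"], 0.97)
print(signals["certified"], signals["undocumented_columns"])  # False ['segment']
```

Here the product passes the quality threshold but is not certified, because one column shipped without an agreed business meaning.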

3. Risk Governance

The third challenge is governance.

Most governance today is designed for a slower world — one of dashboards, static reports, and manual reviews. But AI systems don’t wait. They act. They recommend. They trigger workflows.

To scale AI responsibly, we need more than policy documents.

We need systems that enforce intent in real time, across every layer of the data and AI estate. That means answering questions like:

- What are our policies for data and AI use?

- Who can access what — and under what context?

- Which use cases are permitted, restricted, or prohibited?

- How do we apply these rules automatically, as the work is happening?
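The payroll example from earlier gives a feel for what applying rules "as the work is happening" could mean. The sketch below is a minimal, default-deny check under stated assumptions: the `Rule` type, the `ALLOWED` set, and the agent and purpose names are hypothetical, and a real system would evaluate this inside the query or orchestration layer rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical permission: which agent may use which data, and why."""
    dataset: str
    agent: str
    purpose: str


# Hypothetical allow-list. Anything not listed here is denied.
ALLOWED = {
    Rule(dataset="payroll", agent="hr_chatbot", purpose="hr_support"),
}


def is_permitted(dataset: str, agent: str, purpose: str) -> bool:
    """Default-deny check, evaluated at request time."""
    return Rule(dataset, agent, purpose) in ALLOWED


print(is_permitted("payroll", "hr_chatbot", "hr_support"))     # True
print(is_permitted("payroll", "sales_copilot", "forecasting"))  # False
print(is_permitted("payroll", "hr_chatbot", "marketing"))       # False
```

The third call is the interesting one: the same agent is refused when the purpose changes, which is the kind of context a static access list does not capture.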

Governance can’t be an afterthought. It needs to be designed in — not layered on. It has to move from reactive to proactive.

And with the EU AI Act already in force and its obligations phasing in, passive governance is no longer an option.

This shift is driving two significant changes in how organizations approach governance:

- Shift Left: Governance is moving earlier into the development lifecycle. Data contracts, access rules, and quality expectations are defined upfront, well before deployment, so they become integrated constraints, not reactive checks.

- Embedded and Invisible: Rather than relying on after-the-fact approvals or manual interventions, governance rules are increasingly built directly into the data architecture and operational systems themselves. Policies defined once can now automatically propagate across the entire data estate through centralized metadata management systems. By leveraging bi-directional synchronization, companies are able to consistently enforce governance rules at the actual point of use: within their query engines, compute layers, and orchestration frameworks.

This evolution is reflected in the growing role of data & AI governance engineering within organizations. We’re seeing the rise of dedicated governance engineering teams—focused on designing, embedding, and maintaining these real-time control systems.
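The idea of defining a policy once and letting it follow the data can be sketched as a walk over lineage. This is a deliberately simplified, one-directional illustration: the `LINEAGE` graph and asset names are invented, and the bi-directional synchronization with query engines mentioned above is out of scope here.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets derived from it.
LINEAGE = {
    "raw.payroll": ["staging.payroll_clean"],
    "staging.payroll_clean": ["marts.headcount_cost", "marts.comp_bands"],
    "raw.web_events": ["marts.sessions"],
}


def propagate_tag(source, lineage):
    """Return every asset that inherits a tag placed on `source`."""
    tagged = {source}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in tagged:   # guard against revisiting shared children
                tagged.add(child)
                queue.append(child)
    return tagged


sensitive = propagate_tag("raw.payroll", LINEAGE)
print(sorted(sensitive))
# ['marts.comp_bands', 'marts.headcount_cost', 'raw.payroll', 'staging.payroll_clean']
```

Tagging the raw payroll table once marks everything built from it, while unrelated assets such as `marts.sessions` are left alone.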

Closing Thoughts: It’s Time for the Real Work to Begin

In November 2022, ChatGPT captured global attention and sparked an unprecedented wave of AI experimentation. But beneath the excitement lies a harder truth: we’re still far from realizing AI’s full value. The AI Value Chasm isn’t just a temporary setback; it’s a structural gap. While companies rush to adopt AI, few have invested in the groundwork needed to scale it. The result is a growing divide between ambitious promises and outcomes.

Closing this gap won’t happen on its own. It requires intentional, strategic investments—not just in technology, but in the very ways humans collaborate with AI. Eventually, today’s hype cycle will fade, and the flashy experiments will give way to rigorous, methodical, foundational work. This is the quiet, essential effort of embedding context, aligning meaning, and engineering trust directly into our AI systems.

Organizations that embrace this challenge and tackle the structural roots of the AI Value Chasm won’t just succeed with AI; they’ll define how modern businesses operate.

And the ones that don’t? The writing’s already on the wall.

I write weekly on building AI-native companies, active metadata, data culture, and our learnings building Atlan in my newsletter, Metadata Weekly.

