For decades, data teams have been the translators between business questions and data systems. Every insight began with a question — “Why did revenue drop?” — and required someone fluent in both business and SQL to find the answer. Then, there were the tools, handoffs, dashboards, and meetings needed to answer the next question: “What should we do next?” It was time-consuming, subjective, and unreliable.

Today, a new teammate is emerging to close that gap and accelerate insights: the AI Analyst. It’s not a dashboard or a chatbot. It’s a conversational layer that lets you talk to your data and find out what happened, why it happened, and what to do next. It’s the holy grail of analytics: the ability to talk to data and know how to act on it – no disjointed tools, static dashboards, or extra meetings.

With it comes enormous buzz. Industry leaders have launched agentic features, like Snowflake Cortex and Databricks AI/BI Genie. Companies are lining up – and reallocating budgets – to get their hands on AI Analysts. Talking to an AI agent about your data promises to change the game for enterprise analytics – and yet, it’s not working. Research from MIT Sloan found that 95% of AI pilots fail in production, creating what I call the AI value chasm. So we started asking: why?

To find out, we tapped into our most AI-forward customers – rolling up our sleeves and working alongside them to get to the blockers of AI use cases. What we repeatedly saw in Atlan AI Labs workshops was that talking to data was one thing – but being understood was another. AI systems couldn’t fully understand and explain what happened, why it happened, and what to do next because they lacked the context needed to do so – the definitions, metrics, unwritten rules, and judgment calls that live in people’s heads.

The space between what AI Analysts know and what humans know – but may not have documented – is what we call the context gap. And it’s why, despite the hype, most AI Analysts are failing in production.

But we’ve also seen what happens when the context gap is closed. Working with customers like Workday to design real-world test cases with deep context and structured iteration, we’ve achieved a 5x increase in response accuracy. It’s proof that being understood by AI, not just talking to it, is the key to tapping into the potential of enterprise-scale AI Analysts.

From What Happened to What Next

AI Analysts aren’t replacing human analysts; they’re accelerating how humans reason with data, so they can make smarter decisions with it. Understanding what happened, why it happened, and eventually, what to do about it doesn’t happen in a single step – it takes layers of depth, context, and trust.

Stage 1: What Happened — Accuracy and Trust

Every decision needs to start with clarity – without it, you’re feeling your way forward in the dark. So the first job of an AI Analyst is to answer “what” questions with precision:

- “What were our sales last quarter?”

- “Which regions drove the most churn?”

Here, success depends on accuracy — using the right definitions, the right metrics, and the right context to ensure that the AI Analyst speaks the same truth as your dashboards and analysts. Nail this, and trust is born – and trust is the first step toward adoption.

Stage 2: Why It Happened — Exploration and Structured Thinking

Once the AI Analyst has established trust, curiosity deepens. Decision-makers begin to ask why:

- “Why did churn increase?”

- “Why did revenue grow faster in North America?”

At this stage, the AI Analyst becomes a true collaborator — not just reporting numbers, but investigating and exploring. It helps break down hypotheses, connect signals across datasets, and structure the reasoning process.

Now, the AI Analyst is no longer answering questions in isolation; it’s helping humans think.

Stage 3: What Should We Do — Context and Recommendations

Eventually, the AI Analyst can move beyond explanation to suggestion, the earliest form of action. It might say:

- “Churn rose 8% in your APAC segment after the new pricing rollout. In similar cases last year, retention improved when communication campaigns were targeted to inactive users within 7 days.”

This stage relies on deep context — not just about data, but about goals, history, and patterns of decision-making specific to your organization. It’s the most complex element, and the most human — where AI and human analysts work together to turn shared meaning into action.

Moving from what to why to what next is not as simple as flipping a switch; it requires building a system. Each stage requires progress in accuracy, context, and collaboration. And while we’re still in the early phases, it’s clear that we’re onto something big: a new kind of teammate that helps every person in an organization not just access data, but actually reason with it.

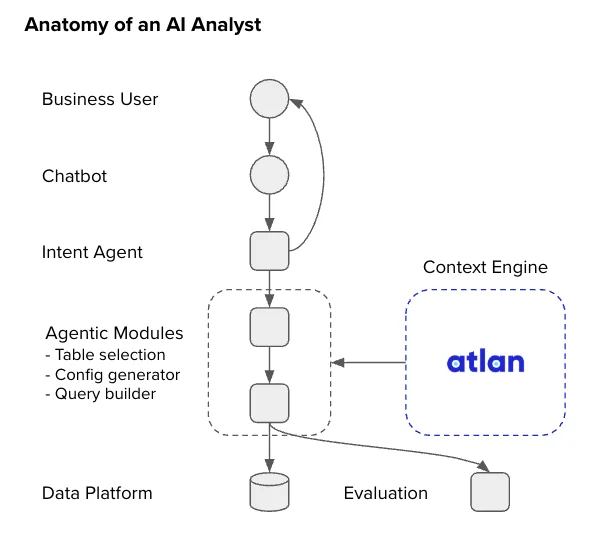

The Anatomy of an AI Analyst

Before an AI Analyst can help you understand why something happened or suggest what to do next, it must first be able to accurately answer what happened. Getting this right every time is the foundation of every decision-making journey.

Accuracy depends on how well the AI Analyst can interpret intent, apply business meaning, and map it to the right data definitions. To see how, consider what happens inside the system when the AI Analyst receives a question like: “What was our sales performance last quarter?”

When you ask that question, a human analyst doesn’t just pull numbers — they clarify what “performance” means, recall the right metric, find where it’s stored, and compute the result. The AI Analyst mirrors that reasoning pattern through a series of steps:

1. Intent

Understanding what the user is asking.

Every question starts with intent – why are we asking the question? What are we trying to find out?

The AI Analyst first interprets the meaning behind the question (“sales performance,” “last quarter,” “by region”) and identifies what the user is trying to achieve. Are they looking for a comparison, a trend, a summary, or something else entirely?

Knowing the meaning behind the words allows the AI Analyst to interpret purpose.

2. Business Definition

Connecting intent to business meaning.

Next, the AI Analyst links the terms in the question to their business definitions. For example, “performance” might mean revenue growth or sales target achievement, depending on the organization.

A crucial step for humans in this stage is ensuring these definitions live in business glossaries or metric stores that describe what each term represents and how it’s used. This ensures that “performance” means the same thing across Finance, Sales, Marketing, and any other business unit.

3. Data Definition

Mapping business meaning to actual data.

With business meaning confirmed, the AI Analyst looks for how it’s defined in the data. If “performance” means revenue growth, it finds where “revenue” is stored (perhaps in the revenue_amount column within a sales_summary table) and how it should be calculated (e.g., SUM(revenue_amount) for the last quarter).

At this point, the AI Analyst knows what to query and where to find it.

4. Response

Generating and validating the answer.

Finally, the AI Analyst runs the reasoning step — retrieving data, applying definitions, and generating an answer that connects back to the user’s original intent. The response isn’t just numbers – it includes context:

- “Sales performance, defined as total revenue, was $5.3M last quarter — a 7% increase over Q1.”

It doesn’t just answer the question; it explains.

The Reasoning Flow, At-a-Glance

User Intent (“sales performance?”) → Business Definition (“performance = revenue”) → Data Definition (“revenue_amount in sales_summary”) → Response (“$5.3M, +7% QoQ”)

This is the anatomy of the AI Analyst: a clear, repeatable reasoning chain that turns natural-language questions into trusted, context-aware answers.

The anatomy of the AI Analyst. Source: Atlan.
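To make the chain concrete, here is a minimal Python sketch of the four steps as plain lookups. It is an illustration under stated assumptions, not how any particular product implements it: the glossary, the data definition, and the toy quarterly rows are hypothetical, and intent detection is reduced to a keyword match where a real system would use a language model.

```python
# Hypothetical glossary: user-facing term -> business definition.
GLOSSARY = {"sales performance": "total revenue"}

# Hypothetical data definitions: business definition -> (table, column, aggregation).
DATA_DEFINITIONS = {"total revenue": ("sales_summary", "revenue_amount", "SUM")}

# Toy rows standing in for the sales_summary table.
TABLES = {
    "sales_summary": [
        {"quarter": "Q1", "revenue_amount": 2_500_000.0},
        {"quarter": "Q1", "revenue_amount": 1_500_000.0},
        {"quarter": "Q2", "revenue_amount": 3_000_000.0},
        {"quarter": "Q2", "revenue_amount": 1_400_000.0},
    ]
}

AGGREGATIONS = {"SUM": sum}


def detect_intent(question: str) -> str:
    """Step 1, intent: a keyword match stands in for LLM intent parsing."""
    q = question.lower()
    term = next((t for t in GLOSSARY if t in q), None)
    if term is None:
        raise ValueError(f"No known business term in {question!r}")
    return term


def answer(question: str, current: str, previous: str) -> str:
    term = detect_intent(question)
    business_def = GLOSSARY[term]                          # Step 2: business definition
    table, column, agg = DATA_DEFINITIONS[business_def]    # Step 3: data definition

    def compute(quarter: str) -> float:                    # Step 4: retrieve and aggregate
        values = [row[column] for row in TABLES[table] if row["quarter"] == quarter]
        return AGGREGATIONS[agg](values)

    now, before = compute(current), compute(previous)
    change = (now - before) / before
    # The response restates the definition it used, not just the number.
    return (f"{term.capitalize()}, defined as {business_def}, was "
            f"${now / 1e6:.1f}M in {current}, a {change:+.0%} change over {previous}.")


print(answer("What was our sales performance last quarter?", current="Q2", previous="Q1"))
```

Here the caller resolves “last quarter” to Q2 and the prior quarter to Q1; in practice that time logic would also come from metadata. Because each step is a separate lookup, a wrong answer can be traced to the step that produced it.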

Building an AI Analyst

Like any AI initiative, building an AI Analyst is best done by starting small. Focus on a single domain and answering the question “what happened?” This allows you to control the scope, iterate faster, and reuse your structure for subsequent deployments.

In our experience working with Atlan customers and through Atlan AI Labs, the rest of the process is best done following these steps:

Step 1. Curate the Right Metadata

The foundation of an AI Analyst isn’t the model; it’s the metadata that defines your data’s meaning. Focus areas include:

- Column descriptions: Add clear, concise explanations of what each column represents and how it’s calculated. Examples: csat_score → “Average satisfaction rating from post-support survey, 1–5 scale.” and net_revenue → “Revenue minus refunds and discounts, in USD.”

- Filters and enumerations: Define categorical values and how they should be grouped. Example: region → “Geographical grouping; ‘EU’ may include Western Europe and EMEA.”

- Relationships: Document joins, lineage, and dependencies between datasets. Example: customer_feedback.customer_id joins to customers.customer_id.

- Synonyms and aliases: Capture user-facing language directly on metadata elements. Example: “Revenue” → net_revenue; “Customer happiness” → csat_score.
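If this metadata is kept as structured records rather than free text, gaps become easy to audit. The sketch below assumes a hypothetical ColumnMetadata record (not any specific catalog’s API) and flags columns that still lack a description or user-facing synonyms. The orders table holding net_revenue is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ColumnMetadata:
    """Hypothetical curated metadata for one column."""
    table: str
    name: str
    description: str = ""
    synonyms: list[str] = field(default_factory=list)                  # user-facing language
    value_groups: dict[str, list[str]] = field(default_factory=dict)   # group -> raw values
    joins_to: Optional[str] = None                                     # "table.column"


CATALOG = [
    ColumnMetadata("customer_feedback", "csat_score",
                   "Average satisfaction rating from post-support survey, 1–5 scale.",
                   synonyms=["customer happiness"]),
    ColumnMetadata("orders", "net_revenue",  # table name is an assumption
                   "Revenue minus refunds and discounts, in USD.",
                   synonyms=["revenue"]),
    ColumnMetadata("customers", "region",
                   "Geographical grouping; 'EU' may include Western Europe and EMEA.",
                   value_groups={"EU": ["Western Europe", "EMEA"]}),
    ColumnMetadata("customer_feedback", "customer_id",
                   joins_to="customers.customer_id"),
]


def resolve(term: str, catalog: list[ColumnMetadata]) -> Optional[str]:
    """Map a user-facing term to table.column via column names and synonyms."""
    t = term.lower()
    for col in catalog:
        if t == col.name or t in (s.lower() for s in col.synonyms):
            return f"{col.table}.{col.name}"
    return None


def audit(catalog: list[ColumnMetadata]) -> list[tuple[str, str]]:
    """List curation gaps: columns without a description or without synonyms."""
    gaps = []
    for col in catalog:
        key = f"{col.table}.{col.name}"
        if not col.description:
            gaps.append((key, "missing description"))
        if not col.synonyms:
            gaps.append((key, "no synonyms"))
    return gaps


print(resolve("Customer happiness", CATALOG))  # customer_feedback.csat_score
print(audit(CATALOG))
```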

Step 2. Build a Domain-Specific Context Layer

Once metadata is embedded, organize it into a domain context layer: the AI Analyst’s structured mental model of the domain.

For example, for a Customer Success Analyst, you might define:

- Datasets: tickets, customer_feedback, accounts.

- Metrics: csat_score, resolution_time, renewal_rate.

- Dimensions: region, customer_segment, support_channel.

- Relationships: tickets → accounts, feedback → customer_id.

This isn’t prompt configuration — it’s a semantic map that guides how the AI Analyst retrieves context and reasons about questions. When a user asks “What was our customer satisfaction in EMEA last quarter?”, the model doesn’t guess — it pulls meaning directly from this structured layer.
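A domain context layer can be as simple as a typed structure that scopes retrieval. In this sketch, the DomainContext class and its relevant_to method are hypothetical, and matching is plain substring search over column names and user-facing terms; a production system would use richer semantic matching.

```python
from dataclasses import dataclass, field


@dataclass
class DomainContext:
    """Hypothetical domain context layer: the semantic map for one domain."""
    name: str
    datasets: list[str]
    metrics: dict[str, list[str]]       # metric column -> user-facing terms
    dimensions: dict[str, list[str]]    # dimension column -> user-facing terms or known values
    relationships: list[tuple[str, str]] = field(default_factory=list)

    def relevant_to(self, question: str) -> dict[str, list[str]]:
        """Return only the metrics and dimensions the question refers to."""
        q = question.lower()

        def hits(entries: dict[str, list[str]]) -> list[str]:
            # Crude substring matching on the column name (underscores as spaces) and its terms.
            return [col for col, terms in entries.items()
                    if col.replace("_", " ") in q or any(t in q for t in terms)]

        return {"metrics": hits(self.metrics), "dimensions": hits(self.dimensions)}


customer_success = DomainContext(
    name="Customer Success",
    datasets=["tickets", "customer_feedback", "accounts"],
    metrics={"csat_score": ["customer satisfaction", "csat"],
             "resolution_time": ["time to resolve"],
             "renewal_rate": ["renewals"]},
    dimensions={"region": ["emea", "europe", "apac"],
                "customer_segment": ["enterprise", "smb"],
                "support_channel": ["chat", "email", "phone"]},
    relationships=[("tickets", "accounts"), ("feedback", "customer_id")],
)

print(customer_success.relevant_to("What was our customer satisfaction in EMEA last quarter?"))
```

Only csat_score and region come back, so the model sees a small, relevant slice of the domain rather than every definition at once.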

Step 3. Create a Domain Test Set

Before deploying, you need to know how accurately your metadata supports the domain.

Instead of testing prompts, test context retrieval accuracy using a curated list of real-world business questions mapped to expected logic or results.

Run the system against this test set and measure:

- Mapping accuracy: Did it select the right fields and relationships?

- Coverage: How many types of questions can it understand with current metadata?

- Confidence: Can it identify uncertainty or missing context?

Failures at this stage indicate metadata gaps, not model issues. Fixing them means enriching context — not rewriting prompts.
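A test-set run can be scored in a few lines. The sketch below is one possible reading of the three measures, and the definitions are assumptions: mapping accuracy is the share of questions resolved to exactly the expected columns, coverage is the share the system attempted, and confidence is approximated by how often it reported missing context instead of guessing. The keyword resolver is a toy stand-in for the real system.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class EvalCase:
    question: str
    expected_columns: frozenset  # fields a correct mapping must use


Resolver = Callable[[str], Optional[frozenset]]


def evaluate(test_set: list[EvalCase], resolver: Resolver) -> dict[str, float]:
    correct = answered = flagged = 0
    for case in test_set:
        result = resolver(case.question)
        if result is None:          # the system reported missing context instead of guessing
            flagged += 1
            continue
        answered += 1
        if result == case.expected_columns:
            correct += 1
    n = len(test_set)
    return {"mapping_accuracy": correct / n,
            "coverage": answered / n,
            "flagged_missing_context": flagged / n}


# Toy resolver standing in for the AI Analyst: keyword -> column.
TOY_SYNONYMS = {"satisfaction": "csat_score", "region": "region", "renewal": "renewal_rate"}


def toy_resolver(question: str) -> Optional[frozenset]:
    cols = frozenset(col for word, col in TOY_SYNONYMS.items() if word in question.lower())
    return cols or None


TEST_SET = [
    EvalCase("What was customer satisfaction by region?", frozenset({"csat_score", "region"})),
    EvalCase("What is our renewal rate?", frozenset({"renewal_rate"})),
    EvalCase("How long do tickets take to resolve?", frozenset({"resolution_time"})),
    EvalCase("Show satisfaction for enterprise customers",
             frozenset({"csat_score", "customer_segment"})),
]

print(evaluate(TEST_SET, toy_resolver))
```

Both failures here are metadata gaps: nothing maps “resolve” to resolution_time, and nothing maps “enterprise” to customer_segment. Adding those terms to the context fixes them without touching the resolver.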

Step 4. Iterate on Metadata, Not Prompts

When an answer is wrong, the issue almost always lies in context, not model reasoning. Treat each failure as a metadata refinement opportunity.

A consistent improvement loop looks like this:

- Find what failed — a missing dimension, unclear definition, or mismatched filter.

- Identify why — missing description, synonym conflict, or ambiguous relationship.

- Fix it at the source — update column descriptions, relationships, or valid value sets.

- Re-test — rerun the evaluation set to confirm accuracy improves.

This structured iteration keeps the focus on systematic context improvement, not ad hoc LLM tuning. Over time, you develop a durable semantic layer that can evolve independently of any model version.

Step 5. Define a Threshold, Then Deploy and Observe

Once your domain AI Analyst consistently achieves around 80% accuracy and 70% consistency, it’s ready for controlled deployment.
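The article doesn’t specify how accuracy and consistency are computed. One plausible reading, and an assumption here, is that each test question is run several times: accuracy is the share of all runs that match the expected answer, and consistency is the share of questions whose runs all agree. A minimal deployment gate under those assumptions:

```python
def accuracy(runs: dict[str, list[str]], expected: dict[str, str]) -> float:
    """Share of all runs, across all questions, whose answer matches the expected one."""
    total = sum(len(answers) for answers in runs.values())
    hits = sum(answer == expected[q] for q, answers in runs.items() for answer in answers)
    return hits / total


def consistency(runs: dict[str, list[str]]) -> float:
    """Share of questions whose repeated runs all returned the same answer."""
    return sum(len(set(answers)) == 1 for answers in runs.values()) / len(runs)


def ready_to_deploy(runs: dict[str, list[str]], expected: dict[str, str],
                    min_accuracy: float = 0.80, min_consistency: float = 0.70):
    acc, cons = accuracy(runs, expected), consistency(runs)
    return (acc >= min_accuracy and cons >= min_consistency), acc, cons


# Toy results: each question asked three times.
expected = {"q1": "$4.4M", "q2": "EMEA", "q3": "12%"}
runs = {
    "q1": ["$4.4M", "$4.4M", "$4.4M"],
    "q2": ["EMEA", "EMEA", "APAC"],
    "q3": ["12%", "12%", "12%"],
}
ok, acc, cons = ready_to_deploy(runs, expected)
print(ok, round(acc, 2), round(cons, 2))
```

In this toy example accuracy clears 80%, but one question flips between answers, so consistency is about 67% and the gate stays closed.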

Deploy to a small group of domain users first, and observe:

- Which questions are asked most often

- Which queries fail and why

- How users phrase questions

This observation isn’t about prompt behavior; it’s about context behavior — how well the system retrieves, interprets, and applies metadata under real usage.

Step 6. Measure Human and AI Collaboration

Accuracy isn’t purely a technical metric; it’s the result of collaboration between humans and AI. Each interaction teaches the system something new about how users think.

That context must be recorded, consolidated as metadata, and governed for updates and consistency. Over time, this feedback flow becomes a governed context loop, ensuring the AI Analyst keeps learning without drifting.

Step 7. Scale Across Domains

Once the first domain analyst is stable, replicate the process. Each domain should have its own specialized AI Analyst built with its domain-specific metadata and test set. Eventually, an AI coordinator can connect the dots across domains, much like how human analysts work cross-functionally under shared governance.

Context Engineering for AI Analysts and What It Means for Data Teams

As we’ve established, every AI system that talks to data lives or dies by one thing: context. Context connects language to logic. So when an AI Analyst translates a natural-language question into an insight, its accuracy depends less on the size of the model than on how well it understands the meaning behind your data.

Context is how an AI system knows that “performance” means revenue growth, that “churn” means inactive customers, and that these definitions may differ between Finance and Marketing. Without it, even the smartest model is bound to make mistakes. The mandate for data teams, then, is to engineer the context these systems rely on. Here’s how:

1. Unpacking Context

Every AI Analyst operates with two worlds of knowledge: the one it already knows, and the one it learns from you.

The former is the base layer for comprehension, known as model (or world) context. This is the general understanding the model brings: what “revenue,” “growth,” or “forecast” mean in common language and across industries. It enables the AI Analyst to parse questions, understand intent, and reason logically.

But the model’s world context is generic by design — it knows what “revenue” means, but not what your organization means by it. That specialization comes from organizational context, which translates your data definitions, goals, and semantics into machine understanding.

Model Context × Organizational Context = Real Understanding

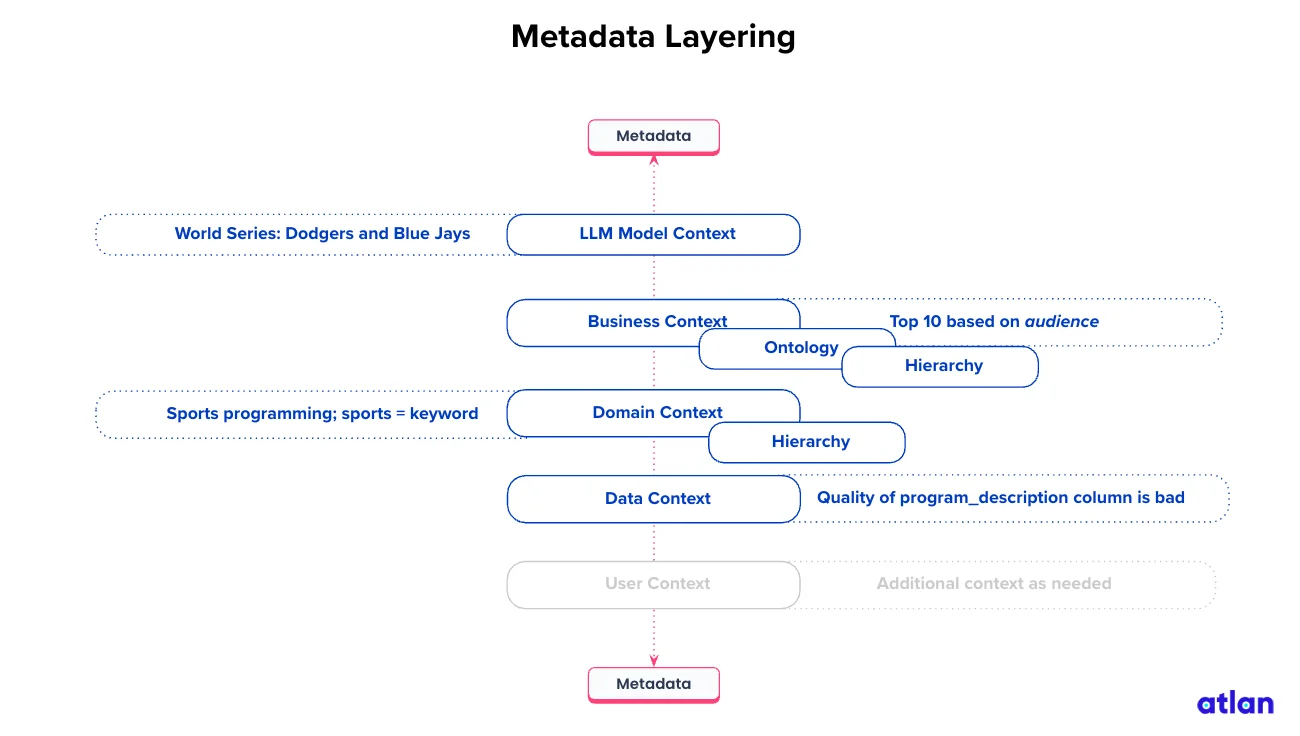

2. The Layers of Context

Within any organization, layers of context exist that mirror how humans think about data. Together, they create the semantic scaffold that enables the AI Analyst to reason accurately: not by guessing, but by grounding its answers in how the organization defines meaning.

The first layer – the model context – gives the system language and logic. The next four localize that knowledge to your business reality.

| Layer | What It Captures | Example |
|---|---|---|
| 1. Model (World) Context | Pre-trained knowledge of general language and concepts. | Knows that “revenue” means money earned; “growth” means increase over time. |
| 2. User Context | Who’s asking, their intent, and their goal. | Marketing asks about “performance,” meaning engagement. |
| 3. Business Context | Organizational goals, KPIs, and semantic relationships. | “Performance = revenue growth over last quarter.” |
| 4. Domain Context | Functional or departmental nuances and definitions. | “Revenue in Finance = net of refunds.” |
| 5. Data Context | Actual data structures: tables, columns, joins, filters. | |

The Layers of Context. Source: Atlan.
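One way to picture these layers is as a lookup chain in which the most specific layer that defines a term wins. The sketch uses Python’s collections.ChainMap, which searches its mappings in order; the precedence (user, then domain, then business, then model context) and the per-department keys are assumptions for illustration. Data context is left out because it maps meanings to tables and columns rather than defining terms.

```python
from collections import ChainMap

# Toy definitions taken from the table above; keying layers by department is an assumption.
MODEL_CONTEXT = {"revenue": "money earned", "growth": "increase over time"}
BUSINESS_CONTEXT = {"performance": "revenue growth over last quarter"}
DOMAIN_CONTEXT = {"finance": {"revenue": "revenue net of refunds"}}
USER_CONTEXT = {"marketing": {"performance": "engagement"}}


def context_for(department: str) -> ChainMap:
    """Layered lookup: the first mapping that defines a term wins."""
    return ChainMap(
        USER_CONTEXT.get(department, {}),    # who is asking
        DOMAIN_CONTEXT.get(department, {}),  # departmental definitions
        BUSINESS_CONTEXT,                    # organization-wide KPIs
        MODEL_CONTEXT,                       # generic world knowledge
    )


print(context_for("marketing")["performance"])  # engagement
print(context_for("finance")["performance"])    # revenue growth over last quarter
print(context_for("finance")["revenue"])        # revenue net of refunds
```

Lookups never modify the underlying layers, so a marketing-specific meaning of “performance” leaves the organization-wide definition intact for everyone else.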

3. The Context Supply Chain

Context behaves like a supply chain, ensuring that understanding flows cleanly from human definitions to AI reasoning. Just as data pipelines ensure data quality, a context supply chain ensures semantic quality: consistency and correctness of meaning across systems.

The context supply chain process unfolds as a living loop, not a one-time setup:

- Bootstrap — Connect your existing metadata: glossaries, lineage, verified SQLs, and semantic models. This forms the base layer of the AI Analyst’s organizational understanding.

- Test for Readiness — Before deploying the AI Analyst, evaluate whether it can accurately answer key business questions. Run context evaluation sets — benchmark prompts representing real-world queries — to measure precision, reasoning quality, and coverage. Only when these tests meet an agreed accuracy threshold should the system move into production.

- Continuously Deploy and Observe — Once live, observation is ongoing. Monitor how the AI Analyst applies context — where it performs well, where it misinterprets intent, or where definitions are missing. This creates telemetry for understanding, a real-time signal of how healthy your semantic layer is.

- Enrich — Use insights from observation to refine definitions, relationships, and metric logic. Every correction and clarification becomes part of a structured context update.

- Validate and Scale — Re-test after enrichment, confirm improvements, and propagate verified context across domains and teams. Over time, this cycle turns context management into a self-improving semantic system.

4. Context as a Continuous Habit

Like data, context decays over time: definitions evolve, new metrics emerge, and teams reinterpret goals. That’s why context engineering must be continuous.

It thrives on a human–AI feedback loop:

- Analysts validate whether the AI used the right definitions.

- Stewards update and version metadata to maintain alignment.

- The AI Analyst learns from these refinements, improving retrieval and reasoning accuracy.

Every question, correction, or clarification feeds this loop — not as model retraining, but as context enrichment. That’s how organizational knowledge becomes a living, evolving system that keeps up with the business.
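This loop implies that definitions are versioned and that changes pass through a steward before the AI Analyst sees them. A minimal sketch of that workflow, assuming a hypothetical in-memory ContextStore (not any particular tool’s API), might look like this:

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class DefinitionVersion:
    text: str
    proposed_by: str
    approved_by: str


@dataclass
class ContextStore:
    """Hypothetical versioned store: analysts propose, stewards approve,
    and the AI Analyst only ever reads the latest approved version."""
    history: dict[str, list[DefinitionVersion]] = field(default_factory=dict)
    pending: dict[str, tuple[str, str]] = field(default_factory=dict)  # term -> (text, author)

    def propose(self, term: str, text: str, author: str) -> None:
        self.pending[term] = (text, author)

    def approve(self, term: str, steward: str) -> int:
        text, author = self.pending.pop(term)
        self.history.setdefault(term, []).append(DefinitionVersion(text, author, steward))
        return len(self.history[term])  # new version number

    def current(self, term: str) -> Optional[str]:
        versions = self.history.get(term)
        return versions[-1].text if versions else None


store = ContextStore()
store.propose("churn", "Inactive customers", author="analyst_a")
print(store.current("churn"))                       # None: not yet approved
print(store.approve("churn", steward="steward_b"))  # 1
store.propose("churn", "Customers who did not renew at contract end", author="analyst_c")
print(store.approve("churn", steward="steward_b"))  # 2
print(store.current("churn"))
```

Older versions are kept rather than overwritten, which leaves an audit trail of how each definition evolved and who approved it.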

5. The New Operating Model for Data Teams

Context engineering introduces a new way of working. Instead of focusing solely on producing data assets, data teams begin to engineer shared understanding. This becomes the semantic infrastructure that AI systems depend on to reason accurately.

In this new model:

- Context is co-created by humans and AI.

- It is tested and validated before being trusted.

- It is monitored and refined through continuous observation.

- It is scaled via metadata automation and governance.

By cultivating this habit, data teams evolve from data producers to context engineers — custodians of meaning who ensure that AI systems understand the organization as deeply as its people do.

Context is the intelligence layer that makes AI Analysts accurate and trustworthy.

It lives at the intersection of the model’s world knowledge and the organization’s own definitions. Engineering it — through layered metadata, testing, and continuous feedback — is how you ensure that AI systems don’t just answer questions but understand them.

Context Engineering in Practice

To understand how context engineering works in the real world, imagine an executive asks: “How has customer satisfaction trended in Europe for our enterprise customers this year?”

It may seem like a simple question, something any data analyst could handle. But for an AI Analyst, it’s a multi-layered reasoning problem. It needs to translate natural language into precise data logic, align vague business terms with metrics, and ensure it’s using the right dimensions, filters, and relationships. Here’s how that process unfolds:

1. Dissecting the Question

Every natural-language query can be broken down into its core analytical components: measures, dimensions, and filters.

| Component | Extracted Element | Role |
|---|---|---|
| Measure | Customer Satisfaction | A business concept that must be translated to a measurable column or calculation. |
| Dimension | Region, Customer Type | The entities used for grouping and filtering results. |
| Filter | Europe, Enterprise Customers, This Year | Constraints applied to the corresponding dimensions. |
| Time Dimension | Year | Temporal grouping or range derived from the date column. |

This breakdown is the first step in translating human language into structured reasoning.
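The dissection step can be sketched as sorting recognized phrases into a structured plan. The lexicon below is hypothetical and hand-written; a real AI Analyst would derive these mappings from metadata and a language model rather than from exact phrase matches.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical lexicon: phrase -> (component, dimension it applies to, normalized value)
LEXICON: dict[str, tuple[str, Optional[str], str]] = {
    "customer satisfaction": ("measure", None, "Customer Satisfaction"),
    "europe": ("filter", "Region", "Europe"),
    "enterprise customers": ("filter", "Customer Type", "Enterprise"),
    "this year": ("time", "Year", "current year"),
}


@dataclass
class QueryPlan:
    measures: list[str] = field(default_factory=list)
    dimensions: list[str] = field(default_factory=list)
    filters: dict[str, str] = field(default_factory=dict)
    time: dict[str, str] = field(default_factory=dict)


def dissect(question: str) -> QueryPlan:
    """Sort recognized phrases into measures, dimensions, filters, and time."""
    q = question.lower()
    plan = QueryPlan()
    for phrase, (component, dimension, value) in LEXICON.items():
        if phrase not in q:
            continue
        if component == "measure":
            plan.measures.append(value)
        elif component == "filter":
            plan.dimensions.append(dimension)   # a filter implies its dimension
            plan.filters[dimension] = value
        elif component == "time":
            plan.time[dimension] = value
    return plan


plan = dissect("How has customer satisfaction trended in Europe for our enterprise customers this year?")
print(plan)
```

Note that “trended” is not in the lexicon, so the plan says nothing about time grain; that is exactly the kind of gap a domain test set should surface.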

2. Translating Business Terms into Data Reality

“Customer Satisfaction” isn’t a column name, and depending on the organization, it could mean different things:

- Average NPS score (nps_score)

- Percentage of satisfied customers (satisfaction_rate)

- CSAT score from survey data (csat_score)

For the AI Analyst to select the right one, context must do the heavy lifting — pulling from business glossaries, column descriptions, and metadata relationships.

| User Term | Resolved Meaning | Data Mapping | Context Dependency |
|---|---|---|---|
| Customer satisfaction | Customer feedback survey rating (CSAT) | csat_score | Glossary entry + column description: “Average satisfaction rating from post-support surveys.” |
| Europe | Region filter | region | Sample value match + synonym metadata |
| Enterprise customers | Segment filter | customer_segment | Business context linking customer tiers |
| This year | Time filter | order_date | Derived from date dimension logic |

Here, metadata richness is the difference between success and failure. Without a description or glossary mapping, “customer satisfaction” could have pointed to the wrong column — for example, customer_health_score, which might measure retention, not satisfaction.

3. Mapping Semantic Reasoning

Context engineering ensures that user intent connects to the correct data through multi-layered reasoning:

- Semantic Similarity – Matches user terms against column names, glossary synonyms, and descriptions. Example: “Customer satisfaction” semantically aligns with csat_score because of matching terms in description embeddings.

- Data Matching – Uses sample values to resolve filters and ensure validity. Example: “Europe” may map to region='EU' in the data, confirmed by sample values or synonym mapping.

- Relationship Validation – Ensures measures and filters originate from connected tables. Example: customer_feedback joined with customer_master via customer_id.

- Aggregation & Temporal Logic – Applies correct calculation rules and time boundaries. Example: AVG(csat_score) filtered for order_date within the current year.
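Semantic similarity is usually computed with embeddings. To keep the sketch self-contained, it substitutes a much cruder technique, token-overlap (Jaccard) similarity, scored against each column’s name, description, and synonyms. All column metadata here is hypothetical, including the customer_health_score description.

```python
import re

STOPWORDS = {"a", "an", "and", "for", "from", "in", "of", "the"}


def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens; underscores and punctuation act as separators."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_columns(term: str, columns: dict[str, tuple[str, list[str]]]) -> list[tuple[str, float]]:
    """Score each column by its best match across name, description, and synonyms."""
    t = tokens(term)
    scores = {
        name: max(jaccard(t, tokens(text)) for text in [name, description, *synonyms])
        for name, (description, synonyms) in columns.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


COLUMNS = {
    "csat_score": ("Average satisfaction rating from post-support surveys.",
                   ["customer satisfaction", "csat"]),
    "customer_health_score": ("Composite score predicting customer retention.", []),
    "nps_score": ("Net promoter score from relationship surveys.", ["nps"]),
}

print(rank_columns("customer satisfaction", COLUMNS)[0])      # ('csat_score', 1.0)

# Without the glossary synonym, the thin description loses to a column-name match.
no_synonyms = {name: (desc, []) for name, (desc, _) in COLUMNS.items()}
print(rank_columns("customer satisfaction", no_synonyms)[0])  # ('customer_health_score', 0.25)
```

With the synonym, csat_score scores 1.0. Strip the synonyms and its description overlaps only on “satisfaction” (1/7), so customer_health_score wins on the shared word “customer” (1/4): the wrong-column failure described earlier. Embeddings capture meaning far better than word overlap, but they still depend on the descriptions and synonyms they are given.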

The final output goes beyond just a query — it’s a validated reasoning path from language to logic:

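```sql
-- Average CSAT for European enterprise customers, year to date
SELECT
    region,
    AVG(csat_score) AS customer_satisfaction
FROM customer_feedback
JOIN customer_master USING (customer_id)                -- documented relationship
WHERE region IN ('EU', 'Europe', 'EMEA')                -- "Europe" resolved via sample values and synonyms
  AND customer_segment = 'Enterprise'                   -- "enterprise customers"
  AND order_date BETWEEN '2025-01-01' AND '2025-10-26'  -- "this year" as an explicit date range
GROUP BY region;
```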
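To show the last hop from resolved components to an executable query, the sketch below renders a hypothetical ResolvedQuery plan as parameterized SQL and runs it against an in-memory SQLite database. The table contents are toy data invented for the example. Identifiers are assumed to come from curated metadata, while values are bound as parameters. The sketch also adds an ORDER BY, which the query above omits, so output order is deterministic.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class ResolvedQuery:
    """Hypothetical output of the mapping steps: every term already resolved to data."""
    measure: str                      # SQL aggregate expression
    alias: str
    group_by: list[str]
    fact_table: str
    join_table: str
    join_key: str
    filters: dict[str, list[str]]     # column -> accepted values
    date_column: str
    date_range: tuple[str, str]


def build_sql(q: ResolvedQuery) -> tuple[str, list[str]]:
    """Render the plan as SQL; identifiers come from metadata, values are bound as parameters."""
    where, params = [], []
    for column, values in q.filters.items():
        where.append(f"{column} IN ({', '.join('?' for _ in values)})")
        params.extend(values)
    where.append(f"{q.date_column} BETWEEN ? AND ?")
    params.extend(q.date_range)
    cols = ", ".join(q.group_by)
    sql = (f"SELECT {cols}, {q.measure} AS {q.alias} "
           f"FROM {q.fact_table} JOIN {q.join_table} USING ({q.join_key}) "
           f"WHERE {' AND '.join(where)} GROUP BY {cols} ORDER BY {cols}")
    return sql, params


plan = ResolvedQuery(
    measure="AVG(csat_score)", alias="customer_satisfaction", group_by=["region"],
    fact_table="customer_feedback", join_table="customer_master", join_key="customer_id",
    filters={"region": ["EU", "Europe", "EMEA"], "customer_segment": ["Enterprise"]},
    date_column="order_date", date_range=("2025-01-01", "2025-10-26"),
)

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer_master (customer_id INTEGER, region TEXT, customer_segment TEXT);
    CREATE TABLE customer_feedback (customer_id INTEGER, csat_score REAL, order_date TEXT);
    INSERT INTO customer_master VALUES (1, 'EU', 'Enterprise'), (2, 'EU', 'SMB'),
                                       (3, 'US', 'Enterprise'), (4, 'EMEA', 'Enterprise');
    INSERT INTO customer_feedback VALUES (1, 4.0, '2025-03-01'), (1, 5.0, '2025-06-01'),
                                         (2, 2.0, '2025-03-01'), (3, 1.0, '2025-03-01'),
                                         (4, 3.0, '2025-02-01'), (1, 1.0, '2024-12-31');
""")
sql, params = build_sql(plan)
rows = conn.execute(sql, params).fetchall()
print(rows)  # [('EMEA', 3.0), ('EU', 4.5)]
```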

4. Incorporating Metadata

Because of the context it provides, metadata (especially column descriptions) is the connective tissue of context engineering.

For example, the description of csat_score (“Average satisfaction rating from post-support surveys”) provides the semantic anchor that lets the AI Analyst interpret “customer satisfaction” correctly. Without it, the AI has to guess — and guessing in data is just another form of inaccuracy.

5. Avoiding Mistakes

There are two key things not to do when implementing context engineering:

Don’t Dump Context into Prompts

A common anti-pattern is to embed all metadata — glossaries, SQL definitions, lineage — directly into LLM instructions. This feels easy at first, but it breaks down fast as complexity grows, causing:

- Context rot – Outdated or conflicting definitions.

- Ambiguity collisions – Multiple synonyms for the same term (“EU” vs. “Europe” vs. “EMEA”).

- Debugging black boxes – No way to isolate why a query failed or which definition was used.

Instead, context must live as structured, queryable metadata, not static prompt text. The AI Analyst should retrieve the right context dynamically — pulling relevant definitions for each user query, instead of memorizing everything.

Don’t Overfit to Vocabulary

It’s tempting to make the AI Analyst rely on exact name matches. But real data is messy — region might be stored as sales_geo, geo_area, or even territory_code. That’s why semantic similarity and data validation must coexist: language understanding for breadth, and data verification for depth.
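Data verification can be sketched as checking candidate columns against profiled sample values. The candidate column names come from the paragraph above; the sample values and value synonyms are hypothetical, and in practice the candidates would come from a semantic-similarity step rather than a fixed list.

```python
# Hypothetical sample values profiled from each candidate column.
SAMPLE_VALUES = {
    "sales_geo": {"EU", "NA", "APAC"},
    "geo_area": {"Northern", "Southern"},
    "territory_code": {"T-101", "T-204"},
}

# Hypothetical synonym metadata for filter values.
VALUE_SYNONYMS = {"europe": {"EU", "EMEA", "Europe"}}


def verify_filter(user_value: str, candidates: list[str]) -> dict[str, list[str]]:
    """Keep only candidate columns whose observed values contain the user's value or a synonym."""
    accepted = VALUE_SYNONYMS.get(user_value.lower(), set()) | {user_value}
    return {col: sorted(SAMPLE_VALUES[col] & accepted)
            for col in candidates if SAMPLE_VALUES[col] & accepted}


print(verify_filter("Europe", ["sales_geo", "geo_area", "territory_code"]))  # {'sales_geo': ['EU']}
```

Only sales_geo actually contains a value that means “Europe,” so it is the column worth filtering on, even though its name shares no word with “region.”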

Ultimately, the goal of context engineering is to ensure that the right column in the right table, with the right relationship, answers the right question.

That means building rich semantic metadata (names, synonyms, descriptions, relationships), continuously testing how well the system maps intent to structure, and treating every query not as an answer, but as a context test case that strengthens the next one.

This is what context engineering looks like in practice:

- Dissect the question into its measures, dimensions, and filters.

- Use metadata and semantic matching to translate business language into data logic.

- Validate relationships and aggregations through structured lineage.

- Continuously enrich and version the context layer — not the prompt.

In the end, an AI Analyst is only as intelligent as the context that surrounds it. And that context isn’t written in prompts — it’s engineered.

When the Model Must Change

Context engineering operates on an important assumption: that the right data already exists, and it’s just a matter of discovering and mapping it correctly. But in practice, that’s not always true.

Sometimes, the reason an AI Analyst struggles to answer a question isn’t missing metadata — it’s a missing data construct. This is where data modeling and context engineering intersect.

If the AI Analyst repeatedly fails to find or infer a concept, it may be a signal that the underlying data model needs to evolve.

When Context Isn’t Enough

Context solves semantic problems: discovering the right column, resolving synonyms, disambiguating meaning. But some problems are structural; they can’t be patched through metadata alone.

A few examples:

- Missing dimension or category: In the entertainment domain, users ask: “Show engagement by program category.” If “category” doesn’t exist as a stored field, no amount of metadata enrichment can create it. The fix isn’t a dynamic query hack — it’s updating the model to preprocess and persist the category.

- Derived or implicit attributes: If “customer lifecycle stage” is repeatedly inferred from events or timestamps, it should become a modeled field (customer_stage) rather than a runtime computation.

- Fragmented relationships: When the same join logic appears in multiple metadata definitions (“link tickets to accounts”), it’s a sign that the relationship belongs in the physical model, not just in metadata mapping.

- Performance and reliability issues: If the AI Analyst relies on complex or expensive derived queries to answer basic questions, it’s better to model and materialize those entities explicitly.

The Principle: Persist What Is Repeatedly Inferred

A simple rule of thumb is that if the AI Analyst needs to infer the same relationship or derive the same field more than once, it’s a data modeling problem, not a context problem. This means:

- Don’t treat metadata as a catch-all for missing structure.

- Don’t overload column descriptions with runtime logic.

- Let metadata describe the data — not create it.

Instead, collaborate with data engineering teams to push those recurring derivations into the model itself. This strengthens the foundation and simplifies future context mapping.
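The persist-what-is-repeatedly-inferred rule lends itself to a simple check over the derivations the AI Analyst performs at runtime. The log format below is hypothetical: each generated query records the fields or joins it had to derive on the fly, and anything derived at least twice is flagged as a modeling candidate.

```python
from collections import Counter

# Hypothetical log of runtime derivations made while answering questions.
QUERY_LOG = [
    {"question": "Churn by lifecycle stage", "derived": ["customer_stage"]},
    {"question": "Renewals by lifecycle stage", "derived": ["customer_stage"]},
    {"question": "Open tickets per account", "derived": ["tickets JOIN accounts"]},
    {"question": "Escalated tickets for enterprise accounts", "derived": ["tickets JOIN accounts"]},
    {"question": "Average resolution time", "derived": ["resolution_time_hours"]},
]


def modeling_candidates(log: list[dict], threshold: int = 2) -> list[str]:
    """Derivations seen at least `threshold` times belong in the data model, not in metadata."""
    counts = Counter(d for entry in log for d in entry["derived"])
    return sorted(d for d, n in counts.items() if n >= threshold)


print(modeling_candidates(QUERY_LOG))  # ['customer_stage', 'tickets JOIN accounts']
```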

You can use this simple heuristic to decide whether a fix belongs in metadata or the data model:

| Symptom | Likely Fix | Why |
|---|---|---|
| Incorrect column mapping or ambiguity | Metadata change | Context misunderstanding; fixable via synonyms or descriptions. |
| Field consistently missing across queries | Data model change | Structural absence; needs to be added or derived upstream. |
| Multiple metadata entries inferring same logic | Data model change | Indicates repeated runtime derivation. |
| AI Analyst struggles with aggregation logic | Metadata change | Likely definition ambiguity or unclear metric rule. |
| Repeated runtime filtering or joins | Data model change | Relationship should be formalized in schema. |

Evolving the data model is part of making context sustainable. When teams try to solve every problem through metadata alone, they eventually create context sprawl — brittle logic, duplicated definitions, and inconsistent meaning.

By distinguishing between semantic gaps (fixes in metadata) and structural gaps (fixes in the data model), you build a cleaner, more maintainable foundation on which the AI Analyst can reason.

In the long run, metadata and modeling are not competing layers — they are co-dependent systems:

- The data model captures structure.

- The metadata layer captures meaning.

- The AI Analyst connects both to human intent.

Together, modeling and metadata create the conditions for context to thrive — and for AI Analysts to evolve from clever translators into truly grounded experts.

Taking AI Analysts From Vision to Reality

If context is a shared habit, it’s time we start treating it as such. That means getting our hands dirty, understanding the blockers of AI Analysts in production, and finding strategies for productizing AI in a way that lives up to the hype.

It means experiencing the real-world complexities of data work in practice, not in theory, and working alongside data teams to understand their challenges and find solutions that actually work.

If most AI Analysts are failing in production, we need to take another look at the organizational context we’ve given them and ask honestly whether it’s enough. Because if AI Analysts are really going to become valuable, functional parts of the enterprise, getting context right is non-negotiable.

In this new paradigm, the most valuable skill isn’t writing SQL or tuning models — it’s engineering context. And that requires a human touch. The AI Analyst can reason, but only within the boundaries of the context it’s given. Humans, acting as context engineers, define those boundaries and improve them over time.

Together, they form a continuous feedback loop of understanding: AI accelerates reasoning; humans refine meaning. And with that, the promise of AI can finally become reality.
