The Context Store for AI: Metadata Lakehouse

Last Updated on: October 31st, 2025 | 6 min read

Over the last decade, enterprises modernized their data architectures, from warehouses to lakehouses, unlocking analytics at unprecedented scale. Yet one critical layer has lagged behind: metadata.

Legacy metadata systems were built for documentation, not context. They were closed, rigid, and slow, never designed for the scale, speed, and openness that AI now demands. In today’s world, where every model and agent depends on trustworthy context, this legacy foundation simply can’t keep up.

Why legacy metadata architectures don’t work for AI

AI has changed what we need from metadata. It’s no longer static; it’s real-time and in motion, constantly generated, enriched, and consumed by both humans and AI systems. But traditional metadata platforms struggle with this shift.

- Closed and locked in. Proprietary metadata stores can’t interoperate across tools or clouds, limiting visibility and flexibility.

- Poor performance at scale. Metadata workloads are growing rapidly as real-time AI apps and agents start consuming metadata, and legacy platforms were not built for real-time, high-performance, scalable workloads.

- Rigid and one-way. AI doesn’t just read metadata; it generates it. Models, agents, and users all feed new context back into the graph, a two-way flow that legacy architectures were never built for.

- Limited trust in metadata. AI’s reliability depends on trust in the underlying context. But older systems lack time travel, auditability, and versioning of metadata, making it impossible to verify “what changed, when, and why.”
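To make the “what changed, when, and why” requirement concrete, here is a minimal sketch of an append-only metadata log that supports an audit trail and time-travel reads. It is an illustration only: the `MetadataChange` and `MetadataLog` names, the fields, and the sample values are all hypothetical and do not describe how Atlan or any legacy system stores metadata.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class MetadataChange:
    """One immutable entry in a hypothetical append-only metadata log."""
    asset: str
    field: str
    value: str
    changed_by: str       # who made the change
    reason: str           # why it was made
    changed_at: datetime  # when it was made


class MetadataLog:
    """Append-only log: nothing is overwritten, so history stays auditable."""

    def __init__(self) -> None:
        self._entries: list[MetadataChange] = []

    def record(self, change: MetadataChange) -> None:
        self._entries.append(change)

    def history(self, asset: str, field: str) -> list[MetadataChange]:
        """Every change to one field, oldest first (the audit trail)."""
        matches = [e for e in self._entries
                   if e.asset == asset and e.field == field]
        return sorted(matches, key=lambda e: e.changed_at)

    def as_of(self, asset: str, field: str, when: datetime) -> Optional[str]:
        """Time travel: the value that was current at `when`, or None."""
        past = [e for e in self.history(asset, field) if e.changed_at <= when]
        return past[-1].value if past else None


log = MetadataLog()
log.record(MetadataChange(
    "sales.orders", "description", "Raw orders",
    "alice", "initial load",
    datetime(2025, 1, 1, tzinfo=timezone.utc)))
log.record(MetadataChange(
    "sales.orders", "description", "Orders, deduplicated daily",
    "agent-7", "enriched by an AI agent",
    datetime(2025, 3, 1, tzinfo=timezone.utc)))

# Read the description as it stood on 1 February 2025.
feb_value = log.as_of("sales.orders", "description",
                      datetime(2025, 2, 1, tzinfo=timezone.utc))
print(feb_value)
```

Because entries are only ever appended, the same log answers both the audit question (`history`) and the point-in-time question (`as_of`).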

To support the next generation of AI, metadata needs a new architecture, one that’s open, scalable, and designed for intelligence in motion.

Reimagining metadata infrastructure for the AI era

Atlan recognized this shift years ago. The company reimagined how metadata should be stored, shared, and activated in an AI-driven world, resulting in the Metadata Lakehouse.

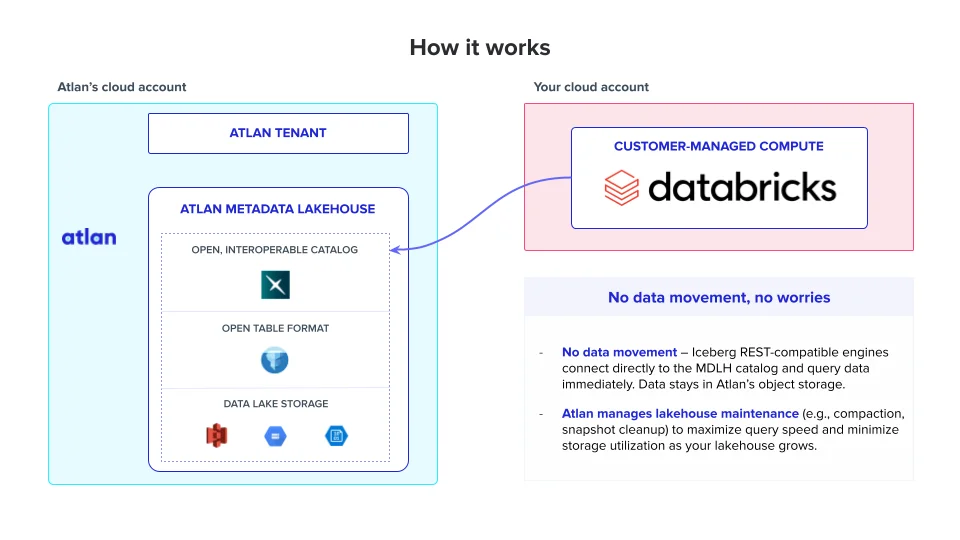

It stores metadata in an open, Apache Iceberg-based lakehouse, the same architectural pattern that modern data and AI platforms like Databricks use for data itself.

By treating metadata like data, open, queryable, and ready for activation, the Metadata Lakehouse unifies technical, semantic, and governance context across the entire ecosystem. Teams can analyze completeness, ownership, and quality with SQL, run operational reports, and feed consistent context directly into AI systems.

“The Atlan Metadata Lakehouse is a unified view of all metadata and usage activity for data assets across your entire architecture. Surfacing all of this context through an open, interoperable lakehouse foundation makes it incredibly easy for Databricks customers to drive data governance, reporting, and AI applications. It’s a game changer for our customers.” — Ben Hudson, Product Lead, Atlan Metadata Lakehouse

A shared vision with Databricks

Atlan and Databricks share a common vision: a future without lock-in, where openness, interoperability, and intelligence define the modern data stack.

Databricks has long championed open formats and architectures like Delta and Iceberg. As a launch partner for the Metadata Lakehouse, Databricks extends that philosophy to metadata, ensuring that customers can unify context across their data and AI ecosystems, no matter where it lives.

Together, Databricks and Atlan are helping enterprises turn context into a strategic asset: the connective tissue between data, AI, and trust.

Paired with Databricks, the Metadata Lakehouse turns metadata into a first-class citizen:

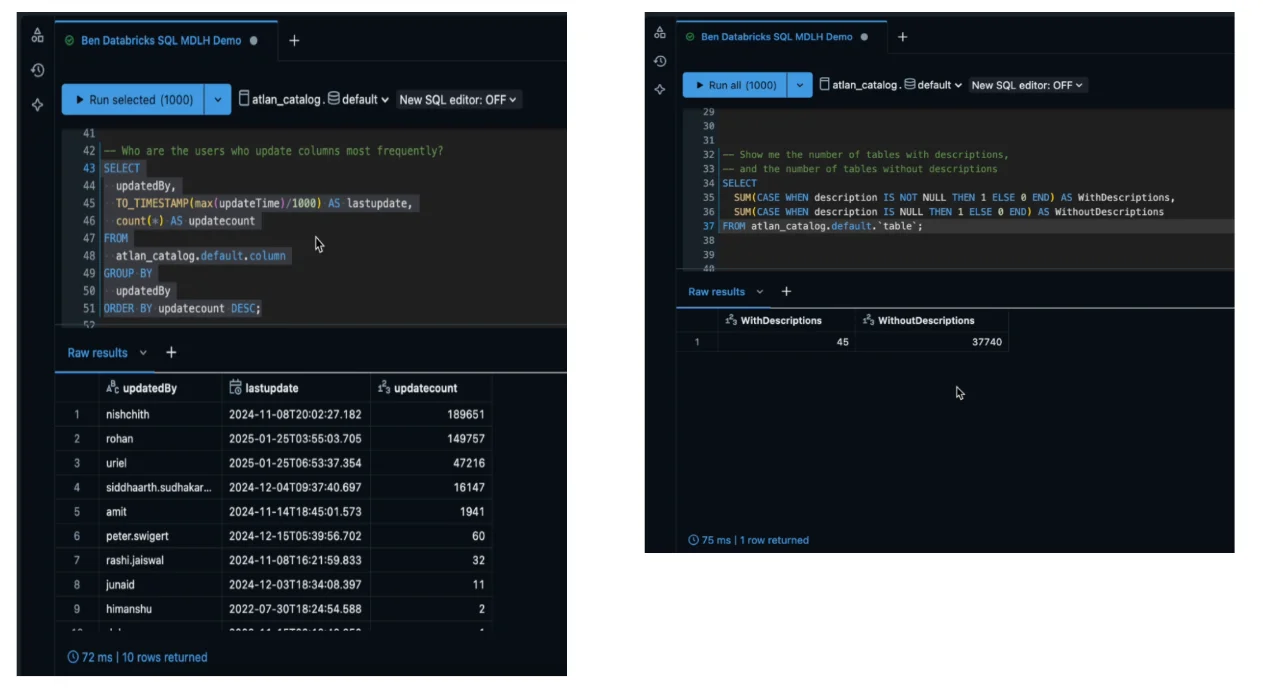

- Query it like data. Use SQL to explore the completeness, ownership, popularity, and quality of your assets.

- Expose curation gaps. Examples:
  - “Which tables don’t have descriptions?”
  - “Which databases are the largest but rarely accessed?”
  - “Who are my most active contributors?”

- Guide investment. Decide which datasets should be enriched, which should be migrated, and which can be deprecated.
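As a rough illustration of querying metadata like data, the sketch below runs two of the curation-gap questions as SQL against an in-memory SQLite table. Everything about the schema is an assumption made for this example: the `assets` table, its columns, the sample rows, and the size and usage thresholds are hypothetical and are not the Metadata Lakehouse's actual tables.

```python
import sqlite3

# Hypothetical metadata table; real lakehouse schemas will differ.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE assets (
        name TEXT,
        asset_type TEXT,
        owner TEXT,
        description TEXT,
        size_gb REAL,
        queries_last_30d INTEGER
    )
""")
conn.executemany(
    "INSERT INTO assets VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("sales.orders", "table", "alice", "Customer orders", 120.0, 950),
        ("sales.refunds", "table", "alice", None, 4.5, 40),
        ("archive.events_2019", "table", "bob", "", 2300.0, 2),
        ("finance.revenue", "table", "carol", "Recognized revenue", 60.0, 610),
    ],
)

# "Which tables don't have descriptions?"
# Treat both NULL and blank strings as missing.
undocumented = [row[0] for row in conn.execute("""
    SELECT name
    FROM assets
    WHERE asset_type = 'table'
      AND (description IS NULL OR TRIM(description) = '')
    ORDER BY name
""")]

# "Which assets are the largest but rarely accessed?"
# The 1000 GB and 10-query thresholds are arbitrary, for illustration.
large_but_idle = [row[0] for row in conn.execute("""
    SELECT name
    FROM assets
    WHERE size_gb > 1000 AND queries_last_30d < 10
    ORDER BY size_gb DESC
""")]

print("Undocumented tables:", undocumented)
print("Large but rarely accessed:", large_but_idle)
```

The same pattern would apply in any SQL engine that can read the metadata tables; only the table and column names would change.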

The power of the Metadata Lakehouse combined with Databricks

The Metadata Lakehouse isn’t just an architectural upgrade; it’s a new foundational context store for AI-ready enterprises.

- Unified metadata across Databricks and the broader ecosystem: A bi-directional integration between Unity Catalog and Atlan keeps metadata consistent across the enterprise. Read and write updates flow seamlessly between Databricks and Atlan’s 100+ connectors and open connector framework, synchronizing schemas, lineage, and ownership across warehouses, pipelines, BI, and ML tools.

- Enriched context beyond technical metadata: The Metadata Lakehouse layers semantic, governance, and quality metadata from Atlan’s glossary and context stores on top of Databricks’ native technical metadata. Assets in Unity Catalog gain verified definitions, ownership, and trust signals, making them understandable and usable in business context for both humans and AI agents.

- Power real-time governance: Through the Metadata Lakehouse’s activation layer and its apps, teams can perform real-time lineage tracing, impact analysis, search, and policy workflows at scale. Operational signals such as usage, popularity, and completeness flow back into Databricks, transforming static metadata into an active control plane for governance, cost optimization, and AI readiness.

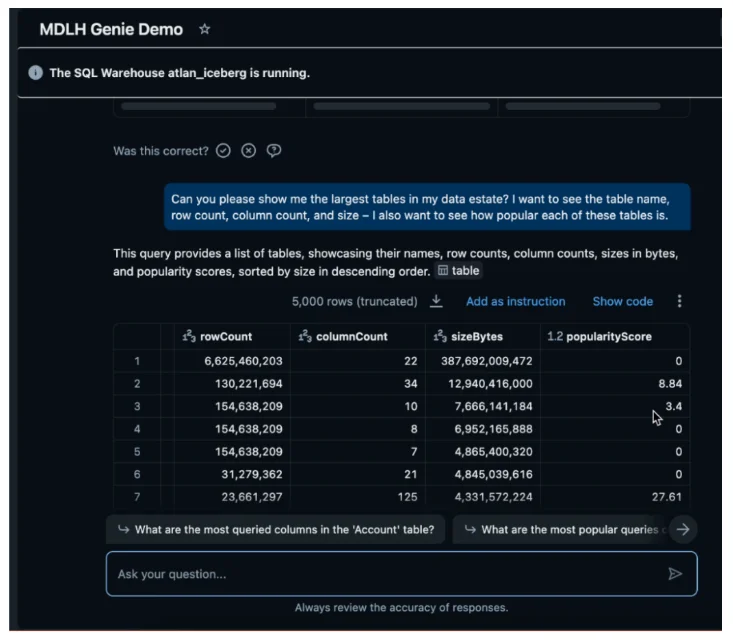

- Improve Databricks Genie accuracy with closed-loop context: The Lakehouse optimizes Genie responses by supplying rich context and captures metadata created by Genie (new terms, relationships, usage patterns) through the Atlan MCP Server. This creates a feedback loop where AI both consumes and generates metadata, improving accuracy and accelerating new AI application development.

Real-world scenarios:

- Data leader: Asks Genie, “Show me high-cost datasets across my estate that aren’t being used.” Atlan metadata reveals cross-platform optimization opportunities.

- Analyst: Asks, “Which revenue table should I trust?” Genie surfaces the most curated, most used, and best documented source.

- Governance manager: Runs a SQL query in Databricks: “Count all assets without descriptions by owner.” Now they know exactly who to engage for stewardship.

- Platform architect: Uses adoption signals to identify datasets that should be migrated into Databricks for performance and cost efficiency.
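The governance manager's request, “Count all assets without descriptions by owner,” maps naturally onto a grouped SQL query. The sketch below shows one way it might look, using an in-memory SQLite table as a stand-in; the `assets` table, its columns, and the sample rows are hypothetical and do not reflect the actual schema exposed in Databricks.

```python
import sqlite3

# Hypothetical stand-in for a metadata table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE assets (name TEXT, owner TEXT, description TEXT)")
conn.executemany(
    "INSERT INTO assets VALUES (?, ?, ?)",
    [
        ("sales.orders", "alice", "Customer orders"),
        ("sales.refunds", "alice", None),
        ("archive.events_2019", "bob", ""),
        ("archive.events_2020", "bob", None),
        ("finance.revenue", "carol", "Recognized revenue"),
    ],
)

# Count assets with a missing (NULL or blank) description, per owner,
# so the owners with the most gaps appear first.
missing_by_owner = conn.execute("""
    SELECT owner, COUNT(*) AS missing_descriptions
    FROM assets
    WHERE description IS NULL OR TRIM(description) = ''
    GROUP BY owner
    ORDER BY missing_descriptions DESC, owner
""").fetchall()

for owner, missing in missing_by_owner:
    print(f"{owner}: {missing} asset(s) without a description")
```

Owners whose assets are all documented do not appear in the result, which keeps the output focused on who to engage for stewardship.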

Already, shared customers like General Motors, Mastercard, and FOX are using this architecture to unify metadata, accelerate collaboration, and enable trusted AI adoption.

The road ahead: an open foundation for AI-native enterprises

As enterprises transition into the AI-native era, the need for open metadata infrastructure has never been clearer. The Metadata Lakehouse sits at the center of this transformation, bridging governance, business meaning, and AI through a single, interoperable layer of context.

Together, Atlan and Databricks are building an open foundation for trusted, AI-powered innovation, with context at its core.

Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.