Just as the Data Warehouse Defined BI, the Context Layer Will Define AI

In 1996, at the dawn of the internet era, Bill Gates wrote that “content is king.” In 2025, at the dawn of the AI era, context is king, and nowhere is that clearer than in enterprise AI.

Last Updated on: February 3rd, 2026 | 17 min read

Over the past year, as artificial intelligence has moved from research labs into real businesses, one word keeps surfacing: context. You see it everywhere. Context windows. Context tokens. Model Context Protocol.

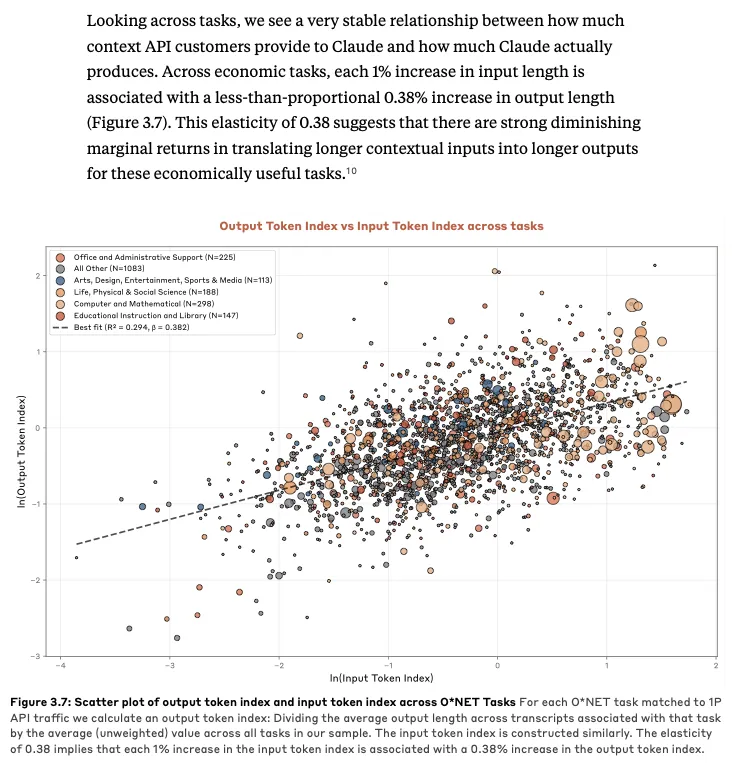

Recent research from Anthropic pointed out that enterprises that fail to effectively gather, organize, and operationalize contextual data — particularly data rooted in their organizational knowledge — will struggle to deploy sophisticated AI systems.

Scatter plot of output token index and input token index across O*NET Tasks. Source: Anthropic Report.

At the same time, Chroma’s Context Rot report showed that more context isn’t always better — that beyond a point, excessive or irrelevant context actually worsens model reasoning.

So what’s going on? Why is everyone suddenly talking about context?

For decades, enterprise systems have evolved in layers. First came systems of record: databases that stored what happened. Then came systems of intelligence: analytics tools that explained why it happened. Most of these systems were designed for coordination: to move data, track progress, and report outcomes. Now, as AI begins to act on our behalf, we’ve entered the age of systems of agency.

But until now, the “agents” inside companies were humans. And human context — the invisible glue that holds organizations together — has never been captured in software.

Every business runs on thousands of unwritten rules no one has ever documented. When to offer a discount. When to escalate. When to bend a policy — and when to absolutely not. These rules live in people’s heads — and nowhere else.

So when AI fails, it’s rarely because the model is wrong or the team that built it messed up. It fails because the AI doesn’t know what humans know — the unwritten rules, the edge cases, the “oh, except when…” judgment calls that make everything actually work.

This is what I am beginning to call the AI context gap.

For AI systems to truly work, we have to teach them what humans intuitively know. In other words, we have to teach AI context.

Context 101

Before we start dreaming up solutions to this problem, let’s get to shared context (about context!). What the hell is “context”?

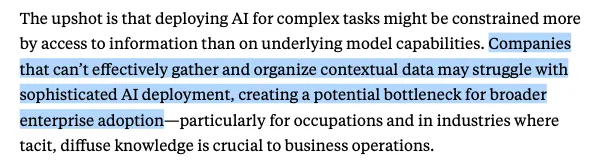

One question I get often is: How is context different from data? Here’s how I think about it: Data is what is. Context is what it means.

How data is different from context. Source: Atlan.

In essence, context is the interpretation layer that transforms data into action.

We can think of it across three tiers:

- Structural Context — definitions, relationships, hierarchies

- Operational Context — rules, procedures, decision logic

- Behavioral Context — patterns, preferences, historical lessons
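To make the three tiers a bit more concrete, here is a minimal sketch of how they might be represented in code. The `ContextTier`, `ContextItem`, and `context_for` names, and the example statements about discounts, are my own illustrations rather than part of any real product or standard.

```python
from dataclasses import dataclass
from enum import Enum


class ContextTier(Enum):
    STRUCTURAL = "structural"    # definitions, relationships, hierarchies
    OPERATIONAL = "operational"  # rules, procedures, decision logic
    BEHAVIORAL = "behavioral"    # patterns, preferences, historical lessons


@dataclass(frozen=True)
class ContextItem:
    tier: ContextTier
    subject: str    # the term or entity the context is about
    statement: str  # the meaning, rule, or lesson, in plain language


def context_for(subject: str, items: list[ContextItem]) -> dict[ContextTier, list[str]]:
    """Group everything known about one subject by tier."""
    grouped: dict[ContextTier, list[str]] = {tier: [] for tier in ContextTier}
    for item in items:
        if item.subject == subject:
            grouped[item.tier].append(item.statement)
    return grouped


# Hypothetical examples, invented for illustration.
items = [
    ContextItem(ContextTier.STRUCTURAL, "discount", "A discount is a percentage off list price."),
    ContextItem(ContextTier.OPERATIONAL, "discount", "Discounts above 20% need manager approval."),
    ContextItem(ContextTier.BEHAVIORAL, "discount", "Q4 renewals have usually received a discount."),
    ContextItem(ContextTier.STRUCTURAL, "customer", "A customer is an account with a signed contract."),
]
discount_context = context_for("discount", items)
```

The point of the grouping is that an agent asking about "discount" gets the definition, the rule, and the historical pattern together, not just the number in a table.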

So how do we teach agents human context?

The Two Emerging Paths for Enterprise Context

Path 1: Local Solutions

Right now, most companies are building what I’d call context islands, with each team solving for its own agents in isolation. We’ve seen this movie before. In the early data era, every team built its own system of record. It worked until coordination mattered. Then came the great data cleanup: integration layers, data lakes, warehouses, catalogs, and governance frameworks.

Local context layers recreate the same problem — only this time, the consequences are far more severe, because AI doesn’t just analyze this context, it acts on it.

LLMs don’t “think.” They reason over context. If two agents see different versions of the same world, they can’t collaborate or make consistent decisions.

Imagine a hospital where one agent triages patients using last night’s capacity data, while another schedules emergency staff based on this morning’s roster. Both are acting rationally within their local context — and together, they’ve just delayed critical care for patients who can’t afford to wait.

That’s what happens when context fragments. Without a shared, enterprise-wide context layer, your AI behaves like a company full of interns with no onboarding — capable, eager, and utterly uninformed.
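One way to picture the hospital example is as a freshness check that neither agent can run on its own, because neither knows what the other is looking at. The sketch below assumes each piece of context carries an `as_of` timestamp; the `ContextSnapshot` type, the `consistent` helper, and the dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ContextSnapshot:
    name: str
    as_of: datetime  # when this view of the world was captured


def consistent(snapshots: list[ContextSnapshot], max_skew: timedelta) -> bool:
    """True if all snapshots were captured within max_skew of each other."""
    if len(snapshots) < 2:
        return True
    times = [s.as_of for s in snapshots]
    return max(times) - min(times) <= max_skew


# Last night's capacity data versus this morning's roster (invented timestamps).
capacity = ContextSnapshot("bed_capacity", datetime(2025, 1, 1, 23, 0))
roster = ContextSnapshot("staff_roster", datetime(2025, 1, 2, 7, 0))
ok = consistent([capacity, roster], max_skew=timedelta(hours=1))  # False: 8 hours apart
```

A check like this only becomes possible once both agents read from a shared layer that knows about both snapshots.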

Path 2: Context as Infrastructure

The alternative is to treat context as infrastructure: a shared foundation that every system depends on. Netflix already does this with its Unified Data Architecture, ensuring every system that references a “movie” means the same thing.

Instead of dozens of teams building isolated context stores, we can build a shared, federated context layer: a living system that encodes how the company thinks, decides, and acts.

In this model, context becomes part of the company’s infrastructure, not its applications. It turns scattered knowledge into a single, reliable frame of reference.

The Rise of the Enterprise Context Layer

Building a context layer isn’t about sitting in a room and documenting everything. It’s about creating systems that capture, maintain, and deliver context automatically. These systems are built on four core components:

1. Context Extraction

You can’t manually document every piece of context. You need systems that can infer rules from behavior by mining decision logs, analyzing communication patterns, and learning from historical actions.

For example:

- Sales context lives in CRMs, pricing rules, and exceptions learned over years.

- Finance context lives in policy docs, approval flows, and conversations.

- Support context lives in ticket resolutions, macros, and feedback loops.

In most organizations, the raw material for context already exists. We could probably extract 80% of our unwritten rules just by analyzing Slack messages, PR reviews, and support tickets. The patterns are already there — they’ve simply never been codified.

That’s why the context layer needs to connect across a fragmented landscape — from knowledge bases to CRMs, from data lakes to BI tools. And it must intelligently mine them to create a bootstrapped version of your organization’s collective context.
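As a toy illustration of inferring a rule from behavior, the sketch below looks at a log of past discount decisions and finds the discount level at or above which a manager was always involved. The `Decision` fields and the sample log are invented, and real extraction from messages, reviews, and tickets would be far messier than this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    discount_pct: float
    escalated: bool  # whether a manager was involved


def infer_escalation_threshold(log: list[Decision]) -> Optional[float]:
    """Smallest discount at or above which every logged decision was escalated."""
    candidates = sorted({d.discount_pct for d in log if d.escalated})
    for threshold in candidates:
        at_or_above = [d for d in log if d.discount_pct >= threshold]
        if all(d.escalated for d in at_or_above):
            return threshold
    return None  # no escalations in the log, so no rule to infer


# Hypothetical decision log.
log = [
    Decision(5, False),
    Decision(10, False),
    Decision(12, False),
    Decision(15, True),
    Decision(20, True),
    Decision(25, True),
]
threshold = infer_escalation_threshold(log)  # 15
```

The output is a candidate rule, not a verified one. It still needs a human to confirm that "escalate at 15% and above" is actually the policy and not a coincidence of the sample.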

This is where the foundation of the Enterprise Context Layer begins: turning the scattered traces of human judgment into a structured, evolving system of shared understanding.

2. Creating “Context Products” as Minimum Viable Context

In the data world, the concept of a data product became foundational: a reusable, reproducible, trusted, and governed unit of value. It turned messy datasets into reliable, consumable assets that teams could depend on.

In the age of AI, we can imagine that a context product will be an equivalent building block.

Context products are structured representations of data plus the shared understanding that gives that data meaning. They combine facts, relationships, rules, and definitions into a single, verified unit of context that AI systems and humans can trust. Each one is a living artifact that tells an AI not just what is true, but how to think about it.

To put this into perspective, consider one of our customers, a major content publisher, that was rolling out an AI analyst for a specific domain. Their business users needed to answer questions like, “During the war, what happened to our political shows?”

To answer this, the agent needed to:

- Identify which “war” the user meant, based on public and internal information.

- Map it to a time period and encode it in a time series.

- Understand what “political” means in the company’s taxonomy, and link it to specific shows.

- Determine what “happened” meant — which metric defines performance: ratings, reviews, or revenue.

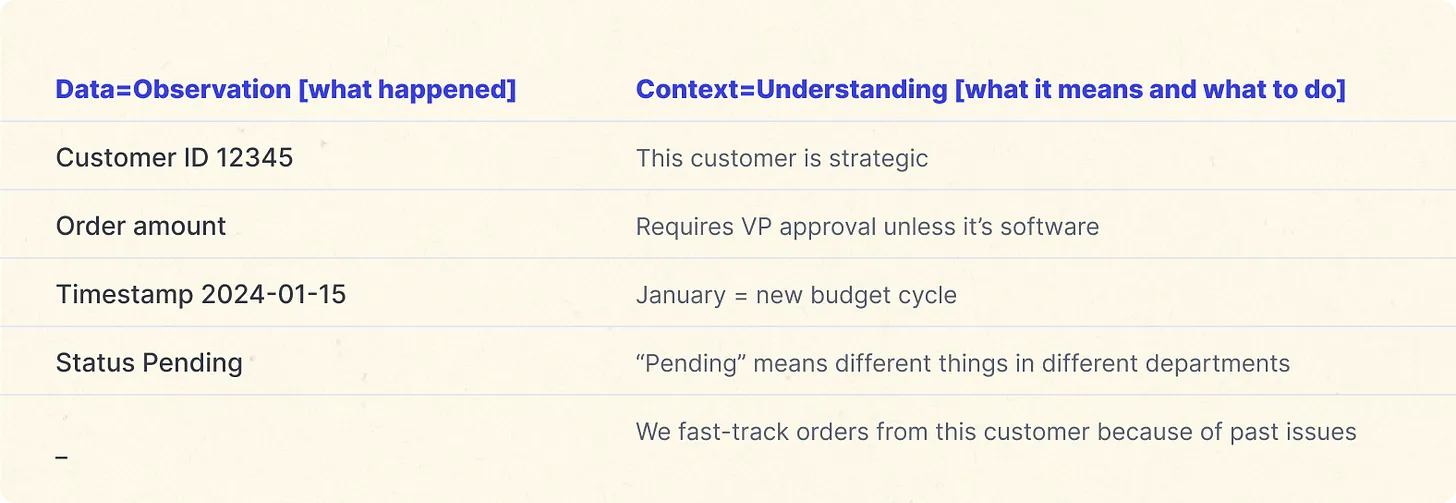

This is what we call context layering — combining data with domain-specific meaning so agents can think and act intelligently.
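Here is a rough sketch of what resolving that question against layered context could look like. Everything in it is made up for illustration: the `EVENTS`, `TAXONOMY`, and `DEFAULT_METRIC` lookups, the dates, and the show titles. A real agent would resolve these terms with much richer context than substring matching.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ResolvedQuestion:
    start: date
    end: date
    shows: tuple[str, ...]
    metric: str


# Hypothetical context, invented for this example.
EVENTS = {"the war": (date(2020, 1, 1), date(2020, 6, 30))}
TAXONOMY = {"political": ("Capitol Tonight", "The Ballot Hour")}
DEFAULT_METRIC = {"what happened": "ratings"}


def resolve(question: str) -> ResolvedQuestion:
    """Map the vague terms in a question onto dates, shows, and a metric."""
    text = question.lower()
    event = next((k for k in EVENTS if k in text), None)
    genre = next((k for k in TAXONOMY if k in text), None)
    phrase = next((k for k in DEFAULT_METRIC if k in text), None)
    missing = [
        name
        for name, value in (("event", event), ("genre", genre), ("metric", phrase))
        if value is None
    ]
    if missing:
        # Better to ask the user than to guess at missing context.
        raise LookupError(f"no context for: {', '.join(missing)}")
    start, end = EVENTS[event]
    return ResolvedQuestion(start, end, TAXONOMY[genre], DEFAULT_METRIC[phrase])


resolved = resolve("During the war, what happened to our political shows?")
```

Note that the function refuses to answer when a term has no context behind it. That failure is useful: it tells you exactly which piece of context still needs to be seeded.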

To solve this, the team created a context product — a verified, reusable unit of organizational understanding. It had access to relevant data assets, verified queries, and definitions of key business terms. It drew from the company’s knowledge base and org chart. Some of it was auto-generated, while other parts were seeded through a human-in-the-loop process.

Context Product. Source: Atlan.

Building a context product is a collaborative process between humans and AI. Think of it as a test suite for context. Teams define a set of golden questions: the questions this agent should be able to answer. The system runs those questions as evaluations, measures accuracy, and iteratively seeds new context until performance hits a threshold.

That’s the moment the system passes the minimum viable context bar — when an agent has enough verified context to operate reliably in production.
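The "test suite for context" idea can be sketched in a few lines. This is a simplified version under some loud assumptions: the agent is a stand-in that just looks answers up in a dictionary, grading is exact string match (real evaluations would need fuzzier grading), and the 0.9 threshold is an arbitrary placeholder.

```python
from typing import Callable


def pass_rate(agent: Callable[[str], str], golden: dict[str, str]) -> float:
    """Fraction of golden questions the agent answers exactly as expected."""
    if not golden:
        raise ValueError("golden question set is empty")
    correct = sum(1 for question, expected in golden.items() if agent(question) == expected)
    return correct / len(golden)


def meets_minimum_viable_context(
    agent: Callable[[str], str], golden: dict[str, str], threshold: float = 0.9
) -> bool:
    return pass_rate(agent, golden) >= threshold


# Hypothetical golden questions and a stub agent backed by seeded context.
golden = {
    "What counts as a political show?": "Shows tagged 'politics' in the taxonomy",
    "Which metric defines performance?": "Ratings",
}
context = {"What counts as a political show?": "Shows tagged 'politics' in the taxonomy"}


def agent(question: str) -> str:
    return context.get(question, "unknown")


before = pass_rate(agent, golden)  # 0.5: one of two questions answered
context["Which metric defines performance?"] = "Ratings"  # a human seeds the missing context
after = pass_rate(agent, golden)   # 1.0
ready = meets_minimum_viable_context(agent, golden)
```

The loop in practice is the same as here, just larger: run the golden questions, see what fails, seed context, and run them again.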

Every useful AI system will eventually depend on context products. They’ll become the modular units of organizational intelligence — the blocks that power decision-making at scale.

3. Human-in-the-Loop Context Feedback Loops

The interesting thing about context is that it’s never done. It’s not something you “set up” once and move on. It’s alive, and it changes as your company changes. New products, new people, new priorities: all of that shifts your context every day.

The strength of a context layer isn’t in being complete — it’s in being continuously improved. That’s where context feedback loops come in.

Every interaction between a human and an AI agent is a chance to refine your organization’s shared understanding. The system answers, the human adjusts, and that correction becomes part of the institutional memory.

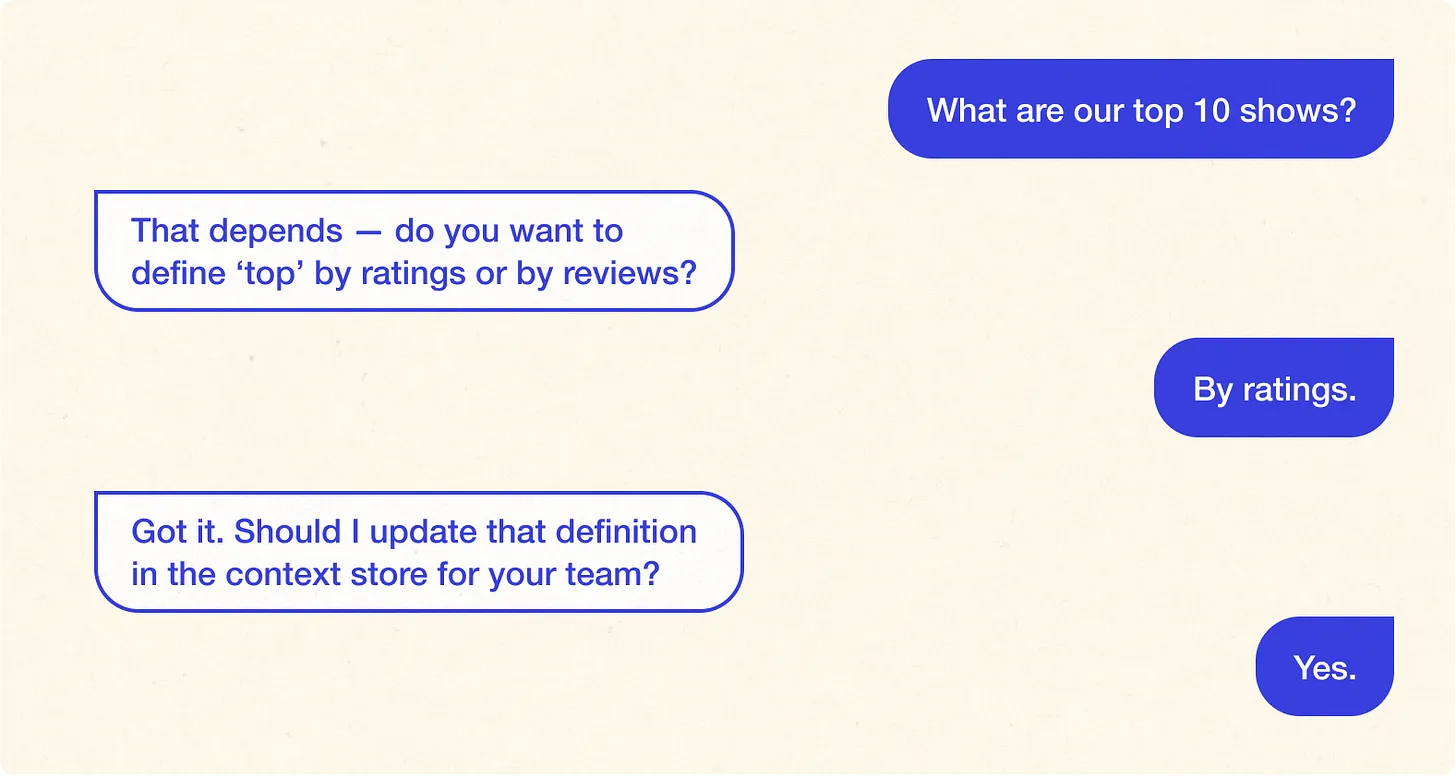

Imagine a quick exchange between a user and an AI agent at a media company:

Talk To Your Data/Agent. Source: Atlan.

Over time, this simple feedback loop — question, clarification, confirmation — builds a more precise and human-aligned system.

The same mechanism resolves conflict. If Finance defines a customer as “Enterprise” while Sales calls them “Mid-Market,” the system doesn’t choose arbitrarily. It flags the mismatch, escalates to a domain expert, and captures the decision so the conflict never repeats.

In this way, context becomes self-healing.
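A minimal sketch of that flag, escalate, and capture flow might look like the following. The `SegmentRegistry` class and its method names are hypothetical, and the escalation step is reduced to a human calling `resolve`.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SegmentRegistry:
    """Tracks how each team classifies an account and records expert rulings."""

    claims: dict[str, dict[str, str]] = field(default_factory=dict)  # account -> team -> label
    rulings: dict[str, str] = field(default_factory=dict)            # account -> resolved label

    def claim(self, account: str, team: str, label: str) -> None:
        self.claims.setdefault(account, {})[team] = label

    def conflicts(self) -> list[str]:
        """Accounts where teams disagree and no expert has ruled yet."""
        return sorted(
            account
            for account, by_team in self.claims.items()
            if len(set(by_team.values())) > 1 and account not in self.rulings
        )

    def resolve(self, account: str, label: str) -> None:
        """Capture a domain expert's decision so the conflict does not repeat."""
        self.rulings[account] = label

    def label_for(self, account: str) -> Optional[str]:
        if account in self.rulings:
            return self.rulings[account]
        labels = set(self.claims.get(account, {}).values())
        return labels.pop() if len(labels) == 1 else None  # never choose arbitrarily


registry = SegmentRegistry()
registry.claim("Acme", "Finance", "Enterprise")
registry.claim("Acme", "Sales", "Mid-Market")
flagged = registry.conflicts()           # ["Acme"]
unresolved = registry.label_for("Acme")  # None until an expert rules
registry.resolve("Acme", "Enterprise")
resolved_label = registry.label_for("Acme")
```

The important design choice is that `label_for` returns nothing when teams disagree. An agent that gets no answer can ask; an agent that gets an arbitrary answer will act on it.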

4. Context Store

The context store has two aspects, and they sit at the foundation of any context layer:

- Where context lives: It must exist in a form that both humans and machines can work with.

- How context is accessed: Humans need to review and refine it; machines need to pull it instantly to make decisions.

That means the architecture needs to do two things well: store context in multiple specialized ways, and unify how it’s retrieved.

A modern context layer might look something like this:

- Graph store: captures relationships — who reports to whom, how teams and approvals connect.

- Vector store: holds unstructured knowledge — documents, conversations, historical decisions.

- Rules engine: encodes business logic — compliance rules, policies, if–then procedures.

- Time-series store: keeps track of how things change over time — trends, historical patterns, audit trails.
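To show what "store in multiple specialized ways, unify retrieval" could mean in practice, here is a small sketch. The `ContextStore` protocol and `retrieve` function are assumptions of mine, and `DictStore` is only a stand-in for real graph, vector, rules, and time-series backends.

```python
from typing import Protocol


class ContextStore(Protocol):
    kind: str

    def lookup(self, subject: str) -> list[str]: ...


class DictStore:
    """Stand-in for a graph, vector, rules, or time-series backend."""

    def __init__(self, kind: str, data: dict[str, list[str]]):
        self.kind = kind
        self.data = data

    def lookup(self, subject: str) -> list[str]:
        return self.data.get(subject, [])


def retrieve(subject: str, stores: list[ContextStore]) -> dict[str, list[str]]:
    """Ask every store about a subject; keep only stores that had something to say."""
    results: dict[str, list[str]] = {}
    for store in stores:
        hits = store.lookup(subject)
        if hits:
            results[store.kind] = hits
    return results


# Hypothetical contents, invented for illustration.
stores = [
    DictStore("graph", {"approvals": ["Deal desk reports to Finance"]}),
    DictStore("vector", {"approvals": ["Memo on how discount approvals were handled"]}),
    DictStore("rules", {"approvals": ["Discounts above 20% need manager approval"]}),
    DictStore("time_series", {}),
]
approvals_context = retrieve("approvals", stores)
```

Each backend can be as specialized as it needs to be, as long as callers only ever see the single `retrieve` entry point.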

Interfaces and Interface Points

In data, SQL became the universal standard. In AI, that common interface doesn’t exist yet, and therein lies the potential of the interface layer.

The interface layer is the connective tissue that makes the separate context stores feel like one system, which arguably makes it more powerful than the stores themselves. This is where retrieval, reasoning, and governance converge.

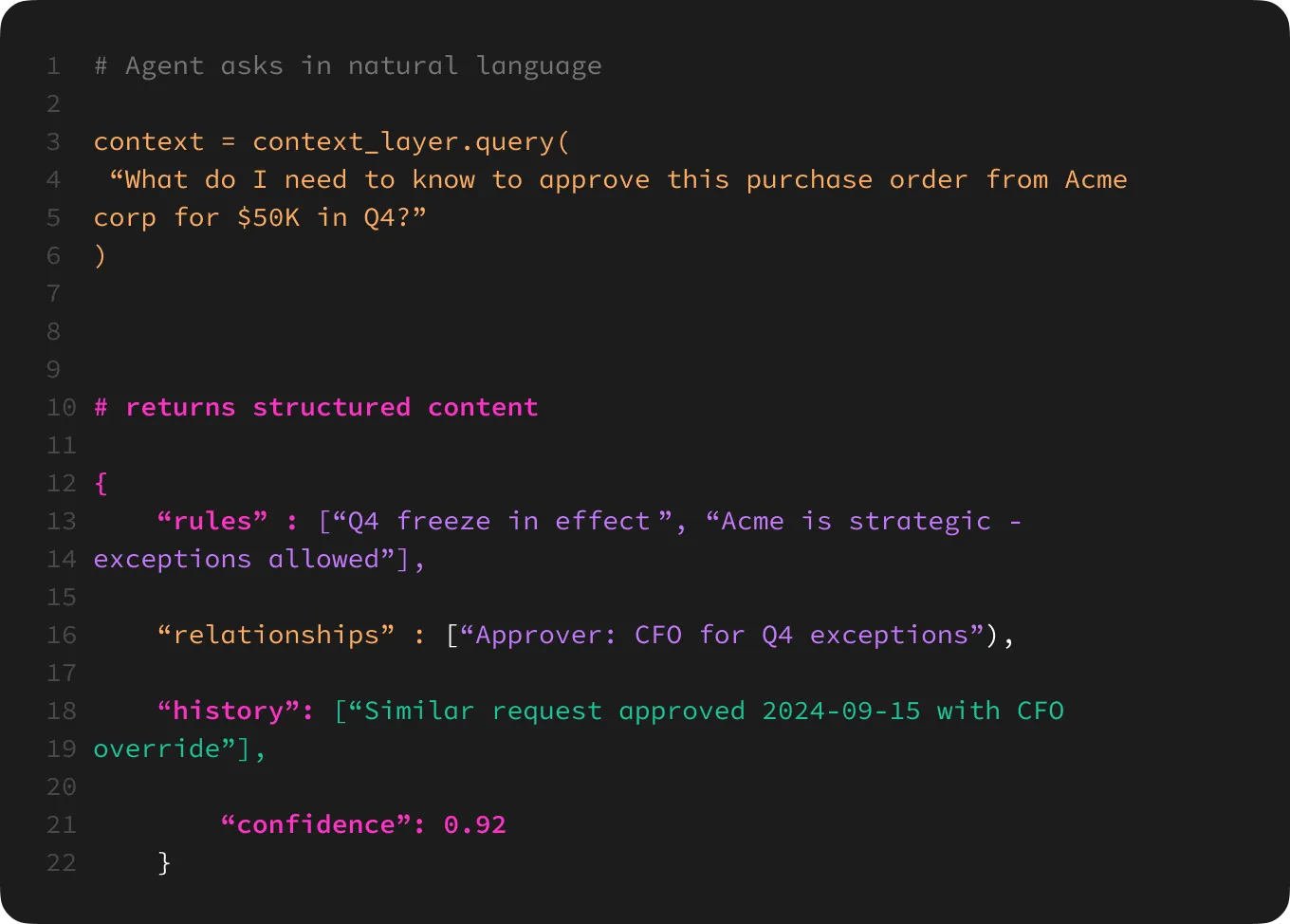

One core building block is a natural language query interface: Imagine being able to ask your company a question — in plain language — and the system pulls context from everywhere it needs to.

Natural Language Query Interface. Source: Atlan.

Governance, Scale, and Interoperability

You can imagine a world where the context layer is natively MCP-ready: built to communicate seamlessly across models, agents, and tools, yet flexible enough to evolve as new standards form. To reach that point, it has to be governed, scalable, and interoperable.

Still, context is a living thing. It changes each time a decision is made, a team shifts, or a new system comes online. The only way to keep it trustworthy is to make its evolution visible — every update tracked, every change explainable. When something breaks, you should be able to trace it back, see the decision, and understand why it happened.
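One simple way to make that evolution visible is an append-only change log, where every update records who changed what and why. The sketch below is one possible shape for it; the `AuditedContext` and `Change` names and the example values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Change:
    key: str
    old: Optional[str]
    new: str
    author: str
    reason: str
    at: datetime


class AuditedContext:
    """Key-value context where every update is appended to a change log."""

    def __init__(self) -> None:
        self._values: dict[str, str] = {}
        self._log: list[Change] = []

    def set(self, key: str, value: str, author: str, reason: str) -> None:
        previous = self._values.get(key)
        self._log.append(Change(key, previous, value, author, reason, datetime.now(timezone.utc)))
        self._values[key] = value

    def get(self, key: str) -> str:
        return self._values[key]

    def history(self, key: str) -> list[Change]:
        """Every change to a key, oldest first, so a decision can be traced back."""
        return [change for change in self._log if change.key == key]


ctx = AuditedContext()
ctx.set("enterprise_min_seats", "500", author="finance_lead", reason="Initial definition")
ctx.set("enterprise_min_seats", "1000", author="finance_lead", reason="Segment review")
trail = ctx.history("enterprise_min_seats")
```

When an agent makes a surprising call, `history` answers the two questions that matter: what did the context say at the time, and who decided it should say that.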

The same architecture must also scale to match the rhythm of modern decision-making: agents retrieving context millions of times per day, often in milliseconds. A context layer has to operate at the speed of inference, not storage.

And like all resilient systems, it must remain open. The future of context will be federated, not centralized — interoperable across tools, architectures, and standards. Just as Iceberg-native systems proved data can remain distributed and still act as one, the context layer will do the same for knowledge — open by design, consistent by default, and adaptive by necessity.

Context Layer FAQs

What is a context layer for AI systems?

A context layer is the infrastructure that provides AI systems with the business meaning, relationships, and operational rules needed to understand and act on enterprise data. It transforms raw data into actionable intelligence by encoding what data represents, how it connects, and why it matters. Without a context layer, AI models lack the organizational knowledge required to make accurate, trusted decisions, which leads to hallucinations and misaligned outputs. Think of it as the difference between giving your AI raw ingredients versus a complete recipe with instructions.

How is a context layer different from a data layer?

A context layer interprets and enriches data, while a data layer simply stores it. Your data layer contains facts (numbers, records, transactions) but lacks meaning. The context layer adds the “why” and “how”: business definitions, relationships between entities, governance rules, and historical patterns. When your AI needs to understand that “customer churn” means something specific to your business, that understanding lives in the context layer, not the data layer.

What are the main components of an enterprise context layer?

An enterprise context layer typically includes four interconnected components. Context extraction tools pull knowledge from documents, conversations, and existing systems automatically. Context products package verified data with business meaning. Human-in-the-loop feedback loops continuously refine organizational understanding. The context store houses relationships, business logic, and historical patterns across graph, vector, rules-based, and time-series systems, and its retrieval interfaces deliver the right context to humans and AI agents in milliseconds at inference time.

What is “minimum viable context” for AI deployment?

Minimum viable context is the threshold of verified organizational knowledge an AI system needs to operate reliably in production. It includes essential business definitions, data relationships, and decision rules for a specific use case. You’ve effectively reached minimum viable context when your AI can consistently answer a curated set of “golden questions” for that domain (an evaluation set of real-world queries) without requiring constant human correction. This threshold varies by complexity: a simple reporting agent needs less context than an agent making autonomous pricing or purchasing decisions.

How do you build a context layer for your organization?

Start by identifying your highest-value AI use cases and the knowledge gaps causing failures today. Extract context from existing sources: CRM systems, policy documents, support tickets, analytics queries, and team conversations often contain most of the unwritten rules. Create context products that package data assets with verified business definitions and decision logic. Implement feedback loops so human corrections and “golden question” evaluations continuously improve organizational understanding. Build governance mechanisms to track changes and maintain trust. Most organizations begin with a single domain before scaling context infrastructure enterprise-wide.

What data sources feed into a context layer?

A context layer draws from both structured and unstructured sources across your organization. Structured sources include databases and warehouses, CRM and ERP systems, business glossaries, and semantic layers. Unstructured sources encompass policy documents, email threads, Slack conversations, support tickets, wikis, and meeting notes, where substantial organizational knowledge often lives undocumented. The context layer also captures human feedback, decision history, and relationship mappings that may not exist anywhere else in your data infrastructure.

How long does it take to implement a production context layer?

Initial context layers for focused use cases can often be delivered in weeks to a few months, not years. You can usually achieve minimum viable context for a single domain, such as customer analytics or financial reporting, relatively quickly by starting from existing metadata, semantic models, and business glossaries instead of a blank slate. Enterprise-wide deployment takes longer as you expand across domains, integrate more sources, and harden feedback loops and governance. The key is starting narrow, proving value, then scaling systematically rather than attempting comprehensive coverage immediately.

What is the difference between a context layer and a semantic layer?

A semantic layer translates technical data into business-friendly terms and consistent metrics. It standardizes how you query and calculate things like “revenue” or “active customers.” A context layer goes further by capturing relationships, operational rules, historical patterns, and organizational knowledge that AI needs to reason and act intelligently. Your semantic layer might define “revenue” consistently; your context layer teaches AI when revenue recognition rules have exceptions, which customers require special handling, and how different teams interpret the same metric differently. In practice, the semantic layer often becomes one of the most important inputs into the context layer. They’re complementary, not competing.

How does a context layer relate to RAG (Retrieval-Augmented Generation)?

RAG is a technique for retrieving relevant information to augment AI responses; a context layer is the infrastructure providing that information. Think of RAG as the retrieval pattern and the context layer as what gets retrieved. Industry research shows that RAG implementations grounded in well-structured, trusted context can significantly reduce hallucinations and improve answer quality. Your context layer makes sure RAG retrieves not just semantically similar documents, but verified business meaning, relationships, and rules. That is often the difference between technically plausible and actually useful AI outputs.

Why does enterprise AI fail without proper context?

Enterprise AI fails without context because models cannot access the unwritten rules, edge cases, and judgment calls that humans intuitively understand. When your AI doesn’t know that “customer” means something different to Finance than to Sales, or that certain pricing rules have regulatory or contractual exceptions, it produces plausible-sounding but wrong answers. Many enterprises report significant data integration difficulties with AI and cite inaccurate or untrusted outputs as a top risk. The context layer fills this gap by encoding organizational understanding (definitions, rules, and exception patterns) into governed, machine-readable form that AI systems can actually use.

How do you measure whether your context layer is working?

Measure context layer effectiveness through AI output quality and trust, not just infrastructure metrics. Track hallucination or override rates, human correction frequency, and time-to-accurate-answer for AI responses. Monitor adoption: are teams trusting AI outputs enough to act on them and embed them in their workflows? Assess context coverage by identifying which “golden questions” your AI still cannot answer reliably and which domains still require heavy manual intervention. Evaluate feedback-loop velocity: how quickly do human corrections and new decisions improve future responses? Successful context layers show declining error and correction rates, fewer “what does this mean?” questions, increasing trust, and expanding AI use cases over time.
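As one example of these measurements, human correction frequency can be tracked per week and checked for a downward trend. The sketch below assumes each logged interaction records the week it happened in and whether a human had to correct the answer; the `Interaction` type and the sample data are invented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    week: int
    corrected: bool  # True if a human had to fix the agent's answer


def correction_rate_by_week(interactions: list[Interaction]) -> dict[int, float]:
    """Share of interactions per week that needed a human correction."""
    totals: dict[int, int] = defaultdict(int)
    fixes: dict[int, int] = defaultdict(int)
    for interaction in interactions:
        totals[interaction.week] += 1
        fixes[interaction.week] += int(interaction.corrected)
    return {week: fixes[week] / totals[week] for week in sorted(totals)}


def is_improving(rates: dict[int, float]) -> bool:
    """True if the correction rate never rises from one week to the next."""
    values = [rates[week] for week in sorted(rates)]
    return all(later <= earlier for earlier, later in zip(values, values[1:]))


# Hypothetical interaction log: 2 of 4 corrected in week 1, 1 of 4 in week 2.
interactions = [
    Interaction(1, True), Interaction(1, True), Interaction(1, False), Interaction(1, False),
    Interaction(2, True), Interaction(2, False), Interaction(2, False), Interaction(2, False),
]
rates = correction_rate_by_week(interactions)
improving = is_improving(rates)
```

A falling correction rate on its own can also mean people have stopped checking the answers, so it is worth reading alongside the adoption and coverage signals above.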

Final Thoughts

Every company is a living system: a network of people, decisions, and unwritten rules that define how things get done. For years, we’ve built systems that captured data but ignored judgment.

Now we have the chance to change that. The next decade of AI won’t be defined by better models — it’ll be defined by better context. Every intelligent system will eventually need to understand not just what data says, but what it means.

Context is that bridge. And the context layer is where to start — a conversation we’ll be continuing at Re:govern, with the people building this future every day.

Re:govern 2025 - The Data & AI Context Summit. Source: Re:govern 2025.

This article was originally published in Metadata Weekly. View the original article here.
