Context Graph: What It Is, How It Works, & Implementation Guide

How context graphs differ from knowledge graphs and lineage systems

The terms “knowledge graph,” “data lineage,” and “context graph” often get used interchangeably, but they serve distinct purposes. Understanding these differences matters when evaluating how to solve metadata challenges.

1. Knowledge graphs

Knowledge graphs represent entities and their semantic relationships in a conceptual model. They focus on “what things are”: defining business concepts, taxonomies, and ontologies. A knowledge graph might capture that “Customer” relates to “Order” through a “Places” relationship, and that “Product” belongs to a “Category.”

Research shows that knowledge graph-based retrieval systems can improve AI accuracy by up to 35% on complex reasoning tasks. However, they’re limited in that they’re often static. They don’t capture operational metadata like lineage, real-time policies, or usage patterns.

2. Decision lineage vs. traditional lineage

Traditional data lineage tracks data flow and transformations. It answers “where” questions, such as:

- Where did this data come from?

- Which jobs processed it?

- Which tables were created?

Decision lineage takes a different approach. It captures decision events, approvals, exceptions, and precedent links. The goal is to answer “why” questions:

- Why was this decision made?

- What policies were active when this report was approved?

- What precedent decisions informed this classification?

This distinction becomes critical for AI agents. When an automated system encounters an edge case, decision lineage provides searchable precedent rather than forcing teams to re-learn the same exception every time.
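To make the idea of searchable precedent concrete, here is a minimal sketch of decision events stored as records that an agent could query. The `DecisionEvent` fields, the policy names, and the `find_precedents` helper are all illustrative assumptions, not part of any specific product.

```python
from dataclasses import dataclass
from datetime import date
from typing import Tuple


@dataclass(frozen=True)
class DecisionEvent:
    """One recorded decision. All field names are hypothetical."""
    decision_id: str
    subject: str                              # asset or case the decision concerned
    outcome: str                              # e.g. "approved", "exception_granted"
    decided_on: date
    active_policies: Tuple[str, ...] = ()     # policies in force at decision time
    precedents: Tuple[str, ...] = ()          # ids of earlier decisions that informed it


def find_precedents(events, subject, policy):
    """Return decisions on the same subject made under the given policy, newest first."""
    matches = [e for e in events if e.subject == subject and policy in e.active_policies]
    return sorted(matches, key=lambda e: e.decided_on, reverse=True)


events = [
    DecisionEvent("d1", "customer_email", "exception_granted", date(2024, 3, 1), ("pii-masking",)),
    DecisionEvent("d2", "customer_email", "approved", date(2024, 9, 15),
                  ("pii-masking", "retention-90d"), ("d1",)),
    DecisionEvent("d3", "order_total", "approved", date(2024, 10, 2), ("finance-cert",)),
]

latest = find_precedents(events, "customer_email", "pii-masking")[0]
print(latest.decision_id, latest.precedents)  # d2 ('d1',)
```

In a real graph store the `precedents` tuple would likely be edges between decision nodes; a flat list is used here only to keep the sketch self-contained.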

3. Data lineage tools

Most data lineage tools excel at tracking technical transformations. They connect tables, columns, jobs, and pipelines to show impact analysis and troubleshooting paths. These tools typically use linear representations optimized for “upstream/downstream” queries.

The challenge emerges with complex multi-hop traversal. Prompts like “Show all dashboards affected by this table change across three transformation layers, filtered by business domain and data classification” require graph-native storage rather than relational joins.
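As a rough illustration of what such a multi-hop query involves, the sketch below walks a small in-memory lineage graph breadth-first and filters the dashboards it reaches. The asset names, attributes, and the `affected_dashboards` function are invented for the example; a production system would express this as a graph-database query rather than Python.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> downstream assets.
DOWNSTREAM = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.churn"],
    "marts.revenue": ["dash.revenue_weekly"],
    "marts.churn": ["dash.churn_risk"],
}

# Hypothetical node attributes carried alongside the lineage.
NODES = {
    "raw.orders": {"type": "table"},
    "staging.orders": {"type": "table"},
    "marts.revenue": {"type": "table"},
    "marts.churn": {"type": "table"},
    "dash.revenue_weekly": {"type": "dashboard", "domain": "finance",
                            "classification": "confidential"},
    "dash.churn_risk": {"type": "dashboard", "domain": "marketing",
                        "classification": "internal"},
}


def affected_dashboards(start, max_hops, domain, classification):
    """Breadth-first walk downstream of `start`, at most `max_hops` edges deep."""
    seen = {start}
    queue = deque([(start, 0)])
    hits = []
    while queue:
        node, hops = queue.popleft()
        attrs = NODES[node]
        if (attrs["type"] == "dashboard"
                and attrs.get("domain") == domain
                and attrs.get("classification") == classification):
            hits.append(node)
        if hops == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in DOWNSTREAM.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return hits


print(affected_dashboards("raw.orders", 3, "finance", "confidential"))
# ['dash.revenue_weekly']
```

The point of the sketch is that lineage, domain, and classification live on the same nodes, so one traversal answers the whole question.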

4. Context graphs

Context graphs unify semantic meaning, operational lineage, and governance. They treat business glossaries, policies, ownership, quality metrics, and usage patterns as first-class graph nodes connected through typed relationships.

Recent research from Cornell University demonstrates the technical foundation of this approach. Their 2024 study on context graphs showed that expanding knowledge graphs with contextual information - including temporal dynamics, geographic location, and source provenance - significantly improves performance on knowledge graph completion and question answering tasks. The research validates that incorporating contextual metadata enables more sophisticated reasoning processes than traditional triple-based knowledge graphs.

Modern data platforms implement context graphs as active metadata layers. Atlan’s approach connects business semantics with technical lineage, making governance policies and metadata relationships queryable alongside data assets. This unified model enables efficient multi-hop queries that traditional lineage tools struggle to handle.

The key advantage: Graph-native storage separates storage from compute, allowing organizations to query relationships at scale without rebuilding indexes for each new question type.

Knowledge graphs vs. data lineage tools vs. context graphs

| Aspect | Knowledge Graphs | Data Lineage Tools | Context Graphs |
|---|---|---|---|
| Primary Purpose | Represent semantic relationships and business concepts | Track technical data flow and transformations | Unify semantic meaning, operational lineage, and governance |
| What It Connects | Business concepts, taxonomies, ontologies | Tables, columns, jobs, pipelines, transformations | All of the above, plus policies, ownership, quality metrics, usage patterns, decision traces |
| Storage Model | Often static triple stores or property graphs | Linear representations optimized for upstream/downstream queries | Graph-native storage with separation of storage and compute |
| Query Pattern | Semantic relationships (“what things are”) | Technical transformations (“where did data come from”) | Multi-hop traversal across semantic, technical, and governance boundaries |
| Primary Use Cases | Semantic search, entity resolution, conceptual modeling | Impact analysis, troubleshooting, compliance reporting | AI-grounded RAG, intelligent impact analysis, policy automation, semantic discovery |
| Key Limitation/Advantage | Limitation: Often static; doesn’t capture operational metadata like lineage or real-time policies | Limitation: Struggles with complex, multi-hop traversal and semantic context | Advantage: Enables efficient multi-hop queries; integrated semantics, lineage, and governance in a single model |

Who builds the context graph? Platform vs. application.

The question of who owns context graphs sparked significant debate in early 2026. Jaya Gupta’s “Context Graphs: AI’s Trillion-Dollar Opportunity” thesis argued that vertical agent startups sitting in execution paths would capture context graphs, since they see decisions as they’re made.

Atlan’s co-founder Prukalpa Sankar offered a counterargument focused on enterprise heterogeneity: while vertical agents see execution paths deeply, most enterprise decisions pull context from 6-10+ systems simultaneously. A single renewal decision might require data from CRM, support tickets, usage analytics, communication platforms, and semantic layers across different vendor combinations.

This heterogeneity challenge - the fact that every enterprise runs different system combinations - suggests context graphs may be fundamentally a platform problem rather than an application one. The discussion reached over 174,000 impressions and over 90 retweets.

Context graphs: The evolution of metadata management

Context graphs represent the natural evolution of what Atlan has championed from the beginning: active metadata management. This isn’t about reinventing data governance - it’s about recognizing that metadata has fundamentally evolved.

From passive documentation to active infrastructure

Traditional metadata management treated metadata as static documentation - technical specifications captured in catalogs, glossaries stored in wikis, and lineage visualized in diagrams. This passive approach worked when data moved slowly and systems changed infrequently.

Active metadata changed the equation. By continuously capturing and enriching metadata across the data stack, organizations could automate governance, accelerate discovery, and enable real-time decision-making. Atlan’s approach to active metadata established the foundation: treat metadata as infrastructure, not documentation.

Context graphs are Metadata 2.0 - the next evolutionary step. They extend active metadata’s continuous capture and enrichment with graph-native relationships, semantic understanding, and operational context. Where active metadata automated governance workflows, context graphs enable AI to reason over your entire data estate.

What makes this evolution necessary

The shift from business intelligence to artificial intelligence demands richer context. BI tools could work with table-level metadata and basic lineage. AI agents need to understand:

- Semantic relationships: How business concepts connect across systems and domains

- Decision traces: Why specific data transformations were applied and what policies governed them

- Temporal context: When data was valid and how definitions changed over time

- Operational patterns: How data is actually used and who relies on it for critical decisions

Gartner’s research found that metadata management was the fastest-growing submarket in data management software - a clear signal that organizations recognize metadata’s strategic importance. Their 2025 Magic Quadrant for Metadata Management Solutions specifically cited Atlan’s vision of being “the metadata control plane to capture, unify, and understand enterprises’ data estates” as central to supporting AI and agentic solutions.

Why this distinction matters

Many vendors are rebranding data catalogs as “context layers” or adding graph databases to legacy metadata systems. The distinction between genuine metadata evolution and marketing repositioning matters.

Context graphs built on active metadata foundations inherit continuous capture, embedded collaboration, and bi-directional sync that traditional catalog architectures can’t replicate. They extend proven metadata orchestration patterns rather than retrofitting graph capabilities onto static systems.

Organizations evaluating context graph approaches should ask: Does this vendor have production-proven active metadata at scale? Can their architecture support real-time context propagation? Do they treat governance as active infrastructure or passive documentation?

The answers reveal whether you’re getting metadata’s natural evolution or just new terminology on old architectures.

What are the core components of context graph architecture?

Context graph architecture consists of five core components: a graph database foundation for relationship storage, a metadata capture layer for continuous collection across the data stack, a semantic enrichment layer that transforms technical metadata into business context, a context activation layer that serves metadata to humans and AI systems, and modeling choices that balance formal reasoning with operational performance. These components work together to transform static metadata into active infrastructure that powers AI agents, impact analysis, and automated governance.

1. Graph database foundation

Context graphs typically run on either triple stores (RDF) or property graphs (Neo4j-style databases). The choice matters.

Triple stores excel in environments requiring formal ontology reasoning, like healthcare, financial services, and government sectors where OWL/RDFS standards drive interoperability.

Property graphs prioritize query performance and developer experience, with native support for query languages such as Cypher and GQL.

Graph databases optimize for relationship traversal rather than JOIN operations. This architectural difference shows up immediately in impact analysis queries. What takes seconds in a graph database might require minutes of complex JOINs in a relational system.

2. Modeling choices: RDF quads vs. property graphs

RDF quad stores add qualifiers – like time, provenance, and confidence – as named graphs. This approach suits regulatory environments requiring audit trails and formal reasoning. Property graphs store context as edge properties, making them more intuitive for application developers.

When to choose RDF: Regulatory environments requiring formal ontology reasoning, integration with existing semantic web infrastructure.

When to choose property graphs: Operational use cases prioritizing query performance and developer experience, native Cypher/GQL support.

Most enterprise implementations favor property graph models for performance. Platforms like Atlan layer semantic capabilities through glossary and policy integrations while maintaining the performance advantages of property graphs.
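The difference between the two modeling styles is easier to see side by side. The sketch below represents the same qualified statement twice using plain Python structures: once as a quad whose qualifiers hang off a named graph, and once as a property-graph edge that carries them directly. The identifiers (`ex:orders_table`, `CLASSIFIED_AS`, and so on) and helper functions are made up for illustration and do not use any RDF or graph-database library.

```python
# RDF-style: the statement is a quad (subject, predicate, object, graph),
# and the qualifiers are attached to the named graph.
quads = [
    ("ex:orders_table", "ex:classifiedAs", "ex:PII", "ex:graph/audit-2024-06"),
]
named_graph_meta = {
    "ex:graph/audit-2024-06": {
        "asserted_on": "2024-06-01",
        "source": "privacy-review",
        "confidence": 0.9,
    },
}

# Property-graph style: the same qualifiers live on the edge itself.
edges = [
    {
        "from": "orders_table",
        "type": "CLASSIFIED_AS",
        "to": "PII",
        "props": {
            "asserted_on": "2024-06-01",
            "source": "privacy-review",
            "confidence": 0.9,
        },
    },
]


def quad_qualifiers(subject, predicate, obj):
    """Two-step lookup: find the quad, then read the named graph's metadata."""
    for s, p, o, g in quads:
        if (s, p, o) == (subject, predicate, obj):
            return named_graph_meta[g]
    return None


def edge_qualifiers(src, edge_type, dst):
    """One-step lookup: the qualifiers are already on the edge."""
    for e in edges:
        if (e["from"], e["type"], e["to"]) == (src, edge_type, dst):
            return e["props"]
    return None


print(quad_qualifiers("ex:orders_table", "ex:classifiedAs", "ex:PII"))
print(edge_qualifiers("orders_table", "CLASSIFIED_AS", "PII"))
```

Both lookups return the same qualifiers; the quad form keeps the statement itself standards-friendly, while the edge form needs one less indirection.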

3. Metadata capture layer

Context graphs require continuous metadata collection from across the data stack. Active collection methods include:

- Query parsing and log-based tracking from warehouses and BI tools

- Pipeline instrumentation through orchestration hooks (dbt, Airflow, Dagster)

- Metadata API integrations with catalogs, transformation tools, and AI platforms

- Manual curation through glossaries and tag systems

The goal isn’t to capture everything; it’s to capture the right context at the right time. High-churn pipelines may need instrumentation-level detail, while stable reference data might need only API-level collection.
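As a toy illustration of the query-parsing method listed above, the sketch below pulls table-level lineage edges out of a simple SQL statement with regular expressions. This is an assumption-heavy simplification: real query-log parsers use a full SQL grammar and handle CTEs, subqueries, quoting, and dialect differences, none of which this handles. The function and pattern names are hypothetical.

```python
import re

# Naive patterns: first write target, and every table after FROM or JOIN.
TARGET_RE = re.compile(r"\b(?:insert\s+into|create\s+table)\s+([\w.]+)", re.IGNORECASE)
SOURCE_RE = re.compile(r"\b(?:from|join)\s+([\w.]+)", re.IGNORECASE)


def lineage_edges(sql):
    """Return (source_table, target_table) pairs, or [] if the query writes nothing."""
    target = TARGET_RE.search(sql)
    if target is None:
        return []
    sources = sorted(set(SOURCE_RE.findall(sql)))
    return [(src, target.group(1)) for src in sources]


sql = """
INSERT INTO marts.revenue
SELECT o.id, o.total, c.segment
FROM staging.orders o
JOIN staging.customers c ON c.id = o.customer_id
"""

print(lineage_edges(sql))
# [('staging.customers', 'marts.revenue'), ('staging.orders', 'marts.revenue')]
```

Each returned pair would become a lineage edge in the graph; instrumentation hooks and metadata APIs would contribute edges the parser cannot see.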

4. Semantic enrichment

This layer transforms technical metadata into business context. It connects ontologies and business glossaries as graph nodes, performs entity resolution across systems, and treats policy nodes as first-class citizens.

Atlan’s approach makes governance rules queryable graph elements rather than passive documentation. A data classification policy becomes a node with relationships to affected assets, enforcement workflows, and compliance requirements.

5. Context activation layer

The activation layer serves context back to humans and AI systems through multiple interfaces:

For humans:

- APIs for discovery, search, and impact analysis

- Graph-grounded retrieval for AI/LLM applications

- Policy propagation and exception handling

For AI agents:

- Multi-state traversal where agents navigate from questions to relevant entities across multiple relationship types simultaneously

- Constraint density regions signal parts of the graph with high policy/governance node concentration, requiring additional validation steps

- Action-state guarantees ensure agents can only take actions permitted by the current policy state

- Exception routing flows handle requests that hit policy constraints, directing to appropriate approval workflows

- Trace persistence writes all agent actions and decisions back to the graph as auditable decision events

Platforms like Atlan implement context graphs as control planes for metadata and policies, treating governance as active infrastructure rather than passive documentation. This activation layer is what makes context graphs operational rather than just informational.
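Three of the agent-facing behaviors above (action-state guarantees, exception routing, and trace persistence) can be sketched together in a few lines. The `ContextGraph` class, its `allowed` mapping, and the outcome labels are hypothetical names chosen for this example and are not a description of how any particular platform implements them.

```python
from dataclasses import dataclass, field


@dataclass
class ContextGraph:
    # asset -> set of actions the current policy state permits (illustrative)
    allowed: dict
    # decision events written back to the graph for audit
    trace: list = field(default_factory=list)

    def request(self, agent, action, asset):
        """Execute only permitted actions; route everything else for approval."""
        permitted = action in self.allowed.get(asset, set())
        outcome = "executed" if permitted else "routed_for_approval"
        # Trace persistence: every request is recorded, whatever the outcome.
        self.trace.append(
            {"agent": agent, "action": action, "asset": asset, "outcome": outcome}
        )
        return outcome


graph = ContextGraph(allowed={"marts.revenue": {"read"}})
first = graph.request("agent-1", "read", "marts.revenue")
second = graph.request("agent-1", "export", "marts.revenue")

print(first, second)     # executed routed_for_approval
print(len(graph.trace))  # 2
```

The design choice worth noting is that the denied request still produces a trace entry, which is what later makes it usable as precedent.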

What are the top enterprise use cases for context graphs?

Context graphs solve problems that traditional metadata tools struggle with. The value shows up in measurable outcomes.

1. Intelligent impact analysis and root-cause investigation

Graph traversal enables multi-hop queries that span technical and semantic boundaries. The prompt “Show all dashboards affected by this table change, filtered by business domain and data owner” becomes a single-graph query rather than multiple system lookups.

Organizations using graph-based lineage can perform faster impact analysis when lineage and semantic context are unified. The time savings compound: analysts spend less time hunting for dependencies and more time preventing issues.

2. AI-grounded RAG and hallucination reduction

LLMs hallucinate when they lack context. Research demonstrates that knowledge graph-enhanced RAG systems achieve accuracy rates above 81% in specialized domains, with improvements of 6.8% over traditional RAG approaches.

Context graphs take this further. They retrieve not just data definitions, but full operational context: lineage showing where data came from, policies governing its use, quality metrics, and ownership. This semantic and operational context helps LLMs reason more accurately.

Graph-grounded RAG reduces AI hallucinations by retrieving the governance and semantic context that gives data meaning. The graph structure also provides explainable reasoning paths, making it clear why the AI answered in a specific way.
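A minimal sketch of the retrieval step might look like the following: given an entity, collect its definition plus its lineage, policy, and ownership edges, and render them as text that can be placed in an LLM prompt. The `GRAPH` contents, relation names, and `build_context` function are assumptions made for the example; no LLM or retrieval library is involved.

```python
# Hypothetical slice of a context graph, keyed by entity.
GRAPH = {
    "metric.net_revenue": {
        "definition": "Gross revenue minus refunds and discounts.",
        "derived_from": ["marts.revenue"],
        "governed_by": ["policy.finance_certified"],
        "owned_by": ["team.finance_analytics"],
    },
}


def build_context(entity):
    """Render an entity's definition and typed edges as prompt-ready lines."""
    node = GRAPH[entity]
    lines = [f"{entity}: {node['definition']}"]
    for relation in ("derived_from", "governed_by", "owned_by"):
        for target in node.get(relation, []):
            lines.append(f"{entity} --{relation}--> {target}")
    return "\n".join(lines)


print(build_context("metric.net_revenue"))
```

Because each line names the edge it came from, the same text can double as the explainable reasoning path shown alongside the answer.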

3. Semantic discovery and intelligent search

Natural-language queries leverage graph relationships to understand intent. A search for “revenue data” doesn’t just match keywords, but actually traverses relationships to certified revenue metrics, traces to source tables, connects to business owners, and surfaces related dashboards.

Auto-tagging and glossary linkage through graph inference improves search relevance. Teams measure success through speed to metrics access and search effectiveness scores.

4. Policy automation and governance at scale

Policy nodes propagate through lineage graphs automatically. A data classification policy applied to a source table flows to downstream tables, views, and dashboards through graph relationships. Exceptions and approvals become auditable graph edges.

Research in governance automation shows that knowledge graph-based systems enhance audit process efficiency and accuracy while strengthening risk management. Graph-native policy enforcement reduces manual governance overhead by automating compliance monitoring and enabling real-time policy propagation across complex data estates.
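Policy propagation along lineage can be sketched as a downstream walk that stops wherever an approved exception has been recorded. The lineage map, the `EXCEPTIONS` set, and the `propagate` function below are illustrative assumptions, and the rule that an exception also shields assets reachable only through the excepted asset is a design choice made for this sketch.

```python
from collections import deque

# Hypothetical lineage: upstream asset -> downstream assets.
LINEAGE = {
    "raw.customers": ["staging.customers"],
    "staging.customers": ["marts.customer_360", "marts.anon_stats"],
    "marts.customer_360": ["dash.customer_health"],
}

# Approved exceptions recorded as (policy, asset) pairs.
EXCEPTIONS = {("pii", "marts.anon_stats")}


def propagate(policy, source):
    """Return every asset the policy covers, starting at `source` and flowing downstream."""
    covered = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in LINEAGE.get(node, []):
            if child in covered or (policy, child) in EXCEPTIONS:
                continue  # already covered, or exempted by an approved exception
            covered.add(child)
            queue.append(child)
    return covered


print(sorted(propagate("pii", "raw.customers")))
# ['dash.customer_health', 'marts.customer_360', 'raw.customers', 'staging.customers']
```

Running the same function for a policy with no exceptions covers `marts.anon_stats` as well, which shows that the exception is specific to one policy rather than to the asset.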

5. Measurement and benchmarks

Assessing the performance of knowledge graphs is key to ongoing refinement and accuracy. Organizations tracking context graph implementations measure operational KPIs, including:

- Impact analysis time: Before/after implementation improvements

- Search effectiveness: Hits@1 improvement, time-to-find reduction

- AI answer accuracy: Hallucination rate, citation accuracy in RAG applications

- Governance efficiency: Policy compliance rates, exception resolution time

A best practice is to run parallel tests on high-value queries with and without context enrichment to quantify improvement. This benchmark demonstrates ROI before full-scale rollout.
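The parallel test described above can be as simple as computing Hits@1 on the same query set twice. In the sketch below, the queries, expected assets, and the two result sets are invented sample data, so the resulting numbers illustrate the calculation and are not measured results.

```python
def hits_at_1(results, expected):
    """Fraction of queries whose top-ranked result is the expected asset."""
    hits = 0
    for query, want in expected.items():
        ranked = results.get(query) or [None]  # treat missing/empty results as a miss
        if ranked[0] == want:
            hits += 1
    return hits / len(expected)


# Invented sample data: the asset each query should surface first.
expected = {
    "revenue data": "metric.net_revenue",
    "churn risk": "dash.churn_risk",
    "orders": "marts.orders",
    "customer profile": "marts.customer_360",
}

# Ranked results without context enrichment (keyword match only).
baseline = {
    "revenue data": ["raw.revenue_tmp", "metric.net_revenue"],
    "churn risk": ["dash.churn_risk"],
    "orders": ["marts.orders", "raw.orders"],
    "customer profile": [],
}

# Ranked results with context enrichment.
enriched = {
    "revenue data": ["metric.net_revenue", "raw.revenue_tmp"],
    "churn risk": ["dash.churn_risk"],
    "orders": ["marts.orders", "raw.orders"],
    "customer profile": ["staging.customers", "marts.customer_360"],
}

print(hits_at_1(baseline, expected), hits_at_1(enriched, expected))  # 0.5 0.75
```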

What are the implementation phases for deploying a context graph?

Building a context graph isn’t an all-or-nothing decision. Organizations should follow a phased approach that delivers incremental value, quick feedback, and agile learning.

| Phase | Focus | Time to Value | Key Actions | Success Metrics |
|---|---|---|---|---|
| Phase 1: Modern metadata foundation | Establish baseline asset inventory | 2-4 weeks | Deploy active metadata catalog with API-based collection; connect pre-built connectors across the data stack; establish centralized asset inventory | Quick win: Centralized search and discovery. Measure: Search adoption rates, time-to-find improvements |
| Phase 2: Lineage capture and enrichment | Build graph-native lineage | 2-3 months | Blend capture methods (APIs for coverage, parsing/instrumentation for critical pipelines); start with high-churn domains; build graph-native lineage models supporting multi-hop traversal | Quick win: Analysts spend less time on impact analysis. Measure: Impact analysis time, ticket deflection |
| Phase 3: Semantic layer and governance integration | Connect business context to technical assets | 4-6 months | Integrate business glossaries and domain ontologies as graph nodes; implement policy nodes with propagation rules; take a domain-driven, incremental approach | Tip: Start where pain is highest, and measure impact analysis time and governance ticket volume in critical domains first. Measure: Search accuracy, policy compliance rates |
| Phase 4: AI activation and agent integration | Enable AI-powered use cases | 6+ months | Enable graph-grounded RAG for internal AI applications; connect to BI tools and orchestrators for metadata serving; support autonomous agent workflows with context retrieval | Critical capability: Agents navigate the graph to retrieve decision-making context. Measure: AI answer accuracy, policy compliance rates |


How to get started with context graph: Key considerations

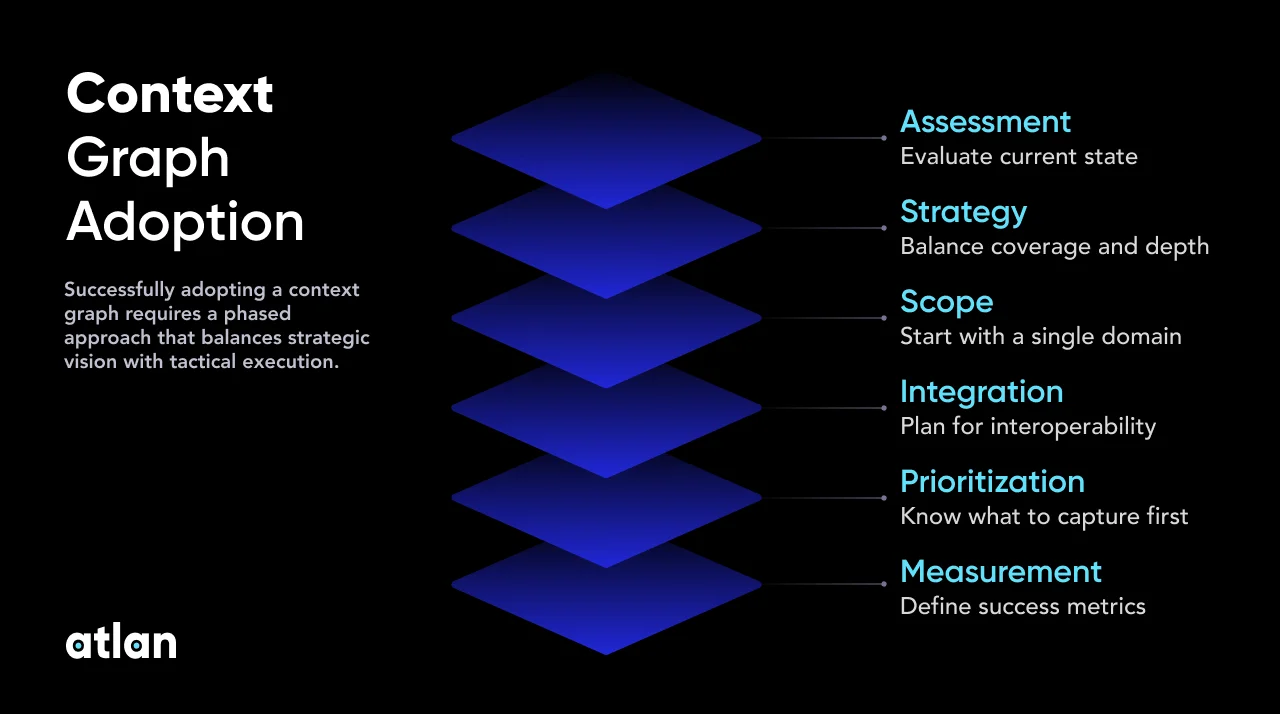

Permalink to “How to get started with context graph: Key considerations”Success with context graphs requires balancing strategic vision with tactical execution. These considerations will help avoid common pitfalls.

Context graph adoption diagram. Source: Atlan.

1. Assessment: Evaluate current state

Audit existing knowledge graphs, lineage tools, and metadata systems. Identify integration points and gaps in semantic coverage. Determine whether to build on existing infrastructure or start fresh.

The decision often depends on your current metadata maturity. Organizations with established data catalogs can extend them. Those starting fresh have the advantage of avoiding legacy technical debt.

2. Capture strategy: Balance coverage and depth

APIs provide broad coverage across your data stack, from warehouses and BI tools to orchestrators and notebooks. Query parsing and instrumentation add depth for critical pipelines where column-level lineage matters.

Don’t try to capture everything at once. Prioritize high-value paths: the critical dashboards, the compliance reports, the datasets powering AI applications. Expand coverage as you prove value.

3. Ontology approach: Start domain-driven

Begin with one critical business domain, such as customer, revenue, or product. Link glossary terms to actual data assets in the graph. Expand incrementally as teams see value.

Domain-driven implementation delivers faster time-to-value than trying to model the entire enterprise upfront. It also builds organizational buy-in through concrete results.

4. Integration architecture: Plan for interoperability

Context graphs serve as semantic layers across data fabric and lakehouse architectures. Ensure APIs support your BI tools, orchestrators, and AI frameworks. Consider governance and access control from day one.

Modern platforms like Atlan provide 200+ pre-built connectors and graph models that reduce time-to-value from months to weeks. The interoperability layer determines whether your context graph becomes infrastructure or just another silo.

5. Context types: Know what to capture first

Context falls into two categories that require different capture strategies:

Entity contexts (metadata about nodes themselves):

- Temporal validity: When is this data valid?

- Provenance: Where did this originate?

- Location/geography: What region does this apply to?

- Confidence: How reliable is this?

- Ownership: Who is responsible?

Relation contexts (metadata about edges/relationships):

- Event-specific: What triggered this relationship?

- Quantitative: What is the strength/weight?

- Supplementary: What additional context explains this connection?

Knowing which context type to capture first helps prioritize next steps. Temporal validity or provenance are generally good starting points.
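To show what starting with temporal validity and provenance could look like, the sketch below attaches both to versions of a glossary definition and answers a point-in-time question. The `Entity` and `EntityContext` classes, the `as_of` helper, and the sample definitions are hypothetical; validity intervals are treated as half-open (start inclusive, end exclusive) as an assumption.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class EntityContext:
    valid_from: date
    valid_to: Optional[date]   # None means still valid
    provenance: str            # where this version originated


@dataclass(frozen=True)
class Entity:
    name: str
    definition: str
    context: EntityContext


def as_of(entities, name, when):
    """Return the version of `name` that was valid on `when`, or None."""
    for e in entities:
        c = e.context
        if e.name == name and c.valid_from <= when and (c.valid_to is None or when < c.valid_to):
            return e
    return None


definitions = [
    Entity("active_customer", "Purchased in the last 90 days",
           EntityContext(date(2023, 1, 1), date(2024, 7, 1), "finance-glossary-v1")),
    Entity("active_customer", "Purchased or logged in within the last 30 days",
           EntityContext(date(2024, 7, 1), None, "finance-glossary-v2")),
]

version = as_of(definitions, "active_customer", date(2024, 3, 1))
print(version.definition, "|", version.context.provenance)
# Purchased in the last 90 days | finance-glossary-v1
```

Relation contexts such as a trigger event or a weight could be modeled the same way, as a small record attached to each edge.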

6. Success metrics: Define measurement framework

- Technical: Query performance, graph completeness, lineage coverage

- Operational: Impact analysis time, search effectiveness, ticket reduction

- Strategic: AI answer accuracy, governance compliance, self-service adoption

Define these metrics before implementation. They become your north star for prioritization decisions.

Key takeaways on context graphs

Context graphs represent the convergence of knowledge graphs, active lineage, and governance automation into unified infrastructure for modern data operations. As organizations move from AI experimentation to production-scale agents, the ability to provide rich semantic and operational context becomes as fundamental as compute and storage.

The platforms and patterns emerging today will define which organizations can scale AI with trust, accuracy, and governance. Starting with high-value domains and measuring concrete outcomes – impact analysis time, AI accuracy, policy compliance – creates the foundation for expanding context graph adoption across the enterprise.


FAQs about context graphs

1. Can I build a context graph on top of my existing knowledge graph?

Yes. Many organizations start with an existing knowledge graph for business semantics and extend it with operational metadata like lineage, ownership, and policies. The key is adding graph-native lineage capture and treating governance rules as first-class nodes. This approach preserves your semantic investment while adding the operational context needed for AI and impact analysis.

2. What’s the difference between a data catalog and a context graph?

Traditional data catalogs store metadata in tables optimized for search. Context graphs use graph databases optimized for relationship traversal. This architectural difference matters for multi-hop queries like impact analysis. Modern active metadata platforms combine both: catalog interfaces for discovery with graph foundations for lineage and semantic reasoning.

3. How does a context graph reduce AI hallucinations?

Graph-grounded RAG retrieves not just data definitions but the full context: lineage showing where data came from, policies governing its use, quality metrics, and ownership. This semantic and operational context helps LLMs reason more accurately. The graph structure also provides explainable reasoning paths, making it clear why the AI answered in a specific way.

4. What technical skills are needed to implement a context graph?

Implementation requires data engineering skills for connector setup and lineage capture, plus knowledge graph experience for ontology design. However, modern platforms provide pre-built connectors, automated lineage capture, and low-code interfaces for glossary management. Most organizations succeed with their existing data engineering team plus strategic guidance on domain modeling and phased rollout.

5. How long does it take to see value from a context graph?

Initial value - centralized discovery and basic lineage - appears within 2-4 weeks. Deeper benefits like AI-grounded RAG and policy automation emerge at 3-6 months as semantic enrichment matures. Start with one high-priority domain, measure concrete outcomes like impact analysis time or search accuracy, then expand based on results.

6. Do I need to move my data to use a context graph?

No. Context graphs store metadata and relationships, not the data itself. They connect to your existing data sources through APIs and connectors, building a semantic layer that unifies metadata across systems. Your data stays in warehouses, lakes, and BI tools. The graph provides the context layer that helps people and AI understand and govern that distributed data.

7. Is a context graph an RDF quad store or a property graph?

Either can work. RDF quad stores excel when you need formal ontology reasoning and standards compliance (OWL, RDFS). Property graphs perform better for operational queries and are more intuitive for developers. Most enterprise implementations use property graphs with semantic capabilities layered through glossary integrations and policy frameworks.

8. How does a context graph differ from GraphRAG?

GraphRAG is a retrieval technique that uses graph structures to improve LLM responses. A context graph is the underlying infrastructure that makes GraphRAG effective. Think of it this way: GraphRAG is the “how” of retrieval; the context graph is the “what” being retrieved. Context graphs provide the semantic, operational, and governance context that GraphRAG traverses.

9. Do I need temporal qualifiers and provenance from day one?

Not necessarily. Start with basic entity relationships and lineage. Add temporal qualifiers when you need “point-in-time” queries for compliance or audit. Add provenance when you need to trace data back to authoritative sources. The context graph architecture supports incremental enrichment, so you can add context types as use cases demand.
