dbt Semantic Layer for Metrics Definition

Share this article

What is dbt Semantic Layer?

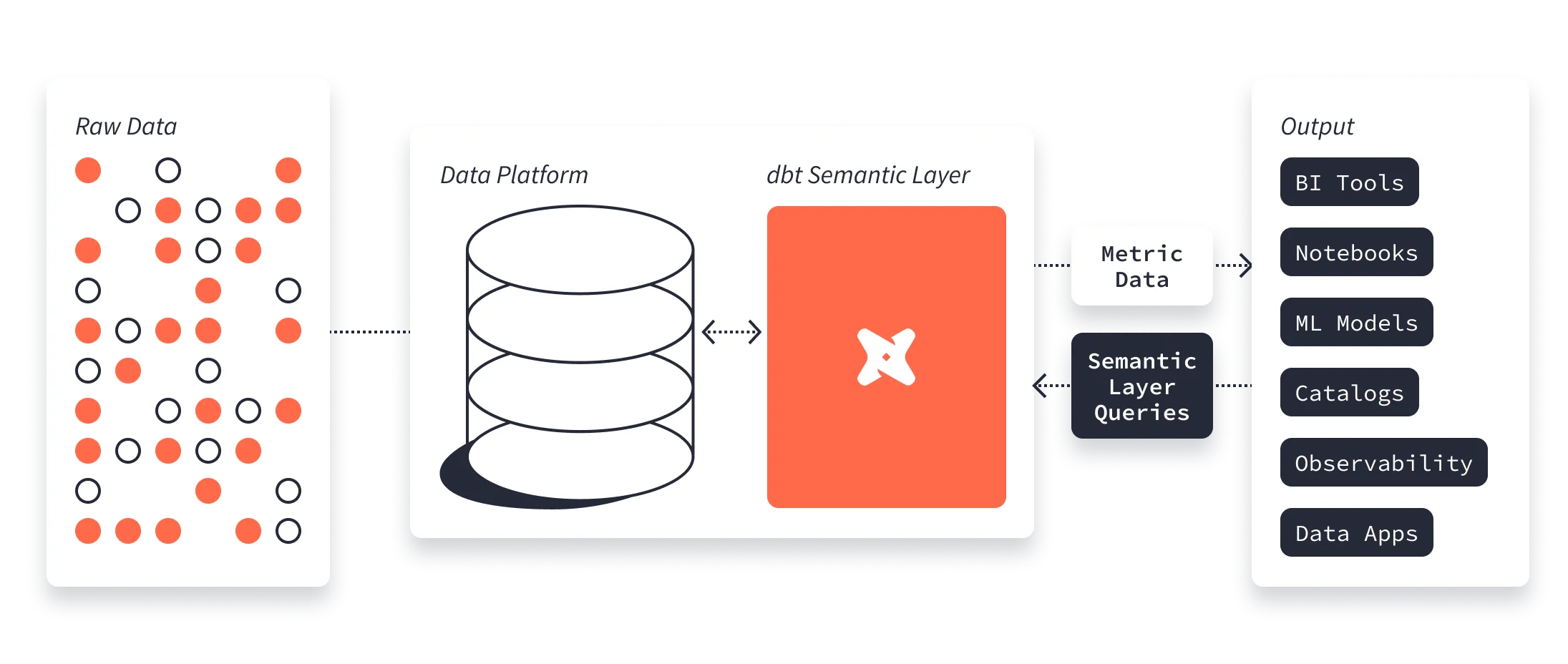

Permalink to “What is dbt Semantic Layer?”dbt Semantic Layer is a translation layer between business metrics and underlying data structures. It provides an abstraction from data sources and allows technical and non-technical data consumers to define, manage, and analyze business metrics using understandable business concepts.

As humans, we rarely map the world to 3NF database schemas. Even an organization’s most knowledgeable data practitioner couldn’t deeply understand all the data structures and how they are mapped to business concepts.

The dbt Semantic Layer centralizes metrics definition, eliminates duplicate code, and enables consistent self-service access to metrics in downstream tools.

dbt Semantic Layer - Source: dbt.

The dbt Semantic Layer also provides the context about how a metric is calculated and who defined it. That allows consumers to fully understand the usage, limitations, and considerations to incorporate that metric in decision-making.

Modern data problems require modern solutions - Try Atlan, the data catalog of choice for forward-looking data teams! 👉 Book your demo today

This article will explore the problems the dbt Semantic Layer aims to solve and its benefits. We will also define the difference with a metrics layer, the underlying technical concepts, the relationship with MetricsFlow, and navigate through examples that will clarify the presented information.

Table of contents

Permalink to “Table of contents”- What is dbt Semantic Layer?

- Data trust issues without dbt Semantic Layer

- The advantages of the semantic layer

- Semantic layer vs metrics layer

- Core concepts of dbt Semantic Layer

- Available integrations

- Wrapping up

- Related reads

Data trust issues without dbt Semantic Layer

Permalink to “Data trust issues without dbt Semantic Layer”The primary problem data practitioners want to solve is the lack of trust in data. No one wants an executive to determine that a metric has differences between systems. Metrics reliability is crucial for decision-making, serving customers, and the data team’s peace of mind.

The problems to be solved by the dbt Semantic Layer include:

- Mismatches in metrics definition and lack of context

- Repeated code in different tools

- Metrics awareness gap in dbt

Mismatches in metrics definition and lack of context

Permalink to “Mismatches in metrics definition and lack of context”Business users sometimes find mismatches between definitions of the same concept in different places or metrics changing values for the same period in a matter of days, leading to mistrust in the results or, even worse, decisions based on wrong data.

This is not a problem for business users alone, but also for analysts that generate metrics copying queries from the last place they remember something similar was used, without having all the context or the proper explanation of why some filters were placed to fulfill particular business objectives.

On both sides, we have unclear definitions and lack of context about business metrics, similar but different definitions for the same concept, ad-hoc analysis that prevent knowledge sharing across stakeholders, leading to reduced productivity of the technical team, and lack of trust and mistakes in the decision-making process.

Repeated code in different tools

Permalink to “Repeated code in different tools”The problem with repeated definitions worsens when the company uses multiple tools where business metrics are displayed because you either replicate the same logic in various places (and maintain them everywhere) or potentially have discrepancies that lead to the problems mentioned above.

The data team needs a version-controlled central repository for metric definitions to remain consistent and track changes. That is simple, but propagating the definition across systems is challenging because internal object representation could vary, and we will need to code a “translator” between applications.

Metrics awareness gap in dbt

Permalink to “Metrics awareness gap in dbt”In October 2021, Drew Banin, co-founder of dbt Labs, opened a GitHub issue about “dbt should know about metrics”, and in June 2022, dbt added the metrics package that allowed users to define time-series aggregation over a model to define metrics. The package had scalability and customization issues because it was implemented using Jinja templates (among other issues). The new MetricFlow aims to solve the scalability issues and support future improvements.

Having shared definitions that can be reused by different users in different places can break this vicious circle and create the expected consistency and connection among tools.

The advantages of the semantic layer

Permalink to “The advantages of the semantic layer”After describing the problems the dbt Semantic Layer aims to solve, we conclude that consistency in the metrics definition is the main benefit. Getting the same results across different platforms and applications is crucial for aligning business stakeholders.

Some general advantages include:

- Reusability. Data practitioners only need to maintain metrics in one place, saving time and reducing the risk of errors and impact on downstream applications. It embraces the DRY (Don’t Repeat Yourself) principle, allowing you to consume metrics via robust APIs.

- Context. When metrics are defined in a central repository, you can relate them with models and dimensions and add documentation, which closes the gap of having more information about the metric and its relationships.

- Discoverability. Having a central place for metrics also helps to find all the available definitions.

- Reduced cost. Without a semantic layer, you might need to store and compute similar but different definitions of the same metric. Consumers execute overlapping queries from BI tools, APIs, or other consumption interfaces, increasing the cost of the platform.

- Reduced Time-to-Insight. When access to data is simplified through a semantic layer, analysts and stakeholders can significantly reduce the time from data ingestion to actionable insights.

- Governance and auditing. Using a semantic layer allows you to audit changes and define ownership. You can also control who can create and access metrics.

The previous list was generic to semantic layers, and the dbt Semantic Layer adds more advantages that are specific to the dbt ecosystem:

- Define metrics on top of dbt models. This is a foundational integration for dbt users because you start the metrics definition based on models you already defined, creating a dependency between them. You can define multiple semantic models from a single dbt model.

- Dynamic join support. Relationships are crucial to making dimensions available for metrics. When defining metrics with dbt semantic layer, you only need to specify the relationship between the models, and dbt will perform the join at query time only if you request fields from the related models. This means you can get rid of pre-aggregated tables.

- Complex metrics definition. Rolling metrics are not straightforward to calculate, and dbt simplifies the process with four types of metrics:

simple,ratio,cumulative, andderived. - Optimized SQL generation. The core functionality of MetricFlow is to generate optimized SQL for each data platform, now supporting Snowflake, Google Big Query, Databricks, and Amazon Redshift.

Semantic layer vs metrics layer

Permalink to “Semantic layer vs metrics layer”If you read our previous article about metrics layer you can relate these two concepts. Both aim to solve similar problems, but the implementation is different. As mentioned before, the dbt_metrics package has been deprecated and replaced with MetricFlow, and dbt is encouraging users to migrate using the dbt Semantic Layer migration guide.

Core concepts of dbt Semantic Layer

Permalink to “Core concepts of dbt Semantic Layer”We will explore the architectural components of the dbt Semantic Layer (how it “executes”), the components of the semantic models (how to “configure” it), which are the foundation for data definition in MetricFlow, and the metrics.

Architecture

Permalink to “Architecture”The dbt Semantic Layer has four main components:

- MetricFlow. Where you define and manage the logic of your metrics and semantic models using YAML specifications, and an abstraction layer that generates SQL to query them.

- MetricFlow Server. Handles metric requests and generates optimized SQL for the specific data platform.

- Semantic Layer Gateway. Gateway to interact with MetricFlow Server, passing the metric queries and executing the generated SQL against the data platform.

- Semantic Layer APIs. Interfaces to submit metric queries using GraphQL or JDBC APIs.

Some components of the dbt Semantic Layer are open-source, such as dbt-core and MetricFlow, while the other three components of the architecture are part of the dbt Cloud, and you need Team or Enterprise plans to access them.

Semantic models

Permalink to “Semantic models”Semantic models are an abstraction to define metrics. Multiple semantic models can be defined from a single dbt model. They are defined using YAML files with metadata that includes the data tables and keys to navigate relationships.

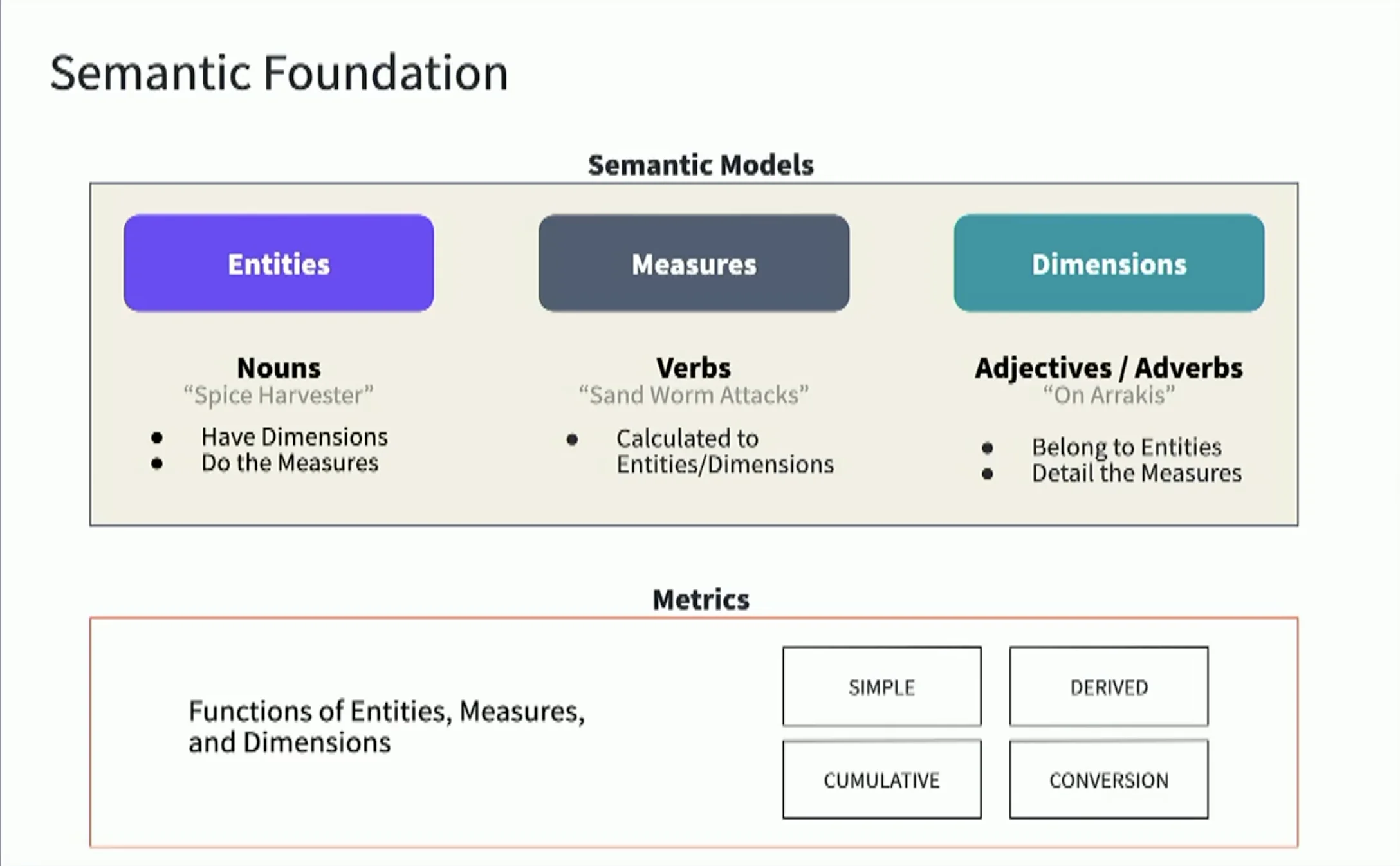

dbt Semantic models foundation - Source: dbt.

There are three main pieces of metadata:

- Entities. Real-world concepts in a business (such as customers or transactions). You need to define its name (key column) and type, which will determine the MetricFlow join logic.

- Measures. Aggregation functions are performed on columns of the model. They can be used as final metrics or building blocks for more complex ones.

- Dimensions. Ways to group or filter information based on categories or time (currently, there are limitations in supporting SCD). They can be used to add more details to your data.

The following example displays a complete configuration and detailed descriptions of each field available in the YAML configuration file:

semantic_models:

- name: transaction # A semantic model with the name Transactions

model: ref('fact_transactions') # References the dbt model named `fact_transactions`

description: "Transaction fact table at the transaction level. This table contains one row per transaction and includes the transaction timestamp."

defaults:

agg_time_dimension: transaction_date

entities: # Entities included in the table are defined here. MetricFlow will use these columns as join keys.

- name: transaction

type: primary

expr: transaction_id

- name: customer

type: foreign

expr: customer_id

dimensions: # dimensions are qualitative values such as names, dates, or geographical data. They provide context to metrics and allow "metric by group" data slicing.

- name: transaction_date

type: time

type_params:

time_granularity: day

- name: transaction_location

type: categorical

expr: order_country

measures: # Measures are columns we perform an aggregation over. Measures are inputs to metrics.

- name: transaction_total

description: "The total value of the transaction."

agg: sum

- name: sales

description: "The total sale of the transaction."

agg: sum

expr: transaction_total

- name: customers # Another semantic model called customers.

model: ref('dim_customers')

description: "A customer dimension table."

entities:

- name: customer

type: primary

expr: customer_id

dimensions:

- name: first_name

type: categorical

Metrics

Permalink to “Metrics”Metrics can be defined in the same YAML files as your semantic models or split into separate YAML files in other subdirectories.

Among the metadata to define a metric, you need to specify the metric type, which can be simple, ratio, cumulative, or derived:

- Simple. Point directly to a measure.

- Ratio. The ratio between two metrics, defining the

numeratoranddenominator. You can apply a dimensional filter to both metrics using a constraint string (optional). - Cumulative. Aggregate a measure over a given accumulation window. It accumulates values over all time if no window is specified. There are certain limitations to consider.

- Derived. Created by defining an expression to perform calculations using other metrics.

Example of simple metric:

# Simple metric

metrics:

- name: customers

description: Count of customers

type: simple # Pointers to a measure you created in a semantic model

label: Count of customers

type_params:

measure: customers # The measure you're creating a proxy of.

Example of cumulative metric:

# Cumulative metrics aggregate a measure over a given window.

# The window is considered infinite if no window parameter is passed

# (accumulate the measure over all time)

metrics:

- name: window_cumulative_sales_rolling_1_month

owners:

- [email protected]

type: cumulative

type_params:

measures:

- sales

# Omitting window will accumulate the measure over all time

window: 1 month

Available integrations

Permalink to “Available integrations”Several data applications integrate with the dbt Semantic Layer. They include spreadsheets, notebooks, data catalogs, and business intelligence tools. Currently supported tools: Tableau (beta), Google Sheets (beta), Hex, Lightdash, Mode, and others. Check the complete list here.

You can create custom integrations using different languages and tools, connecting with JDBC, ADBC, and GraphQL APIs. For more information, check the GitHub examples.

Wrapping up

Permalink to “Wrapping up”Having multiple places to define your metrics is causing a lack of trust and consistency. The dbt Semantic Layer aims to solve those problems by providing a central location to define business metrics and serve them to different applications.

Connect with Bruno Gonzalez here.

dbt Semantic Layer: Related reads

Permalink to “dbt Semantic Layer: Related reads”- dbt Data Catalog: Discussing Native Features Plus Potential to Level Up Collaboration and Governance with Atlan

- dbt Data Governance: How to Enable Active Data Governance for Your dbt Assets

- dbt Data Lineage: How It Works and How to Leverage It

- dbt Metadata Management : How to Bring Active Metadata to dbt

- dbt Data Contracts: Quick Primer With Notes on How to Enforce

- Snowflake + dbt: Supercharge your transformation workloads

- OpenMetadata and dbt: For an Integrated View of Data Assets

- Semantic Layers: The Complete Guide for 2026

Share this article