Snowflake + dbt: Supercharge your transformation workloads

Share this article

Snowflake is a data cloud platform that is built to bring all your data together by integrating various data sources and removing data siloes. It is a fully managed SaaS platform you can deploy on any of the three major cloud platforms — AWS, Azure, and Google Cloud Platform. Snowflake’s distinctive feature is that it allows you to scale storage and compute separately, getting more speed and performance gains at lower costs.

dbt solves the T in ETL and ELT. It is an SQL-first transformation workflow that lets you generate and deploy code for any customized data model. It uses dbt macros to fill Jinja templates with metadata. As a result, it generates SQL queries that you can execute in Snowflake to handle all your transformation workloads.

Scaling AI on Snowflake? Here’s the playbook - Watch Now

As with any other ETL tool, the transformation code must be version-controlled and managed by a CI/CD pipeline. It also needs to support a fair bit of testing to ensure code and data quality in the transformation layer. There are several ways dbt and Snowflake align to make it easier for you to manage complex workflows. This article will take you through some of them under the following themes:

- Using dbt’s Snowflake adapter

- Transforming data in Snowflake

- Version controlling your dbt code with Git

- Testing transformations with dbt Tests

- Generating data documentation automatically in dbt

- Activating Snowflake + dbt’s metadata with Atlan

Let’s get started!

Table of contents #

- Using dbt’s Snowflake adapter

- Version controlling your dbt code in Git

- Transforming data with dbt in Snowflake

- Testing your transformation pipeline with dbt Tests

- Generating data documentation automatically in dbt

- Activating Snowflake + dbt’s metadata with Atlan

- Snowflake + dbt: Related reads

Using dbt’s Snowflake adapter #

For developing and deploying your dbt code, you’ll need to connect it to Snowflake. You can use dbt’s Snowflake adapter to do that. dbt’s adapters are abstractions of different types of database connectors and APIs. Using these connectors, you don’t have to worry about the implementation details and portability issues when migrating from one data store to another or using more than one target data store.

Whether you’re on dbt Cloud or using dbt Core, there are many ways you can authenticate your dbt connection with Snowflake — with username-password (with or without MFA), key-pair, Okta, or Snowflake OAuth. When using dbt Cloud, you can use the GUI to configure the connection. On the other hand, when you’re using dbt Core, you can install the dbt-snowflake PyPi package and use the profiles YAML file to store the authentication configuration. Once the connection is up and running, you can go to the next step.

Version controlling your dbt code in Git #

One thing that’s true about data is that it’s constantly changing. A business always sees new data sources, changing schemas, more unique requirements, etc. This means the code transforming that data into consumable models must also change. This is where ETL code emulates a software application code, which has the same lifecycle.

Git provides a great way to version control your dbt transformation code. Combined with a continuous integration and deployment tool, it also allows you to run structural and data-related tests as part of the pipeline, preventing you from pushing any breaking changes while maintaining and, in many cases, improving data quality across your system.

Git workflows, branching techniques, and deployment strategies vary from one business to another. This Git Workflow chapter in dbt’s Analytics Engineering guide provides a peek into how you should think about using Git with dbt. Now that we’ve discussed how the code is stored and versioned, let’s discuss the code contains, i.e., how data is transformed with dbt.

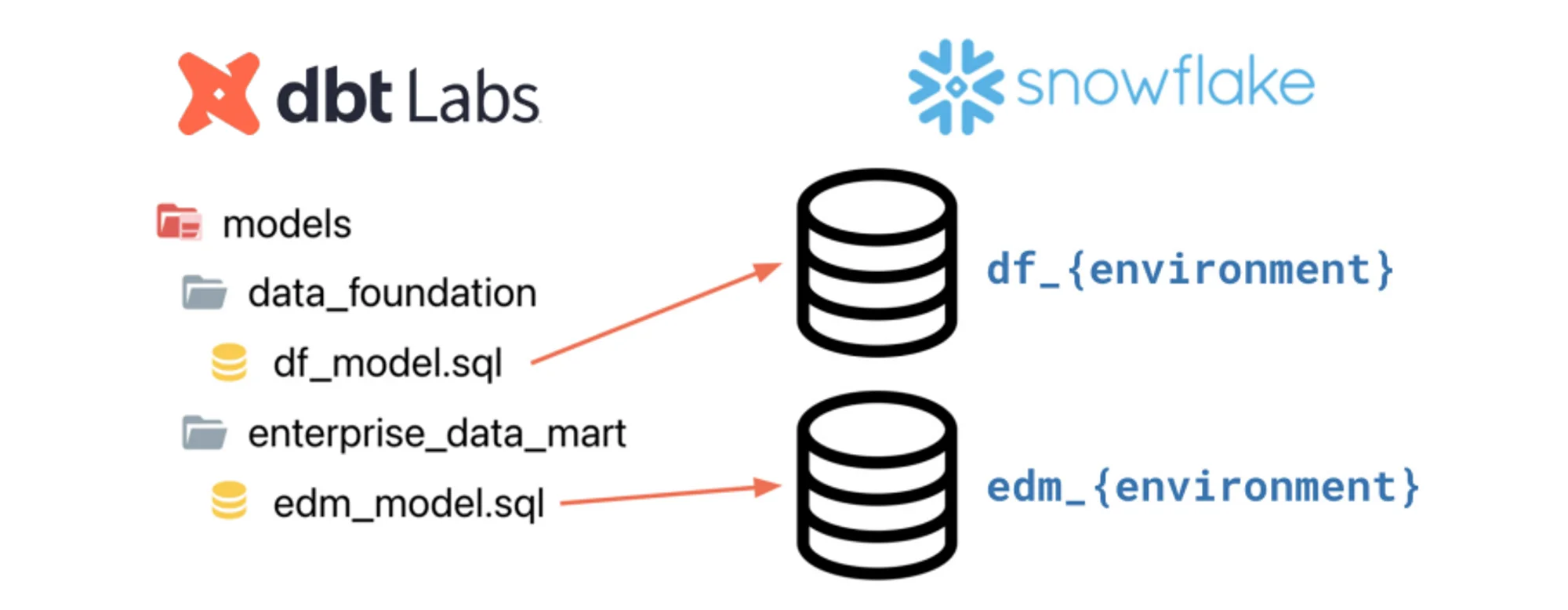

Transforming data with dbt in Snowflake #

Before dbt, much of the transformation work was done using SQL queries in the form of complex queries, views, materialized views, and stored procedures. Although these database objects are fit for purpose for some ad-hoc workloads and database administration, using them for complex ETL workloads results in unnecessarily complicated workflows. This is especially true when your database only allows SQL as the programming language.

Snowflake has empowered developers by supporting Java, Javascript, Scala, and Python on top of SQL. This makes it easy to write stored procedures that can generate SQL queries for transformation workloads on the fly. However, stored procedures lack native support for documentation, logging, and scalability.

Transforming data with dbt in Snowflake - Source: Snowflake Wiki.

This is where dbt comes in. It lets you create your transformation workflows using DRY (do not repeat yourself) and automation principles, such as code reusability, CI/CD, code portability, and automated documentation. dbt manages to generate the transformation queries you’ll be running in Snowflake.

Testing your transformation pipeline with dbt Tests #

We’ve already touched upon the aspect of testing that dbt enables with the CI/CD pipeline. While those tests might be considered more from the point of view of data modeling, you can write tests in dbt to validate actual data. dbt allows you to write tests in plain old SQL or using dbt macros. It also allows you to use external testing libraries which is similar to the open-source testing tool Great Expectations.

The results of a dbt test execution are stored in the same file that stores the result of dbt seed, dbt build, and, i.e., the run_results.json file. Depending on how you have configured your dbt DAG, you can either fail fast when you get an error building a model or continue with the rest of your DAG. For critical models, you can also choose to treat warnings as errors.

If you’re using a data cataloging and governance tool, it might be able to read and show these results in the browser-based application.

Generating data documentation automatically in dbt #

Metadata forms the backbone of dbt, as the SQL queries responsible for transforming data are created using a combination of dbt macro-based Jinja templates. All of this metadata is stored in several dbt artifacts, such as catalog.json. This data in the catalog file makes it easy for dbt to generate templated documentation. With the simple dbt docs generate and dbt docs serve commands, you can host your model documentation on a web page locally.

This, combined with metadata from Snowflake, can result in an enriched collection of metadata, which can help the business get a better grasp of the data and how it is modeled, structured, categorized, linked together, and used.

Activating Snowflake + dbt’s metadata with Atlan #

As dbt takes care of all your transformation workloads, it maintains all the metadata around objects, data structures, tests, etc. If you take a DIY approach, extracting further value from this metadata requires some effort. A better alternative is to use a metadata activation platform like Atlan with built-in connectors for dbt. It utilizes all the structural, data governance, data quality, and lineage information in a way that allows your business to explore how the data is flowing through the system.

Altan also integrates with dbt’s Semantic layer, combining business metrics across your data ecosystem. This is a game-changer, as it allows business users to see metrics as part of the end-to-end lineage. This, combined with the tags, object dependencies, and lineage metadata from Snowflake, makes it easy to have a complete system view. Doing this previously was a pretty disjointed exercise, which not only required a lot of effort but also resulted in an incomplete picture of the data flow.

Snowflake + dbt: Related reads #

- Snowflake Summit 2025: How to Make the Most of This Year’s Event

- dbt Data Catalog: Discussing Native Features Plus Potential to Level Up Collaboration and Governance with Atlan

- dbt Data Governance: How to Enable Active Data Governance for Your dbt Assets

- dbt Data Lineage: How It Works and How to Leverage It

- dbt Metadata Management : How to Bring Active Metadata to dbt

- Snowflake Data Catalog: Importance, Benefits, Native Capabilities & Evaluation Guide

- Snowflake Data Governance: Features, Frameworks & Best practices

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Snowflake Data Lineage: A Step-by-Step How to Guide

- Snowflake Data Dictionary: Documentation for Your Database

- Snowflake Metadata Management: Importance, Challenges, and Identifying The Right Platform

Share this article