How to Combine Knowledge Graphs With LLMs

Three approaches for integrating knowledge graphs with LLMs

Teams combine knowledge graphs and large language models through three distinct architectural patterns. Each serves different use cases based on whether the primary goal is enhancing AI responses, automating graph creation, or achieving bidirectional benefits.

1. KG-enhanced large language models

This approach incorporates knowledge graph data during LLM training or inference phases. The graph acts as an external memory that grounds model responses in factual relationships. When users ask questions, the system first retrieves relevant graph data, then passes that context to the LLM for natural language generation.

Research from Gartner shows knowledge graphs improve LLM accuracy by 54.2% on average when used for retrieval augmentation. The structured format prevents the model from fabricating connections or entities that don’t exist. Financial services firms use this pattern to ensure AI assistants only reference verified regulatory data, not hallucinated policies.
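The sketch below shows a minimal retrieve-then-generate flow. It makes two assumptions: a toy in-memory list of (subject, predicate, object) triples stands in for a graph database, and naive string matching stands in for real entity linking. The graph contents and the function names `retrieve_facts` and `build_grounded_prompt` are hypothetical. The resulting prompt would be sent to whichever LLM the system uses.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical toy graph; a real deployment would query a graph database.
GRAPH: List[Triple] = [
    ("Policy-17", "implements", "Basel III"),
    ("Policy-17", "owned_by", "Risk Team"),
    ("Basel III", "issued_by", "BCBS"),
]

def retrieve_facts(question: str, graph: List[Triple]) -> List[Triple]:
    """Return triples whose subject or object is mentioned in the question."""
    q = question.lower()
    return [t for t in graph if t[0].lower() in q or t[2].lower() in q]

def build_grounded_prompt(question: str, facts: List[Triple]) -> str:
    """Place retrieved facts ahead of the question and ask the model to stay within them."""
    lines = [f"- {s} {p.replace('_', ' ')} {o}" for s, p, o in facts]
    return (
        "Answer using only the facts below. If they are insufficient, say so.\n"
        "Facts:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
    )

question = "Which framework does Policy-17 implement?"
prompt = build_grounded_prompt(question, retrieve_facts(question, GRAPH))
print(prompt)
```

Asking the model to say when the facts are insufficient is one common way to discourage it from filling gaps with unsupported content.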

2. LLM-augmented knowledge graphs

Language models excel at extracting entities and relationships from unstructured text. This approach uses LLMs to build and maintain knowledge graphs automatically. The models process documents, identify key concepts, determine how concepts relate, and populate graph structures without manual annotation.

Modern metadata platforms employ this pattern to continuously extract technical lineage and business context from code repositories, documentation, and data pipelines. Platforms like Atlan use active metadata approaches where LLMs enrich knowledge graphs with usage patterns, quality signals, and ownership information captured from system activity.
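The sketch below shows what the extraction step might look like. It assumes the LLM was prompted to return JSON containing a `triples` list. The sample output is hard-coded, so no model is called. The lowercase-and-strip normalization is a deliberately simple stand-in for real entity resolution.

```python
import json
from typing import Dict, Set, Tuple

# Hypothetical LLM response; the extraction prompt is assumed to request JSON triples.
LLM_OUTPUT = """
{"triples": [
  {"subject": "orders_daily", "relation": "derived_from", "object": "raw_orders"},
  {"subject": "Orders_Daily ", "relation": "derived_from", "object": "raw_orders"},
  {"subject": "orders_daily", "relation": "owned_by"}
]}
"""

def normalize(name: str) -> str:
    """Very simple canonicalization so trivially different spellings merge."""
    return name.strip().lower()

def extract_triples(raw: str) -> Set[Tuple[str, str, str]]:
    """Parse model output, drop malformed records, and deduplicate triples."""
    data = json.loads(raw)
    triples: Set[Tuple[str, str, str]] = set()
    for item in data.get("triples", []):
        if not all(k in item for k in ("subject", "relation", "object")):
            continue  # skip incomplete extractions instead of guessing
        triples.add((normalize(item["subject"]), item["relation"], normalize(item["object"])))
    return triples

# Populate an adjacency-style graph: subject -> {(relation, object), ...}
graph: Dict[str, Set[Tuple[str, str]]] = {}
for s, r, o in extract_triples(LLM_OUTPUT):
    graph.setdefault(s, set()).add((r, o))

print(graph)
```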

3. Synergized bidirectional systems

The most sophisticated implementations create feedback loops where LLMs and knowledge graphs continuously improve each other. The graph provides structured context that makes LLM responses more accurate, while the LLM identifies new relationships and entities that expand the graph. Organizations deploying AI agents at scale often require this pattern.

Research published on arXiv analyzing 28 integration methods found that hybrid approaches combining multiple patterns achieved the best results for complex enterprise use cases. The synergized model works particularly well for evolving domains where both the knowledge structure and the language understanding need regular updates.
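The sketch below covers one turn of the bidirectional loop. `fake_llm` is a hypothetical stand-in that returns an answer plus proposed edges with confidence scores. The 0.8 threshold is an arbitrary assumption. In practice, low-confidence proposals would more likely be queued for review than discarded.

```python
from typing import Callable, List, Set, Tuple

Triple = Tuple[str, str, str]

def synergy_step(
    question: str,
    graph: Set[Triple],
    llm: Callable[[str], Tuple[str, List[Tuple[Triple, float]]]],
    min_confidence: float = 0.8,
) -> str:
    """One loop iteration: graph -> LLM context, then LLM -> proposed graph edges."""
    # Graph to LLM: include facts whose endpoints appear as words in the question.
    words = set(question.lower().split())
    context = [t for t in graph if t[0].lower() in words or t[2].lower() in words]
    answer, proposals = llm(f"Context: {context}\nQuestion: {question}")
    # LLM to graph: keep only confident proposals (the threshold is an assumption).
    for triple, confidence in proposals:
        if confidence >= min_confidence:
            graph.add(triple)
    return answer

def fake_llm(prompt: str) -> Tuple[str, List[Tuple[Triple, float]]]:
    """Stand-in for a real model call; returns an answer plus proposed edges."""
    return "revenue depends on orders", [
        (("revenue", "depends_on", "orders"), 0.9),
        (("revenue", "depends_on", "weather"), 0.3),
    ]

kg: Set[Triple] = {("orders", "stored_in", "warehouse")}
print(synergy_step("what does revenue use from orders", kg, fake_llm))
print(sorted(kg))
```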

GraphRAG and retrieval-augmented generation implementation

Traditional RAG systems retrieve relevant text chunks based on semantic similarity. GraphRAG extends this by traversing knowledge graph relationships to gather connected context. This enables multi-hop reasoning where answering a question requires linking information across several related entities.

The architecture operates in three stages

- First, query understanding translates natural language into graph concepts.

- Second, graph traversal follows relationships to collect relevant subgraphs.

- Third, context assembly combines retrieved graph data with the original query for LLM processing.
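The three stages can be sketched over a small adjacency list. The sketch assumes exact name matching for query understanding and a hop-limited breadth-first search for traversal. The supply-chain entities are hypothetical. The point is the multi-hop behavior: the question names only `Acme Corp`, yet the fact about `EU` is reached three hops away.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical adjacency list: entity -> list of (relation, neighbor).
GRAPH: Dict[str, List[Tuple[str, str]]] = {
    "Acme Corp": [("supplier_of", "Widget X")],
    "Widget X": [("component_of", "Model Z")],
    "Model Z": [("sold_in", "EU")],
    "EU": [],
}

def understand_query(question: str) -> List[str]:
    """Stage 1: map the question onto known graph entities (naive string match)."""
    return [e for e in GRAPH if e.lower() in question.lower()]

def traverse(seeds: List[str], max_hops: int) -> List[str]:
    """Stage 2: breadth-first traversal up to max_hops, collecting edge facts."""
    facts, seen = [], set(seeds)
    queue = deque((s, 0) for s in seeds)
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # hop limit reached; do not expand further
        for rel, nbr in GRAPH.get(node, []):
            facts.append(f"{node} {rel} {nbr}")
            if nbr not in seen:
                seen.add(nbr)
                queue.append((nbr, depth + 1))
    return facts

def assemble_context(question: str, facts: List[str]) -> str:
    """Stage 3: combine the retrieved subgraph with the original question."""
    return "Graph facts:\n" + "\n".join(facts) + f"\n\nQuestion: {question}"

q = "Which markets are affected if Acme Corp stops shipping?"
print(assemble_context(q, traverse(understand_query(q), max_hops=3)))
```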

Microsoft’s GraphRAG implementation demonstrated substantial improvements over standard vector-based retrieval, particularly for questions about an entire corpus, such as “What are the main themes in this dataset?” The system uses an LLM to extract an entity graph from a large document collection, runs community detection on that graph, and generates hierarchical community summaries that capture themes across the entire dataset. Teams report 29.6% faster resolution times when customer support systems use GraphRAG compared to conventional retrieval methods.
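The community-then-summarize idea can be sketched without any dependencies. Microsoft's implementation uses hierarchical Leiden clustering and LLM-written summaries. This sketch swaps in plain connected components (via union-find) and a placeholder `summarize` function. It therefore illustrates the data flow rather than the actual algorithm. The edges are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical entity co-occurrence edges extracted from documents.
EDGES: List[Tuple[str, str]] = [
    ("billing", "invoices"), ("invoices", "refunds"),
    ("shipping", "carriers"),
]

def communities(edges: List[Tuple[str, str]]) -> List[List[str]]:
    """Group nodes into connected components using union-find (a Leiden stand-in)."""
    parent: Dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for a, b in edges:
        parent[find(a)] = find(b)  # merge the two components
    groups = defaultdict(list)
    for node in parent:
        groups[find(node)].append(node)
    return sorted(sorted(g) for g in groups.values())

def summarize(community: List[str]) -> str:
    """Placeholder for an LLM-written summary of one community."""
    return f"Community covering: {', '.join(community)}"

for c in communities(EDGES):
    print(summarize(c))
```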

Implementation requires several technical components

- Graph database infrastructure stores entities and relationships with efficient query capabilities. Neo4j, JanusGraph, and cloud-native options handle enterprise-scale graphs. Atlan’s knowledge graph architecture automatically maps relationships across data assets, connecting business concepts to technical implementations.

- Vector embeddings enable semantic search over graph content. Modern systems combine graph traversal with vector similarity, retrieving both directly connected nodes and semantically related concepts. This hybrid approach addresses limitations of pure graph or pure vector retrieval.

- Query planners determine which graph paths to explore based on query intent. Simple questions may only need one-hop neighbors, while complex analytical queries require deeper traversal. Planning keeps subgraph size under control, since the number of reachable nodes can grow exponentially with each additional hop.

- Context compression techniques manage token limits by summarizing graph data before LLM processing. Techniques include pruning low-relevance nodes, aggregating similar entities, and hierarchical summarization where detailed subgraphs get replaced with summary nodes.
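Hybrid retrieval and context compression can be combined in one ranking step. The sketch below rests on several assumptions: hand-written 3-dimensional embeddings, precomputed hop distances, a blend weight `alpha` of 0.7, and word count as a crude stand-in for tokens. Nodes are ranked by a mix of cosine similarity and graph proximity, then packed greedily into the token budget. All node names are hypothetical.

```python
import math
from typing import Dict, List

# Hypothetical embeddings and hop distances from the query's seed entity.
EMBEDDINGS: Dict[str, List[float]] = {
    "churn_model": [0.9, 0.1, 0.0],
    "customer_table": [0.7, 0.3, 0.1],
    "hr_payroll": [0.0, 0.1, 0.9],
}
HOPS: Dict[str, int] = {"churn_model": 1, "customer_table": 2, "hr_payroll": 1}
NODE_TEXT: Dict[str, str] = {
    "churn_model": "churn_model: predicts cancellations, reads customer_table",
    "customer_table": "customer_table: one row per active customer",
    "hr_payroll": "hr_payroll: salary records",
}

def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def hybrid_score(node: str, query_vec: List[float], alpha: float = 0.7) -> float:
    """Blend semantic similarity with graph proximity (fewer hops scores higher)."""
    return alpha * cosine(EMBEDDINGS[node], query_vec) + (1 - alpha) / HOPS[node]

def compress(query_vec: List[float], token_budget: int) -> List[str]:
    """Keep the highest-scoring node descriptions that fit in the token budget."""
    ranked = sorted(NODE_TEXT, key=lambda n: hybrid_score(n, query_vec), reverse=True)
    kept, used = [], 0
    for node in ranked:
        cost = len(NODE_TEXT[node].split())  # crude token estimate: whitespace words
        if used + cost <= token_budget:
            kept.append(NODE_TEXT[node])
            used += cost
    return kept

print(compress([1.0, 0.2, 0.0], token_budget=12))
```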

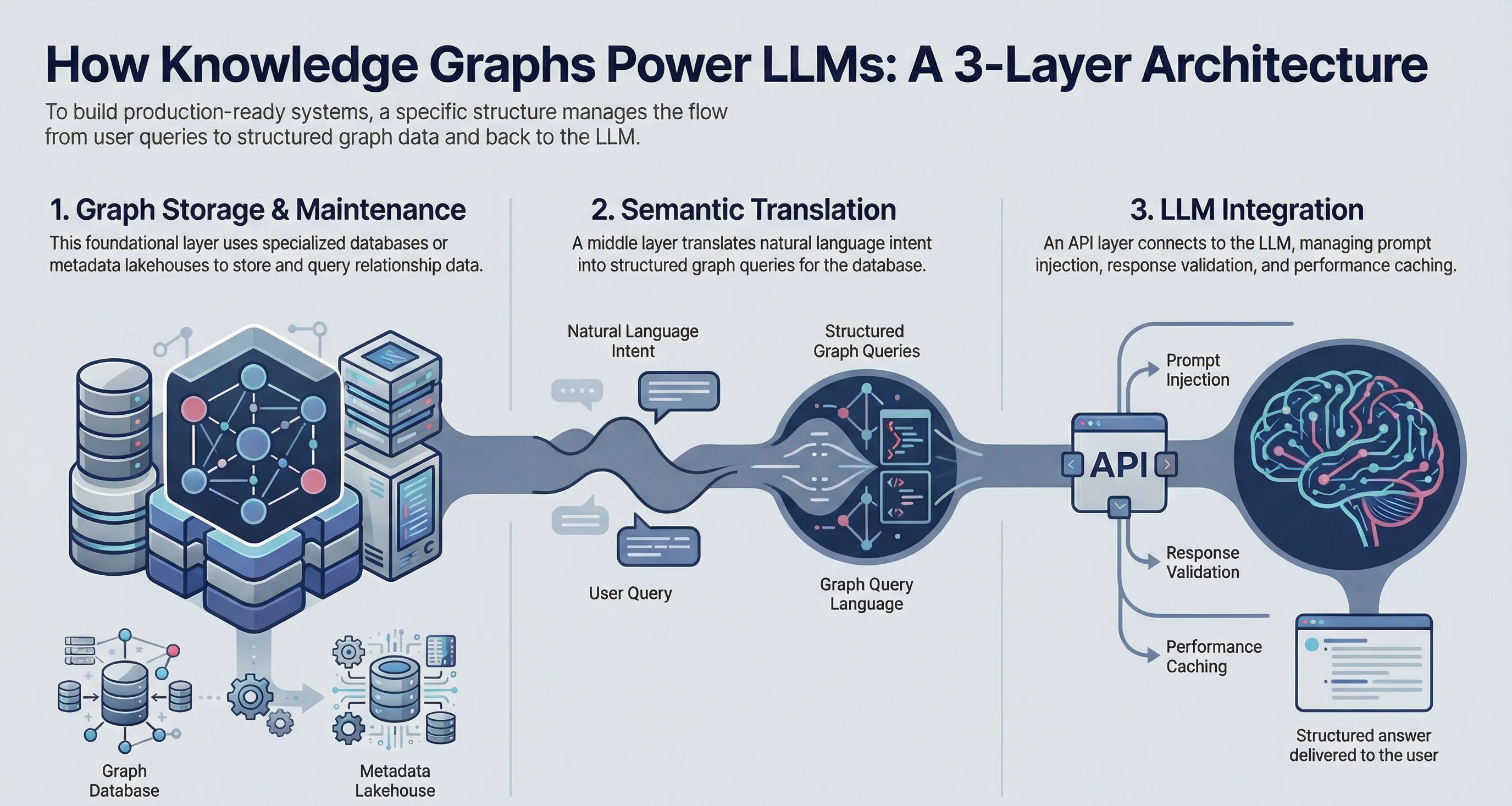

Technical architecture – Knowledge graph and LLM integration

Building production systems that effectively combine knowledge graphs with LLMs requires careful architectural decisions around data flow, interface design, and real-time responsiveness.

Core architectural layers

1. Graph storage and maintenance layer

Organizations implement knowledge graphs using specialized databases optimized for relationship queries. Open metadata lakehouses provide an architectural pattern where graph data remains queryable alongside traditional analytics workloads, enabling SQL-based access to relationship metadata.

2. Semantic translation layer

This component, often called the semantic layer, sits between natural language and structured graph queries and handles three key functions:

- Interprets user intent from natural language input

- Identifies relevant entities mentioned in queries

- Generates appropriate graph traversal strategies

Advanced implementations use LLMs themselves for this translation, creating a self-improving loop where the model learns domain-specific query patterns over time.
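The sketch below is a rule-based version of this translation step. It assumes a fixed entity list, a keyword-to-depth table standing in for intent detection, and a Neo4j-style Cypher query as the target. An LLM-based translator would replace both lookups. The entity name is passed as a query parameter rather than spliced into the string. The depth comes only from the fixed table. All names are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical entity dictionary; production systems might use an LLM or NER model.
KNOWN_ENTITIES: List[str] = ["revenue_dashboard", "orders", "customers"]

# Hypothetical intent keywords mapped to traversal depth.
INTENT_DEPTH: Dict[str, int] = {"upstream": 3, "depends": 3, "owner": 1}

def translate(question: str) -> Tuple[str, Dict[str, str]]:
    """Turn a question into a parameterized Cypher-style query plus its parameters."""
    q = question.lower()
    # Identify the entity mentioned in the question (whole-word match).
    entity = next((e for e in KNOWN_ENTITIES if re.search(rf"\b{re.escape(e)}\b", q)), None)
    if entity is None:
        raise ValueError("no known entity found in question")
    # Choose traversal depth from intent keywords; default to one hop.
    depth = max((d for k, d in INTENT_DEPTH.items() if k in q), default=1)
    query = f"MATCH (a {{name: $name}})-[*1..{depth}]-(b) RETURN DISTINCT b.name"
    return query, {"name": entity}

print(translate("What is upstream of revenue_dashboard?"))
```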

3. LLM integration layer

API layers manage the connection to language models through:

- Prompt engineering and context injection

- Response validation against graph data

- Batch processing of multiple graph lookups to reduce latency

- Caching for frequently accessed graph patterns

Model Context Protocol servers enable AI agents to programmatically access graph context without manual integration work.
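Batching and caching can be sketched with the standard library alone. `fetch_neighbors_batch` is a hypothetical stand-in for a graph-store round trip. `CALLS` counts those round trips so the effect is visible. One batched call serves a whole prompt, and a repeated single-node lookup is answered by `functools.lru_cache` without touching the store.

```python
from functools import lru_cache
from typing import Dict, List, Tuple

# Hypothetical graph store; CALLS counts round trips to it.
GRAPH: Dict[str, Tuple[str, ...]] = {"orders": ("customers", "products"), "customers": ("regions",)}
CALLS = {"count": 0}

def fetch_neighbors_batch(nodes: List[str]) -> Dict[str, Tuple[str, ...]]:
    """One round trip for many nodes instead of one call per node."""
    CALLS["count"] += 1
    return {n: GRAPH.get(n, ()) for n in nodes}

@lru_cache(maxsize=1024)
def neighbors(node: str) -> Tuple[str, ...]:
    """Cached single-node lookup for frequently accessed graph patterns."""
    return fetch_neighbors_batch([node])[node]

def build_context(entities: List[str]) -> str:
    """Batch-fetch every entity needed for one prompt, then format for injection."""
    batch = fetch_neighbors_batch(entities)
    return "\n".join(f"{e} -> {', '.join(nbrs) or 'none'}" for e, nbrs in batch.items())

print(build_context(["orders", "customers"]))
neighbors("orders")
neighbors("orders")  # second call is served from the cache
print(CALLS["count"])
```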

How to Combine Knowledge Graphs With LLMs. Source: Atlan.

Data pipeline architecture

Real-time update strategy

Continuous graph updates require change data capture mechanisms that:

- Detect modifications in operational systems

- Trigger entity extraction processes

- Update graph relationships incrementally

Teams must choose between two consistency models:

| Consistency Model | Characteristics | Best For |
|---|---|---|
| Eventual consistency | Graphs update asynchronously | High-volume systems prioritizing speed |
| Strict consistency | Updates complete before queries see changes | Regulated environments requiring accuracy |
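The two consistency models differ mainly in when a change event is applied. In the sketch below, `ChangeEvent` is a hypothetical CDC record; real CDC tools emit richer payloads. Strict mode applies each event before `on_change` returns. Eventual mode queues the event for a background `drain` step.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Set, Tuple

@dataclass
class ChangeEvent:
    """Hypothetical CDC record emitted when a source system changes."""
    op: str  # "upsert" or "delete"
    edge: Tuple[str, str, str]

class GraphSync:
    def __init__(self, strict: bool) -> None:
        self.strict = strict
        self.edges: Set[Tuple[str, str, str]] = set()
        self.pending: Deque[ChangeEvent] = deque()

    def on_change(self, event: ChangeEvent) -> None:
        if self.strict:
            self._apply(event)  # strict: change is visible before this call returns
        else:
            self.pending.append(event)  # eventual: applied later by a worker

    def drain(self) -> None:
        """Background step for eventual consistency: apply queued events in order."""
        while self.pending:
            self._apply(self.pending.popleft())

    def _apply(self, event: ChangeEvent) -> None:
        # Incremental update: touch only the affected edge.
        if event.op == "upsert":
            self.edges.add(event.edge)
        elif event.op == "delete":
            self.edges.discard(event.edge)

evt = ChangeEvent("upsert", ("sales_v2", "derived_from", "orders"))
eventual = GraphSync(strict=False)
eventual.on_change(evt)
print(len(eventual.edges))  # 0 until the worker drains the queue
eventual.drain()
print(len(eventual.edges))  # 1
```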

Metadata enrichment pipelines

Active metadata management automates enrichment workflows that augment basic graph structures:

- Usage analytics: Identify which entities matter most to users

- Quality scores: Indicate reliability of specific relationships

- Provenance tracking: Maintain lineage from graph assertions back to source evidence

- Automated workflows: Eliminate manual curation overhead
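The enrichment signals can travel with each edge. This sketch assumes a quality score defined as the share of automated checks passed. The field names and the example evidence string are hypothetical and do not reflect any specific platform's schema.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class EnrichedEdge:
    """Edge plus enrichment signals (field names are illustrative)."""
    source: str
    relation: str
    target: str
    evidence: List[str] = field(default_factory=list)  # provenance: where the edge came from
    checks_passed: int = 0
    checks_run: int = 0
    query_count: int = 0  # usage analytics

    @property
    def quality_score(self) -> float:
        """Share of automated checks passed; 0.0 when nothing has been checked."""
        return self.checks_passed / self.checks_run if self.checks_run else 0.0

def record_check(edge: EnrichedEdge, passed: bool) -> None:
    """Record the outcome of one automated quality check."""
    edge.checks_run += 1
    edge.checks_passed += int(passed)

edge = EnrichedEdge("revenue_report", "reads", "orders",
                    evidence=["dbt model revenue_report.sql"])
for result in (True, True, False, True):
    record_check(edge, result)
edge.query_count += 42
print(edge.quality_score, edge.evidence)
```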

Performance optimization tactics

Graph-level optimizations

Three primary strategies reduce query costs at scale:

- Graph partitioning: Divide large graphs by domain boundaries or temporal ranges so queries identify relevant partitions before full traversal

- Materialized views: Pre-compute common query patterns and relationship aggregations

- Caching layers: Store frequently accessed subgraphs in memory for sub-millisecond retrieval
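Domain partitioning can be sketched as a routing lookup that runs before the scan. The partition names and the `ENTITY_DOMAIN` routing table are hypothetical. A query first resolves which partition owns the entity, then scans only that slice.

```python
from typing import Dict, List, Tuple

# Hypothetical partitions keyed by business domain.
PARTITIONS: Dict[str, List[Tuple[str, str, str]]] = {
    "finance": [("gl_entries", "feeds", "revenue_report")],
    "marketing": [("campaigns", "feeds", "attribution_model")],
}
# Routing table: which partition owns each entity.
ENTITY_DOMAIN: Dict[str, str] = {
    "gl_entries": "finance", "revenue_report": "finance",
    "campaigns": "marketing", "attribution_model": "marketing",
}

def query_edges(entity: str) -> List[Tuple[str, str, str]]:
    """Route to the owning partition first so only that slice is scanned."""
    domain = ENTITY_DOMAIN.get(entity)
    if domain is None:
        return []
    return [e for e in PARTITIONS[domain] if entity in (e[0], e[2])]

print(query_edges("revenue_report"))
```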

LLM cost management

LLM inference costs often dominate total expenses in high-volume deployments. Common optimization approaches include:

- Lightweight filtering: Organizations report 10x token reduction using small models to filter graph content before expensive LLM calls

- Tiered architectures: Route simple queries to small models, reserve large models for complex reasoning

- Batch processing: Group multiple queries to amortize API overhead

- Result caching: Store and reuse responses for identical or similar queries
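Tiered routing and result caching can share one entry point. The complexity heuristic below (question length plus a few keywords) is an assumption chosen for illustration, and the two model functions are stubs. The cache only catches queries that are identical after lowercasing and whitespace normalization. Matching merely similar queries would need something like an embedding lookup.

```python
from typing import Callable, Dict, List

def is_complex(query: str) -> bool:
    """Crude, assumed heuristic: long or analytical questions go to the large model."""
    markers = ("why", "compare", "impact", " and ")
    return len(query.split()) > 20 or any(m in query.lower() for m in markers)

class TieredRouter:
    def __init__(self, small: Callable[[str], str], large: Callable[[str], str]) -> None:
        self.small = small
        self.large = large
        self.cache: Dict[str, str] = {}

    def answer(self, query: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share a cache entry.
        key = " ".join(query.lower().split())
        if key not in self.cache:
            model = self.large if is_complex(query) else self.small
            self.cache[key] = model(query)
        return self.cache[key]

calls: List[str] = []

def small_model(query: str) -> str:
    calls.append("small")  # stub for a cheap model call
    return "small-answer"

def large_model(query: str) -> str:
    calls.append("large")  # stub for an expensive model call
    return "large-answer"

router = TieredRouter(small_model, large_model)
router.answer("Who owns orders?")
router.answer("who owns   ORDERS?")  # served from cache
router.answer("What is the impact of deprecating orders?")
print(calls)
```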

Common challenges when combining knowledge graphs and LLMs

Integration projects face predictable obstacles around data quality, system complexity, and organizational alignment. Understanding these challenges helps teams plan realistic timelines and allocate resources appropriately.

1. Knowledge graph construction and maintenance

Building comprehensive graphs from enterprise data remains labor-intensive despite LLM assistance. Entity disambiguation challenges arise when the same term references different concepts across contexts. Relationship extraction accuracy varies by document type, requiring domain-specific tuning.
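Entity disambiguation can be sketched with a simplified Lesk-style heuristic: pick the sense whose typical context words overlap most with the surrounding sentence. The sense inventory and context words below are hypothetical. Production systems would more likely compare embeddings or ask an LLM, but the decision has the same shape.

```python
from typing import Dict, Set

# Hypothetical senses of an ambiguous term, each with typical context words.
SENSES: Dict[str, Dict[str, Set[str]]] = {
    "revenue": {
        "finance:recognized_revenue": {"gaap", "booked", "ledger", "quarter"},
        "sales:pipeline_revenue": {"forecast", "deal", "opportunity", "crm"},
    }
}

def disambiguate(term: str, sentence: str) -> str:
    """Pick the sense whose context words overlap most with the sentence."""
    words = set(sentence.lower().replace(",", " ").replace(".", " ").split())
    candidates = SENSES[term]
    return max(candidates, key=lambda sense: len(candidates[sense] & words))

print(disambiguate("revenue", "Q3 revenue booked in the ledger is below forecast."))
```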

Graph schema evolution presents ongoing difficulties. As business needs change, teams must migrate existing graph structures to new ontologies without breaking dependent applications. Modern platforms address this through versioned schemas and automated migration tools that propagate changes consistently.

2. LLM hallucination and factual grounding

Language models sometimes ignore provided graph context and generate responses based on training data instead. This happens most often when graph information contradicts patterns the model learned during pre-training. Teams implement validation layers that check generated responses against source graph data before presentation.
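A validation layer can only check what it can parse. This sketch therefore assumes the LLM was instructed to cite each fact in a `[subject|relation|object]` format. Any cited claim missing from the graph is returned so the caller can flag it, block it, or regenerate the answer.

```python
import re
from typing import List, Set, Tuple

Triple = Tuple[str, ...]

def extract_claims(answer: str) -> List[Triple]:
    """Parse cited facts in the assumed [subject|relation|object] format."""
    return [tuple(p.strip() for p in m.split("|")) for m in re.findall(r"\[([^\]]+)\]", answer)]

def validate(answer: str, graph: Set[Triple]) -> List[Triple]:
    """Return cited claims NOT backed by the graph (an empty list means grounded)."""
    return [c for c in extract_claims(answer) if c not in graph]

graph = {("orders", "owned_by", "Data Platform")}
answer = "Orders belong to [orders|owned_by|Data Platform] and refresh hourly [orders|refresh|hourly]."
print(validate(answer, graph))
```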

Context window limitations constrain how much graph data can inform each LLM call. Queries requiring information from across large graph regions must employ summarization strategies. Hierarchical graph representations help by providing multiple granularity levels that fit within token budgets.

3. Integration complexity and technical debt

Maintaining consistency between graph databases, vector stores, and LLM inference infrastructure creates operational overhead. Teams must monitor data freshness across components, handle partial failures gracefully, and implement retry logic for transient errors.
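Retry logic for transient errors is commonly implemented as exponential backoff with jitter, as sketched below. `TransientError` is a hypothetical exception type. It stands in for whatever timeout or throttling errors a given graph store, vector store, or LLM API raises. The attempt count and delays are arbitrary defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or rate limits."""

def with_retries(call: Callable[[], T], attempts: int = 4, base_delay: float = 0.1) -> T:
    """Retry transient failures with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return call()
        except TransientError:
            # Delay doubles each attempt; jitter spreads out simultaneous retries.
            time.sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.0))
    return call()  # final attempt: any error propagates to the caller

state = {"calls": 0}

def flaky_graph_query() -> str:
    """Fails twice, then succeeds, to simulate a brief outage."""
    state["calls"] += 1
    if state["calls"] < 3:
        raise TransientError("graph store timeout")
    return "ok"

print(with_retries(flaky_graph_query, base_delay=0.01))
```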

Cost management becomes critical as query volumes scale. Vector database operations, graph traversals, and LLM API calls each contribute to total expenses. Organizations implement query result caching, batching strategies, and tiered service levels to control costs without sacrificing user experience.

4. Governance and explainability requirements

Regulated industries require understanding how systems reach conclusions. Pure neural approaches provide limited transparency into reasoning paths. Graph-augmented systems offer inherent explainability, since each response is grounded in specific graph relationships that can be inspected and validated.

Access control complexity increases when multiple data sources feed the knowledge graph. AI governance frameworks must enforce permissions at the graph level, ensuring LLMs only access relationships authorized for each user. This requires integrating graph databases with enterprise identity systems and propagating permissions through query execution.
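Permission filtering belongs before prompt assembly, so unauthorized relationships never reach the model. In this sketch, the classification labels and the group-to-clearance mapping are hypothetical. They stand in for what an enterprise identity provider and governance policy would supply.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical edges tagged with the classification of the data they expose.
EDGES: List[Tuple[str, str, str, str]] = [
    ("salary_table", "feeds", "hr_dashboard", "restricted"),
    ("orders", "feeds", "revenue_report", "internal"),
]
# Hypothetical mapping from identity-provider groups to allowed classifications.
GROUP_CLEARANCE: Dict[str, Set[str]] = {
    "analysts": {"public", "internal"},
    "hr": {"public", "internal", "restricted"},
}

def visible_edges(user_groups: List[str]) -> List[Tuple[str, str, str]]:
    """Filter edges before prompt assembly so the LLM never sees unauthorized facts."""
    allowed = set().union(*(GROUP_CLEARANCE.get(g, set()) for g in user_groups))
    return [(s, r, t) for s, r, t, label in EDGES if label in allowed]

print(visible_edges(["analysts"]))
```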

Real-world applications and use cases of combined knowledge graph and LLM systems

Organizations across industries deploy combined knowledge graph and LLM systems to solve specific business problems. These implementations demonstrate practical value beyond proof-of-concept demos.

- Financial services institutions use graph-enhanced LLMs for regulatory compliance. The graphs map complex relationships between regulations, internal policies, and business processes. When analysts ask compliance questions, the system retrieves relevant regulatory passages and their connections to specific procedures. One European bank reduced compliance review time by 40% using this approach.

- Healthcare systems leverage medical knowledge graphs to ground clinical AI assistants. The graphs encode relationships between symptoms, diseases, treatments, and patient demographics. Physicians receive evidence-based suggestions that trace back to specific medical literature rather than unsourced recommendations. Gartner identifies knowledge graphs as essential for AI-driven healthcare applications requiring explainable reasoning.

- E-commerce platforms combine product knowledge graphs with conversational AI for sophisticated recommendation systems. The graphs capture product attributes, customer preferences, and purchase patterns. When customers describe needs in natural language, the system traverses graph relationships to suggest relevant items even when exact matches don’t exist.

- Software development teams implement code understanding systems where knowledge graphs represent program structure, dependencies, and design patterns. Developer tools use graph-augmented LLMs to answer questions about codebases, suggest refactoring opportunities, and generate documentation. These systems maintain currency by automatically updating graphs as code evolves.

- Manufacturing organizations deploy supply chain intelligence platforms combining logistics knowledge graphs with natural language interfaces. The graphs model suppliers, components, assembly processes, and distribution networks. Operations teams query the system conversationally to understand disruption impacts, identify alternative sources, and optimize routing decisions.

The pattern across successful implementations involves starting focused rather than attempting comprehensive coverage. Teams typically begin with one high-value domain, validate the approach, then expand to additional areas as they refine integration techniques and build organizational capability.

How context layers enable knowledge graph and LLM integration at scale

Building production systems that effectively combine knowledge graphs with large language models requires infrastructure purpose-built for integrating these technologies. Organizations moving beyond proof-of-concept implementations need unified platforms that handle graph management, metadata orchestration, and LLM context delivery without extensive custom integration work.

Automated knowledge graph construction and enrichment

Modern metadata lakehouses provide the architectural foundation for this integration. These platforms automatically:

- Capture technical metadata from data systems without manual cataloging

- Extract business context from documentation, queries, and usage patterns

- Monitor governance signals through quality checks and access policies

- Build comprehensive graphs spanning the entire data and AI estate

The result is a living knowledge graph that updates continuously as your data landscape evolves, eliminating batch refresh cycles and manual curation overhead.

Standardized LLM context delivery

Context delivery to LLMs happens through standardized protocols rather than brittle point-to-point integrations. Model Context Protocol servers expose graph data programmatically to AI agents and applications:

- Handle authentication and access control at the graph level

- Optimize query planning for efficient relationship traversal

- Format results appropriately for LLM consumption

- Enable on-demand retrieval during inference without pre-loading

This standardization allows LLMs to retrieve relationship context dynamically rather than requiring custom integration code for each AI application.
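The request and response below follow the JSON-RPC 2.0 shape that MCP uses for `tools/call`. They are written as plain dictionaries so the sketch runs without an MCP SDK. The `get_lineage` tool, the lineage data, and the per-user asset lists are hypothetical. A real server would register tools through an SDK and take identity from its authentication layer.

```python
import json
from typing import Any, Dict, List, Set

# Hypothetical lineage graph and per-user visibility behind the server.
LINEAGE: Dict[str, List[str]] = {"revenue_report": ["orders", "fx_rates"]}
USER_ASSETS: Dict[str, Set[str]] = {"alice": {"revenue_report", "orders"}}

def handle_tools_call(request: Dict[str, Any], user: str) -> Dict[str, Any]:
    """Answer a JSON-RPC 'tools/call' request for a hypothetical 'get_lineage' tool."""
    params = request["params"]
    if params["name"] != "get_lineage":
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    asset = params["arguments"]["asset"]
    allowed = USER_ASSETS.get(user, set())
    # Graph-level access control: only return assets this user may see.
    upstream = [a for a in LINEAGE.get(asset, []) if a in allowed] if asset in allowed else []
    text = f"{asset} is derived from: {', '.join(upstream) or 'no visible upstream assets'}"
    # Format the result as text content the model can read directly.
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}]}}

req = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
       "params": {"name": "get_lineage", "arguments": {"asset": "revenue_report"}}}
print(json.dumps(handle_tools_call(req, user="alice")))
```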

Enterprise requirements addressed

The approach addresses several enterprise requirements simultaneously:

- Real-time freshness: Graph updates happen automatically as source systems change, maintaining accuracy without scheduled refresh jobs.

- Access governance: Controls apply at the graph level, ensuring LLMs only retrieve authorized relationships for each user.

- Explainability: Lineage tracking connects graph assertions back to source evidence, providing the transparency regulated industries require.

- Reduced complexity: Organizations report faster implementation timelines compared to assembling separate graph databases, vector stores, and LLM infrastructure.

Real stories from real customers: Knowledge graphs powering enterprise AI

Context as culture: Workday’s AI-ready semantic layer

“Atlan enabled us to easily activate metadata for everything from discovery in the marketplace to AI governance to data quality to an MCP server delivering context to AI models. All of the work that we did to get to a shared language amongst people at Workday can be leveraged by AI via Atlan’s MCP server.” - Joe DosSantos, VP of Enterprise Data and Analytics, Workday

Workday achieved 5x improvements in AI analyst response accuracy

Context by design: Mastercard’s foundation-first approach

“Atlan is much more than a catalog of catalogs. It’s more of a context operating system. The metadata lakehouse is configurable across all our tool sets and flexible enough to get us to a future state where AI agents can access lineage context through the Model Context Protocol.” - Andrew Reiskind, Chief Data Officer, Mastercard

Mastercard is building context from the start

Key takeaways on combining knowledge graphs with LLMs

Integrating knowledge graphs with large language models creates AI systems grounded in factual relationships rather than statistical patterns alone. The architecture you choose depends on whether improving LLM accuracy, automating graph construction, or achieving bidirectional benefits matters most. GraphRAG implementations demonstrate substantial improvements for multi-hop reasoning compared to simple vector retrieval. Organizations starting this journey should focus on one high-value domain, validate the technical approach, then expand as they build capability. Modern platforms that unify metadata management with LLM context delivery significantly reduce integration complexity compared to assembling separate infrastructure components.

FAQs about combining knowledge graphs with LLMs

1. What’s the difference between GraphRAG and traditional RAG?

GraphRAG traverses knowledge graph relationships to gather connected context, enabling multi-hop reasoning. Traditional RAG retrieves text chunks based on semantic similarity without understanding how information connects. GraphRAG works better for questions requiring linked insights across multiple sources.

2. Do I need a knowledge graph if I already use vector embeddings?

Vector embeddings capture semantic similarity but not explicit relationships between entities. Knowledge graphs provide structured connections that vector search cannot infer. Most production systems combine both approaches for comprehensive retrieval.

3. How much does knowledge graph quality affect LLM performance?

Research shows graph-augmented LLMs achieve 54% higher accuracy than standalone models. However, this benefit disappears if graph data contains errors or missing relationships. Continuous validation and enrichment are essential for maintaining quality.

4. Can LLMs build knowledge graphs without human supervision?

LLMs excel at initial entity extraction and relationship identification but require human validation for domain-specific accuracy. Hybrid approaches where LLMs propose graph updates and domain experts approve them achieve the best balance of automation and quality.

5. What infrastructure components are required for GraphRAG?

Core components include a graph database for relationship storage, vector embeddings for semantic search, a query planner for graph traversal strategy, and context compression tools to fit results within LLM token limits. Modern platforms integrate these components natively.

6. How do I handle real-time updates to knowledge graphs?

Change data capture mechanisms detect source system modifications and trigger incremental graph updates. Teams must decide between eventual consistency where updates propagate asynchronously or stricter consistency that prioritizes accuracy over responsiveness.

Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.

Combining knowledge graphs with LLMs: Related reads

- What Is a Context Graph? Definition, Components & Use Cases

- Do Enterprises Need a Context Layer Between Data and AI?

- Context Graph vs Knowledge Graph: Key Differences for AI

- Context Graph vs Ontology: Key Differences for AI

- Semantic Layer: Definition, Types, Components & Implementation Guide

- Context Layer 101: Why It’s Crucial for AI

- Context Engineering for AI Analysts and Why It’s Essential

- Active Metadata: 2026 Enterprise Implementation Guide

- Dynamic Metadata Management Explained: Key Aspects, Use Cases & Implementation in 2026

- How Metadata Lakehouse Activates Governance & Drives AI Readiness in 2026

- Metadata Orchestration: How Does It Drive Governance and Trustworthy AI Outcomes in 2026?

- What Is Metadata Analytics & How Does It Work? Concept, Benefits & Use Cases for 2026

- Dynamic Metadata Discovery Explained: How It Works, Top Use Cases & Implementation in 2026

- Semantic Layers: The Complete Guide for 2026

- 9 Best Data Lineage Tools: Critical Features, Use Cases & Innovations

- Data Lineage Solutions: Capabilities and 2026 Guidance

- 12 Best Data Catalog Tools in 2026 | A Complete Roundup of Key Capabilities

- Data Catalog Examples | Use Cases Across Industries and Implementation Guide

- 5 Best Data Governance Platforms in 2026 | A Complete Evaluation Guide to Help You Choose

- Data Governance Lifecycle: Key Stages, Challenges, Core Capabilities

- Mastering Data Lifecycle Management with Metadata Activation & Governance

- What Are Data Products? Key Components, Benefits, Types & Best Practices

- How to Design, Deploy & Manage the Data Product Lifecycle in 2026