The Practitioner’s Guide to Experimenting with Conversational Analytics

Conversational analytics offers enormous potential, not just to accelerate insights but to finally make data democratization work at enterprise scale. Gartner predicts that the conversational AI market, which generated just over $8B in 2023, will reach $36B by 2032, a 4.5x increase in under a decade. But despite all the hype and, well, conversation around it, few companies have actually deployed conversational analytics at all, let alone at scale.

What’s to blame for the gap between promise and reality? Ask five different people and you’ll get five different answers. The truth is, while AI is changing how users interact with data, conversational analytics is still nascent. In most cases, we still don’t know what we don’t know.

But what we do know is that AI needs context and experimentation in order to become more accurate and reliable. Without them, we’ll keep facing an AI value chasm, where the speed at which AI systems are being built is completely disconnected from the value they’re providing. We talked to more than 20 data leaders at Atlan Re:Govern to understand where exactly to start when experimenting with conversational analytics. Here’s what they told us.

Why Conversational Analytics Isn’t Working (Yet)

AI budgets are expanding year after year, and nearly every company is experimenting with AI. According to McKinsey, 88% of companies say at least one business function is regularly using AI, up 10 percentage points from the year prior. And with good reason: replacing complex dashboards with natural-language queries lowers the barrier to entry and helps more users become data literate.

The problem isn’t that the intent isn’t there – it’s that the execution isn’t. McKinsey’s report found that at the enterprise level, 62% of organizations are in the experimentation or pilot phase. And beyond that, the outlook is bleak: research from MIT Sloan found that 95% of AI pilots fail to reach production.

“Ironic, isn’t it? That which used to be easy is now hard. And that which was once hard, is now easy,” said Joe DosSantos, Vice President of Enterprise Data and Analytics at Workday, during Re:Govern. “Why? Because our beautiful governed data, while great for humans, isn’t really particularly digestible for an AI.”

The main blockers of conversational analytics adoption are a lack of context; poor data quality and availability; disconnected tools and systems; and unreliable governance. But we can boil it down to this fundamental shift: while BI required humans to speak data’s language, AI requires data to speak the business’s language. That means you need to inform AI with context:

- Business definitions and glossaries

- Semantic layers

- Data lineage and relationships

- Quality signals and ownership

Just as you can’t accurately tell someone the weather without knowing where they’re located, AI can’t give you accurate analytics without knowing your business context. Therein lies the AI Context Gap.
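To make this concrete, here is a minimal Python sketch of how the four kinds of context listed above might travel with a question before it reaches a language model. Everything in it is hypothetical (the `ContextLayer` class, the `build_grounded_prompt` function, and the sample term, SQL, and table names); a real context layer would typically be served from a catalog or semantic layer rather than hard-coded.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLayer:
    """Hypothetical bundle of the four kinds of business context listed above."""
    glossary: dict[str, str] = field(default_factory=dict)          # term -> business definition
    semantic_metrics: dict[str, str] = field(default_factory=dict)  # term -> calculation logic
    lineage: dict[str, list[str]] = field(default_factory=dict)     # term -> upstream source tables
    owners: dict[str, str] = field(default_factory=dict)            # source -> accountable owner
    quality: dict[str, bool] = field(default_factory=dict)          # source -> passed latest checks


def build_grounded_prompt(question: str, ctx: ContextLayer) -> str:
    """Prefix a question with the context entries whose terms it mentions."""
    q = question.lower()
    lines = []
    for term, definition in ctx.glossary.items():
        if term.lower() not in q:
            continue
        lines.append(f"Definition of '{term}': {definition}")
        if term in ctx.semantic_metrics:
            lines.append(f"Calculation of '{term}': {ctx.semantic_metrics[term]}")
        for source in ctx.lineage.get(term, []):
            status = "passing" if ctx.quality.get(source, False) else "unverified"
            owner = ctx.owners.get(source, "unknown owner")
            lines.append(f"Source '{source}' (owner: {owner}, quality: {status})")
    if not lines:
        # No governed context matched: tell the model to ask rather than guess.
        lines.append("No matching business context found; ask the user to clarify.")
    return "\n".join(["Business context:", *lines, "", f"Question: {question}"])


if __name__ == "__main__":
    ctx = ContextLayer(
        glossary={"active customer": "A customer with at least one login in the last 30 days."},
        semantic_metrics={"active customer": "COUNT(DISTINCT customer_id) WHERE last_login >= today - 30"},
        lineage={"active customer": ["analytics.fct_logins"]},
        owners={"analytics.fct_logins": "Customer Data team"},
        quality={"analytics.fct_logins": True},
    )
    print(build_grounded_prompt("How many active customer accounts do we have?", ctx))
```

The resulting prompt would go to whichever model powers your assistant. The point of the sketch is that definitions, calculation logic, lineage, ownership, and quality status travel with the question instead of being left for the model to infer.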

“AI is like a super smart alien,” said Prukalpa Sankar, Co-founder of Atlan, during her keynote. “For it to succeed in production, it must be grounded explicitly with shared human context.”

That human context exists within your organization – it’s just a matter of harnessing and operationalizing it. The first step is experimentation.

Best Practices for Conversational Analytics Experiments

When so few companies are successfully deploying conversational analytics (and so many “experts” are sharing their perspectives), how do you know where to get started?

Before we dive into use cases, here are some best practices that experts at Re:Govern shared from their own experience:

1. Start Small (But Valuable)

As with most experiments, technological or otherwise, boiling the ocean doesn’t work. Identify a small but valuable use case and use it as your starting line, so you can pivot and iterate faster without getting bogged down by too many people, systems, or processes.

“Start where we can find value. Only work with a small set of people and then solve one problem,” said Fabien Thiaucourt, SVP of Data Governance and Enablement at Mastercard, during his Re:Govern session. “Start with one important problem for [stakeholders], solve that with them, and then build on that story to draw in other teams.”

Cortney Worthy, Enterprise Data Governance Lead at Dropbox, echoed Thiaucourt: “We’re starting small-scale piloting, and then we’re taking those successes and onboarding additional teams and building out additional features into kind of the tooling.”

2. Balance Viability with Risk

When you’re first starting out, quick wins are your currency. The task then becomes how to get those quick wins with minimal friction. Choosing to tackle a clear, low-risk business problem is the most straightforward path to get there.

For instance, experimenting with AI-powered developer productivity tools, like code search assistants or automated documentation generation, can deliver immediate time savings while staying low risk, because mistakes are caught internally rather than affecting customers or triggering regulatory concerns.

“Once you have that clearly defined business problem… then you can start establishing how to get there,” said Gu Xie, Head of Data at Group 1001, at Re:Govern. “It’s just really about empowering the team to iterate, experiment, and just execute.”

As you start to gain wins, or if you operate in a highly regulated industry, piloting conversational analytics with risk-averse teams can pressure-test models for context, governance, and trust gaps.

“What you’re trying to do is to find those people that need trustworthiness,” said Joe DosSantos of Workday. “Generally speaking, those people can be found in places like finance…. So that’s where we went first.”

3. Introduce Context Early

AI’s ascent is fueled by context. In her opening keynote at Re:Govern, Atlan Co-founder Prukalpa Sankar cited Bill Gates’s 1996 essay declaring that content is king. Today, she said, context is king. And what are the 95% of AI pilots that fail to reach production missing? Context.

“Before, when we were able to pass [data] through a dashboard or a dedicated analytics team, you could kind of filter and see, okay, well, there’s some context missing; I can add it into a dashboard,” said Takashi Ueki, Head of Data and Enterprise Analytics at Elastic, during Re:Govern. “With AI, you lose all of that…. So what we’re focused on is how can we scale the data quality and context development as much as possible?”

Without the right context, AI struggles to deliver consistent, accurate, and relevant responses. But with a context layer, you can move faster, scale reliably, and onboard new use cases with confidence.

Sample Conversational Analytics Use Cases

Navigating conversational analytics experiments can be daunting. Here are a few sample use cases to inspire your own experiments.

Query Resolutions for Customer Success

The problem: Support teams waste time navigating multiple systems to piece together customer context, leading to longer resolution times and frustrated customers who have to repeat their issues.

The conversational AI query: “What’s the support history with customer ID #12345?”

The response: “I found four interactions with this customer in the past 30 days. To give you the most relevant information, are you looking for:

- Support ticket history (3 open tickets related to billing)

- Product usage patterns (last login 2 days ago, 60% feature adoption)

- Account status (Premium tier, renewal in 45 days)

Based on the context, it looks like there’s a billing issue that’s been escalated. The customer last spoke with Sarah on Tuesday and was promised a callback within 24 hours.”

What you need:

- Customer data unified across systems (CRM, support tickets, product usage)

- Clear definitions of customer states and issue types

- Data quality checks on customer interaction data

- Ownership established for each customer data domain

Starting point: Build a simple Q&A interface that can answer “What is this customer’s recent interaction history?” for your top 10 support ticket categories. Keep it to read-only queries before enabling any actions. Instruct the AI to ask clarifying questions when a request is ambiguous, as in the example above, so it can tailor its responses.
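A minimal, read-only sketch of that starting point in Python follows. It assumes customer data from the CRM, support desk, and product analytics has already been unified into one record per customer; the `CustomerRecord` fields, the keyword rules, and the sample values are invented for illustration. When a question doesn’t clearly map to one topic, it asks a clarifying question, mirroring the sample response above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerRecord:
    """Hypothetical unified view of one customer across CRM, support, and product data."""
    customer_id: str
    open_billing_tickets: int
    days_since_last_login: int
    feature_adoption_pct: int
    tier: str
    days_to_renewal: int


# Read-only topics the assistant may answer: (trigger keywords, label, formatter).
# No topic performs an action such as closing a ticket or issuing a refund.
TOPICS = {
    "tickets": (("ticket", "billing"), "support ticket history",
                lambda c: f"{c.open_billing_tickets} open tickets related to billing"),
    "usage": (("usage", "login", "adoption"), "product usage patterns",
              lambda c: f"last login {c.days_since_last_login} days ago, "
                        f"{c.feature_adoption_pct}% feature adoption"),
    "account": (("account", "renewal", "tier"), "account status",
                lambda c: f"{c.tier} tier, renewal in {c.days_to_renewal} days"),
}


def answer(record: CustomerRecord, question: str) -> str:
    """Answer from exactly one topic, or ask a clarifying question instead of guessing."""
    q = question.lower()
    matches = [name for name, (words, _, _) in TOPICS.items() if any(w in q for w in words)]
    if len(matches) != 1:
        labels = [label for _, label, _ in TOPICS.values()]
        return ("To give you the most relevant information, are you looking for "
                f"{', '.join(labels[:-1])}, or {labels[-1]}?")
    _, label, describe = TOPICS[matches[0]]
    return f"{label.capitalize()} for {record.customer_id}: {describe(record)}"
```

For example, `answer(CustomerRecord("12345", 3, 2, 60, "Premium", 45), "When is the renewal?")` returns `"Account status for 12345: Premium tier, renewal in 45 days"`. Keyword matching is only a placeholder for however your assistant actually routes intent; the read-only constraint and the clarify-instead-of-guess rule are the parts that carry over.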

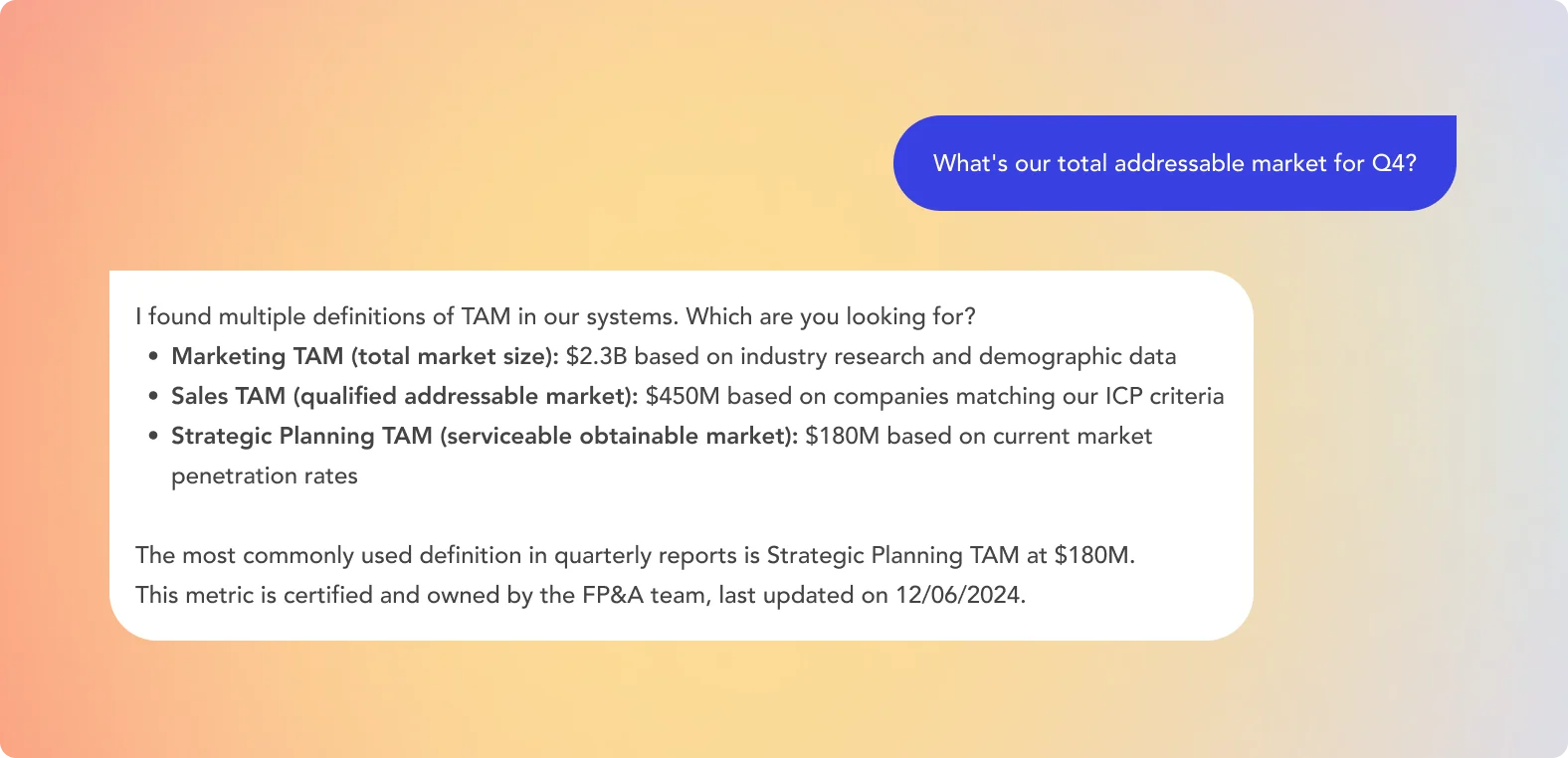

Metric Definitions for Finance Analysis

The problem: Finance teams struggle with the “five analysts, five different answers” issue, where the same business question yields inconsistent results because metrics like “revenue,” “customer,” or “TAM” are defined differently across teams.

The query and response:

Metric Definitions for Finance Analysis. Source: Atlan.

What you need:

- Business glossary with unified KPI definitions for your top metrics

- Semantic layer connecting each metric definition to its underlying calculation logic

- Clear ownership and certification status for each metric (see how Invitation Homes certified 350 KPIs)

- Data lineage showing how metrics are calculated from source data

Starting point: Pick the 10-15 critical metrics that drive the business, and document their official definitions, owners, and the reports where they’re used.
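As a sketch of what one governed entry might hold, the Python below models a tiny in-code metric registry rather than any particular catalog or semantic-layer product. The `MetricDefinition` fields mirror the list above (definition, calculation logic, owner, certification, lineage, and reports); the metric, synonyms, SQL, and table names are invented. Resolving every synonym to a single certified definition is what keeps five analysts from producing five answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """Hypothetical registry entry for one governed KPI."""
    name: str
    definition: str
    sql: str                  # calculation logic from the semantic layer
    owner: str
    certified: bool
    sources: tuple[str, ...]  # upstream tables (lineage)
    used_in: tuple[str, ...]  # reports that depend on the metric
    synonyms: tuple[str, ...] = ()


REGISTRY = [
    MetricDefinition(
        name="net_revenue",
        definition="Recognized revenue minus refunds and discounts, in USD.",
        sql="SUM(recognized_amount) - SUM(refund_amount) - SUM(discount_amount)",
        owner="FP&A",
        certified=True,
        sources=("finance.fct_invoices", "finance.fct_refunds"),
        used_in=("Monthly Board Deck",),
        synonyms=("revenue", "net sales"),
    ),
]


def resolve_metric(term: str, registry: list[MetricDefinition] = REGISTRY) -> MetricDefinition:
    """Map a user's term to exactly one certified metric, or fail loudly."""
    t = term.strip().lower()
    hits = [m for m in registry if m.certified and (t == m.name or t in m.synonyms)]
    if len(hits) != 1:
        raise LookupError(f"No single certified definition for '{term}'; ask the metric owner.")
    return hits[0]
```

Here `resolve_metric("Revenue")` returns the certified `net_revenue` entry, while an undefined term such as `"TAM"` raises `LookupError` instead of letting the AI improvise a definition.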

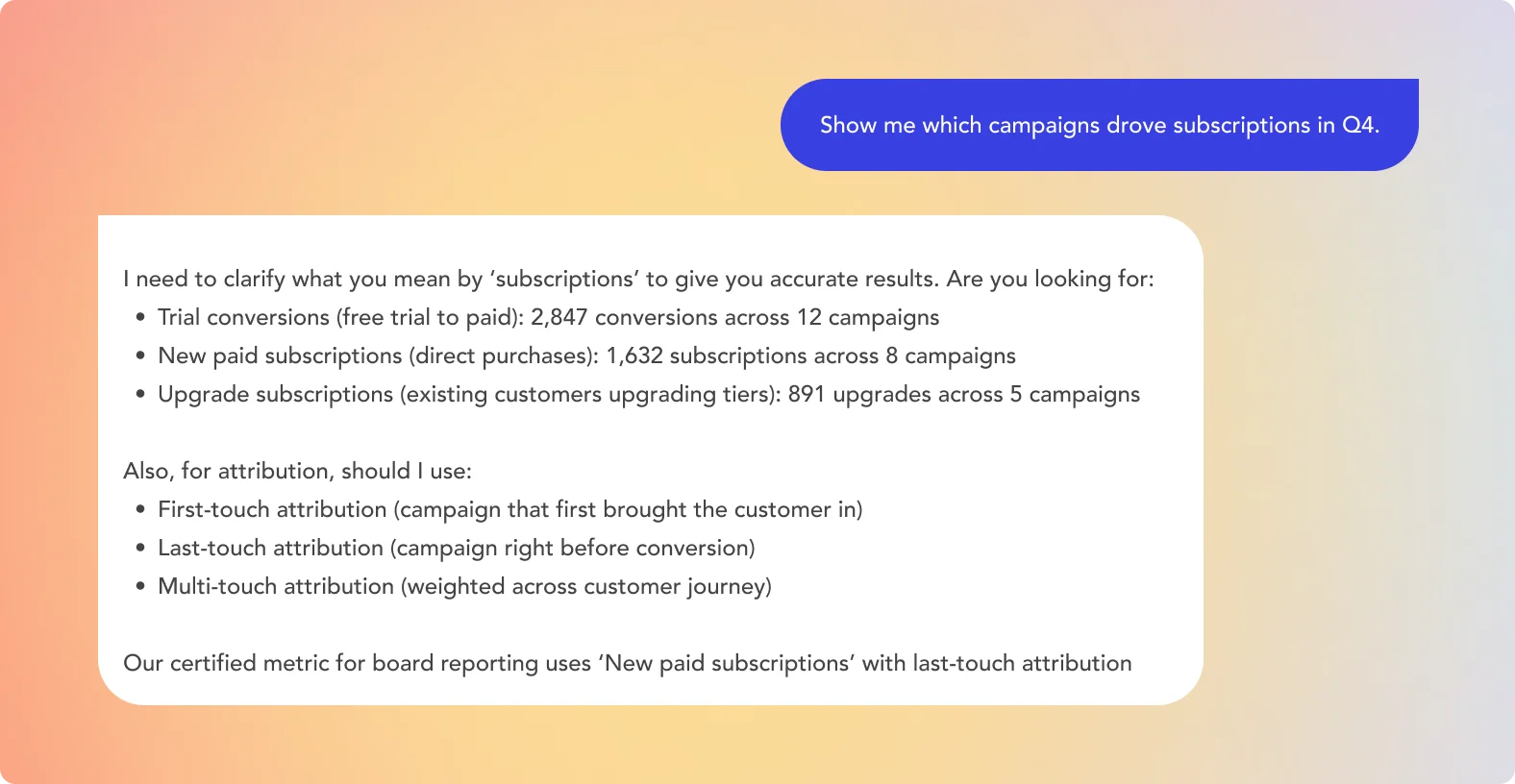

Campaign Performance Analysis for Marketing

The problem: Marketers often can’t get self-serve analytics quickly, so they wait hours or days for analysts to clarify definitions or pull reports, which slows down campaign analysis and decision-making.

The query and response:

Campaign Performance Analysis for Marketing. Source: Atlan.

What you need:

- Clear definitions of leads, trials, subscriptions, and conversions

- Documented campaign attribution models with alignment on which to use when

- Data quality checks on marketing attribution data (ensuring UTM parameters are captured consistently)

- Ownership of campaign taxonomy and naming conventions
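For the data quality check in the third bullet, a sketch like the following could flag campaign URLs whose UTM parameters are missing or fall outside the agreed taxonomy. It uses only Python’s standard `urllib.parse`; the required parameters and the allowed `utm_medium` values are assumptions standing in for your own naming conventions.

```python
from urllib.parse import parse_qs, urlparse

REQUIRED = ("utm_source", "utm_medium", "utm_campaign")
# Hypothetical taxonomy: the utm_medium values your team has agreed to use.
ALLOWED_MEDIUMS = {"email", "cpc", "social", "organic"}


def utm_issues(url: str) -> list[str]:
    """Return the problems with a campaign URL's UTM parameters (empty list if clean)."""
    params = parse_qs(urlparse(url).query)  # blank values are dropped by default
    issues = []
    for key in REQUIRED:
        values = params.get(key)
        if not values or not values[0].strip():
            issues.append(f"missing {key}")
    medium = params.get("utm_medium", [""])[0]
    if medium and medium not in ALLOWED_MEDIUMS:
        # Catches casing and spelling drift such as "Email" or "e-mail".
        issues.append(f"unexpected utm_medium '{medium}'")
    return issues
```

For instance, a URL tagged `utm_medium=Email` with no `utm_campaign` yields `["missing utm_campaign", "unexpected utm_medium 'Email'"]`.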

Starting point: Focus on one channel (e.g., email campaigns) or one campaign type (e.g., product launches) first. Document the 5-10 most commonly asked questions about that channel and ensure the underlying data and definitions support accurate answers.
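Agreeing on which attribution model to use when (the second bullet in the list above) matters because the same customer journey produces different numbers under different models. This sketch compares three common rule-based models, first-touch, last-touch, and linear, on one invented conversion path; the channel names and model choices are illustrative, not a recommendation.

```python
from collections import defaultdict


def attribute(touchpoints: list[str], model: str, value: float = 1.0) -> dict[str, float]:
    """Split one conversion's value across the channels that touched it."""
    if not touchpoints:
        return {}
    credit = defaultdict(float)
    if model == "first_touch":
        credit[touchpoints[0]] += value
    elif model == "last_touch":
        credit[touchpoints[-1]] += value
    elif model == "linear":
        share = value / len(touchpoints)
        for channel in touchpoints:
            credit[channel] += share
    else:
        raise ValueError(f"Unknown attribution model: {model}")
    return dict(credit)


if __name__ == "__main__":
    path = ["social", "email", "email", "cpc"]  # invented journey ending in a trial signup
    for model in ("first_touch", "last_touch", "linear"):
        print(model, attribute(path, model))
    # first_touch {'social': 1.0}
    # last_touch {'cpc': 1.0}
    # linear {'social': 0.25, 'email': 0.5, 'cpc': 0.25}
```

Email gets no credit under first-touch or last-touch but half the credit under linear, which is exactly the kind of discrepancy a documented model choice prevents.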

The Conversational Analytics Experiment Roadmap

To ensure that your conversational analytics experiment is as effective as possible, consider the following implementation phases.

Phase 1: Pick One Use Case to Experiment With

Two of the best practices covered earlier were starting small and pursuing quick wins that are highly viable and low risk. Use that guidance to choose a use case to experiment with.

Is churn analysis taking weeks when it should take days? Is sales forecasting based on outdated models? Look for the necessary but inefficient processes that exist within your organization, and consider one as a starting point.

Working alongside companies like Workday, we’ve been following this approach in Atlan AI Labs. By embedding metadata context and context supply chains, we’ve been able to increase accuracy by over 5x – but the key is starting small.

Make sure the team(s) you plan to work with on this use case are bought in as well. Identify people who are open to change and can champion the initiative to build internal buy-in.

Phase 2: Audit Your Context Layer

The only way to govern what you get out of conversational AI is to know what you’re putting into it.

“While humans can often work around a flawed system, patching together dashboards and making educated guesses, AI will not. It will demand clarity,” said Joe DosSantos of Workday. “This new reality will create gravity around the governed data, making the semantic context layer the single most important asset in the organization.”

Start by auditing the context that exists in your systems, measuring business glossary completeness, semantic layer coverage, and data quality against benchmarks, and then address any gaps. Scoping the audit to your experimental use case will help you avoid getting stuck on this step.
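One way to put numbers on the audit is to compute coverage only for the assets your use case touches. The sketch assumes you can export a simple inventory of those tables and columns (the `Asset` fields and any rows you feed it are hypothetical) and reports what percentage have a glossary term, a semantic-layer definition, an owner, and passing quality checks; the lowest-scoring dimensions show where to close gaps first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """Hypothetical inventory row for a table or column used by the pilot use case."""
    name: str
    has_glossary_term: bool
    in_semantic_layer: bool
    has_owner: bool
    passes_quality_checks: bool


CHECKS = ("has_glossary_term", "in_semantic_layer", "has_owner", "passes_quality_checks")


def audit(assets: list[Asset]) -> dict[str, float]:
    """Return the percentage of assets that satisfy each context check."""
    if not assets:
        return {check: 0.0 for check in CHECKS}
    return {
        check: round(100 * sum(getattr(a, check) for a in assets) / len(assets), 1)
        for check in CHECKS
    }


def gaps(assets: list[Asset], check: str) -> list[str]:
    """List the assets that fail one check, so each gap can be assigned and fixed."""
    return [a.name for a in assets if not getattr(a, check)]
```

After the coverage summary, `gaps(assets, "has_owner")` lists exactly which assets still need an owner assigned.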

Phase 3: Deploy a Limited Pilot

With the use case and context lined up, you’re ready to start your experiment. Work with your internal champion and their team – simply handing off a tool or process will fall flat. For Michael Weiss, AVP of Product Management at Nasdaq, this means embedding with the team to deploy the use case pilot – an approach that he says is not just efficient, but also effective.

“One, you’re getting the team’s buy-in as part of the rollout,” he said during Re:Govern. “And two, we’re not just giving them a set of documentation and hoping that they do it themselves. We’re actually in the weeds with them figuring out the ways they should approach these types of problems.”

In this phase, track metrics such as changes in time-to-insight, query accuracy, and user adoption.
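Those three metrics can be computed from a simple log of pilot questions. This sketch assumes each logged query records who asked it, how many minutes it took to get an answer, and whether a reviewer judged the answer correct, and that you measured a baseline time-to-insight before the pilot; the field names and inputs are hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class PilotQuery:
    """Hypothetical log entry for one question asked during the pilot."""
    user: str
    minutes_to_answer: float
    judged_correct: bool


def pilot_metrics(log: list[PilotQuery], baseline_minutes: float, eligible_users: int) -> dict[str, float]:
    """Summarize time-to-insight change, query accuracy, and adoption for a pilot."""
    if not log or baseline_minutes <= 0 or eligible_users <= 0:
        raise ValueError("Need a non-empty log, a positive baseline, and eligible users.")
    median_minutes = median(q.minutes_to_answer for q in log)
    return {
        # Negative values mean answers now arrive faster than before the pilot.
        "time_to_insight_change_pct": round(100 * (median_minutes - baseline_minutes) / baseline_minutes, 1),
        "query_accuracy_pct": round(100 * sum(q.judged_correct for q in log) / len(log), 1),
        "adoption_pct": round(100 * len({q.user for q in log}) / eligible_users, 1),
    }
```

The sketch uses the median rather than the mean for time-to-insight so that a single stuck request doesn’t distort the result. With four queries taking 10, 20, 30, and 40 minutes against a 100-minute baseline, three judged correct, and three distinct users out of eight eligible, it reports a -75.0% change in time-to-insight, 75.0% accuracy, and 37.5% adoption.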

Phase 4: Iterate Based on Feedback

Tight feedback loops are critical for AI experiments because they let you keep optimizing and building at the pace AI is evolving. Establish communication channels, like dedicated Slack channels, where pilot teams can ask questions, raise breakages, and suggest new use cases. Track all that feedback and incorporate it wherever possible.
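Tracking that feedback can start as simply as tagging each message with the three categories named above (questions, breakages, and new use cases) and comparing counts between iterations. The sketch below uses naive keyword rules as a stand-in for whatever triage your team actually does; the keywords are assumptions, and anything unmatched is routed to a person to label.

```python
from collections import Counter

# Hypothetical keyword rules, checked in order; the first matching category wins.
RULES = [
    ("breakage", ("wrong", "broken", "error", "doesn't match")),
    ("new_use_case", ("could it also", "would be great if", "can we use")),
    ("question", ("how do", "what does", "?")),
]


def triage(message: str) -> str:
    """Assign a feedback message to one category, or 'needs_review' for a human."""
    text = message.lower()
    for category, keywords in RULES:
        if any(k in text for k in keywords):
            return category
    return "needs_review"


def summarize(messages: list[str]) -> Counter:
    """Count feedback by category so trends are visible from one iteration to the next."""
    return Counter(triage(m) for m in messages)
```

Ordering the rules so breakages are checked first means a report like “the number is wrong?” is treated as a breakage rather than a question, which is usually the more urgent reading.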

This phase is ongoing, but over time you may find that teams become more responsive and involved.

“At GitLab, we’re actually starting to see a lot more teams want to participate in active metadata management because they’re seeing the value on the other side,” said Amie Bright, VP of Enterprise Data and Insights at GitLab, during Re:Govern.

Getting Started with Conversational Analytics

There’s no shortage of opinions on AI readiness and advice on where to begin your conversational analytics journey. The bottom line is that without context, any AI initiative is likely to stall at some point, whether in pilot or production.

Starting small and staying practical, with your context documented and aligned, is your best bet for a solid, effective starting point in conversational analytics. From there, you can keep tweaking, iterating, scaling, and collaborating, so that what comes next is as seamless as it is innovative.

To learn more about context engineering for AI Analysts, check out our blog on Context Engineering 101.


Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.