What Is a Semantic Layer? Definition, Types, Components, and Implementation Guide

What is a semantic layer?

A semantic layer is a translation layer that sits between raw data (tables, columns, joins) and business users, mapping technical database structures to user-friendly business concepts.

Think of it as a layer that takes the definition of a business term or metric, and connects it to the code needed to calculate that metric. This helps standardize metric definitions, and enables consistent data interpretation across teams and platforms.

Organizations face three critical challenges that semantic layers address:

- Metric inconsistencies across teams: Different departments define the same metrics differently, creating conflicting analyses. For instance, Marketing calculates customer lifetime value one way, while Finance uses a different formula. These discrepancies erode trust and trigger debates about whose numbers are correct.

- Barriers for technical teams and business users: Data engineers understand SQL, but often don’t have business context. Business analysts lack SQL expertise, but know all the business rules. The disconnect between the two groups creates discrepancies and bottlenecks that delay decision-making.

- Scattered business logic: Maintaining separate metric definitions in each BI tool creates technical debt. As calculations evolve, updating logic across multiple platforms becomes unreliable and time-consuming.

For instance, data warehouses store information in technical schemas with complex relationships and table names like fact_trans_dtl. For business users who need to analyze “monthly recurring revenue by customer segment,” working out that the answer depends on fields like tbl_trans.amt_net and dim_cust.seg_cd is difficult and error-prone.

The semantic layer bridges this context gap by mapping technical structures to business concepts. When an analyst requests “revenue,” the semantic layer automatically queries specific transaction tables, applies appropriate filters, and aggregates values correctly. This translation happens transparently, without requiring SQL expertise.
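As a rough illustration of that translation, the sketch below maps a business term to SQL text. The column names come from the example above; the `cust_id` join key, the `METRICS` and `DIMENSIONS` dictionaries, and the `compile_metric` helper are all hypothetical, and a real semantic layer does considerably more.

```python
# Hypothetical registry mapping business terms to physical SQL fragments.
# Column names come from the example above; the cust_id join key is assumed.
METRICS = {
    "revenue": {
        "expression": "SUM(tbl_trans.amt_net)",
        "source": "tbl_trans JOIN dim_cust ON tbl_trans.cust_id = dim_cust.cust_id",
    },
}
DIMENSIONS = {"customer segment": "dim_cust.seg_cd"}


def compile_metric(metric: str, by: str) -> str:
    """Translate a request like 'revenue by customer segment' into SQL text."""
    definition = METRICS[metric]
    column = DIMENSIONS[by]
    alias = by.replace(" ", "_")
    return (
        f"SELECT {column} AS {alias}, {definition['expression']} AS {metric} "
        f"FROM {definition['source']} GROUP BY {column}"
    )


sql = compile_metric("revenue", by="customer segment")
print(sql)
```

The analyst only ever types the business terms; the physical names live in one place and can change without touching any report.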

With the surge of AI analysts and agents, this translation becomes even more critical. If a human analyst struggles without context, an AI agent has little chance. AI agents rarely say “I don’t know” or ask clarifying questions when they encounter ambiguous definitions; they tend to guess.

Modern data catalogs complement semantic layers by enriching technical metadata with business context. And with research showing that organizations use an average of 3.8 different BI tools, a unified semantic layer prevents each tool from having its own metric definitions, so business concepts are aligned and consistent across the board.

What are the components for a semantic layer?

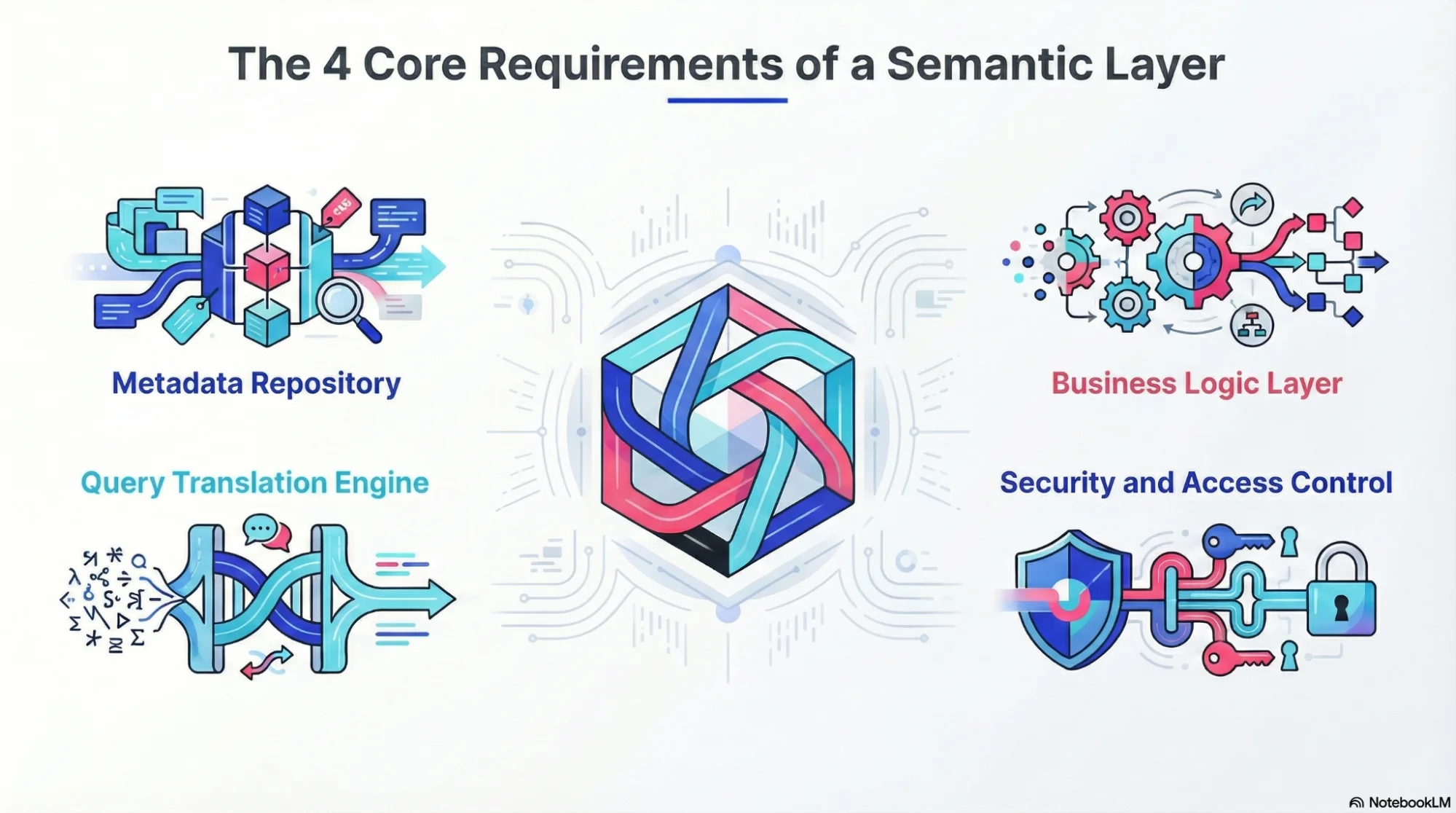

Permalink to “What are the components for a semantic layer?”These are the four core components that covers the requirements of a semantic layer:

What are the components for a semantic layer. Source: Atlan.

1. Metadata repository

A metadata repository stores business definitions, metrics, relationships, and calculation rules. It maps technical database elements to business-friendly names, and maintains the logic that connects terms like “customer lifetime value” to the correct underlying tables, joins, and formulas.

This repository also tracks data lineage, documenting where each metric originates and how transformations occur.
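One way to picture such a repository is a small in-memory registry. This is only a sketch: the `MetricEntry` and `MetadataRepository` classes, the `orders.*` columns, and the sample definition are invented for illustration and do not reflect any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricEntry:
    name: str              # business-friendly name
    description: str       # plain-language definition
    expression: str        # calculation rule
    source_columns: tuple  # physical columns the metric derives from (lineage)


class MetadataRepository:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name.lower()] = entry

    def lookup(self, business_term):
        return self._entries[business_term.lower()]

    def lineage(self, business_term):
        return list(self.lookup(business_term).source_columns)


repo = MetadataRepository()
repo.register(MetricEntry(
    name="Customer lifetime value",
    description="Total net revenue attributed to a customer",  # illustrative only
    expression="SUM(orders.net_amount)",
    source_columns=("orders.net_amount", "orders.customer_id"),
))
print(repo.lineage("customer lifetime value"))
```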

2. Business logic layer

The business logic layer defines calculations, metrics, formulas, and KPIs centrally, rather than scattering them across separate reports. In many systems, like dbt Semantic Layer, Snowflake Cortex, and Looker, this is implemented as YAML/code-based models that tools and agents can call to compute metrics consistently.

The business logic layer handles complex scenarios, including:

- Time-based calculations (year-over-year growth, month-to-date aggregations)

- Currency conversions and exchange rate handling

- Hierarchical rollups (product categories, organizational structures)

- Custom formulas specific to business operations

Centralizing this logic ensures that when a metric definition changes, the update propagates automatically to all downstream consumers.
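To make the idea of centralized logic concrete, here is a minimal sketch of a year-over-year growth calculation defined once and looked up by name. The `BUSINESS_LOGIC` registry and the revenue figures are made up for the example.

```python
def year_over_year_growth(current: float, previous: float) -> float:
    """Fractional growth versus the same period one year earlier."""
    if previous == 0:
        raise ValueError("previous-period value is zero; growth is undefined")
    return (current - previous) / previous


# One shared registry: every report and agent calls the same function.
BUSINESS_LOGIC = {"yoy_growth": year_over_year_growth}

revenue_by_year = {2023: 1_200_000.0, 2024: 1_500_000.0}  # made-up figures
growth = BUSINESS_LOGIC["yoy_growth"](revenue_by_year[2024], revenue_by_year[2023])
print(f"{growth:.1%}")
```

If the definition of growth changes, only `year_over_year_growth` is edited, and every caller picks up the new behavior.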

3. Query translation engine

The query translation engine converts business requests into optimized SQL or other query languages. It handles tasks like pushing down filters and joins, mapping logical fields to physical columns, and supporting multiple backends without changing business definitions.

In practice, when a user asks for “Q3 revenue by region,” the engine:

- Identifies relevant tables and joins

- Applies appropriate filters and aggregations

- Optimizes query performance

- Routes requests to appropriate data sources

This component manages the complexity that would otherwise require technical expertise from every analyst.
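The steps above can be sketched with Python's built-in `sqlite3` module. Everything here is an assumption made for the example: the `transactions` and `geography` tables, their columns, and the `translate` function. It skips optimization and routing entirely and only shows the mapping, join, filter, and aggregation steps.

```python
import sqlite3

# Logical-to-physical mappings (all table and column names are hypothetical).
MEASURES = {"revenue": "SUM(t.amount)"}
DIMENSIONS = {"region": "g.region_name"}
QUARTERS = {"Q1": ("01", "03"), "Q2": ("04", "06"), "Q3": ("07", "09"), "Q4": ("10", "12")}


def translate(measure, dimension, quarter, year):
    """Build parameterized SQL for a request such as 'Q3 revenue by region'."""
    first_month, last_month = QUARTERS[quarter]
    sql = (
        f"SELECT {DIMENSIONS[dimension]} AS {dimension}, {MEASURES[measure]} AS {measure} "
        "FROM transactions t JOIN geography g ON t.geo_id = g.geo_id "
        "WHERE strftime('%Y', t.sold_on) = ? AND strftime('%m', t.sold_on) BETWEEN ? AND ? "
        f"GROUP BY {DIMENSIONS[dimension]} ORDER BY {DIMENSIONS[dimension]}"
    )
    return sql, (str(year), first_month, last_month)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE geography (geo_id INTEGER PRIMARY KEY, region_name TEXT);
    CREATE TABLE transactions (geo_id INTEGER, sold_on TEXT, amount REAL);
    INSERT INTO geography VALUES (1, 'EMEA'), (2, 'APAC');
    INSERT INTO transactions VALUES
        (1, '2024-07-15', 100.0), (1, '2024-08-01', 50.0),
        (2, '2024-09-30', 70.0),  (2, '2024-10-01', 999.0);
""")
sql, params = translate("revenue", "region", "Q3", 2024)
rows = conn.execute(sql, params).fetchall()
print(rows)
```

The October transaction falls outside Q3, so it is filtered out before aggregation.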

4. Security and access control

Security and access control are enforced at the semantic level, rather than requiring configuration in each individual tool. This is the data governance envelope around the semantic layer that determines who can see and query which entities and metrics, according to underlying policies. It also helps track data quality and data lineage.

The semantic layer implements:

- Row-level security based on user attributes

- Column-level permissions for sensitive data

- Audit logging of data access

- Compliance with regulatory requirements

With this structure, organizations can define security policies once and enforce them consistently across all analytics tools and applications, avoiding gaps and inconsistencies.
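A simplified sketch of "define once, enforce everywhere" might look like the following. The `region` and `can_view_pii` user attributes, the `SENSITIVE_COLUMNS` policy, and the `apply_policies` function are hypothetical; production systems usually push these rules down into the query rather than filtering results in memory.

```python
SENSITIVE_COLUMNS = {"email"}  # hypothetical column-level policy


def apply_policies(rows, user):
    """Return only the rows and column values this user is allowed to see."""
    visible = []
    for row in rows:
        # Row-level security: users see their own region unless granted "ALL".
        if user["region"] != "ALL" and row["region"] != user["region"]:
            continue
        # Column-level security: mask sensitive values for users without PII access.
        visible.append({
            col: "***" if col in SENSITIVE_COLUMNS and not user["can_view_pii"] else value
            for col, value in row.items()
        })
    return visible


rows = [
    {"region": "EMEA", "email": "a@example.com", "revenue": 100},
    {"region": "APAC", "email": "b@example.com", "revenue": 250},
]
analyst = {"region": "EMEA", "can_view_pii": False}
result = apply_policies(rows, analyst)
print(result)
```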

What are the types of semantic layers?

1. Logical semantic layer

A logical semantic layer defines business metrics and dimensions as abstract concepts without physically storing or moving data. It maps business terminology to underlying data structures through metadata and rules, allowing queries to be translated at runtime.

Example: When an analyst queries “quarterly revenue by region,” the logical semantic layer translates this into the correct SQL joins across customer, transaction, and geography tables without creating a new physical dataset.

Benefits:

- Storage efficiency: No data duplication since definitions exist as metadata, not physical tables

- Real-time accuracy: Queries always fetch current data from source systems rather than working with stale copies

- Agility: Business logic changes immediately reflect across all consuming tools without data pipeline updates

- Governance simplicity: Access controls and policies apply directly at the source rather than managing copies

Best for: Real-time needs, distributed data.

2. Physical semantic layer

A physical semantic layer materializes business logic into actual database objects like tables, views, or cubes. It pre-aggregates metrics and pre-joins dimensions, storing computed results for faster query performance.

Example: A nightly job creates a “revenue_by_region_monthly” table with pre-calculated aggregations, so dashboards query this optimized physical structure instead of scanning millions of raw transactions.

Benefits:

- Query performance: Pre-computed aggregations deliver sub-second response times for complex metrics

- Cost optimization: Reduces compute costs by calculating metrics once rather than on every query

- Predictable latency: Users experience consistent performance since data is already prepared

- Complexity abstraction: Business users interact with simplified structures rather than navigating complex source schemas

Best for: Performance-critical workloads, predictable queries.
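The nightly job from the example can be approximated with `sqlite3`. The `transactions` table and its columns are invented for this sketch, and a real deployment would use the warehouse's own scheduler and materialized views rather than a Python function.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE transactions (region TEXT, sold_on TEXT, amount REAL);
    INSERT INTO transactions VALUES
        ('EMEA', '2024-07-15', 100.0), ('EMEA', '2024-07-20', 50.0),
        ('APAC', '2024-08-02', 70.0);
""")


def refresh_revenue_by_region_monthly(conn):
    """Rebuild the pre-aggregated table; a scheduler would call this nightly."""
    with conn:  # commit on success, roll back on error
        conn.execute("DROP TABLE IF EXISTS revenue_by_region_monthly")
        conn.execute("""
            CREATE TABLE revenue_by_region_monthly AS
            SELECT region,
                   strftime('%Y-%m', sold_on) AS month,
                   SUM(amount) AS revenue
            FROM transactions
            GROUP BY region, strftime('%Y-%m', sold_on)
        """)


refresh_revenue_by_region_monthly(conn)
# Dashboards read the small pre-computed table instead of scanning raw transactions.
summary = conn.execute(
    "SELECT region, month, revenue FROM revenue_by_region_monthly ORDER BY region"
).fetchall()
print(summary)
```

The trade-off is freshness: rows inserted after the refresh do not appear until the next rebuild.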

3. Hybrid semantic layer

A hybrid semantic layer combines logical and physical approaches, using materialized views for frequently accessed metrics while maintaining logical definitions for dynamic queries. It balances performance optimization with flexibility.

Example: Core KPIs like “monthly active users” exist as physical tables updated daily, while ad-hoc exploratory queries like “users who viewed feature X but didn’t complete action Y” execute logically against live data.

Benefits:

- Adaptive performance: Hot paths get physical optimization while long-tail queries remain flexible

- Resource efficiency: Materializes only high-value metrics rather than pre-computing every possible combination

- Freshness trade-offs: Teams choose appropriate refresh cadences based on business requirements

- Incremental optimization: Organizations can start logically and selectively materialize based on usage patterns

Best for: Mixed workloads, balancing freshness and performance.
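The "start logically, then materialize based on usage" idea can be sketched as a small router. The `HybridRouter` class and its `materialize_after` threshold are assumptions for illustration; a real system would trigger a build job instead of just recording the metric name.

```python
from collections import Counter


class HybridRouter:
    """Serve a metric logically until it is requested often enough to materialize."""

    def __init__(self, materialize_after=3):
        self.materialize_after = materialize_after
        self.hits = Counter()
        self.materialized = set()

    def route(self, metric):
        self.hits[metric] += 1
        if metric in self.materialized:
            return "physical"
        if self.hits[metric] >= self.materialize_after:
            # A real system would schedule a materialization job here.
            self.materialized.add(metric)
        return "logical"


router = HybridRouter(materialize_after=3)
paths = [router.route("monthly_active_users") for _ in range(4)]
print(paths)
```

The third request crosses the threshold, so the fourth and later requests are served from the physical copy, while rarely used metrics stay logical.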

4. Data virtualization layer

A data virtualization layer provides unified access to distributed data sources through a single query interface without physically moving or copying data. It federates queries across multiple systems in real-time, handling translation between different data models and protocols.

Example: A business intelligence tool queries customer data spanning Salesforce, Snowflake, and MongoDB through one virtualization layer, which routes sub-queries to each system, combines results, and returns unified data.

Benefits:

- Zero data movement: Eliminates ETL pipelines and storage costs for integrated views

- Reduced data latency: Provides immediate access to new data sources without waiting for batch loads

- Source system preservation: Leaves data in systems optimized for their specific workloads

- Simplified architecture: Reduces the number of copies and synchronization points that create governance complexity

Best for: Heterogeneous environments, legacy integration.
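In miniature, federation means sending a sub-query to each system and joining the results on the fly. The two `fetch_*` functions below are stand-ins that return hard-coded rows; they do not call Salesforce or Snowflake, and the field names are invented.

```python
def fetch_crm_accounts():
    """Stand-in for a CRM API call (for example, Salesforce)."""
    return [{"customer_id": 1, "name": "Acme"}, {"customer_id": 2, "name": "Globex"}]


def fetch_warehouse_revenue():
    """Stand-in for a warehouse query (for example, Snowflake)."""
    return [{"customer_id": 1, "revenue": 1200.0}]


def federated_customer_view():
    """Route a sub-query to each source, then join the results in memory."""
    revenue_by_id = {r["customer_id"]: r["revenue"] for r in fetch_warehouse_revenue()}
    return [
        {**account, "revenue": revenue_by_id.get(account["customer_id"], 0.0)}
        for account in fetch_crm_accounts()
    ]


view = federated_customer_view()
print(view)
```

Nothing is copied into a new store; the combined view exists only for the duration of the request, which is also why the slowest source bounds the response time.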

5. Universal semantic layer

Universal semantic layers operate as standalone platforms that sit between data sources and consumption layers. Instead of being tied to a single BI tool, they act as a central hub for business logic across tools and use cases (BI, apps, AI agents).

Gartner recognizes the universal semantic layer as “a key component to unify data across increasingly diverse use cases,” particularly for organizations with complex data ecosystems.

Benefits include:

- Centralized governance across the entire organization

- Flexibility to support multiple BI tools simultaneously

- Consistent definitions, regardless of consumption method

- Vendor-neutral architecture that adapts as tools evolve

Still, universal semantic layers often require additional infrastructure investments and integration efforts from data teams. Therefore, they work best for enterprises managing multiple BI platforms, preparing for AI adoption, or requiring stringent governance across departments.

6. BI tool-embedded semantic layer

Embedded semantic layers live within specific BI platforms like Tableau, Looker, or Power BI. They define dimensions, measures, and sometimes row-level security natively within that specific tool.

Benefits include:

- Tight integration with visualization capabilities

- Easy setup with no separate infrastructure

- Optimized performance within the platform

- Familiar interface for existing tool users

The tradeoffs for BI-embedded semantic layers center on isolation and lock-in: definitions created in one tool remain unavailable to other platforms. Teams using multiple BI tools duplicate logic across systems, reintroducing the consistency problems semantic layers solve.

This approach is best for organizations standardized on a single BI platform with limited need for cross-tool analytics or AI integrations.

7. Data warehouse semantic layer

Data warehouse semantic layers define semantics directly in the data platform, not in a separate metrics service or BI tool. They use native database features like views, materialized views, and OLAP cubes to provide business abstractions directly within the storage layer.

Examples of data warehouse-native semantic layers are Snowflake Cortex semantic models/views, or semantic YAML that lives alongside dbt models and is executed in the warehouse.

Benefits include:

- Performance optimization through pre-aggregation

- Minimal additional infrastructure

- Tight coupling with existing data architecture

- Familiar SQL-based development for data engineers

However, data warehouse semantic layers offer limited flexibility and are dependent on warehouses like Snowflake, BigQuery, and Databricks. Business users often still need SQL knowledge to navigate views effectively, and changes to warehouse schemas directly impact semantic definitions.

For organizations with warehouse-centric architectures, strong SQL skills across teams, and modest self-service requirements, the data warehouse semantic layer is often the best option.

Here’s a comparison table for the semantic layer types:

| Type | Purpose | When to use | Pros | Cons |
|---|---|---|---|---|
| Logical semantic layer | Maps business concepts to data structures through metadata without physical storage | Real-time analytics needs, distributed data sources, frequently changing business logic | No data duplication; Always current data; Instant logic updates; Simple governance at source | Slower query performance; Higher compute costs per query; Depends on source system availability; Complex queries can timeout |
| Physical semantic layer | Pre-computes and stores aggregated metrics as database objects | Performance-critical dashboards, predictable reporting patterns, high query volumes | Fast query response times; Lower compute costs per query; Predictable performance; Simplified data structures | Storage overhead from duplication; Data freshness lag; Pipeline maintenance burden; Logic changes require rebuilds |
| Hybrid semantic layer | Combines physical materialization for hot paths with logical queries for flexibility | Mixed workloads with both standard reports and exploratory analysis | Optimized for common queries; Flexible for ad-hoc needs; Efficient resource usage; Gradual optimization path | Architecture complexity; Cache invalidation challenges; Requires usage monitoring; Mixed freshness levels |
| Data virtualization layer | Federates queries across multiple systems without data movement | Heterogeneous environments, legacy system integration, zero-copy architectures | No ETL pipelines needed; Immediate new source access; Data stays optimized at source; Reduced storage costs | Network latency impacts performance; Limited by slowest source; Complex joins across systems; Licensing costs for virtualization tools |
| Universal semantic layer | Central hub for business logic across all tools and use cases | Multi-BI tool environments, AI/ML initiatives, enterprise-wide governance | Single source of truth; Vendor-neutral definitions; Supports diverse use cases; Centralized governance | Additional infrastructure required; Integration effort across tools; Potential single point of failure; Requires dedicated management |
| BI tool-embedded semantic layer | Defines metrics natively within a specific BI platform | Single BI tool standardization, quick setup needs, platform-specific optimizations | Tight platform integration; No separate infrastructure; Optimized for tool capabilities; Familiar development interface | Vendor lock-in; Definitions siloed per tool; Duplicated logic across platforms; Limited AI/cross-tool support |
| Data warehouse semantic layer | Uses native database features for business abstractions within the storage layer | Warehouse-centric architectures, SQL-proficient teams, minimal infrastructure budgets | Native performance optimization; No additional infrastructure; Familiar SQL development; Tight data platform coupling | Warehouse vendor dependency; Requires SQL knowledge; Schema changes impact semantics; Limited self-service capabilities |

Key insight: Organizations often layer multiple approaches based on workload characteristics. Core KPIs might use physical materialization, exploratory queries stay logical, and cross-platform access leverages universal layers—all connected through consistent business definitions.

Business use cases for semantic layers

While AI is pushing the conversation on semantic layers, they serve a broad range of use cases that drive enterprise data value.

1. Consistent business intelligence

Semantic layers eliminate the “which number is right?” debates that confuse and delay data analysis and insights. When Marketing and Finance both request “Q3 revenue,” they receive identical results because both query the same centralized definition. Apply this across departments, and everyone in the organization starts speaking the same language.

Semantic layer consistency extends beyond simple metrics, to complex calculations involving:

- Customer segmentation

- Product hierarchies

- Time period comparisons

- Multi-currency transactions

All follow uniform logic regardless of who generates the report or which tool displays the results. This can dramatically reduce reconciliation time and result in fewer escalations caused by conflicting data.

2. Self-service analytics enablement

Semantic layers enable self-service data use, so business analysts can access data independently without having to understand database schemas or write SQL. This relieves bottlenecks and makes business users more productive.

For instance, an analyst can drag “product category” and “gross margin” into a visualization tool, and the semantic layer automatically:

- Identifies required tables and joins

- Applies proper aggregation methods

- Enforces security restrictions

- Delivers accurate results

Analysts who previously waited days for custom queries now generate insights immediately, while maintaining governance standards.

3. AI and machine learning enablement

Large language models (LLMs) grounded in semantic layers produce dramatically more accurate results. When AI agents access structured business logic and active metadata rather than raw database schemas, they gain the context needed to aggregate the right data, which helps prevent hallucinations.

The need for semantic layers in AI and ML has never been more urgent. As AI agents increasingly augment or take over human analysts’ workloads, they need to understand what those humans do.

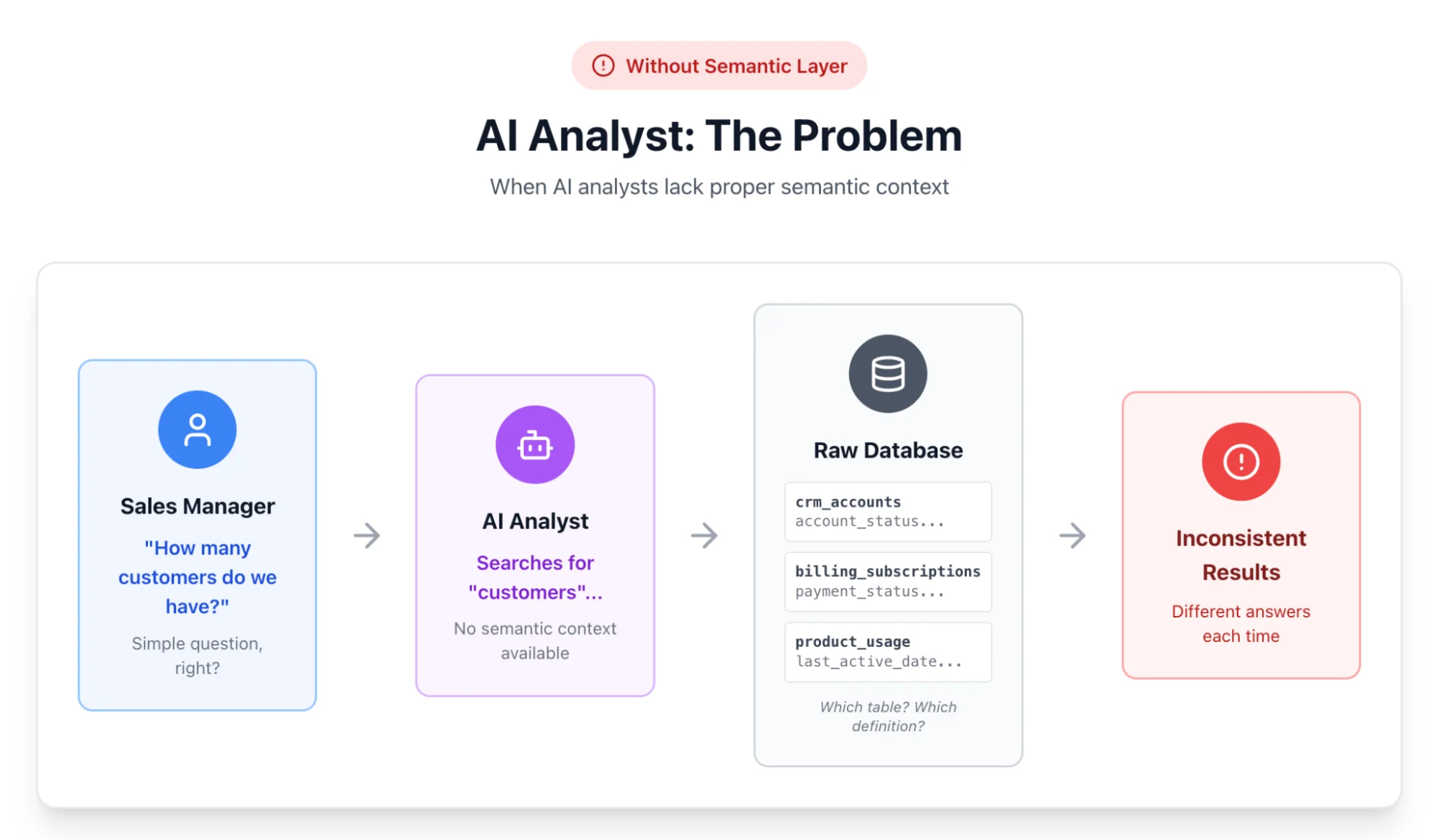

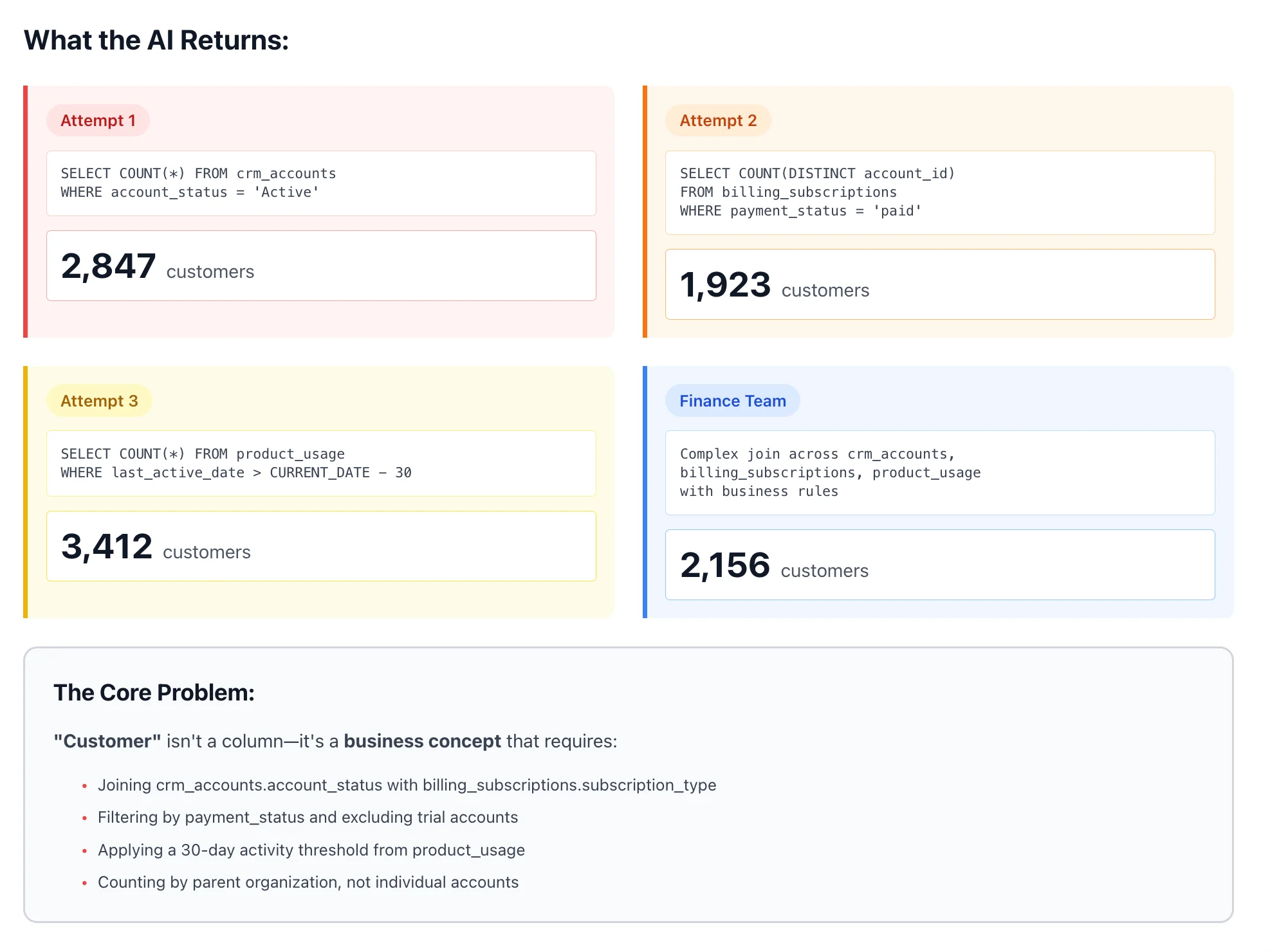

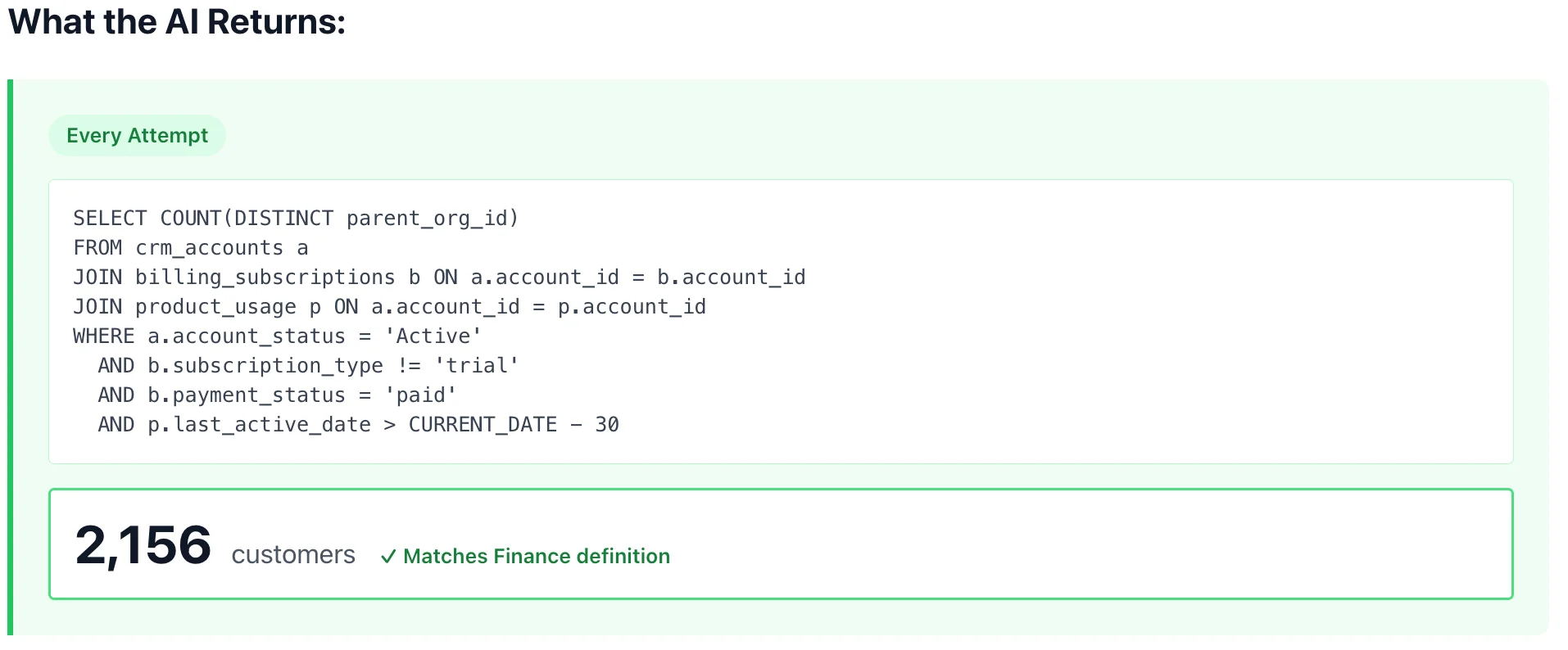

Here’s the problem: When a Sales Manager asks “how many customers do we have,” a human analyst will know all the ways in which the definition of customer may show up in tables and columns, but an AI analyst without proper semantic context will struggle or fail completely.

AI Analyst - without semantic layer - the problem. Source: Atlan.

AI Analyst - without semantic layer - the problem - what the AI returns. Source: Atlan.

The core problem is that “Customer” isn’t a column – it’s a business concept that requires:

- Joining crm_accounts.account_status with billing_subscriptions.subscription_type

- Filtering by payment_status and excluding trial accounts

- Applying a 30-day activity threshold from product_usage

- Counting by parent organization, not individual account

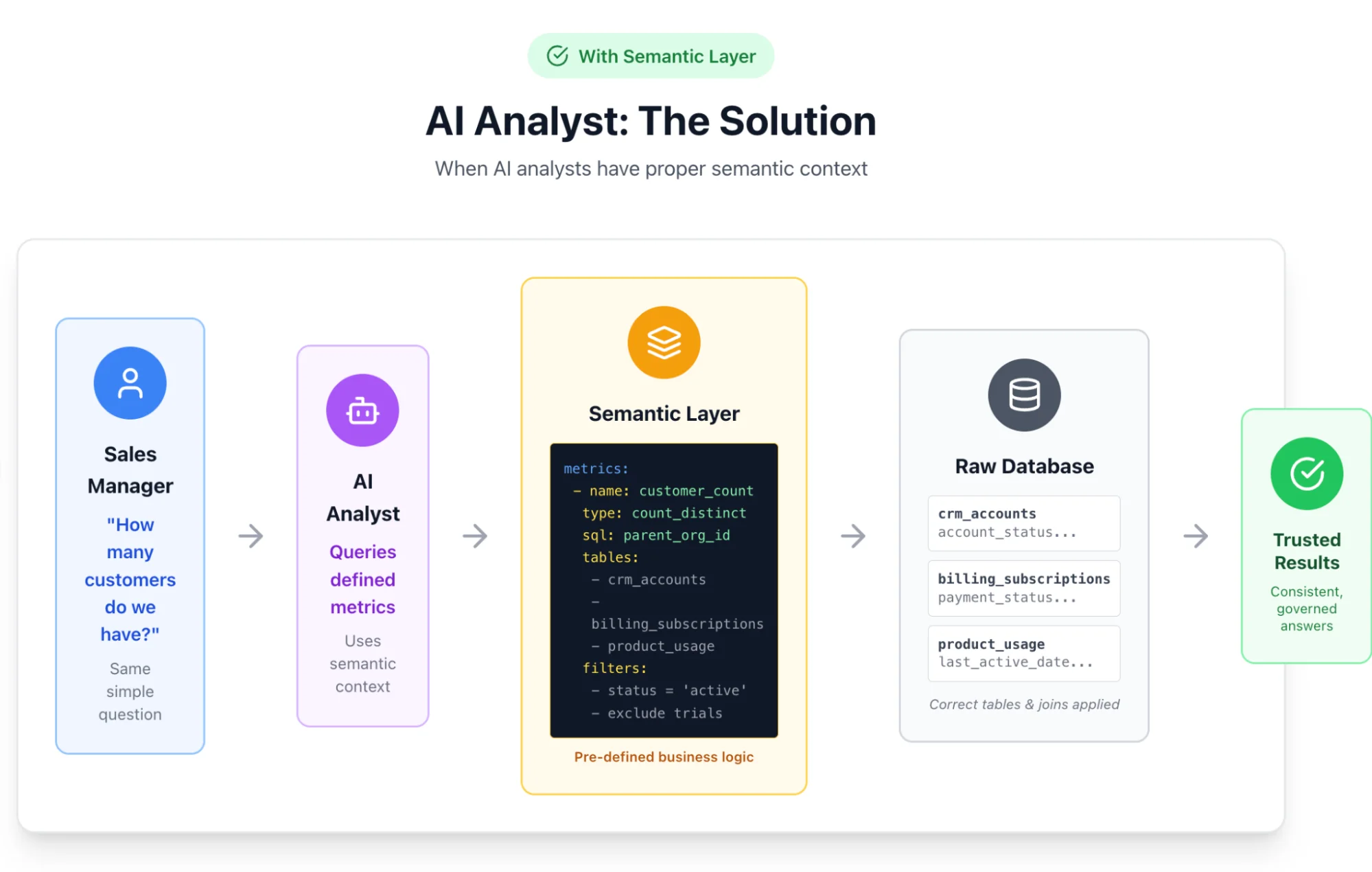

But AI agents don’t intuitively understand business context or ask clarifying questions. The semantic layer encodes this knowledge, providing machine-readable business context that helps AI understand:

- Which fields relate to which business concepts

- How to correctly calculate metrics

- What constraints and validation rules apply

- Which data combinations make logical sense
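The “customer” definition described above can be written down as one governed function. This is a sketch under stated assumptions: the record layout, the status values (`"active"`, `"paid"`, `"trial"`), and the `count_customers` helper are invented, with each field standing in for the source table named in the list.

```python
from datetime import date, timedelta


def count_customers(accounts, today):
    """Count distinct parent organizations with a paid, non-trial, recently active account."""
    cutoff = today - timedelta(days=30)
    parents = {
        a["parent_org"]
        for a in accounts
        if a["account_status"] == "active"     # from crm_accounts
        and a["subscription_type"] != "trial"  # from billing_subscriptions
        and a["payment_status"] == "paid"
        and a["last_active"] >= cutoff         # from product_usage
    }
    return len(parents)


today = date(2025, 1, 31)


def account(parent, sub_type, days_ago):
    """Build a sample record; all values are made up."""
    return {"parent_org": parent, "account_status": "active", "subscription_type": sub_type,
            "payment_status": "paid", "last_active": today - timedelta(days=days_ago)}


accounts = [
    account("Acme", "annual", 3),
    account("Acme", "monthly", 10),    # same parent organization as above
    account("Globex", "trial", 1),     # excluded: trial account
    account("Initech", "annual", 45),  # excluded: no activity in the last 30 days
]
print(len(accounts), count_customers(accounts, today))
```

A naive row count reports four customers; the governed definition reports one. That gap is the kind of error an agent makes when it only sees raw tables.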

AI Analyst - with semantic layer - the solution. Source: Atlan.

AI Analyst - with semantic layer - the solution - what the AI returns. Source: Atlan.

Research shows that semantic layers and structured definitions reduce LLM hallucinations by over 50%. Text-to-SQL implementations leveraging semantic context achieve accuracy rates approaching 99.8%, compared to substantially lower rates without semantic grounding.

And the effects are compounding: more accurate AI responses improve trust in the models, which in turn helps drive adoption and value.

4. Embedded analytics for applications

Rather than forcing customers to export data to external BI tools, modern SaaS products deliver analytics directly within the application itself. However, multi-tenant environments, where hundreds or thousands of customers share the same application infrastructure, present unique challenges: each customer needs analytics on their own data, but maintaining separate metric definitions for every tenant creates unsustainable complexity.

That’s where product teams embedding analytics into customer-facing applications benefit from semantic layer abstractions. Multi-tenant SaaS products use semantic layers to:

- Abstract customer-specific schema variations

- Provide consistent metric definitions across tenants

- Scale analytics capabilities without multiplying maintenance effort

- Deliver reliable product analytics dashboards

This approach allows application developers to focus on user experience rather than complex data access logic. The result is faster feature development, more reliable analytics for customers, and predictable infrastructure costs as tenant counts grow.

Industry-specific use cases for semantic layers

Finance: Regulatory reporting consistency, risk metric standardization, fraud detection accuracy

Financial institutions use semantic layers to ensure regulatory reports pull from identical metric definitions across jurisdictions. When both the U.S. SEC and European regulators request “total credit exposure,” the semantic layer guarantees consistent calculations regardless of reporting format or consumption tool.

Key applications:

- Risk standardization: Unified value-at-risk calculations across trading desks eliminate audit discrepancies

- Fraud detection: Semantic grounding reduces AI false positives by over 40% compared to raw schema models

- Cross-border compliance: Single definitions serve multiple regulatory frameworks simultaneously

Healthcare: Patient data governance, clinical trial metrics, HIPAA-compliant analytics

Healthcare organizations leverage semantic layers to maintain patient data governance across fragmented EHR systems, research databases, and clinical applications. A unified semantic definition of “patient readmission within 30 days” ensures care quality metrics remain consistent whether accessed through operational dashboards, research portals, or payer reports.

Key applications:

- Clinical trials: Standardized endpoint definitions across multi-site studies reduce protocol deviations and accelerate FDA submissions

- Privacy automation: De-identification rules and access policies embedded in metric definitions ensure AI agents automatically enforce HIPAA controls

- Quality metrics: Consistent care measurements across departments and facilities support outcome improvements

Retail: Inventory accuracy, customer 360, omnichannel consistency

Retailers deploy semantic layers to reconcile inventory counts across warehouses, stores, and fulfillment centers into a single “available to promise” metric that e-commerce, point-of-sale, and allocation systems all trust.

Key applications:

- Customer 360: Unified purchase history, browsing behavior, and service interactions create consistent lifetime value calculations across marketing, finance, and operations

- Omnichannel metrics: Standardized “conversion rate” and “cart abandonment” definitions across web, mobile, and in-store channels

- Inventory optimization: Real-time visibility prevents stockouts and reduces excess inventory holding costs

Manufacturing: Supply chain visibility, quality metrics, predictive maintenance

Manufacturing operations use semantic layers to create consistent supply chain visibility metrics across procurement, production, and distribution systems. When executives query “on-time delivery rate,” the semantic layer ensures identical definitions whether data comes from ERP, MES, or logistics platforms.

Key applications:

- Global quality standards: Six Sigma metrics and defect calculations standardized across worldwide facilities eliminate plant-by-plant variations

- Predictive maintenance: AI models learn from consistent sensor data and failure definitions rather than reconciling incompatible structures

- Root cause analysis: Unified metrics across production lines reveal true patterns obscured by inconsistent measurements

Telecom/Energy: Network performance, usage analytics, regulatory compliance

Telecommunications and energy companies leverage semantic layers to standardize network performance metrics across infrastructure types and geographies. A unified definition of “service availability” ensures consistency whether measuring cellular networks, fiber connections, or power grid segments.

Key applications:

- Revenue forecasting: Reconciled billing data, network telemetry, and customer activity create trusted consumption patterns for capacity planning

- Automated compliance: FCC and energy commission requirements embedded in metric definitions eliminate manual validation

- Performance monitoring: Consistent SLA metrics across technology generations and vendor equipment support fair customer billing

4-step guide to implementing a semantic layer

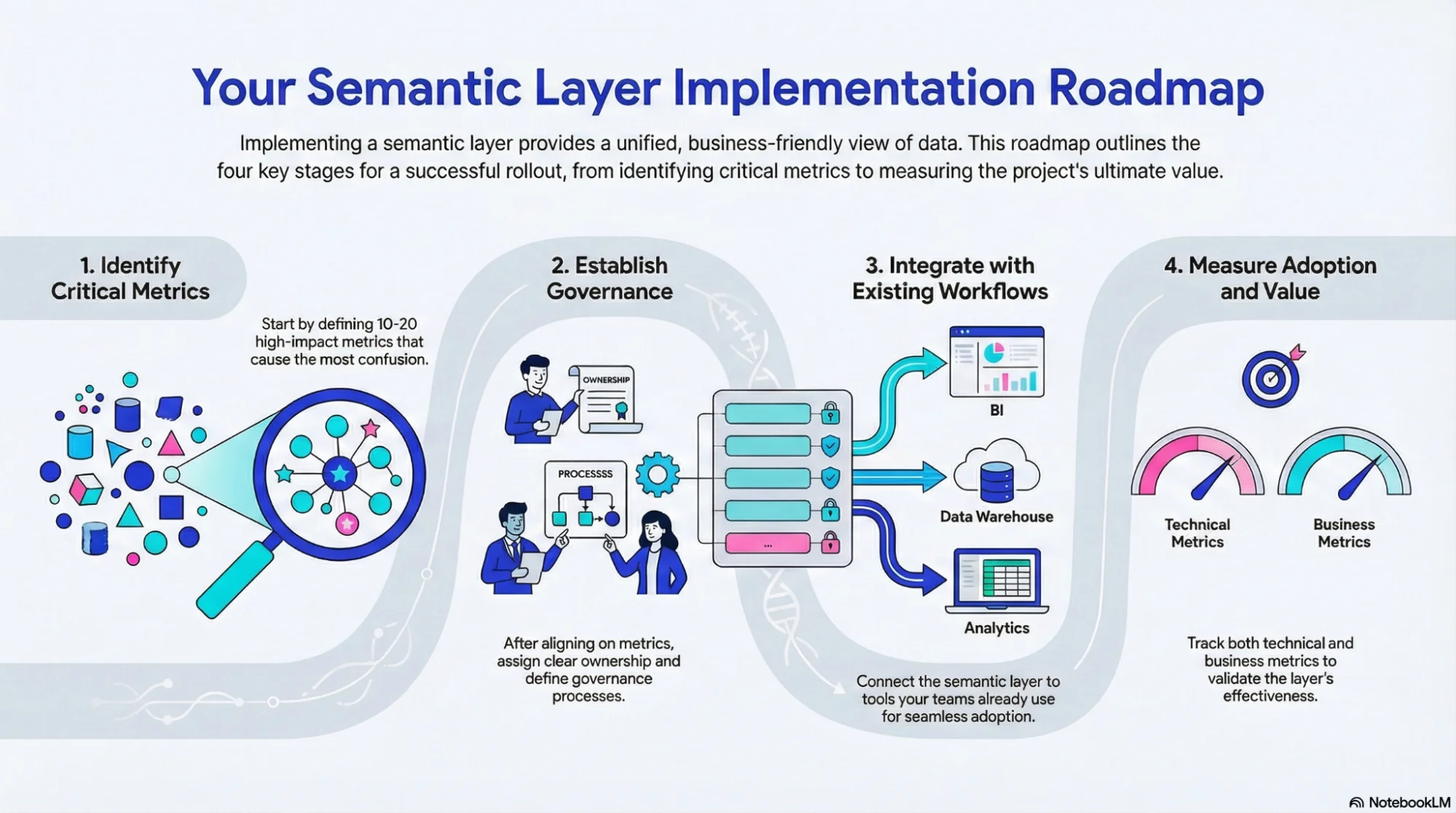

Guide to implementing a semantic layer. Source: Atlan.

1. Start with critical metrics

Identify 10-20 high-impact metrics that cause the most confusion or require frequent clarification. Focus on those that:

- Appear in executive dashboards or board presentations

- Generate “which number is right?” debates

- Exist across multiple departments or tools

- Drive key business decisions

Document how different teams currently calculate these metrics in order to understand discrepancies. That will help prioritize which definitions need standardization first, and reveal the scale of consistency problems.

2. Establish governance processes

Governance starts with accountability. After you’ve aligned on metrics, define clear ownership for them:

- Business stakeholders own metric definitions and approve changes

- Technical owners implement and maintain calculations

- Data governance teams oversee approval workflows

At this step, it’s important to create change management processes that balance agility with control. Organizations need to update definitions as business operations evolve without introducing chaos. Version control for metric definitions helps track changes and enables rollback if issues emerge.

Platforms like Atlan centralize metric and semantic definitions with built-in workflows, ownership, and approvals, so teams can evolve business logic quickly while maintaining clear governance and guardrails. Automated version history for terms, metrics, and semantic models allows organizations to track every change, compare revisions, and safely roll back if a new definition introduces issues.
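The version-and-rollback idea can be shown in a few lines. This is a generic sketch, not Atlan's API or any vendor's: the `VersionedMetric` class, the sample definitions, and the author names are all hypothetical.

```python
class VersionedMetric:
    """Keep every revision of a metric definition so changes can be undone."""

    def __init__(self, name, definition, author):
        self.name = name
        self.history = [(definition, author)]

    @property
    def current(self):
        return self.history[-1][0]

    def update(self, definition, author):
        self.history.append((definition, author))

    def rollback(self):
        if len(self.history) == 1:
            raise ValueError("no earlier version to roll back to")
        self.history.pop()
        return self.current


metric = VersionedMetric("active_users", "users active in the last 30 days", "finance")
metric.update("users active in the last 7 days", "marketing")
print(metric.current)
metric.rollback()  # the new definition caused issues, so restore the previous one
print(metric.current)
```

In practice many teams get the same effect by keeping metric definitions in files under Git and reviewing changes through pull requests.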

3. Integrate with existing workflows

Connect the semantic layer to tools teams already use, rather than forcing them to adopt entirely new platforms. Prioritize integrations based on:

- User adoption rates of current tools

- Business impact of consistent metrics in each tool

- Technical complexity of the integration

For example, organizations using dbt can integrate semantic models directly into their transformation workflows; BI tools consume definitions through native connectors or API calls; and modern data catalogs provide integration patterns for various technology stacks.

4. Measure adoption and value

Track both technical and business metrics to validate semantic layer effectiveness. Be sure to record baseline metrics in order to understand progress.

Metrics to prioritize include:

- Usage statistics showing which metrics get queried most frequently

- Reduction in “which number is right?” support requests

- Time saved on report reconciliation

- Increase in self-service query volume

- Decrease in data team bottleneck complaints

Quantify business impact by measuring:

- Faster decision cycles due to trusted data

- Reduced time spent investigating metric discrepancies

- Increased analyst productivity through self-service access

- Improved AI/ML model accuracy with grounded context

Organizations typically see measurable improvements within four to eight weeks for initial metric sets, with broader value accumulation as more teams adopt the semantic layer.

Post-implementation KPI framework

| KPI Category | Metric | Target | What It Measures | Review Cadence |
|---|---|---|---|---|
| Consistency & Trust | “Which number is right?” support tickets | 60-80% reduction within 6 months | Alignment on metric definitions across teams | Monthly |
| Consistency & Trust | Certified metrics coverage | 80% of high-impact metrics certified in year 1 | Percentage of metrics with formal ownership and approval | Monthly |
| Self-Service Adoption | Self-serve query volume | 40-60% increase from business users | Successful abstraction of technical complexity | Monthly |
| Self-Service Adoption | Time-to-insight | 50-70% decrease in question-to-answer time | Speed from initial request to delivered insight | Monthly |
| Technical Performance | Cache hit rate | 70-85% for production dashboards | Queries served from cache vs. executed against sources | Weekly |
| Technical Performance | Query response time (P95) | Sub-second for 90% of standard metrics | Latency performance and optimization needs | Weekly |
| AI & Analytics | AI agent query accuracy | 85-95% correct responses without human help | Natural language queries producing intended results | Monthly |
| AI & Analytics | Model training efficiency | 30-50% reduction in feature engineering time | Time saved using semantic definitions vs. custom prep | Quarterly |
| Governance | Downstream impact visibility | 100% of changes with automated impact analysis | Affected assets identified before metric updates deploy | Monthly |
| Governance | Access policy enforcement | Zero unauthorized access violations | Row-level and column-level security applied correctly | Weekly |

Quick health check: Traffic light indicators

| Health Signal | 🟢 Healthy | 🟡 Needs Attention | 🔴 Critical Issue |
|---|---|---|---|
| Support ticket trend | Declining month-over-month | Flat for 2+ months | Increasing |
| Self-serve adoption | Growing 10%+ monthly | Growth under 5% | Declining |
| Cache hit rate | Above 70% | 50-70% | Below 50% |
| Query performance | P95 under 1 second | P95 1-3 seconds | P95 over 3 seconds |
| AI accuracy | Above 85% | 70-85% | Below 70% |
| Metric certification | 5+ new per month | 1-4 new per month | None certified |

Pro tip: Set up automated alerts when KPIs move into yellow or red zones. Most organizations see measurable improvements within 4-8 weeks for initial metric sets, with broader value accumulation as adoption grows.
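As a rough sketch of what such an alert check could look like, the snippet below encodes three of the numeric thresholds from the traffic light table and flags any reading that is not green. The signal names, the `THRESHOLDS` structure, and the sample readings are hypothetical; a real setup would pull readings from your monitoring system and route alerts to your own channels.

```python
from enum import Enum


class Health(Enum):
    GREEN = "healthy"
    YELLOW = "needs attention"
    RED = "critical issue"


# (green bound, red bound, higher_is_better), copied from the table above.
THRESHOLDS = {
    "cache_hit_rate_pct": (70, 50, True),
    "p95_latency_seconds": (1, 3, False),
    "ai_accuracy_pct": (85, 70, True),
}


def classify(signal, value):
    """Map one reading to a traffic light status."""
    green, red, higher_is_better = THRESHOLDS[signal]
    if higher_is_better:
        if value > green:
            return Health.GREEN
        if value < red:
            return Health.RED
        return Health.YELLOW
    if value < green:
        return Health.GREEN
    if value > red:
        return Health.RED
    return Health.YELLOW


def signals_needing_alert(readings):
    """Return only the signals that have left the green zone."""
    statuses = {name: classify(name, value) for name, value in readings.items()}
    return {name: s for name, s in statuses.items() if s is not Health.GREEN}


# Made-up readings for illustration.
readings = {
    "cache_hit_rate_pct": 62,
    "p95_latency_seconds": 0.8,
    "ai_accuracy_pct": 65,
}
alerts = signals_needing_alert(readings)
for name, status in alerts.items():
    print(f"{name}: {status.value}")
```

Values that sit exactly on a boundary (for example a 70% cache hit rate) are treated as yellow here, which matches the "50-70%" band in the table.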

Challenges of semantic layers and how to mitigate them

Setup complexity

Problem: Organizations struggle to identify which metrics to define first, leading to analysis paralysis or premature standardization of low-impact definitions.

Mitigation: Start with 10-15 high-value metrics that cause the most confusion or require frequent clarification. Focus on those appearing in executive dashboards, regulatory reports, or driving operational decisions. Iterate based on usage patterns rather than attempting comprehensive coverage upfront.

Semantic sprawl

Problem: Duplicate definitions proliferate as teams create similar-but-different versions of the same metric. “Active users” ends up with seven variations across departments, reintroducing the consistency problems semantic layers solve.

Mitigation: Implement certification workflows where metric owners review and approve definitions before publication. Use version control to track changes and enforce DRY principles—each business concept gets one authoritative definition with documented variations when legitimately needed.
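A certification workflow can be backed by a simple automated check that flags candidates for review. The sketch below groups metric definitions by a normalized name and reports any name that maps to more than one distinct expression. The metric records, owners, and expressions are invented for illustration, and name normalization is a deliberately crude heuristic: it will not catch the same concept published under unrelated names.

```python
import re
from collections import defaultdict


def normalize_name(name):
    """Lowercase and collapse separators so 'Active Users' matches 'active_users'."""
    return re.sub(r"[^a-z0-9]+", "_", name.lower()).strip("_")


def find_conflicting_definitions(metrics):
    """Return normalized names that have more than one distinct expression."""
    by_name = defaultdict(set)
    for metric in metrics:
        # Collapse whitespace and case so formatting differences are ignored.
        expression = " ".join(metric["expression"].lower().split())
        by_name[normalize_name(metric["name"])].add((metric["owner"], expression))
    return {
        name: sorted(definitions)
        for name, definitions in by_name.items()
        if len({expression for _, expression in definitions}) > 1
    }


# Hypothetical definitions submitted by three teams.
submitted = [
    {"name": "Active Users", "owner": "marketing",
     "expression": "COUNT(DISTINCT user_id) FILTER (WHERE sessions_30d > 0)"},
    {"name": "active_users", "owner": "product",
     "expression": "COUNT(DISTINCT user_id) FILTER (WHERE logins_7d > 0)"},
    {"name": "Net Revenue", "owner": "finance",
     "expression": "SUM(amt_net)"},
]
conflicts = find_conflicting_definitions(submitted)
for name, definitions in conflicts.items():
    print(name, "->", [owner for owner, _ in definitions])
```

Each flagged name then goes to the metric owner, who either merges the variants or documents why both are legitimately needed.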

Performance tuning

Problem: Logical semantic layers deliver flexibility but struggle with query performance as complexity grows. Users experience timeouts on previously functional dashboards.

Mitigation: Deploy hybrid caching strategies that materialize frequently accessed metrics while maintaining logical definitions for exploratory queries. Monitor query patterns to identify candidates for physical optimization. Implement query result caching at the semantic layer to avoid redundant computation.
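To make the result-caching idea concrete, here is a minimal in-memory sketch keyed by metric and dimensions, with a time-to-live so stale results expire. The class name, the TTL value, and the sample query function are assumptions for illustration; production semantic layers typically ship their own caching or pre-aggregation features, which should be preferred over hand-rolled code.

```python
import time


class MetricResultCache:
    """Tiny TTL cache for metric query results (illustrative only)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (stored_at, result)
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, metric, dimensions, compute):
        # Sort dimensions so ["region", "month"] and ["month", "region"] share a key.
        key = (metric, tuple(sorted(dimensions)))
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        result = compute()
        self._entries[key] = (now, result)
        return result


calls = []


def run_revenue_query():
    """Stand-in for an expensive warehouse query; returns made-up numbers."""
    calls.append("revenue")
    return {"EMEA": 120, "APAC": 95}


cache = MetricResultCache(ttl_seconds=300)
first = cache.get_or_compute("revenue", ["region"], run_revenue_query)
second = cache.get_or_compute("revenue", ["region"], run_revenue_query)
print(f"warehouse queries: {len(calls)}, hits: {cache.hits}, misses: {cache.misses}")
```

The `hits` and `misses` counters give the cache hit rate directly, which is one of the technical performance KPIs worth tracking.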

Change management

Problem: Metric definition changes break downstream dashboards and reports without warning. Teams discover discrepancies only after stakeholders question conflicting numbers.

Mitigation: Establish RACI matrices defining who owns each metric domain and who must approve changes. Set SLAs for update notifications and deprecation timelines. Use impact analysis tools that automatically identify affected reports and notify consumers before changes deploy.
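At its core, impact analysis is a graph traversal: start from the changed metric and walk every edge to the assets that consume it. The sketch below assumes a hypothetical `DEPENDENTS` map from each asset to its direct consumers; in practice this information would come from a lineage or metadata tool rather than a hand-written dictionary.

```python
from collections import deque

# Hypothetical lineage: asset -> assets that consume it directly.
DEPENDENTS = {
    "metric:net_revenue": ["metric:arpu", "dashboard:exec_summary"],
    "metric:active_users": ["metric:arpu"],
    "metric:arpu": ["report:board_pack"],
}


def downstream_assets(dependents, changed):
    """Breadth-first walk over everything that depends on `changed`."""
    seen = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    # Guard against cycles that lead back to the starting asset.
    seen.discard(changed)
    return seen


affected = downstream_assets(DEPENDENTS, "metric:net_revenue")
for asset in sorted(affected):
    print("notify owners of", asset)
```

The resulting set is what a notification step would use to alert consumers before the change deploys.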

Standards and interfaces

Semantic layers integrate with data platforms and consumption tools through established protocols:

Query languages:

- Standard SQL and vendor-specific dialects (T-SQL, PL/SQL, Spark SQL)

- DAX (Data Analysis Expressions) for Power BI semantic models

- MDX (Multidimensional Expressions) for OLAP cube navigation

- GraphQL for flexible, client-specified data retrieval

Connectivity standards:

- JDBC/ODBC drivers for legacy application integration

- XMLA (XML for Analysis) for standardized multidimensional data access

- Arrow Flight for high-performance columnar data transfer

Modern interfaces:

- REST APIs enabling programmatic metric access and definition management

- gRPC for low-latency streaming query results

- WebSocket connections supporting real-time metric updates

- Native BI tool connectors (Tableau, Looker, Power BI semantic layers)

Semantic specifications:

- dbt Semantic Layer YAML definitions

- LookML for Looker modeling

- Snowflake Cortex semantic models

- Context Graph standards (RDF, OWL) for relationship modeling

Brief history of semantic layers and trends to know in 2026

Semantic layers emerged in the 1990s with Business Objects’ Universe, which abstracted database complexity from business analysts through a metadata layer. Early implementations focused on shielding users from SQL by mapping business terminology to technical schemas within specific BI tools.

The 2010s brought Looker’s LookML, which positioned semantics as version-controlled code rather than point-and-click configuration. This evolution emphasized repeatability and governance but remained tool-specific. Modern semantic layers emerged as organizations adopted multiple BI platforms and encountered the “which number is right?” problem at scale. The current resurgence stems from AI and machine learning requirements: LLMs need structured business context to avoid hallucinations when generating queries or interpreting data. Here is a snapshot of some of the latest trends:

AI and machine learning integration

- Semantic grounding reduces LLM hallucinations by over 50% in text-to-SQL applications

- Structured business logic enables AI agents to reason about data appropriately

- Training data lineage tracking for model explainability and compliance

Data fabric and data mesh convergence

- Semantic layers provide the contextual translation layer for federated data architectures

- Domain-specific semantic models align with data mesh’s decentralized ownership principles

- Active metadata connects semantic definitions to quality, lineage, and governance signals

Cloud-native architectures

- Warehouse-native semantic layers (Snowflake Cortex, BigQuery semantic models) reduce infrastructure overhead

- Separation of compute and storage enables elastic scaling for semantic query workloads

- Multi-cloud semantic layers federate definitions across diverse platform investments

Real-time and streaming semantics

- Event-driven metric calculations support operational analytics and immediate decision-making

- Continuous aggregation patterns replace batch refresh cycles for time-sensitive KPIs

- Change data capture integration keeps semantic views synchronized with source systems

Augmented analytics and natural language interfaces

- Conversational BI translates business questions into semantic queries without SQL knowledge

- Context-aware search surfaces relevant metrics based on user role and recent activity

- Automated insight generation identifies anomalies in semantically defined metrics

Data marketplaces and semantic interoperability

- Standardized semantic definitions enable data product discovery and consumption across organizational boundaries

- Industry-specific ontologies (FIBO for finance, HL7 FHIR for healthcare) accelerate semantic layer adoption

- Cross-organizational metric sharing supports benchmarking and collaborative analytics

How modern data platforms enhance semantic layers

Semantic layers provide technical consistency, but technical definitions alone don’t fully enable data democratization. Users need context beyond calculation logic to trust and effectively use data. Understanding data quality, lineage, ownership, and usage patterns matters as much as knowing how metrics are calculated.

Modern platforms address this challenge by integrating semantic layers with active metadata management. Rather than treating semantic definitions in isolation, these systems create unified context layers that combine technical logic with operational intelligence.

For instance, Atlan’s approach natively ingests dbt semantic models and enriches them with ownership, lineage, and quality signals, so dbt-defined business logic is governed, discoverable, and accurate across every tool. And because Atlan automatically maps semantic definitions down to column-level lineage – showing exactly what inputs power metrics – users and AI agents can trace dependencies, assess quality, and trust what they see.

Configurations like this automatically connect semantic definitions to:

- Real-time data quality metrics showing reliability

- Automated lineage mapping revealing dependencies

- Usage analytics indicating which metrics matter most

- Collaborative features enabling cross-team alignment

This is particularly valuable for AI governance. When AI agents query data, they access both structured business logic and quality-aware metadata. Machine-readable semantics and governance context enable AI to reason about data appropriately, so it understands not just what “revenue” calculates, but also which sources are certified, who owns definitions, and what quality thresholds apply.

Automated workflows minimize manual coordination overhead for governance councils, while semantic views stay synchronized with source systems. And cloud-native performance benefits don’t require separate infrastructure investments.

See how Atlan’s unified context layer enhances semantic definitions with active metadata for AI-ready data operations.

Real stories from real customers: How leading data teams built semantic layers

Workday uses Atlan’s MCP server to turn shared business language into context AI can actually use

“As a part of Atlan’s AI labs, we are co-building the semantic layers that AI needs with new constructs like context products that can start with an end user’s prompt and include them in the development process…” - Joe DosSantos, Vice President, Enterprise Data & Analytics, Workday

Key takeaways

Semantic layers have evolved from niche BI features into critical infrastructure for modern data operations. Organizations managing multiple analytics tools, preparing for AI adoption, or scaling self-service capabilities need centralized business logic to maintain consistency and trust. The foundation you build today determines how quickly you can adapt to new tools, respond to business changes, and leverage AI capabilities tomorrow.

Implementation success starts with prioritization and focus, not attempting comprehensive coverage immediately. Identify the metrics causing the most pain, establish clear governance, integrate with existing workflows, and measure both adoption and business value. Modern platforms that combine semantic definitions with active metadata provide the context needed to make data not just consistent, but also trustworthy.

Atlan helps implement and enhance semantic layer capabilities with unified metadata management.

FAQs about semantic layers

What is a semantic layer?

A semantic layer is a translation layer between raw data and business users that maps technical database structures to business concepts like “Revenue” or “Customer Churn.” It standardizes metric definitions, enforces governance, and converts business-friendly requests into optimized queries, ensuring consistent results across BI tools, SQL, and AI agents.

How does a semantic layer support AI and machine learning?

A semantic layer improves AI accuracy by providing explicit business rules that constrain model behavior. Instead of guessing which tables define “revenue,” AI agents access structured semantic definitions specifying exact calculations, joins, and filters. Research shows hallucinations decrease by over 50% when LLMs use semantic grounding rather than inferring meaning from raw schemas.

What is a semantic layer in a data warehouse?

A semantic layer in a data warehouse translates technical table structures into business-friendly terms that analysts can query directly. It sits between the warehouse’s physical tables and reporting tools, mapping columns like “txn_amt” to concepts like “Revenue.” This abstraction lets business users explore data without writing complex SQL or understanding schema details.

How does a semantic layer work?

A semantic layer works by intercepting queries from BI tools, AI agents, or SQL clients and translating business terms into optimized database queries. When a user asks for “Q4 Revenue by Region,” the semantic layer maps “Revenue” to its defined calculation, applies filters, routes the query to the correct tables, and returns consistent results regardless of which tool made the request.
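A toy version of that translation step might look like the sketch below. It is not how any specific product compiles queries: the `Metric` structure, the `status = 'completed'` filter, and the `region` and `fiscal_quarter` dimension columns are assumptions made up for illustration, and real engines also handle joins, time grains, and SQL dialects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One governed metric definition (hypothetical structure)."""
    name: str
    table: str
    expression: str
    filters: tuple = ()


# Central registry: every tool resolves "revenue" to this one definition.
METRICS = {
    "revenue": Metric(
        name="revenue",
        table="fact_trans_dtl",
        expression="SUM(amt_net)",
        filters=("status = 'completed'",),  # assumed business rule
    ),
}
ALLOWED_DIMENSIONS = {"region", "fiscal_quarter"}  # assumed column names


def compile_query(metric_name, dimensions):
    """Turn a business request into SQL using the registered definition."""
    metric = METRICS[metric_name]
    unknown = [d for d in dimensions if d not in ALLOWED_DIMENSIONS]
    if unknown:
        raise ValueError(f"unknown dimensions: {unknown}")
    columns = list(dimensions) + [f"{metric.expression} AS {metric.name}"]
    sql = f"SELECT {', '.join(columns)} FROM {metric.table}"
    if metric.filters:
        sql += " WHERE " + " AND ".join(metric.filters)
    if dimensions:
        sql += " GROUP BY " + ", ".join(dimensions)
    return sql


sql = compile_query("revenue", ["region"])
print(sql)
```

Because the calculation and filters live in the registry rather than in each dashboard, any client that requests the metric by name receives the same SQL.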

What are the benefits of a semantic layer?

The key benefits of a semantic layer include consistent metric definitions across all tools, reduced data discrepancies between reports, faster self-service analytics, simplified governance through centralized business logic, and improved AI accuracy by grounding models in explicit business rules. Organizations typically see 50% fewer “which number is right?” disputes after implementation.

Why do we need a semantic layer?

Organizations need a semantic layer because different teams calculating the same metric often get different results. Without centralized definitions, analysts create personal calculations that vary subtly, causing confusion and eroding trust in data. Semantic layers eliminate these inconsistencies by establishing a single source of truth for business logic that all tools and users share.

What is an example of a semantic layer?

A common semantic layer example is defining “Monthly Recurring Revenue (MRR)” once and making it available everywhere. The semantic layer specifies which tables contain subscription data, how to handle upgrades and cancellations, which currencies to convert, and what date logic applies. Every BI tool, dashboard, and AI query then uses this exact calculation automatically.

What is the dbt semantic layer?

The dbt semantic layer is a metrics framework built into dbt (data build tool) that lets teams define metrics, dimensions, and business logic directly in their transformation code. It uses MetricFlow as its query engine to serve consistent calculations to any connected tool. The dbt semantic layer is open-source and integrates with major BI platforms and AI applications.

What is a universal semantic layer?

A universal semantic layer is a tool-agnostic abstraction that serves consistent business definitions to any consumer, including multiple BI tools, SQL clients, AI agents, and custom applications. Unlike embedded semantic layers tied to specific platforms, universal layers federate queries across data sources and provide a single definition layer regardless of where data lives or which tool requests it.

How does Atlan support semantic layers today?

Atlan supports semantic layers by ingesting and enriching them, rather than replacing them. Today, we integrate with sources like dbt Semantic Layer and BI tools, bringing in semantic models, metrics, and relationships as first‑class assets.

Atlan then layers on active metadata – lineage, data quality, ownership, usage, and governance – so those semantic definitions become discoverable, trusted, and AI‑ready across the stack.

What’s the difference between a semantic layer and a data catalog?

A semantic layer focuses on standardizing business logic and metric calculations to ensure consistent definitions across tools, while a data catalog provides discovery, governance, and context for data assets across the organization. The two are complementary, not competitive.

The semantic layer answers “how do we calculate revenue?” while the data catalog answers “where does this data come from and who owns it?” Organizations often integrate both, using catalogs to enrich semantic definitions with quality indicators, lineage, and usage patterns.

Do I need a semantic layer if I only use one BI tool?

Yes, even single-tool environments benefit from semantic layers in multi-user scenarios. Without centralized definitions, individual analysts create personal calculations that vary subtly. These variations cause problems when different team members generate reports on the same metrics.

The value increases substantially when organizations use data for purposes beyond traditional BI, including AI, ML, data science, or embedded analytics. Semantic layers become essential infrastructure as tool diversity inevitably grows.

How long does semantic layer implementation typically take?

Initial implementation for 10-20 critical metrics typically requires 4-8 weeks. This includes identifying metrics, documenting current calculation discrepancies, establishing governance processes, and integrating with primary consumption tools. Teams see value quickly as the first metrics go live.

Full enterprise deployment spanning hundreds of metrics across multiple departments typically extends 6-12 months. The timeline depends on organization size, existing data architecture complexity, and the degree of alignment needed across business stakeholders.
