Snowflake Cortex: Everything You Need to Know for 2025


Snowflake Cortex helps you use AI to quickly analyze data and build AI applications, without the complexities of managing the underlying infrastructure.

With Cortex, Snowflake customers can perform tasks like demand forecasting, anomaly detection, summarization, and translation using simple SQL or Python queries, making advanced analytics more accessible to data practitioners.


This article will explore how Snowflake Cortex simplifies data science workflows by looking into its core offerings and benefits. We’ll also discuss its top use cases and pricing, and address some of the most commonly asked questions.

Table of contents #

- What is Snowflake Cortex?

- What are the benefits of Snowflake Cortex?

- Snowflake Cortex core capabilities: Native AI and ML features

- Piecing it together: The role of metadata in delivering trustworthy insights with Snowflake Cortex

- Snowflake Cortex FAQs (Frequently Asked Questions)

- Summing up

- Snowflake Cortex: Related reads

What is Snowflake Cortex? #

Snowflake Cortex is a suite of AI features designed to make data analysis and generative AI (gen AI) application development easier. It incorporates large language models (LLMs), vector search, and fully managed text-to-SQL services.

Snowflake Cortex has been generally available since May 2024. At this year’s Build conference, Snowflake “*debuted new tools for Cortex AI, its fully managed offering for developing conversational AI apps grounded in enterprise data hosted on its platform*.”

Snowflake Cortex provides access to the top AI models and LLMs from Mistral, Reka, Meta, Google, and Snowflake Arctic via no-code, SQL and Python interfaces. Additionally, it leverages Snowflake’s existing security, scalability, and governance capabilities to ensure safe and secure LLM use.

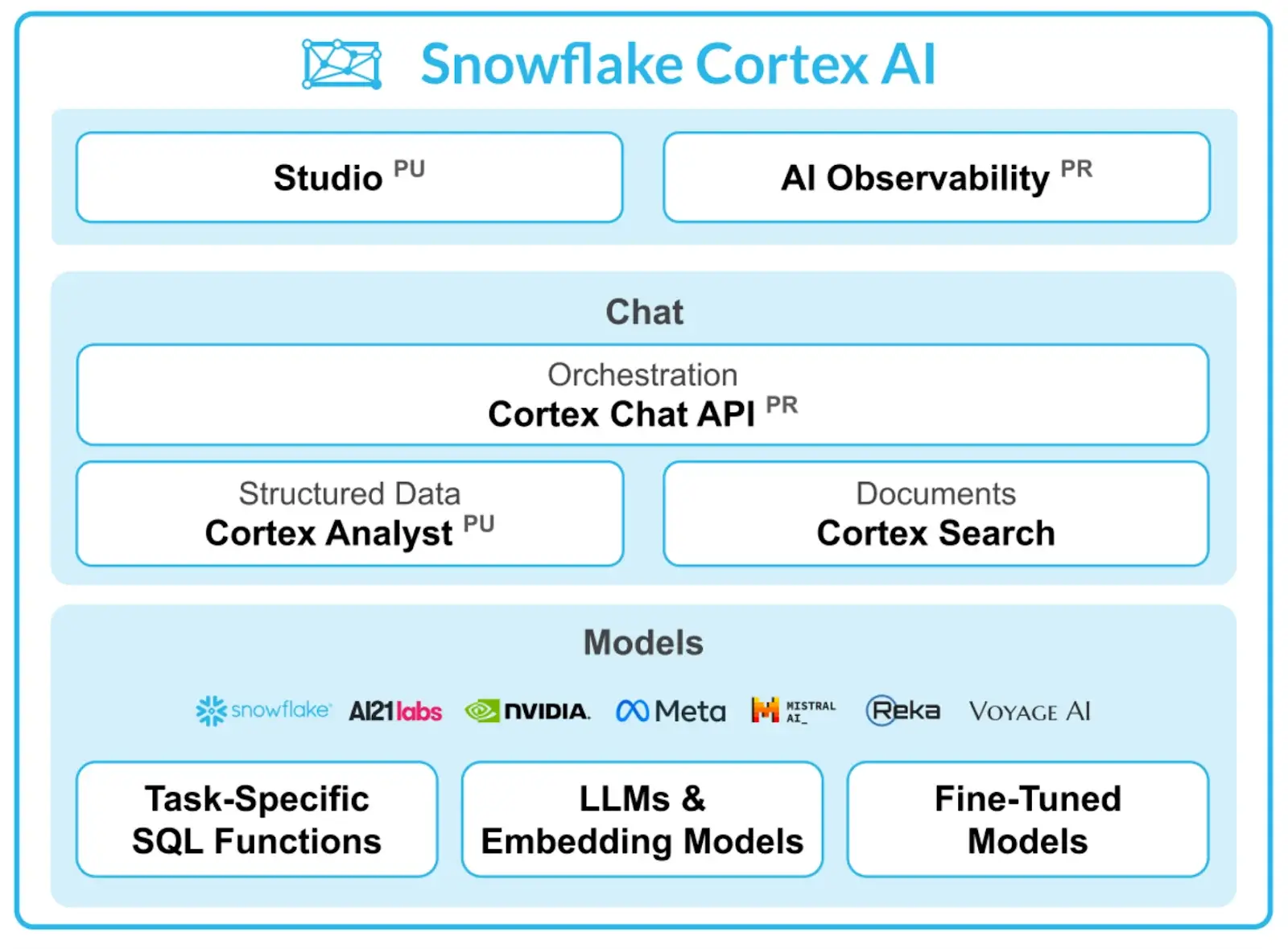

Snowflake Cortex AI - Source: Snowflake.

Snowflake Cortex supports a wide range of use cases, including:

- Generative AI applications: Create conversational AI and document chatbots

- Text analysis: Perform sentiment analysis, summarization, and translation

- Predictive modeling: Forecast demand and identify anomalies in data

- Document processing: Extract structured data from unstructured content

What are the benefits of Snowflake Cortex? #

Snowflake Cortex “enables organizations to expedite delivery of generative AI applications with LLMs while keeping their data in the Snowflake security and governance perimeter.”

With Cortex, you can quickly run tasks such as translation, sentiment analysis, and summarization on semi-structured and unstructured long-text data in Snowflake, such as product reviews, surveys, and call transcripts.

Other key benefits include:

- Access to high-performing LLMs for quick text analysis

- Development of full-stack RAG applications, such as document chatbots, within Snowflake

- Low-code tools for handling unstructured data and generating insights (like demand for resources, potential incidents)

- Quick execution of sentiment analysis, summarization, and translation tasks (without needing the expertise of an ML engineer)

- Simplified processes for predictive modeling and data science

Snowflake Cortex core capabilities: Native AI and ML features #

The Snowflake Cortex suite of AI features includes:

- Snowflake Cortex LLM Functions (generally available): Understand, query, translate, summarize, and generate text with SQL or Python functions and the LLM of your choice

- ML Functions (generally available): Perform predictive analysis to get insights from your Snowflake data assets using SQL functions

- Snowflake Copilot (generally available): Use an LLM assistant to understand your data with natural language queries, generate and refine SQL queries, and understand Snowflake’s features

- Document AI (generally available): Intelligent data processing (IDP) to extract content from a large volume of documents at scale

- Cortex Fine-Tuning (public preview): Customize LLMs to increase their accuracy and performance for use-case specific tasks

- Cortex Search (generally available): A text search service that provides LLMs with context from your latest proprietary data

- Cortex Analyst (public preview): A natural language interface for asking business questions

- Snowflake AI & ML Studio for LLMs (private preview): Enable users of all technical levels to use AI with no-code development

- Snowflake Cortex Guard (generally available soon): Use an LLM-based safeguard to filter and flag harmful content, ensuring model safety and usability

Let’s explore some of the most prominent Snowflake Cortex capabilities further.

Snowflake Cortex LLM Functions #

Cortex LLM Functions can be grouped under:

- COMPLETE function: This is a general purpose function to perform several tasks, from aspect-based sentiment classification and synthetic data generation to customized summaries.

  The syntax for the COMPLETE function is as follows:

  `SNOWFLAKE.CORTEX.COMPLETE( <model>, <prompt_or_history> [ , <options> ] )`

- Task-specific functions: These are pre-built, managed functions that automate routine tasks, such as generating summaries or performing quick translations, without requiring customization.

  Task-specific functions include:

  - CLASSIFY_TEXT: Categorizes a given text into predefined categories.

    The syntax for CLASSIFY_TEXT is as follows:

    `SNOWFLAKE.CORTEX.CLASSIFY_TEXT( <input> , <list_of_categories>, [ <options> ] )`

  - EXTRACT_ANSWER: Finds answers to a question within unstructured data.

    The syntax for EXTRACT_ANSWER is as follows:

    `SNOWFLAKE.CORTEX.EXTRACT_ANSWER( <source_document>, <question>)`

  - PARSE_DOCUMENT: Extracts text (OCR mode) or text with layout elements (LAYOUT mode) from documents in specific stages, returning a JSON object.

    The syntax for PARSE_DOCUMENT is as follows:

    `SNOWFLAKE.CORTEX.PARSE_DOCUMENT( '@<stage>', '<path>', [ { 'mode': '<mode>' }, ] )`

  - SENTIMENT: Analyzes text sentiment, scoring from -1 (negative) to 1 (positive).

    The syntax for SENTIMENT is as follows:

    `SNOWFLAKE.CORTEX.SENTIMENT(<text>)`

  - SUMMARIZE: Produces a summary of the input text.

    The syntax for SUMMARIZE is as follows:

    `SNOWFLAKE.CORTEX.SUMMARIZE(<text>)`

  - TRANSLATE: Translates text between supported languages.

    The syntax for TRANSLATE is as follows:

    `SNOWFLAKE.CORTEX.TRANSLATE( <text>, <source_language>, <target_language>)`

  - EMBED_TEXT_768: Generates a 768-dimension vector embedding for the input text.

    The syntax for EMBED_TEXT_768 is as follows:

    `SNOWFLAKE.CORTEX.EMBED_TEXT_768( <model>, <text> )`

  - EMBED_TEXT_1024: Generates a 1024-dimension vector embedding for the input text.

    The syntax for EMBED_TEXT_1024 is as follows:

    `SNOWFLAKE.CORTEX.EMBED_TEXT_1024( <model>, <text> )`

- Helper functions: These are pre-built, managed functions that minimize failures in LLM operations, such as counting tokens in an input prompt to prevent exceeding model limits.

  Helper functions include:

  - COUNT_TOKENS: Returns the token count for the given input text, based on the specified model or Cortex function.

    The syntax for COUNT_TOKENS is as follows:

    `SNOWFLAKE.CORTEX.COUNT_TOKENS( <model_name> , <input_text> )`

    `SNOWFLAKE.CORTEX.COUNT_TOKENS( <function_name> , <input_text> )`

  - TRY_COMPLETE: Operates like the COMPLETE function but returns NULL instead of an error code if execution fails.

    The syntax for TRY_COMPLETE is as follows:

    `SNOWFLAKE.CORTEX.TRY_COMPLETE( <model>, <prompt_or_history> [ , <options> ] )`
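To make these signatures more concrete, here is a minimal Python sketch that assembles a SQL statement using three of the task-specific functions. It only builds a query string and does not connect to Snowflake. The helper names (`sql_literal`, `cortex_call`), the `reviews` table, the `review_text` column, and the language codes `'en'` and `'de'` are illustrative assumptions; the function signatures follow the syntax listed in this section.

```python
def sql_literal(value: str) -> str:
    """Quote a Python string as a SQL string literal.

    Backslashes and single quotes are escaped. For user-supplied input,
    bind parameters in your database client are the safer choice.
    """
    return "'" + value.replace("\\", "\\\\").replace("'", "''") + "'"


def cortex_call(function: str, *args: str) -> str:
    """Render SNOWFLAKE.CORTEX.<function>(<args>) from already-rendered SQL arguments."""
    return f"SNOWFLAKE.CORTEX.{function}({', '.join(args)})"


# Hypothetical table and column names, used only for illustration.
TABLE = "reviews"
COLUMN = "review_text"

query = (
    "SELECT\n"
    f"  {cortex_call('SENTIMENT', COLUMN)} AS sentiment_score,\n"
    f"  {cortex_call('SUMMARIZE', COLUMN)} AS summary,\n"
    f"  {cortex_call('TRANSLATE', COLUMN, sql_literal('en'), sql_literal('de'))} AS german_text\n"
    f"FROM {TABLE}"
)
print(query)
```

The resulting statement could then be run from a SQL worksheet or passed to whichever Snowflake client you already use.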

Snowflake Cortex ML Functions #

ML functions in Snowflake Cortex provide automated predictions and insights through machine learning, simplifying data analysis. Snowflake selects the appropriate model for each feature, allowing you to leverage machine learning without technical expertise—just provide your data.

“These (i.e., ML functions) powerful out-of-the-box analysis tools help time-strapped analysts, data engineers, and data scientists understand, predict, and classify data, without any programming.” - Snowflake Documentation

Cortex ML Functions can be grouped under:

- Time-series functions: Use machine learning to analyze time-series data, identifying how a specified metric changes over time and in relation to other data features. The model delivers insights and predictions based on detected trends. The available functions include:

  - Forecasting: Predicts future metric values based on past trends in the data.

  - Anomaly detection: Identifies metric values that deviate from expected patterns.

- Other analysis functions: These are ML functions that don’t use time-series data:

  - Classification: Categorizes rows into two or more classes based on their most predictive features.

  - Top insights: Identifies dimensions and values that have an unexpected impact on the metric.
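To illustrate what "forecasting" and "anomaly detection" mean in practice, here is a toy Python sketch. It is not how Snowflake's ML functions work internally (Snowflake selects and trains the model for you); it swaps in a simple mean-and-standard-deviation rule purely for illustration. The sample data, the function names, and the threshold of two standard deviations are all made up.

```python
from statistics import mean, stdev


def naive_forecast(history: list[float]) -> float:
    """Forecast the next value as the mean of the history (a deliberately simple baseline)."""
    return mean(history)


def is_anomaly(history: list[float], value: float, threshold: float = 2.0) -> bool:
    """Flag a value lying more than `threshold` sample standard deviations from the historical mean."""
    spread = stdev(history)  # requires at least two historical points
    if spread == 0:
        # A perfectly flat history: anything different counts as unexpected.
        return value != history[0]
    return abs(value - mean(history)) / spread > threshold


# Made-up daily order counts.
daily_orders = [100.0, 102.0, 98.0, 101.0, 99.0]

print(naive_forecast(daily_orders))     # baseline expectation for the next day
print(is_anomaly(daily_orders, 100.5))  # close to the historical pattern
print(is_anomaly(daily_orders, 150.0))  # far outside the historical pattern
```

The managed functions aim to spare you this kind of hand-rolled logic, along with the model selection and tuning a realistic version would need.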

Snowflake Copilot #

Snowflake Copilot is an LLM-powered assistant that simplifies data analysis with natural language queries while ensuring robust data governance. Running securely within Snowflake Cortex, it respects RBAC and accesses only datasets you are authorized to use.

You can interact with Copilot in SQL Worksheets and Snowflake Notebooks in Snowsight. Copilot assists with:

- Exploring datasets

- Generating and refining SQL queries

- Optimizing performance

- Answering questions about Snowflake features

Read more → Snowflake Copilot: Uses, Benefits, Best Practices, and FAQs

Document AI #

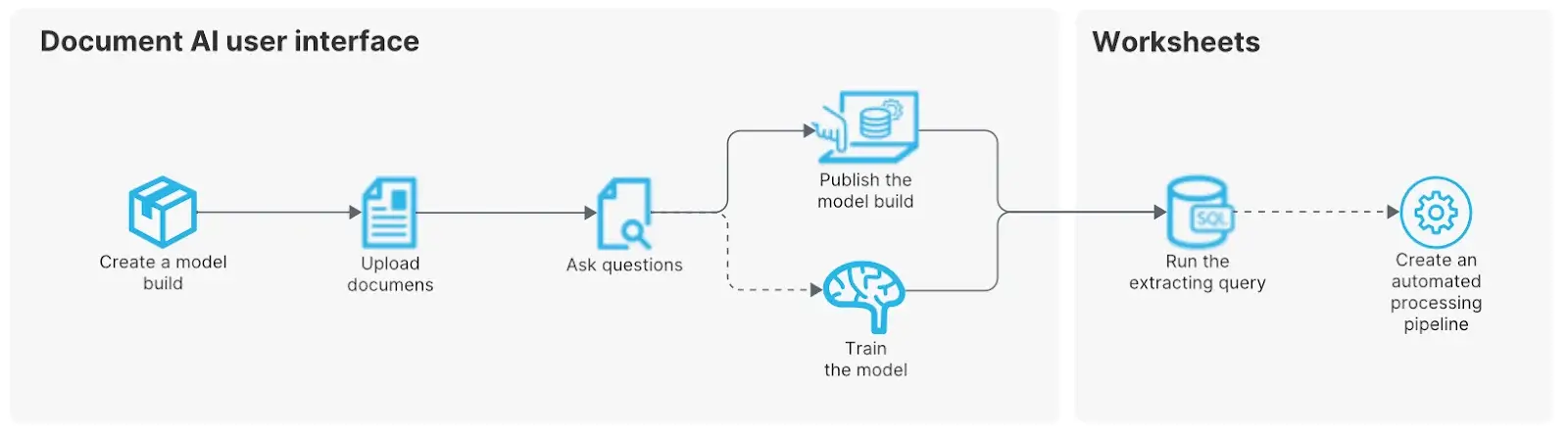

Document AI is a Snowflake AI feature that uses Arctic-TILT (Text Image Layout Transformer), a proprietary LLM, to extract data (text, table values, handwritten content) from PDFs and other unstructured documents into a structured output.

You can “analyze and organize data contained in invoices, reports, intake forms, and many other electronic and handwritten documents” from AWS and Microsoft Azure environments.

Snowflake Document AI - Source: Snowflake Documentation.

Cortex Fine-Tuning #

Cortex Fine-Tuning is a fully managed service to fine-tune popular LLMs using your data. This helps you customize your LLMs and deliver more relevant and accurate results.

The fine-tuning features are provided as the FINETUNE function, supporting the following:

- CREATE: Creates a fine-tuning job with the given training data

- SHOW: Lists all the fine-tuning jobs (that you can access)

- DESCRIBE: Describes the progress and status of a specific fine-tuning job

- CANCEL: Cancels a fine-tuning job
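The four operations can be sketched as SQL statements assembled in Python. This is a hedged sketch, not a definitive reference: the argument order for CREATE (model name, base model, training data query) reflects our understanding of Snowflake's documentation and should be verified against the current docs. The helper names, the model name `my_tuned_model`, the base model `mistral-7b`, the `training_examples` table, and the job ID are hypothetical.

```python
ALLOWED_OPERATIONS = {"CREATE", "SHOW", "DESCRIBE", "CANCEL"}


def sql_literal(value: str) -> str:
    """Quote a Python string as a SQL string literal, escaping backslashes and single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "''") + "'"


def finetune_sql(operation: str, *args: str) -> str:
    """Build a SELECT statement that calls FINETUNE with the operation as its first argument."""
    op = operation.upper()
    if op not in ALLOWED_OPERATIONS:
        raise ValueError(f"unsupported FINETUNE operation: {operation}")
    rendered = ", ".join(sql_literal(arg) for arg in (op, *args))
    return f"SELECT SNOWFLAKE.CORTEX.FINETUNE({rendered})"


# Assumed CREATE arguments: tuned model name, base model, training data query.
create_job = finetune_sql(
    "create",
    "my_tuned_model",
    "mistral-7b",
    "SELECT prompt, completion FROM training_examples",
)
list_jobs = finetune_sql("show")
describe_job = finetune_sql("describe", "<job_id>")  # placeholder job ID
cancel_job = finetune_sql("cancel", "<job_id>")      # placeholder job ID

for statement in (create_job, list_jobs, describe_job, cancel_job):
    print(statement)
```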

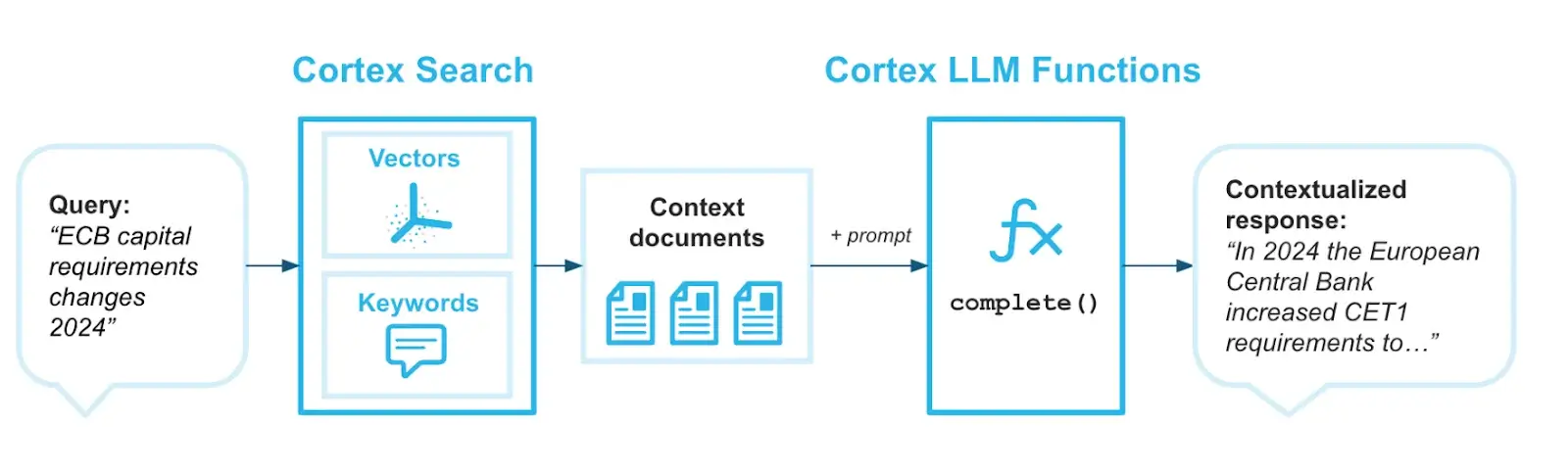

Cortex Search #

Cortex Search is a search engine that helps you find information in enterprise documents and other unstructured data using hybrid retrieval (a combination of keyword retrieval and vector retrieval). It supports two main use cases:

- RAG engine for LLM chatbots: Provides contextual responses for LLM chat applications using semantic search

- Enterprise search: Powers a high-quality search bar that can be embedded in your application
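This article does not describe how Cortex Search merges keyword and vector results, so the sketch below swaps in reciprocal rank fusion (RRF), a common way to combine ranked lists, purely to illustrate the idea of hybrid retrieval. It should not be read as Snowflake's implementation. The document IDs and the constant `k = 60` are illustrative assumptions.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one list.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword retriever and a vector retriever.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Here `doc_b` and `doc_a` appear in both lists, so they outrank documents found by only one retriever.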

Snowflake Cortex AI - Source: Snowflake Documentation.

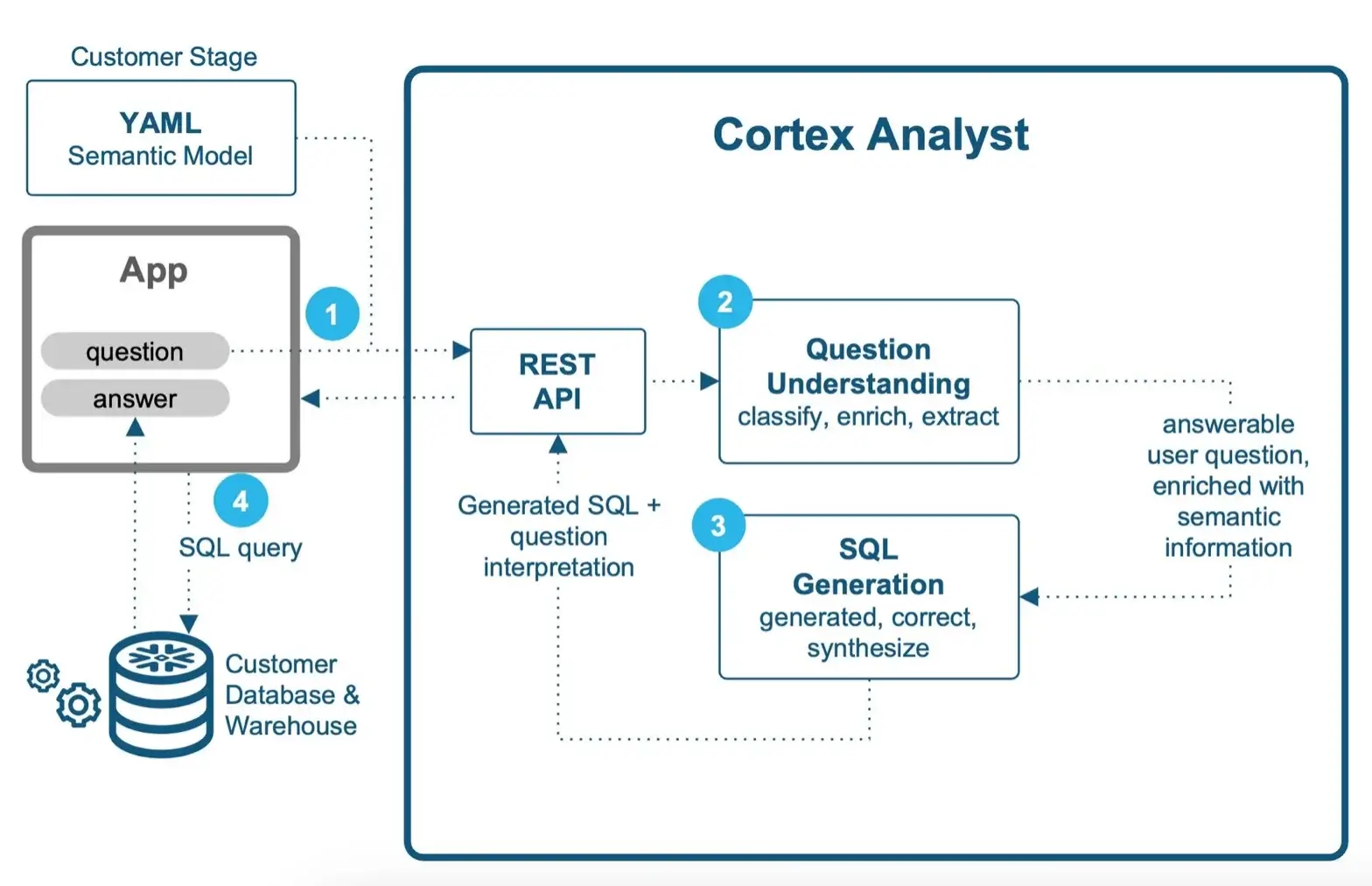

Cortex Analyst #

Cortex Analyst is a fully managed service that offers a conversational interface to structured data in Snowflake. Data practitioners can ask business questions in natural language, without writing SQL, and get direct answers quickly.

Snowflake Cortex Analyst - Source: Snowflake Blog.

It’s important to note that Cortex Analyst only answers SQL-based questions and doesn’t address broader business-related queries like identifying trends.

Cortex Analyst is powered by the latest Meta Llama and Mistral models. It integrates with business tools and platforms, such as Streamlit apps, Slack, Teams, and custom chat interfaces, via a REST API.

Cortex Analyst doesn’t use customer data to train or fine-tune models, and no data (metadata and prompts) leaves Snowflake’s governance boundary. It leverages semantic model metadata (table names, column names, value types) for SQL query generation during inference.

Piecing it together: The role of metadata in delivering trustworthy insights with Snowflake Cortex #

Snowflake Cortex represents a significant step forward in democratizing AI and ML capabilities for businesses of all sizes.

By eliminating the need for complex infrastructure setup and providing a user-friendly interface, Snowflake Cortex empowers Snowflake users to leverage AI for text analytics, predictive modeling, and custom application development.

However, AI-powered results are only as good as the underlying data and metadata. A common problem with enterprise AI tools is a lack of trust in the results they deliver: however much potential tools like Snowflake Cortex hold, the accuracy and reliability of their outputs depend on the quality of the data and metadata they process.

Metadata provides context on data asset relationships, structures, and meanings across your data estate.

“Metadata itself is evolving into big data, becoming the foundation for the future of AI and LLM applications. Metadata will need to live in a metadata lake or lakehouse to power trust and context at scale.” - Prukalpa Sankar on the role of metadata in modern enterprise data stacks

Unlocking the complete potential of Snowflake Cortex is possible only when it can connect to all the tools in your data stack, understand and interpret metadata, and leverage it to provide accurate, trustworthy insights.

Also, read → The role of metadata management in enterprise AI

Snowflake Cortex FAQs (Frequently Asked Questions) #

1. What are the most common error messages received while using Snowflake Cortex LLM functions? #

According to Snowflake, here are the most common error messages received while using Snowflake Cortex LLM functions:

- too many requests: Excessive system load. Try again later.

- invalid options object: The object contains invalid options or values.

- budget exceeded: You’ve overshot your model consumption budget.

- unknown model “<model name>”: The model you’ve specified doesn’t exist (or isn’t a part of Snowflake Cortex).

- invalid language “<language>”: The language you’ve specified isn’t supported by the TRANSLATE function in Snowflake Cortex.

- max tokens of <count> exceeded: The request has surpassed the chosen model’s supported token limit.

- all requests were throttled by remote service: High usage has led to your request being throttled. Models used by Snowflake Cortex have limitations on size, and inputs that exceed the limit result in an error. Try again later.

- invalid number of categories <num categories>: The specified number of categories exceeds the limit for CLASSIFY_TEXT.

- invalid category input type: The specified category type is not supported by CLASSIFY_TEXT.

- empty classification input: The input to CLASSIFY_TEXT is either empty or null.

2. Are all Snowflake Cortex functions available globally? #

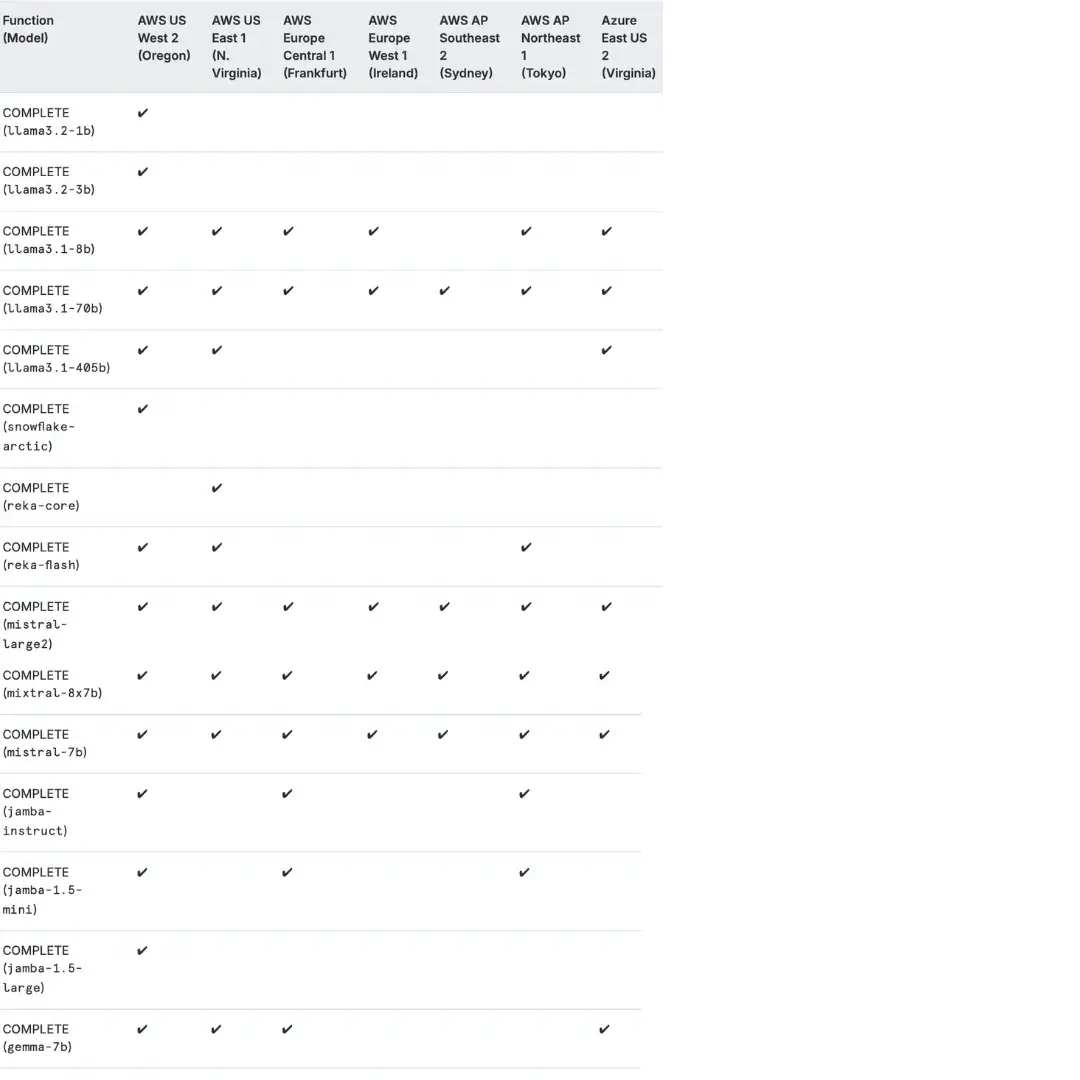

Snowflake Cortex LLM functions are currently available in certain regions across the US and Europe (for AWS and Azure).

Here’s a snapshot of the availability of the COMPLETE function across regions.

COMPLETE function availability across AWS and Azure - Source: Snowflake Documentation.

The other LLM functions (SUMMARIZE, TRANSLATE, SENTIMENT, CLASSIFY_TEXT, etc.) are available across all regions and cloud providers (AWS and Azure).

Meanwhile, EMBED_TEXT_768 and EMBED_TEXT_1024 are available across extended regions, which include GCP (US and Europe).

3. How do I choose an LLM model in Snowflake Cortex? #

To achieve the best performance per credit, choose a model that’s a good match for the content size and complexity of your task.

If you’re not sure where to start, try the most capable models first to establish a baseline. According to Snowflake, “reka-core and mistral-large2 are the most capable models offered by Snowflake Cortex, and will give you a good idea what a state-of-the-art model can do.”

Depending on your use case, you can switch to medium models (which can handle a low to moderate amount of reasoning) or small models (suitable for simple tasks like code or text completion).

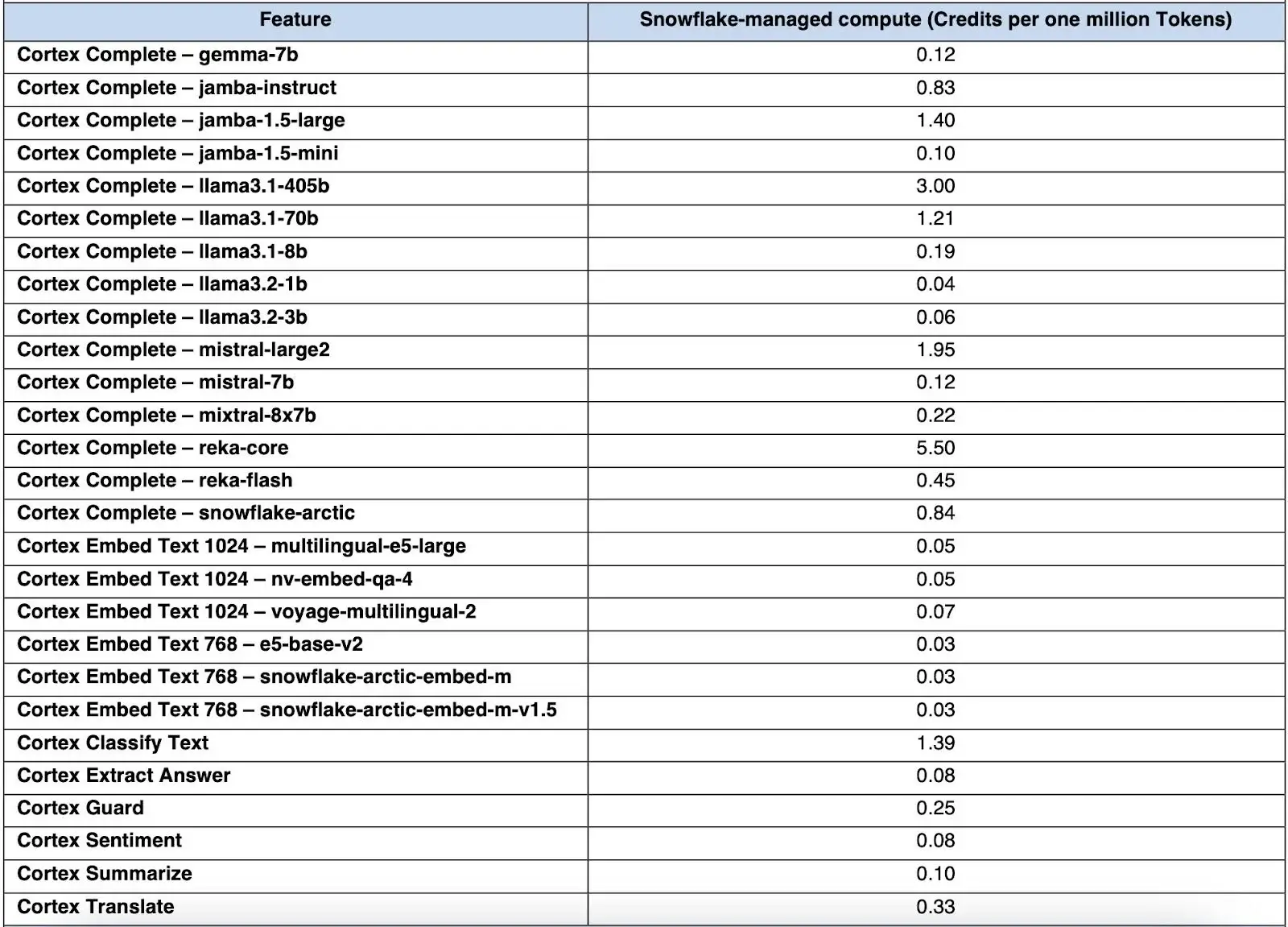

4. How can you calculate the cost of using Snowflake Cortex? #

Snowflake Cortex LLM functions incur a compute cost based on the number of tokens processed. A token is roughly four text characters.

The cost varies depending on the model and Cortex function being used. You should also note that:

- Functions that generate new text in the response (COMPLETE, SUMMARIZE, TRANSLATE) count both input and output tokens

- For other functions (EMBED_TEXT_*, CORTEX GUARD, SENTIMENT) that merely extract information from the input, only input tokens are counted

- For EXTRACT_ANSWER, the number of billable tokens is the sum of the number of tokens in the from_text and question fields.

- Functions that add a prompt to the input text to generate the response (TRANSLATE, EXTRACT_ANSWER, CLASSIFY_TEXT, SENTIMENT) have a slightly higher input token count
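The counting rules above can be turned into a rough, back-of-the-envelope estimator. The Python sketch below assumes about four characters per token, as noted earlier, and ignores the extra prompt tokens that some functions add. The function names and the rate of 0.10 credits per million tokens are made-up placeholders, not Snowflake's actual pricing; for exact token counts, COUNT_TOKENS is the better tool.

```python
import math

# Functions that bill for both input and output tokens, per the rules above.
GENERATIVE_FUNCTIONS = {"COMPLETE", "SUMMARIZE", "TRANSLATE"}


def estimate_tokens(text: str) -> int:
    """Rough token estimate, assuming about four characters per token."""
    return math.ceil(len(text) / 4)


def billable_tokens(function: str, input_text: str, output_text: str = "") -> int:
    """Estimate billable tokens: input only, plus output for generative functions."""
    tokens = estimate_tokens(input_text)
    if function.upper() in GENERATIVE_FUNCTIONS:
        tokens += estimate_tokens(output_text)
    return tokens


def estimate_credits(tokens: int, credits_per_million_tokens: float) -> float:
    """Convert a token count into credits using a caller-supplied rate."""
    return tokens / 1_000_000 * credits_per_million_tokens


# Placeholder rate for illustration only; look up the real rate for your model.
PLACEHOLDER_RATE = 0.10

document = "x" * 4000  # about 1,000 tokens of input
summary = "y" * 400    # about 100 tokens of output

summarize_tokens = billable_tokens("SUMMARIZE", document, summary)
sentiment_tokens = billable_tokens("SENTIMENT", document, summary)
print(summarize_tokens, estimate_credits(summarize_tokens, PLACEHOLDER_RATE))
print(sentiment_tokens, estimate_credits(sentiment_tokens, PLACEHOLDER_RATE))
```

For EXTRACT_ANSWER, the same helper applies if you pass the source text and the question concatenated as the input.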

With that in mind, here’s how much it’ll cost to run different LLM functions across models.

Snowflake Cortex costs - Source: Snowflake Documentation.

For detailed information on pricing, we recommend contacting Snowflake account representatives directly.

5. Are there any limitations to using Snowflake Cortex? #

There are no usage quotas applied at the account level. However, to provide LLM capabilities to all Snowflake customers, Cortex LLM functions may experience throttling during high usage periods.

Throttled requests will return an error and can be retried later.
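One common way to "retry later" is exponential backoff with jitter. The Python sketch below is a generic pattern, not a Snowflake API: `ThrottledError` is a hypothetical stand-in for whatever exception your client raises on a throttling error, and `flaky_cortex_call` simulates a request that is throttled twice before succeeding.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for the error your client raises when a request is throttled."""


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on ThrottledError with exponentially growing, jittered delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except ThrottledError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable")


# Simulated request: throttled on the first two attempts, then succeeds.
attempts = {"count": 0}


def flaky_cortex_call() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ThrottledError("all requests were throttled by remote service")
    return "ok"


# A no-op sleep keeps the demonstration instant; use the default in real code.
result = retry_with_backoff(flaky_cortex_call, sleep=lambda seconds: None)
print(result, attempts["count"])
```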

6. How does Cortex handle AI safety? #

Snowflake emphasizes the importance of “setting up guardrails, which evaluate inputs and/or outputs to ensure they stay ‘on the track’ of appropriate content.” Cortex Guard lets you protect user-facing applications from potentially harmful LLM responses so that you can go to production safely.

Powered by Meta’s Llama Guard 2, Cortex Guard evaluates LLM responses to block unsafe content, maintaining governance while allowing scalable and efficient application deployment.

It filters harmful content, including violent, hateful, or inappropriate responses, supports production-level SLAs, and helps you safely use generative AI while following internal safety and AI governance standards.

Summing up #

Snowflake Cortex simplifies data workflows and allows organizations to take advantage of AI for analytics and application development. Its accessible tools and pre-built features enable users to handle complex tasks like predictive modeling, text analysis, and LLM customization.

As a result, you can work on sentiment analysis, generate SQL queries, build chatbots, and more, while ensuring the safe, secure, and efficient deployment of your AI apps.

Snowflake Cortex: Related reads #

- Snowflake Horizon for Data Governance: Here’s Everything We Know So Far

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- Snowflake Data Cloud Summit 2024: Get Ready and Fit for AI

- Snowflake Data Lineage: A Step-by-Step How to Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Glossary for Snowflake: Shared Understanding Across Teams

- Personalized Data Discovery for Snowflake Data Assets

- Snowflake Data Dictionary
