Conversational analytics vs. conversation analytics

Despite having similar names, conversational analytics and conversation analytics serve completely different purposes. Conversation analytics improves call center operations by analyzing conversations; conversational analytics democratizes data access by making analytics conversational. The confusion stems from the naming, but the use cases don’t overlap.

Conversation analytics analyzes customer service interactions—phone calls, chat transcripts, emails, and support tickets—to extract insights about sentiment, agent performance, customer pain points, and service quality. Tools like Gong, CallMiner, and Sprinklr help contact centers improve customer experience by analyzing what happens during conversations.

Conversational analytics enables business users to explore data warehouses through natural language questions instead of building SQL queries or navigating dashboards. It’s a self-service BI approach where AI interprets questions like “Show me Q4 revenue by region” and generates appropriate queries against your business data.

| Dimension | Conversational Analytics (BI) | Conversation Analytics (Contact Center) |
|---|---|---|
| Purpose | Query business data via natural language | Analyze customer service interactions |
| Data Source | Data warehouses, BI semantic layers | Call recordings, chat logs, emails |
| Outcome | Self-service insights, SQL generation | Sentiment analysis, agent performance |

The conversational analytics spectrum at-a-glance

Level 1 (Conversational Metadata)

- Answers questions about data assets

- 70-80% accuracy

- Days to deploy

- Example platforms: Alation, Atlan discovery, Collibra DGC

Level 2 (Semantic Layer Queries)

- Answers analytical questions using pre-defined metrics

- 85-95% accuracy

- Weeks to months to deploy

- Example platforms: Looker + Gemini, ThoughtSpot Sage, Power BI Copilot

Level 3 (Dynamic Text-to-SQL)

- Generates novel SQL for unanticipated questions

- 95%+ accuracy required

- Months to deploy

- Example platforms: Databricks Genie, Snowflake Cortex Analyst, custom Claude/GPT agents

How conversational analytics works

At its core, conversational AI analytics transforms how people interact with data by replacing technical query languages with natural conversation. When you ask a question, several processes work together in sequence:

Natural language processing interprets your question, breaking down syntax and semantic meaning. “Show me Q4 revenue by region” gets parsed into structured components the system can act on.

Intent recognition identifies what you want to accomplish, whether that’s comparing performance across dimensions, identifying trends over time, drilling down into anomalies, or validating assumptions. The system distinguishes between “Why did revenue drop?” (root cause analysis) and “What was last quarter’s revenue?” (simple retrieval).
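To make the distinction concrete, here is a minimal sketch of intent recognition, assuming a simple keyword-rule classifier. The intent labels, the `INTENT_RULES` table, and `classify_intent` are hypothetical; production systems typically delegate this step to an LLM or a trained classifier.

```python
import re

# Hypothetical intent labels and trigger patterns, checked in order.
# Production systems usually use an LLM or trained classifier instead.
INTENT_RULES = [
    ("root_cause", re.compile(r"\bwhy\b", re.IGNORECASE)),
    ("comparison", re.compile(r"\b(compare|versus|vs)\b", re.IGNORECASE)),
    ("trend", re.compile(r"\b(trend|over time)\b", re.IGNORECASE)),
]


def classify_intent(question: str) -> str:
    """Return the first matching intent label, defaulting to simple retrieval."""
    for label, pattern in INTENT_RULES:
        if pattern.search(question):
            return label
    return "retrieval"


print(classify_intent("Why did revenue drop?"))             # root_cause
print(classify_intent("What was last quarter's revenue?"))  # retrieval
```

Rule order matters in this sketch: a question that contains both "why" and "versus" is treated as root cause analysis.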

Entity extraction identifies relevant data elements within your question. From “Compare churn rates for enterprise customers who joined in 2023 versus 2024,” the system extracts: metric (churn rate), segment (enterprise customers), time periods (2023, 2024), and analysis type (comparison).
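A minimal sketch of entity extraction for the churn example above, assuming hard-coded vocabularies. In a real deployment, the `METRICS` and `SEGMENTS` mappings (both hypothetical here) would be drawn from the business glossary and semantic layer rather than written by hand.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical vocabularies; a real system would load these from the
# business glossary and semantic layer.
METRICS = {"churn rates": "churn_rate", "churn rate": "churn_rate", "revenue": "revenue"}
SEGMENTS = {"enterprise customers": "enterprise", "smb customers": "smb"}


@dataclass
class Entities:
    metric: Optional[str] = None
    segment: Optional[str] = None
    periods: List[int] = field(default_factory=list)
    analysis: str = "retrieval"


def extract_entities(question: str) -> Entities:
    text = question.lower()
    found = Entities()
    # Check longer phrases first so "churn rates" wins over "churn rate".
    for phrase in sorted(METRICS, key=len, reverse=True):
        if phrase in text:
            found.metric = METRICS[phrase]
            break
    for phrase, segment in SEGMENTS.items():
        if phrase in text:
            found.segment = segment
            break
    # Four-digit years between 1900 and 2099 are treated as time periods.
    found.periods = [int(year) for year in re.findall(r"\b(?:19|20)\d{2}\b", text)]
    if re.search(r"\b(compare|versus|vs)\b", text):
        found.analysis = "comparison"
    return found


print(extract_entities(
    "Compare churn rates for enterprise customers who joined in 2023 versus 2024"
))
# Entities(metric='churn_rate', segment='enterprise', periods=[2023, 2024], analysis='comparison')
```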

Query generation translates intent into database operations. Depending on complexity, this might mean retrieving metadata from a catalog, querying a semantic layer for pre-defined metrics, or generating novel SQL against your data warehouse. The system determines the appropriate technical implementation for your business question.
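The routing decision can be sketched as a small function, assuming the system already knows which metrics the semantic layer defines. The `Route` levels mirror the spectrum described in this article; the phrase list and the `SEMANTIC_METRICS` set are hypothetical placeholders.

```python
from enum import Enum
from typing import Optional


class Route(Enum):
    METADATA = "catalog metadata lookup"   # Level 1
    SEMANTIC = "semantic layer query"      # Level 2
    TEXT_TO_SQL = "generated SQL"          # Level 3


# Hypothetical metrics already defined in the semantic layer.
SEMANTIC_METRICS = {"revenue", "churn_rate", "active_users"}
# Hypothetical phrases that signal a question about data assets themselves.
METADATA_PHRASES = ("which table", "which dataset", "who owns", "where is")


def route_question(question: str, metric: Optional[str]) -> Route:
    """Pick the least complex path that can answer the question."""
    text = question.lower()
    if any(phrase in text for phrase in METADATA_PHRASES):
        return Route.METADATA
    if metric in SEMANTIC_METRICS:
        return Route.SEMANTIC
    # Nothing pre-defined fits, so fall back to dynamic SQL generation.
    return Route.TEXT_TO_SQL


print(route_question("Show me Q4 revenue by region", "revenue"))  # Route.SEMANTIC
```

Preferring the simplest route that fits keeps governed metrics on the semantic layer and reserves generated SQL for genuinely novel questions.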

Result synthesis presents answers conversationally with visualizations. Instead of raw query output, you receive formatted responses: “Enterprise customer churn increased from 12% to 18% between 2023 and 2024 cohorts,” accompanied by trend charts and breakdowns.
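Result synthesis often comes down to turning query output into a sentence. A minimal template-based sketch, assuming the query has already returned both churn rates as fractions; `describe_change` is a hypothetical helper, and real systems usually let an LLM phrase the summary.

```python
def describe_change(metric_label: str, before: float, after: float,
                    before_label: str, after_label: str) -> str:
    """Turn two query results (as fractions) into a one-sentence summary."""
    if after == before:
        return (f"{metric_label} held steady at {before:.0%} "
                f"between {before_label} and {after_label}.")
    direction = "increased" if after > before else "decreased"
    return (f"{metric_label} {direction} from {before:.0%} to {after:.0%} "
            f"between {before_label} and {after_label}.")


print(describe_change("Enterprise customer churn", 0.12, 0.18, "2023", "2024 cohorts"))
# Enterprise customer churn increased from 12% to 18% between 2023 and 2024 cohorts.
```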

Feedback loops improve accuracy over time. When users refine questions, correct interpretations, or validate results, AI-based conversational analytics systems learn which mappings between natural language and technical queries produce reliable answers for your specific business context.

This is fundamentally different from traditional BI, where users must know which dashboard contains relevant data, understand filter hierarchies, and interpret visualizations created by someone else weeks or months ago. Conversational analytics inverts the model—data comes to users in response to their questions, rather than users hunting for pre-built answers.

| Dimension | Traditional BI | Conversational Analytics |
|---|---|---|
| Interface | Dashboard navigation, SQL | Natural language questions |
| Flexibility | Limited to pre-built views | Handles unanticipated questions |
| User requirement | Technical literacy | Business context only |
| Time to answer | Minutes to days (if dashboard exists) | Seconds |
| Data freshness | Depends on refresh schedule | Real-time query capability |

The accuracy gap: Why semantic layers alone aren’t enough

Semantic layers prevent many LLM errors. In fact, Google reports a two-thirds error reduction when grounding Gemini in LookML models rather than raw database schemas. But production deployments reveal three additional requirements beyond semantic layers:

1. Business glossary disambiguation

Semantic layers define how to calculate a metric; business glossaries define which definition to use when someone asks a question. Without glossaries, semantic layers can’t resolve ambiguity, and the AI generates syntactically perfect SQL that calculates the wrong revenue definition for the context.

2. Data quality and freshness signals

Semantic layers provide calculation logic, but even a correct calculation produces unreliable results when the underlying data is stale or incomplete. Data quality monitoring catches these problems, and active metadata surfaces warnings about outdated information so the system avoids answers generated with false confidence.

3. Complete lineage for explainability

Semantic layers show metric definitions and how they’re calculated. Lineage goes deeper, tracing calculations back to source systems, identifying potential data quality issues, and helping users understand exactly how raw events became aggregated metrics.
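Lineage-based explainability boils down to walking a dependency graph from a metric back to its sources. A minimal breadth-first sketch, assuming lineage is available as an adjacency map; the asset names in `LINEAGE` are hypothetical.

```python
from collections import deque
from typing import Dict, List

# Hypothetical lineage: each asset maps to the assets it is built from.
LINEAGE: Dict[str, List[str]] = {
    "metric.enterprise_churn_rate": ["mart.customer_churn"],
    "mart.customer_churn": ["staging.subscriptions", "staging.accounts"],
    "staging.subscriptions": ["source.billing_events"],
    "staging.accounts": ["source.crm_accounts"],
}


def source_systems(asset: str) -> List[str]:
    """Walk lineage breadth-first and return the raw sources (assets with no parents)."""
    seen = {asset}
    queue = deque([asset])
    sources: List[str] = []
    while queue:
        node = queue.popleft()
        parents = LINEAGE.get(node, [])
        if not parents and node != asset:
            sources.append(node)
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return sources


print(source_systems("metric.enterprise_churn_rate"))
# ['source.billing_events', 'source.crm_accounts']
```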

The convergence


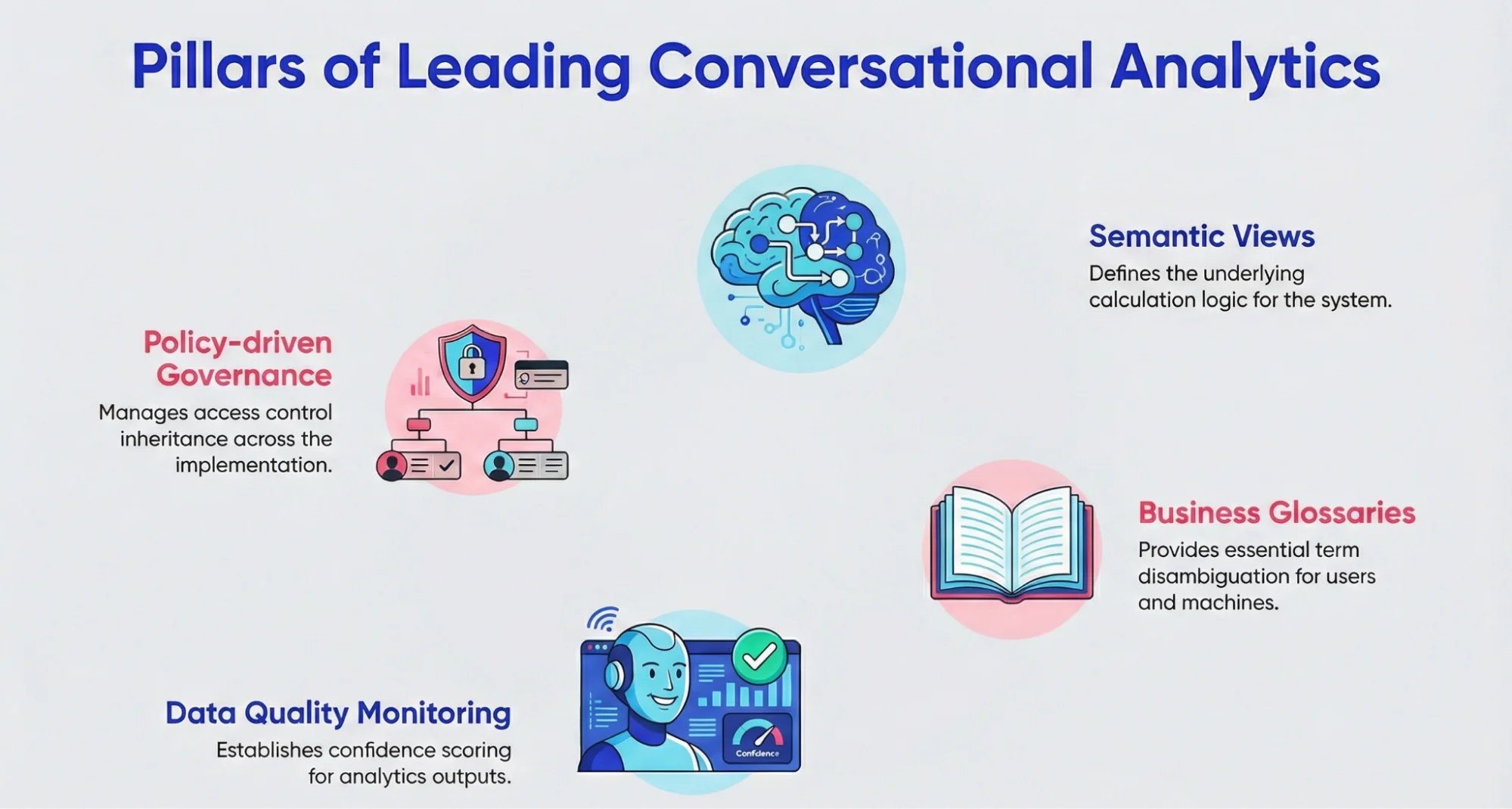

Pillars of leading conversational analytics. Image by Atlan.

Leading implementations combine all four layers: semantic layers, business glossaries, data quality signals, and lineage. This convergence explains why some conversational analytics pilots succeed while others struggle. Semantic layers alone get you partway there by preventing many errors. But production deployments serving thousands of users across diverse business contexts require the full context infrastructure stack.

“Definitions have to sit at the crossroads of all of this in the semantic layer so that contextual meaning can be understood by everyone who’s calling it from different tools.” - Joe DosSantos, VP of Enterprise Data and Analytics at Workday, during Atlan Re:Govern

To make conversational analytics a reality, Workday:

- Started in domains with high trust requirements, like finance

- Piloted with deep, narrow, high-value query sets

- Measured accuracy explicitly

- Treated conversational analytics as an interface, not a separate stack


The conversational analytics spectrum: From metadata to SQL

Not all conversational analytics implementations are created equal. Understanding the spectrum helps you set realistic expectations, choose appropriate platforms, and sequence your implementation properly.

| Level | What It Does | Requirements | Example Platforms | Accuracy Threshold | Time to Value |
|---|---|---|---|---|---|
| 1: Conversational metadata and search | Answers questions about data assets | Data catalog with search capabilities and indexed data, basic NLP for query interpretation | Alation’s search interface, Atlan’s AI-powered discovery, Collibra DGC metadata retrieval | 70-80% for discovery use cases | Days to weeks, depending on catalog metadata completeness |
| 2: Semantic layer queries | Answers analytical questions using pre-defined metrics | Mature semantic layer (LookML, dbt Semantic Layer, Cube) with comprehensive metric definitions, business logic encoded as reusable models, clearly defined relationships between dimensions | Looker + Gemini, ThoughtSpot Sage, Power BI Copilot, Metabase with semantic models | 85-95% for production deployment | Weeks to months, depending on semantic layer maturity |
| 3: Dynamic text-to-SQL | Generates novel SQL queries for questions your semantic layer doesn’t anticipate | Semantic layers for calculation logic, business glossaries for term disambiguation, data quality monitoring for confidence scoring, lineage for explainability, comprehensive governance for security | Databricks Genie with Unity Catalog, Snowflake Cortex Analyst, custom agents built with Claude or GPT-4 grounded in your metadata | 95%+ required | Months |

The conversational analytics distinction

Most vendors market “conversational analytics” but deliver Level 1 (metadata search with natural language). Looker + Gemini delivers Level 2 (semantic layer queries with strong governance). Level 3 (dynamic SQL generation) remains rare in production because context infrastructure requirements are steep.

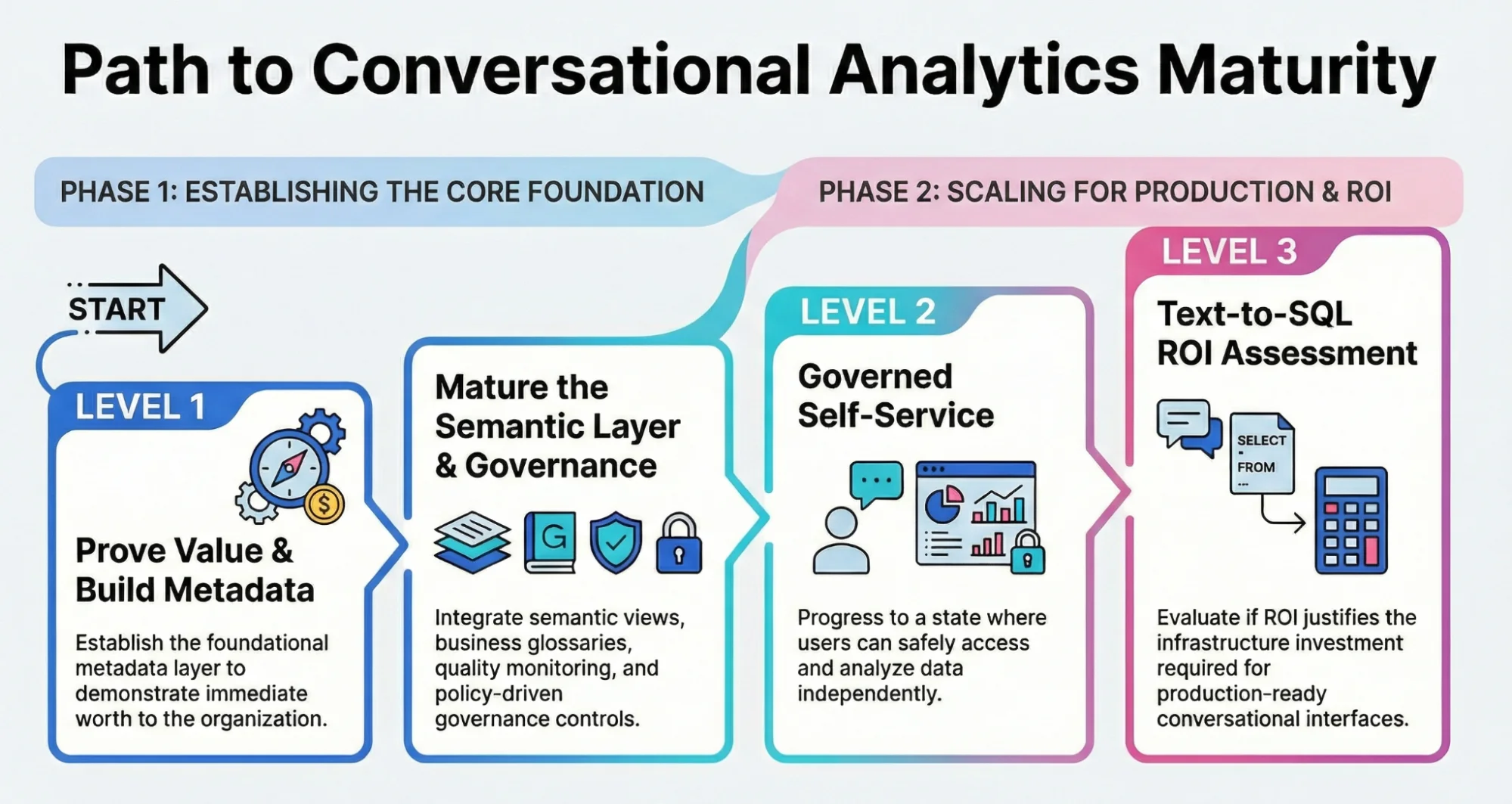

Path to conversational analytics maturity. Image by Atlan.

Understanding where you are on this spectrum—and where you need to be—prevents the common mistake of attempting Level 3 deployments without Level 2 infrastructure maturity.

What is talk to data and why does it matter?

“Talk to data” represents the most ambitious application of conversational analytics: translating natural-language questions directly into SQL queries executed against live data warehouses like Snowflake, Databricks, or BigQuery.

Instead of navigating pre-built dashboards created weeks ago by BI teams, users ask questions and receive answers generated from source data in real time. “Show me which product features correlate with customer expansion in the last 90 days” doesn’t retrieve a cached report—it generates SQL that joins product telemetry with CRM data, calculates correlations, and surfaces insights that didn’t exist until you asked.


Why it matters

Eliminates dashboard dependency: Traditional BI requires someone to anticipate every question and build a dashboard. Talk to data handles unanticipated questions. Product managers exploring why a feature isn’t getting adopted can ask follow-up questions dynamically rather than waiting days for custom reports.

Enables data exploration and democratization: Data democratization promises have historically failed because “self-service” still meant learning complex BI tools. Talk to data makes it possible, as long as the infrastructure beneath it is solid.

Represents higher ROI: When implemented successfully, talk to data transforms how organizations operate. Companies like Workday can iterate in real time instead of waiting for metrics and dashboards to update, and decision velocity increases dramatically.

What makes talk to data different

Conversational analytics and talk to data are similar in many ways, but talk to data differs in a few important ones:

Direct warehouse queries: Instead of retrieving static metadata or querying pre-computed aggregations, talk to data generates and executes SQL against production tables containing billions of rows.

Highest accuracy requirements: Wrong SQL doesn’t just frustrate users; it leads to wrong business decisions. A pricing team optimizing based on incorrect churn analysis could destroy millions in revenue. Accuracy must approach 100%, a standard talk to data can meet only when it is grounded in solid context infrastructure.

Most complex disambiguation: Data warehouses contain hundreds of tables and thousands of columns, many with ambiguous names. Without business glossaries documenting these distinctions, AI guesses wrong. Talk to data needs that context to connect the right dots.

Greatest governance needs: Natural language makes it trivially easy to ask for data you shouldn’t access. “Show me employee salaries by department” sounds harmless, but could violate privacy policies. Access controls, column masking, and audit trails become critical.

The accuracy challenge

Without proper context infrastructure, text-to-SQL systems achieve low baseline accuracy on complex enterprise queries with realistic ambiguity, which is insufficient for business-critical decisions.

The gap between demos or pilots and production determines success. Unavoidable factors – 20 years of M&A creating duplicate tables, inconsistent naming conventions, implicit business logic encoded in tribal knowledge – can throw a wrench in things.

But the upside is huge. Grounding AI in business glossaries, semantic layers, and active metadata achieves 3x accuracy improvements—the difference between unusable and production-ready.

Industry validation

Major platforms now offer talk to data capabilities, including Snowflake Cortex Intelligence, Databricks AI/BI with Genie, and custom agents built with Claude or GPT-4.

The technology exists. Now, context infrastructure quality has become the differentiator separating pilots from production deployments.

Why context determines conversational analytics accuracy

The disambiguation problem AI faces with every question underscores the importance of context infrastructure.

The context problem

One of the biggest weaknesses of current AI systems is that they don’t know when – or even how – to say “I don’t know.” So when someone asks “show me revenue by product,” AI must resolve multiple layers of ambiguity:

Which “revenue” definition? Your organization likely has five or more: bookings revenue (when contracts are signed), recognized revenue (GAAP accounting), cash-based revenue (when payment arrives), recurring revenue (ARR/MRR), and consumption revenue (usage-based). Each team uses a different definition. Without context, AI picks one randomly—or worse, mixes them inconsistently.

Which “product” level? Do you mean individual SKUs, product families, product lines, or business units? Your data warehouse has tables at all these grain levels. The question doesn’t specify, but the answer differs dramatically depending on aggregation level.

Which timeframe? Current quarter? Trailing twelve months? Year-to-date? Fiscal year versus calendar year? The question says “show me revenue” but doesn’t specify when—AI must infer from context or make assumptions.

Which filters apply? Should this exclude internal test accounts? Only include completed deals, or also pipeline? Specific regions, or global? Every organization has implicit filters that “everyone knows” apply to revenue analysis—except AI doesn’t know unless you’ve documented these business rules.

Without context infrastructure, AI guesses. With context, AI knows.
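As a sketch of what “with context” means for the revenue question, assume the glossary records which revenue definition each team uses, plus the defaults everyone assumes. Everything here (`REVENUE_BY_TEAM`, the default constants, the column names, `resolve_revenue_by_product`) is hypothetical; the point is that an undocumented context produces a clarifying question rather than a guess.

```python
from typing import Dict, NamedTuple, Tuple


class Resolution(NamedTuple):
    revenue_column: str
    product_grain: str
    timeframe: str
    filters: Tuple[str, ...]


# Hypothetical glossary entries: which "revenue" each team means.
REVENUE_BY_TEAM: Dict[str, str] = {
    "finance": "recognized_revenue",   # GAAP recognized revenue
    "sales": "bookings_amount",        # bookings at contract signature
    "customer_success": "arr",         # annual recurring revenue
}

# Hypothetical documented defaults that "everyone knows" but AI cannot infer.
DEFAULT_GRAIN = "product_family"
DEFAULT_TIMEFRAME = "current_fiscal_quarter"
DEFAULT_FILTERS = ("is_test_account = FALSE", "deal_status = 'closed_won'")


def resolve_revenue_by_product(team: str) -> Resolution:
    """Make every implicit choice explicit, or refuse to guess."""
    column = REVENUE_BY_TEAM.get(team)
    if column is None:
        # No documented definition: ask a clarifying question instead of guessing.
        raise LookupError(f"Which revenue definition does '{team}' mean? None is documented.")
    return Resolution(column, DEFAULT_GRAIN, DEFAULT_TIMEFRAME, DEFAULT_FILTERS)


print(resolve_revenue_by_product("finance").revenue_column)  # recognized_revenue
```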

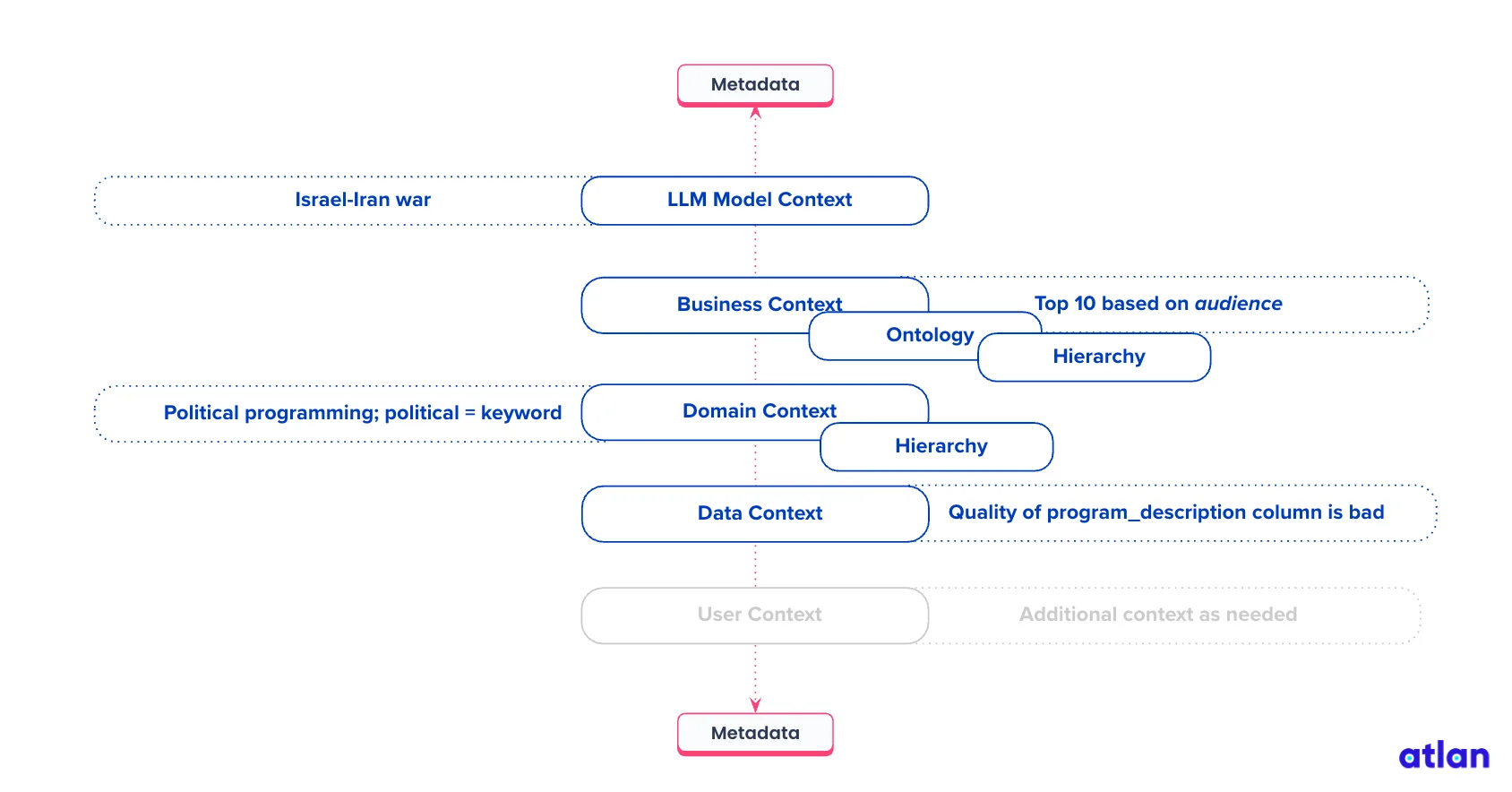

The context infrastructure

Context infrastructure is the invisible layer that AI needs to function accurately and reliably. It provides a single, evolving source of truth that captures how the company thinks and operates, with four key components:

1. Context extraction

In most organizations, the raw material for context already exists. But it’s fragmented across Slack messages, PR reviews, support tickets, and more. The patterns are there — they just haven’t been codified.

The context layer must connect across a fragmented landscape and intelligently mine knowledge bases to create a bootstrapped version of your organization’s collective context. This is the foundation of the Enterprise Context Layer: turning the scattered traces of human judgment into a structured, evolving system of shared understanding.

2. Context products

Just as data products turned messy datasets into reliable, consumable assets that teams could depend on, context products are structured representations of data plus the shared understanding that gives that data meaning.

Context products combine facts, relationships, rules, and definitions into a single, verified unit of context that AI systems, and humans, can trust. They tell AI not just what is true, but how to think about it. Combining data with domain-specific meaning (what we call context layering) helps agents think and act intelligently.

In practice, it looks something like this:

The context infrastructure. Image by Atlan.

Every useful AI system will eventually depend on context products. They’ll become the modular units of organizational intelligence — the blocks that power decision-making at scale.
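One way to picture a context product is as a record that bundles facts, relationships, rules, and definitions with the owner who verified them. The `ContextProduct` class below is a hypothetical shape, not a standard schema, and the trust rule in `is_trusted` is an illustrative assumption.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ContextProduct:
    """Hypothetical shape of a context product: data plus the meaning around it."""
    name: str
    facts: List[str] = field(default_factory=list)          # what is true
    relationships: List[str] = field(default_factory=list)  # how assets connect
    rules: List[str] = field(default_factory=list)          # business logic to apply
    definitions: List[str] = field(default_factory=list)    # agreed meanings of terms
    verified_by: str = ""                                   # owner who certified it

    def is_trusted(self) -> bool:
        # Only certified products that define their terms count as trusted here.
        return bool(self.verified_by) and bool(self.definitions)

    def to_prompt(self) -> str:
        """Render the product as plain text for inclusion in an LLM prompt."""
        lines = [f"Context product: {self.name}"]
        for title, items in (("Facts", self.facts), ("Relationships", self.relationships),
                             ("Rules", self.rules), ("Definitions", self.definitions)):
            lines.extend(f"{title}: {item}" for item in items)
        return "\n".join(lines)


revenue = ContextProduct(
    name="Revenue (Finance)",
    facts=["fact_revenue is loaded nightly"],
    rules=["Exclude internal test accounts"],
    definitions=["Revenue means GAAP recognized revenue"],
    verified_by="finance-data-team",
)
print(revenue.is_trusted())  # True
print(revenue.to_prompt())
```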

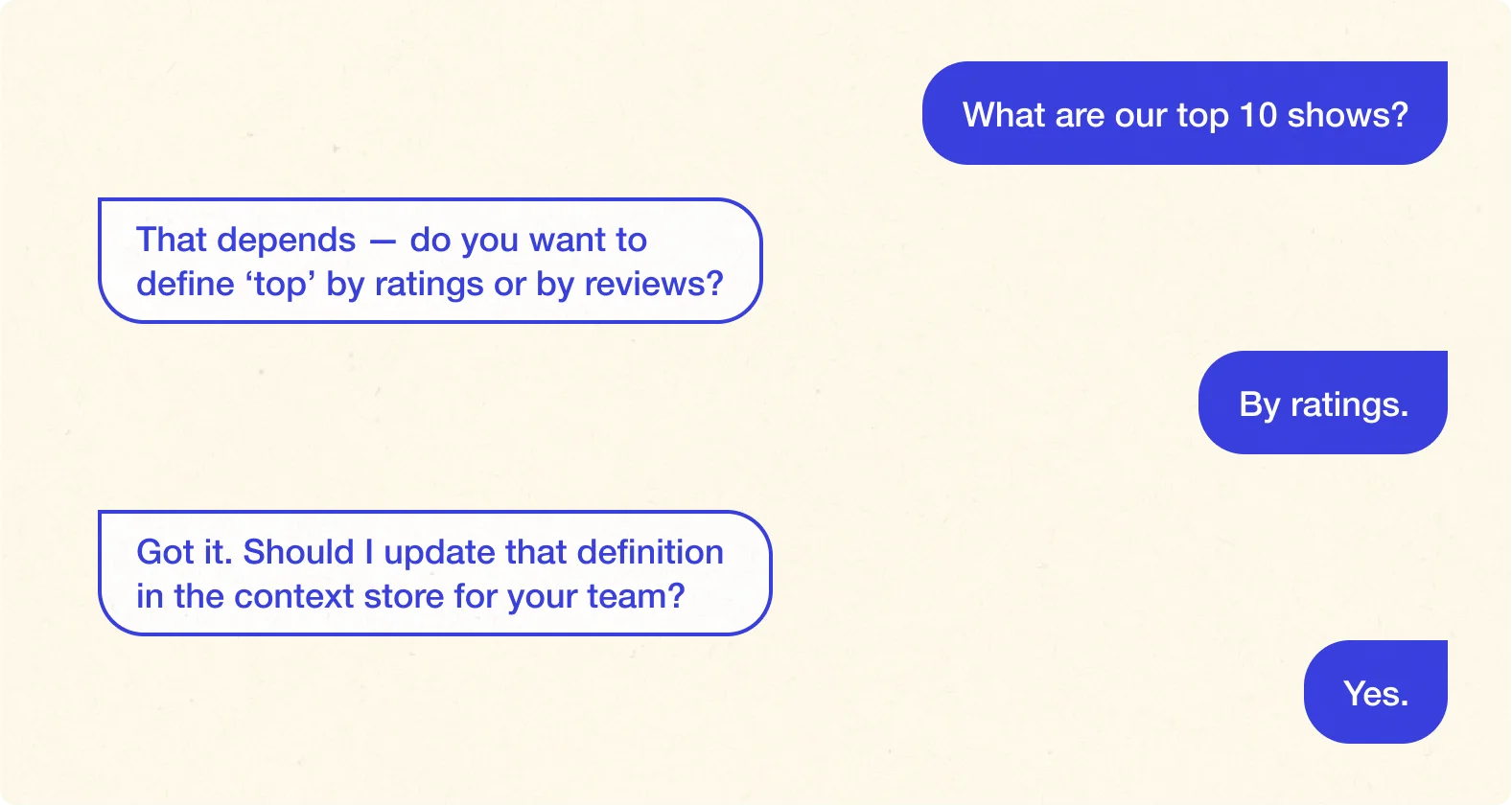

3. Context feedback loops

Context isn’t something you “set up” once and move on. It changes as your company changes, as new products, new people, and new priorities emerge.

Context feedback loops ensure that the context layer is continuously improving. Every interaction between a human and an AI agent becomes a chance to refine your organization’s shared understanding. The system answers, the human adjusts, and that correction becomes part of the institutional memory.

Imagine an exchange between a human user and an AI agent at a media company:

Context feedback loops. Image by Atlan.

Over time, this simple feedback loop — question, clarification, confirmation — builds a more precise and human-aligned system.
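A feedback loop like this can be sketched as a store that promotes a clarified meaning into shared context only after repeated confirmation. `FeedbackStore`, the `min_confirmations` threshold, and the media-company term in the usage lines are hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Optional


class FeedbackStore:
    """Hypothetical store that promotes confirmed clarifications into shared meaning."""

    def __init__(self, min_confirmations: int = 2) -> None:
        self.min_confirmations = min_confirmations
        self._votes: DefaultDict[str, Counter] = defaultdict(Counter)

    def record(self, term: str, confirmed_meaning: str) -> None:
        """Log one question, clarification, and confirmation cycle."""
        self._votes[term.lower()][confirmed_meaning] += 1

    def learned_meaning(self, term: str) -> Optional[str]:
        """Return the most-confirmed meaning once it clears the threshold."""
        votes = self._votes.get(term.lower())
        if not votes:
            return None
        meaning, count = votes.most_common(1)[0]
        return meaning if count >= self.min_confirmations else None


store = FeedbackStore()
store.record("engagement", "minutes watched per subscriber")
print(store.learned_meaning("engagement"))  # None: only one confirmation so far
store.record("Engagement", "minutes watched per subscriber")
print(store.learned_meaning("engagement"))  # minutes watched per subscriber
```

Requiring more than one confirmation is a design choice that keeps a single mistaken correction from rewriting institutional memory.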

4. Context store

The context store is made up of where context lives (in a form accessible to humans and machines), and how it’s accessed (humans review and refine; machines pull it for instant decisions).

That means the architecture needs to do two things well: store context in multiple specialized ways, and unify how it’s retrieved. The context store might therefore include a:

- Graph store that captures relationships

- Vector store that holds unstructured knowledge

- Rules engine that encodes business logic

- Time-series store that keeps track of how things change over time
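The “store in specialized ways, retrieve in one place” idea can be sketched as a facade. In this hypothetical `ContextStore`, plain dictionaries with exact-key lookups stand in for a graph database, a vector index, a rules engine, and a time-series store; a real vector store would use similarity search rather than exact keys.

```python
from typing import Dict, List, Tuple


class ContextStore:
    """Hypothetical facade that unifies retrieval across specialized stores.

    Plain dicts with exact-key lookups stand in for a graph database,
    a vector index, a rules engine, and a time-series store.
    """

    def __init__(self) -> None:
        self.graph: Dict[str, List[str]] = {}                # relationships
        self.knowledge: Dict[str, List[str]] = {}            # unstructured notes
        self.rules: Dict[str, List[str]] = {}                # business logic
        self.history: Dict[str, List[Tuple[str, str]]] = {}  # (ISO date, change)

    def retrieve(self, term: str) -> Dict[str, list]:
        """Return everything known about a term from every store in one call."""
        return {
            "related": self.graph.get(term, []),
            "notes": self.knowledge.get(term, []),
            "rules": self.rules.get(term, []),
            # ISO dates sort chronologically as strings.
            "changes": sorted(self.history.get(term, [])),
        }


store = ContextStore()
store.graph["revenue"] = ["fact_revenue", "dim_product"]
store.rules["revenue"] = ["Exclude internal test accounts"]
store.history["revenue"] = [("2024-07-01", "Switched to GAAP definition"),
                            ("2024-01-15", "Term created")]
print(store.retrieve("revenue")["changes"][0])  # ('2024-01-15', 'Term created')
```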

The accuracy multiplier

Context infrastructure explains why some organizations successfully deploy talk to data while others abandon pilots after months of frustration. Both groups use similar AI models and similar data warehouses; the differentiator is metadata richness and governance maturity.

Organizations implementing conversational analytics with rich context infrastructure have demonstrated 3x accuracy gains (p < 2e-10 statistical significance) when grounding text-to-SQL in business glossaries, semantic layers, and data quality metadata versus schema-only approaches.

This isn’t incremental improvement—it’s the difference between unusable and production-ready. A system that’s 60% accurate on complex queries can’t be deployed for business-critical decisions. The same system at 95% accuracy becomes reliable enough to reduce BI team workload and accelerate decision-making across the organization.

Infrastructure requirements for conversational analytics at scale

Successful conversational analytics deployments require three integrated layers working as a unified system.

| Layer Component | What It Is | What It Does |
|---|---|---|
| Foundation Layer | | |
| Data Warehouse or Lakehouse | Snowflake, Databricks, BigQuery, or similar platforms with compute resources | Stores data and executes queries. |
| Metadata Control Plane/Metadata Lakehouse | Centralized system that unifies technical and business metadata across tools (warehouse, BI, ETL, documentation) | Powers discovery, governance, and AI context by serving as a single source of truth for data inventory, meaning, and rules. |
| Business Glossary | Comprehensive definitions for critical business terms and metrics | Prevents semantic ambiguity by providing deep context to questions and answers. |
| Governed Data Products and Policies | Standardized data assets with documented quality, reliability, and compliance | Provides clear policies about who can access what, how data should be used, and what governance constraints apply. |
| Context Layer | | |
| Semantic Layer/Semantic Views | Governed metric definitions and business logic that map business terms to physical tables and calculations for BI tools and AI agents | Encodes complex calculation logic centrally, so every analysis uses consistent definitions. |
| Data Quality Monitoring | Real-time tracking of freshness, completeness, and accuracy | Grounds AI responses in reality by detecting anomalies or deviations, helping to avoid wrong answers. |
| Lineage Tracking | End-to-end data flow visibility showing how data moves from source systems through transformations to final consumption | Explains how answers were generated when users have questions. |
| Access Governance | Row-level security, column masking, and attribute-based access controls that enforce who can see what | Ensures that requests for restricted data, which natural language makes easy to ask for, are granted or denied appropriately, with audit trails. |
| AI/Application Layer | | |
| Conversational Analytics Software | Platforms like Snowflake Cortex Intelligence or Databricks Genie, deployed across multiple surfaces (e.g., Slack, Microsoft Teams, BI tools, internal portals) | Provides the natural-language interface with which users interact. |
| Context API/MCP Server | API that understands business context, validates data quality, and respects governance policies | Delivers metadata, glossary definitions, and quality signals to AI agents in real time when SQL is generated. |
| Feedback Systems | Evaluation sets that test accuracy as context evolves, used to tune context delivery and measure improvements | Captures user corrections and refinements for continuous improvement. |
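To illustrate the Context API/MCP Server row, here is a sketch of the payload such a service might hand an agent before SQL generation. The dictionaries, table name, roles, and `build_context` are hypothetical, and the JSON shape does not follow the MCP wire format or any vendor's API.

```python
import json
from typing import Dict, List

# Hypothetical metadata a context API might draw on; in practice these would
# come from the glossary, data quality monitor, and warehouse policies.
GLOSSARY: Dict[str, str] = {
    "revenue": "GAAP recognized revenue (fact_revenue.recognized_amount)",
}
QUALITY: Dict[str, Dict[str, object]] = {
    "fact_revenue": {"fresh": True, "completeness": 0.995},
}
ALLOWED_COLUMNS: Dict[str, Dict[str, List[str]]] = {
    "fact_revenue": {"analyst": ["region", "recognized_amount"], "viewer": ["region"]},
}


def build_context(term: str, table: str, role: str) -> str:
    """Bundle definition, quality signal, and permitted columns for an agent."""
    payload = {
        "term": term,
        "definition": GLOSSARY.get(term),  # None signals "ask, don't guess"
        # Unknown tables are reported as not fresh rather than assumed healthy.
        "quality": QUALITY.get(table, {"fresh": False}),
        "allowed_columns": ALLOWED_COLUMNS.get(table, {}).get(role, []),
    }
    return json.dumps(payload, indent=2)


print(build_context("revenue", "fact_revenue", "viewer"))
```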

Integration architecture: Why disconnected systems fail

These layers must integrate seamlessly. Conversational interfaces query semantic layers, which reference business glossaries, which rely on active metadata, all while enforcing access governance.

Reasoning (LLMs/agents) must call into this context stack via APIs. When AI generates SQL without consulting business glossaries, it makes semantic errors. When it ignores data quality signals, it generates confident wrong answers. When it bypasses governance, it creates compliance risks.

Disconnected systems create accuracy gaps. Organizations that implement Databricks Genie without Unity Catalog metadata, or Snowflake Cortex Intelligence without semantic models, find that accuracy plateaus at levels too low for production deployment.

Maturity model progression

When assessing where you fall in conversational analytics maturity, consider these three levels:

Level 1: Conversational metadata and search with data catalog integration. Minimal infrastructure—just metadata indexing and search.

Level 2: Conversational analytics with semantic layer queries. Requires mature semantic models, governance policies, and integrated BI platforms.

Level 3: Talk to data / AI analyst with full context infrastructure. Demands comprehensive metadata maturity—business glossaries, quality monitoring, lineage, and robust governance across your entire data estate.

Most organizations spend 3-6 months maturing from Level 1 to Level 2, and 6-12 months progressing from Level 2 to Level 3. Attempting to skip levels leads to failed pilots and abandoned investments.

Where you’ll see conversational analytics: The platform landscape

The conversational analytics market has evolved rapidly, with platforms falling into distinct categories based on their approach and integration points.

BI-native semantic layer platforms

| Platform | Strength | Requirement | Architecture |
|---|---|---|---|
| Looker + Gemini | Gemini models ground themselves in LookML semantic models, interpreting natural-language questions and mapping them to governed metric definitions. Google reports a two-thirds error reduction compared to ungrounded LLMs querying raw schemas. | Looker Premium licensing and mature LookML models. | Queries the semantic layer only (Level 2 on the maturity spectrum). |
| Microsoft Power BI Copilot | Integrates deeply with the Power BI ecosystem, leveraging existing data models and relationships. | Power BI Premium capacity and properly configured data models. | Queries Power BI’s semantic models (Level 2), making it effective for organizations already invested in Microsoft’s analytics stack. |
| ThoughtSpot | Built around a search-first user experience emphasizing self-service exploration. | ThoughtSpot semantic models defining relationships and calculations. | Queries pre-defined relationships (Level 2), excelling when semantic models are comprehensive. |

Warehouse-Native Text-to-SQL

| Platform | Strength | Requirement | Architecture |
|---|---|---|---|
| Databricks Genie | Integrates with Unity Catalog to understand data assets, governance policies, and semantic definitions. Combines semantic layer queries with dynamic SQL generation capability. | Unity Catalog implementation and data quality monitoring for production reliability. | Represents a Level 2-3 hybrid: it can query semantic definitions but also generate novel SQL when needed. |
| Snowflake Cortex Analyst | Leverages Snowflake’s native integration for governance, semantic models, and metadata tags. Generates SQL with built-in guardrails based on semantic model definitions. | Properly configured semantic models and governance tags. | Operates as a Level 2-3 hybrid, balancing governed semantic queries with dynamic SQL generation when appropriate. |

Custom Agent Development

| Platform | Strength | Risk | Warning |
|---|---|---|---|
| General LLMs with data connectors (Claude, ChatGPT, Gemini) | Offer maximum flexibility for rapid prototyping and custom implementations. Organizations can build conversational analytics tailored precisely to their workflows and terminology. | No built-in governance or semantic grounding. These agents require explicit integration with context infrastructure to avoid semantic errors and governance bypasses. | Prototypes work impressively on clean demo data, but production deployments require the full context infrastructure stack. |

Metadata-Powered Foundations

Atlan, Alation, and Collibra provide the business glossaries, data quality alerts and monitoring, and lineage that ground conversational systems. These platforms don’t offer conversational interfaces themselves—they provide the context infrastructure layer that makes conversational analytics accurate.

When Databricks Genie or Snowflake Cortex Intelligence generates SQL, it queries these metadata platforms for business definitions, quality signals, and governance policies. The metadata platform ensures AI interprets “revenue” correctly, validates data freshness, and respects access controls.

The key insight

Every platform above relies on metadata, semantic models, and governance. The differentiator isn’t the conversational interface—it’s the quality of context infrastructure underneath.

Strong semantic layers enable Level 2 implementations with 85-95% accuracy on governed metrics. Full context stacks (business glossaries + data quality monitoring + lineage + comprehensive governance) enable Level 3 implementations with 95%+ accuracy on dynamic SQL generation.

Choose platforms that integrate deeply with your existing metadata infrastructure rather than operating in isolation. Conversational analytics isn’t a standalone product—it’s the visible layer atop a mature data platform.

Implementing conversational analytics: Challenges and solutions

Real-world deployments surface predictable challenges. Organizations that anticipate these obstacles and implement solutions proactively achieve faster time-to-value and higher adoption.

Challenge 1: Standardized metric definitions

The issue: Different teams calculate the same metrics differently. Finance uses GAAP revenue. Sales tracks bookings. Customer success monitors ARR. Product teams measure active usage. Each definition serves a valid purpose, but conversational analytics amplifies the inconsistency: 500 users can get 500 different “revenue” numbers because the AI doesn’t know which definition to use.

Business impact: Executives make conflicting decisions based on different numbers claiming to represent the same metric. Trust in data erodes rapidly.

Solution: Establish canonical definitions in your business glossary before enabling talk to data. Document which definition applies in which contexts. Encode these definitions in your semantic layer so AI uses consistent calculations.

Sequencing: Align on metric definitions first, then enable conversational analytics. Attempting the reverse—deploying conversational tools then trying to standardize definitions—creates technical debt that’s painful to unwind.

Challenge 2: Data quality and trust

The issue: Stale or incomplete data undermines trust in AI-generated answers. A single confident wrong answer can destroy months of adoption efforts. Users remember the one time conversational analytics gave them outdated churn numbers more vividly than the hundred times it worked correctly.

Business impact: Skepticism spreads. “I can’t trust these AI answers” becomes organizational consensus. Adoption stalls permanently.

Solution: Deploy active metadata monitoring that surfaces quality signals proactively. Systems should say “customer activity data incomplete, churn calculation unavailable” rather than generating wrong answers with false confidence.

Best practice: Instrument data quality checks throughout your pipeline. Surface freshness metadata, completeness metrics, and anomaly detection directly in conversational responses. Organizations like GitLab integrate quality scores into every AI-generated answer, building trust through transparency.
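A minimal sketch of a quality gate that withholds an answer and explains why, assuming the pipeline exposes a last-load timestamp and a completeness ratio for each dataset. The thresholds and `quality_gate` itself are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def quality_gate(last_loaded: datetime, completeness: float,
                 now: Optional[datetime] = None,
                 max_age: timedelta = timedelta(hours=24),
                 min_completeness: float = 0.98) -> Optional[str]:
    """Return a warning to show instead of an answer, or None if the data looks usable.

    Timestamps must be timezone-aware and in UTC. Both thresholds are hypothetical defaults.
    """
    now = now or datetime.now(timezone.utc)
    if now - last_loaded > max_age:
        return (f"Data last loaded {last_loaded:%Y-%m-%d %H:%M} UTC; "
                "answer withheld because it may be stale.")
    if completeness < min_completeness:
        return f"Data is only {completeness:.0%} complete; calculation unavailable."
    return None


now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
print(quality_gate(datetime(2025, 1, 2, 6, 0, tzinfo=timezone.utc), 0.91, now=now))
# Data is only 91% complete; calculation unavailable.
```

When the gate returns `None`, the answer can still carry the freshness and completeness values so users see the quality signal alongside the result.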

Challenge 3: Governance and security

The issue: Natural language makes it trivially easy to ask for data you shouldn’t access. “Show me employee salaries by department” is straightforward, but potentially violates privacy policies, compliance requirements, and internal security standards.

Business impact: Privacy breaches, compliance violations, security incidents. Conversational analytics without governance creates liability.

Solution: Access policies must flow automatically from your data warehouse through conversational interfaces. Row-level security, column masking, and attribute-based access controls that work in Snowflake or Databricks must also apply when users ask natural-language questions.

Implementation: Configure governance at the data layer, not the application layer. When someone who lacks permission requests salary data, the system responds “You don’t have permission to access salary data,” and audit logging captures the attempt. Don’t rely on conversational analytics tools to implement security; enforce it at the warehouse and let conversational tools inherit those policies.
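The inheritance pattern can be sketched in Python under loud assumptions: the hypothetical `WAREHOUSE_POLICIES` mapping stands in for grants, masking policies, or row access policies that would really be defined in Snowflake or Databricks, and the hypothetical `warehouse_allows` function stands in for asking the warehouse. The conversational layer defines no rules of its own. It checks the columns a generated query touches, refuses with a clear message, and writes each denial to an audit log through Python's standard `logging` module.

```python
import logging

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("audit")

# Hypothetical stand-in for policies that live in the warehouse itself.
# Maps a protected column to the roles allowed to read it.
WAREHOUSE_POLICIES: dict[str, set[str]] = {
    "hr.employees.salary": {"HR_ADMIN", "PAYROLL"},
}


def warehouse_allows(role: str, column: str) -> bool:
    """Simulate asking the warehouse whether a role may read a column.

    Columns without a policy are treated as readable by any role.
    """
    allowed_roles = WAREHOUSE_POLICIES.get(column)
    return allowed_roles is None or role in allowed_roles


def run_generated_query(user: str, role: str, question: str, columns: list[str]) -> str:
    """Check every column a generated query touches before executing it."""
    denied = [c for c in columns if not warehouse_allows(role, c)]
    if denied:
        # Record who asked what, and which columns were blocked, for compliance review.
        audit_log.warning(
            "DENIED user=%s role=%s question=%r columns=%s", user, role, question, denied
        )
        return f"You don't have permission to access {', '.join(denied)}"
    return "query executed"  # placeholder for the real warehouse call


if __name__ == "__main__":
    print(run_generated_query(
        "ana", "ANALYST", "Show me employee salaries by department",
        ["hr.employees.department", "hr.employees.salary"],
    ))
```

In a real deployment the warehouse would still enforce the policy when the query runs under the user's own role; a pre-check like this only turns a raw permission error into a readable answer plus an audit record.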

Challenge 4: Pilot-to-production gap

The issue: Conversational metadata search (Level 1) succeeds easily on almost any infrastructure. Talk to data (Level 3) requires dramatically different infrastructure maturity. Pilots that demonstrate Level 1 create unrealistic expectations about Level 3 readiness.

Business impact: Executives see impressive demos and approve budgets, then discover the infrastructure investment required for production-grade accuracy wasn’t included in the plan.

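Solution: Sequence implementation by level. Prove value with conversational metadata search (Level 1), mature semantic layers and business glossaries for governed metric queries (Level 2), then attempt talk to data (Level 3) with realistic accuracy expectations.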

Measurement: Track accuracy through ground truth test sets, not just user satisfaction scores. Curated question libraries help measure accuracy improvements as context infrastructure matures.
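A ground-truth harness does not need to be elaborate. The Python sketch below runs each curated question through the system under test and compares the result with a verified answer. `ask` is a hypothetical callable standing in for whichever conversational tool you are evaluating, and answers are compared as single numbers with `math.isclose`; production harnesses often compare whole result sets instead.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EvalCase:
    question: str
    expected: float  # verified ground-truth answer


def evaluate(
    ask: Callable[[str], float], cases: list[EvalCase], rel_tol: float = 1e-6
) -> tuple[float, list[str]]:
    """Return (accuracy, questions answered incorrectly) for a curated test set."""
    failures = []
    for case in cases:
        got: Optional[float]
        try:
            got = ask(case.question)
        except Exception:
            got = None  # an error or refusal counts as a wrong answer here
        if got is None or not math.isclose(got, case.expected, rel_tol=rel_tol):
            failures.append(case.question)
    accuracy = (len(cases) - len(failures)) / len(cases) if cases else 0.0
    return accuracy, failures


if __name__ == "__main__":
    cases = [
        EvalCase("Q4 revenue in EMEA", 1_250_000.0),
        EvalCase("Active customers last month", 8_420.0),
    ]
    # Hypothetical system under test: right on one question, wrong on the other.
    canned = {"Q4 revenue in EMEA": 1_250_000.0, "Active customers last month": 8_000.0}
    accuracy, failed = evaluate(canned.__getitem__, cases)
    print(f"accuracy={accuracy:.0%} failed={failed}")
```

Re-running the same set after each context improvement (new glossary terms, new semantic-layer metrics) yields an accuracy trend that is hard to get from satisfaction scores alone.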

Challenge 5: Change management

The issue: Users are often skeptical that natural language can replace their carefully crafted SQL queries, custom dashboards, and familiar workflows. Data analysts especially resist tools that might commoditize their expertise.

Business impact: Adoption stalls despite technical success. The system works, but nobody uses it.

Solution: Start with clear pain points—analyses that currently take days to get from BI teams. Demonstrate concrete value: “That question you emailed last Tuesday? Ask the system right now and get your answer in 30 seconds.”

Adoption pattern: When implemented well, regular data users grow from 15% to 68% of the workforce within the first year. But this requires focusing initial deployment on high-value use cases where conversational analytics dramatically outperforms the status quo, not trying to replace everything immediately.

Stakeholder strategy: Partner with BI teams rather than threatening them. Position conversational analytics as deflecting routine queries so analysts can focus on complex modeling, strategic initiatives, and high-impact work.

Benefits of conversational analytics for business

Understanding ROI helps justify infrastructure investments and set appropriate expectations for business value. Quantifying the business benefits of conversational analytics also makes it easier to secure sustained attention and budget for the program.

Benefit 1: Democratized data access

Sixty percent of executives report their teams lack the technical literacy for effective self-service analytics. Conversational analytics removes those barriers: users don’t need to understand SQL, know which tables contain relevant data, or navigate complex BI tool interfaces.

Natural language makes data accessible to non-technical users across functions. Marketing managers explore campaign performance without learning Looker. Sales leaders analyze pipeline dynamics without querying Salesforce. Product managers investigate feature adoption without writing SQL.

Measured impact: Organizations report regular data users growing from 15% of the workforce (analysts and data scientists) to 68% (employees across all functions) after successful conversational analytics deployment. Data-informed decision-making becomes organization-wide rather than concentrated in technical teams.

Benefit 2: Time-to-insight reduction

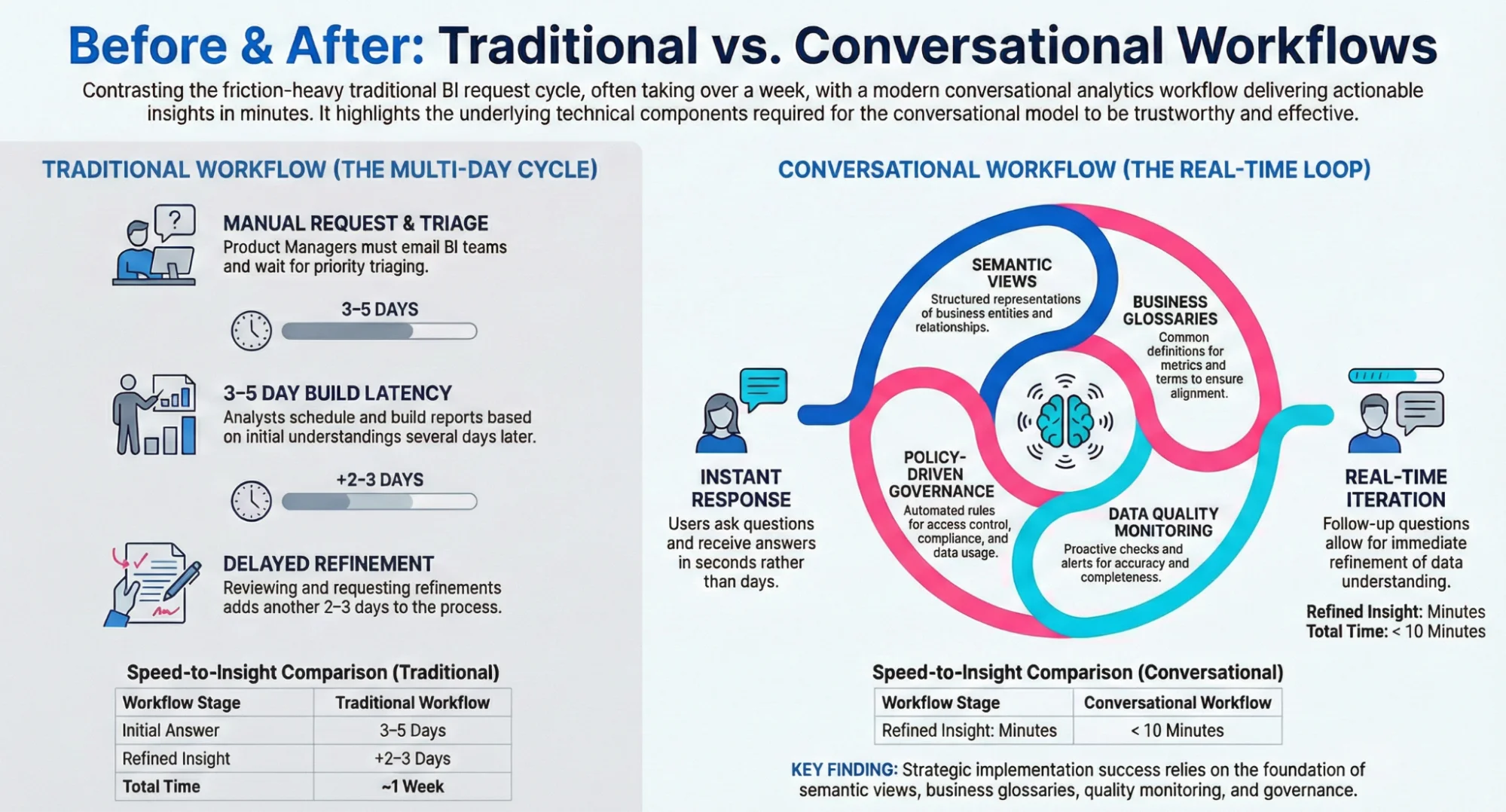

Benefits of conversational analytics for business. Image by Atlan.

Business impact: Decisions are based on real-time data, not week-old snapshots. This velocity advantage compounds—faster insights enable faster experiments, faster learning, faster optimization.

Measured impact: Time-to-insight for routine analytical questions drops from days to seconds. Complex analyses still benefit from analyst expertise, but most questions don’t require deep expertise—they require data access.

Benefit 3: BI team efficiency

Conversational analytics deflects simple queries to self-service, freeing analysts for complex work that requires their expertise. Instead of answering “what was revenue last quarter” over and over, analysts can build sophisticated models, investigate anomalies, and develop strategic insights.

At Mastercard, analysts flipped the ratio: they went from spending 80% of their time wrangling data and 20% analyzing it to spending 80% of their time on analytics and only 20% wrangling.

Long-term impact: BI team scaling challenges diminish. Organizations grow data usage without proportionally expanding analytics headcount because self-service handles increasing query volume.

Benefit 4: Decision velocity

Product managers iterate faster when they can instantly explore feature usage patterns. Sales leaders adjust territory strategies based on real-time pipeline analytics rather than monthly reviews. Marketing teams optimize campaigns mid-flight when they can query performance data conversationally rather than waiting for scheduled reports.

Business impact: Faster decisions enable faster value realization. A/B test results inform next iterations within hours instead of days. Budget allocation adjusts based on current performance, not last month’s summary. Market opportunities get pursued before competitors react.

There’s a compounding effect: Organizations making decisions 3x faster don’t just move faster—they learn faster, optimize faster, and adapt to market changes faster.

Benefit 5: Data literacy at scale

Traditional training programs attempt to build data literacy through courses and workshops. Conversational analytics builds literacy through hands-on interaction. Users learn which questions yield insights through experimentation and immediate feedback.

Product managers discover that asking “why did signups drop” prompts exploration of segmentation, channels, and cohort behavior. They internalize analytical patterns through repeated use rather than abstract training. Marketing analysts learn data relationships by exploring rather than memorizing schema documentation.

Long-term impact: Data-driven culture emerges organically. More employees cite data in decisions. Fewer rely on intuition alone. Strategic discussions reference specific metrics and trend analysis rather than vague impressions.

This cultural shift represents the ultimate ROI—organizations where data literacy pervades all functions make consistently better decisions than those where data expertise concentrates in small teams.

Implementation quickstart checklist

- Select a pilot domain with high-trust requirements (finance, sales ops) and contained scope

- Audit glossary coverage for the pilot domain; document 80%+ of critical business terms

- Validate semantic layer maturity for pilot metrics; ensure calculation logic is encoded

- Establish data quality SLAs with freshness thresholds and completeness requirements

- Map lineage from source systems through transformations to consumption layer

- Configure access policies at the warehouse level (row-level security, column masking)

- Build an evaluation set of 50-100 representative questions with verified ground-truth answers

- Measure baseline accuracy before deploying to users

- Establish feedback loops for continuous context refinement
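The glossary-coverage item is simple arithmetic: documented critical terms divided by all critical terms for the pilot domain. A minimal Python sketch, where the term list, the glossary contents, and the `glossary_coverage` and `meets_target` helpers are hypothetical, and a term counts as documented only if its definition is non-blank:

```python
TARGET_COVERAGE = 0.80  # the checklist's 80%+ target


def glossary_coverage(critical_terms: set[str], glossary: dict[str, str]) -> tuple[float, set[str]]:
    """Return (share of critical terms with a non-blank definition, undocumented terms)."""
    documented = {t for t in critical_terms if glossary.get(t, "").strip()}
    missing = critical_terms - documented
    # An empty term list means the audit has not been done, so report 0% rather than 100%.
    coverage = len(documented) / len(critical_terms) if critical_terms else 0.0
    return coverage, missing


def meets_target(coverage: float) -> bool:
    return coverage >= TARGET_COVERAGE


if __name__ == "__main__":
    terms = {"revenue", "churn", "active customer", "pipeline", "bookings"}
    glossary = {
        "revenue": "Recognized revenue in USD, net of refunds",
        "churn": "Customers lost in the period divided by customers at period start",
        "pipeline": "Open opportunities with a close date this quarter",
        "bookings": "",  # entry exists but was never filled in
    }
    coverage, missing = glossary_coverage(terms, glossary)
    print(f"coverage={coverage:.0%} meets_target={meets_target(coverage)} missing={sorted(missing)}")
```

Here three of five terms are documented, so coverage is 60% and the pilot domain is not yet ready by the checklist's own bar.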

Getting started with conversational analytics

Conversational analytics represents the future of data access, from simple metadata questions to talk to data that generates SQL against live warehouses. Success requires understanding the spectrum of use cases, building context infrastructure appropriate to your ambitions, and sequencing implementation from low to high complexity.

Organizations that invest in business glossaries, semantic layers, and data quality monitoring first unlock accurate, scalable conversational analytics. Those that skip foundations discover their infrastructure wasn’t ready for this workload—pilots succeed on clean demo data, then fail when confronted with real enterprise complexity.

The difference between pilots and production isn’t AI capability—it’s context richness and architectural maturity. Your metadata infrastructure, governance frameworks, and semantic models determine whether conversational analytics delivers transformative value or joins the graveyard of abandoned AI pilots.

Start where you are. If you have basic metadata, deploy conversational search and prove value. As you mature semantic layers and business glossaries, progress to governed metric queries. When you’ve built comprehensive context infrastructure, attempt talk to data with realistic accuracy expectations and robust evaluation frameworks.

The journey takes months. But the payoff—data democratization that actually works—justifies the investment.

Atlan’s context infrastructure enables production-ready conversational analytics.

FAQs about conversational analytics

1. What is conversational analytics?

Conversational analytics enables users to explore and analyze business data through natural language questions instead of navigating dashboards or writing SQL. AI-powered systems interpret intent, generate appropriate queries (from metadata retrieval to SQL generation), and return insights conversationally. Organizations use conversational analytics to democratize data access, reduce BI team workload, and accelerate decision-making across all employee levels regardless of technical expertise.

2. What is the difference between conversation analytics and conversational analytics?

Conversation analytics analyzes customer service interactions (calls, chats, emails) for sentiment, trends, and agent performance using tools like Gong, CallMiner, or Sprinklr. Conversational analytics enables natural language queries against business data warehouses for self-service insights and SQL generation, using tools like Looker, Databricks Genie, or Snowflake Cortex Intelligence. They serve entirely different purposes: one improves customer service operations, the other democratizes access to business intelligence data.

3. What is talk to data in conversational analytics?

Talk to data translates natural language questions directly into SQL queries executed against live data warehouses like Snowflake, Databricks, or BigQuery. It is the most ambitious application of conversational analytics, generating queries on production data rather than retrieving static metadata or querying pre-computed metrics. Talk to data requires rich context infrastructure because accuracy requirements are highest, and wrong SQL leads to wrong business decisions. According to Atlan’s research, proper context infrastructure improves text-to-SQL accuracy by 3x.

4. What are the benefits of conversational AI search in business analytics?

Key benefits include democratized data access (no technical expertise required), reduced time-to-insight (seconds instead of days for routine questions), BI team efficiency (analysts focus on complex strategic work rather than repetitive queries), decision velocity (faster iterations based on current data), and data literacy at scale (hands-on exploration builds intuition that training programs can’t match). Organizations report regular data users growing from 15% to 68% of the workforce, with significantly faster decision-making cycles across all business functions.

5. What software do I need for conversational analytics?

Conversational analytics requires several integrated components: a data warehouse or lakehouse (Snowflake, Databricks, BigQuery), conversational analytics software (Snowflake Cortex Intelligence, Databricks Genie, Looker + Gemini), a metadata management platform, a business glossary, a semantic layer for governed metrics, data quality monitoring, and access governance. For talk to data, context infrastructure is key: grounding AI in business definitions, quality signals, and governance policies achieves 3x higher accuracy than relying on database schemas alone. The conversational interface matters less than the context infrastructure beneath it.
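To make “grounding” concrete: before a model writes SQL, the system can place the relevant business definitions, quality signals, and access rules into the prompt alongside the schema. The Python sketch below shows only that assembly step. The `GLOSSARY`, `QUALITY`, and `POLICIES` contents and the `build_context` function are hypothetical, no model is called, and the naive keyword match stands in for the retrieval a real system would use.

```python
# Hypothetical metadata a context layer might hold for one pilot domain.
GLOSSARY = {
    "churn": "Customers with no paid activity in the trailing 90 days, divided by customers active at period start.",
    "revenue": "Recognized revenue in USD, net of refunds.",
}
QUALITY = {"finance.revenue_daily": "fresh (loaded 2h ago), 100% complete"}
POLICIES = {"finance.revenue_daily": "row access restricted by region for non-finance roles"}


def build_context(question: str, schema: str) -> str:
    """Assemble a grounding block: matched definitions, quality signals, access rules, schema."""
    words = {w.strip("?,.").lower() for w in question.split()}
    lines = ["## Business definitions"]
    lines += [f"- {term}: {text}" for term, text in GLOSSARY.items() if term in words] or [
        "- (no glossary match)"
    ]
    lines.append("## Data quality")
    # Only include signals and rules for tables that appear in the schema being queried.
    lines += [f"- {t}: {status}" for t, status in QUALITY.items() if t in schema]
    lines.append("## Access rules")
    lines += [f"- {t}: {rule}" for t, rule in POLICIES.items() if t in schema]
    lines.append("## Schema")
    lines.append(schema)
    return "\n".join(lines)


if __name__ == "__main__":
    schema = "finance.revenue_daily(date DATE, region TEXT, amount_usd NUMERIC)"
    print(build_context("Show me Q4 revenue by region", schema))
```

The final schema section on its own is roughly what a schema-only approach gives the model; the sections above it are the added context.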

6. Do I need Looker or Databricks to use conversational analytics?

No. Multiple platforms offer conversational analytics: Looker + Gemini, Databricks Genie, Snowflake Cortex Intelligence, ThoughtSpot, Power BI Copilot, or custom agents built with Claude or GPT-4. The critical requirement isn’t the specific tool; it’s the underlying context infrastructure. All platforms require semantic models for production accuracy, and talk to data implementations require business glossaries, data quality monitoring, and governance. Choose platforms that integrate with your existing metadata infrastructure rather than operating in isolation. Success depends more on context maturity than vendor selection.

7. How accurate is AI-based conversational analytics?

Accuracy varies dramatically based on context infrastructure quality. Text-to-SQL systems without business metadata achieve low baseline accuracy on complex production queries. Systems with integrated semantic layers, business glossaries, and active metadata demonstrate 3x accuracy improvements according to research by Atlan and Snowflake, reaching 95%+ production-ready reliability. The accuracy gap between demos and production determines whether implementations succeed or fail.

8. How does Google Looker’s conversational analytics work?

Users ask questions in natural language, Gemini interprets intent and maps questions to LookML metric definitions and relationships, then generates queries against connected data sources. Google reports a two-thirds error reduction compared to ungrounded LLMs querying raw database schemas. Looker Premium licensing and mature LookML models are required for production accuracy. This represents Level 2 on the conversational analytics maturity spectrum—querying governed semantic definitions rather than generating dynamic SQL against raw tables.
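The core idea behind Level 2 can be sketched without any vendor API: a question resolves to a metric that is already defined once in a semantic layer, and SQL is compiled from that governed definition rather than written against raw tables. The Python below is a hypothetical illustration, not how LookML or Gemini work internally; the `Metric` type, the `METRICS` registry, and the keyword matching are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    sql_expression: str         # governed calculation, defined once
    table: str
    dimensions: frozenset[str]  # columns the metric may be grouped by


# Hypothetical semantic-layer registry with a single governed metric.
METRICS = {
    "revenue": Metric("revenue", "SUM(amount_usd)", "finance.revenue_daily", frozenset({"region", "quarter"})),
}


def compile_query(question: str) -> str:
    """Map a question to a governed metric and dimensions, then emit SQL from the definition."""
    words = {w.strip("?,.").lower() for w in question.split()}
    metric = next((m for key, m in METRICS.items() if key in words), None)
    if metric is None:
        # Level 2 refuses questions outside the governed model instead of guessing.
        raise ValueError("No governed metric matches this question")
    dims = sorted(d for d in metric.dimensions if d in words)
    select = ", ".join(dims + [f"{metric.sql_expression} AS {metric.name}"])
    sql = f"SELECT {select} FROM {metric.table}"
    if dims:
        sql += " GROUP BY " + ", ".join(dims)
    return sql


if __name__ == "__main__":
    print(compile_query("What is revenue by region?"))
```

This prints `SELECT region, SUM(amount_usd) AS revenue FROM finance.revenue_daily GROUP BY region`. Because the calculation lives in one registry, every revenue question compiles to the same governed expression; the trade-off is that questions outside the registry get an error rather than an answer.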
