AI analyst: System vs. job role

“AI analyst” has two distinct meanings in 2026:

1. AI Analyst (System): A conversational analytics platform that interprets questions and generates insights using AI. This guide focuses on AI analyst systems—the technology that’s transforming how organizations access and act on data.

2. AI Analyst (Job): A data professional who analyzes AI system performance, implementation patterns, and business impact. While this role is growing in importance, it represents an entirely different category.

This page explores AI analyst systems—how they work, their architecture, implementation considerations, and business value. For organizations evaluating conversational analytics platforms or looking to understand how AI can democratize data access, this guide serves as a definitive resource.

How AI analysts work: Architecture and components

AI analysts represent a fundamental shift from traditional analytics workflows. Instead of requiring users to navigate complex BI dashboards or write SQL queries, they interpret natural-language questions and deliver contextualized answers.

But what makes this possible? Understanding the architecture helps explain AI analysts’ capabilities and limitations.

1. Natural-language interface

The entry point is conversational: users ask questions as they would to a colleague – “show me Q4 revenue by region” or “which campaigns underperformed last month?”

The interface accepts follow-up questions, building on previous context to support exploratory analysis. This removes the technical barrier that has traditionally limited data access to specialists.

2. LLM reasoning engine

Large language models interpret user intent, decompose complex questions into analytical steps, and determine which data operations to perform. The LLM understands natural-language nuances, recognizing that “last quarter” resolves to a specific date range (Q4 2025 when asked in early 2026) or that “top performers” implies ranking and filtering.

This reasoning layer translates business questions into executable queries. When business logic from the context layer is built in, the generated queries are more precise and the answers more accurate.
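One concrete piece of this translation is resolving relative time expressions. The following minimal sketch assumes standard calendar quarters (a real deployment would read the organization’s fiscal calendar from the context layer) and turns “last quarter” into an explicit date range that a generated query can filter on. The function name `last_quarter` is illustrative, not part of any product API.

```python
from datetime import date, timedelta


def last_quarter(today: date) -> tuple[date, date]:
    """Return (start, end) of the calendar quarter before `today`.

    Assumes calendar quarters (Q1 = Jan-Mar); a fiscal calendar from the
    context layer would replace this logic in practice.
    """
    current_q = (today.month - 1) // 3  # 0-based index of today's quarter
    if current_q == 0:
        year, q = today.year - 1, 3  # Q1 looks back to Q4 of the prior year
    else:
        year, q = today.year, current_q - 1
    start = date(year, 3 * q + 1, 1)
    if q == 3:
        end = date(year, 12, 31)
    else:
        # The quarter ends the day before the next quarter begins.
        end = date(year, 3 * q + 4, 1) - timedelta(days=1)
    return start, end


start, end = last_quarter(date(2026, 2, 15))
print(f"order_date BETWEEN '{start}' AND '{end}'")
# order_date BETWEEN '2025-10-01' AND '2025-12-31'
```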

3. Context layer

Here’s where organizational knowledge lives. The context layer contains business glossaries that define terms like “customer,” “revenue,” or “active user” with precision. It also includes governed metrics—certified calculations for KPIs that everyone uses consistently.

Data lineage shows where information originates and how it transforms. Semantic layers and relationships explain how tables connect. Without this context, even the most sophisticated LLM will struggle to understand which “revenue” calculation to use or whether a metric is trustworthy.

AI analysts access this context through active metadata platforms that maintain real-time understanding of data assets, their relationships, and their business meaning.
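To make this concrete, here is a minimal sketch of how certified glossary context might be selected for a question and passed to the LLM alongside it. `GlossaryTerm` and `build_context` are hypothetical names, and the whole-word matching is deliberately naive; production systems typically rely on richer entity linking against the metadata platform.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class GlossaryTerm:
    name: str
    definition: str
    certified: bool  # only steward-certified definitions are sent to the LLM


def build_context(question: str, glossary: list[GlossaryTerm]) -> str:
    """Return a prompt section with certified definitions of terms used in `question`."""
    lines = []
    for term in glossary:
        # Whole-word, case-insensitive match, so "revenue" does not match "revenues_raw".
        pattern = r"\b" + re.escape(term.name) + r"\b"
        if term.certified and re.search(pattern, question, flags=re.IGNORECASE):
            lines.append(f"- {term.name}: {term.definition}")
    if not lines:
        return ""
    return "Certified business definitions:\n" + "\n".join(lines)


glossary = [
    GlossaryTerm("revenue", "Recognized revenue under ASC 606", True),
    GlossaryTerm("active user", "Hypothetical: user with a session in the last 30 days", True),
    GlossaryTerm("churn", "Draft definition, not yet certified", False),
]
print(build_context("What was revenue per active user and churn last month?", glossary))
```

The uncertified “churn” entry is withheld, and the naive match would miss a plural such as “active users”, one reason real context retrieval is harder than keyword lookup.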

4. Data execution layer

Once the AI analyst understands what data to retrieve, it generates and executes queries against your data warehouse, lake, or semantic layer. This layer handles the technical complexity—SQL generation, query optimization, result caching—while respecting existing security permissions and access controls. The execution layer ensures that conversational interactions translate into performant data operations.
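A minimal sketch of one execution-layer responsibility, using Python’s built-in `sqlite3` as a stand-in warehouse: the permission filter is added by the execution layer rather than left to the LLM, and values are bound as parameters rather than pasted into SQL text. The `orders` table and the region-based permission model are hypothetical; in production, row-level policies would normally be enforced by the warehouse itself under the user’s identity.

```python
import sqlite3


def revenue_by_region(conn: sqlite3.Connection, allowed_regions: set[str]) -> list[tuple[str, float]]:
    """Run an aggregate query restricted to the regions the user may see."""
    if not allowed_regions:
        return []  # no permitted rows, so nothing to query
    regions = sorted(allowed_regions)
    placeholders = ", ".join("?" for _ in regions)
    sql = (
        "SELECT region, SUM(amount) AS revenue FROM orders "
        f"WHERE region IN ({placeholders}) "  # row filter added by the execution layer
        "GROUP BY region ORDER BY region"
    )
    # Region values are bound as parameters, never pasted into the SQL text.
    return conn.execute(sql, regions).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EMEA", 100.0), ("EMEA", 50.0), ("APAC", 80.0), ("AMER", 200.0)],
)
print(revenue_by_region(conn, {"EMEA", "APAC"}))  # [('APAC', 80.0), ('EMEA', 150.0)]
```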

5. Explanation mechanism

AI analysts don’t just return numbers—they explain how they arrived at an answer. This includes showing which data sources were queried, which calculations were applied, what filters were used, and any assumptions made.

Citations to source data, calculation transparency, and confidence scoring help users decide whether to trust an answer. This explainability differentiates enterprise AI analysts from generic chatbots.
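A hedged sketch of what an explainable answer could carry: the value plus the sources, calculation, filters, and assumptions behind it. The `ExplainedAnswer` structure and its field names are assumptions for illustration; the useful property is that an answer without sources or a calculation can be flagged as uncheckable.

```python
from dataclasses import dataclass, field


@dataclass
class ExplainedAnswer:
    metric: str
    value: float
    sources: list[str]          # tables or governed metrics that were queried
    calculation: str            # the formula actually applied
    filters: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)
    confidence: float = 0.0     # 0..1; how it is scored is system-specific

    def is_citable(self) -> bool:
        """Without sources and a calculation, the answer cannot be checked."""
        return bool(self.sources) and bool(self.calculation)

    def explain(self) -> str:
        parts = [
            f"{self.metric} = {self.value:,.2f}",
            f"Sources: {', '.join(self.sources) or 'none'}",
            f"Calculation: {self.calculation or 'not provided'}",
        ]
        if self.filters:
            parts.append("Filters: " + "; ".join(self.filters))
        if self.assumptions:
            parts.append("Assumptions: " + "; ".join(self.assumptions))
        parts.append(f"Confidence: {self.confidence:.0%}")
        return "\n".join(parts)


answer = ExplainedAnswer(
    metric="Q4 revenue (EMEA)",
    value=1_250_000.0,
    sources=["finance.fct_revenue"],
    calculation="SUM(recognized_amount)",
    filters=["region = 'EMEA'", "recognized_on in 2025-10-01..2025-12-31"],
    assumptions=["calendar quarters"],
    confidence=0.9,
)
print(answer.explain())
```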

Comparison: AI Analyst vs. BI Dashboards vs. Traditional Data Analysts

| Capability | AI Analyst | BI Dashboard | Traditional Data Analyst |
|---|---|---|---|
| Query method | Natural language | Point-and-click | SQL/code |
| Flexibility | Handles ad-hoc questions | Pre-built visualizations | Fully customizable |
| Speed to insight | Seconds | Instant (for known metrics) | Hours to days |
| Learning curve | Minimal | Low to moderate | High |
| Best for | Exploratory analysis, one-off questions | Monitoring known KPIs | Complex custom analysis |
| Requires context | Yes (critical for accuracy) | Moderate | High (tribal knowledge) |

Why organizational context is critical for AI analyst accuracy

The most sophisticated AI model in the world cannot compensate for missing organizational context. This “context gap” helps explain why MIT research found that 95% of enterprise AI pilots fail to deliver measurable business impact, and why AI analysts specifically require robust metadata foundations to succeed.

This isn’t just a theoretical concern. Gartner predicts that organizations will abandon 60% of AI projects unsupported by AI-ready data. And it’s context – not models – that determines whether data is AI-ready.

Without organizational context, AI faces critical challenges that have serious downstream effects:

Fragmented definitions

The same term means different things across teams. “Revenue” could mean:

- Gross revenue (sales team)

- Net revenue after returns (finance)

- Recognized revenue under GAAP (accounting)

- Bookings (go-to-market)

Without a certified business glossary defining these terms and their appropriate use cases, the AI analyst must guess—or worse, mix definitions inconsistently.
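Rather than guessing, an AI analyst can resolve an ambiguous term from the user’s domain or ask a clarifying question. This minimal sketch encodes the four “revenue” meanings above as a hypothetical glossary entry; the column-style names (`gross_revenue`, `bookings`, and so on) are invented for illustration.

```python
from typing import Optional

# Hypothetical glossary entry: one business term, several domain-specific definitions.
REVENUE = {
    "sales": "gross_revenue",
    "finance": "net_revenue_after_returns",
    "accounting": "recognized_revenue_gaap",
    "go-to-market": "bookings",
}


def resolve_term(term: str, definitions: dict[str, str],
                 domain: Optional[str] = None) -> tuple[Optional[str], Optional[str]]:
    """Return (definition, None) if unambiguous, else (None, clarifying_question)."""
    if domain in definitions:
        return definitions[domain], None  # the user's domain picks the definition
    distinct = sorted(set(definitions.values()))
    if len(distinct) == 1:
        return distinct[0], None  # every domain agrees, nothing to ask
    options = ", ".join(f"{d} ({name})" for d, name in sorted(definitions.items()))
    return None, f"Which '{term}' do you mean? Options: {options}"


print(resolve_term("revenue", REVENUE, domain="finance"))  # ('net_revenue_after_returns', None)
print(resolve_term("revenue", REVENUE)[1])
```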

Missing business logic

Data structure doesn’t equal business meaning.

A database might contain multiple revenue tables without indicating which represents the “official” number. Relationships between tables might be technically correct but illogical for the business. Calculations might exist in code without documentation of why certain filters or joins matter.

In all these scenarios, the AI sees the data architecture but not the business intent.

Incomplete lineage

When numbers look wrong, you need to trace them to their source in order to verify their accuracy. But without data lineage, the AI analyst can’t explain where “Q4 revenue” originated: which source systems fed the data, which transformations were applied, which business rules filtered results.

For 73% of data leaders, “data quality and completeness” are the primary barriers to AI success—more than model accuracy or computing costs.
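Tracing a number back to its origin is, at its core, a graph walk over lineage metadata. The sketch below assumes lineage is available as a simple mapping from each asset to its direct upstream assets; the asset names in `LINEAGE` and the function `source_systems` are hypothetical.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to its direct upstream assets.
LINEAGE = {
    "dashboard.q4_revenue": ["mart.fct_revenue"],
    "mart.fct_revenue": ["staging.stg_invoices", "staging.stg_refunds"],
    "staging.stg_invoices": ["erp.invoices"],
    "staging.stg_refunds": ["erp.refunds"],
}


def source_systems(asset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Walk upstream breadth-first and return the root sources feeding `asset`."""
    seen = {asset}
    queue = deque([asset])
    roots = []
    while queue:
        node = queue.popleft()
        parents = lineage.get(node, [])
        if not parents and node != asset:
            roots.append(node)  # nothing further upstream: an origin system
        for parent in parents:
            if parent not in seen:  # guard against cycles and shared ancestors
                seen.add(parent)
                queue.append(parent)
    return sorted(roots)


print(source_systems("dashboard.q4_revenue", LINEAGE))
# ['erp.invoices', 'erp.refunds']
```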

Ungoverned metrics

Every organization has critical KPIs, such as customer acquisition cost, net retention, and inventory turns. But if these metrics aren’t governed (calculated consistently, certified by domain owners, and centrally maintained), different teams produce different numbers for the same metric.

AI analysts amplify this problem by making it easy to generate conflicting answers at scale. The effect is especially pronounced when an enterprise uses domain-specific agents: just as human teams are prone to metric inconsistency across domains, so are their agents.

The importance of a governance and lineage foundation

Business glossaries eliminate ambiguity by defining terms with precision. A SaaS company’s glossary might distinguish “Revenue” (recognized revenue under ASC 606 for financial reporting) from “ARR” (annualized subscription value for growth metrics) from “Bookings” (total contract value for sales performance). Without these certified definitions, AI analysts apply terms inconsistently.

Governed metrics go further by specifying exact calculations. A governed “Customer Acquisition Cost” metric defines the formula, including cost categories, time periods, and customer segments—ensuring the AI uses the same calculation as existing dashboards and reports.
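A minimal sketch of a governed metric, assuming the definition (owner plus the cost categories the formula may include) lives in one shared object that every consumer, human or AI, calls. The `GovernedMetric` class, cost categories, and figures are hypothetical; time period and segment filtering are assumed to happen before `compute` is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernedMetric:
    name: str
    owner: str                  # domain team that certifies the definition
    cost_categories: frozenset  # the only cost lines the formula may include

    def compute(self, period_costs: dict[str, float], new_customers: int) -> float:
        """CAC = included acquisition costs / new customers, for one period and segment."""
        if new_customers <= 0:
            raise ValueError("new_customers must be positive")
        included = sum(v for k, v in period_costs.items() if k in self.cost_categories)
        return included / new_customers


CAC = GovernedMetric(
    name="Customer Acquisition Cost",
    owner="finance",
    cost_categories=frozenset({"paid_media", "sales_salaries", "marketing_tools"}),
)

# Costs already filtered to one quarter and one customer segment (hypothetical figures).
q4_costs = {"paid_media": 60_000.0, "sales_salaries": 90_000.0,
            "marketing_tools": 10_000.0, "office_rent": 40_000.0}
print(CAC.compute(q4_costs, new_customers=200))  # 800.0
```

Because `office_rent` is not a certified cost category, no consumer can silently include it, which is the consistency guarantee governance is meant to provide.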

Active metadata and data lineage create the “context layer” that helps prevent hallucinations. Lineage traces data from source systems through transformations, showing what business rules were applied and when data was refreshed. This helps AI understand data reliability and provides audit trails for explainability.

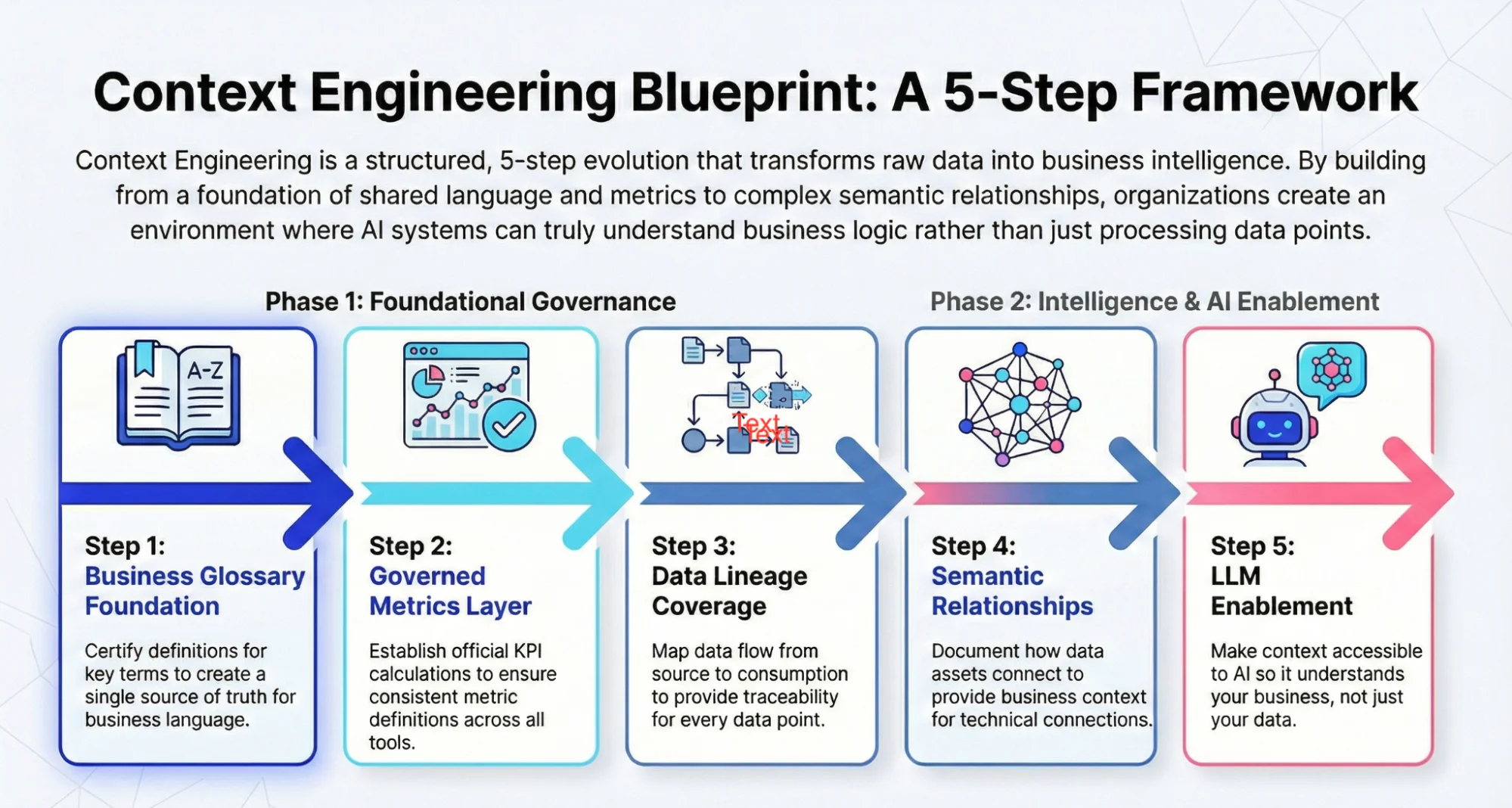

Context engineering: The foundation for accurate AI analysts

Permalink to “Context engineering: The foundation for accurate AI analysts”This framework represents the difference between an AI analyst that can answer questions and one that should be trusted with answers. Organizations that invest in context engineering before enabling conversational analytics see dramatically higher accuracy, adoption, and ROI.

Context engineering: The foundation for accurate AI analysts. Source: Atlan.

Common AI analyst use cases across industries

AI analysts deliver value across business functions and industries, but the highest-impact applications address frequent, time-sensitive questions that previously required manual analyst work. Here are their core cross-functional use cases:

1. Executive decision support

Senior leaders need answers to questions like “What’s driving our margin decline?” or “Which product lines are growing fastest?” Traditionally, they relied on analysts, which meant more meetings, more time waiting for reports, and more follow-up questions that would start the cycle over again.

AI analysts compress this loop from days to minutes. Executives ask questions directly, explore anomalies immediately, and make decisions with current data rather than last week’s presentation.

A major media company using its data warehouse’s built-in AI agent achieved a 10% query accuracy rate. When it augmented that system with organizational context, accuracy rose to 90%, a testament to how much context determines whether AI can improve decision-making.

2. Operational analytics

Operations teams monitor hundreds of metrics: campaign performance, production efficiency, logistics KPIs, service quality indicators. Doing this manually is not only time-consuming but also risks inconsistent metric definitions and stale dashboards.

AI analysts enable conversational monitoring, like “Which campaigns are underperforming against target?” or “Show me fulfillment delays by warehouse.” This shifts operational analytics from passive dashboard monitoring to active investigation, helping domain teams identify and resolve issues faster.

At DigiKey, for example, a query to identify at-risk customer orders went from days of cross-system chasing to minutes once a governed context layer was in place—the same pattern we now see with AI analysts answering “what’s driving our margin decline?” in real time.

3. Self-service analytics for business users

Perhaps the most transformative use case is democratizing data access for non-technical users. With AI analysts, marketing managers can analyze campaign performance without SQL. Sales leaders can explore pipeline trends without waiting for reporting queues. Product managers can investigate feature adoption without analyst support.

Self-service BI adoption is accelerating rapidly, with the market growing from $6.73 billion in 2024 to a projected $26.54 billion by 2032—an 18.7% CAGR driven by demand for analytics democratization. And the downstream effect will be massive. Business users will be able to analyze, plan, and make decisions faster and more confidently than ever before.

For companies like Workday, standardizing business language into glossaries and semantic models enabled reliable, AI-powered self-service analytics—and increased accuracy by 5x.

4. Compliance and audit

Regulatory requirements demand answers to specific questions, like “Show me all PII usage last quarter” or “Which datasets contain GDPR-protected information?” Typically, investigating the answers when an audit arises is an all-hands-on-deck task, diverting time and resources away from business-moving jobs.

So it’s no surprise that 85% of CISOs believe that AI can improve their security posture. AI analysts with proper governance and lineage integration can answer compliance questions without the overhead (or the headaches). Automated audit trails and explainable answers provide documentation for regulators.

Even the most complex compliance standards and audit reports – for laws like the GDPR, HIPAA, and the CCPA – become something that humans can check, instead of something that bogs them down.

5. Automated reporting and alerting

Company retrospectives are helpful for exposing insights and potential gaps—but they typically lag by at least a quarter. At that point, the data is stale and the company has likely moved on.

Advanced AI analysts don’t have to wait. They can proactively monitor data for noteworthy changes and surface insights in real time. When they flag a trend like “Revenue in EMEA dropped 15% week-over-week” or “Customer churn increased in the Premium tier,” teams can respond quickly and mitigate the problem, shifting from reactive to proactive.
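Proactive monitoring can start as simply as comparing each segment against the prior week. This sketch, with a hypothetical 10% threshold and made-up figures, produces alert text in the style of the example above; production monitors would likely add seasonality handling and noise filtering. The function name `week_over_week_alerts` is illustrative.

```python
def week_over_week_alerts(metric: str, weekly: dict[str, tuple[float, float]],
                          threshold: float = 0.10) -> list[str]:
    """Flag segments whose value moved by at least `threshold` versus the prior week.

    `weekly` maps a segment to (previous_week, current_week).
    """
    alerts = []
    for segment, (previous, current) in sorted(weekly.items()):
        if previous == 0:
            continue  # no baseline to compare against
        change = (current - previous) / previous
        if abs(change) >= threshold:
            direction = "dropped" if change < 0 else "rose"
            alerts.append(f"{metric} in {segment} {direction} {abs(change):.0%} week-over-week")
    return alerts


weekly_revenue = {"EMEA": (200_000.0, 170_000.0), "APAC": (100_000.0, 102_000.0)}
print(week_over_week_alerts("Revenue", weekly_revenue))
# ['Revenue in EMEA dropped 15% week-over-week']
```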

In early deployments, Atlan has found that AI-generated insights have been accepted by humans about 70% of the time, which is why teams are increasingly comfortable letting AI analysts run as always-on monitors.

6. Embedded analytics in applications

SaaS products increasingly embed AI analysts directly into user workflows, enabling customers to ask questions about their own data without leaving the application. A marketing platform might let users ask “Which emails drove the most conversions?” A financial system might answer “What caused the variance in department budgets?”

Embedded AI analysts become product features that differentiate in competitive markets. As Andrew Reiskind, Chief Data Officer at Mastercard, said during Atlan Re:Govern, “When you’re working with AI, you need that contextual data to interpret the transaction data at the speed of transaction. You need to actually embed that contextual data into the transactional information.”

This has allowed Mastercard to flip the script on data analytics. Where analysts previously spent 80% of their time wrangling data and 20% on performing analytics, those percentages are now reversed. It shows how AI analysts succeed when they’re purpose-built for specific analytical workflows, integrated with organizational context, and trusted by users because of their explainability.

Key considerations for implementing AI analysts

The gap between a successful pilot and a failed production deployment often comes down to preparedness and intent. Organizations that treat AI analyst implementation as a technical integration project tend to stall. Those that approach it as a data readiness and organizational change initiative tend to succeed.

Implementation Readiness Checklist

| Implementation Detail | Questions to Ask | Requirements |
|---|---|---|
| Data Infrastructure | Is data queryable from a central location? Are APIs available? Is access programmatic? | Centralized warehouse/lakehouse OR data catalog connecting federated sources; API access to data platforms; Consistent permission model |
| Metadata Maturity | Are business terms defined? Is lineage documented? Are metrics governed? | Business glossary with certified definitions; Data lineage for critical assets; Governed metrics with clear ownership; Active metadata maintained and updated |
| Governance Framework | Who owns definitions? What’s the approval process? How are conflicts resolved? | Data governance council or equivalent; Identified data stewards by domain; Workflow for metadata changes; Conflict resolution process |
| Security & Access | Can AI inherit permissions? Is row/column security defined? How will access be audited? | Integration with existing security infrastructure; Row-level and column-level security; Audit logging for all queries; Policy for handling sensitive data requests |
| User Adoption | Who will champion the system? What training is needed? How will we measure success? | Identified pilot user group; Training plan by persona; Feedback collection mechanism; Success metrics defined |

1. Data infrastructure readiness

Question to ask: Is your data centralized, federated, or fragmented?

AI analysts perform best with clear data architecture.

- Centralized data warehouses or lakehouses provide a single query target.

- Federated data meshes require more sophisticated integration but can work with proper catalog infrastructure.

- Fragmented data scattered across silos without consistent access patterns will limit AI analyst effectiveness—and expose larger data architecture problems that likely affect all analytics, not just AI.

Assessment checkpoint:

- Can you query your critical business metrics from a single platform or semantic layer?

- Are data access permissions consistent and programmatically accessible?

- Do you have API access to your data platforms?

2. Metadata maturity

Question to ask: How complete are your business definitions, data lineage, and metric certifications?

This is the most important factor for AI analyst accuracy. Organizations with mature metadata management—business glossaries, governed metrics, complete lineage, semantic relationships—see dramatically better results than those treating metadata as an afterthought.

Assessment checkpoint:

- Do you have certified business definitions for your top 50 terms?

- Can you trace any metric back to its source systems?

- Are KPI calculations documented and consistent across tools?

- Is your metadata actively maintained, not just documented once?

Research has found that 77% of organizations rate their data quality as average or worse, with poor data quality contributing to 60% higher project failure rates. Metadata maturity directly impacts data quality—and therefore AI analyst success.

3. Governance framework

Question to ask: Who owns data definitions, and what’s the approval process for new metrics?

AI analysts make it easy to generate answers at scale, which makes governance even more important. You need clear ownership:

- Who certifies that a metric definition is correct?

- Who approves changes to business glossary terms?

- What’s the workflow for promoting new metrics from experimental to production?

Without governance, different AI analysts will deliver different answers to the same question—quickly breaking down trust in the system.

Assessment checkpoint:

- Is there a data governance council or equivalent body?

- Are data stewards identified for critical domains?

- Is there a workflow for proposing and approving metadata changes?

- How do you handle conflicts when different teams define terms differently?


4. Security and access controls

Question to ask: How do you handle permissions in conversational interfaces?

Traditional BI dashboards respect row-level security and column masking because they query databases that enforce these controls. AI analysts must do the same—but the conversational interface creates new challenges.

If a user asks, “Show me all customer records,” the AI must respect their permission scope, not expose restricted data. Integration with your existing security infrastructure isn’t optional.

Assessment checkpoint:

- Can your AI analyst inherit permissions from your data platform?

- Do you have row-level and column-level security defined?

- How will you audit what questions were asked and what data was accessed?

- What’s your plan for handling requests that would expose sensitive data?
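A minimal sketch of two of these controls: masking columns outside the user’s permission scope and writing an audit record of what was asked and returned. The function `apply_column_policy`, the `***` mask, and the audit fields are hypothetical; in practice, column masking is usually best enforced by the data platform itself, with the AI analyst inheriting those policies.

```python
from datetime import datetime, timezone

MASK = "***"


def apply_column_policy(user: str, question: str, rows: list[dict],
                        allowed_columns: set[str], audit_log: list[dict]) -> list[dict]:
    """Mask columns the user may not see and record what was returned."""
    masked: set[str] = set()
    safe_rows = []
    for row in rows:
        safe = {}
        for column, value in row.items():
            if column in allowed_columns:
                safe[column] = value
            else:
                safe[column] = MASK  # restricted value never leaves this function
                masked.add(column)
        safe_rows.append(safe)
    returned = {column for row in rows for column in row} - masked
    audit_log.append({
        "user": user,
        "question": question,
        "columns_returned": sorted(returned),
        "columns_masked": sorted(masked),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return safe_rows


log: list[dict] = []
rows = [{"name": "Ada", "email": "ada@example.com", "region": "EMEA"}]
masked_rows = apply_column_policy("analyst_1", "Show me all customer records",
                                  rows, {"name", "region"}, log)
print(masked_rows)  # [{'name': 'Ada', 'email': '***', 'region': 'EMEA'}]
```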

5. User adoption strategy

Question to ask: How will you train users and drive adoption?

Technology adoption fails when organizations assume “build it and they will come.” Users need training on how to ask effective questions, what types of analysis the AI analyst handles well, and how to interpret results.

Change management matters: you’re shifting analytical workflows from centralized (submit requests to analysts) to distributed (ask questions directly). Identify quick wins and build on them.

Assessment checkpoint:

- Have you identified champion users to pilot the system?

- What’s your training plan for different user personas (executives vs. analysts vs. business users)?

- How will you collect feedback and iterate on the implementation?

- What’s your communication plan for explaining capabilities and limitations?

The most successful implementations follow a phased approach: start with a well-defined use case, ensure strong metadata foundation for that domain, pilot with champion users, iterate based on feedback, then expand to additional use cases and user groups. Trying to deploy enterprise-wide on day one typically fails.

Measuring AI analyst success and ROI

Organizations that can’t measure impact struggle to justify continued investment. IDC predicts that 70% of Forbes Global 2000 companies will soon make ROI analysis a prerequisite for any new AI investment.

Fortunately, AI analysts lend themselves to clear, quantifiable metrics across multiple dimensions.

Accuracy metrics

Response correctness measures whether the AI analyst provides the right answer to a question. This requires establishing test sets of known questions with verified answers, then tracking accuracy over time. Organizations typically see accuracy improve as metadata maturity increases—another data point supporting the importance of organizational context.

Hallucination rate tracks the percentage of answers containing incorrect data, unsupported claims, or fabricated information. More than three-quarters of organizations are concerned about hallucinations, and 47% of enterprise AI users have made at least one decision based on hallucinated information.

Citation validity measures whether the AI analyst correctly attributes data sources and shows its work. Explainability isn’t just about user trust—it’s a measurable quality indicator. If an AI analyst can’t cite sources or explain calculations, it’s guessing.

Measurement kit:

Hallucination Rate = (Responses with Incorrect Data / Total Responses) × 100

Target: Establish baseline, then track improvement as metadata maturity increases

Measurement frequency: Weekly during pilot, monthly in production
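A hedged sketch of scoring a test set of known questions with verified answers. It assumes numeric answers compared with a hypothetical 0.5% relative tolerance, computes the hallucination rate as in the formula above, and treats a declined answer as neither correct nor hallucinated. `EvalCase` and `score` are illustrative names.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalCase:
    question: str
    expected: float            # verified answer from the test set
    returned: Optional[float]  # None means the analyst declined to answer
    cited_sources: bool        # did the response attribute its data sources?


def score(cases: list[EvalCase], rel_tol: float = 0.005) -> dict[str, float]:
    """Accuracy and hallucination rate over all cases; citation rate over answered cases."""
    if not cases:
        raise ValueError("test set is empty")
    answered = [c for c in cases if c.returned is not None]
    correct = sum(math.isclose(c.returned, c.expected, rel_tol=rel_tol) for c in answered)
    incorrect = len(answered) - correct  # a decline is not counted as a hallucination
    cited = sum(c.cited_sources for c in answered)
    return {
        "accuracy_pct": 100 * correct / len(cases),
        "hallucination_rate_pct": 100 * incorrect / len(cases),
        "citation_rate_pct": 100 * cited / len(answered) if answered else 0.0,
    }


cases = [
    EvalCase("Q4 revenue", 1_250_000.0, 1_250_000.0, True),
    EvalCase("EMEA churn rate", 0.05, 0.08, True),
    EvalCase("Q4 CAC", 800.0, None, False),
    EvalCase("Weekly active users", 1_200.0, 1_200.0, False),
]
print(score(cases))
```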

Efficiency gains

Time-to-answer reduction compares how long it takes to answer questions with the AI analyst versus traditional methods (submitting requests to analysts, building dashboard filters, writing SQL).

Support queue reduction tracks the decrease in routine analytical requests to centralized teams. When business users can answer their own questions through AI analysts, the volume of requests to data teams typically shifts away from simple queries (data lookups, standard reports, basic metrics) toward more complex strategic work.

Measurement kit:

Support Queue Reduction = ((Pre-Implementation Requests - Post-Implementation Requests) / Pre-Implementation Requests) × 100

Track by request type: Tier 1 (simple queries), Tier 2 (moderate analysis), Tier 3 (complex custom work)

Expectation: Tier 1 requests should decrease while Tier 3 strategic work increases
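Applied per tier, the support queue formula is a one-line calculation; the sketch below uses hypothetical monthly request counts and an illustrative function name. A negative value means volume grew, which is the expected signal for Tier 3 strategic work.

```python
def queue_reduction(pre: dict[str, int], post: dict[str, int]) -> dict[str, float]:
    """Percent reduction per tier and overall; negative values mean volume grew."""
    result = {}
    for tier, before in pre.items():
        if before > 0:
            result[tier] = round(100 * (before - post.get(tier, 0)) / before, 1)
    total_pre, total_post = sum(pre.values()), sum(post.values())
    if total_pre > 0:
        result["all"] = round(100 * (total_pre - total_post) / total_pre, 1)
    return result


# Hypothetical monthly request counts before and after rollout.
pre = {"tier1": 400, "tier2": 150, "tier3": 50}
post = {"tier1": 160, "tier2": 135, "tier3": 70}
print(queue_reduction(pre, post))
# {'tier1': 60.0, 'tier2': 10.0, 'tier3': -40.0, 'all': 39.2}
```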

Adoption metrics

Active users and query volume indicate whether the system is actually being used. Early-stage implementations should track daily/weekly active users and queries per user. Low engagement signals limited awareness, a poor user experience, or a mismatch between capabilities and user needs.

Repeat usage matters more than one-time experimentation. Organizations should track what percentage of users return after their first session. High initial trial with low repeat usage suggests the system isn’t delivering sufficient value to change behavior.

Measurement kit:

Weekly Active Users (WAU) = Unique users asking at least one question per week

Query Volume = Total questions asked per day/week/month

Repeat Usage Rate = (Users with 2+ Sessions / Total Users Who Tried) × 100

Target: > 60% repeat usage within 30 days of first session
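These adoption metrics can be computed from a session log. The sketch assumes a hypothetical log of `(user_id, session_date, questions_asked)` tuples and counts a user as repeating when their second session falls within 30 days of their first, matching the target above. Function names are illustrative.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekly_active_users(sessions, week_start: date) -> int:
    """Unique users who asked at least one question in the 7 days from `week_start`."""
    week_end = week_start + timedelta(days=7)
    return len({user for user, day, questions in sessions
                if week_start <= day < week_end and questions >= 1})


def repeat_usage_rate(sessions, window_days: int = 30) -> float:
    """Percent of users whose second session falls within `window_days` of their first."""
    days_by_user = defaultdict(list)
    for user, day, _ in sessions:
        days_by_user[user].append(day)
    if not days_by_user:
        return 0.0
    repeaters = 0
    for days in days_by_user.values():
        days.sort()
        if len(days) >= 2 and (days[1] - days[0]).days <= window_days:
            repeaters += 1
    return 100 * repeaters / len(days_by_user)


# Each session: (user_id, session_date, questions_asked); hypothetical data.
sessions = [
    ("ana", date(2026, 3, 2), 3), ("ana", date(2026, 3, 20), 1),
    ("ben", date(2026, 3, 3), 2), ("ben", date(2026, 5, 1), 1),
    ("cy", date(2026, 3, 4), 0), ("dee", date(2026, 3, 10), 4),
]
print(weekly_active_users(sessions, date(2026, 3, 2)))  # 2
print(repeat_usage_rate(sessions))                      # 25.0
```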

Business impact

The ultimate measure: did the AI analyst drive better business outcomes?

Faster decisions: Measure cycle time from question to action for key decision types. Did executives make resource allocation decisions faster? Did operations teams resolve issues with shorter detection-to-resolution cycles?

Improved data trust: Survey users about confidence in data-driven decisions before and after AI analyst implementation. Organizations with strong metadata governance typically see trust scores increase alongside AI analyst adoption—because explainability and context improve confidence even for non-AI workflows.

Reduced errors: Track incidents caused by data misunderstanding—teams using wrong metrics, applying incorrect business logic, analyzing incomplete data sets. AI analysts with good context layers reduce these errors by making definitions explicit and calculations transparent.

Measurement kit:

Decision Cycle Time = Time from question asked to action taken

Track before/after implementation for key decision types

Example: Product prioritization decisions averaged 5 days (data gathering + analysis + review)

After implementation: 2 days

Data Trust Score = Survey-based metric (1-10 scale)

Question: "How confident are you that data used for decisions is accurate and consistent?"

Track quarterly; expect improvement as AI analyst demonstrates explainability

The organizations seeing the strongest ROI from AI analysts share a pattern. They:

- Instrument measurement from day one

- Track leading indicators (adoption, accuracy) alongside lagging indicators (business impact)

- Use data to drive continuous improvement in metadata quality, user experience, and system capabilities

Ecosystem and interoperability

AI analysts don’t exist in isolation—they’re part of a broader analytics infrastructure. Understanding how they integrate with existing tools enables realistic implementation decisions and avoids vendor lock-in.

Semantic layer connectors are particularly critical. Modern AI analysts work best when they can query through a semantic layer rather than directly against raw tables.

Semantic layers (e.g., dbt Semantic Layer, Cube, AtScale) provide business-friendly abstractions, centralized metric definitions, and caching, making AI analyst queries faster and more accurate. Organizations with mature semantic layer deployments typically see smoother AI analyst implementations than those querying warehouses directly.
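To illustrate why querying through a semantic layer helps, here is a toy semantic model in which the LLM only chooses a metric and a dimension, and the SQL comes from governed definitions. This is not the API of dbt Semantic Layer, Cube, or AtScale; `METRICS`, `DIMENSIONS`, and `compile_metric_query` are hypothetical, and `sqlite3` stands in for the warehouse.

```python
import sqlite3

# Hypothetical governed semantic model: the only metric and dimension names the LLM may pick.
METRICS = {
    "revenue": {"table": "fct_revenue", "expr": "SUM(recognized_amount)", "time_col": "recognized_on"},
}
DIMENSIONS = {"region"}


def compile_metric_query(metric: str, group_by: str, start: str, end: str) -> tuple[str, tuple[str, str]]:
    """Compile a metric request into SQL; the SQL text comes from the model, not the LLM."""
    if metric not in METRICS or group_by not in DIMENSIONS:
        raise ValueError(f"unknown metric or dimension: {metric!r}, {group_by!r}")
    m = METRICS[metric]
    sql = (
        f"SELECT {group_by}, {m['expr']} AS {metric} FROM {m['table']} "
        f"WHERE {m['time_col']} BETWEEN ? AND ? "
        f"GROUP BY {group_by} ORDER BY {group_by}"
    )
    return sql, (start, end)  # dates are bound as parameters


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fct_revenue (region TEXT, recognized_amount REAL, recognized_on TEXT)")
conn.executemany("INSERT INTO fct_revenue VALUES (?, ?, ?)", [
    ("EMEA", 100.0, "2025-10-15"), ("EMEA", 40.0, "2025-12-31"),
    ("APAC", 70.0, "2025-11-01"), ("APAC", 999.0, "2026-01-05"),
])
sql, params = compile_metric_query("revenue", "region", "2025-10-01", "2025-12-31")
print(conn.execute(sql, params).fetchall())  # [('APAC', 70.0), ('EMEA', 140.0)]
```

Because metric and dimension names are validated against the model before they reach the SQL, the LLM cannot inject arbitrary expressions, and every consumer of “revenue” gets the same calculation.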

BI tool integration varies by vendor. Some AI analysts embed within existing BI platforms (Tableau, Power BI, Looker), appearing as conversational interfaces alongside traditional dashboards. Others operate standalone but share the same underlying semantic model.

The key question is: can your AI analyst and your dashboards use the same definitions, ensuring consistency across modalities?

Warehouse and lakehouse compatibility determines what data sources the AI analyst can access. Most enterprise AI analysts support major cloud warehouses (Snowflake, Databricks, BigQuery, Redshift) through standard connection protocols. Broader compatibility with on-premises systems, legacy databases, and real-time streaming platforms varies significantly by vendor.

Metrics standards and governance frameworks like OpenMetrics and Common Metrics Layer (CML) are emerging to create interoperability between tools. AI analysts that adopt these standards can inherit metric definitions from your BI layer, data catalog, or governance platform—reducing duplicate configuration and ensuring consistency.

API-first architecture signals vendor maturity. Organizations should evaluate whether an AI analyst offers:

- REST APIs for programmatic querying

- Webhook integration for embedding in workflows

- Export capabilities for results and audit logs

- Authentication and authorization APIs that integrate with enterprise SSO

The best AI analyst implementations treat the conversational interface as one component in an integrated analytics ecosystem, not a replacement for everything else. Teams should map their current stack—semantic layers, catalogs, BI tools, warehouses—and evaluate how an AI analyst would connect to and enhance these existing platforms.

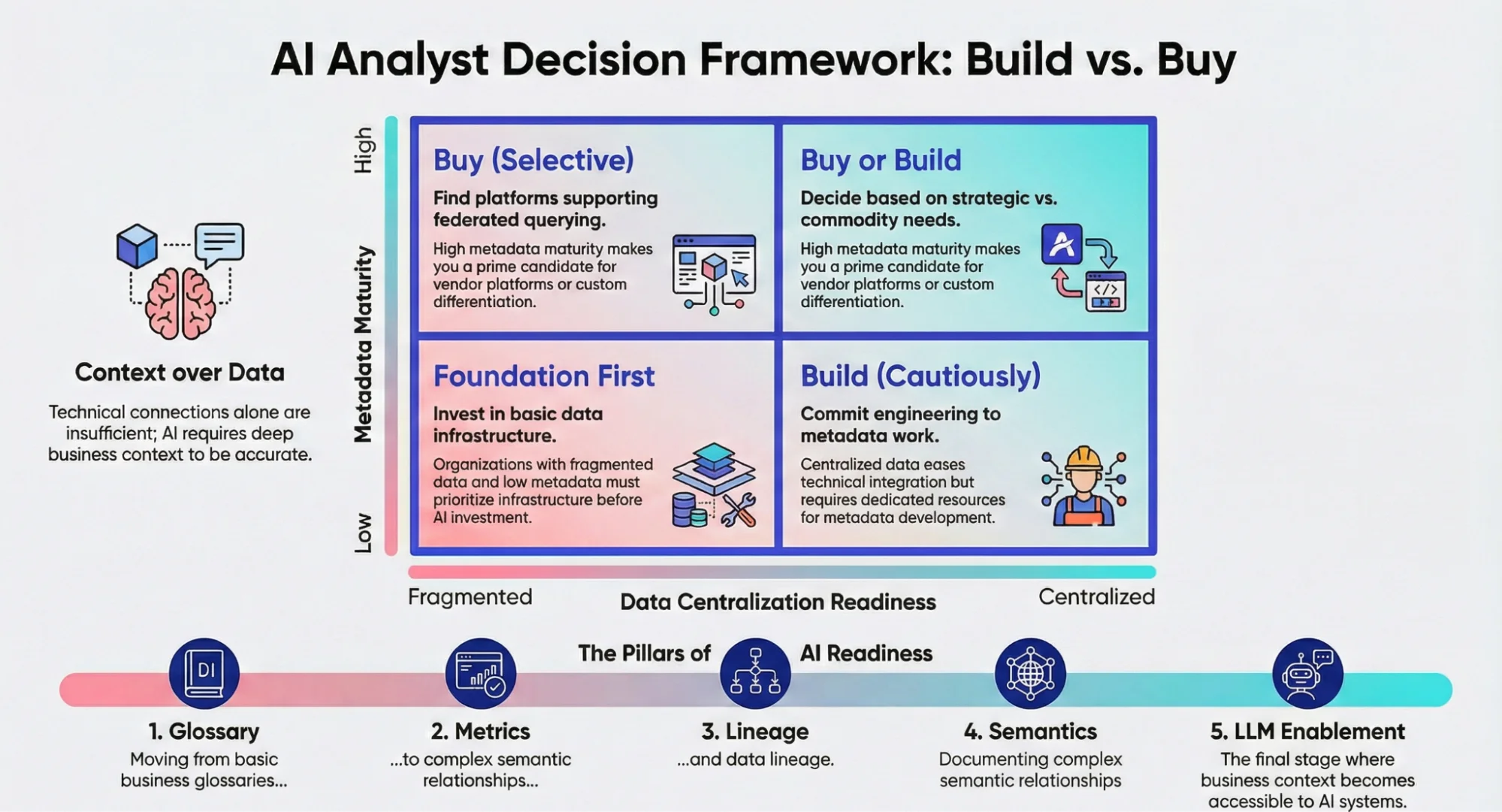

Build vs. buy: Choosing your AI analyst approach

Permalink to “Build vs. buy: Choosing your AI analyst approach”The “build vs. buy” question for AI analysts is more nuanced than for traditional software. Organizations can build conversational interfaces using LLM APIs and open-source frameworks, but the real complexity lies in everything around the LLM: context engineering, semantic integration, security, governance, and ongoing maintenance.

Build vs. buy: Choosing your AI analyst approach. Source: Atlan.

When to build

Building an agent in-house can be cost-effective if your organization has:

- Conversational analytics as a core competitive differentiator for your product

- Unique data patterns or analytical workflows not supported by commercial tools

- A strong engineering team available with expertise in LLMs, data architecture, and security

- A long time horizon (6-12+ months to production)

- A willingness to maintain and evolve the system as LLM technology changes rapidly

When to buy

Investing in an out-of-the-box agent is a wise business decision if your organization has:

- Analytics as an important function but not core IP

- Standard analytical patterns (similar to other enterprises in your industry)

- Limited engineering resources or competing technical priorities

- Shorter time-to-value requirements (weeks to months)

- Governance-first requirements (compliance, audit, explainability) that commercial tools have solved

- Desire to leverage vendor expertise in prompt engineering, context optimization, and security

Hybrid approaches are increasingly common: organizations buy a commercial AI analyst platform but customize it heavily with internal semantic layers, custom data connectors, and proprietary business logic. This balances faster deployment with organizational specificity.

The build decision often underestimates ongoing costs. LLM APIs, compute for query processing, and engineering maintenance add up quickly. More importantly, metadata governance and context engineering represent continuous work, not one-time projects. Organizations that build should budget not just for initial implementation but for ongoing evolution as data sources, definitions, and business logic change.

The MIT research showing 95% AI pilot failure rates found that internally built solutions succeed only about 33% of the time, while specialized vendor solutions succeed 67% of the time. This isn’t because internal teams are less capable; it’s because building production-grade AI analyst systems requires expertise in multiple domains (LLMs, data engineering, semantic modeling, security, UX) that few organizations maintain in-house.

The next step with AI analysts

AI analysts represent a genuine step forward in analytics democratization. They don’t replace analysts, BI tools, or dashboards; they add a conversational layer that makes data accessible to more people. The technology works. The question is whether organizations are ready to deploy it effectively.

Three factors separate successful implementations from failed pilots:

Organizational context as the unlock. The most sophisticated LLM cannot compensate for missing business definitions, ungoverned metrics, or incomplete lineage. Organizations that invest in metadata maturity before—or alongside—AI analyst deployment see dramatically better accuracy, adoption, and ROI.

Governance-first approach. AI analysts make it easy to generate answers at scale, which means governance becomes more critical, not less. Clear ownership, certification workflows, and conflict resolution processes prevent the erosion of trust that kills analytics initiatives.

Realistic expectations and phased deployment. Start with well-defined use cases, strong metadata coverage for those domains, and champion user groups. Iterate, measure, and expand based on results. Enterprise-wide deployments on day one typically fail.

The organizations succeeding with AI analysts treat them as one component of a modern analytics stack—integrated with semantic layers, governed by metadata platforms, secured through existing infrastructure, and measured against clear business outcomes. They recognize that “conversational” is an interface improvement, not a replacement for analytical rigor.

If you’re evaluating AI analysts, start with two questions:

- What’s our metadata maturity level? If you can’t answer basic questions about business definitions, metric calculations, and data lineage, address that first.

- What specific use case would demonstrate value quickly? Pick something that’s currently time-consuming, happens frequently, and matters to business outcomes.

The future of analytics isn’t purely conversational, purely dashboards, or purely analyst-driven. It’s all three, integrated thoughtfully and grounded in trustworthy data.

FAQs about AI Analysts

1. How is an AI analyst different from a traditional BI dashboard?

AI analysts interpret natural-language questions and perform analysis on demand, while dashboards rely on pre-built visualizations. AI analysts can handle follow-up questions and adjust the analysis based on conversational context. Dashboards excel at monitoring known metrics; AI analysts excel at exploratory analysis and ad-hoc questions that weren’t anticipated when the dashboard was built.

2. What makes an AI analyst “explainable”?

Explainability means showing its work: which data sources were queried, what calculations were applied, what filters or assumptions were used, and how confident the system is in the answer. Enterprise AI analysts cite their sources the way academic papers cite references. This transparency lets users verify correctness, understand limitations, and build trust in AI-generated insights.
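Here is a minimal sketch of what “showing its work” can look like as a data structure, assuming a hypothetical `ExplainedAnswer` payload; the field names are illustrative, not any vendor’s schema. Refusing to construct an answer that cites no source is one simple way to make explainability a hard requirement rather than a convention.

```python
from dataclasses import dataclass, field


@dataclass
class ExplainedAnswer:
    """Hypothetical answer payload that carries its own explanation."""
    answer: str
    sources: list[str]       # tables or certified datasets that were queried
    calculation: str         # the metric logic that was actually applied
    filters: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)
    confidence: float = 0.0  # 0.0-1.0, however the system estimates it

    def __post_init__(self) -> None:
        if not self.sources:
            raise ValueError("an explainable answer must cite at least one source")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")

    def render(self) -> str:
        """Format the answer with numbered citations, like a reference list."""
        lines = [self.answer, "", "Sources:"]
        lines += [f"  [{i}] {s}" for i, s in enumerate(self.sources, start=1)]
        lines.append(f"Calculation: {self.calculation}")
        if self.filters:
            lines.append("Filters: " + "; ".join(self.filters))
        if self.assumptions:
            lines.append("Assumptions: " + "; ".join(self.assumptions))
        lines.append(f"Confidence: {self.confidence:.0%}")
        return "\n".join(lines)


result = ExplainedAnswer(
    answer="Q4 revenue by region (illustrative result)",
    sources=["analytics.orders", "analytics.customers"],
    calculation="SUM(net_amount) grouped by customer region",
    filters=["order_date in Q4 2025"],
    assumptions=["revenue is net of refunds"],
    confidence=0.8,
)
print(result.render())
```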

3. Do I need a semantic layer to use an AI analyst?

Technically, no: AI analysts can query data warehouses directly. In practice, semantic layers dramatically improve accuracy and performance. They provide business-friendly abstractions (so the AI can work with “revenue” instead of reconstructing complex SQL joins), centralized metric definitions (ensuring consistency), and caching (making queries faster). Organizations with semantic layers see better AI analyst results.
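A minimal sketch of the business-friendly abstraction idea: a hypothetical in-memory metric registry stores one governed definition of “revenue”, including the join it depends on, and compiles a business-level request into SQL. The table names, column names, and `compile_query` helper are placeholders; production semantic layers, and their caching, are considerably richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A centrally defined business metric (hypothetical semantic-layer entry)."""
    name: str
    sql: str          # aggregate expression
    from_clause: str  # tables and joins the metric depends on
    description: str


# One governed definition of "revenue" that every question reuses.
METRICS = {
    "revenue": Metric(
        name="revenue",
        sql="SUM(o.net_amount)",
        from_clause=("analytics.orders AS o "
                     "JOIN analytics.customers AS c ON o.customer_id = c.id"),
        description="Net order amount after discounts and refunds",
    ),
}


def compile_query(metric_name: str, group_by: list[str]) -> str:
    """Turn a business-level request into SQL using the registered definition."""
    try:
        metric = METRICS[metric_name]
    except KeyError:
        raise ValueError(f"no governed definition for metric '{metric_name}'") from None
    select_cols = ", ".join(group_by + [f"{metric.sql} AS {metric.name}"])
    sql = f"SELECT {select_cols} FROM {metric.from_clause}"
    if group_by:
        sql += " GROUP BY " + ", ".join(group_by)
    return sql


print(compile_query("revenue", ["c.region"]))
```

Because the AI only has to choose `revenue` and a grouping column, it never needs to rediscover the join or the aggregation, and every question resolves to the same definition.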

4. How do you prevent AI analysts from hallucinating wrong answers?

The primary safeguard is organizational context. Without standardized definitions, complete lineage, and governed metrics, AI analysts struggle to tell which “revenue” calculation to use or whether the underlying data is trustworthy. Wrong answers stem more often from these context gaps than from model hallucinations alone, so strong metadata governance is the best defense against incorrect answers.
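A minimal sketch of “refuse rather than guess”, assuming a hypothetical glossary in which each business term has an owner, an expression, and a certification flag. The `resolve_term` helper returns a definition only when exactly one certified definition exists; missing, uncertified, or conflicting definitions raise an error so the system can ask for clarification or route the conflict to the metric owners.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Definition:
    """A hypothetical glossary entry for a business term."""
    term: str
    owner: str
    expression: str
    certified: bool


class UnresolvedTermError(Exception):
    """Raised when the system should ask rather than guess."""


def resolve_term(term: str, glossary: list[Definition]) -> Definition:
    """Return the single certified definition of `term`, or raise UnresolvedTermError."""
    matches = [d for d in glossary if d.term.lower() == term.lower()]
    if not matches:
        raise UnresolvedTermError(f"'{term}' is not defined in the glossary")
    certified = [d for d in matches if d.certified]
    if len(certified) == 1:
        return certified[0]
    owners = ", ".join(sorted({d.owner for d in matches}))
    if certified:
        # Several teams certified different calculations: a governance conflict.
        raise UnresolvedTermError(
            f"'{term}' has {len(certified)} certified definitions (owners: {owners})")
    raise UnresolvedTermError(f"'{term}' has no certified definition (owners: {owners})")


GLOSSARY = [
    Definition("revenue", "finance", "SUM(net_amount)", certified=True),
    Definition("revenue", "sales", "SUM(gross_amount)", certified=False),
    Definition("churn", "product", "lost_customers / start_customers", certified=False),
]

print(resolve_term("Revenue", GLOSSARY).expression)  # SUM(net_amount)
```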

5. Can AI analysts handle complex analytical workflows?

Modern AI analysts can perform multi-step analysis, including aggregations, trend detection, cohort comparisons, and anomaly identification. However, they work best when organizational context helps them understand business logic, not just data structure. Complex workflows benefit from strong metadata foundations that explain why certain calculations matter and how data should be interpreted.
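As one illustration of a multi-step workflow, here is a minimal aggregate-then-flag sketch that uses a z-score rule (flag values more than a chosen number of standard deviations from the mean). The z-score test and the two-standard-deviation default are assumptions chosen for simplicity, not the method any particular AI analyst uses. Business context would still decide, for example, whether a seasonal spike counts as an anomaly.

```python
from collections import defaultdict
from statistics import mean, pstdev


def aggregate(rows: list[tuple[str, float]]) -> dict[str, float]:
    """Step 1: sum raw (period, amount) rows into one value per period."""
    totals: dict[str, float] = defaultdict(float)
    for period, amount in rows:
        totals[period] += amount
    return dict(sorted(totals.items()))


def flag_anomalies(series: dict[str, float], threshold: float = 2.0) -> list[str]:
    """Step 2: flag periods more than `threshold` population std devs from the mean."""
    values = list(series.values())
    if len(values) < 3:
        return []  # too little history to judge
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # a perfectly flat series has no outliers
    return [p for p, v in series.items() if abs(v - mu) / sigma > threshold]


rows = [(f"2025-{m:02d}", 50.0) for m in range(1, 11)] * 2  # two rows per month
rows.append(("2025-07", 100.0))                             # one unusually large month
monthly = aggregate(rows)
print(flag_anomalies(monthly))  # ['2025-07']
```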

6. What’s the typical implementation timeline for an AI analyst?

The timeline depends on metadata maturity. Organizations with strong governance, clear definitions, and documented lineage can deploy in weeks; those that first need to build foundational context should expect 2-4 months. The upfront investment in context engineering pays dividends in accuracy and user adoption.

7. Will AI analysts replace data analysts and BI teams?

AI analysts augment rather than replace human analysts. They handle routine questions and exploratory analysis, freeing analysts for strategic work like designing data products, building complex models, and providing business context that AI cannot infer. Analyst roles evolve rather than disappear: research shows that 87% of data analysts report increased strategic importance and only 17% express deep concern about job replacement.

8. How do AI analysts handle data security and permissions?

AI analysts must respect existing data access controls, prevent unauthorized disclosure through conversational interfaces, and maintain audit trails of all queries. Integration with governance platforms ensures AI analysts inherit organizational security policies rather than requiring separate permission management. Row-level security, column masking, and audit logging are essential for enterprise deployment.
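A minimal sketch of the three controls named above, applied to an in-memory result set: row-level filtering by region, masking of PII columns, and one audit entry per question. The `User` fields, the `PII_COLUMNS` policy, and the in-memory `AUDIT_LOG` are hypothetical. In a real deployment these rules should be inherited from the warehouse or governance platform and enforced before data reaches the AI layer; they are hard-coded here only to keep the sketch self-contained.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class User:
    """Hypothetical user profile; real systems would inherit this from IAM/governance."""
    name: str
    regions: frozenset[str]  # row-level scope
    can_see_pii: bool = False


PII_COLUMNS = {"email", "phone"}  # hypothetical masking policy
AUDIT_LOG: list[dict] = []        # stand-in for a durable audit store


def secure_results(user: User, question: str, rows: list[dict]) -> list[dict]:
    """Apply row-level security, mask PII columns, and record an audit entry."""
    visible = [r for r in rows if r.get("region") in user.regions]
    if not user.can_see_pii:
        visible = [
            {k: ("***" if k in PII_COLUMNS else v) for k, v in r.items()}
            for r in visible
        ]
    AUDIT_LOG.append({
        "user": user.name,
        "question": question,
        "rows_returned": len(visible),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return visible


analyst = User(name="ana", regions=frozenset({"EMEA"}))
rows = [
    {"region": "EMEA", "email": "a@example.com", "revenue": 10},
    {"region": "APAC", "email": "b@example.com", "revenue": 20},
]
print(secure_results(analyst, "revenue by region", rows))
# [{'region': 'EMEA', 'email': '***', 'revenue': 10}]
```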
