OpenLineage: Understanding the Origins, Architecture, Features, Integrations & More


OpenLineage is an open-source project aimed at providing standardized metadata tracking for data workflows. It enables organizations to capture, manage, and visualize lineage data, allowing them to trace data sources, transformations, and destinations.


OpenLineage integrates with modern data tools like Apache Airflow, dbt, and Apache Spark, making it easier to monitor data pipelines, troubleshoot errors, and ensure compliance with data governance policies.

In practice, OpenLineage standardizes how lineage metadata is captured from data engineering workflows as they run.

OpenLineage grew out of Marquez, a lineage project started at WeWork. It is now a community project maintained by contributors from other open-source projects, such as Amundsen, DataHub, pandas, and Spark. It follows in the footsteps of observability standards such as OpenTelemetry and OpenTracing.

This guide covers OpenLineage’s origin story, architecture, features, setup, and alternatives.


Table of contents #

- What is OpenLineage?

- OpenLineage architecture

- OpenLineage features

- OpenLineage use cases

- How to set up OpenLineage

- OpenLineage alternatives: Other open-source data lineage tools

- Atlan for Data Security & Compliance

- Wrapping up

- FAQs on OpenLineage

- OpenLineage: Related reads

What is OpenLineage? #

OpenLineage is an open-source standard for capturing lineage metadata from data pipelines and their dependencies.

You can use OpenLineage to capture lineage metadata from your workflows and persist it in a supported backend, such as Astro, Egeria, Manta, or Marquez.

OpenLineage aims to be the answer to a question that frustrates most data practitioners:

“If a pipeline fails, how are you supposed to solve the problem quickly without knowing the root cause, how it’s affecting upstream and downstream applications, and how to prevent it from happening again?”

Note: OpenLineage isn’t a metadata server. It is a standard or a specification for lineage metadata. Marquez is an example of a metadata server that can receive and analyze OpenLineage events.

Before delving into the architecture and capabilities, let’s explore the history and goals behind the OpenLineage project.

What is the goal of OpenLineage? #

According to Michael Collado, a Staff Software Engineer at Astronomer, the goal of OpenLineage is to:

“Reduce issues and speed up recovery by exposing hidden dependencies and informing data practitioners about the state of that data and the potential blast radius of changes.”

Here’s why we need this level of visibility into our data pipelines.

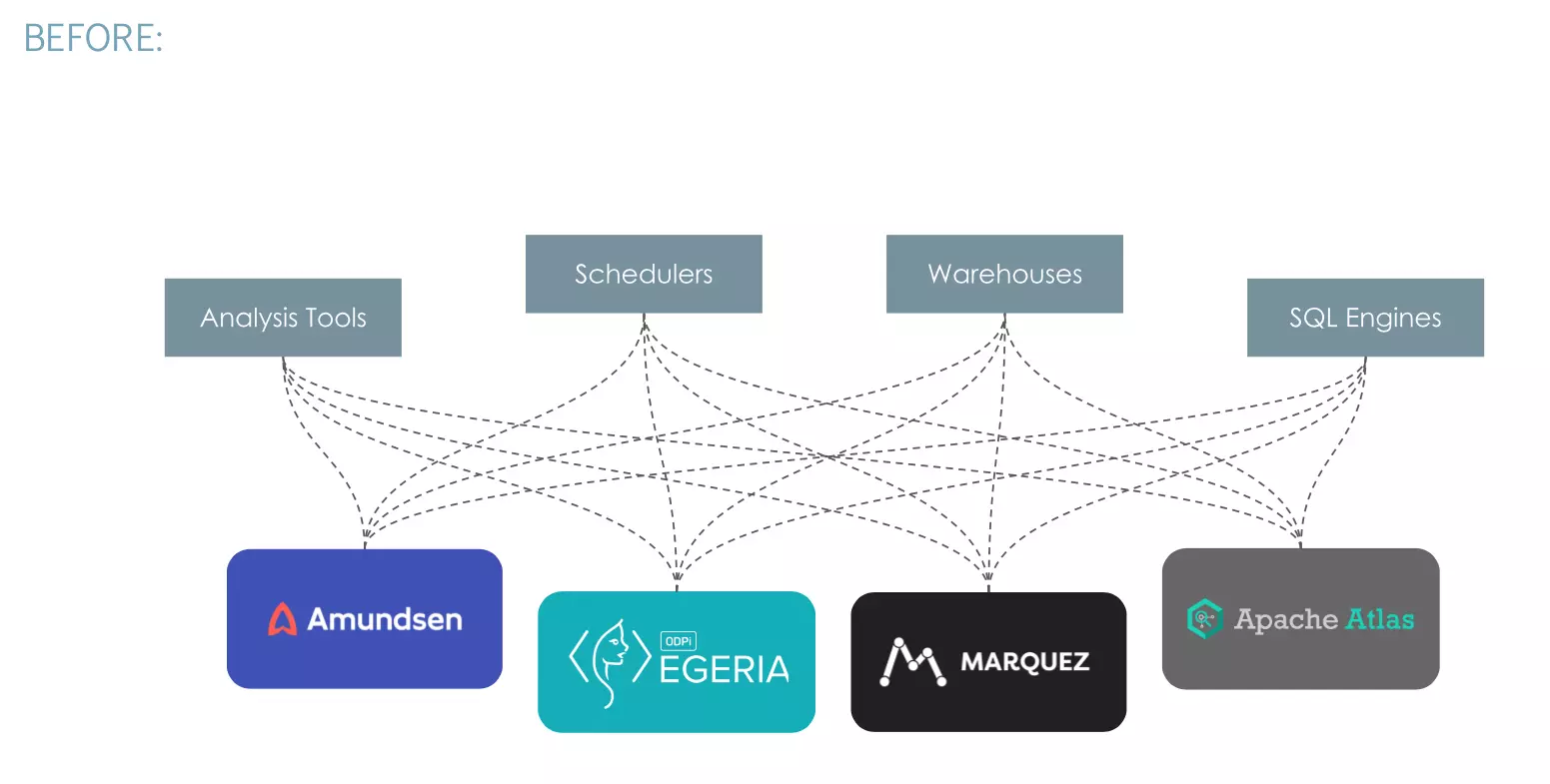

Every change to a data workflow can affect someone’s work. For instance, if you maintain a custom integration with Spark and a new version is released, you must update your setup to stay compatible with it.

However, you may not know which applications use data from Spark or how the update will affect them. The update might also cause errors, break certain workflows, or lead to other unexpected outcomes.

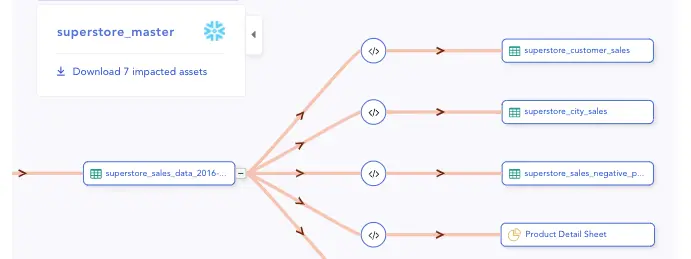

The data stack without OpenLineage. Source: OpenLineage Docs.

Without proper visibility into data flow, it’s tough to analyze the impact and inform the people involved.

That’s where OpenLineage can help.

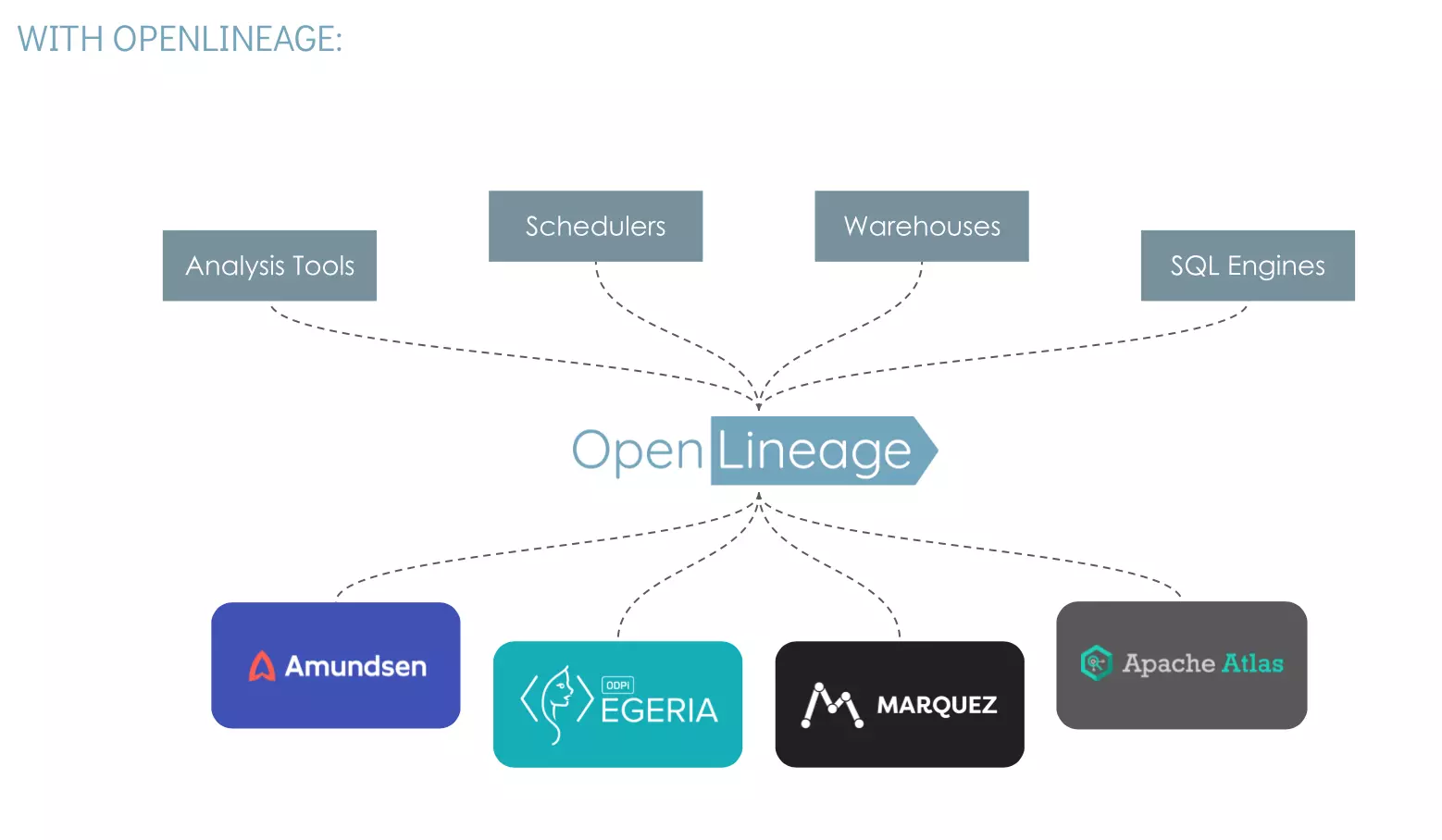

How OpenLineage can help you get data flow visibility and collaborate more effectively with other data practitioners. Source: OpenLineage Docs.

With OpenLineage, you can track how changes to Spark will impact the connected pipelines, as well as any dependencies on other frameworks or libraries.

This helps you plan and prepare for potential outcomes and reduce the risk of workflows breaking because of compatibility issues.

Data engineer Vinodhini Duraisamy highlights the difference OpenLineage can make. Source: Twitter.

The origin story of OpenLineage stems from similar aspirations. So, let’s take a quick detour.

A brief history of OpenLineage: The Google Maps for data pipelines #

As data pipelines and the modern data stack matured, data engineers’ priorities shifted. Building pipelines was no longer enough. A bigger priority became understanding what happens inside existing pipelines and drawing insights from them.

In 2017, Julien Le Dem, one of the co-founders of OpenLineage, was a Senior Principal Engineer at WeWork. His team kept running into the same problem: the “need to build a map of how all the jobs and datasets depend on each other.”

“It was about providing this visibility, understanding where the data you’re consuming is coming from, and understanding where the data you’re producing is going to.”

This led to the development of Marquez, the precursor to OpenLineage, around 2017.

The goal was to build Google Maps for data pipelines. Eventually, WeWork open-sourced the project to solve pipeline visibility problems for all companies.

In 2020, the team behind Marquez started focusing on data observability and decided to launch OpenLineage as a separate project. The aim was to set up an end-to-end management layer for your data ecosystem.

Here’s how Julien Le Dem highlights the importance of focusing on OpenLineage:

“Data lineage is the backbone of DataOps. Lineage can help reduce fragmentation and duplication of efforts across industry players, and enable the development of various tools and solutions in terms of data operations, governance, and compliance.”

OpenLineage is still evolving. Even so, a growing number of data tools and teams have adopted it to understand and optimize complex data pipelines.

Data engineer Sarah Krasnik on the problems that OpenLineage can solve for data teams. Source: Twitter.

OpenLineage architecture #

OpenLineage has an extensible architecture that can be integrated with a wide range of data tools and platforms, such as Apache Airflow, Apache Spark, Flink, dbt, and Snowflake.

Before looking into the architecture, let’s familiarize ourselves with OpenLineage terminology.

OpenLineage terminology #

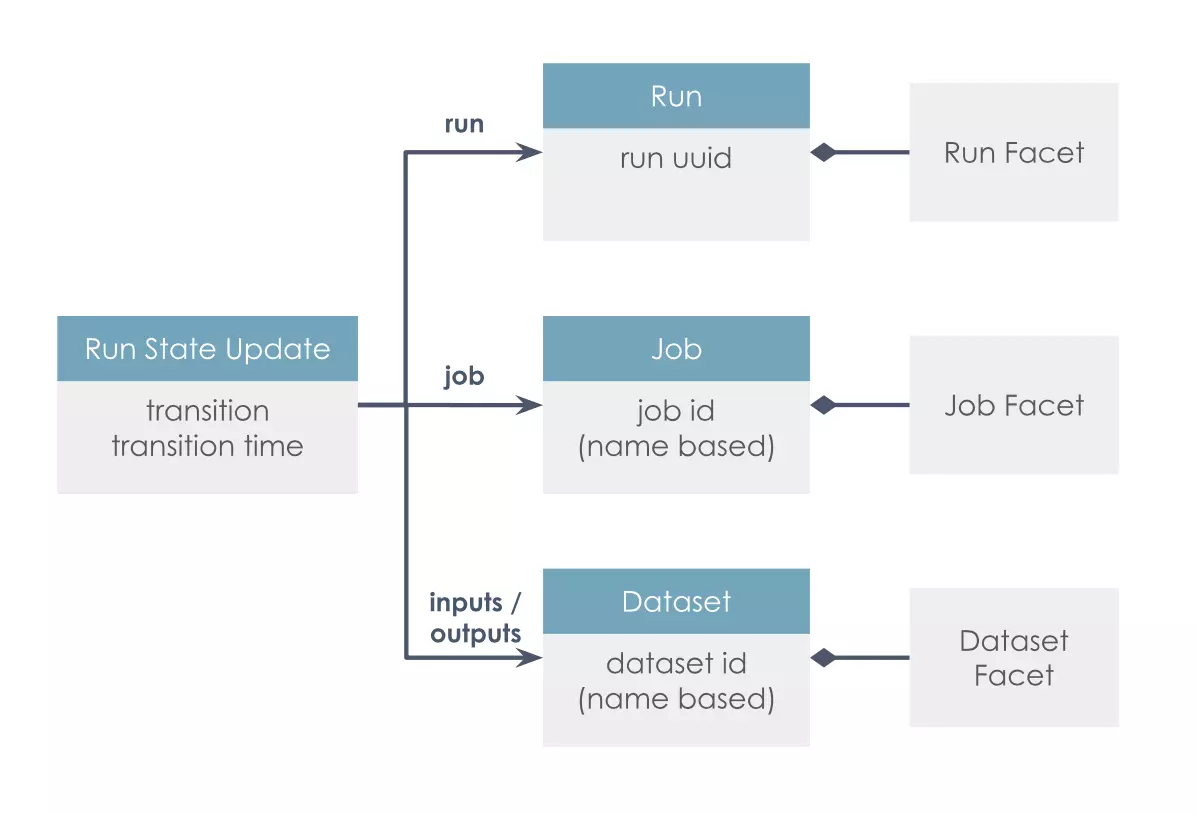

The OpenLineage architecture uses terms such as:

- Dataset: A dataset is a unit of data, such as a table in a database or an object in a cloud storage bucket. A dataset changes when a job that writes to it completes.

- Job: A job is a data pipeline process that creates or consumes datasets. Jobs can evolve, and documenting these changes is crucial to understanding your pipeline’s mechanism.

- Run: A run is a single execution of a job. It records information about that execution, such as its start and completion (or failure) time. Comparing runs helps you observe how jobs change over time.

- Run state update: Every run has a state. When something important happens in your pipeline, such as a job starting or finishing, a Run State Update is emitted. Each Run State Update can include information about the Job, the Run, and the Datasets involved.

- Facet: Facets add context to lineage events. They are pieces of metadata, either standard or user-defined, that provide further information about Jobs, Runs, and Datasets. For example, a Dataset Facet could be a schema, a Job Facet the source code, and a Run Facet the batch ID.

The core entities of the OpenLineage architecture. Source: GitHub.
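To make the terminology concrete, here is a minimal Python sketch of a single Run State Update. It is expressed as a plain dictionary shaped like an OpenLineage `RunEvent` and uses only the standard library, not the official client. The job name, dataset names, namespaces, and producer URL are hypothetical. The spec version in `schemaURL` is an assumption, so check it against the current OpenLineage specification.

```python
import json
import uuid
from datetime import datetime, timezone

# Hypothetical producer URI identifying the code that emits the event.
PRODUCER = "https://example.com/my-pipeline"
# Assumed spec version; verify against the current OpenLineage spec.
SCHEMA_URL = "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent"


def make_run_event(event_type, run_id, job_name, inputs, outputs,
                   namespace="example_namespace"):
    """Build one run state update as a dict shaped like an OpenLineage RunEvent."""
    return {
        "eventType": event_type,  # e.g. "START", "COMPLETE", "FAIL"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id, "facets": {}},  # the execution
        "job": {"namespace": namespace, "name": job_name, "facets": {}},  # the process
        # Datasets are identified by a (namespace, name) pair.
        "inputs": [{"namespace": ns, "name": n, "facets": {}} for ns, n in inputs],
        "outputs": [{"namespace": ns, "name": n, "facets": {}} for ns, n in outputs],
        "producer": PRODUCER,
        "schemaURL": SCHEMA_URL,
    }


run_id = str(uuid.uuid4())  # one run = one execution of the job
event = make_run_event(
    "START",
    run_id,
    "daily_orders_rollup",  # hypothetical job
    inputs=[("postgres://db.example.com:5432", "shop.public.orders")],
    outputs=[("postgres://db.example.com:5432", "shop.analytics.daily_orders")],
)
print(json.dumps(event, indent=2))
```

A later event for the same job execution would reuse the same `runId` with `eventType` set to `COMPLETE` or `FAIL`.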

Now let’s look at OpenLineage’s architecture that makes it possible to observe datasets as they move through data pipelines.

Architecture #

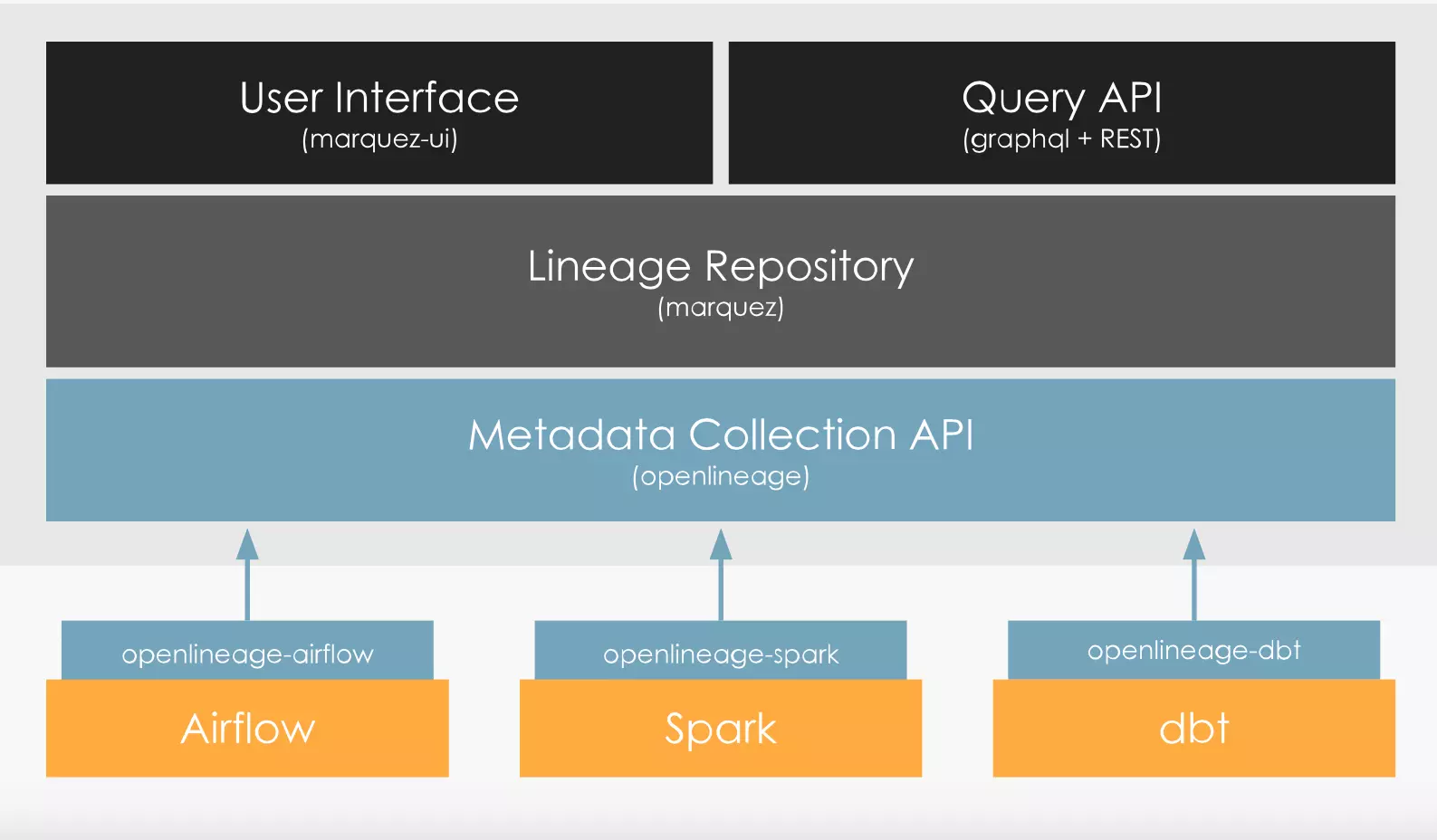

The OpenLineage architecture includes the following:

- The metadata collection APIs that integrate with various data sources and data pipeline tools (Spark, Airflow, and dbt)

- A lineage server (Marquez) that acts as the central repository to store metadata about lineage events

- A web-based UI for visualizing data lineage and dependencies, plus REST APIs for capturing and querying metadata

- Libraries for common languages

The OpenLineage platform for lineage data collection and analysis. Source: OpenLineage.
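Because Marquez exposes a REST endpoint for OpenLineage events, a producer can in principle be as simple as an HTTP POST. The sketch below makes three assumptions:

- a local Marquez instance listens on `http://localhost:5000`, the port used in Marquez's quickstart, which may differ in your deployment;
- events go to its `POST /api/v1/lineage` endpoint;
- `build_lineage_request` and `send` are hypothetical helper names, not part of any client library.

```python
import json
import urllib.request

# Assumed Marquez API address; adjust to your deployment.
MARQUEZ_URL = "http://localhost:5000"


def build_lineage_request(event, base_url=MARQUEZ_URL):
    """Prepare (but do not send) a POST of one OpenLineage event to Marquez."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/lineage",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and return the HTTP status (raises on network or HTTP errors)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

With Marquez running, `send(build_lineage_request(event))` would post an event dictionary. The job and its datasets should then become visible through the Marquez UI and API. Keeping request construction separate from sending makes the payload easy to inspect without a server.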

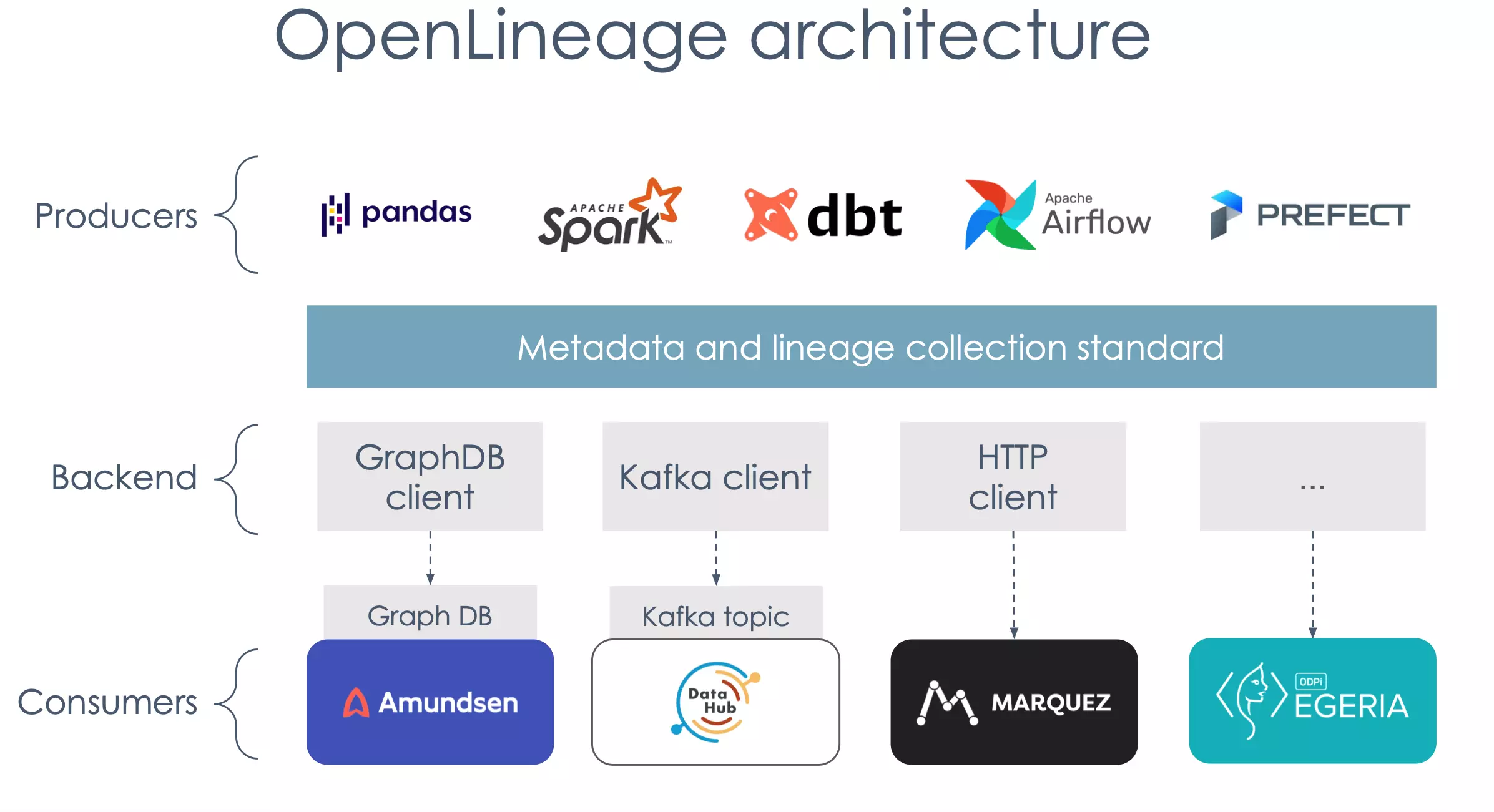

A simpler way to look at the OpenLineage architecture is to break it down into three essential components:

- Producers: The components that capture metadata about data pipelines and their dependencies and send it to an OpenLineage-compatible backend

- Backend: The infrastructure that helps you store, process, and analyze metadata

- Consumers: The components that fetch metadata from the backend and feed it to web-based interfaces so that you can visualize the data lineage mapping

OpenLineage architecture. Source: The OpenLineage slides from the 2021 Airflow Summit.
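The producer, backend, and consumer split can be illustrated with a toy in-memory backend. This is not how Marquez is implemented, and `InMemoryLineageBackend` is a hypothetical class. It stores incoming events. When a run completes, it records an edge from each input dataset to each output dataset, so a consumer can ask where a dataset came from.

```python
from collections import defaultdict


class InMemoryLineageBackend:
    """Toy stand-in for a lineage backend (hypothetical; not Marquez's API)."""

    def __init__(self):
        self.events = []                    # raw event log
        self.downstream = defaultdict(set)  # dataset -> datasets built from it
        self.upstream = defaultdict(set)    # dataset -> datasets it was built from

    def ingest(self, event):
        """Producer-facing: store an event and, on COMPLETE, record lineage edges."""
        self.events.append(event)
        if event.get("eventType") != "COMPLETE":
            return
        for src in event.get("inputs", []):
            for dst in event.get("outputs", []):
                s = (src["namespace"], src["name"])
                d = (dst["namespace"], dst["name"])
                self.downstream[s].add(d)
                self.upstream[d].add(s)

    def sources_of(self, dataset):
        """Consumer-facing: direct upstream datasets of `dataset`, sorted."""
        return sorted(self.upstream.get(dataset, set()))


backend = InMemoryLineageBackend()
backend.ingest({
    "eventType": "COMPLETE",
    "inputs": [{"namespace": "warehouse", "name": "raw.orders"}],
    "outputs": [{"namespace": "warehouse", "name": "analytics.daily_orders"}],
})
print(backend.sources_of(("warehouse", "analytics.daily_orders")))
# [('warehouse', 'raw.orders')]
```

Recording edges only on `COMPLETE` mirrors the idea that a dataset changes when the job writing to it completes. A real backend would also keep `START` and `FAIL` events to track run history.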

OpenLineage features #

OpenLineage offers a range of features to collect, track, and analyze lineage for your data ecosystem. Even though it’s still under development, some of the key features include:

- Capture metadata:

  - Technical metadata, such as the dataset schema, version, ownership details for datasets and jobs, run time, job dependencies, and more

  - Dataset-level and column-level data quality metrics, such as row count, byte size, null count, distinct count, average, min, max, and quantiles

- Trace column-level lineage for Apache Spark data

- Integrate with ETL frameworks, data orchestration engines, data quality engines, and data lineage tools:

  - Data stores and warehouses, such as Amazon S3, Amazon Redshift, HDFS, Google BigQuery, PostgreSQL, Azure Synapse, and Snowflake

  - Orchestration and processing tools, such as Apache Airflow, Apache Spark, and dbt

  - Data lineage tools, such as Egeria
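Facets carry most of the features listed above. The sketch below uses plain dictionaries to show roughly how three facets attach to datasets: a schema facet, a data quality metrics input facet, and a column-level lineage facet. The shapes follow our reading of the OpenLineage spec. All dataset names and numbers are made up for illustration. The spec's required `_producer` and `_schemaURL` keys on each facet are omitted for brevity, and `input_fields_for` is a hypothetical helper.

```python
# Hypothetical input dataset with a schema facet and a data quality input facet.
orders = {
    "namespace": "warehouse",
    "name": "raw.orders",
    "facets": {
        "schema": {
            "fields": [
                {"name": "order_id", "type": "BIGINT"},
                {"name": "amount", "type": "DECIMAL(10,2)"},
            ]
        },
    },
    # Data quality metrics are modeled as an *input* dataset facet.
    "inputFacets": {
        "dataQualityMetrics": {
            "rowCount": 1200,  # illustrative values only
            "bytes": 48000,
            "columnMetrics": {
                "amount": {"nullCount": 3, "distinctCount": 950, "min": 0.5, "max": 999.0},
            },
        },
    },
}

# Hypothetical output dataset: total_amount is derived from raw.orders.amount.
daily_orders = {
    "namespace": "warehouse",
    "name": "analytics.daily_orders",
    "facets": {
        "columnLineage": {
            "fields": {
                "total_amount": {
                    "inputFields": [
                        {"namespace": "warehouse", "name": "raw.orders", "field": "amount"},
                    ]
                },
            }
        },
    },
}


def input_fields_for(dataset, column):
    """Return (namespace, name, field) tuples feeding `column`, per its columnLineage facet."""
    lineage = dataset.get("facets", {}).get("columnLineage", {}).get("fields", {})
    return [
        (f["namespace"], f["name"], f["field"])
        for f in lineage.get(column, {}).get("inputFields", [])
    ]


print(input_fields_for(daily_orders, "total_amount"))
# [('warehouse', 'raw.orders', 'amount')]
```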

OpenLineage use cases #

OpenLineage can help you with several use cases, such as:

- Data observability with a map of your entire data ecosystem

- Root cause identification and impact analysis for data pipelines

- Impact planning to ensure changes occur with minimal risks

- Real-time anomaly detection

- Support compliance and governance requirements by capturing metadata about data lineage and dependencies

- Prioritize the right issues to ensure efficient data pipeline management

- Optimize ETL jobs and ensure data quality throughout the pipeline
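Impact analysis boils down to a graph traversal. Suppose you have already extracted dataset-to-dataset edges from your lineage backend; here they are a hypothetical hard-coded dictionary. A breadth-first search then returns the blast radius of a change, meaning every dataset downstream of it. `blast_radius` is an illustrative helper, not part of OpenLineage.

```python
from collections import deque


def blast_radius(edges, changed):
    """Return every dataset transitively downstream of `changed`.

    `edges` maps a dataset to the datasets built directly from it.
    """
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for child in edges.get(current, ()):
            if child not in seen:  # also guards against cycles
                seen.add(child)
                queue.append(child)
    return seen


# Hypothetical lineage edges.
edges = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["analytics.daily_orders", "analytics.customer_ltv"],
    "analytics.daily_orders": ["dashboards.revenue"],
}
print(sorted(blast_radius(edges, "raw.orders")))
# ['analytics.customer_ltv', 'analytics.daily_orders', 'dashboards.revenue', 'staging.orders']
```

Running the same search over reversed edges yields the upstream datasets, which are the candidates to inspect during root cause analysis.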

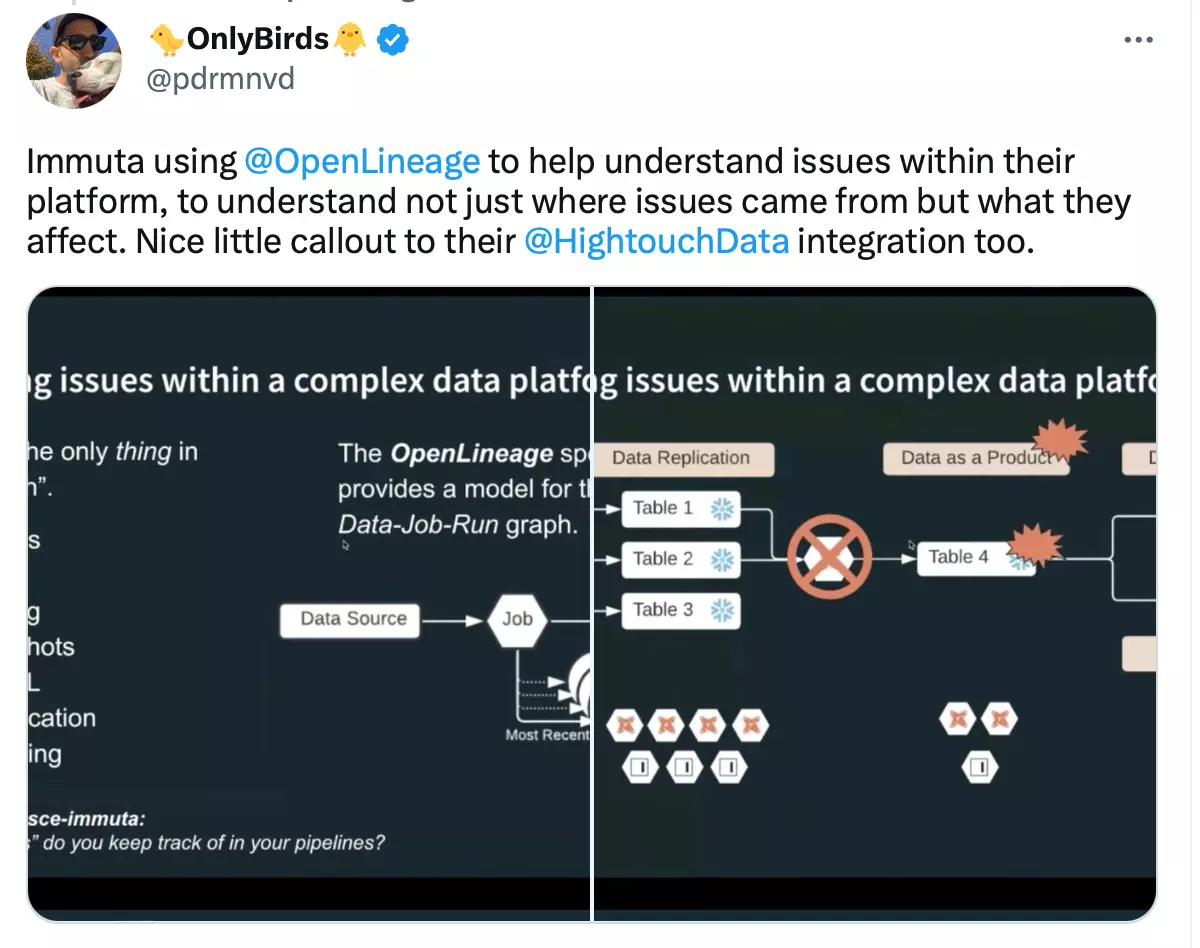

How Immuta uses OpenLineage, according to Pedram Navid, the former Head of Data at Hightouch. Source: Twitter.

Also check out how Northwestern Mutual simplified data observability with OpenLineage and Marquez.

How to set up OpenLineage #

Before getting started with OpenLineage, make sure that you have the following prerequisites in place:

- Docker 17.05+

- Docker Compose

- Marquez (as the OpenLineage HTTP backend)

The next step is to prepare the environment so that you can connect with the relevant data sources (like Snowflake) and orchestration tools (like Airflow). The OpenLineage GitHub repository provides clients for Python and Java.

For step-by-step instructions on connecting OpenLineage with your favorite data tools, see the integration guides in the OpenLineage documentation.

Once you’ve configured the OpenLineage integration for your tool stack, you can start capturing metadata about pipeline runs, jobs, datasets, and other relevant information.

This metadata is sent to your OpenLineage backend (such as Marquez) so that you can visualize it and understand how data flows across your landscape.
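Capturing run metadata usually means emitting a `START` event before a job and a `COMPLETE` or `FAIL` event after it. The Airflow, Spark, and dbt integrations handle this for you. The sketch below shows the same pattern by hand for a custom Python step:

- `tracked_run` and the `emit` callable are hypothetical. In practice `emit` might post the event to Marquez; here it appends to a list.
- Inputs, outputs, and facets are omitted for brevity.
- The `schemaURL` version is assumed.

```python
import uuid
from contextlib import contextmanager
from datetime import datetime, timezone

# Assumed spec version; verify against the current OpenLineage spec.
SCHEMA_URL = "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent"
PRODUCER = "https://example.com/my-pipeline"  # hypothetical producer URI


@contextmanager
def tracked_run(job_name, emit, namespace="example_namespace"):
    """Emit START before the wrapped step, then COMPLETE on success or FAIL on error."""
    run_id = str(uuid.uuid4())  # shared by every event of this run

    def event(event_type):
        return {
            "eventType": event_type,
            "eventTime": datetime.now(timezone.utc).isoformat(),
            "run": {"runId": run_id},
            "job": {"namespace": namespace, "name": job_name},
            "producer": PRODUCER,
            "schemaURL": SCHEMA_URL,
        }

    emit(event("START"))
    try:
        yield run_id
    except Exception:
        emit(event("FAIL"))
        raise  # let the caller see the original error
    emit(event("COMPLETE"))


# Usage: collect events in a list instead of sending them anywhere.
sent = []
with tracked_run("daily_orders_rollup", sent.append):
    pass  # the actual transformation would run here
print([e["eventType"] for e in sent])  # ['START', 'COMPLETE']
```

Re-raising after emitting `FAIL` keeps lineage reporting from hiding pipeline errors.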

OpenLineage alternatives: Other open-source data lineage tools #

In addition to OpenLineage, there are other open-source alternatives available, such as:

- Tokern

- Pachyderm

- TrueDat

- Spline

- Egeria

To know more about each tool and how it stacks up against OpenLineage, check out our guide on the complete list of open-source data lineage tools for 2023.

Atlan for Data Security & Compliance #

Atlan helps customers ensure data security and compliance when using semi-structured data with features that include:

- Automated data classification

- Access control features like Role-Based Access Control

- Masking policies

- Bi-directional tag syncing

These features work together to protect sensitive data, automate compliance tasks, and provide visibility into data access and usage.

Also, Atlan can help to improve data security and compliance by providing visibility into data lineage, including across different systems and environments. By understanding the flow of data, organizations can more easily identify and mitigate potential risks.

Legendary Consumer Brand Dr. Martens Improves Data Discoverability, Impact Analysis, and Business Collaboration on Data With Atlan.

At a Glance #

- Dr. Martens, an iconic global footwear brand with a six-decade heritage, evaluated the data catalog space in order to drive self-service atop their quickly modernizing data stack.

- Choosing Atlan, their data team quickly implemented a self-service catalog to provide context around their most critical data assets.

- Atlan’s implementation has accelerated time-to-insight for Dr. Martens’ internal data consumers. It is also cutting the time data practitioners spend on impact analysis from four to six weeks to under 30 minutes.

Book your personalized demo today to find out how Atlan can help your organization in ensuring data security and compliance.

Wrapping up #

OpenLineage is a community-driven, technology-agnostic, extensible framework to help data practitioners understand how data is produced and used.

As mentioned earlier, it captures metadata about Datasets, Jobs, and Runs to help data engineers unearth the root cause of data flow issues, study the impact of changes or updates, and collaborate with the people involved to mitigate pipeline-related risks.

OpenLineage promises several advances in observability for data pipelines, but it is still under development, so we’ll have to wait and see what’s in store.

In the meantime, the project continues to build integrations with the modern data stack. These capture the metadata data practitioners need to understand how data flows across their organizations.

FAQs on OpenLineage #

What is OpenLineage? #

OpenLineage is an open-source framework designed to capture, manage, and maintain data lineage across diverse data pipelines. It grew out of Marquez, a project started at WeWork. It now draws contributions from people involved in open-source projects like Amundsen and DataHub, which promotes interoperability and standardization in lineage tracking.

How does OpenLineage improve data tracking? #

OpenLineage enhances data tracking by providing a standardized approach to metadata capture across workflows. This consistency enables accurate, real-time lineage tracking, which is essential for diagnosing issues, understanding data dependencies, and ensuring data integrity across systems.

How can OpenLineage benefit data observability? #

By integrating with various data sources and platforms, OpenLineage strengthens data observability through comprehensive, continuous metadata collection. This supports proactive monitoring and issue resolution in data workflows, enhancing the reliability and transparency of data operations.

What standards does OpenLineage support for metadata management? #

OpenLineage is itself an open standard for lineage metadata. Its specification defines lineage events and facets using JSON Schema, with a focus on interoperability and ease of integration with existing tools. This fosters a unified approach to lineage and metadata management across platforms.

What tools work with OpenLineage for lineage tracking? #

OpenLineage integrates with popular data ecosystem tools like Apache Spark, Airflow, and dbt, supporting seamless lineage tracking across these platforms. This compatibility enables organizations to incorporate lineage tracking into their workflows without extensive reconfiguration.

How does OpenLineage handle complex workflows or multi-cloud data? #

OpenLineage is designed for complex, multi-layered workflows, including those spanning multiple clouds, because it gives every system a standard event format to emit. As long as each system in the workflow reports lineage, teams can manage dependencies and data flows across diverse infrastructures.
