Marquez by WeWork: Origin, Architecture, Features & Use Cases for 2025


Marquez by WeWork is an open-source metadata management service designed to track the lineage of your data assets. It helps organizations understand where their data originates and how it flows through systems.


By offering clear insights into data dependencies, Marquez simplifies data management and enhances transparency.

Because it is open source, Marquez can be integrated with existing infrastructure so that teams can monitor, analyze, and optimize their data pipelines. It collects, stores, and lets you visualize the metadata of your data ecosystem.

WeWork open-sourced Marquez in 2019, and it is now a project of the LF AI & Data Foundation.

Let’s dive deeper into Marquez’s origins, architecture, and features and explore how this tool can help you with your data and analytics use cases.


Table of contents #

- What is Marquez?

- Marquez architecture

- Marquez: Use cases

- Marquez features

- Advanced Marquez functionalities

- How to set up Marquez

- How organizations are making the most of their data using Atlan

- Summary

- WeWork’s journey toward trust, transparency, and governance

- FAQs about Marquez

- Related reads on Marquez

What is Marquez? #

Marquez is a metadata management service that collects metadata about data sources, transformations, and outputs and stores this information in a centralized repository.

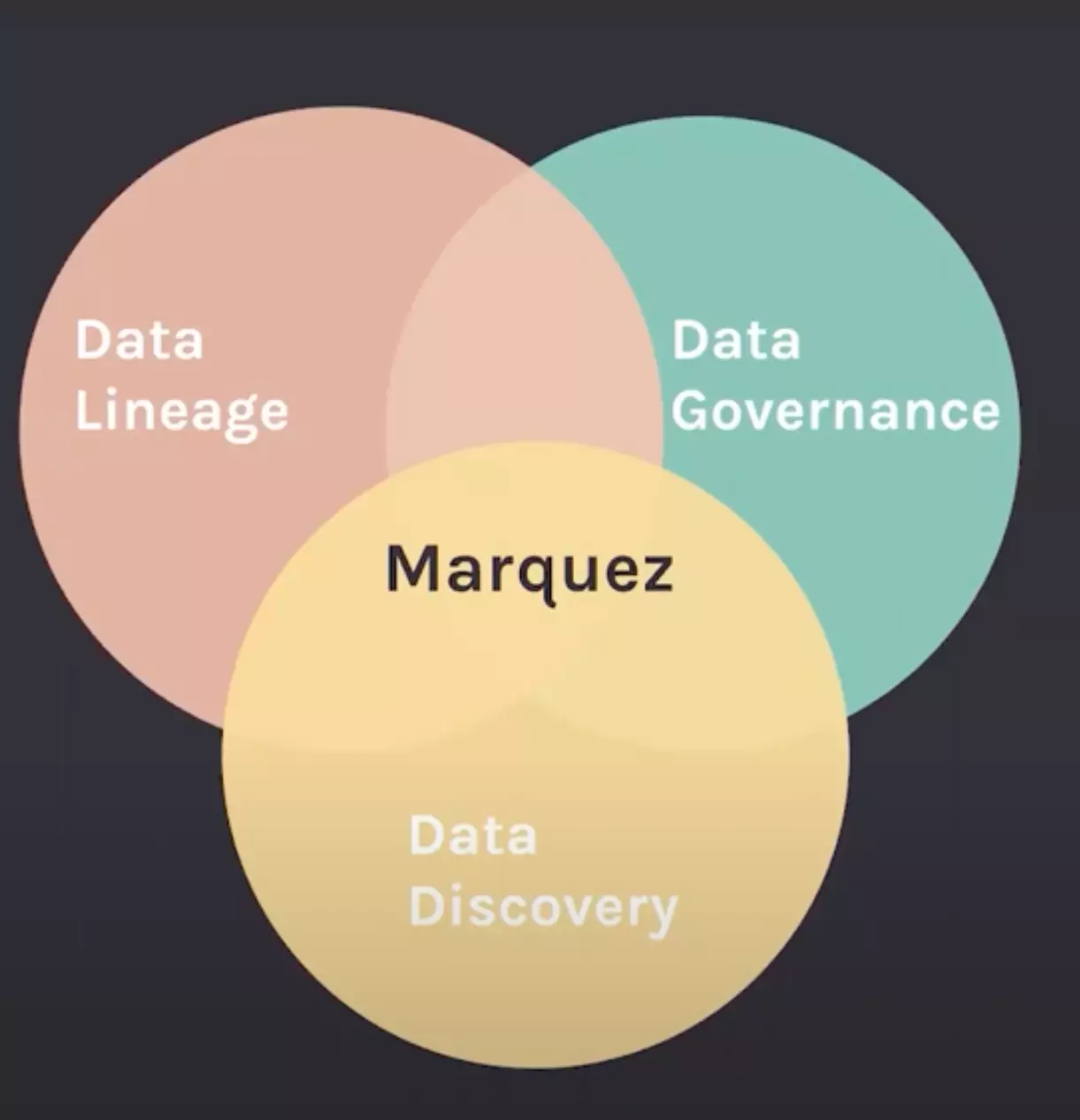

Marquez is at the heart of data discovery, lineage, and governance - Source: Data Council.

Marquez was developed by Willy Lulciuc and Julien Le Dem at WeWork in 2017 and then open-sourced in 2019.

Its user-friendly and intuitive interface makes finding and accessing your data sets easier.

Marquez also enables better collaboration and communication between team members.

DAG lineage metadata in Marquez - Source: Twitter.

You can share insights and updates with your colleagues in real time. Moreover, since everyone works off of the same database, keeping track of changes and updates becomes easier.

The need for Marquez #

According to Willy Lulciuc, the co-creator of Marquez, the need for a tool like Marquez stems from the challenges associated with managing metadata in increasingly complex environments.

“A lot of times you go into these organizations … and you ask them, “Tell me about your metadata.” They’ll respond by, “You know we have Redshift and a data warehouse, and that’s where all of our data is.” Sadly, that’s only a data blob.”

Marquez co-creator Julien Le Dem highlights the need for understanding and tracking dependencies:

“To build a healthy ecosystem, you need to start understanding the dependencies between teams and within a team.”

Before creating Marquez, Willy Lulciuc and Julien Le Dem worked as software engineers designing and implementing large-scale data processing projects.

The difficulties they faced revolved around three aspects:

- Data quality

- Data lineage

- Data democratization (self-service data culture)

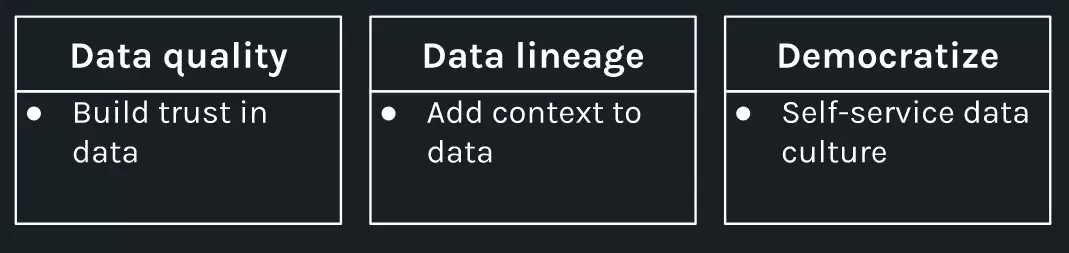

The need for Marquez revolves around three aspects - Source: Marquez.

Let’s explore each challenge further.

Data quality #

Data quality issues arise from inconsistencies across datasets and from uncertainty about where data originates, which transformations it has passed through, and how reliable those transformations are.

The challenge here is designing systems capable of storing, analyzing, tracking, and presenting rich metadata. With such systems in place, organizations can improve data quality across the entire analytics lifecycle.

Data lineage #

In the world of complex analytics workflows and intricate data pipelines, comprehending data provenance remains fundamental.

Debugging data glitches, meeting compliance requirements, detecting fraudulent activities, and auditing datasets for internal purposes — all require uncovering each piece of data’s travel log.

This is where data lineage can help. Tracking this journey of data flow accurately is possible with proper metadata handling.

Data democratization #

Building an enriched self-service environment presents seven primary difficulties. Such an environment should:

- Provide sufficient functionality without requiring intricate tech expertise

- Offer effortless access to relevant data descriptions

- Facilitate close collaboration among technical and business users by providing simple yet robust interaction options

- Integrate effectively with your existing data stack

- Guarantee transparent navigation for users of all levels

- Encourage exploratory actions via captivating visualization techniques

- Supply ample educational resources to aid skill acquisition

Addressing these difficulties would involve creating a system that manages metadata, ensures data quality and lineage tracking, and provides an intuitive interface to interact with data.

So, the founders embarked on a mission to build and launch a great metadata repository service.

Here’s how Julien Le Dem puts it:

“I was convinced there was a big missing piece in collecting all the jobs and all the datasets. So that’s where we started this open source project called Marquez.”

Today, Marquez is being developed to cater to a wide variety of metadata management use cases.

Marquez is a trending tool to watch out for data pipelines - Source: Twitter.

WeWork and Marquez #

Marquez was built by WeWork’s data platform team as one of its internal platforms and frameworks, which made WeWork the project’s first adopter. In those initial stages, WeWork supplied the production use cases and the engineering support that shaped the product.

As of November 2024, the Marquez GitHub repository had approximately 1.8k stars and 318 forks, which indicates an active community.

Today, Marquez is developed in the open as a project of the LF AI & Data Foundation.

Marquez architecture #

Marquez is built on a modular foundation with three core components — metadata repository, user interface, and API.

The metadata repository is backed by a relational database (PostgreSQL), which stores the elements of the data model and the relationships between them.

In addition, it maintains detailed lineage records between all datasets, jobs, and runs involved in a given pipeline.

These links enable the automatic discovery of upstream and downstream dependencies, supporting impact analyses for planned alterations or unexpected incidents. It also simplifies diagnostic efforts during troubleshooting exercises, easily pinpointing bottlenecks or anomalies.
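
The dependency discovery described above amounts to a traversal of the lineage graph. The following is a minimal sketch of that idea in Python, not Marquez's own implementation; the edge list, the node names, and the `downstream` and `upstream` helpers are all hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical lineage edges: (upstream node, downstream node).
# Datasets and jobs alternate, as they do in a lineage graph.
EDGES = [
    ("dataset:raw.orders", "job:clean_orders"),
    ("job:clean_orders", "dataset:staging.orders"),
    ("dataset:staging.orders", "job:daily_revenue"),
    ("job:daily_revenue", "dataset:reports.revenue"),
    ("dataset:raw.customers", "job:daily_revenue"),
]


def downstream(start, edges):
    """Return every node reachable from `start`, in breadth-first order."""
    children = defaultdict(list)
    for parent, child in edges:
        children[parent].append(child)

    seen, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for child in children[node]:
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def upstream(start, edges):
    """Return every node that `start` depends on, by walking edges backwards."""
    reversed_edges = [(child, parent) for parent, child in edges]
    return downstream(start, reversed_edges)


# Impact analysis: what is affected if raw.orders changes?
impacted = downstream("dataset:raw.orders", EDGES)
# Root-cause analysis: what feeds reports.revenue?
sources = upstream("dataset:reports.revenue", EDGES)
```

Here `impacted` lists everything that a change to `raw.orders` could affect, while `sources` lists everything that could have caused a problem in `reports.revenue`.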

According to the article Marquez Project Overview by LF AI & Data Foundation, in September 2023, Marquez graduated to a full-fledged project under the LF AI & Data Foundation, signifying its maturity and stability.

The data model captures intricate details of diverse enterprise systems, streamlining complex data pipelines and resource collections, thereby facilitating operational efficiency and decision-making.

The three elements of Marquez’s data model (datasets, jobs, and runs) are interconnected in the metadata repository, allowing for clear lineage tracking and enhanced metadata management.

Let’s examine the architecture and data model further.

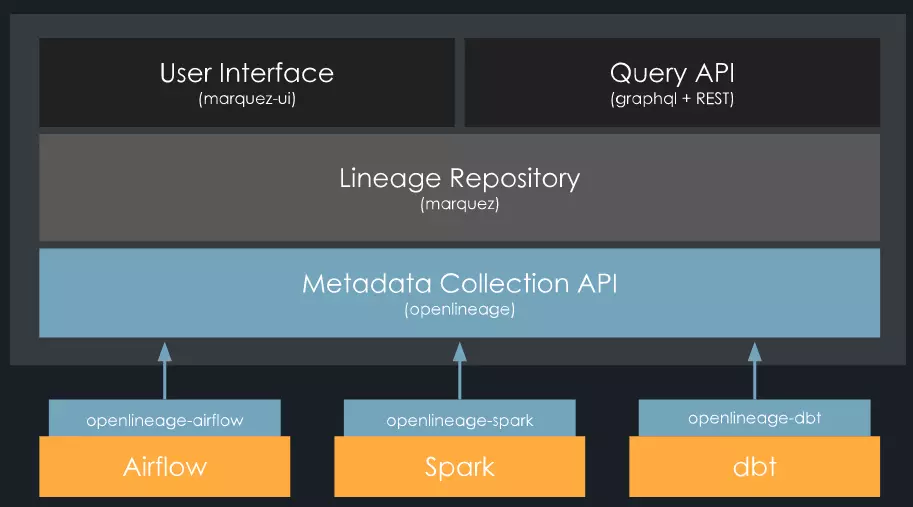

The architectural design of Marquez #

As mentioned earlier, the architecture design of Marquez has three principal components, each playing a vital part in data exploration and management. These include:

- Metadata repository

- Metadata API

- Metadata UI

Marquez architecture design - Source: Marquez.

Let’s explore each component further.

Metadata repository #

The metadata repository is a core module that stores details regarding all data assets.

From data set titles and explanations to schemas, origins, and data workflows, this component functions as a single source of truth (SSOT) for everything associated with your company’s datasets.

The metadata repository is vital to finding, grasping, and analyzing information from data sources and applications.

Metadata API #

Next comes the Metadata API, acting as the link between the SSOT system and third-party software and tools, such as Apache Zeppelin Notebook, Apache Airflow DAG Editor, etc.

By enabling real-time interoperability between diverse platforms via APIs, Marquez lets you collaborate when producing or using data products.

This helps streamline communication and reduce bottlenecks encountered during traditional development cycles.

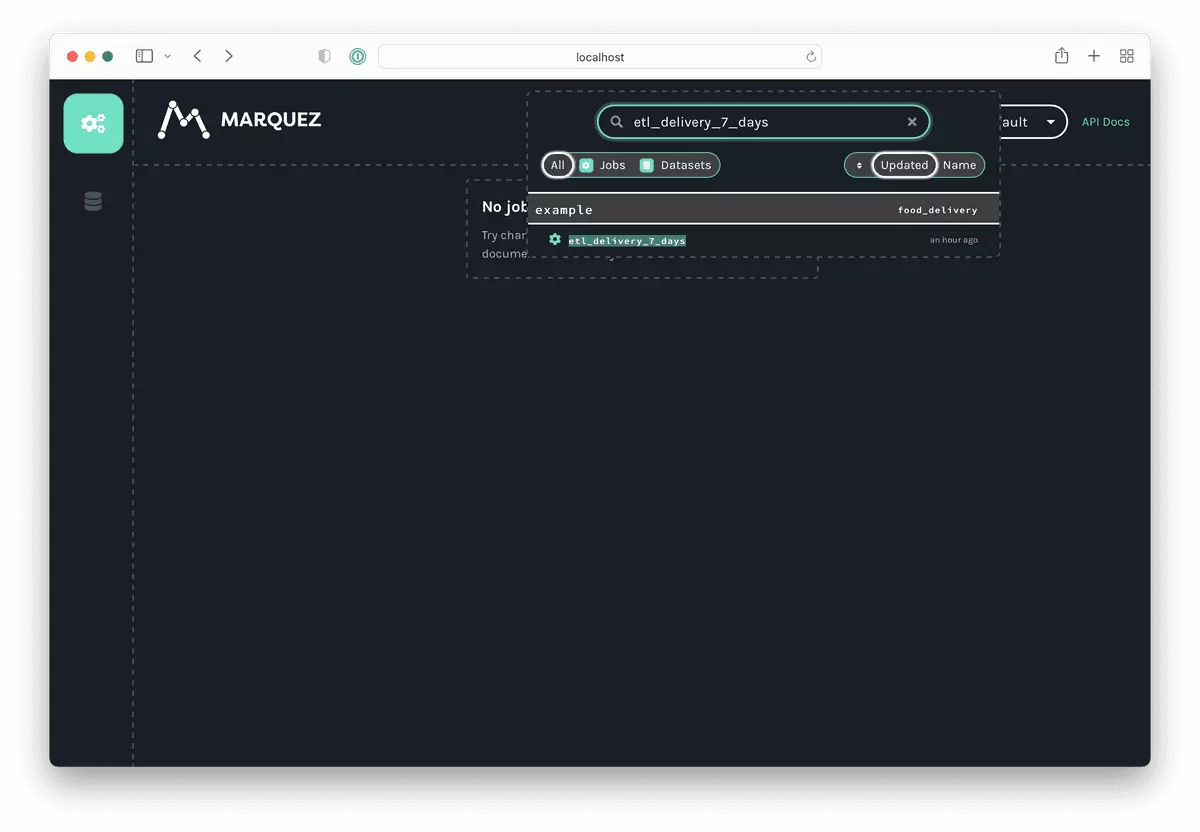

Metadata UI #

Metadata UI offers a means to find and explore the wealth of data stored in your system.

Using this intuitive interface, you can look for particular datasets and get an in-depth insight into the connections between them.

Additionally, the metadata UI presents a flexible dashboard framework that can be adjusted depending on individual preferences.

Marquez implements the OpenLineage standard and uses it to trace data lineage, collecting metadata from various data sources and applications.

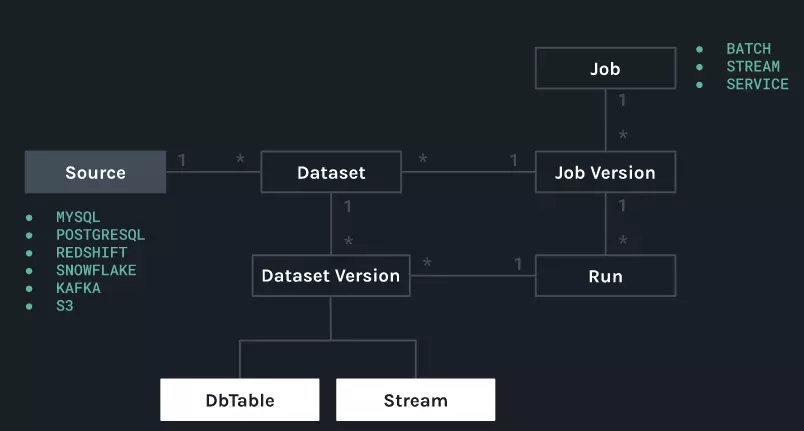

The data model of Marquez #

Marquez’s architecture design is closely tied to its data model — the foundation upon which Marquez’s system is built.

The data model captures all aspects of a company’s data assets and processes. Its building blocks include:

- Datasets

- Jobs

- Runs

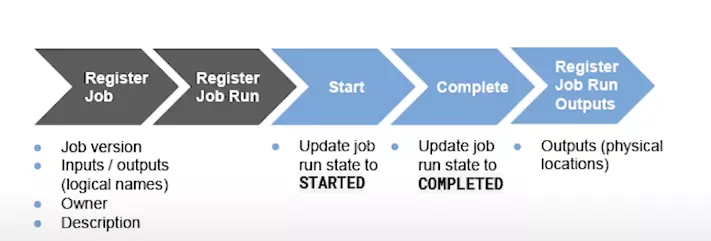

The Marquez data model - Source: Marquez.

The modular architecture of Marquez is specifically designed to accommodate the flexible and extensible nature of its data model.

Now let’s explore each building block further.

Datasets #

Datasets represent the raw data stored across disparate systems and databases. They serve as the source material for subsequent processing jobs, which apply transformation logic to shape the data according to business requirements.

Each dataset has a distinct ID that links it back to the respective parent job and can carry additional descriptive attributes (like lineage or ownership).

Jobs #

Jobs form the foundation of Marquez’s entire platform and contain the instructions to execute complex ETL workflows (i.e., moving data from various sources, transforming it to fit the destination system, and then loading it into the target system).

The job definition can incorporate:

- Parameters

- Arguments

- Schedules

- Logging preferences

- Error-handling rules

- Environment-specific configuration settings

- Validation policies

Runs #

Runs encapsulate the outcomes of running jobs under specific configurations and environmental conditions.

Every run receives a unique ID and records all details related to execution, such as:

- Start and end times

- Completion rates

- Failure indicators

- Stderr/stdout outputs

- Metrics tracking progress and performance

By aggregating runs together, you get insightful historical views of your data pipeline evolution over time.
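
To make the relationship between the three building blocks concrete, here is a simplified sketch in Python. It assumes only what is described above (jobs read and write datasets, and each run belongs to a job and has a unique ID); the class layout, field names, and the `run_history` helper are hypothetical and are not Marquez's actual schema.

```python
from dataclasses import dataclass, field
from typing import List
from uuid import uuid4


@dataclass(frozen=True)
class Dataset:
    namespace: str
    name: str


@dataclass
class Job:
    namespace: str
    name: str
    inputs: List[Dataset] = field(default_factory=list)
    outputs: List[Dataset] = field(default_factory=list)


@dataclass
class Run:
    job: Job
    state: str = "NEW"
    # Every run gets its own unique ID.
    run_id: str = field(default_factory=lambda: str(uuid4()))


def run_history(runs, job_name):
    """Return the states of all runs of one job, in the order they were recorded."""
    return [run.state for run in runs if run.job.name == job_name]


# Hypothetical example: one job that turns orders into a revenue report.
orders = Dataset("analytics", "public.orders")
revenue = Dataset("analytics", "reports.daily_revenue")
daily_revenue = Job("analytics", "daily_revenue", inputs=[orders], outputs=[revenue])

runs = [
    Run(daily_revenue, "COMPLETED"),
    Run(daily_revenue, "FAILED"),
    Run(daily_revenue, "COMPLETED"),
]
history = run_history(runs, "daily_revenue")
```

Aggregating runs per job, as `run_history` does here, is the kind of historical view the paragraph above refers to.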

Marquez: Use cases #

A major use case for Marquez is implementing a centralized metadata repository. This helps an entire team or department to stay on the same page regarding tasks and workflows.

Because this repository acts as an SSOT for all critical metadata, your teams don’t have to store or manage different copies of files individually.

Instead, they can all access the same metadata at any time, ensuring that everyone understands what needs to be done next and who is responsible for each task.

According to Kevin Mellot, who oversaw the implementation and delivery of Northwestern Mutual’s enterprise data platform:

“The Unified Data Platform at Northwestern Mutual relies on the OpenLineage standard to formulate technical metadata within our various platform services. Publishing these events into Marquez has provided us with an effortless way to understand our running jobs.”

As a result, the team at Northwestern Mutual can easily trace a downstream dataset to the job that produced it and examine individual runs of that job or any preceding ones.


Lastly, as team members can contribute to this shared resource, the repository serves double duty as a collaboration space.

Marquez features #

Essential features of Marquez include:

- The ability to scale infrastructure and performance

- A modular architecture to support extensibility

- The ability to gain deep insights into data workflows

- Multi-user editing and sharing to improve collaboration

- A single source of truth (SSOT) for all metadata

- Machine-learning-powered continuous learning and improvement

- Automated compliance monitoring for risk mitigation

The ability to scale infrastructure and performance #

Marquez is designed to handle millions of entities, which can represent billions of database rows and more than a million file paths.

It can navigate extensive data sets without any noticeable slowdowns or hitches, making it suitable for substantial and sophisticated applications.

A modular architecture to support extensibility #

Marquez is written in Java and provides client libraries for Java and Python, which gives you room for customization and extension.

You can fine-tune Marquez according to your specific needs, expanding its functionality past standard use cases. So, integrating with third-party software and handling non-standard situations becomes straightforward.

The ability to gain deep insights into data workflows #

Marquez offers comprehensive lineage features that help you understand complex interdependencies between the components of your data ecosystem.

As a result, you can identify areas that need attention and verify that regulatory requirements are met.

Intuitive visualizations and drill-down capabilities give you end-to-end data transparency, which speeds up issue resolution.

Multi-user editing and sharing to improve collaboration #

By employing collaboration functionalities like notebook integration, Marquez empowers you to:

- Edit shared resources concurrently

- Share comments

- Publish content

- Maintain version histories

A single source of truth (SSOT) for all metadata #

Marquez is a centralized repository for storing, organizing, and keeping track of vital asset descriptors, documentation, and lineage mapping.

This ensures an SSOT for all data-related assets, which can be accessed and maintained by different teams across the organization.

Such a consolidation promotes consistency and accuracy in data usage and management, reducing the risk of errors or discrepancies caused by using multiple sources of information.

Machine-learning-powered continuous learning and improvement #

Marquez incorporates machine learning models to understand workflow behavior and anticipate future events by automatically recognizing patterns and anomalies.

As new insights emerge, Marquez adjusts its operations and grows smarter over time.

Automated compliance monitoring for risk mitigation #

Marquez ensures adherence to prevailing standards, such as SOX, GDPR, or PCI DSS, by tracking relevant policy implementations throughout your system.

Automatic flagging of potential violations helps auditors prioritize their workload during critical examinations and stay vigilant about crucial risk factors.

Advanced Marquez functionalities #

Certain abilities make Marquez stand out among other programs. These include:

- Data lineage visualization to better understand data flows

- Custom metadata to quickly find what you need

- Versioning

- APIs/SDKs to ensure compatibility

- Notifications

- Workflow management to ensure proper data processing

Let’s explore each advanced functionality.

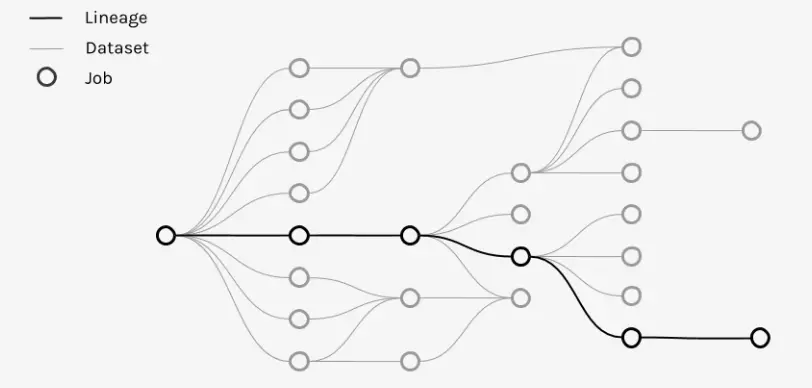

Data lineage visualization to better understand data flows #

Data lineage visualization helps you better understand how your data flows across various processing stages within the pipeline. It also gives you a clear overview of where there might be delays, bottlenecks, or errors in the flow process, allowing for quick troubleshooting.

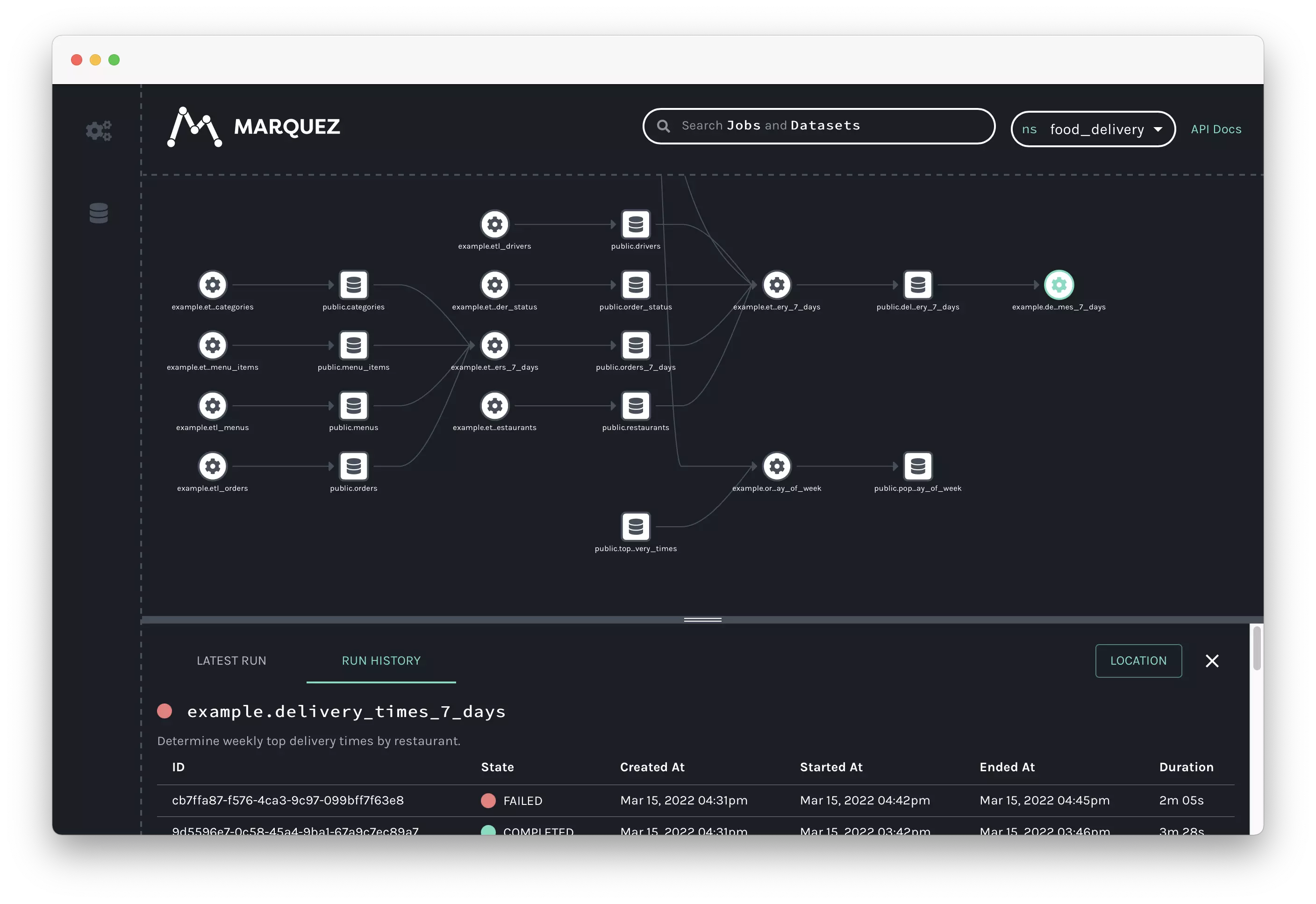

Data lineage visualization in Marquez - Source: Marquez.

Custom metadata to quickly find what you need #

Custom metadata lets you define your own attributes for your pipelines. Use it to record pipeline details that Marquez’s default attributes do not capture.

According to the issue tracker on Marquez GitHub, users have expressed interest in integrating OpenID Connect (OIDC) authentication with Marquez. Discussions are ongoing, with plans to introduce this feature in version 0.52.0, scheduled for release on December 18, 2024.

Additionally, there is an ongoing discussion about redesigning the Marquez UI to improve user experience, including features like enhanced search functionality and a more intuitive dashboard.

You can use this metadata to filter and sort pipelines in your repository and quickly find what you need amidst many different instances.

Exploring metadata in Marquez - Source: Marquez.

Versioning #

Versioning provides an added layer of safety, so you don’t risk losing changes made during development cycles.

If unexpected changes arise, one-click rollbacks allow you to revert from the latest developments to earlier revisions that worked well before.

Revisiting old versions like this eliminates re-work, especially when something goes awry after implementing recent updates.

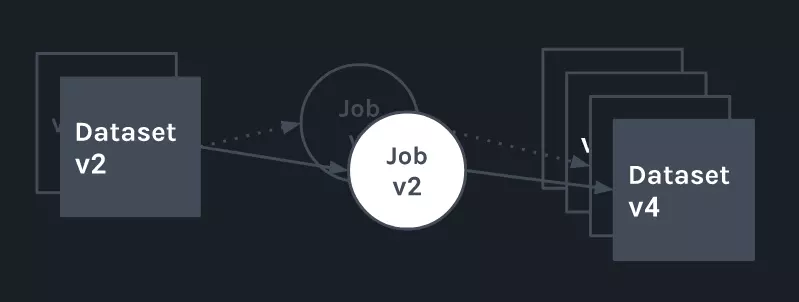

Marquez’s metadata versioning model - Source: Marquez.
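
One way to picture metadata versioning is to derive a version identifier from a dataset's identity and schema, so that a new version appears only when the schema actually changes. The sketch below illustrates that idea in Python; it is an assumption made for illustration, not a description of how Marquez computes versions, and the `dataset_version` helper and the example columns are hypothetical.

```python
import uuid


def dataset_version(namespace, name, fields):
    """Derive a deterministic version ID from a dataset's identity and schema.

    `fields` is a list of (column name, column type) pairs. Sorting them means
    that column order alone does not produce a new version (a choice made for
    this sketch).
    """
    schema = ",".join(f"{column}:{col_type}" for column, col_type in sorted(fields))
    return uuid.uuid5(uuid.NAMESPACE_URL, f"{namespace}/{name}/{schema}")


v1 = dataset_version(
    "analytics", "public.orders", [("id", "INTEGER"), ("amount", "DECIMAL")]
)
# Same schema, different column order: same version.
v1_again = dataset_version(
    "analytics", "public.orders", [("amount", "DECIMAL"), ("id", "INTEGER")]
)
# A new column produces a new version.
v2 = dataset_version(
    "analytics",
    "public.orders",
    [("id", "INTEGER"), ("amount", "DECIMAL"), ("currency", "VARCHAR")],
)
```

Because the identifier is derived from content, comparing two versions is enough to tell whether the schema changed between two runs.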

APIs and SDKs to ensure compatibility #

Marquez’s APIs and SDKs provide access to a diverse collection of libraries that developers often require while building applications.

These libraries cover numerous tasks and technologies. As a result, Marquez remains compatible with existing coding environments. In addition, the variety of APIs makes it easier to integrate with third-party tools.

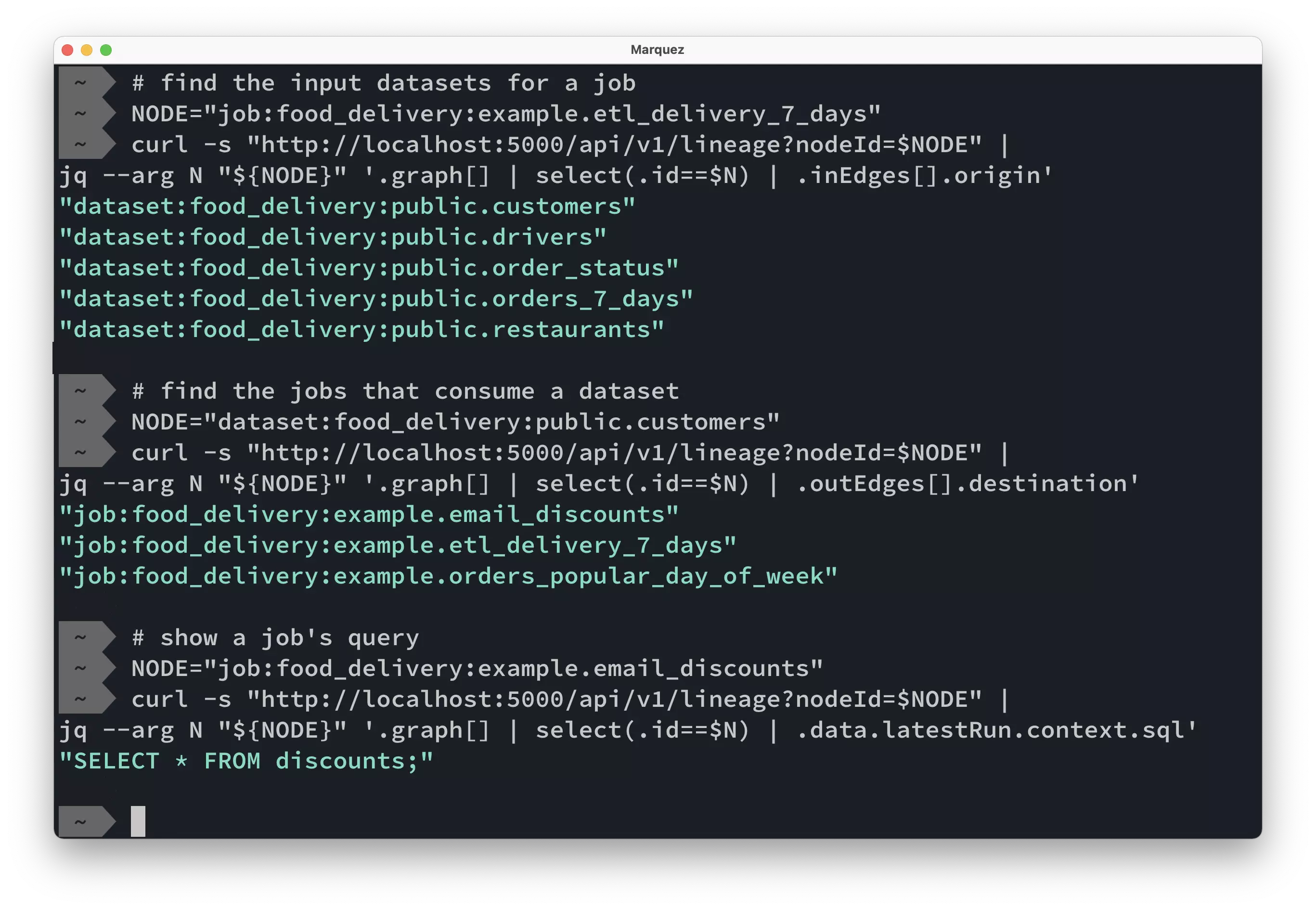

Querying lineage metadata via lineage API - Source: Marquez.
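
As a rough illustration of querying lineage over HTTP, the sketch below builds a request URL for a lineage endpoint. It assumes a Marquez API at `http://localhost:5000`, a `nodeId` of the form `type:namespace:name`, and a `depth` query parameter; check these against the API reference for your Marquez version. The `lineage_url` helper and the `food_delivery` and `etl_orders` names are hypothetical.

```python
from urllib.parse import urlencode


def lineage_url(base_url, node_type, namespace, name, depth=20):
    """Build the URL for a lineage query about one dataset or job."""
    if node_type not in ("dataset", "job"):
        raise ValueError("node_type must be 'dataset' or 'job'")
    node_id = f"{node_type}:{namespace}:{name}"
    # urlencode percent-encodes the colons in the node ID.
    query = urlencode({"nodeId": node_id, "depth": depth})
    return f"{base_url.rstrip('/')}/api/v1/lineage?{query}"


url = lineage_url("http://localhost:5000", "job", "food_delivery", "etl_orders", depth=2)
```

The resulting URL can be fetched with any HTTP client; the response describes the graph of jobs and datasets around the requested node.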

Notifications #

An important benefit of the Marquez platform is its real-time delivery system. You receive alerts at every step of the pipeline during execution, and periodic email reports keep you informed about pipeline performance. You are also notified immediately when something unexpected happens at runtime.

Marquez’s real-time delivery system - Source: YouTube.

Workflow management to ensure proper data processing #

Marquez allows users to manage entire workflows, including dependencies between pipelines, ensuring that data is processed in the correct order and with the correct dependencies.

Marquez’s visual map of complex interdependencies - Source: Marquez.
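
Processing data "in the correct order" can be derived from lineage itself: a job depends on whichever job produces one of its input datasets. The sketch below shows that derivation with Python's standard `graphlib` module (Python 3.9+). It is an illustration under those assumptions rather than Marquez functionality, and the job and dataset names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical jobs with the datasets they read and write.
JOBS = {
    "load_orders": {"inputs": [], "outputs": ["raw.orders"]},
    "load_customers": {"inputs": [], "outputs": ["raw.customers"]},
    "join_orders_customers": {
        "inputs": ["raw.orders", "raw.customers"],
        "outputs": ["staging.orders_enriched"],
    },
    "daily_revenue": {
        "inputs": ["staging.orders_enriched"],
        "outputs": ["reports.revenue"],
    },
}


def execution_order(jobs):
    """Order jobs so that every job runs after the producers of its inputs."""
    # Map each dataset to the job that writes it.
    producer = {ds: job for job, io in jobs.items() for ds in io["outputs"]}
    # A job depends on the producers of its input datasets.
    depends_on = {
        job: {producer[ds] for ds in io["inputs"] if ds in producer}
        for job, io in jobs.items()
    }
    return list(TopologicalSorter(depends_on).static_order())


order = execution_order(JOBS)
```

`TopologicalSorter` raises `graphlib.CycleError` if the recorded dependencies form a cycle, which is itself a useful signal that the lineage metadata is wrong.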

How to set up Marquez for data pipeline tracking and managing infrastructure complexity #

Setting up Marquez involves four steps:

- Install and configure Marquez

- Capture metadata from various sources

- Manage data infrastructure complexity

- Enable data pipeline tracking

It is time to set up Marquez - Source: Twitter.

1. Install and configure Marquez #

Marquez is a web-based tool that can be deployed as a Docker container, which makes it easy to set up and use.

Here’s a step-by-step guide to installing Marquez:

- Install Docker on your machine. You can download Docker from the official website and follow the instructions to install it.

- Once Docker is installed, you can use the following command to pull the Marquez Docker image:


docker pull marquezproject/marquez

- After the image is pulled, you can use the following command to start a new Marquez container:


docker run -p 5000:5000 marquezproject/marquez

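- This starts a Marquez container on your local machine and exposes its HTTP API on port 5000 (http://localhost:5000). The server needs a PostgreSQL database to store metadata, and the web UI is distributed as a separate image, so for a complete local setup the Docker Compose files in the Marquez GitHub repository are usually the easier route.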

2. Capture metadata from various sources #

You can capture metadata from databases, data warehouses, and data pipelines. Let’s see how:

- Capturing metadata from a database: Register the database as a source in Marquez (for example, a PostgreSQL source identified by its connection URL), then describe the tables you want to track as datasets that belong to that source.

- Capturing metadata from a data warehouse: The same approach applies to a warehouse such as BigQuery. Register it as a source, then describe the datasets and tables you want to track.

- Capturing metadata from a data pipeline: You can use Marquez’s REST API to create a new job and specify the input and output datasets. Pipelines instrumented with OpenLineage can send the same information as lineage events.
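
For the pipeline case, the metadata is typically sent as an OpenLineage run event. The sketch below builds such an event as a plain Python dictionary and serializes it to JSON without sending it. The field names follow the OpenLineage run event structure, but the `schemaURL` version, the `producer` URL, the `run_event` helper, and all namespace, job, and dataset names are assumptions for illustration.

```python
import json
import uuid
from datetime import datetime, timezone


def run_event(event_type, run_id, namespace, job_name, inputs=(), outputs=()):
    """Build an OpenLineage-style run event for one job run."""

    def datasets(names):
        return [{"namespace": namespace, "name": name} for name in names]

    return {
        "eventType": event_type,  # e.g. START, COMPLETE, FAIL
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": datasets(inputs),
        "outputs": datasets(outputs),
        # Hypothetical producer URI identifying the code that emitted the event.
        "producer": "https://example.com/my-pipeline",
        # Assumed spec version; use the one that matches your client.
        "schemaURL": "https://openlineage.io/spec/1-0-5/OpenLineage.json#/definitions/RunEvent",
    }


event = run_event(
    "START",
    run_id=str(uuid.uuid4()),
    namespace="analytics",
    job_name="daily_revenue",
    inputs=["public.orders"],
    outputs=["reports.daily_revenue"],
)
payload = json.dumps(event)
# To record the event, the payload would be sent as an HTTP POST with
# Content-Type: application/json to the lineage endpoint of the Marquez API
# (assumed here to be http://localhost:5000/api/v1/lineage).
```

Sending a second event with the same `runId` and an `eventType` of `COMPLETE` marks the end of the run.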

3. Manage data infrastructure complexity #

As your data infrastructure grows, it is important to manage complexity to ensure that your data pipelines remain reliable and maintainable. There are two ways to manage complexity:

- Use Marquez’s built-in graph visualization tool: This tool allows you to visualize the dependencies between jobs and datasets. This can help you identify potential issues and bottlenecks in your data pipelines.

- Use Marquez’s tagging feature: This feature lets you add tags to, and remove tags from, jobs and datasets. Tags help you organize your data infrastructure and make it easier to search and filter for specific components.
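
The tagging idea can be sketched as a small in-memory index that supports adding tags, removing tags, and searching by tag. This is an illustration only and does not use the Marquez API; the `TagIndex` class, the asset names, and the tag names are hypothetical.

```python
from collections import defaultdict


class TagIndex:
    """Keep track of which tags are attached to which jobs and datasets."""

    def __init__(self):
        self._tags = defaultdict(set)

    def add(self, asset, tag):
        # Tags are stored upper-cased so lookups are case-insensitive.
        self._tags[asset].add(tag.upper())

    def remove(self, asset, tag):
        self._tags[asset].discard(tag.upper())

    def find(self, tag):
        """Return the assets carrying `tag`, sorted by name."""
        wanted = tag.upper()
        return sorted(asset for asset, tags in self._tags.items() if wanted in tags)


index = TagIndex()
index.add("dataset:public.customers", "PII")
index.add("dataset:public.orders", "finance")
index.add("job:daily_revenue", "finance")
index.add("dataset:public.orders", "pii")
index.remove("dataset:public.orders", "PII")

finance_assets = index.find("finance")
pii_assets = index.find("pii")
```

Filtering by a tag such as `PII` is a common way to narrow a large catalog down to the assets that need special handling.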

4. Enable data pipeline tracking #

Once you have captured metadata from your various sources, you can use Marquez to track your data pipelines and dependencies.

To create a new pipeline in Marquez, you can use the following steps:

- Create a new job by specifying the input and output datasets.

- Specify the dependencies for the job, such as the upstream and downstream jobs.

- Run the job and monitor its progress in the Marquez UI.

Marquez’s REST API also allows you to automate creating and managing jobs and datasets.
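
Monitoring a run comes down to folding its lifecycle events into a current state. The sketch below does this in Python for a list of OpenLineage-style events. The event types (`START`, `COMPLETE`, `FAIL`, `ABORT`) come from OpenLineage, while the resulting state names, the `summarize_run` helper, and the sample events are assumptions for illustration.

```python
from datetime import datetime

# Event types that end a run, mapped to the state they leave the run in.
TERMINAL = {"COMPLETE": "COMPLETED", "FAIL": "FAILED", "ABORT": "ABORTED"}


def summarize_run(events):
    """Fold a run's events into its latest state and, if finished, its duration."""
    state, started, ended = "NEW", None, None
    # Parse timestamps before sorting so ordering does not depend on string format.
    timed = [(datetime.fromisoformat(e["eventTime"]), e["eventType"]) for e in events]
    for timestamp, event_type in sorted(timed):
        if event_type == "START":
            state, started = "RUNNING", timestamp
        elif event_type in TERMINAL:
            state, ended = TERMINAL[event_type], timestamp
    duration = (ended - started).total_seconds() if started and ended else None
    return {"state": state, "duration_seconds": duration}


# Hypothetical events for one run, deliberately listed out of order.
events = [
    {"eventType": "COMPLETE", "eventTime": "2024-11-01T02:01:30+00:00"},
    {"eventType": "START", "eventTime": "2024-11-01T02:00:00+00:00"},
]
summary = summarize_run(events)
in_progress = summarize_run(events[1:])
```

Here `summary` describes a finished run and `in_progress` describes one that has started but not yet reported a terminal event.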

How organizations are making the most of their data using Atlan #

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader ahead of all others. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Enabling the data ecosystem AI and automation first

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes #

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.


Summary #

Marquez is a popular metadata management solution with an intuitive interface and a comprehensive feature set. By gathering, aggregating, and visualizing metadata, Marquez helps you track and optimize your most critical data pipelines.

This guide covers the origin, architecture, use, and essential features of Marquez, as well as helpful tips for better implementation.

WeWork’s journey toward trust, transparency, and governance with Atlan, Snowflake, and dbt #

Take a deep dive into WeWork’s journey and reflect on the data platform team’s work in rolling out internal platforms and frameworks, including the building of Marquez (their famous open-source project for lineage), and implementing a new, resilient data stack.

- Adopting a modern data stack with Snowflake, dbt, and Atlan

- Digging deep into user pains through a data product thinking mindset

- Finding the right metadata platform through an in-depth evaluation process

- Implementing with an Agile-based approach, including column-level lineage

- Building a data community through programs like WeWork University

- Structuring the team behind it all and building a DataOps function

Watch Video about WeWork’s journey toward trust, transparency, and governance with Atlan, Snowflake, and dbt.

FAQs about Marquez #

1. What is Marquez, and what does it do? #

Marquez is an open-source metadata management tool developed by WeWork. It helps organizations understand the lineage and lifecycle of their data assets by collecting, storing, and visualizing metadata.

2. What are the main features of Marquez? #

Marquez offers robust features such as data lineage tracking, metadata collection, and visualization. It provides insights into data workflows, helping users identify dependencies and optimize their data ecosystem.

3. How does Marquez support data lineage? #

Marquez captures data lineage by tracing the origin and destination of data assets across pipelines. This enables teams to better understand how data flows within their systems and ensures accountability for data changes.

4. Why is metadata management important for organizations? #

Metadata management is crucial because it ensures data quality, compliance, and usability. Tools like Marquez provide transparency, helping organizations track data origins and transformations to make informed decisions.

5. Can Marquez integrate with other tools in the data ecosystem? #

Yes, Marquez integrates seamlessly with various data tools and platforms. It can be used alongside data pipelines, storage solutions, and analytics platforms to provide a comprehensive view of your data landscape.

6. Is Marquez suitable for both small and large organizations? #

Absolutely. Marquez is designed to be scalable and adaptable, making it a valuable tool for organizations of all sizes that aim to enhance their data management practices.

Related reads on Marquez #

- Amundsen Data Catalog: Understanding Architecture, Features, Ways to Setup & More

- Amundsen Demo: Explore and get a feel for Amundsen with a pre-configured sandbox environment.

- Netflix’s Metacat: Open Source Federated Metadata Service for Data Discovery

- DataHub: LinkedIn’s Open-Source Tool for Data Discovery, Catalog, and Metadata Management

- LinkedIn DataHub Demo: Explore and Get a Feel for DataHub with a Pre-configured Sandbox Environment.

- Airbnb Data Catalog — Democratizing Data With Dataportal

- Pinterest Querybook 101: A Step-by-Step Tutorial and Explainer for Mastering the Platform’s Analytics Tool
