Databricks Data Lineage: Complete Guide to Tracking & Managing Lineage

How is lineage captured in Databricks?

Data lineage in Databricks is automatically captured, tracked, and managed by Unity Catalog at both table and column levels. Lineage is derived from Spark execution plans, allowing Databricks to track how upstream datasets, transformations, and jobs produce downstream outputs. Beyond tables and views, Databricks also captures lineage across notebooks, scheduled jobs, dashboards, queries, external storage systems, and connected BI tools.

Automatic vs. manual lineage tracking

Databricks lineage operates on an automatic capture model that requires minimal configuration once Unity Catalog is enabled. The system intercepts Spark execution plans during runtime and extracts lineage metadata without requiring developers to instrument their code or add tracking logic.

Automatic lineage capture includes:

- SQL queries executed in notebooks, jobs, or SQL warehouses

- DataFrame operations in PySpark, Scala, and R notebooks

- Delta Lake merge, update, and delete operations

- Streaming workloads between Delta tables

- Unity Catalog-enabled Delta Live Tables pipelines

Manual lineage registration is required for:

- External tables outside Unity Catalog governance (must be registered as external metadata objects)

- Custom Python transformations not using Spark DataFrames

- Data movement through tools that bypass Unity Catalog compute

- External BI tools and data pipelines (requires API integration or connector setup)

The automatic model significantly reduces governance overhead. Organizations using automated lineage tracking report 40% faster compliance audit cycles compared to manual documentation approaches.

What operations are captured vs. not captured

Understanding which operations Databricks tracks automatically helps teams set appropriate expectations and identify where complementary tracking might be needed.

| Operation Category | Captured Operations | Lineage Level | Not Captured | Workaround |
|---|---|---|---|---|
| SQL Operations | CREATE TABLE, INSERT, UPDATE, DELETE, MERGE, SELECT queries | Table & column | Queries on ungoverned clusters | Enable Unity Catalog on all compute |
| Transformations | Aggregations (SUM, AVG), Joins, Window functions, Calculated columns | Column-level | Complex UDFs without Spark context | Use Spark SQL functions when possible |
| Streaming | Structured Streaming between Delta tables | Table-level (Runtime 11.3+) | Non-Delta streaming sources | Convert to Delta or use external lineage API |
| Delta Live Tables | Pipeline transformations | Table-level; Column-level (Runtime 13.3+) | Pipelines not Unity Catalog-enabled | Enable Unity Catalog for pipelines |
| Python/R | PySpark DataFrame operations (map, filter, groupBy) | Table & column | Pandas/pure Python outside Spark | Register via Lineage Tracking API |
| External Systems | BI tools with native connectors (Tableau, Power BI) | Table-level | Custom ETL scripts, dbt outside Databricks | Register as external metadata objects |
| File Operations | Cloud storage paths registered in Unity Catalog | Table-level | Direct S3/ADLS operations via cloud SDKs | Use Delta Lake format or register paths |
| Notebooks & Jobs | Executions reading/writing Unity Catalog tables | Table & column | Manual exports, CSV downloads | Document separately or use external tools |

Key limitations to note:

Lineage tracking for streaming between Delta tables requires Databricks Runtime 11.3 LTS or later. Column-level lineage for Delta Live Tables requires Runtime 13.3 LTS or later. Lineage metadata in system tables has a 365-day rolling retention window, according to Databricks documentation. For longer retention, export metadata periodically to external systems.
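One way to approach the periodic export is a scheduled job that copies a closed date window of lineage rows into a table you own. The sketch below only builds the SQL text. The archive table name is hypothetical, and it assumes the archive already exists with the same columns as the system table and that filtering on the event_date column suits your workspace.

```python
from datetime import date, timedelta
from typing import Tuple

# System table named in this guide; the archive table is a hypothetical name.
SOURCE_TABLE = "system.access.table_lineage"
ARCHIVE_TABLE = "governance.lineage_archive.table_lineage"  # assumption: you create this


def previous_month_window(today: date) -> Tuple[date, date]:
    """Return [start, end) covering the full calendar month before `today`."""
    first_of_this_month = today.replace(day=1)
    last_of_previous = first_of_this_month - timedelta(days=1)
    return last_of_previous.replace(day=1), first_of_this_month


def archive_statement(start: date, end: date,
                      source: str = SOURCE_TABLE,
                      archive: str = ARCHIVE_TABLE) -> str:
    """Build an INSERT that copies one window of lineage rows into the archive."""
    if start >= end:
        raise ValueError("start must be before end")
    return (
        f"INSERT INTO {archive}\n"
        f"SELECT * FROM {source}\n"
        f"WHERE event_date >= DATE'{start.isoformat()}'\n"
        f"  AND event_date < DATE'{end.isoformat()}'"
    )


# Example: a job running on 15 March 2025 archives all of February 2025.
window = previous_month_window(date(2025, 3, 15))
statement = archive_statement(*window)
```

In a Databricks notebook or job, the resulting string could be passed to spark.sql. Using a half-open window keeps consecutive runs from overlapping, and listing columns explicitly instead of SELECT * may be safer if the system table schema changes.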

How Databricks data lineage capture works

Databricks captures lineage through three primary mechanisms:

- Spark execution plan analysis: Unity Catalog intercepts the plan and extracts source tables, transformations, target tables, and execution context.

- Delta Lake transaction logs: Unity Catalog reads the logs to understand data mutations.

- External lineage registration: the Lineage Tracking API allows external systems to write lineage metadata into Unity Catalog.

Captured lineage is stored in system tables (system.access.table_lineage and system.access.column_lineage) for SQL analysis and in the Unity Catalog metastore backend for UI and API access. Updates occur near real-time, typically within minutes of operation completion.

Research from the International Association of Privacy Professionals indicates GDPR Article 20 data portability requests require organizations to trace personal data flows at field level, making column-level lineage essential for compliance.

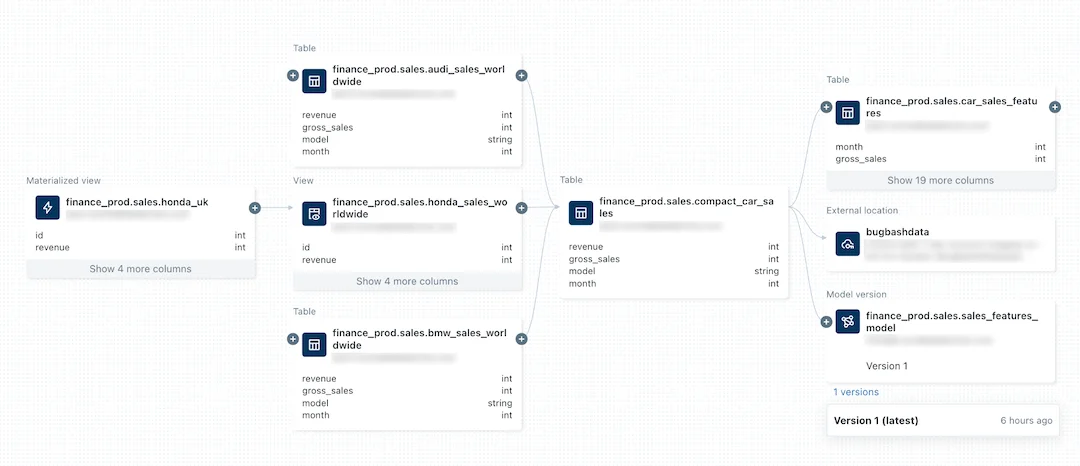

A sample lineage graph in the Unity Catalog. Source: Databricks

Troubleshooting: Common lineage gaps and how to address them

Even with Unity Catalog’s automatic tracking, certain scenarios create lineage gaps or breaks. Understanding these common issues helps teams maintain continuous lineage visibility.

1. Lineage breaks when renaming tables or columns

Problem: When you rename a table using ALTER TABLE old_name RENAME TO new_name, Unity Catalog treats the new name as a separate entity. Historical lineage pointing to the old name becomes disconnected.

Solution: Before renaming critical tables, document the change in table comments or tags. After renaming, use the Lineage Tracking API to manually link the old and new table names. For column renames, maintain a mapping table documenting the change history.
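The mapping-table idea can also be applied at read time. As a minimal sketch, assuming you keep a rename log of old name to new name (all table names here are hypothetical), lineage edges exported from the system tables can be rewritten to current names so that history recorded under the old name joins up with the new one.

```python
from typing import Dict, Iterable, List, Tuple

Edge = Tuple[str, str]  # (source_table_full_name, target_table_full_name)


def resolve_current_name(name: str, renames: Dict[str, str]) -> str:
    """Follow old -> new entries until a name with no further rename is reached."""
    seen = set()
    while name in renames and name not in seen:
        seen.add(name)  # guards against accidental cycles in the rename log
        name = renames[name]
    return name


def stitch_edges(edges: Iterable[Edge], renames: Dict[str, str]) -> List[Edge]:
    """Rewrite both ends of every edge to current names and drop duplicates."""
    stitched = {
        (resolve_current_name(src, renames), resolve_current_name(dst, renames))
        for src, dst in edges
    }
    # An edge whose two ends collapse to the same table carries no information.
    return sorted(edge for edge in stitched if edge[0] != edge[1])


# Hypothetical rename log and lineage edges captured before and after the rename.
renames = {"sales.silver.orders_v1": "sales.silver.orders"}
edges = [
    ("sales.bronze.raw_orders", "sales.silver.orders_v1"),  # recorded before rename
    ("sales.silver.orders", "sales.gold.revenue"),          # recorded after rename
]
stitched = stitch_edges(edges, renames)
```

This does not change what Unity Catalog stores. It only makes reports built on exported lineage continuous across the rename.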

2. Missing lineage for tables created before Unity Catalog migration

Problem: Tables created on legacy Hive metastores don’t have lineage until they’re first accessed after Unity Catalog enablement.

Solution: Run a one-time SELECT query against all migrated tables to trigger lineage initialization. Schedule a job that reads from these tables to establish baseline lineage connections.

3. External BI tools not showing lineage

Problem: Tableau, Power BI, or Looker queries don’t appear in lineage graphs even though they read Unity Catalog data.

Solution: Verify the BI tool uses a Unity Catalog-enabled SQL warehouse connection (not a classic cluster). Some BI tools require partner connector updates to support lineage tracking. Check your connector version and upgrade if needed.

4. Partial lineage for complex UDFs or stored procedures

Problem: Custom Python UDFs or complex stored procedures show table-level lineage but missing column-level dependencies.

Solution: Refactor UDFs to use Spark SQL functions when possible. For unavoidable custom code, add explicit column lineage via the external lineage API. Document transformation logic in table/column comments as supplemental metadata.

5. Streaming jobs missing from lineage graph

Problem: Structured Streaming workloads between Delta tables don’t appear in Catalog Explorer.

Solution: Ensure you’re using Databricks Runtime 11.3 LTS or later for streaming lineage support. Verify streaming sources and sinks are registered Delta tables in Unity Catalog, not unmanaged cloud storage paths.

6. No lineage for data moved via external orchestrators

Problem: Airflow, Prefect, or dbt jobs moving data don’t create lineage in Databricks.

Solution: For dbt, use the dbt-databricks adapter which can write lineage to Unity Catalog when properly configured. For other orchestrators, implement lineage registration via the Lineage Tracking API as part of your DAG execution.

What are the prerequisites for lineage tracking in Databricks?

To reliably track lineage for Databricks jobs, a few foundational requirements must be in place:

- Unity Catalog must be enabled. Jobs must read from or write to assets that are registered in Unity Catalog for lineage to be captured automatically.

- Jobs must operate on managed or registered data assets.

- Jobs must run on Unity Catalog-enabled compute. Mixing governed and ungoverned compute can result in partial or missing lineage.

- External assets should be added as external metadata objects in Unity Catalog.

- Lineage is captured for supported workloads such as SQL queries, notebooks, Spark jobs, and scheduled Databricks jobs.

- Lineage tracking for streaming between Delta tables requires Databricks Runtime 11.3 LTS or later. Column-level lineage for Lakeflow Spark Declarative Pipelines requires Databricks Runtime 13.3 LTS or later.

- Users must have BROWSE access on the parent catalog to view tables/lineage.

- Viewing lineage for notebooks, jobs, dashboards, or Unity Catalog–enabled pipelines requires the appropriate object-level permissions (including CAN VIEW for pipelines) in workspace access controls.
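The runtime prerequisites above can be checked mechanically before a job is deployed. The sketch below assumes runtime version strings of the form 13.3.x-scala2.12, as typically seen in cluster configuration. The constant and function names are illustrative only.

```python
import re
from typing import Tuple

# Minimum runtimes taken from the prerequisites listed in this guide.
MIN_STREAMING_LINEAGE = (11, 3)
MIN_PIPELINE_COLUMN_LINEAGE = (13, 3)


def parse_runtime(spark_version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a string such as '13.3.x-scala2.12'."""
    match = re.match(r"(\d+)\.(\d+)", spark_version)
    if match is None:
        raise ValueError(f"unrecognised runtime version: {spark_version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(spark_version: str, minimum: Tuple[int, int]) -> bool:
    """True when the runtime is at or above the given (major, minor) version."""
    return parse_runtime(spark_version) >= minimum


# For each runtime: (streaming lineage supported, pipeline column lineage supported)
checks = {
    version: (
        meets_minimum(version, MIN_STREAMING_LINEAGE),
        meets_minimum(version, MIN_PIPELINE_COLUMN_LINEAGE),
    )
    for version in ("10.4.x-scala2.12", "12.2.x-scala2.12", "13.3.x-scala2.12")
}
```

Comparing (major, minor) tuples avoids the string-comparison trap where "9.1" sorts after "13.3".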

Method #1. How to track lineage for Databricks jobs using the Catalog Explorer

An introduction to the Databricks Catalog Explorer

In Databricks, the Metastore is where all the cataloging, governance, quality, and lineage metadata is stored.

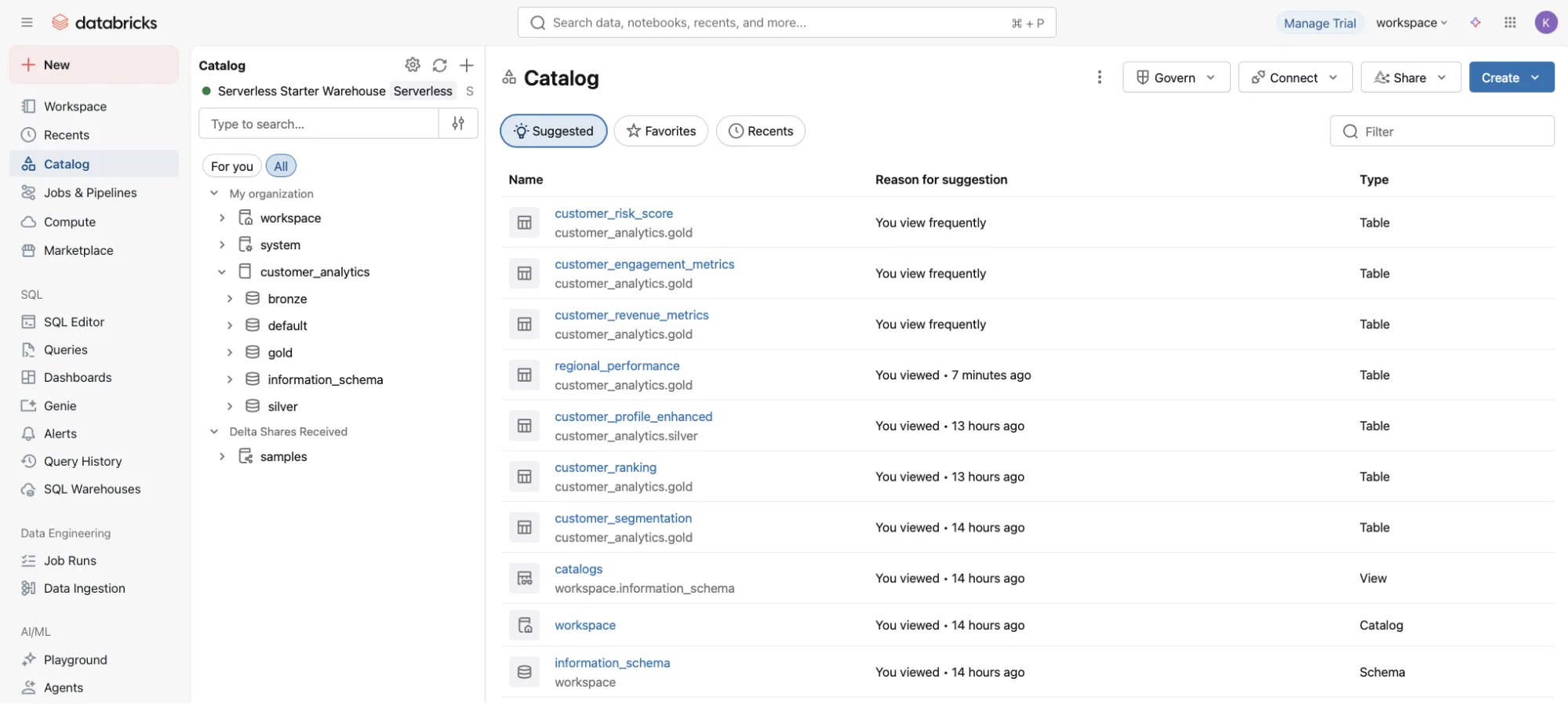

The Catalog Explorer is the frontend user interface for the Metastore backend. You can view the list of all suggested data assets, sorted by usage frequency and relevance, on the home page.

Caption: The Catalog Explorer page on Databricks. Image by author.

You can also see the data assets in the metastore namespace hierarchy, which, in this example, are customer_analytics (the Catalog), and bronze, silver, and gold, the three schemas of the medallion architecture. And all of this is stored in a top-level metastore.

Viewing lineage graph using the Catalog Explorer

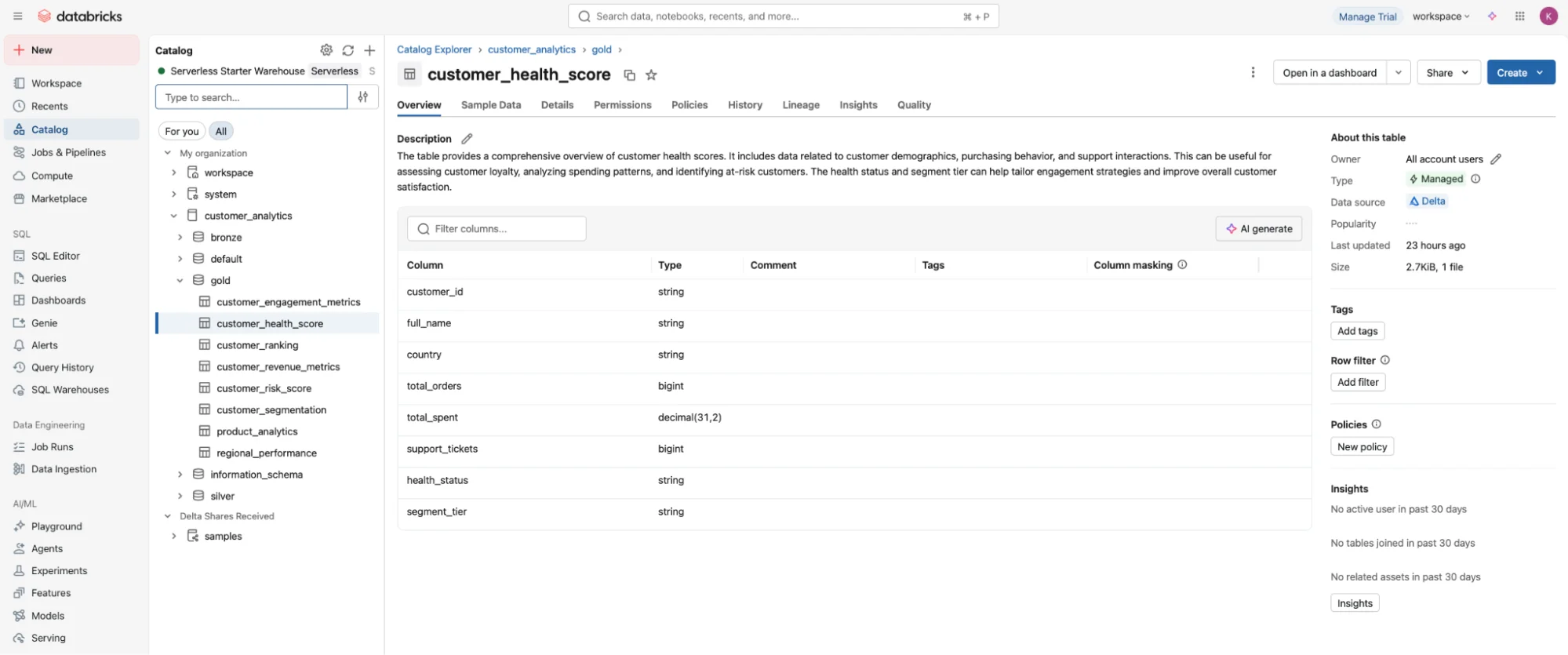

To view the lineage, you need to go to one of the data assets in a schema, which, in this case, is the customer_health_score table in the customer_analytics.gold schema, as illustrated in the image below.

Caption: Choose an asset from the customer_health_score table. Image by author.

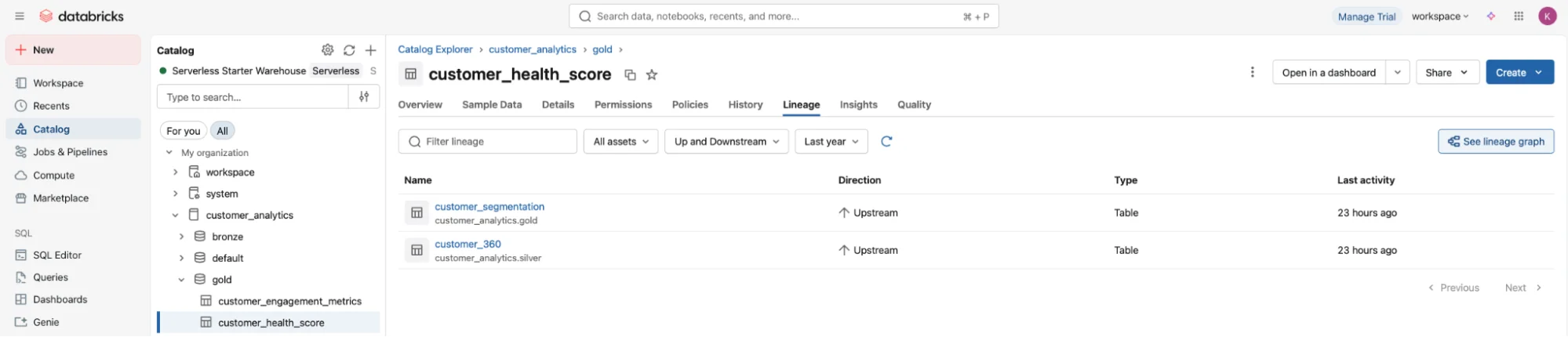

Go to the Lineage tab in the top menu, and then click the ‘See lineage graph’ option at the top right of the page, which lists all the data assets that are part of the lineage for the customer_health_score table.

Caption: Click the ‘See lineage graph’ option to view an asset’s lineage. Image by author.

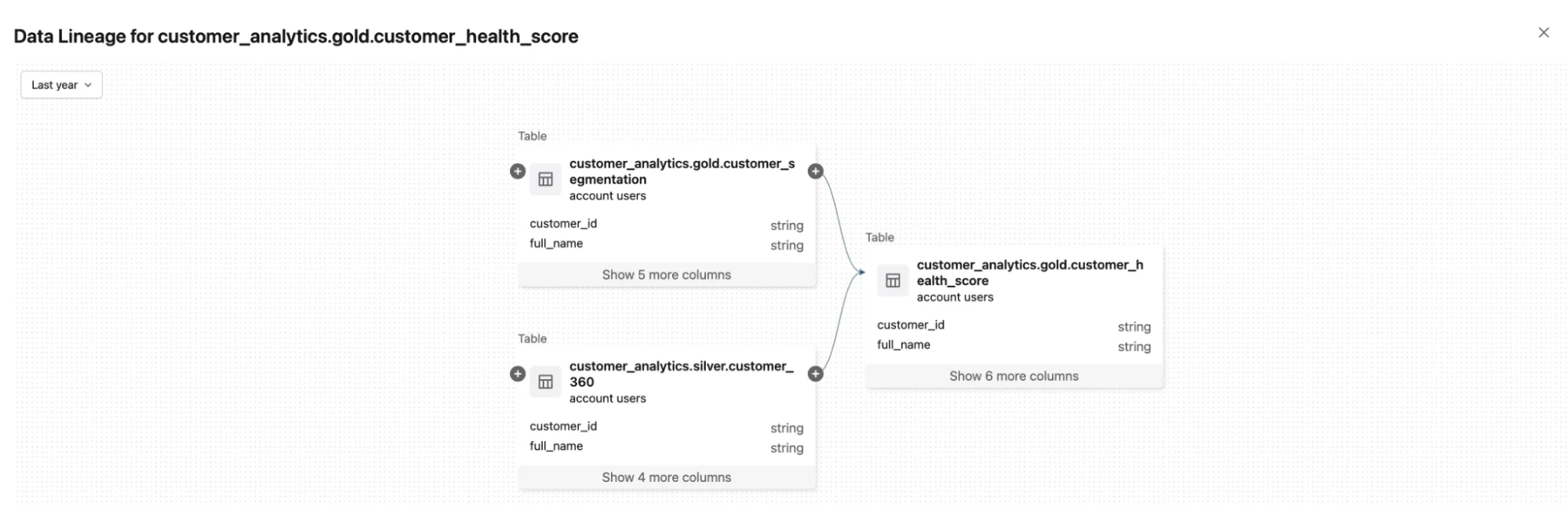

Once you do that, you’ll land on a simple graph like the one shown below, which will only have the immediate dependencies and not everything upstream or downstream.

Caption: A simple graph demonstrating data lineage in Databricks. Image by author.

To see the full lineage, you have to click on the + button on the left of every box representing a data asset to view the upstream dependencies, and the one on the right side to view the downstream dependencies. Unfortunately, there’s no easy way to view all of the lineage in one go.

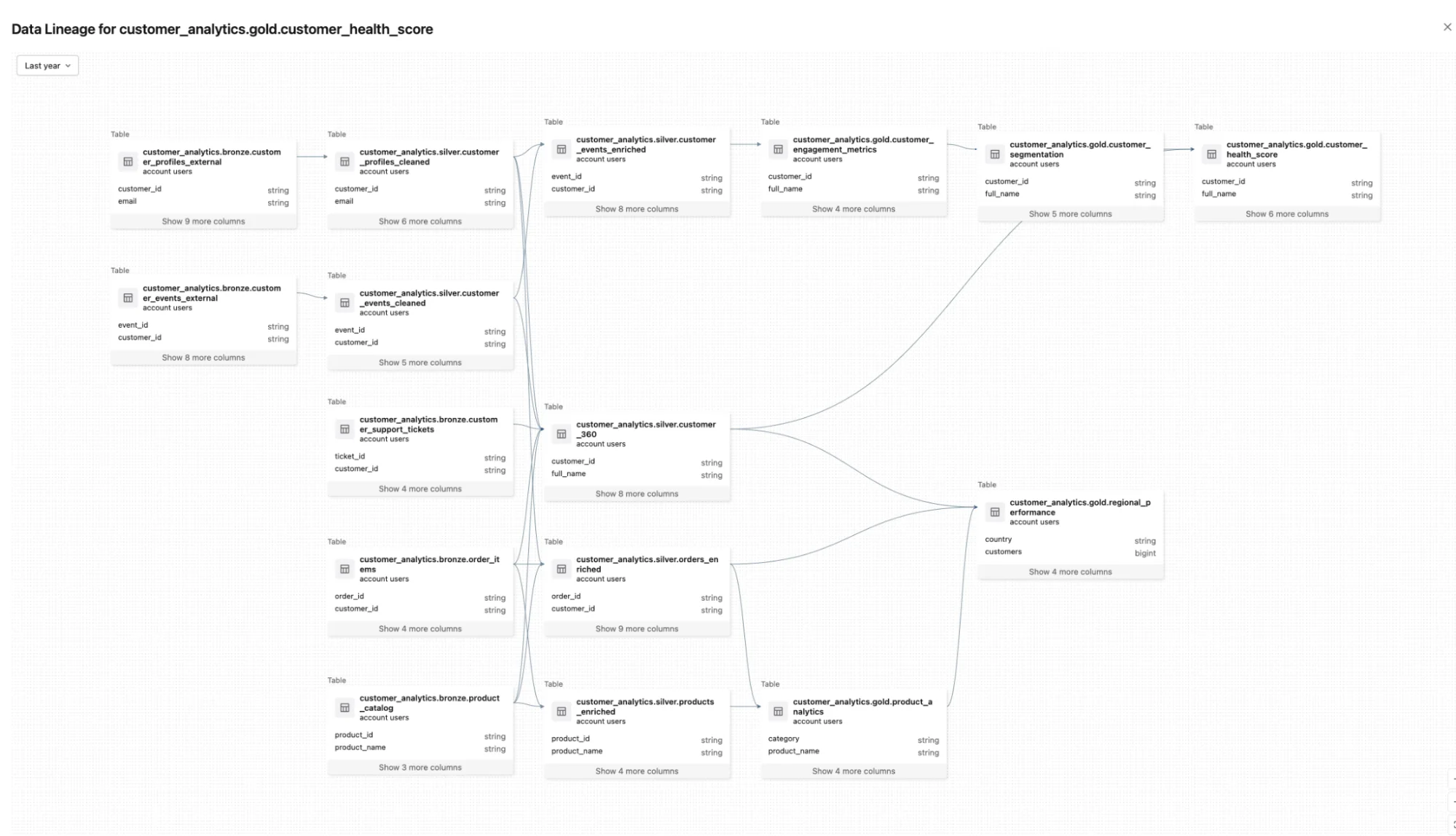

The full table-level lineage for the customer_health_score table, among others, is shown below, fully expanded after a number of + clicks.

Caption: Complete table-level lineage for the customer_health_score table. Image by author.

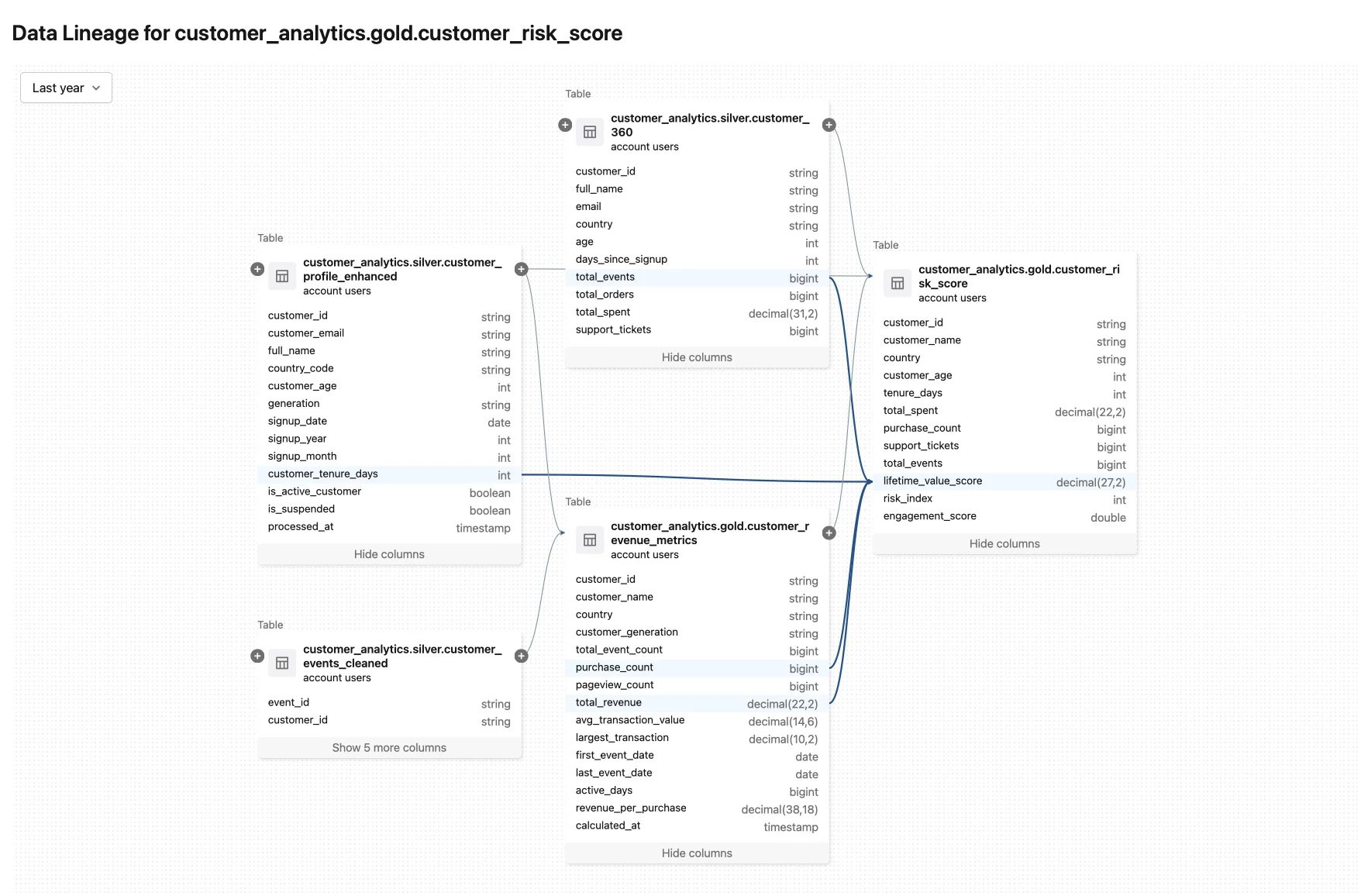

Viewing column-level lineage in Databricks

The default view in the Catalog Explorer doesn’t show you column-level lineage information. For that, you need to click on a specific column, such as lifetime_value_score from the customer_risk_score table in this example.

With column-level lineage, you will be able to see the exact columns that contribute to the business logic behind the creation or calculation of a downstream column.

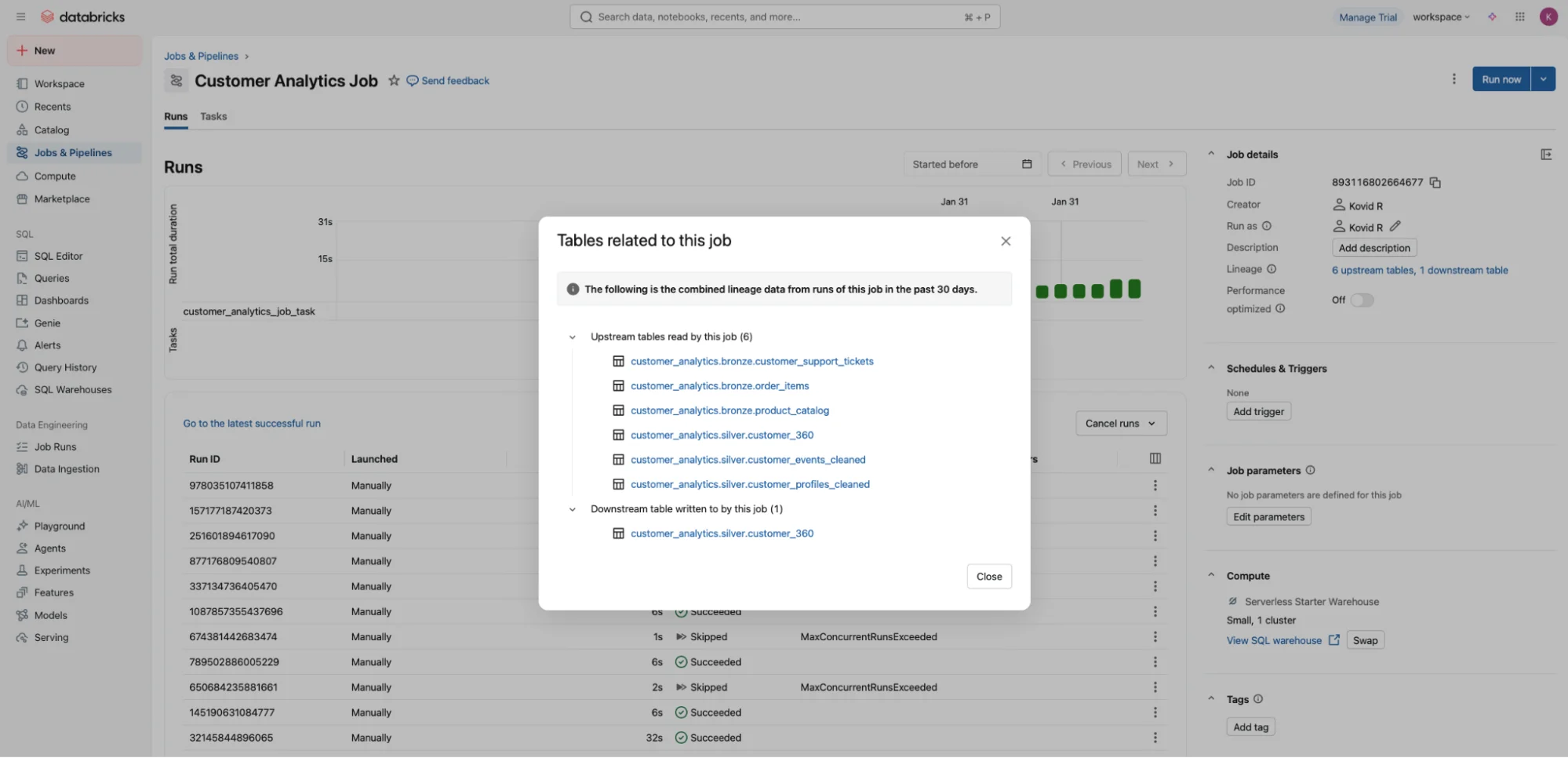

You can also navigate to the Catalog Explorer lineage view from other pages. For instance, if you go to the Jobs page and click on a specific job, which, in this case, is the Customer Analytics Job, you will be able to see a Lineage summary in the Job details section on the right. Once you click it, you’ll see a list of upstream and downstream dependencies. Clicking any of these dependencies will take you to the Catalog Explorer.

Caption: Related tables to a specific job in Databricks. Image by author.

Let’s now look at how you can write SQL queries or have the Databricks Assistant write them for you to fetch data lineage from the system tables in Databricks.

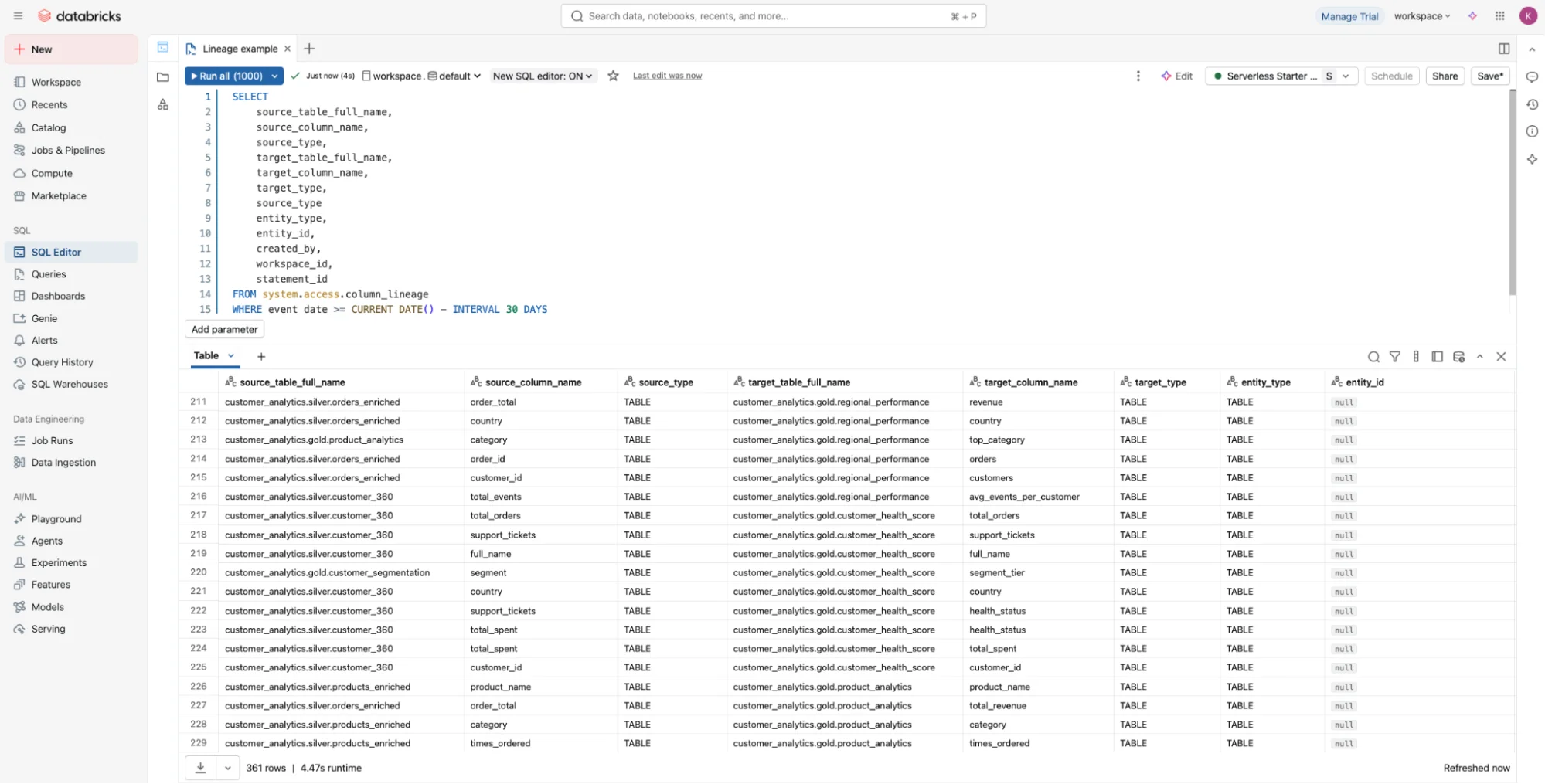

Method #2. How to track lineage for Databricks jobs using SQL queries

Databricks maintains the lineage metadata in two separate tables in the system catalog’s access schema based on the granularity:

- The table_lineage table captures table-level lineage across tables, views, cloud object storage paths, notebooks, AI/BI dashboards, and pipelines, among other resources.

- The column_lineage table captures column-level lineage from tables, views, materialized views, cloud object storage paths, metric views, and streaming tables.

You can query these tables by running SQL queries like the one shown below.

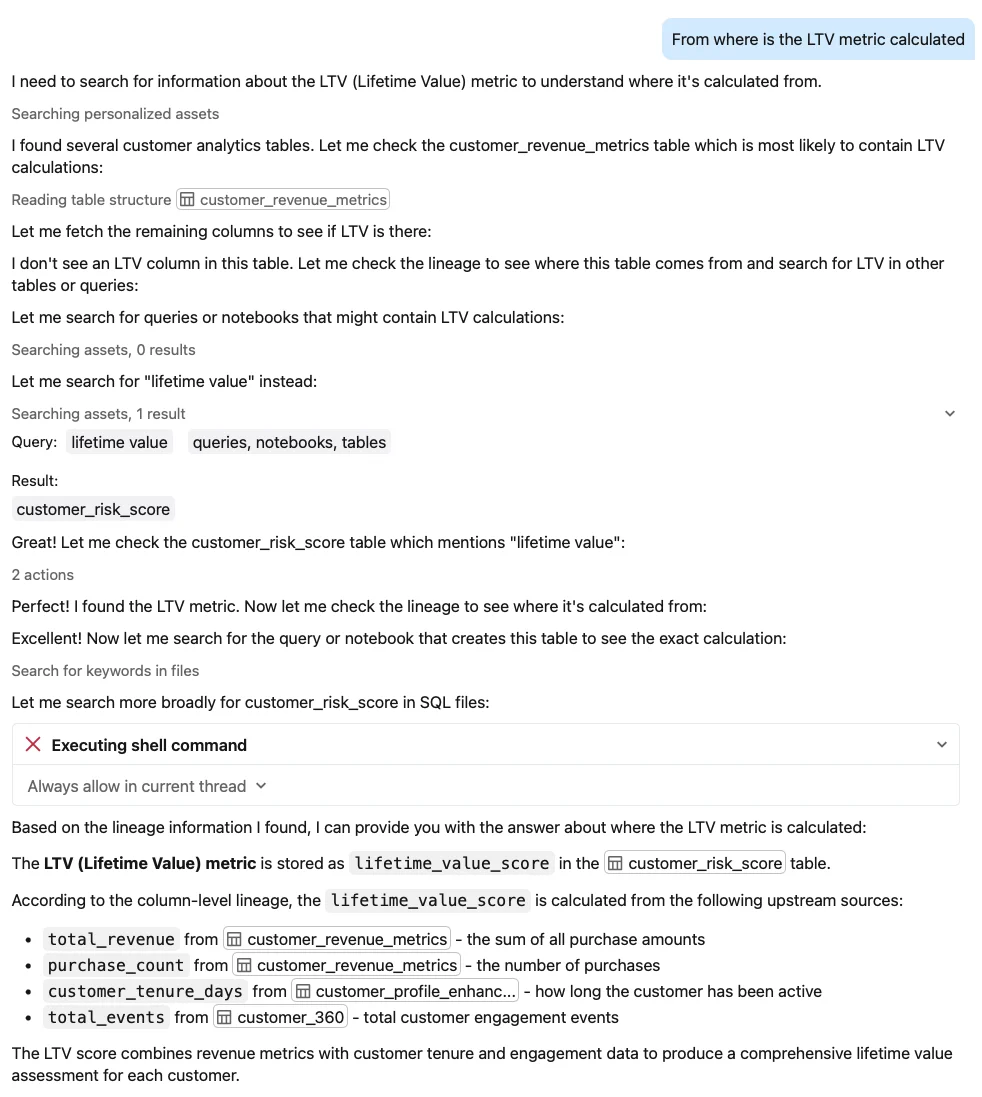

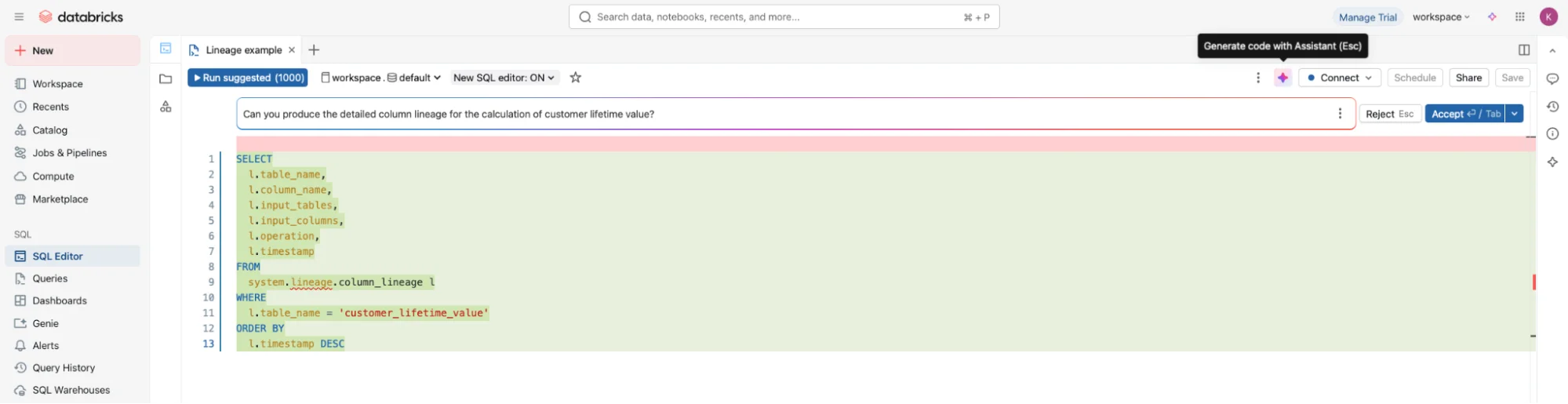

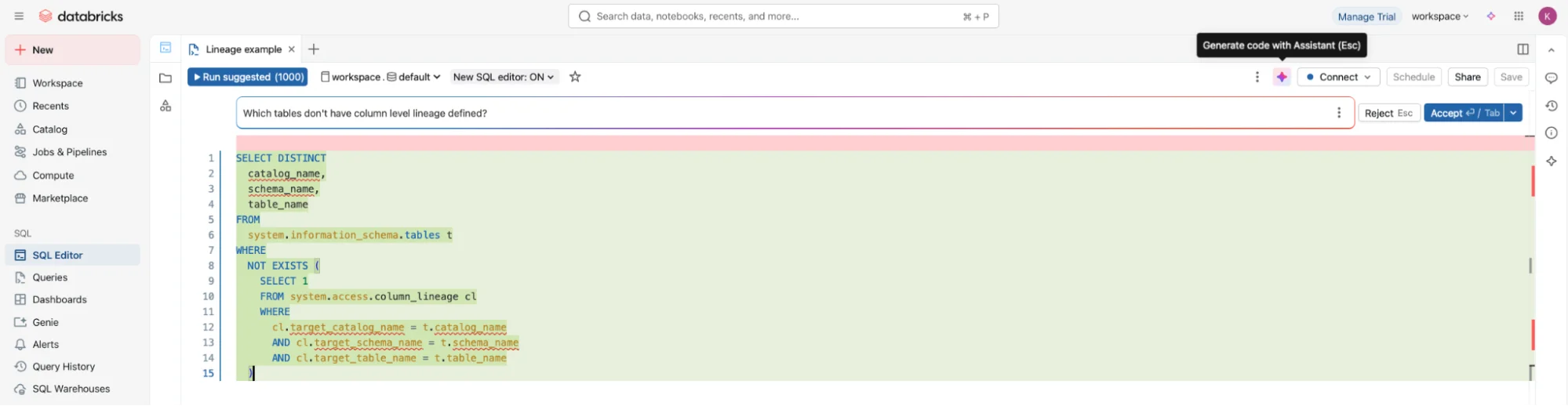
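For readers who prefer text to screenshots, here is a minimal sketch of the kind of query involved, written as Python helpers that build SQL strings. It is not a transcription of the images above. It assumes the table_lineage columns source_table_full_name, target_table_full_name, entity_type, entity_id, and event_date, and that job runs are recorded with entity_type = 'JOB'; verify both against your workspace.

```python
import re

LINEAGE_TABLE = "system.access.table_lineage"
_TABLE_NAME = re.compile(r"^\w+\.\w+\.\w+$")  # catalog.schema.table


def _check_table(name: str) -> str:
    """Accept only plain three-part names so nothing unsafe reaches the SQL text."""
    if not _TABLE_NAME.match(name):
        raise ValueError(f"expected catalog.schema.table, got {name!r}")
    return name


def table_lineage_sql(table: str, direction: str = "upstream", days: int = 30) -> str:
    """SQL listing the immediate upstream or downstream tables of `table`."""
    if direction not in ("upstream", "downstream"):
        raise ValueError("direction must be 'upstream' or 'downstream'")
    # Upstream: the table is the target and we list its sources; downstream is the reverse.
    fixed, other = ("target", "source") if direction == "upstream" else ("source", "target")
    return (
        f"SELECT DISTINCT {other}_table_full_name, entity_type, entity_id\n"
        f"FROM {LINEAGE_TABLE}\n"
        f"WHERE {fixed}_table_full_name = '{_check_table(table)}'\n"
        f"  AND {other}_table_full_name IS NOT NULL\n"
        f"  AND event_date >= date_sub(current_date(), {int(days)})"
    )


def job_lineage_sql(job_id: int) -> str:
    """SQL listing the table-to-table edges recorded for one job."""
    return (
        "SELECT DISTINCT source_table_full_name, target_table_full_name\n"
        f"FROM {LINEAGE_TABLE}\n"
        f"WHERE entity_type = 'JOB' AND entity_id = '{int(job_id)}'"
    )


upstream_sql = table_lineage_sql("customer_analytics.gold.customer_health_score")
downstream_sql = table_lineage_sql(
    "customer_analytics.gold.customer_health_score", direction="downstream"
)
job_sql = job_lineage_sql(123)  # 123 is a placeholder job ID
```

In a notebook, each string could be run with spark.sql(...). The date filter is optional, but it keeps scans over the lineage table small.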

In the past, writing these queries was something only data engineers or analysts could do. Not anymore. The Databricks Assistant allows you to ask questions directly in natural language and run queries and scripts in the background, as shown in the example below.

A more technical user might ask a pointed question like: Can you produce the detailed column lineage for the calculation of customer lifetime value?

Or you can ask a question like: Which tables don’t have column level lineage defined?
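The Assistant generates its own SQL for questions like these. For comparison, hand-written equivalents might look roughly like the sketch below. It assumes the column_lineage columns source_table_full_name, source_column_name, target_table_full_name, and target_column_name, and the schema used for customer_risk_score in the example call is a guess.

```python
import re

TABLE_LINEAGE = "system.access.table_lineage"
COLUMN_LINEAGE = "system.access.column_lineage"
_TABLE_NAME = re.compile(r"^\w+\.\w+\.\w+$")
_COLUMN_NAME = re.compile(r"^\w+$")


def tables_without_column_lineage_sql() -> str:
    """Tables that appear as lineage targets but have no column-level rows."""
    return (
        "SELECT DISTINCT t.target_table_full_name\n"
        f"FROM {TABLE_LINEAGE} AS t\n"
        f"LEFT ANTI JOIN {COLUMN_LINEAGE} AS c\n"
        "  ON c.target_table_full_name = t.target_table_full_name\n"
        "WHERE t.target_table_full_name IS NOT NULL"
    )


def column_upstream_sql(table: str, column: str) -> str:
    """Immediate source columns feeding one target column."""
    if not _TABLE_NAME.match(table):
        raise ValueError(f"expected catalog.schema.table, got {table!r}")
    if not _COLUMN_NAME.match(column):
        raise ValueError(f"unexpected column name: {column!r}")
    return (
        "SELECT DISTINCT source_table_full_name, source_column_name\n"
        f"FROM {COLUMN_LINEAGE}\n"
        f"WHERE target_table_full_name = '{table}'\n"
        f"  AND target_column_name = '{column}'"
    )


missing_sql = tables_without_column_lineage_sql()
# Assumption: customer_risk_score lives in customer_analytics.gold.
ltv_sql = column_upstream_sql(
    "customer_analytics.gold.customer_risk_score", "lifetime_value_score"
)
```

The second query returns only one hop. Tracing a calculated column back to raw sources means repeating it for each source column returned.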

The incorporation of the natural language interface in the exploration of not just data lineage but a lot of other things within Databricks has made it easier for end users to approach business questions without getting into the finer technical details.

But, say, if you are a technical user, you’ll still write SQL queries or maybe even use the Databricks API, which we’ll look at in the next section.

Method #3. How to track lineage for Databricks jobs using the Databricks API

Databricks also offers programmatic access to data lineage for you to explore and use with other tools, especially other data cataloging and lineage tools, but it comes with caveats.

The Databricks API:

- Only returns immediate dependencies and not the complete lineage graph. Just like the system tables, there are two separate APIs: one for table-level lineage (/api/2.0/lineage-tracking/table-lineage) and one for column-level lineage (/api/2.0/lineage-tracking/column-lineage), so you’ll need to make recursive calls on each separately to build a full lineage graph.

- Doesn’t give you access to historical lineage data that is captured in the system tables. The API is currently only meant to be used for real-time updates, i.e., it is meant to be used with other tools that read data lineage from and write it into Databricks.
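Because each call returns a single hop, building the full upstream graph amounts to a breadth-first walk. The sketch below keeps the HTTP call out of the picture by taking a fetch function as a parameter. In practice that function would call the table-lineage endpoint for one table and return the parsed JSON. The response shape used here (an upstreams list whose items carry a tableInfo object with catalog_name, schema_name, and name) is an assumption to check against the API reference, and the fake responses are invented for the demonstration.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

Edge = Tuple[str, str]  # (upstream table, downstream table)


def upstream_names(response: Dict) -> List[str]:
    """Pull fully qualified table names out of one (assumed) API response."""
    names = []
    for item in response.get("upstreams", []):
        info = item.get("tableInfo")
        if not info:
            continue  # entry describes something other than a table
        names.append(".".join([info["catalog_name"], info["schema_name"], info["name"]]))
    return names


def walk_upstream(root: str, fetch: Callable[[str], Dict], max_depth: int = 10) -> List[Edge]:
    """Breadth-first walk that calls `fetch` once per table and collects all edges."""
    edges: List[Edge] = []
    seen = {root}
    queue = deque([(root, 0)])
    while queue:
        table, depth = queue.popleft()
        if depth >= max_depth:
            continue
        for parent in upstream_names(fetch(table)):
            edges.append((parent, table))
            if parent not in seen:  # avoids refetching shared parents and cycles
                seen.add(parent)
                queue.append((parent, depth + 1))
    return edges


def _t(schema: str, name: str) -> Dict:
    return {"tableInfo": {"catalog_name": "customer_analytics", "schema_name": schema, "name": name}}


# Invented responses standing in for real API calls.
FAKE_RESPONSES = {
    "customer_analytics.gold.customer_health_score": {
        "upstreams": [_t("silver", "customers"), _t("silver", "orders")]
    },
    "customer_analytics.silver.customers": {"upstreams": [_t("bronze", "raw_events")]},
    "customer_analytics.silver.orders": {"upstreams": [_t("bronze", "raw_events")]},
}
calls: List[str] = []


def fake_fetch(table: str) -> Dict:
    calls.append(table)
    return FAKE_RESPONSES.get(table, {})


edges = walk_upstream("customer_analytics.gold.customer_health_score", fake_fetch)
```

The same walk works for downstream dependencies by reading the other side of the response, and for column lineage by swapping in a fetch function that targets the column-lineage endpoint.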

When you bring your own cataloging and lineage tool, both the API and the system table methods come in handy. You’ll need the API for real-time sync between the two catalogs, but you’ll also need the historical changes from the system tables, which the API does not have.

At the same time, the API tracks external lineage (currently in Public Preview), but the tables don’t. It’s a balance you’ll need to strike.

How modern metadata platforms extend Databricks lineage across systems

Most organizations use Databricks alongside 5-10 other data tools: dbt for transformation, Fivetran for ingestion, Tableau for visualization, Snowflake for warehousing. While Unity Catalog excels at tracking lineage within Databricks, it can’t capture lineage from external transformations, orchestrations, or consumption layers. This creates blind spots when data flows between systems, making impact analysis incomplete and compliance reporting fragmented.

The cross-system lineage challenge

Databricks native lineage has clear boundaries:

- Stops at Unity Catalog perimeter: External dbt transformations, Airflow orchestrations, or Fivetran pipelines appear as black boxes

- Can’t trace BI consumption: Tableau dashboards and Looker reports reading Databricks tables don’t show which business users depend on specific datasets

- Loses context across warehouses: Data copied between Databricks and Snowflake breaks lineage continuity

- Fragmented compliance view: GDPR or CCPA data mapping requires manual stitching of lineage across systems

When a critical column changes in Databricks, you need to know which downstream dbt models, orchestration jobs, and business dashboards will break—not just the immediate Databricks dependencies.

How unified metadata platforms solve cross-system lineage

Atlan addresses this through a metadata control plane that connects lineage across your entire data stack. Rather than replacing Unity Catalog, Atlan augments it by pulling lineage from Databricks via system tables and APIs, then stitching it together with lineage from connected systems.


How it works:

- Bidirectional sync with Unity Catalog: Atlan reads from system.access.table_lineage and system.access.column_lineage tables, then writes enriched metadata back via the Lineage Tracking API

- Native connectors to 40+ tools: Extracts lineage from dbt Cloud, Fivetran, Airflow, Snowflake, BigQuery, Tableau, Looker, and other systems

- Graph stitching logic: Maps common entities across systems (a Databricks table feeding a Snowflake table via Fivetran) to create unified lineage

- Column-level propagation: Tracks transformations from source system columns through Databricks processing to final BI dashboard fields

This creates an end-to-end lineage graph showing how data flows from source systems through Databricks transformations to final consumption—all queryable from a single interface.
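To make the stitching idea concrete, here is a toy sketch of the general technique, not a description of how Atlan implements it. Edge lists from different systems are merged into one graph by normalizing entity names to a shared key, after which a single traversal crosses system boundaries. The normalization rule (trim and lowercase) and all entity names are simplifying assumptions.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple

Edge = Tuple[str, str]  # (upstream entity, downstream entity)


def normalize(name: str) -> str:
    """Toy matching rule: the same entity may be cased differently per system."""
    return name.strip().lower()


def stitch(*edge_lists: Iterable[Edge]) -> Dict[str, Set[str]]:
    """Merge edge lists from several systems into one adjacency map."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for edges in edge_lists:
        for source, target in edges:
            graph[normalize(source)].add(normalize(target))
    return graph


def downstream(graph: Dict[str, Set[str]], start: str) -> List[str]:
    """Everything reachable from `start`, in breadth-first order."""
    start = normalize(start)
    seen, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for child in sorted(graph.get(node, ())):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Hypothetical edges: one list from Databricks system tables, one from a BI tool.
databricks_edges = [
    ("customer_analytics.silver.customers", "customer_analytics.gold.customer_health_score"),
]
bi_edges = [
    ("Customer_Analytics.Gold.Customer_Health_Score", "tableau:dashboard/churn_overview"),
]
graph = stitch(databricks_edges, bi_edges)
impacted = downstream(graph, "customer_analytics.silver.customers")
```

Real entity matching is considerably harder than lowercasing, since systems disagree on identifiers, aliases, and connection details, which is where most of the work in cross-system lineage tends to sit.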

What teams gain with unified lineage

- Complete impact analysis: When modifying a Databricks table, see downstream effects across dbt models in GitHub, Airflow DAGs, Snowflake consumption, and Tableau dashboards in one view. Teams report 60% faster impact assessment for schema changes.

- Compliance automation: GDPR Article 30 data mapping and CCPA data inventory requirements need lineage across all systems processing personal data. Unified lineage auto-generates compliance documentation showing PII flows from Salesforce through Databricks to analytics tools.

- Migration planning: When moving workloads between platforms, cross-system lineage reveals all dependencies. Teams migrating from legacy Hadoop to Databricks use lineage graphs to sequence the migration and validate completeness.

- Faster incident resolution: Data quality issues often span systems—bad data enters through Fivetran, gets transformed in Databricks, then surfaces in Tableau. Cross-system lineage traces issues to root cause regardless of where they originate.

Book a demo to see how Atlan extends your Databricks lineage across your complete data ecosystem.

Key takeaways on Databricks data lineage

Databricks data lineage transforms from a compliance checkbox into a strategic capability when you leverage Unity Catalog’s automatic capture, choose the right access method for your use case, and extend tracking across your complete data ecosystem.

The foundation you build today—whether through Catalog Explorer for ad-hoc analysis, SQL queries for automated reporting, or APIs for cross-system integration—determines how quickly you can respond to data incidents, regulatory requirements, and business opportunities.

Start with Unity Catalog enablement on all compute, then layer on the tracking methods that match your team’s workflows. For organizations using Databricks alongside other data platforms, a unified metadata control plane ensures lineage visibility doesn’t stop at system boundaries.

Atlan extends Databricks lineage across your entire data stack.

FAQs about tracking lineage for Databricks jobs

1. How does Databricks capture and track data lineage?

In any Databricks account with Unity Catalog enabled, data lineage is automatically captured and managed at both the table and column levels. The most common method for accessing the captured lineage data is from the Catalog Explorer, but you can also use SQL queries and the Databricks API for this purpose.

2. Where is data lineage metadata stored in Databricks?

The data lineage metadata is stored in system tables exposed to end users and in backend storage used by the Databricks API; the lineage metadata store is not available to end users for direct database access.

3. How to track data lineage in Databricks from system tables?

Tracking data lineage from system tables is easy. There are only two tables that capture all the lineage metadata: table_lineage and column_lineage. When configuring data lineage in the Unity Catalog, you need to enable the system catalog and the access schema to be able to use these tables.

4. How to track data lineage in Databricks using the REST API?

The REST API allows you to interact with lineage metadata in real time, but it does not include historical lineage metadata that is present in the system tables. The REST API is better suited for use cases where you need to interact with external systems and keep them in sync with Databricks metadata.

5. What are the key limitations of Databricks lineage?

Databricks lineage has several limitations, such as a one-year rolling window for lineage history, lineage breaks when data assets in the lineage are renamed, and different Databricks Runtime requirements for different lineage features, among others. For the full list, refer to the official documentation.

