Databricks Metadata Management: All You Need To Know in 2025

Databricks metadata management streamlines data asset organization and governance using tools like Atlan and Unity Catalog.

It centralizes metadata across the lakehouse architecture, ensuring secure access, data lineage, and compliance.

With automated workflows and real-time synchronization, Databricks provides efficient management of metadata, enhancing data transparency and decision-making.

The platform’s scalable design supports integration with various data systems, making it an essential solution for unified metadata governance and actionable insights.

In this article, we’ll discuss the importance of metadata management for Databricks assets, the capabilities of Unity Catalog, and the benefits of integrating it with Atlan.

Table of contents #

- Why is metadata management important for Databricks assets?

- What is Databricks?

- The USP of the lakehouse architecture: Metadata management

- Databricks metadata management capabilities: Metadata management in the Unity Catalog

- What use cases can you power with metadata management of Databricks?

- Atlan + Databricks: Activating Databricks metadata

- How organizations are making the most of their data using Atlan

- FAQs about Databricks metadata management

- Databricks metadata management: Related reads

Why is metadata management important for Databricks assets? #

Metadata is the key to finding and analyzing every data asset (schema, column, dashboard, query, or access log) and understanding how they affect your workflows. Since warehouses are organized and structured, handling metadata within each warehouse isn’t too complex. The challenge mainly lies in making the metadata searchable and easy to understand.

However, the data ingested into data lakes doesn’t have a specific format or structure. Without a mechanism to organize and manage metadata, data lakes can quickly turn into nightmarish data swamps (also known as data dumps or one-way data lakes). In such an environment, data gets stored but isn’t in any shape to be used.

As a result, there’s no context on the data, which leaves basic questions unanswered:

- When was a data set created?

- Who’s the owner?

- Where did it come from?

- What’s the data type?

This information comes from the metadata and its effective management.

Managing metadata is also the most effective way to ensure data quality and governance by tracking, understanding, and documenting all types of metadata — business, social, operational, and technical metadata.

For instance, metadata management can help you ensure that the data uploaded to a table matches its schema. Similarly, it helps you verify if someone’s allowed to view a specific table of sensitive data and logs all accesses automatically for easier audits.
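The two checks described above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not how Databricks or Unity Catalog implements it: `ORDERS_SCHEMA`, `schema_violations`, and `AccessPolicy` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical table metadata: column name -> expected Python type.
ORDERS_SCHEMA = {"order_id": int, "customer_email": str, "amount": float}


def schema_violations(row: dict, schema: dict) -> list[str]:
    """Return readable problems for one row; an empty list means it conforms."""
    problems = []
    for column, expected in schema.items():
        if column not in row:
            problems.append(f"missing column: {column}")
        elif not isinstance(row[column], expected):
            actual = type(row[column]).__name__
            problems.append(f"{column}: expected {expected.__name__}, got {actual}")
    # Columns present in the row but unknown to the schema.
    for column in sorted(row.keys() - schema.keys()):
        problems.append(f"unexpected column: {column}")
    return problems


@dataclass
class AccessPolicy:
    """Toy access policy: table name -> users allowed to read it."""
    readers: dict[str, set[str]]
    audit_log: list[dict] = field(default_factory=list)

    def can_read(self, user: str, table: str) -> bool:
        allowed = user in self.readers.get(table, set())
        # Every check is logged, whether or not access is granted.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "table": table,
            "allowed": allowed,
        })
        return allowed
```

Because the schema and the policy are both metadata, neither check needs to read the underlying data files. Only the incoming row and the requesting user are inspected.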

That’s why data lakes and lakehouses (like Databricks) can only be effective with a proper metadata management system in place.

But before exploring metadata management in Databricks, let’s understand its history and origins.

What is Databricks? #

Databricks is a company that offers a cloud-based lakehouse platform for data engineering, analytics, and machine learning use cases.

The platform grew out of Apache Spark™, an open-source project that began in academia. One engineer describes the core Databricks platform as “Spark, but with a nice GUI on top and many automated easy-to-use features.”

Databricks was valued at $38 billion in 2021 and is considered one of the world’s most valuable startups, joining the ranks of Stripe, Nubank, ByteDance, and SpaceX.

Databricks: An origin story (TL;DR) #

The official release of Hadoop in 2006 helped companies power their data processing and analytics use cases with horizontal scaling of database systems. However, Hadoop’s programming framework (MapReduce) was complex to work with and led to the development of Apache Spark™.

Ali Ghodsi and Matei Zaharia set out to solve the problem of simplifying working with data by developing Apache Spark™ — an open-source platform for processing massive volumes of data in different formats. Companies used Spark to directly read and cache data.

According to Zaharia:

“Our group was one of the first to look at how to make it easy to work with very large data sets for people whose main interest in life is not software engineering.”

Apache Spark™ was so good that it set the world record for speed of data sorting in 2014.

However, setting up Apache Spark™ for clusters of servers and adjusting various parameters to ensure peak performance was tedious and challenging. This eventually brought the creators of Apache Spark™ together with other academics to collaborate on the Databricks project — a cloud data lakehouse platform that’s powered by Apache Spark™.

To understand the significance of the project, let’s go back to 2015 and look at the problems with the existing warehouse-lake data architecture.

Why did the lakehouse architecture become so important? #

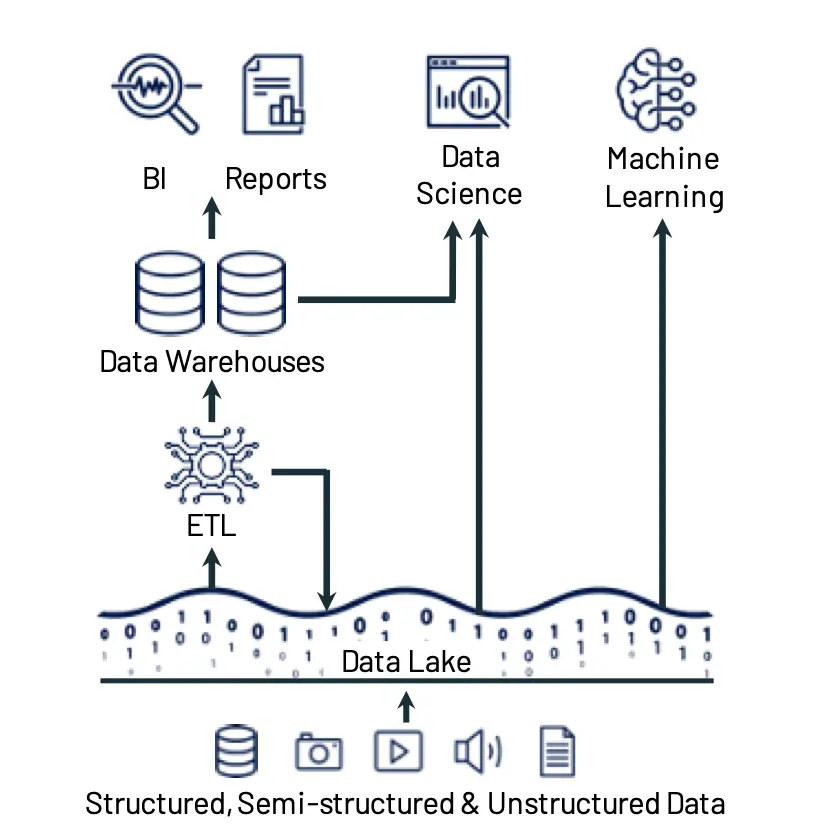

In 2015, when cloud data lakes such as Amazon S3 and Azure Data Lake Storage (ADLS) became more popular and replaced the Hadoop systems, organizations started using a two-tier data architecture:

- A cloud data lake as the storage layer for large volumes of structured and unstructured data

- A cloud data warehouse, like Amazon Redshift or Snowflake, as the compute layer for data analytics

The two-tier architecture model for data platforms. Source: Conference on Innovative Data Systems Research (CIDR).

According to Michael Armbrust, Ali Ghodsi, Reynold Xin, and Matei Zaharia of Databricks, who presented the lakehouse concept at CIDR, such an architecture was:

- Complex: Data undergoes multiple ETL and ELT processes.

- Expensive: Continuous ETL processes incur substantial engineering overhead. Moreover, once the data is in the warehouse, the format is already set, and migrating that data to other systems involves further costly transformations.

- Unreliable: Each ETL step can introduce new failure modes or bugs that affect data quality. Additionally, the data in the warehouse is stale compared to the data in the lake, which is continuously updated. This lag increases the wait for usable data and creates engineering bottlenecks, as business teams wait for engineers to load new data into the warehouse.

- Not ideal for advanced analytics: ML systems such as PyTorch and TensorFlow process large volumes of data using non-SQL code. Warehouses aren’t built for this kind of access, leading to further data transformations for each use case.

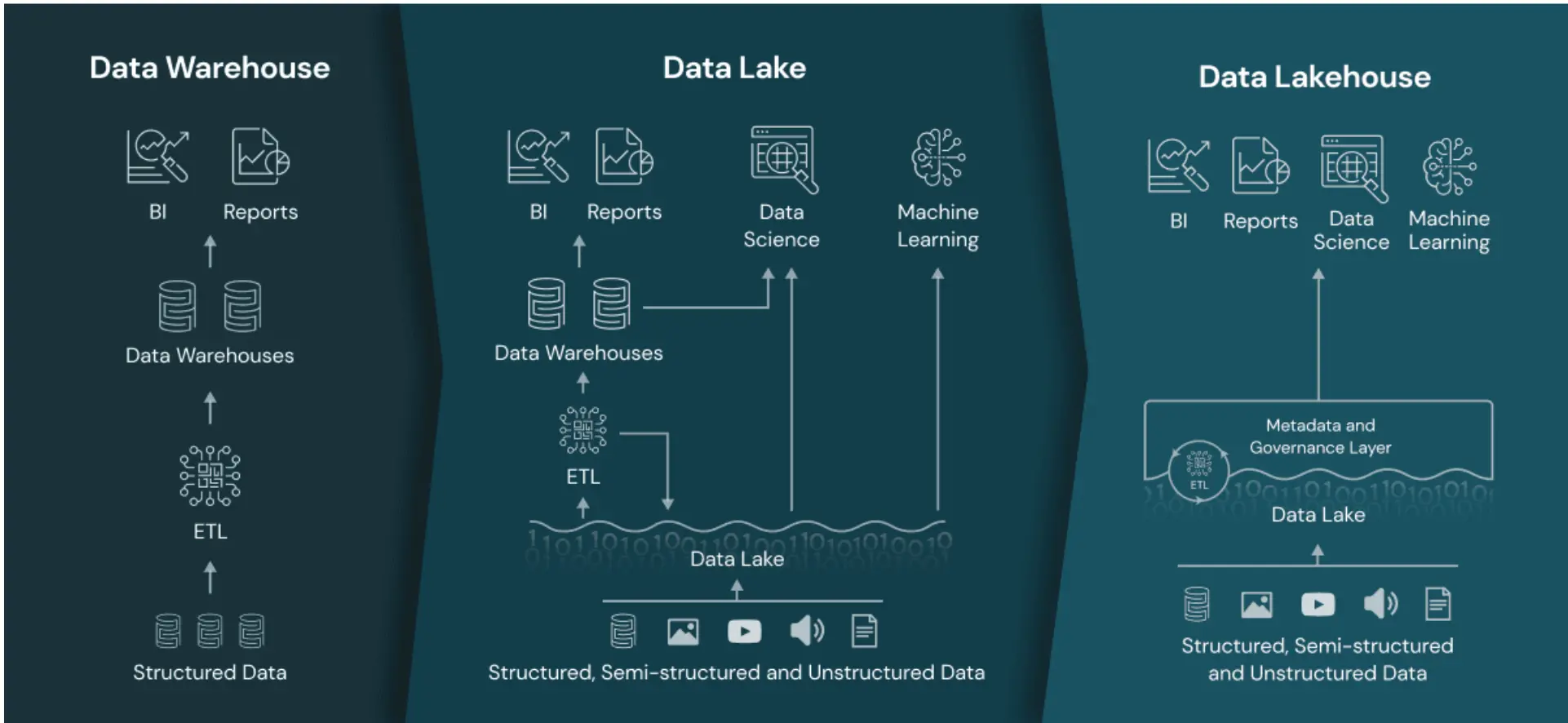

The solution is to build a platform that combines the best of both worlds — the open data format of a lake and the high-performance and data management capabilities of a warehouse.

That’s how the concept of a lakehouse, pioneered by Databricks, rose to prominence.

The USP of the lakehouse architecture: Metadata management #

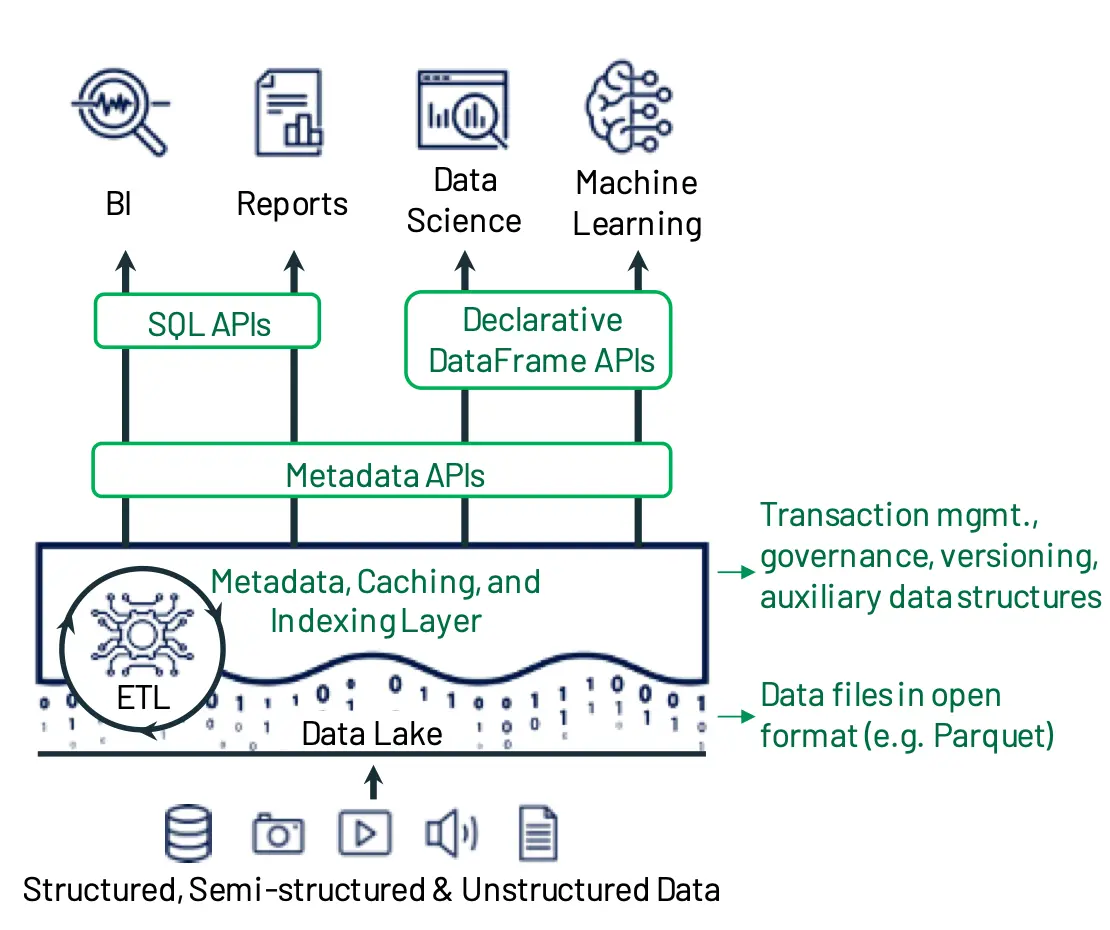

Armbrust, Ghodsi, Xin, and Zaharia define a lakehouse as:

“A low-cost, directly-accessible storage with traditional analytical DBMS management and performance features such as ACID transactions, data versioning, auditing, indexing, caching, and query optimization.”

Lakehouse system design with Databricks Delta Lake Metadata Layer. Source: Conference on Innovative Data Systems Research (CIDR)

A lakehouse has five layers: ingestion, storage, metadata, API, and consumption. The key difference between the two-tier lake-plus-warehouse architecture and the lakehouse architecture is the metadata layer.

The metadata layer sits on top of the storage layer and “defines which objects are part of a table”. As a result, you can index data, ensure versioning, enable ACID transactions, and support other data management capabilities.
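To make “defines which objects are part of a table” concrete, here is a deliberately simplified sketch of a commit log that sits on top of plain files. It is loosely inspired by log-based table formats and is not the actual Delta Lake log format. The `COMMITS` structure and the `files_at_version` helper are assumptions made for this illustration.

```python
import json

# Hypothetical commit log: each entry is one atomic commit, in order.
# A commit lists the data files it adds to and removes from the table.
COMMITS = [
    '{"add": ["part-000.parquet", "part-001.parquet"], "remove": []}',
    '{"add": ["part-002.parquet"], "remove": []}',
    '{"add": ["part-003.parquet"], "remove": ["part-000.parquet", "part-001.parquet"]}',
]


def files_at_version(commits: list[str], version: int) -> set[str]:
    """Replay commits 0..version and return the files that make up the table."""
    if not 0 <= version < len(commits):
        raise ValueError(f"unknown version: {version}")
    live: set[str] = set()
    for raw in commits[: version + 1]:
        commit = json.loads(raw)
        live -= set(commit["remove"])
        live |= set(commit["add"])
    return live


# Version 1 still sees the original files; version 2 sees the rewritten ones.
print(sorted(files_at_version(COMMITS, 1)))
print(sorted(files_at_version(COMMITS, 2)))
```

Since readers resolve the file list from the log rather than by listing the storage directory, a commit becomes visible all at once, and older versions stay readable as long as their files are retained. This is the intuition behind the ACID transactions and versioning mentioned above.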

How a metadata layer sets the lakehouse architecture apart. Source: Databricks

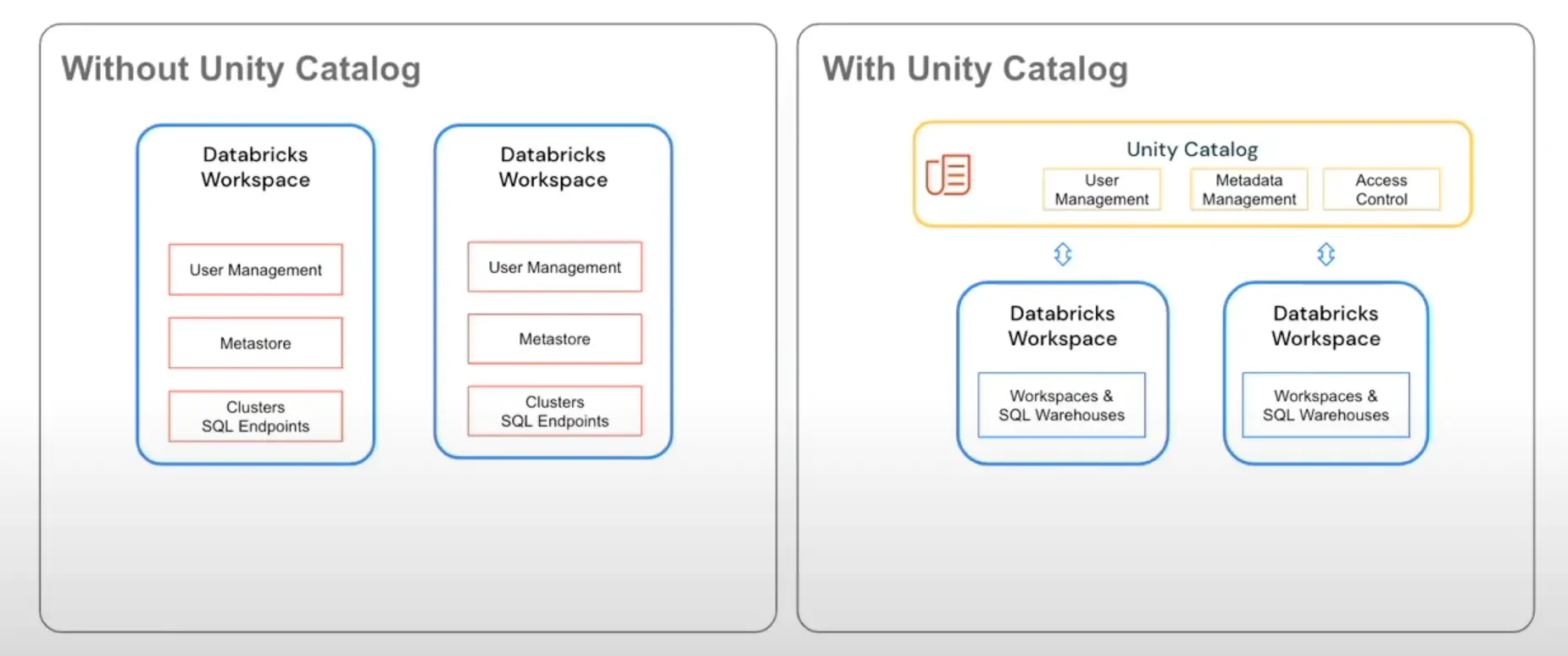

This metadata layer (now called the metadata and governance layer) is the Databricks Unity Catalog, which indexes all data within the lakehouse architecture, such as schemas, tables, views, and more.

Without this metadata layer, each Databricks workspace would be a silo.

The difference Unity Catalog makes for Databricks assets. Source: Data+AI Summit, 2022

Here’s how Databricks describes its Unity Catalog:

“Unity Catalog is a unified governance solution for all data and AI assets including files, tables, machine learning models, and dashboards in your lakehouse on any cloud.”

Think of Unity Catalog as a collection of all databases, tables, and views in the Databricks lakehouse.

Databricks metadata management capabilities: Metadata management in the Unity Catalog #

Unity Catalog is an upgrade to the old metastore within Databricks, the main difference being a better permissions model and better management capabilities. Using Unity Catalog, you can manage schemas and access controls, catalog sensitive data, and generate automated views for all Databricks assets.

The Databricks Unity Catalog enables:

- Easy data discovery

- Secure data sharing using the Delta Sharing protocol — an open protocol from Databricks

- Automated and real-time table and column-level lineage to understand data flows within the lakehouse

- A single and unified permissions model across all Databricks assets

- Centralized auditing and monitoring of lakehouse data
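A single permissions model is easiest to picture as one set of grants that is checked the same way for every asset. The sketch below is a toy model, assuming three-part `catalog.schema.table` names and grants that apply to everything beneath the object they are attached to. Real Unity Catalog permission checks involve more than this, and the `Grants` class is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Grants:
    """Toy grant store: (principal, privilege, securable) triples."""
    _grants: set[tuple[str, str, str]] = field(default_factory=set)

    def grant(self, principal: str, privilege: str, securable: str) -> None:
        self._grants.add((principal, privilege, securable))

    def is_allowed(self, principal: str, privilege: str, table_name: str) -> bool:
        parts = table_name.split(".")
        if len(parts) != 3:
            raise ValueError("expected a catalog.schema.table name")
        # A grant on the catalog or schema also covers the tables inside it.
        scopes = [".".join(parts[:i]) for i in range(1, 4)]
        return any((principal, privilege, scope) in self._grants for scope in scopes)


grants = Grants()
grants.grant("analysts", "SELECT", "main.sales")  # schema-level grant
print(grants.is_allowed("analysts", "SELECT", "main.sales.orders"))
print(grants.is_allowed("analysts", "SELECT", "main.hr.salaries"))
```

The point of the design is that one lookup function serves every table, so there is no per-workspace or per-tool permission logic to keep in sync.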

Let’s look at the most prominent use cases powered by the metadata and governance layer of Databricks.

What use cases can you power with metadata management of Databricks? #

Discovery and Cataloging #

With metadata management, it’s easier to discover trustworthy data and get adequate context on it, because the catalog indexes every asset inside the lakehouse: data sets, databases, ML models, and analytics artifacts.

Lineage and impact analysis #

Metadata management powers lineage capability across tables, columns, notebooks, workflows, workloads, and dashboards. As a result, you can:

- Trace the flow of data across Databricks workspaces

- Track the spread of sensitive data across datasets

- Monitor the data transformation cycle

- Analyze the impact of proposed changes to downstream reports
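Impact analysis boils down to a graph traversal over lineage metadata. The following sketch assumes lineage is available as a simple mapping from each asset to its direct consumers. The `LINEAGE` data and asset names are made up for illustration and do not reflect any particular lineage API.

```python
from collections import deque

# Hypothetical lineage edges: asset -> assets that read from it directly.
LINEAGE = {
    "raw.orders": ["staging.orders_clean"],
    "staging.orders_clean": ["marts.daily_revenue", "marts.customer_ltv"],
    "marts.daily_revenue": ["dashboard.revenue_overview"],
    "marts.customer_ltv": [],
}


def downstream(lineage: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk returning every asset affected by a change to `start`."""
    seen = {start}
    affected = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:  # guards against cycles and duplicates
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected


print(downstream(LINEAGE, "staging.orders_clean"))
# ['marts.daily_revenue', 'marts.customer_ltv', 'dashboard.revenue_overview']
```

Reversing the edges and running the same traversal answers the opposite question: which upstream sources a given report depends on.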

Centralized governance, security, and compliance #

Modern metadata management platforms automatically identify and classify PII and other sensitive data in the lakehouse. In addition, they maintain centralized audit logs on data access and use (as mentioned earlier), which helps govern usage.

They also centralize access control with a single and unified permissions model. As a result, access control and data privacy compliance become simpler.
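As a rough illustration of automated classification, the sketch below tags columns as sensitive based only on their names. This is a naive heuristic chosen for brevity and is not how Unity Catalog or Atlan detect PII. Production classifiers typically also inspect data values. The patterns and tag names are assumptions.

```python
import re

# Hypothetical name-based rules: tag -> pattern that suggests the tag applies.
PII_PATTERNS = {
    "email": re.compile(r"e[-_]?mail", re.IGNORECASE),
    "phone": re.compile(r"phone|mobile", re.IGNORECASE),
    "national_id": re.compile(r"ssn|passport|national[-_]?id", re.IGNORECASE),
}


def classify_columns(columns: list[str]) -> dict[str, list[str]]:
    """Map each column that looks sensitive to the tags it matched."""
    tags = {}
    for column in columns:
        matched = sorted(
            tag for tag, pattern in PII_PATTERNS.items() if pattern.search(column)
        )
        if matched:
            tags[column] = matched
    return tags


print(classify_columns(["order_id", "customer_email", "contact_phone", "amount"]))
# {'customer_email': ['email'], 'contact_phone': ['phone']}
```

Once columns carry tags like these, access policies and audit reports can be written against the tag rather than against individual tables.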

Unity Catalog is sufficient for managing the metadata of Databricks lakehouse assets. However, the modern data stack has several tools (dashboards, CRMs, warehouses), each with a metastore acting as that tool’s data catalog. To get a 360-degree view of all data in your stack, you must set up a catalog of catalogs with active metadata management, and that’s where Atlan comes into the picture.

Atlan + Databricks: Activating Databricks metadata #

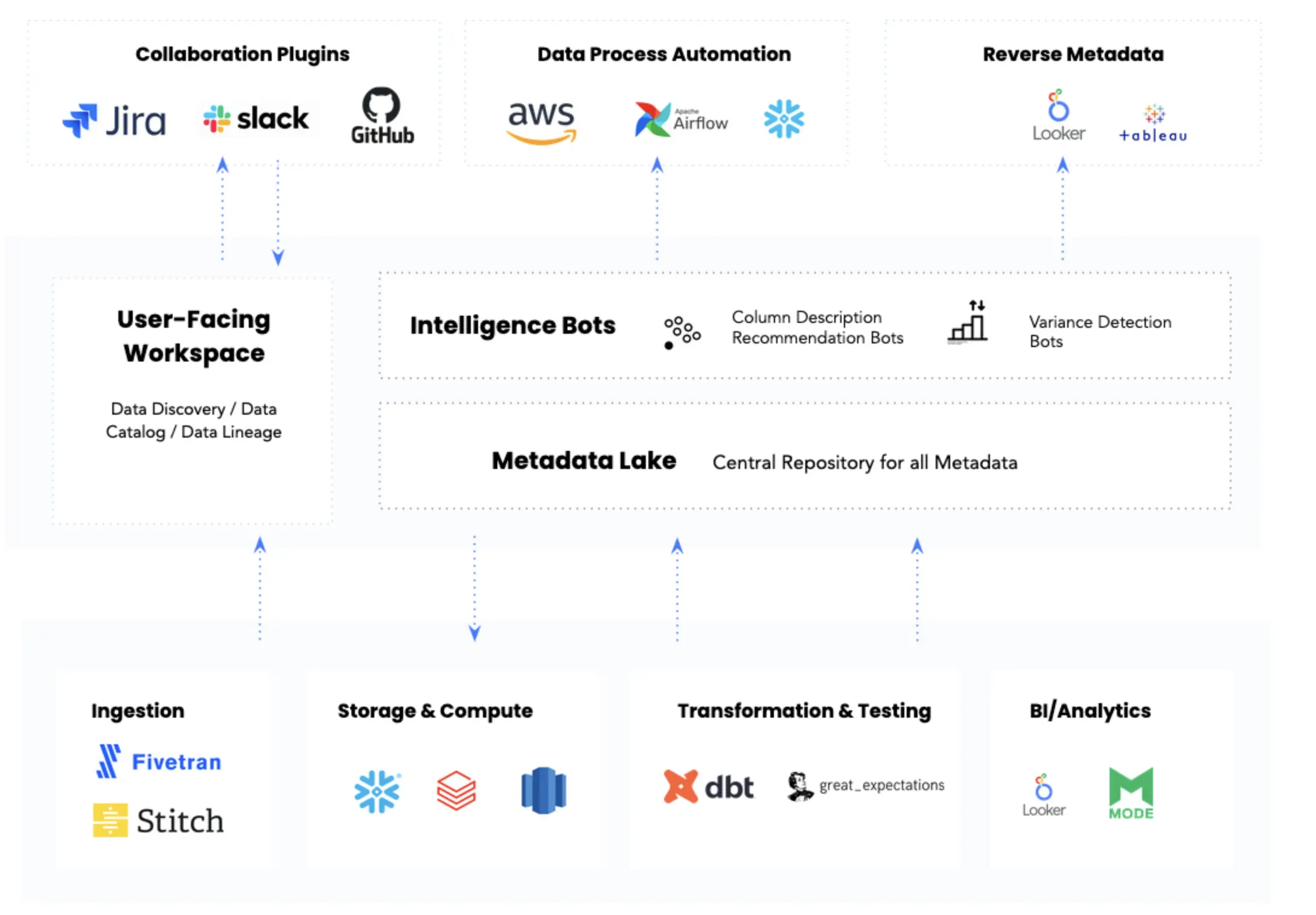

Atlan activates your Databricks data with active metadata management — a two-way flow of metadata across data tools in your stack. Think of it as reverse ETL for metadata.

Why does active metadata management matter? #

The core premises of active metadata management are:

- As and when data changes, the associated metadata also changes automatically

- As data is used, updated, or modified, metadata keeps getting generated and cataloged

- The management of metadata should be automated using open, extensible APIs
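The first premise, that metadata should change whenever the data does, can be sketched as a diff between two schema snapshots that emits change events for a catalog to consume. The snapshot format and event names below are hypothetical and are not tied to any Atlan or Databricks API.

```python
def schema_changes(old: dict[str, str], new: dict[str, str]) -> list[tuple[str, str]]:
    """Compare two {column: type} snapshots and list what changed."""
    events = []
    for column in sorted(old.keys() - new.keys()):
        events.append(("column_removed", column))
    for column in sorted(new.keys() - old.keys()):
        events.append(("column_added", column))
    for column in sorted(old.keys() & new.keys()):
        if old[column] != new[column]:
            events.append(("type_changed", column))
    return events


before = {"id": "bigint", "email": "string", "amount": "double"}
after = {"id": "bigint", "amount": "decimal(10,2)", "country": "string"}
print(schema_changes(before, after))
# [('column_removed', 'email'), ('column_added', 'country'), ('type_changed', 'amount')]
```

In an active setup, events like these would be produced automatically on every change and pushed to downstream tools, rather than discovered later by a scheduled crawl.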

Atlan checks all three boxes to provide you with:

- An active, living single source of truth with 360° profiles of data assets

- Proper context on data using the tools of your choice, i.e., embedded collaboration

- An open API architecture, empowering you to connect Unity Catalog’s REST API with Atlan to extract metadata from Databricks clusters and workspaces
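For a sense of what extracting metadata over REST looks like, the sketch below builds (but does not send) a request to list the tables in one schema. The endpoint path and query parameter names reflect our understanding of the Unity Catalog REST API and should be checked against the current Databricks documentation. The host and token are placeholders, and this is not a description of how Atlan’s connector works internally.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_list_tables_request(host: str, token: str, catalog: str, schema: str) -> Request:
    """Prepare a GET request for the tables in `catalog.schema` (nothing is sent)."""
    query = urlencode({"catalog_name": catalog, "schema_name": schema})
    # Assumed endpoint path; verify against the Databricks REST API reference.
    url = f"{host.rstrip('/')}/api/2.1/unity-catalog/tables?{query}"
    return Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


# Placeholder workspace URL and token, for illustration only.
request = build_list_tables_request(
    "https://example.cloud.databricks.com", "dummy-token", "main", "sales"
)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would perform the call. A real integration would also need to handle pagination, errors, and token management.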

When you pair the Databricks metadata with metadata from the rest of your data assets, you can achieve true cross-system lineage and visibility across your data stack.

Atlan active metadata management platform. Source: Atlan

The benefits of activating Databricks metadata using Atlan #

- Build a home for all kinds of metadata — technical, business, personal, and custom metadata with Atlan’s no-code Custom Metadata Builder

- See column-level data flows from your lakehouse to BI dashboards without switching apps

- Analyze which downstream assets will get affected by changing any Databricks asset and alert the relevant data owners

- Create Jira tickets for broken assets so that owners can act on them

- Discuss the impact of these changes using your tool of choice, like Slack

To see Atlan and Unity Catalog in action, enabling active metadata management, check out this live product demo:

A Demo of Atlan and Databricks Unity Catalog in action

Related resources on integrating Unity Catalog with Atlan #

- How to set up Databricks

- How to crawl Databricks

- How to extract lineage from Databricks

- What does Atlan crawl from Databricks?

If you are evaluating an enterprise metadata management solution for your business, take Atlan for a spin. Atlan is a third-generation data catalog built on the premise of embedded collaboration, which is key in the modern workplace. It borrows principles from GitHub, Figma, Slack, Notion, Superhuman, and other tools that are commonplace today.

How organizations are making the most of their data using Atlan #

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader ahead of all others. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Enabling an AI- and automation-first data ecosystem

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as its core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time data engineers and analysts spent on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes #

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.

Book your personalized demo today to find out how Atlan can help your organization in establishing and scaling data governance programs.

FAQs about Databricks metadata management #

1. What is Databricks metadata management? #

Databricks metadata management involves using tools like Atlan and Unity Catalog to manage, govern, and gain insights into data assets within the Databricks Lakehouse. It enables tracking and organizing metadata, which is crucial for data lineage, governance, and cataloging.

2. How does Databricks handle metadata governance? #

Databricks employs Unity Catalog as its governance layer to manage permissions, access controls, and policies. This ensures data is secure and compliant while offering detailed lineage tracking to enhance transparency and accountability.

3. What tools within Databricks support metadata cataloging? #

Unity Catalog is the primary tool within Databricks for metadata cataloging. It integrates with other components to provide a comprehensive metadata management solution, helping organizations discover, classify, and govern data assets.

4. How do you set up data lineage in Databricks? #

Data lineage in Databricks is established through Unity Catalog, which automatically tracks data flow across operations. Users can visualize lineage graphs, ensuring a clear understanding of how data moves and transforms within their ecosystem.

5. What are the best practices for metadata management in Databricks? #

Best practices include implementing Unity Catalog for centralized governance, integrating metadata across tools using platforms like Atlan, maintaining clear data ownership, and regularly auditing metadata for accuracy and completeness.

6. How does Databricks metadata management enhance data security and compliance? #

Databricks metadata management strengthens security by enforcing access controls, monitoring data usage, and providing detailed audit logs. These features ensure compliance with regulations such as GDPR and HIPAA.

Databricks metadata management: Related reads #

- Databricks Summit 2025: What to Expect at the Data + AI Summit

- What is a data lake and why it needs a data catalog?

- What is active metadata management? Why is it a key building block of a modern data stack?

- Enterprise metadata management and its importance in the modern data stack

- Data catalog vs. metadata management: Understand the differences

- Activate metadata for DataOps

- Databricks data governance: Overview, setup, and tools

- Can Metadata Catalogs Enhance Data Discovery & Access?

- 6 Types of Metadata Explained: Examples & Key Uses 2025

- 6 Ways Metadata Helps You Find Specific Data

- Metadata Governance: Why You Shouldn’t Neglect It

- Data Governance & Metadata Management: A Vital Duo for Data-Driven Success

- Why is Metadata Important for Effective Data Management?

- Databricks Data Lineage: Step-by-Step Setup Guide

- Databricks Governance: What To Expect, Setup Guide, Tools

- How to Implement Master Data Management With Databricks?
