Databricks Unity Catalog Guide: How to Unlock Its Full Potential in 2025?

Databricks Unity Catalog is a centralized metadata layer that simplifies data governance. It manages data access, security, and lineage across the Databricks platform.

Unity Catalog enhances data discovery by unifying governance for tables, files, and machine learning models. It offers fine-grained access controls to ensure compliance and secure collaboration.

With audit logs and real-time lineage, it provides transparency and accountability for data usage. This robust governance framework helps enterprises streamline operations and meet regulatory requirements.

Unity Catalog supports multi-cloud environments, making it a versatile solution for modern data management needs.

In this article, you’ll explore the key features of Databricks’ built-in Unity Catalog, along with a summary of how to set it up and tips on maximizing its value. Let’s dive in!

Table of contents #

- Unity Catalog: Overview

- How to set up Unity Catalog for a Databricks account

- Getting the most out of Databricks Unity Catalog with Atlan

- Unity Catalog + Atlan: How to integrate

- Metadata plane for data and AI-readiness

- How organizations are making the most of their data using Atlan

- FAQs about Databricks Unity Catalog

Unity Catalog: Overview #

Databricks accounts have Workspaces, which are unified environments for working with Databricks assets for specific sets of users. Workspaces can be for business units, teams, individuals, etc.

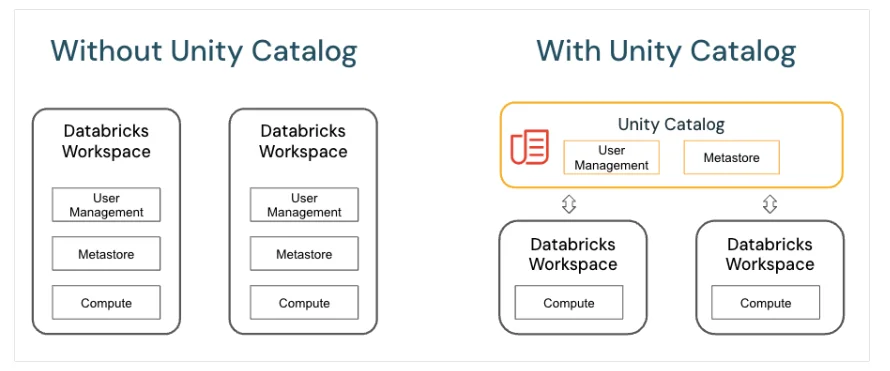

Without Unity Catalog, every workspace has its own user management, metastore, and compute. Compute doesn’t need to be shared, but shared user management and a shared metastore make access management, governance, search, and discovery much easier.

Unity Catalog does exactly that. It centralizes user management and the metastore across Databricks workspaces while letting each workspace keep its own compute, as shown in the image below:

Databricks with and without Unity Catalog - Source: Databricks website.

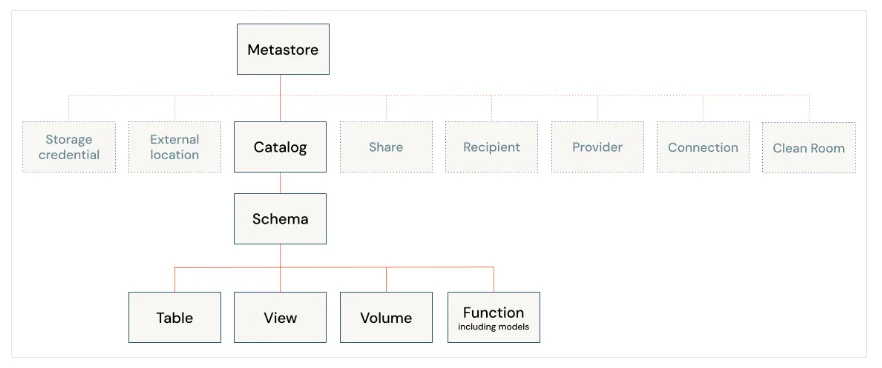

Under a metastore, Unity Catalog arranges objects hierarchically and addresses them through a three-level namespace: the catalog, the schema, and the object itself (such as a table, view, or volume), as shown in the image below:

Three-level namespace for a hierarchical arrangement of objects in the technical catalog - Source: Databricks website.

This three-level namespace departs from the native technical catalogs that ship with database and data warehousing systems like MySQL, SQL Server, and Oracle. Arranging securable objects this way is what lets you use Unity Catalog features such as access control, data sharing, discovery, lineage, and logging.
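To make the namespace concrete, here is a minimal Python sketch of how an object is addressed in Unity Catalog SQL, assuming the common `catalog.schema.object` form. The `ObjectName` class and the `main.sales.orders` name are hypothetical and only for illustration; the sketch also assumes simple identifiers that contain no dots or backticks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectName:
    """A fully qualified name of the form catalog.schema.object (illustrative helper)."""

    catalog: str
    schema: str
    name: str

    @classmethod
    def parse(cls, full_name: str) -> "ObjectName":
        # Assumes plain identifiers: no dots or backticks inside a level.
        parts = full_name.split(".")
        if len(parts) != 3 or not all(parts):
            raise ValueError(f"expected catalog.schema.object, got {full_name!r}")
        return cls(*parts)

    def quoted(self) -> str:
        # Backtick-quote each level so names with hyphens stay valid in SQL.
        return ".".join(f"`{p}`" for p in (self.catalog, self.schema, self.name))


orders = ObjectName.parse("main.sales.orders")  # hypothetical table
print(orders.catalog, orders.schema, orders.name)
print(orders.quoted())
```

A two-part name such as `sales.orders` is rejected by `parse`, which mirrors the idea that every level of the hierarchy has to be known before an object can be resolved.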

Let’s now look at how to set up a Unity Catalog for your Databricks account.

How to set up Unity Catalog for a Databricks account #

Follow these steps to set up Unity Catalog for your Databricks account:

- Enable Unity Catalog: Enable Unity Catalog for your account if it isn’t already enabled by default.

- Set up a workspace admin: Create a user in the admins workspace-local group; this user should be able to grant the account admin and metastore admin roles.

- Provision Databricks compute: Unity Catalog workloads must run on compute that meets its access and security requirements. These are defined by four access modes, of which only two (single-user and shared, called dedicated and standard in newer Databricks documentation) support Unity Catalog.

- Grant permissions to users: Grant your users permission to create and access objects in Unity Catalog catalogs and schemas.

- Create a catalog: Before you can use Unity Catalog, you need at least one catalog, and some Databricks workspaces won’t have one created by default. Follow Databricks’ best practices when creating a new catalog.

Once Unity Catalog is set up, you can query all securable objects, such as schemas, tables, and views, for purposes like discovery, lineage, access control, and data sharing.
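The catalog and permission steps above can be sketched as a small Python helper that assembles the SQL you would run. This is a sketch under stated assumptions, not an official setup script: the catalog `analytics`, the schema `sales`, and the group `data-engineers` are hypothetical, and depending on how your metastore is configured, `CREATE CATALOG` may also need a `MANAGED LOCATION` clause.

```python
def setup_statements(catalog: str, schema: str, group: str) -> list[str]:
    """Build SQL that creates a catalog and schema and lets `group` create tables there."""
    return [
        f"CREATE CATALOG IF NOT EXISTS `{catalog}`",
        f"CREATE SCHEMA IF NOT EXISTS `{catalog}`.`{schema}`",
        # A principal needs USE CATALOG on the parent before it can work inside it.
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO `{group}`",
        f"GRANT USE SCHEMA, CREATE TABLE ON SCHEMA `{catalog}`.`{schema}` TO `{group}`",
    ]


# Hypothetical names, for illustration only.
for statement in setup_statements("analytics", "sales", "data-engineers"):
    print(statement + ";")
```

In a Databricks notebook, each statement could be passed to `spark.sql(...)` or pasted into the SQL editor. Generating the statements first makes them easy to review before anything is granted.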

While Unity Catalog excels as a technical data catalog and helps you manage your Databricks environment more effectively, it doesn’t provide a unified control plane to manage metadata across your entire data stack from one place. This is where Atlan steps in, leveraging the information from Unity Catalog to create that missing unified control plane. Let’s explore how Atlan and Unity Catalog work together.

Getting the most out of Databricks Unity Catalog with Atlan #

The data control plane is a centralized place for managing all your data assets across your wider data ecosystem, not just Databricks. While it is a centralized place, the underlying data architecture and ways of working can follow any operating model.

Atlan integrates with Unity Catalog to offer such a control plane for your data with the following features:

- Intuitive user experience that gives you easy access to data assets across your data ecosystem

- Organization-wide business glossary enabling a common business language, making it easy for everyone to understand the KPIs, metrics, and overall business goals better

- Domain-driven data product marketplace for self-contained teams, especially in decentralized organizations

- Governance and quality automation with automatic data classification, tag and lineage propagation, and data contract enforcement

- Embedded collaboration with trust-enabling features like verification, certification, and freshness flags.

Many companies that use Databricks to power their core data needs have adopted Atlan as the metadata foundation for their entire data stack.

General Motors: Building their Insight Factory using Unity Catalog + Atlan #

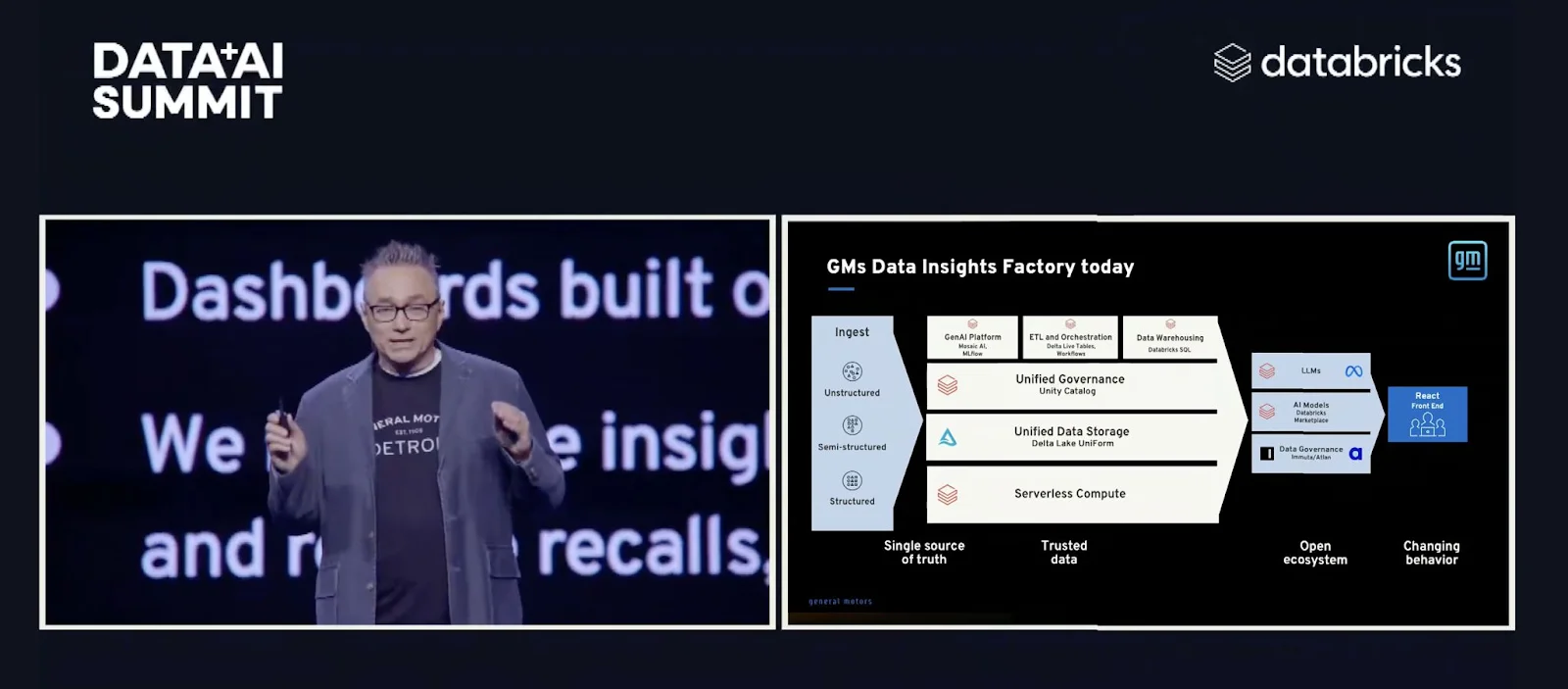

Screenshot from keynote at the DataAISummit - Source: Brian Ames - Leading AI/ML from concept to Production, Head of the AI Center and Senior Manager for Transformation and Enablement at General Motors

“We realized that AI & ML needed to be our competitive advantage and we knew that if that’s our vision, we couldn’t function like a traditional automotive company, we needed to become a software company … our data ecosystem is complex, so we needed to build GM’s Insight Factory right … [partnering with Databricks and other leading solutions], Atlan’s governance solution helps us with end-to-end lineage to understand our ecosystem.”

Brian and the team at GM are transforming their industry and already achieving significant business impact with time-to-insight down from 28 days to 3 hours and $330M added to their bottom line.

Unity Catalog + Atlan: How to integrate #

Making Atlan and Unity Catalog work together is straightforward. Follow the steps detailed on the Atlan + Databricks Connectivity page to complete the setup:

- Set up authentication between Atlan and Databricks depending on your cloud platform.

- Grant the BROWSE privilege to access an object’s metadata, the lineage graph, information_schema, and the REST API, among other things.

- Grant Atlan the permissions to import tags from Databricks, reverse sync tags back to Databricks, and extract lineage and usage metadata from Databricks.

Once you’ve set up the connection, Atlan can crawl the metadata from Unity Catalog.
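As a rough illustration of the BROWSE step, the Python sketch below builds one `GRANT BROWSE` statement per catalog, plus a query against a catalog’s `information_schema` to check which tables are visible. It is not Atlan’s crawler logic: the catalog names and the `atlan-integration` principal are hypothetical, so substitute the identity you configured in the authentication step.

```python
def browse_grants(catalogs: list[str], principal: str) -> list[str]:
    """One GRANT BROWSE statement per catalog, de-duplicated and sorted."""
    return [
        f"GRANT BROWSE ON CATALOG `{catalog}` TO `{principal}`"
        for catalog in sorted(set(catalogs))
    ]


def visible_tables_query(catalog: str) -> str:
    """Query listing the tables exposed by a catalog's information_schema."""
    return (
        "SELECT table_schema, table_name, table_type "
        f"FROM `{catalog}`.information_schema.tables "
        "ORDER BY table_schema, table_name"
    )


# Hypothetical catalogs and principal, for illustration only.
for statement in browse_grants(["sales", "finance", "sales"], "atlan-integration"):
    print(statement + ";")
print(visible_tables_query("finance") + ";")
```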

Metadata plane for data and AI-readiness #

Thanks to its design, architecture, and cataloging features, Atlan was recognized as a market leader in The Forrester Wave: Enterprise Data Catalogs, Q3 2024 report, ahead of other enterprise data cataloging tools.

This comparison was based on 24 different aspects of data cataloging, which can broadly be categorized under the following three themes:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Making the data ecosystem AI- and automation-first

- Prioritizing data democratization and self-service

With the rise of generative AI in recent years, organizations now have the ability to do more with both structured and unstructured data—provided that the data is searchable, discoverable, and trustworthy. A metadata plane like Atlan can help you prepare to tackle any data or AI-driven challenges your organization may face.

How organizations are making the most of their data using Atlan #

The Forrester Wave: Enterprise Data Catalogs, Q3 2024 report compared the major enterprise data catalogs and positioned Atlan as the market leader. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Making the data ecosystem AI- and automation-first

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes #

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.

FAQs about Databricks Unity Catalog #

1. What is Databricks Unity Catalog? #

Databricks Unity Catalog is a centralized metadata layer designed to manage data access, security, and lineage within the Databricks platform. It simplifies data governance by unifying data management across workspaces, enabling secure collaboration.

2. How does Unity Catalog enhance data governance? #

Unity Catalog provides fine-grained access controls, centralized governance, and auditing capabilities. This ensures compliance with data regulations while enabling secure data sharing and collaboration within teams.

3. What are the benefits of using Unity Catalog for metadata management? #

Unity Catalog centralizes metadata, making it easier to discover, audit, and govern data. This results in improved data quality, better regulatory compliance, and enhanced collaboration across teams.

4. How can I configure access control in Unity Catalog? #

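Unity Catalog uses a privilege model: admins and object owners grant privileges such as SELECT or MODIFY on securable objects (catalogs, schemas, tables, views, and volumes) to users, groups, or service principals. Granting privileges to groups gives you role-based access control, privileges granted on a catalog or schema are inherited by the objects inside it, and row filters and column masks allow finer control. This ensures only authorized users can access sensitive data.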
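As a hedged example, reading a single table in Unity Catalog typically takes three privileges: USE CATALOG on the parent catalog, USE SCHEMA on the parent schema, and SELECT on the table itself. The Python sketch below builds those statements; the `read_grants` helper, the table `main.sales.orders`, and the group `analysts` are hypothetical, and the helper assumes simple identifiers without dots.

```python
def read_grants(table: str, principal: str) -> list[str]:
    """Build the grants a principal needs to query one table (catalog.schema.table)."""
    catalog, schema, name = table.split(".")  # assumes a plain three-part name
    return [
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` TO `{principal}`",
        f"GRANT SELECT ON TABLE `{catalog}`.`{schema}`.`{name}` TO `{principal}`",
    ]


# Hypothetical table and group, for illustration only.
for statement in read_grants("main.sales.orders", "analysts"):
    print(statement + ";")
```

Granting to a group rather than to individual users keeps the policy manageable as team membership changes.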

5. How does Unity Catalog ensure compliance with data regulations? #

Unity Catalog supports detailed audit logs, data lineage, and governance policies. These features help organizations comply with regulatory requirements by providing transparency and control over data access and usage.

6. What are the key differences between Unity Catalog and traditional data catalogs? #

Unlike traditional data catalogs, Unity Catalog integrates directly with the Databricks platform, offering seamless governance, real-time lineage tracking, and built-in security controls for both structured and unstructured data.
