AI Governance in Healthcare: Why It Matters & How to Implement It

What is AI Governance in Healthcare?

AI governance in healthcare ensures AI models are used responsibly, transparently, and in compliance with regulations. It safeguards patient care, diagnostics, clinical decision-making, and research by promoting fairness and safety.

Key areas AI governance addresses:

- Transparent and ethical AI operations

- Compliance with global regulations

- Fair and safe AI in healthcare processes

In this article, we’ll explore why AI governance is essential, highlight key use cases, and provide a step-by-step guide to implementing AI governance in healthcare. We’ll also discuss how a metadata control plane can enhance data governance, ensuring compliance, transparency, and responsible use of AI in healthcare and life sciences systems.

Table of contents

- What is AI governance in healthcare?

- Why do we need AI governance for healthcare?

- What are some of the key use cases for AI governance in healthcare?

- How to implement AI governance for healthcare

- Understanding the role of a metadata control plane in AI governance for healthcare

- AI governance in healthcare: The key to building trust

- FAQs about AI governance in healthcare

- AI governance for healthcare: Related reads

Why do we need AI governance for healthcare?

AI in healthcare supports early detection, risk prediction, and patient identification, with machine learning applied to diagnostics and large language models (LLMs) applied to clinical decision support.

However, trust in these AI-driven insights is critical. Errors in AI-driven diagnostics or treatment recommendations can have life-threatening consequences.

AI governance for healthcare and life sciences offers benefits such as:

- Promoting transparency and improving trust in AI-assisted decision-making: Trust in AI systems is vital for value creation. Governance frameworks promote transparency and explainability by implementing model documentation, version control, and clear reporting mechanisms.

- Reducing biases and ensuring fairness in AI-driven medical decisions: AI governance in healthcare and life sciences helps monitor, detect, and mitigate bias with data quality checks, diverse training datasets, and fairness audits.

- Navigating global and regional regulations: Healthcare AI is subject to evolving regulations and guidance like the EU AI Act, US FDA guidelines, and Executive Order 14110 (rescinded in January 2025). AI governance supports compliance with these standards by embedding automated policy enforcement, audit trails, and risk assessments at every stage of the AI lifecycle.

By embedding governance at every stage, healthcare organizations can mitigate risks, reduce biases, and promote trust by ensuring models are trained on high-quality, well-documented, and unbiased data.
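To make the fairness audits mentioned above more concrete, here is a minimal Python sketch of one common check: comparing how often a model flags patients across demographic groups (a demographic parity check). The function names, the sample records, and the 0.1 review threshold are assumptions for illustration only, not a clinical or regulatory standard.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive predictions per group.

    Each record is a (group, prediction) pair, where prediction is 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs: (demographic group, flagged as high risk?)
sample = [
    ("group_a", 1), ("group_a", 0), ("group_a", 1), ("group_a", 1),
    ("group_b", 0), ("group_b", 0), ("group_b", 1), ("group_b", 0),
]

gap = demographic_parity_gap(sample)
# Assumed audit threshold; a real audit would set this with clinical input.
needs_review = gap > 0.1
```

Demographic parity is only one of several fairness measures, and a gap can have legitimate clinical causes, so a result like this is best treated as a prompt for human review rather than a verdict.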

The role of trust in AI governance for healthcare and life sciences

“As a foundational issue, trust is required for the effective application of AI technologies. In the clinical health care context, this may involve how patients perceive AI technologies.” - Congressional Research Service

Trust depends on the quality, transparency, and governance of the data that powers AI systems. With effective AI governance for healthcare, you can ensure that the AI models are trained on accurate, unbiased, and well-documented data, reducing risks like hallucinations or biased outcomes.

Metadata plays a crucial role in this process by providing context, lineage, and traceability, which helps decision-makers verify data sources, track changes, or monitor compliance. By embedding governance at every stage of the AI lifecycle and facilitating active metadata management, healthcare institutions can feed relevant, accurate, well-defined data to their AI systems.

Let’s explore the role of AI governance in real-world applications like ICU shift handoffs, automating surgery authorization, and drug discovery in life sciences.

ICU shift handoffs

A hospital is considering integrating LLMs to summarize critical patient data for ICU shift handoffs, reducing communication errors. For these summaries to be reliable, they must:

- Be easily traceable to their original structured and unstructured data sources

- Include metadata tags such as data source, timestamp, ownership, and accuracy scores
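The two requirements above could be represented roughly as follows. This is a sketch under stated assumptions: the class names, field names, system names, and the 0.9 accuracy threshold are all hypothetical, and the accuracy score is assumed to come from a separate review step that is not shown.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class SourceRef:
    """Pointer to the record a summary statement was derived from."""
    system: str        # e.g. "EHR" or "nursing_notes" (hypothetical names)
    record_id: str
    structured: bool   # True for structured data, False for free text

@dataclass
class HandoffSummary:
    patient_id: str
    text: str
    owner: str                  # team accountable for the summary
    accuracy_score: float       # assumed to come from a separate review step
    sources: list = field(default_factory=list)
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def is_traceable(self) -> bool:
        """A summary is traceable only if it cites at least one source."""
        return len(self.sources) > 0

def ready_for_handoff(summary: HandoffSummary, min_accuracy: float = 0.9) -> bool:
    """Gate a summary on traceability and an assumed accuracy threshold."""
    return summary.is_traceable() and summary.accuracy_score >= min_accuracy

summary = HandoffSummary(
    patient_id="icu-0042",
    text="Stable overnight; vasopressor weaned at 03:10.",
    owner="icu-night-team",
    accuracy_score=0.94,
    sources=[
        SourceRef("EHR", "obs-981", structured=True),
        SourceRef("nursing_notes", "note-77", structured=False),
    ],
)
```

Keeping the source references on the summary object itself means a reviewer can check any statement against its origin without querying a separate system.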

Automating surgery authorization

AI can automate prior authorization for surgeries by analyzing payer policies, clinical guidelines, and patient history, reducing administrative burden and approval delays.

With active metadata management and embedded governance, healthcare institutions can:

- Track patient history, clinical reasoning, and policy compliance for auditability and explainability

- Integrate healthcare systems with real-time electronic health record (EHR) and claims updates

- Secure documentation with role-based access, preventing unauthorized use
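As an illustration of role-based access with auditability, the sketch below checks a permission and records every attempt, including denied ones. The roles, permission names, and user IDs are hypothetical; a real deployment would take them from the organization's identity and access management system.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "surgeon": {"read_clinical_notes", "read_authorization"},
    "authorization_specialist": {"read_authorization", "write_authorization"},
    "auditor": {"read_authorization", "read_audit_log"},
}

def can_access(role, permission):
    """Return True if the role has been granted the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())

def access_document(user, role, permission, audit_log):
    """Check access and record the attempt, whether or not it succeeds."""
    allowed = can_access(role, permission)
    audit_log.append(
        {"user": user, "role": role, "permission": permission, "allowed": allowed}
    )
    if not allowed:
        raise PermissionError(f"{role} may not {permission}")
    return True

audit_log = []
access_document("dr_lee", "surgeon", "read_authorization", audit_log)
try:
    access_document("dr_lee", "surgeon", "write_authorization", audit_log)
except PermissionError:
    pass  # the denied attempt is still recorded in audit_log
```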

Drug discovery in life sciences

AI in life sciences can speed up the identification of potential drug candidates. Models can analyze large biomedical datasets to predict molecular interactions, reducing the time required for preclinical research.

However, data integrity, bias, and regulatory compliance remain key concerns, and strong AI governance is required to:

- Maintain an auditable lineage of research data and AI model inputs

- Ensure compliance with global regulatory guidelines on AI-assisted drug development

- Mitigate biases by tracking training data sources and applying fairness checks

What are some of the key use cases for AI governance in healthcare?

Some of the top use cases of AI governance in healthcare include:

- Clinical decision support: AI can analyze patient data, medical literature, and clinical guidelines to provide recommendations to healthcare professionals. AI governance helps ensure these recommendations are accurate, unbiased, and explainable, reducing the risk of AI-driven errors.

- Predictive models to forecast patient health trajectories: AI models can anticipate disease progression, hospital readmissions, patient deterioration, and more. AI governance frameworks enforce fairness, data integrity, and compliance, preventing biased predictions and ensuring safe, effective use of AI-driven insights.

- Personalized treatment plans: AI can tailor treatment strategies based on patient history, genetics, and real-time health data. AI governance ensures transparency in decision-making, protects patient privacy, and supports regulatory compliance.

- Regulatory compliance and risk management: AI can automate compliance checks by continuously monitoring evolving regulations like HIPAA, GDPR, and the EU AI Act. AI governance enables real-time audit trails, role-based access controls, and automated risk assessments, ensuring organizations maintain compliance at scale while reducing manual effort.

- Digital twins to accelerate clinical trials: AI-driven virtual patient models optimize clinical trial design, drug testing, and treatment strategies. AI governance ensures regulatory compliance, safeguards patient data, and mitigates bias risks, improving the reliability of AI-assisted trials.

AI governance in healthcare use case: Digital twins for clinical trials

Digital twins are AI-driven virtual patient models that reflect the unique genetic makeup of a patient. Using digital twins in healthcare can accelerate clinical trials and innovation while reducing costs.

“With predictive algorithms and real-time data, digital twins have the potential to detect anomalies and assess health risks before a disease develops or becomes symptomatic.” - Sunil Soares, Founder & CEO at YDC

The caveat – digital twins require diverse, high-quality datasets to produce accurate results, making governance essential for mitigating risks like biased simulations or inaccurate predictions.

AI governance ensures data integrity checks, consent management, and compliance tracking throughout the AI lifecycle. Additionally, active metadata management ensures that virtual patient models are built on verifiable, high-quality and representative datasets.
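A simple way to picture data integrity checks combined with consent management is a filter that runs before any record reaches a digital twin model. The sketch below assumes a flat record layout; the field names, the age range, and the sample records are hypothetical.

```python
# Assumed minimum set of fields every record must carry.
REQUIRED_FIELDS = ("patient_id", "age", "sex", "consent_for_research")

def integrity_issues(record):
    """Return a list of problems found in one patient record."""
    issues = [
        f"missing {name}" for name in REQUIRED_FIELDS if record.get(name) is None
    ]
    age = record.get("age")
    if age is not None and not (0 <= age <= 120):
        issues.append("age out of range")
    return issues

def eligible_records(records):
    """Keep only records that pass integrity checks and carry consent."""
    return [
        r for r in records
        if not integrity_issues(r) and r["consent_for_research"] is True
    ]

# Hypothetical records for illustration.
records = [
    {"patient_id": "p1", "age": 54, "sex": "F", "consent_for_research": True},
    {"patient_id": "p2", "age": 61, "sex": "M", "consent_for_research": False},
    {"patient_id": "p3", "age": None, "sex": "F", "consent_for_research": True},
    {"patient_id": "p4", "age": 230, "sex": "M", "consent_for_research": True},
]

usable = eligible_records(records)
```

Here only `p1` survives: `p2` lacks consent, `p3` is missing an age, and `p4` has an implausible age. Checks for representativeness across patient groups would sit on top of a filter like this.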

How to implement AI governance for healthcare

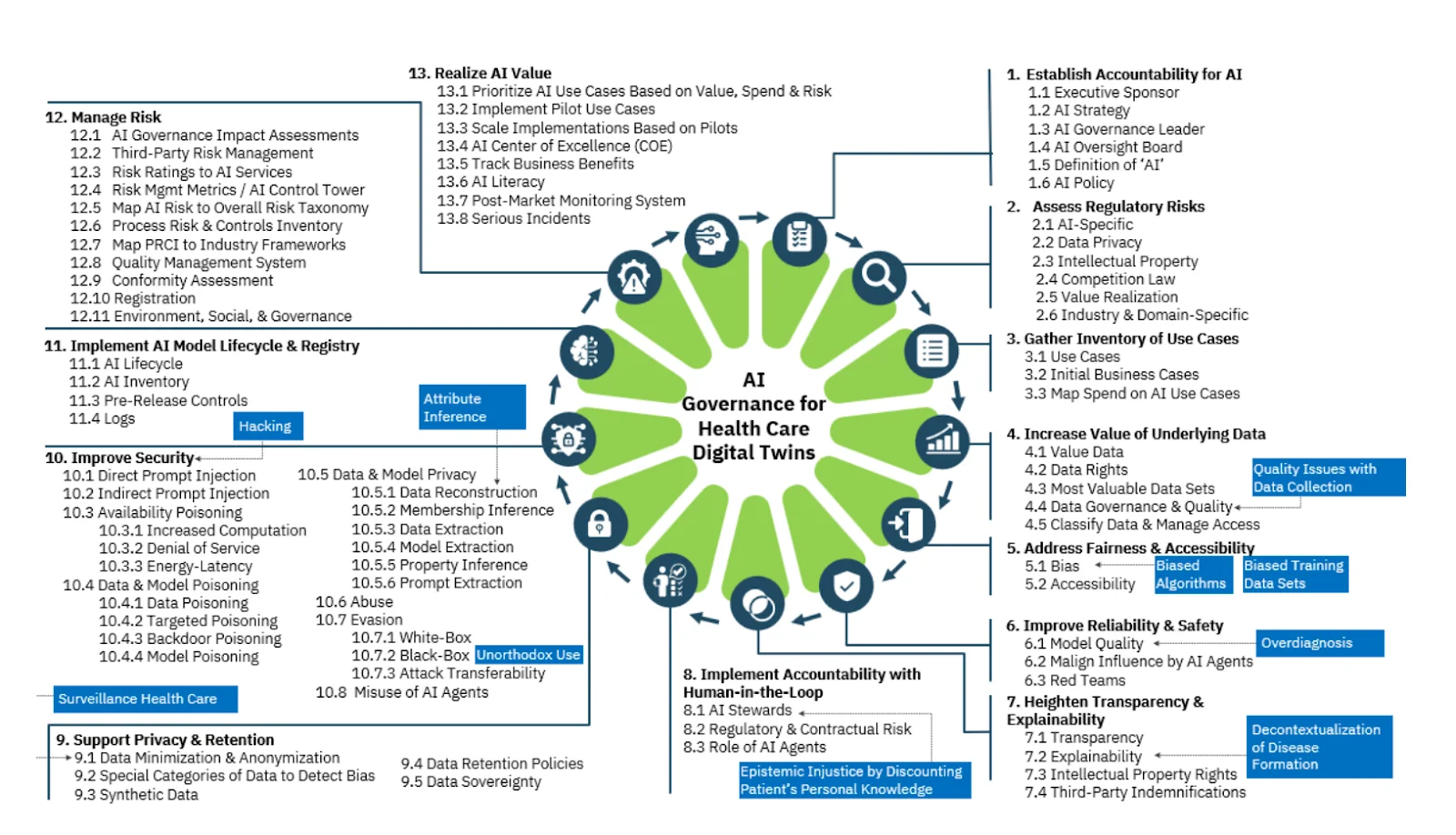

Here’s a step-by-step guide to implementing AI governance for healthcare:

- Establish accountability for AI: Define clear leadership and governance structures to oversee AI implementation. This includes appointing an AI governance leader, setting up an oversight board, defining the term ‘AI’, and formalizing AI policies to align with organizational strategy and regulatory requirements.

- Assess regulatory risks: Identify and address AI-related legal and compliance risks, such as data privacy, intellectual property concerns, competition laws, and industry-specific mandates.

- Gather inventory of AI use cases: Catalog all AI-driven applications within the healthcare ecosystem. This includes identifying high-risk and business-critical use cases, mapping associated costs, and prioritizing them based on value, regulatory impact, and ethical considerations.

- Increase the value of underlying data: Ensure that the data fueling AI models is high-quality, well-governed, and accessible while respecting data rights and ownership. Implement strong metadata management practices to classify, validate, and maintain data integrity for accurate AI outcomes.

- Address fairness and accessibility: Establish protocols to identify and mitigate biases in training datasets and ensure AI applications remain accessible to all patients, regardless of demographics or medical conditions.

- Improve reliability and safety: Monitor AI models for errors, biases, and performance fluctuations to enhance safety and accuracy. Implement red teams to rigorously test AI applications and mitigate potential risks such as overdiagnosis or harmful AI-driven recommendations.

- Heighten transparency and explainability: AI models must be interpretable, ensuring that healthcare professionals can understand how decisions are made. Transparency mechanisms, such as model documentation, audit trails, and explainability (covering third-party applications too), must be in place.

- Implement accountability with Human-in-the-Loop: Ensure AI decisions are reviewed and validated by human experts before implementation. Establish AI stewards to oversee regulatory compliance, ethical considerations, and the role of AI agents in clinical workflows.

- Support privacy and retention: Enforce strong data privacy measures, including data minimization, anonymization, and synthetic data techniques. Implement strict retention policies to manage data lifecycle, ensuring compliance with patient rights and regulatory requirements.

- Improve security: AI applications must be protected against prompt injections, data and model poisoning, and adversarial attacks. Implement advanced security protocols to prevent unauthorized access, manipulation, or exploitation of AI systems.

- Implement AI model lifecycle and registry: Maintain a registry of all AI models used in healthcare, documenting their lifecycle from development to deployment and monitoring. Implement logging mechanisms to track changes, improve auditability, and ensure models remain compliant with evolving regulations.

- Manage risk: Conduct AI governance impact assessments to evaluate and mitigate risks related to third-party AI applications, process failures, and regulatory non-compliance. Implement conformity assessments and environmental, social, and governance (ESG) evaluations for ethical AI use.

- Realize AI value: Prioritize AI use cases based on value, spend, and risk involved. Track AI performance, establish a post-market monitoring system, and set up AI literacy programs and Centers of Excellence (COEs) to continuously refine governance strategies.

Implementing AI governance for healthcare digital twins - Source: YDC.
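Of the steps above, the AI model lifecycle and registry lends itself most directly to a code illustration. The following is a minimal in-memory sketch, not a production registry: the lifecycle stages, the allowed transitions, and the model and owner names are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Assumed lifecycle stages and the transitions allowed between them.
ALLOWED = {
    "development": {"validation"},
    "validation": {"development", "deployed"},
    "deployed": {"monitoring", "retired"},
    "monitoring": {"validation", "retired"},
    "retired": set(),
}

@dataclass
class ModelEntry:
    name: str
    version: str
    owner: str
    stage: str = "development"
    history: list = field(default_factory=list)

class ModelRegistry:
    def __init__(self):
        self._models = {}

    def register(self, name, version, owner):
        key = (name, version)
        if key in self._models:
            raise ValueError(f"{name} {version} is already registered")
        self._models[key] = ModelEntry(name, version, owner)
        return self._models[key]

    def get(self, name, version):
        return self._models[(name, version)]

    def transition(self, name, version, new_stage, changed_by, reason):
        """Move a model to a new stage and log who changed it and why."""
        entry = self.get(name, version)
        if new_stage not in ALLOWED[entry.stage]:
            raise ValueError(f"cannot move from {entry.stage} to {new_stage}")
        entry.history.append({
            "from": entry.stage, "to": new_stage, "by": changed_by,
            "reason": reason, "at": datetime.now(timezone.utc).isoformat(),
        })
        entry.stage = new_stage
        return entry

registry = ModelRegistry()
registry.register("sepsis_risk", "1.0", "clinical-ml-team")
registry.transition(
    "sepsis_risk", "1.0", "validation", "j.doe", "initial training complete"
)
```

Rejecting transitions that skip a stage (for example, development straight to deployed) is one way to make the human review steps described above hard to bypass.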

Why metadata is vital for effective implementation of AI governance in healthcare and life sciences

While the implementation process may seem like a tall order, there’s a common element serving as the connective tissue that enables transparency, accountability, and compliance across AI systems – metadata.

As implied earlier, metadata plays a crucial role in tracking AI model ownership, policy changes, data asset origins and usage, changes to data quality, and more.

For instance, human oversight of AI requires clear documentation of interventions and decisions. Metadata logs capture manual overrides, expert feedback, and decision rationales, ensuring that AI-assisted workflows remain accountable and aligned with clinical best practices.
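A metadata log of this kind might look like the sketch below. It is an assumption-laden illustration: the class name, the entry fields, and the sample model ID, reviewers, and rationales are all hypothetical.

```python
import json
from datetime import datetime, timezone

class OverrideLog:
    """Append-only log of human reviews of AI recommendations."""

    def __init__(self):
        self._entries = []

    def record(self, model_id, recommendation, final_decision, reviewer, rationale):
        if not rationale.strip():
            raise ValueError("a rationale is required for every review")
        entry = {
            "model_id": model_id,
            "recommendation": recommendation,
            "final_decision": final_decision,
            "overridden": recommendation != final_decision,
            "reviewer": reviewer,
            "rationale": rationale,
            "reviewed_at": datetime.now(timezone.utc).isoformat(),
        }
        self._entries.append(entry)
        return entry

    def override_rate(self):
        """Share of reviews where the human changed the AI recommendation."""
        if not self._entries:
            return 0.0
        return sum(e["overridden"] for e in self._entries) / len(self._entries)

    def export(self):
        """Serialize the log, e.g. for an auditor."""
        return json.dumps(self._entries, indent=2)

log = OverrideLog()
log.record("sepsis_risk:1.0", "escalate", "escalate", "nurse_a",
           "Agrees with vitals trend")
log.record("sepsis_risk:1.0", "escalate", "monitor", "dr_b",
           "Lactate normal on repeat draw")
```

A rising override rate for a given model can itself be a governance signal, suggesting the model needs revalidation.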

By embedding metadata-driven governance at every stage, healthcare organizations can ensure that AI models operate with transparency, accountability, and compliance. That’s where a metadata control plane can help.

Understanding the role of a metadata control plane in AI governance for healthcare

A control plane for the data and AI stack integrates trust and context into the digital fabric. It stitches together a business’s disparate data infrastructure via cataloged metadata so data and business teams can find, trust, and govern AI-ready data.

The control plane supports:

- Active metadata management: Continuously track changes in data assets for compliance

- Data lineage: Provide full visibility into how data flows through your tech stack – tracing its origins, transformations, use, and role in training AI models

- Context-rich data discovery: Ensure interpretability of AI-assisted decisions

Such a setup creates a governed AI ecosystem where accuracy, transparency, and compliance are embedded at every stage.
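To illustrate what tracing data origins can mean in practice, the sketch below walks a small lineage graph upstream from a model to every dataset that feeds it. The asset names and the dictionary-based graph are hypothetical, and this does not reflect how any particular product stores lineage.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets it was derived from.
UPSTREAM = {
    "readmission_model_v2": ["training_set_2024"],
    "training_set_2024": ["ehr_encounters_clean", "claims_clean"],
    "ehr_encounters_clean": ["ehr_encounters_raw"],
    "claims_clean": ["claims_raw"],
}

def trace_upstream(asset, upstream=UPSTREAM):
    """Return every asset that feeds into `asset`, nearest first (breadth-first)."""
    seen = {asset}
    order = []
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for parent in upstream.get(current, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order

origins = trace_upstream("readmission_model_v2")
```

With a traversal like this, a data quality issue found in `claims_raw` can be linked to every model that depends on it by running the same walk in the opposite direction.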

Use case spotlight: Implementing AI governance for digital twins using Atlan

Let’s revisit the digital twins use case. By simulating real-world physiological and clinical conditions, digital twins enhance personalized medicine, optimize clinical trials, and improve treatment efficacy.

However, the success of digital twins in clinical trials depends on tackling the following challenges:

- Data quality issues: Digital twins rely on vast datasets, including patient records, imaging data, and sensor readings. Inconsistent, incomplete, or inaccurate data can compromise model reliability.

- Addressing potential biases in simulation models: If training data lacks diversity, digital twins may reinforce biases, leading to misdiagnoses or ineffective treatments.

- Ensuring data privacy and regulatory compliance: Digital twins use sensitive patient data, requiring strict compliance with HIPAA, GDPR, and emerging AI laws.

To address the above challenges, the team at YDC implemented AI governance using Atlan. They started by integrating Atlan with their existing systems to ingest and manage metadata from their Commercial-off-the-Shelf (COTS) apps.

1. Ingesting metadata into Atlan

The ingested metadata included Application Name, Privacy Policy URL, Data Specifically Excluded from AI Training, Embedded AI, and Opt-Out Option.

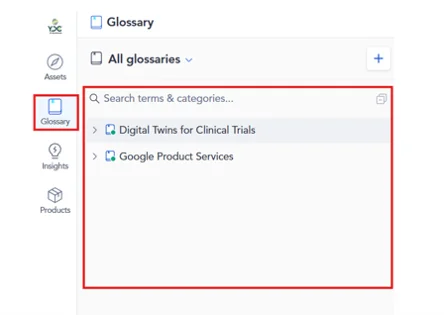

The screenshot below shows Atlan before running the integration with the YDC_AIGOV agents. The catalog only contains one AI Use Case (Digital Twins for Clinical trials) and one application (Google Product Services).

Using Atlan for supporting digital twins in clinical trials - Source: YDC.

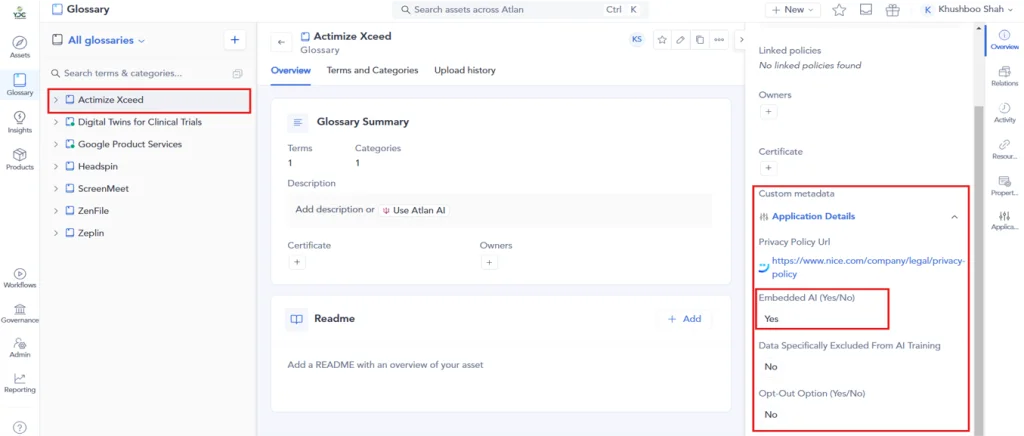

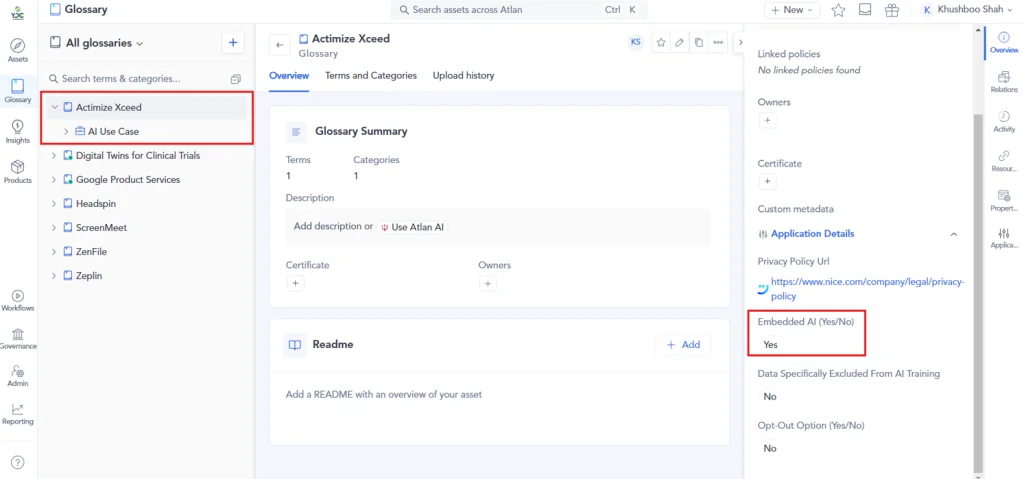

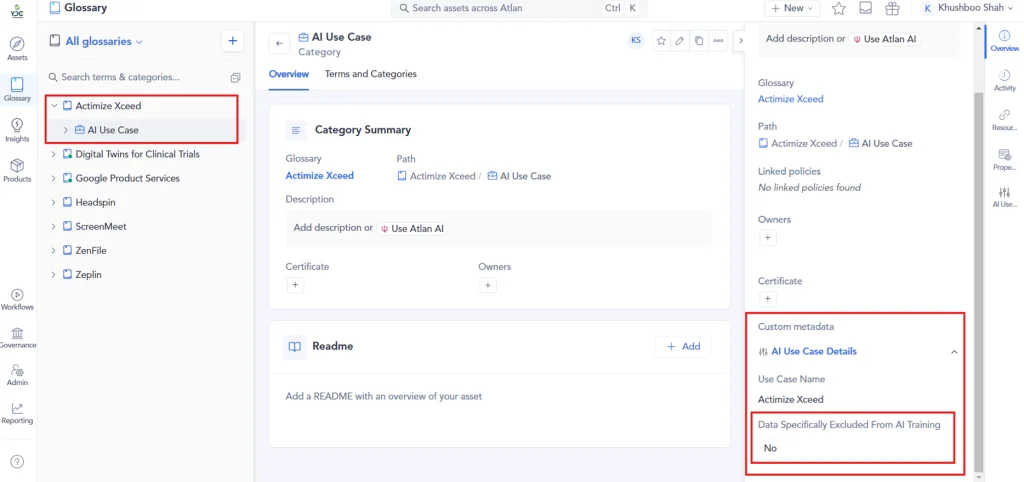

After running the integration with the Atlan API, Atlan contains a broader list of applications, including Actimize Xceed, with metadata shown in the right panel.

Integrating Atlan with the YDC_AIGOV agents - Source: YDC.

This brought metadata from YDC’s data ecosystem under one roof – building a control plane for data, metadata, and AI.

2. Defining custom metadata attributes

Diverse data teams have unique needs, which must be captured using custom metadata.

Standard metadata captures basic attributes – owners, timestamp, description, and certification. Custom metadata provides extra context on assets by allowing you to set up unique properties that convey your organization’s perspective.

For example, you could add IPR as a new metadata group to capture intellectual property rights. This could include custom metadata fields like:

- License type: Define the type of license under which the asset can be used.

- Provider: Define the source of the asset, in cases where the license requires attribution.

The team at YDC created custom metadata attributes to implement conditional logic in the Atlan API to auto-create AI use cases only for applications with embedded AI. The custom metadata attributes created include:

- Embedded AI (Yes/No)

- Data specifically excluded from AI training (Yes/No)

- Opt-out option (Yes/No)

“We created an AI use case object in Atlan for Actimize Xceed because Embedded AI = “Yes.” We also implemented conditional logic in the Atlan API to auto-create AI Risk Assessment objects where Data Specifically Excluded for AI Training = “No.” Obviously, this logic is configurable.” - Sunil Soares, Founder & CEO at YDC

The custom metadata field titled Embedded AI - Source: YDC.

Other custom metadata fields used by YDC - Source: YDC.
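The conditional logic described in the quote above can be sketched in plain Python. This is not Atlan API or SDK code: the function, the dictionary keys, and the object-type labels are hypothetical, and the two rules are treated as independent because the quote does not say whether one depends on the other.

```python
def plan_governance_objects(applications):
    """Decide which governance objects to create for each application.

    Rule 1: create an AI use case when Embedded AI is "Yes".
    Rule 2: create an AI risk assessment when data is not specifically
            excluded from AI training.
    """
    plan = []
    for app in applications:
        if app["embedded_ai"] == "Yes":
            plan.append(("ai_use_case", app["name"]))
        if app["data_excluded_from_ai_training"] == "No":
            plan.append(("ai_risk_assessment", app["name"]))
    return plan

# The application name comes from the article; the exclusion value for it
# and the whole second entry are assumed for illustration.
applications = [
    {"name": "Actimize Xceed", "embedded_ai": "Yes",
     "data_excluded_from_ai_training": "No"},
    {"name": "Example Scheduling App", "embedded_ai": "No",
     "data_excluded_from_ai_training": "Yes"},
]

plan = plan_governance_objects(applications)
```

Returning a plan instead of creating objects directly keeps the rules easy to test and review before anything is written to a catalog.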

3. Documenting risk assessments using Atlan

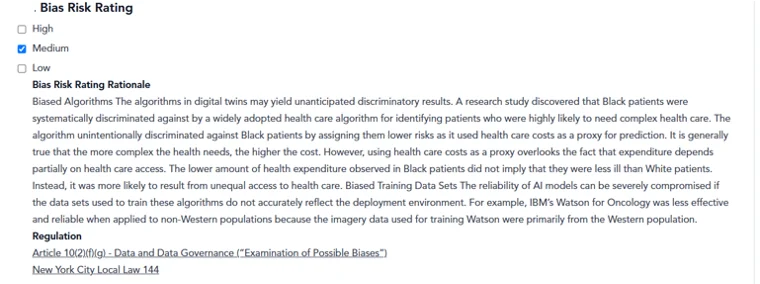

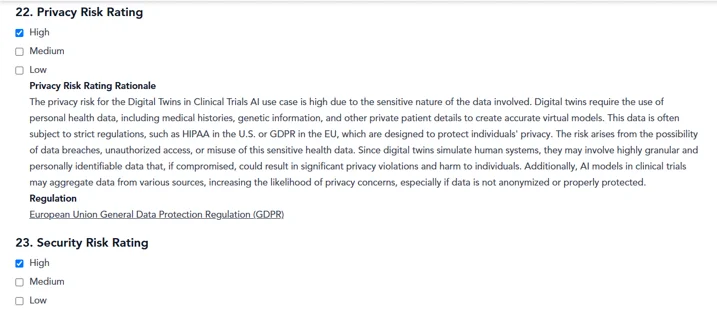

Using Atlan, YDC documented the bias risk assessment and mapped it to the associated regulations. They also documented the privacy risks, as well as other dimensions of AI risk – reliability, accountability, explainability, and security.

Documenting bias risk rating using Atlan - Source: YDC.

Documenting privacy risk rating using Atlan - Source: YDC.

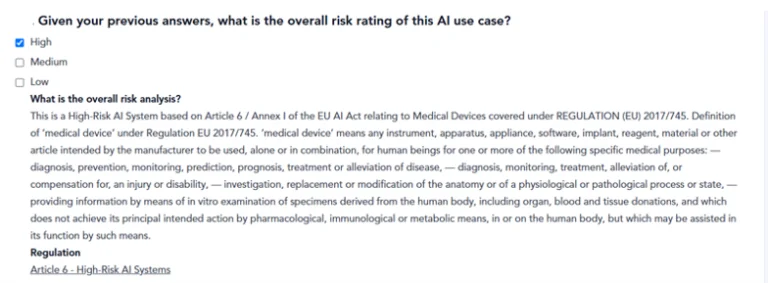

According to the YDC team, the digital twin use case would be “classified as High Risk based on the Medical Device category of Article 6 of the EU AI Act.” They documented the same in Atlan.

Documenting overall risk analysis in compliance with EU AI Act using Atlan - Source: YDC.
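A risk assessment with several dimensions, each mapped to regulations, could be modeled along these lines. The sketch makes several assumptions: the three-level rating scale, the rule that the overall rating equals the worst dimension, and the sample ratings and regulation mappings are illustrative and are not YDC's actual assessment.

```python
from dataclasses import dataclass, field

# Assumed rating scale, ordered from least to most severe.
RATING_ORDER = ["Low", "Medium", "High"]

@dataclass
class RiskAssessment:
    use_case: str
    ratings: dict = field(default_factory=dict)      # dimension -> rating
    regulations: dict = field(default_factory=dict)  # dimension -> regulations

    def rate(self, dimension, rating, regulations=()):
        """Record the rating for one risk dimension and its mapped regulations."""
        if rating not in RATING_ORDER:
            raise ValueError(f"unknown rating: {rating}")
        self.ratings[dimension] = rating
        self.regulations[dimension] = list(regulations)

    def overall(self):
        """Assumed roll-up rule: the overall rating is the worst dimension."""
        if not self.ratings:
            return None
        return max(self.ratings.values(), key=RATING_ORDER.index)

# Hypothetical ratings for illustration only.
assessment = RiskAssessment("Digital Twins for Clinical Trials")
assessment.rate("bias", "High", ["EU AI Act"])
assessment.rate("privacy", "Medium", ["HIPAA", "GDPR"])
assessment.rate("security", "Low")
```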

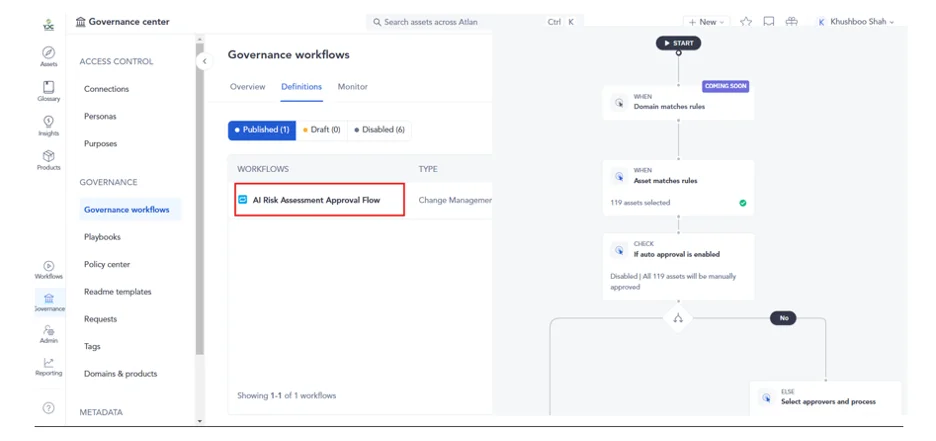

4. Configuring an AI risk assessment workflow in Atlan

Next, YDC configured an AI Risk Assessment workflow in Atlan to route the AI Risk Assessment to the appropriate parties for approval. This helps streamline approvals and maintain compliance.

Building AI risk assessment workflow using Atlan - Source: YDC.

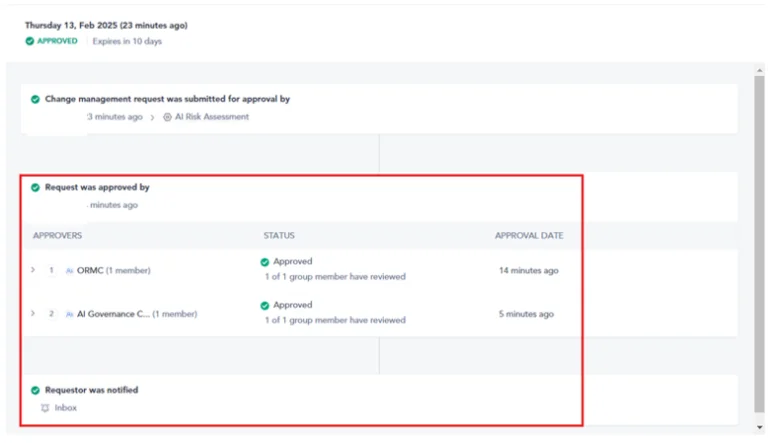

The screenshot below shows the AI Risk Assessment in Approved status based on approvals from the Operational Risk Management Committee (ORMC) and the AI Governance Council.

Approving an AI risk assessment workflow in Atlan - Source: YDC.
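The approval routing described above can be pictured as a small state machine. In this sketch the two approver names come from the article, while the class, the status labels other than Approved, and the rule that any rejection ends the workflow are assumptions.

```python
# Approver names are from the article; everything else is assumed.
REQUIRED_APPROVERS = frozenset({
    "Operational Risk Management Committee",
    "AI Governance Council",
})

class ApprovalWorkflow:
    def __init__(self, subject, required=REQUIRED_APPROVERS):
        self.subject = subject
        self.required = set(required)
        self.decisions = {}  # approver -> (approved, comment)

    def decide(self, approver, approved, comment=""):
        """Record one approver's decision."""
        if approver not in self.required:
            raise ValueError(f"{approver} is not an approver for this workflow")
        self.decisions[approver] = (approved, comment)

    @property
    def status(self):
        # Assumed rule: a single rejection rejects the whole assessment.
        if any(not ok for ok, _ in self.decisions.values()):
            return "Rejected"
        if set(self.decisions) == self.required:
            return "Approved"
        return "Pending"

workflow = ApprovalWorkflow("AI Risk Assessment: Digital Twins for Clinical Trials")
workflow.decide("Operational Risk Management Committee", True)
status_after_first = workflow.status
workflow.decide("AI Governance Council", True)
status_after_second = workflow.status
```

The assessment stays in a pending state until every required party has responded, which matches the idea of routing it to all appropriate parties before it counts as approved.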

AI governance in healthcare: The key to building trust

AI governance in healthcare and life sciences ensures that AI systems operate with trust, transparency, and accountability, minimizing risks while maximizing their potential for improving patient care.

As seen from the digital twins for clinical trials use case, embedding governance and metadata-driven oversight throughout the AI lifecycle can reduce risks and biases, improve decision-making, and support regulatory compliance.

The use case also illustrated the role of a metadata control plane like Atlan in providing the foundation needed for building scalable, responsible, and AI-ready data ecosystems.

FAQs about AI governance in healthcare

What is AI governance in healthcare?

AI governance in healthcare refers to the framework that ensures the responsible, transparent, and ethical use of AI technologies in healthcare systems. It safeguards processes such as clinical decision-making, patient diagnostics, and research by promoting fairness, accountability, and safety. Proper governance ensures that AI models comply with regulations, mitigate biases, and operate with transparency, enhancing trust and patient outcomes.

Why is AI governance important for healthcare?

AI governance is crucial in healthcare because errors in AI-powered systems can lead to life-threatening consequences, especially in areas like diagnostics and treatment recommendations. Governance frameworks enforce transparency, compliance with regulations, and ethical use of data. By managing risks, promoting fairness, and ensuring accuracy, AI governance builds trust between healthcare providers and patients, fostering safer and more effective AI use in clinical workflows.

How does AI governance help reduce biases in healthcare AI models?

AI governance in healthcare ensures that AI models are trained on high-quality, unbiased, and diverse datasets, reducing the risks of biased medical decisions. Governance frameworks include data quality checks, fairness audits, and ongoing monitoring of AI outcomes to detect and mitigate biases. This leads to more equitable healthcare decisions and improves patient trust in AI-powered systems.

What are the key regulations AI governance must comply with in healthcare?

AI governance in healthcare must comply with global and regional regulations like the EU AI Act, HIPAA in the US, and FDA guidelines. These regulations mandate transparency, accountability, data privacy, and risk management. AI governance ensures that healthcare institutions adhere to these standards by embedding automated compliance checks, audit trails, and regular assessments, reducing the risk of regulatory violations.

How can healthcare organizations implement AI governance effectively?

Healthcare organizations can implement AI governance by establishing accountability frameworks, assessing regulatory risks, and ensuring data quality. This involves setting up leadership to oversee AI policies, cataloging AI use cases, and enhancing data governance with metadata management. By prioritizing fairness, transparency, and safety, organizations can mitigate risks and maximize the value of AI technologies in healthcare systems.

AI governance for healthcare: Related reads

- AI Governance: How to Mitigate Risks & Maximize Benefits

- AI Governance Framework: Why It’s Indispensable for Successful AI Projects

- Gartner on AI Governance: Importance, Issues, Way Forward

- Data Governance for AI

- AI Data Governance: Why Is It A Compelling Possibility?

- AI Data Catalog: It’s Everything You Hoped For & More

- 8 AI-Powered Data Catalog Workflows For Power Users

- GMLP: An Essential Guide for Medical Device Manufacturers in 2025

- ELVIS Act: What Is It & How To Ensure Compliance In 2025

- The EU AI Act: What does it mean for you?

- Colorado AI Act (CAIA): All You Need To Know To Ensure Compliance in 2025

- Transparent Automated Governance Act (TAG): Your 2025 Guide

- Data Readiness for AI: 4 Fundamental Factors to Consider

- Role of Metadata Management in Enterprise AI: Why It Matters

- HIPAA Compliance: Key Components, Rules & Standards

- CCPA Compliance: 7 Requirements to Become CCPA Compliant

- How to Comply With GDPR? 7 Requirements to Know!

- Benefits of GDPR Compliance: Protect Your Data and Business in 2025

- IDMP Compliance: It’s Key Elements, Requirements & Benefits

- Atlan AI for data exploration

- Atlan AI for lineage analysis

- Atlan AI for documentation
