AI Governance: How to Mitigate Risks & Maximize Business Benefits

AI governance ensures the secure, responsible, and accountable use of AI for decision-making.

The goal is to minimize risks (biases, unexpected outputs, hallucinations), while maximizing business benefits. As we rapidly steer toward a world where AI is your real-time, 24x7 digital assistant, AI governance is vital to build trust in the outcomes delivered by AI models.

This article explores the concept of AI governance, followed by its principles, benefits, framework, roles and responsibilities, and implementation. We’ll also look at emerging AI-centric regulations.

Table of contents

- What is AI governance?

- Why do we need AI governance?

- What is an AI governance framework?

- Who is responsible for AI governance?

- How to implement AI governance: Decide where to govern

- Summing up

- Related Reads

What is AI governance?

AI governance is not just a set of rules, but a crucial approach to defining guidelines, protocols, and policies that oversee the development, deployment, and use of AI systems. It ensures that AI is used in a way that delivers trustworthy, meaningful, and ethically sound outcomes.

AI governance is the cornerstone of responsible AI, i.e., making appropriate business and ethical choices when adopting AI.

As AI continues to evolve, so will the definitions of AI governance. Here’s how leading industry experts and tech companies currently define it:

What is AI governance? Gartner's view - Source: Gartner.

What is AI governance? IBM's view - Source: IBM.

What is AI governance? Snowflake's view - Source: Snowflake.

What is AI governance? UN AI advisory's view - Source: UN AI Advisory.

With AI governance, organizations can establish the necessary guardrails to harness the power of AI for decision-making while mitigating risks and ensuring the ethical use of this technology.

Anurag Raj, Sr Principal Analyst at Gartner, emphasizes the need to ensure that your organization’s core, genetic information is well governed before embarking on AI initiatives.

So, how can you ensure AI governance? Let’s look at some of the key elements dictating the principles of AI governance.

What are the principles of AI governance?

Financial intelligence corporation S&P Global identifies several common principles underpinning established AI governance practices:

- Human centrism and oversight

- Ethical and responsible use

- Transparency and explainability

- Accountability

- Privacy and data protection

- Safety, security, and reliability

Even though the principles of AI governance will continue to evolve as the use cases mature, the fundamentals will remain intact. These include transparency, accountability, privacy, security, fairness, and trustworthiness.

These principles ensure that AI systems don’t perpetuate biases, violate privacy or regulatory requirements, or produce adverse outcomes. Let’s explore the reasons behind adhering to them and establishing effective AI governance.

Why do we need AI governance?

AI systems generate value when fed relevant, accurate, well-defined data.

Durga Malladi, who represents Qualcomm at the World Economic Forum AI Governance Alliance, observes that, “AI models are trained on data, and that data needs the right level of curation.” The quality and relevance of data used to train AI models are central to their performance and trustworthiness.

However, ensuring quality and relevance requires governance at every stage of the AI lifecycle — training, fine-tuning, production, monitoring, etc. Neglecting governance at any stage can lead to inadequate or misleading outcomes and expose organizations to risks like data breaches.

Why do we need AI governance? - Source: Executive Order on AI Use from the White House, US.

That’s why we need AI governance. It sets standards for data quality, relevance, security, privacy, and bias mitigation. It promotes transparency, explainability, and accountability with comprehensive documentation, source-level lineage across systems, and granular access roles.

Dig deeper → Why does AI governance matter?

AI governance is also necessary to navigate the ever-evolving regulatory landscape surrounding AI systems. Compliance with these regulations is not just about avoiding penalties; it’s about upholding ethical standards and ensuring AI is used responsibly.

So, let’s look at the most common regulations that underscore the need for AI governance.

Policy initiatives and emerging regulations warranting AI governance

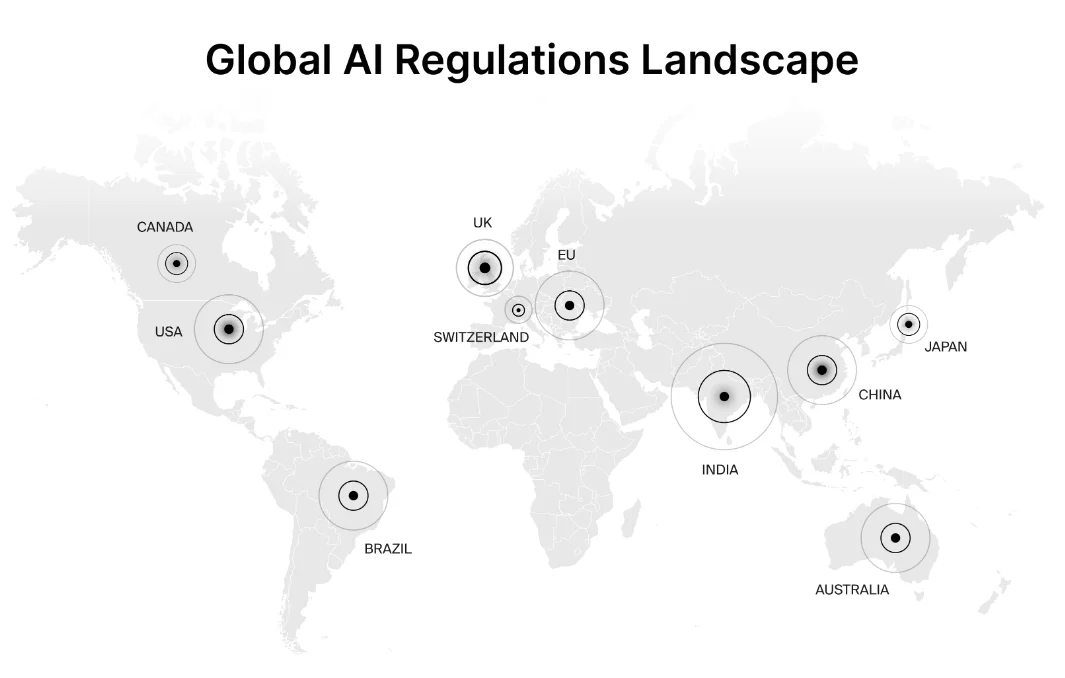

The rapid advancement of AI has spurred a wave of policy initiatives and regulations, making AI governance not just a necessity, but a pressing priority. Governments and organizations worldwide are recognizing the need to establish guardrails to ensure AI’s ethical and responsible use.

Let’s look at the EU’s AI Act, China’s Artificial Intelligence Development Plan, and the United States Executive Order issued by the White House.

Countries and regions where AI regulations are in the process of being developed - Source: Legal Nodes.

EU AI Act

The European Union is close to implementing the EU AI Act, the world’s first comprehensive AI law, which the EU Council approved in May 2024. Like GDPR, the act will also apply to non-EU companies providing AI services in Europe, setting a precedent.

The EU AI Act establishes clear rules and obligations for AI developers, categorizing AI systems based on their risk level. It aims to address concerns like bias, discrimination, and safety, while setting a global standard for responsible AI development and deployment.

Non-compliance can lead to hefty fines: as much as 35 million euros ($38 million) or 7% of a company’s annual global revenue, whichever is higher.
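To make the "whichever is higher" rule concrete, here is a minimal sketch that uses only the two figures quoted above. The function name `max_fine_eur` and the revenue inputs are hypothetical and chosen for illustration; actual penalties vary with the type of violation and the regulator's assessment.

```python
FIXED_AMOUNT_EUR = 35_000_000   # fixed amount quoted above
REVENUE_SHARE_PERCENT = 7       # share of annual global revenue quoted above


def max_fine_eur(annual_global_revenue_eur: int) -> float:
    """Return the upper bound of the fine: the higher of the two amounts."""
    revenue_based = annual_global_revenue_eur * REVENUE_SHARE_PERCENT / 100
    return max(float(FIXED_AMOUNT_EUR), revenue_based)
```

For a company with 1 billion euros in annual global revenue, the revenue-based amount (70 million euros) exceeds the fixed amount, so it sets the ceiling. For a company with 100 million euros in revenue, the fixed 35 million euros applies instead.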

Artificial Intelligence Development Plan (China)

China, too, is actively shaping the AI landscape with its Artificial Intelligence Development Plan. The Chinese State Council has issued a guideline on AI development, highlighting the need to “minimize risk and ensure the safe, reliable and controllable development of artificial intelligence.”

According to the law, Chinese regulators can conduct security assessments and impose penalties for violations.

US AI Executive Order

In the United States, a case-by-case approach is emerging through executive orders and legislative proposals.

For instance, the US AI Executive Order outlines eight guiding principles for the safe and responsible development and use of AI.

The specific compliance implications remain unclear (for now). However, companies developing dual-use foundation models and large-scale computing clusters must provide information and reports to the relevant authorities.

Additionally, numerous recommendations and guiding principles from global bodies, such as the G7 (Hiroshima AI Process), WEF’s AI Governance Alliance, and the UN’s AI Advisory interim report, have emerged — further enriching the global conversation on AI governance.

These initiatives foster international collaboration and contribute to developing a shared understanding of ethical and responsible AI practices.

Now that we’ve looked at the concepts, principles, and regulations on AI governance, let’s move on to the practical aspects — framework, accountability, and implementation guidelines.

What is an AI governance framework?

An AI governance framework is a defined structure with guidelines, protocols, processes, and rules that direct responsible and ethical development, deployment, and use of AI in enterprises.

How an AI governance framework can help - Source: World Economic Forum.

Your AI governance framework should embody the principles guiding your governance initiative, and establish guidelines that assure the AI readiness of your data assets. This ensures that AI systems are trained on reliable and unbiased data.

The framework should also outline guidelines for AI model development, testing, deployment, monitoring, and retraining.

Since there are several widely recognized AI governance frameworks available, you don’t have to reinvent the wheel. You can use any of the following as reference to adjust your existing data governance framework so that it extends to AI use cases:

- Gartner’s AI TRiSM (Trust, Risk, Security Management) framework: Recommends integrating governance by focusing on explainability, ModelOps, AI application security, and privacy

- NIST’s AI Risk Management Framework: Identifies how organizations can frame AI risks, outlines the characteristics of trustworthy AI systems, and suggests four functions — govern, map, measure, manage — to address AI risk

- Singapore’s Model AI Governance Framework: Offers guidance on internal governance structures, risk profile analysis, operations management, and stakeholder interaction and communication

- The AIGA AI Governance Framework (an academia-industry collaboration from Finland): Describes organizational practices and capabilities and the operational governance of AI systems, and lists 67 tasks to support organizational AI governance

Each framework listed above offers comprehensive guidance on everything from risk management and security to stakeholder communication and ethical considerations.

Executing the framework and enforcing AI governance requires assigning well-defined responsibilities to relevant stakeholders. So, let’s explore that further.

Who is responsible for AI governance?

Responsibility for AI governance is a shared endeavor within an organization, involving multiple stakeholders across different levels and functions.

The need for an AI steering committee - Source: World Economic Forum.

There are several ways to manage roles and responsibilities related to AI governance.

For instance, Gartner calls for setting up an AI CoE (Center of Excellence) to determine policies and procedures for implementing an AI governance framework. It also recommends leveraging business and technology leadership to “effectively assess the possibilities, power and perils of AI and GenAI.”

IBM, on the other hand, puts the onus on the CEO and senior leadership, with other functions assisting in the following manner:

- Legal and general counsel assess and mitigate legal risks, ensuring AI applications comply with relevant laws and regulations.

- Audit teams validate the data integrity of AI systems, confirming that the systems operate as intended without introducing errors or biases.

- The CFO oversees the financial implications, managing the costs associated with AI initiatives and mitigating any financial risks.

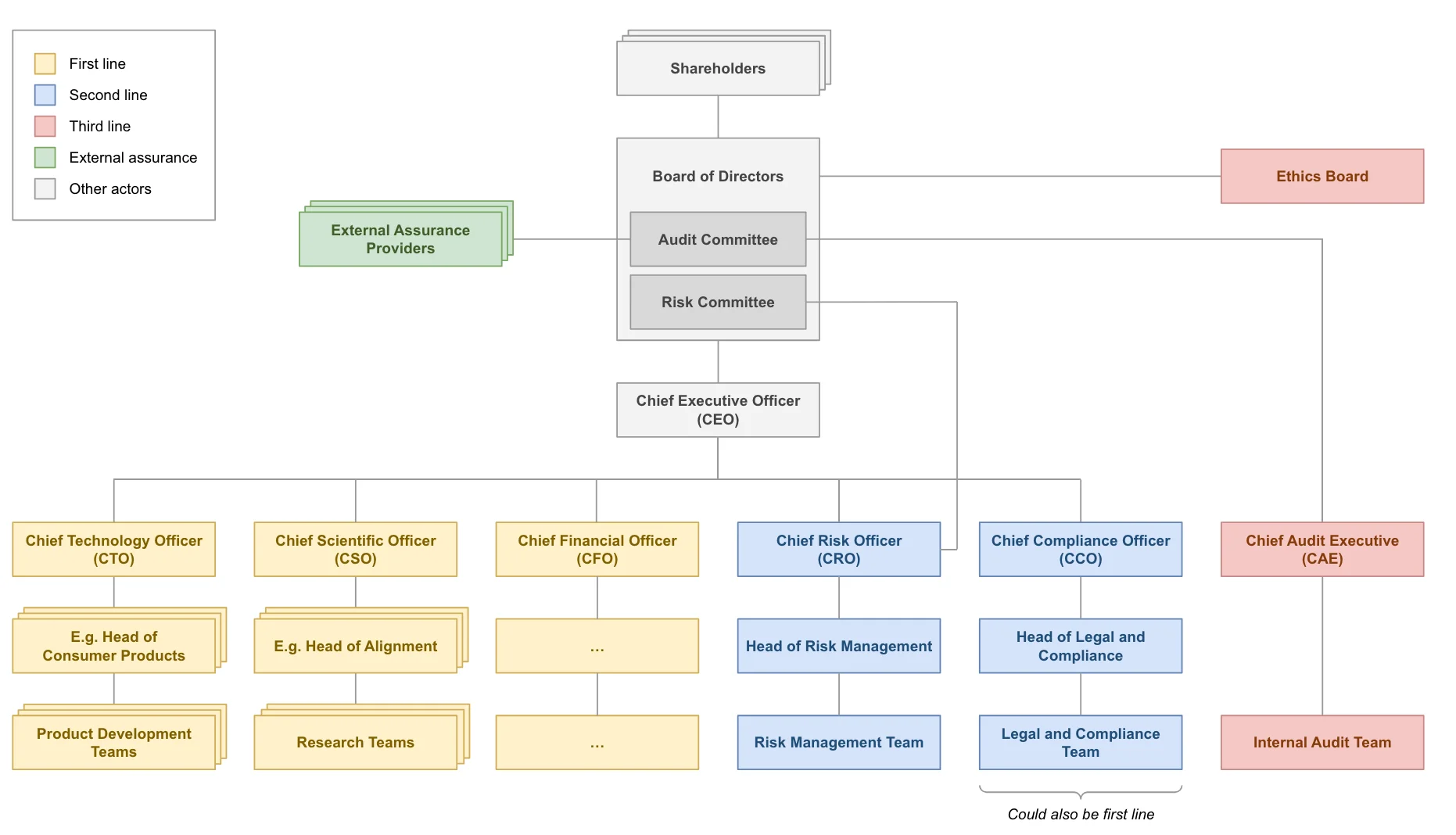

Meanwhile, the Center for the Governance of AI recommends a three lines of defense (3LoD) approach to oversee AI governance:

- The first line of defense — product owners, research teams, technology teams, and stewards — offers products and services to clients, corresponding to AI research and product development. The people involved also manage associated risks by setting up the necessary structures and processes.

- The second line of defense — risk, legal, and compliance teams — assists in risk management. The people involved are SMEs in risk management and compliance. They support the first line of defense, while monitoring risk management practices.

- The third line of defense — ethics board and audit teams — assesses the work of the first two lines and reports any shortcomings to the governing body. It’s responsible for providing independent assurance.

Responsibilities related to AI governance in an organization - Source: Center for the Governance of AI.

Successful AI governance depends on clearly defining roles and responsibilities, establishing robust oversight mechanisms, and fostering a culture of ethical AI use.

It also requires effective implementation, and one of the biggest concerns is deciding where to govern. So, let’s have a look.

How to implement AI governance: Decide where to govern

The most common challenges with AI governance are related to:

- Having the right skills to navigate AI governance implementation

- Ensuring transparency and explainability of AI models

- Getting clarity on AI’s business impact

- Adopting a production-first mentality

Dig deeper → Challenges in implementing AI governance: Gartner’s perspective

Some of these challenges can be overcome with specific initiatives. Addressing the skill gap, for instance, means setting up AI-centric training and awareness programs.

An example of bridging the skills gap in AI - Source: WEF.

However, an even bigger concern is deciding where to govern, since there are several factors involved:

- Data: Ensuring source-level lineage, security, privacy, and integrity of data used to train an AI model and then generate outcomes once it’s in production

- AI algorithm: Explaining how an AI algorithm functions, makes decisions, and ensures fairness

- AI model: Understanding the accuracy and reliability of the model, as well as mechanisms for monitoring the output and ensuring continuous improvement

- AI-powered application: Ensuring responsible and ethical use of the application, along with user trust and transparency

Additionally, assuring explainability, transparency, accuracy, or reliability is a complex problem, since each depends on multiple factors. Here’s how the AI Ethics Impact Group underlines this issue for transparency and explainability:

Multiple factors affect the transparency and explainability of AI - Source: AI Ethics Impact Group.

So, what’s the solution? Going back to the drawing board and embedding governance throughout the data and AI lifecycle — from data collection and processing to model deployment and beyond.

Embedding governance throughout the AI lifecycle

Embedding governance means making it a part of your code, workflows, and tools, which creates a holistic and proactive approach. You can embed governance in four ways:

- Shift-right: Embedding governance into BI tools that end users rely on for data analysis and reporting so that they can access the data they need and confidently use it

- Shift-left: Integrating governance into data producer workflows, like in dbt YAML files as data contracts or through CI/CD, so that they deliver trusted, accurate, and contextual data

- Shift-down: Connecting fragmented tools in your data stack through metadata, like standardized tags

Dig deeper → The role of metadata management in enterprise AI

- Shift-up: Bridging the gap between the data layer and the policy layer so that policies are embedded into the diverse workflows across the data estate

Read more → The active AI and data governance manifesto

This approach makes governance flexible, adaptive, and constantly evolving — building an AI-enabled future that works for everyone.
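As one illustration of the shift-left idea, governance rules can be expressed as a check that runs in a CI pipeline before a dataset is published. The sketch below is a minimal, hypothetical example in Python: the contract fields (`owner`, `description`, `tags`), the allowed tag set, and the function `check_contract` are assumptions made for illustration, not part of dbt or any specific tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical standardized tags shared across tools (the shift-down idea).
ALLOWED_TAGS = {"pii", "public", "internal", "ai-training"}


@dataclass
class ColumnContract:
    name: str
    description: str = ""
    tags: set[str] = field(default_factory=set)


@dataclass
class DatasetContract:
    name: str
    owner: str
    columns: list[ColumnContract]


def check_contract(contract: DatasetContract) -> list[str]:
    """Return governance violations; an empty list means the check passes."""
    violations = []
    if not contract.owner:
        violations.append(f"{contract.name}: missing owner")
    for col in contract.columns:
        if not col.description:
            violations.append(f"{contract.name}.{col.name}: missing description")
        unknown = col.tags - ALLOWED_TAGS
        if unknown:
            violations.append(
                f"{contract.name}.{col.name}: unknown tags {sorted(unknown)}"
            )
    return violations


if __name__ == "__main__":
    contract = DatasetContract(
        name="customers",
        owner="data-platform",
        columns=[
            ColumnContract("email", "Customer email address", {"pii"}),
            ColumnContract("segment", "", {"marketing"}),
        ],
    )
    for problem in check_contract(contract):
        print(problem)
```

In a CI job, a non-empty result would fail the build, so undocumented columns or non-standard tags are caught before the data reaches downstream AI use cases. In the sample above, the `segment` column is flagged twice: once for its missing description and once for the unknown `marketing` tag.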

Summing up: AI governance must be flexible, adaptable, and continuously evolving

Technology is neither good nor bad, nor is it neutral - Source: Dr. Melvin Kranzberg’s first law of technology.

AI systems can create useful business outcomes, but also discriminate or violate privacy laws — it depends on how we develop and use them. Since we design and program AI systems, they cannot be inherently neutral.

AI governance can help ensure that the outcomes are responsible, ethical, and compliant with existing (and future) data privacy, security, and usage laws.

It establishes clear guidelines, protocols, and policies throughout the AI lifecycle. However, to be effective, this requires collaboration among diverse stakeholders, including business teams, data engineers and scientists, legal teams, and more.

Organizations can leverage existing frameworks like Gartner’s AI TRiSM or NIST’s AI Risk Management Framework to embed governance into their workflows.

By proactively integrating governance within the daily lives of data practitioners, organizations can implement AI governance that’s flexible, adaptable, and constantly evolving alongside technology.

AI Governance: Related Reads

- Gartner on AI Governance: Importance, Issues, Way Forward

- Data Governance for AI

- AI Data Governance: Why Is It A Compelling Possibility?

- AI Governance for Healthcare: Benefits and Usecases

- AI Data Catalog: Its Everything You Hoped For & More

- 8 AI-Powered Data Catalog Workflows For Power Users

- Atlan AI for data exploration

- Atlan AI for lineage analysis

- Atlan AI for documentation
