Snowflake Polaris: Everything We Know About This Open-Source Technical Catalog


Snowflake Polaris (now known as Snowflake Open Catalog) is an interoperable, open-source technical catalog for Apache Iceberg™. You can use it to provide centralized, secure read and write access to Iceberg tables across different REST-compatible query engines.


This article explores the core capabilities, benefits, and commonly asked questions about Snowflake Polaris.

Table of contents

- What is Snowflake Polaris?

- Snowflake Open Catalog (formerly Snowflake Polaris): Core concepts

- What are the key capabilities of Snowflake Open Catalog (formerly Snowflake Polaris)?

- What are the benefits of Snowflake Open Catalog (formerly Snowflake Polaris)?

- Summing up

- Snowflake Polaris: Frequently Asked Questions (FAQs)

- Snowflake Polaris: Related reads

What is Snowflake Polaris?

Snowflake Open Catalog, previously called Snowflake Polaris, is a managed service for Apache Polaris™ (incubating). It is a technical catalog for Iceberg-specific use cases, helping organizations expose metadata about data assets, products, lineage, tags, policies, and more.

Engineers, developers, and architects can use Snowflake Open Catalog to read and write Iceberg tables from any REST-compatible query engine, including Apache Flink, Apache Spark, PyIceberg, Snowflake, and Trino.

Snowflake Open Catalog, a managed service for Apache Polaris™ - Source: Snowflake.
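
To make the REST-based access pattern concrete, here is a minimal PyIceberg sketch. Treat it as an illustration under stated assumptions rather than a reference setup: the helper names `open_catalog_properties` and `connect` are hypothetical, the account identifier, catalog name, and credentials are placeholders, and the endpoint URL shape and the `PRINCIPAL_ROLE:ALL` scope should be verified against Snowflake's documentation for your account.

```python
from typing import Dict


def open_catalog_properties(
    account_identifier: str,
    catalog_name: str,
    client_id: str,
    client_secret: str,
    principal_role: str = "ALL",
) -> Dict[str, str]:
    """Build PyIceberg REST catalog properties from placeholder inputs."""
    return {
        "type": "rest",
        # Assumed endpoint shape; confirm the exact URL for your account.
        "uri": f"https://{account_identifier}.snowflakecomputing.com/polaris/api/catalog",
        # OAuth client credentials of a service principal, as "id:secret".
        "credential": f"{client_id}:{client_secret}",
        # The catalog name is passed as the REST "warehouse" property.
        "warehouse": catalog_name,
        # Assumed scope format; narrows the token to a principal role.
        "scope": f"PRINCIPAL_ROLE:{principal_role}",
    }


def connect(properties: Dict[str, str]):
    """Open the catalog. Requires `pip install pyiceberg` and network access."""
    # Imported lazily so the property builder works without PyIceberg installed.
    from pyiceberg.catalog import load_catalog

    return load_catalog("open_catalog", **properties)


# Usage (not executed here, since it needs real credentials):
#   catalog = connect(open_catalog_properties(
#       "<account_identifier>", "<catalog_name>", "<client_id>", "<client_secret>"))
#   print(catalog.list_namespaces())
```

Other engines follow the same idea: they point their Iceberg REST catalog configuration at the same endpoint, so every engine sees the same tables without copying data.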

From Polaris Catalog to Snowflake Open Catalog: A brief history

On June 3, 2024, Snowflake announced Polaris Catalog, a vendor-neutral, open data catalog for Apache Iceberg. The goal was to offer organizations a higher degree of control, flexibility, and choice over their data in Iceberg tables.

On July 30, 2024, Snowflake open-sourced Polaris Catalog under the Apache 2.0 license.

On October 18, 2024, Polaris Catalog was rebranded as Snowflake Open Catalog and became generally available for Snowflake customers. It helps organizations integrate Iceberg tables with their existing data ecosystems through open standards and interoperability.

Apache Polaris™, the open-source technical catalog for Iceberg tables - Source: Snowflake.

Snowflake Open Catalog (formerly Snowflake Polaris): Core concepts

In Snowflake Open Catalog, you can create one or more catalog resources to organize Iceberg tables. The storage configuration can point to AWS S3, Azure storage, or GCS (Google Cloud Storage).

A catalog can either be:

- Internal: Managed by Open Catalog; its tables can be read and written in Open Catalog

- External: Managed by another Iceberg catalog provider (like Glue, Dremio Arctic, etc.), and its tables are synced with Open Catalog; these tables are read-only in Open Catalog

To logically group Iceberg tables within a catalog, you can create namespaces. Each catalog resource can have multiple namespaces. You can also create nested namespaces, if required.

Snowflake Open Catalog core concepts - Source: Snowflake Documentation.
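
The relationship between catalogs, namespaces, and tables can be sketched as a small in-memory model. This is a toy illustration, not how Open Catalog is implemented: the class names, the `add_namespace` and `create_table` methods, and the bucket paths are all hypothetical, and requiring a parent namespace to exist before creating a nested one is a simplifying choice made for this sketch.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Set, Tuple

# A namespace is a path of names: ("sales", "emea") stands for sales.emea.
Namespace = Tuple[str, ...]


class CatalogKind(Enum):
    INTERNAL = "internal"  # managed by Open Catalog: readable and writable
    EXTERNAL = "external"  # managed by another provider and synced: read-only


@dataclass
class Catalog:
    name: str
    kind: CatalogKind
    storage_location: str  # hypothetical base path in S3, Azure, or GCS
    tables: Dict[Namespace, Set[str]] = field(default_factory=dict)

    def add_namespace(self, namespace: Namespace) -> None:
        # Sketch-level rule: a nested namespace needs an existing parent.
        parent = namespace[:-1]
        if parent and parent not in self.tables:
            raise KeyError(f"parent namespace {'.'.join(parent)} does not exist")
        self.tables.setdefault(namespace, set())

    def create_table(self, namespace: Namespace, table: str) -> None:
        # External catalogs are read-only from Open Catalog's point of view.
        if self.kind is CatalogKind.EXTERNAL:
            raise PermissionError(f"catalog {self.name} is external and read-only")
        self.tables[namespace].add(table)


internal = Catalog("analytics", CatalogKind.INTERNAL, "s3://example-bucket/analytics")
internal.add_namespace(("sales",))
internal.add_namespace(("sales", "emea"))  # nested namespace
internal.create_table(("sales", "emea"), "orders")
```

Calling `create_table` on a catalog built with `CatalogKind.EXTERNAL` raises `PermissionError` in this model, mirroring the read-only behavior described for external catalogs.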

Access control in Open Catalog

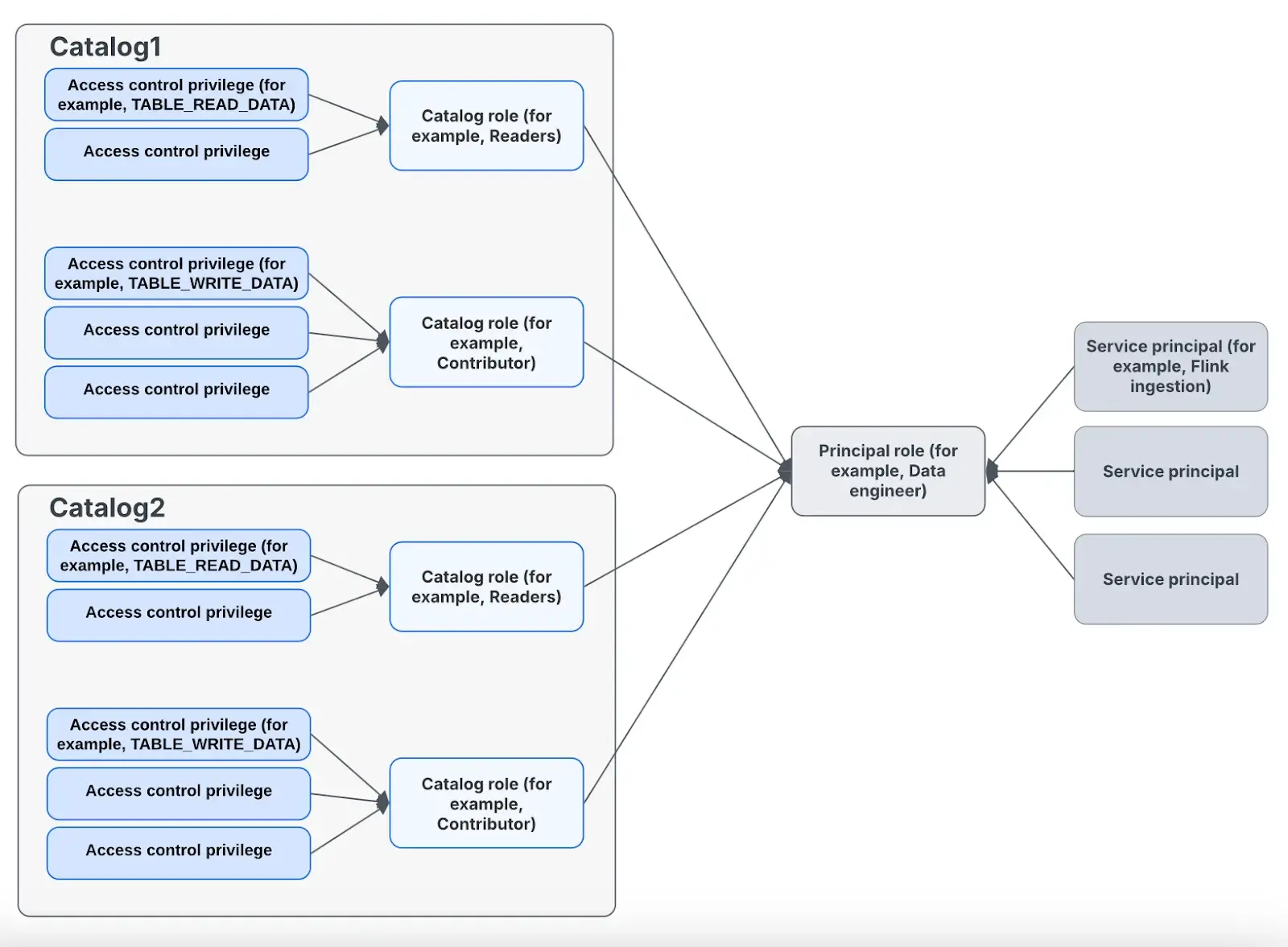

Open Catalog uses role-based access control (RBAC) to manage access to its resources.

For each catalog, the catalog admin assigns access privileges to catalog roles (admins, readers, contributors, etc.). The admin then grants service principals (entities that you can create in Open Catalog) access to resources by assigning catalog roles to principal roles.

A principal role is a resource used to logically group Open Catalog service principals together and grant privileges. Examples include data engineer, data scientist, etc.

Note: Open Catalog supports a many-to-one relationship between service principals and principal roles.

Access control in Snowflake Open Catalog - Source: Snowflake Documentation.
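
The chain described above (privileges on catalog roles, catalog roles assigned to principal roles, principal roles held by service principals) can be sketched as follows. This is a simplified model for intuition only: the class names and the `can` method are hypothetical, the privilege strings are illustrative labels, and each service principal holds a single principal role to follow the many-to-one note above.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class CatalogRole:
    name: str
    catalog: str  # the catalog this role is scoped to
    privileges: Set[str] = field(default_factory=set)


@dataclass
class PrincipalRole:
    name: str
    catalog_roles: Dict[str, CatalogRole] = field(default_factory=dict)

    def grant(self, role: CatalogRole) -> None:
        # Key by catalog and role name so roles from different catalogs coexist.
        self.catalog_roles[f"{role.catalog}.{role.name}"] = role


@dataclass
class ServicePrincipal:
    name: str
    principal_role: PrincipalRole  # one role per principal in this sketch

    def can(self, privilege: str, catalog: str) -> bool:
        # Access is resolved through the principal role's catalog roles.
        return any(
            role.catalog == catalog and privilege in role.privileges
            for role in self.principal_role.catalog_roles.values()
        )


# Illustrative privilege labels; check the documentation for the real list.
reader = CatalogRole("reader", "analytics", {"TABLE_READ_DATA"})
contributor = CatalogRole("contributor", "analytics", {"TABLE_READ_DATA", "TABLE_WRITE_DATA"})

data_scientist = PrincipalRole("data_scientist")
data_scientist.grant(reader)
data_engineer = PrincipalRole("data_engineer")
data_engineer.grant(contributor)

notebook_app = ServicePrincipal("notebook_app", data_scientist)
etl_pipeline = ServicePrincipal("etl_pipeline", data_engineer)
```

In this model, `notebook_app` can read but not write tables in the `analytics` catalog, while `etl_pipeline` can do both. Neither has any access to a catalog whose roles were never granted to its principal role.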

What are the key capabilities of Snowflake Open Catalog (formerly Snowflake Polaris)?

Snowflake Open Catalog offers a range of features that enhance data management for Iceberg tables. These include:

- Cross-engine interoperability: You can read and write from any REST-compatible engine (Apache Flink, Apache Spark, Trino, and Snowflake), eliminating the need to move or copy data for different engines and catalogs.

- Centralized data management: You can manage Iceberg tables for all users and engines from one location. As a result, diverse data teams can modify tables concurrently, run queries to analyze the data in those tables, and more.

- Vendor-neutral flexibility: You can self-host Apache Polaris in your own infrastructure or use Snowflake Open Catalog as a managed service. You can retain namespaces, privileges, and table definitions even when switching infrastructure, ensuring continuity and avoiding vendor lock-in.

- Role-based access control (RBAC): Open Catalog provides fine-grained access management through role-based permissions. As mentioned earlier, it uses service principals and principal roles to assign and manage access, catering to specific user needs like data engineers or scientists.

- Cloud-native storage integration: It supports integration with AWS S3, Azure Storage, and Google Cloud Storage for storage configuration and access.

What are the benefits of Snowflake Open Catalog (formerly Snowflake Polaris)?

The top benefits of using Snowflake Open Catalog as a technical catalog for your Iceberg tables are:

- Better collaboration: With centralized access and concurrent table modifications, your teams can work seamlessly across platforms, improving productivity and reducing delays.

- Flexibility and control: Its vendor-agnostic design gives organizations the freedom to choose their infrastructure while retaining control over their data.

- Operational efficiency: By enabling cross-engine interoperability, Open Catalog reduces the need for duplicating or transferring data, saving time and resources.

- Future-ready data management: Snowflake Open Catalog’s support for open standards and modern cloud platforms ensures its relevance in evolving data ecosystems.

- Integration with a metadata control plane like Atlan: Snowflake Open Catalog simplifies the ingestion of Iceberg metadata into Atlan, establishing it as a single pane of glass for managing and curating all types of metadata. This centralized approach fosters collaboration and governance, supporting data and AI initiatives across teams.

Read more → Polaris Catalog + Atlan: Better Together

Summing up

Snowflake Open Catalog helps organizations manage Iceberg tables by providing centralized, secure, and flexible access across multiple platforms. Its commitment to open standards and interoperability with metadata control planes like Atlan makes it a key player in modern data ecosystems.

Snowflake Polaris: Frequently Asked Questions (FAQs)

1. What is Snowflake Polaris? And what is Snowflake Open Catalog?

Snowflake Polaris is now known as Snowflake Open Catalog. Snowflake Open Catalog is a managed service for Apache Polaris™. Apache Polaris™ is an open-source, fully-featured catalog for Apache Iceberg™ tables.

Note: “Polaris” refers to the open-source project, while “Open Catalog” is Snowflake’s specific service built on top of it.

2. Is Polaris part of the Apache ecosystem?

Apache Polaris is currently undergoing incubation at the Apache Software Foundation. It is a technical catalog created and then open-sourced by Snowflake for managing Apache Iceberg™ tables.

“Incubation is required of all newly accepted projects until a further review indicates that the infrastructure, communications, and decision making process have stabilized in a manner consistent with other successful ASF projects.” - Apache Incubator

3. Is Snowflake Open Catalog (previously Snowflake Polaris) generally available?

Snowflake Open Catalog (previously known as Snowflake Polaris) has been generally available since October 18, 2024.

4. How much does Snowflake Open Catalog cost?

You can try Snowflake Open Catalog for free for 30 days. When your trial period ends, you can retain access to your Open Catalog account by signing up for Snowflake.

“We’ve added billing support for Open Catalog, but you can use Open Catalog for free until April 30, 2025.” - Snowflake Release Notes, October 2024

Snowflake Polaris: Related reads

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- Snowflake Data Governance — Features & Frameworks

- Snowflake Data Cloud Summit 2024: Get Ready and Fit for AI

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- Snowflake Data Lineage: A Step-by-Step How to Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Databricks Unity Catalog: A Comprehensive Guide to Features, Capabilities, Architecture

- Data Catalog for Databricks: How To Setup Guide
