How to Set Up a Data Catalog for Snowflake in 2025: A Complete Guide


Snowflake data catalog setup involves operationalizing its metadata layer to drive data search and discovery, access governance, self-service analytics, and more.

You can start with Snowflake’s technical data dictionary, exposed through the ACCOUNT_USAGE and INFORMATION_SCHEMA views, which provide metadata about objects, query history, usage patterns, and governance policies.


For built-in search and governance, Snowflake Horizon Catalog surfaces data products, tags, classifications, and usage insights directly within the Snowflake UI.

To catalog metadata across systems like dbt, AWS Glue, Looker, or Airflow, you need a unified control plane like Atlan that automatically consolidates metadata from Snowflake and beyond.

In this article, you’ll explore:

- Essential prerequisites for Snowflake data catalog setup

- A step-by-step breakdown of the setup process, including managing roles and permissions and addressing key challenges

- The role of a metadata-led control plane like Atlan in enhancing data discovery and trust

- How leading companies across industries are unlocking the full potential of their data estate using the Snowflake-Atlan integration

Table of contents #

- Does Snowflake enable data cataloging?

- What are the prerequisites for Snowflake data catalog setup?

- How can you set up a data catalog for Snowflake?

- Why set up an external data catalog like Atlan for Snowflake?

- How does a metadata-led control plane like Atlan enhance Snowflake data cataloging?

- How can you deploy Atlan for Snowflake?

- How the Snowflake and Atlan integration benefits customers across industries

- Snowflake data catalog setup: Bottom line

- Snowflake data catalog setup: Frequently asked questions (FAQs)

- Snowflake data catalog setup: Related reads

Does Snowflake enable data cataloging? #

Yes, Snowflake exposes rich technical metadata through its INFORMATION_SCHEMA and ACCOUNT_USAGE views. These allow users to monitor schema objects, track query behavior, and audit data access.

Snowflake Horizon Catalog provides an interface for browsing Snowflake assets and tags, governing those assets, and more, directly within the Snowflake UI.

What are the business outcomes of cataloging Snowflake data? #

Setting up a data catalog for Snowflake helps your business derive more value from its data by allowing you to:

- Search and discover all your data assets in Snowflake using a visual interface; some catalogs, like Atlan, will also have a rich IDE for you to work with your data.

- Tag, classify, and govern your data assets and enrich them with business context — all from a central location.

- Do more with your data with add-on features like data lineage, data quality & profiling, and observability.


What are the prerequisites for Snowflake data catalog setup? #

When setting up a data catalog for Snowflake, you’ll need to tick a few networking, infrastructure, and security checkboxes:

- Reachability: Make sure the data catalog can reach Snowflake. The two may run in different networks, so you might need to set up VPNs, peering connections, network ACLs (NACLs), and similar controls.

- Encryption: The data dictionary offers deep insight into your application and system design, so make sure metadata is fetched from your Snowflake account over encrypted connections and stored securely in the catalog.

- Infrastructure: Ensure your data catalog has enough compute and memory to address data crawling, previewing, and querying operations.

How can you set up a data catalog for Snowflake? #

To fetch the metadata necessary to build a data catalog for Snowflake, you’ll need to go through the following steps:

- Create a database role in Snowflake

- Create a database user

- Identify the Snowflake databases, schemas, and objects that you want to crawl

- Assign relevant permissions to the role

- Assign the role to the database user

- Start crawling data using a manual or a scheduled run

Let’s explore the specifics.

Step 1: Create a database role in Snowflake #

Every database and data warehouse implements namespaces, roles, and permissions differently, so the steps to set up a data catalog vary from platform to platform.

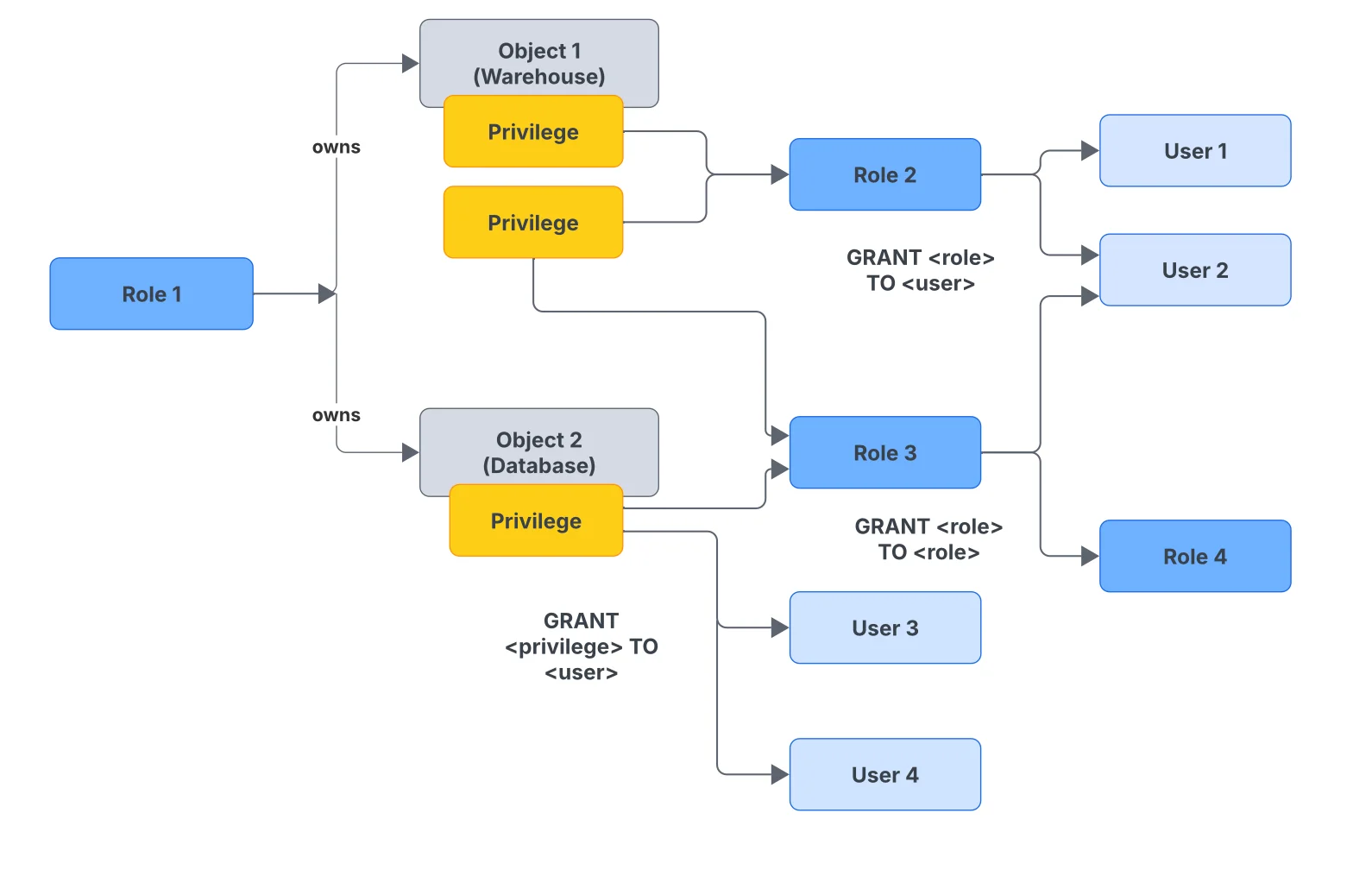

Snowflake has three access control models (DAC, RBAC and UBAC) that work side-by-side to provide a very flexible and granular control structure for your data.

Here’s an illustration explaining how they work. Role 1 owns Object 1 and Object 2, demonstrating Discretionary Access Control (DAC), where object owners grant privileges.

Role 2 receives privileges on Object 1 and is assigned to User 1 and User 2, illustrating Role-Based Access Control (RBAC).

Meanwhile, User 3 and User 4 are granted privileges directly on Object 2, showcasing User-Based Access Control (UBAC). Snowflake considers privileges granted directly to users only when secondary roles are set to ALL (USE SECONDARY ROLES ALL).

Access control in Snowflake using DAC, RBAC and UBAC - Source: Snowflake Documentation.

Here’s how you create a role that has OPERATE and USAGE permissions on a Snowflake warehouse:

CREATE OR REPLACE ROLE data_catalog_role;

GRANT OPERATE, USAGE ON WAREHOUSE "<warehouse-name>" TO ROLE data_catalog_role;

Granting OPERATE on a warehouse lets the role view all queries previously run or currently running on it and abort running queries. It also lets the role start, stop, suspend, and resume the warehouse. Review your grants to make sure they aren’t overly permissive for a data catalog.
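If you script this setup instead of typing it into a worksheet, a small generator keeps identifiers consistent across steps. The Python sketch below is illustrative only, and its function names are hypothetical. It deliberately swaps the guide’s CREATE OR REPLACE for CREATE ROLE IF NOT EXISTS, so that re-running the script does not recreate an existing role and lose its grants. It also double-quotes identifiers (doubling any embedded quotes), which makes them case-sensitive, so pass names in uppercase if they should match unquoted identifiers such as data_catalog_role.

```python
from __future__ import annotations


def quote_ident(name: str) -> str:
    """Wrap a Snowflake identifier in double quotes, doubling any embedded quotes."""
    return '"' + name.replace('"', '""') + '"'


def role_setup_sql(role: str, warehouse: str) -> list[str]:
    """Return Step 1 statements: create the catalog role and grant warehouse access."""
    r = quote_ident(role)
    return [
        # IF NOT EXISTS (instead of OR REPLACE) keeps re-runs from recreating the role.
        f"CREATE ROLE IF NOT EXISTS {r};",
        # OPERATE lets the role change the warehouse state and see/abort its queries;
        # keep only USAGE if that is more than your catalog needs.
        f"GRANT OPERATE, USAGE ON WAREHOUSE {quote_ident(warehouse)} TO ROLE {r};",
    ]


if __name__ == "__main__":
    # Hypothetical names; replace with your own.
    for statement in role_setup_sql("DATA_CATALOG_ROLE", "CATALOG_WH"):
        print(statement)
```

The printed statements can be pasted into a Snowsight worksheet or run through whichever client you already use.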

Step 2: Create a database user #

Once you’ve created a database role, create a database user and attach the role to it. The user can authenticate in one of three ways: with a password, a public key, or SSO.

# Method 1: With password

CREATE USER data_catalog_user PASSWORD='<password>' DEFAULT_ROLE=data_catalog_role DEFAULT_WAREHOUSE='<warehouse_name>' DISPLAY_NAME='<display_name>';

# Method 2: With public key

CREATE USER data_catalog_user RSA_PUBLIC_KEY='<rsa_public_key>' DEFAULT_ROLE=data_catalog_role DEFAULT_WAREHOUSE='<warehouse_name>' DISPLAY_NAME='<display_name>';

With SSO, Snowflake supports two authentication methods: browser-based SSO and native (programmatic) SSO, which is available only for Okta.
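For Method 2, Snowflake expects the RSA_PUBLIC_KEY value without the PEM delimiters. The sketch below assumes you have already generated a key pair with a tool such as OpenSSL and that the public key uses the standard BEGIN/END PUBLIC KEY PEM format. It strips the delimiters and line breaks and checks that the remaining body is valid base64 before building the statement. The helper names are hypothetical.

```python
from __future__ import annotations

import base64


def pem_to_snowflake_key(pem: str) -> str:
    """Return the base64 body of a PEM public key, without delimiters or line breaks."""
    lines = [line.strip() for line in pem.strip().splitlines() if line.strip()]
    if (
        len(lines) < 3
        or lines[0] != "-----BEGIN PUBLIC KEY-----"
        or lines[-1] != "-----END PUBLIC KEY-----"
    ):
        # Also rejects a private key pasted by mistake.
        raise ValueError("expected a PEM public key with BEGIN/END PUBLIC KEY lines")
    body = "".join(lines[1:-1])
    # binascii.Error (a ValueError subclass) is raised if the body is not valid base64.
    base64.b64decode(body, validate=True)
    return body


def create_user_sql(user: str, role: str, warehouse: str, pem: str) -> str:
    """Build the Method 2 CREATE USER statement from a PEM public key."""
    key = pem_to_snowflake_key(pem)
    return (
        f"CREATE USER {user} RSA_PUBLIC_KEY='{key}' "
        f"DEFAULT_ROLE={role} DEFAULT_WAREHOUSE='{warehouse}';"
    )


if __name__ == "__main__":
    # Placeholder key body for illustration; read your real public key file instead.
    demo_pem = "-----BEGIN PUBLIC KEY-----\nQUJD\nREVG\n-----END PUBLIC KEY-----\n"
    print(create_user_sql("data_catalog_user", "data_catalog_role", "CATALOG_WH", demo_pem))
```

Only the public key goes into Snowflake; the private key stays with the data catalog’s connector configuration.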

Step 3: Identify the Snowflake databases, schemas, and objects that you want to crawl #

The process of data movement, cleaning, and transformation is often very messy. You end up creating a lot of playground databases and schemas for running internal experiments and testing queries.

Ideally, all of this lives in a development environment, but that’s not always the case. So, decide upfront which databases and schemas the data catalog should fetch data assets from.

Before making the initial connection, explore your data assets by using commands like SHOW DATABASES, SHOW SCHEMAS, SHOW OBJECTS, SHOW TABLES, and so on. You can alternatively query the INFORMATION_SCHEMA directly.

Often database and object names have a prefix that signifies a stage in the data pipeline or a level of maturity of the data they contain. You can use these indicators to finalize the assets you want to fetch.
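When names follow a prefix convention, a short filter over the output of SHOW DATABASES (or SHOW SCHEMAS) can produce a candidate list to vet. The prefixes below are hypothetical placeholders for your own conventions, and an exclude prefix always wins over an include prefix.

```python
from __future__ import annotations

from typing import Iterable, List, Sequence

# Hypothetical naming convention; replace with the prefixes your team actually uses.
INCLUDE_PREFIXES = ("RAW_", "STAGING_", "ANALYTICS_")
EXCLUDE_PREFIXES = ("SANDBOX_", "TMP_", "DEV_")


def select_for_crawl(
    names: Iterable[str],
    include: Sequence[str] = INCLUDE_PREFIXES,
    exclude: Sequence[str] = EXCLUDE_PREFIXES,
) -> List[str]:
    """Keep names that start with an include prefix and no exclude prefix (case-insensitive)."""
    inc = tuple(p.upper() for p in include)
    exc = tuple(p.upper() for p in exclude)
    selected = [
        name
        for name in names
        if name.upper().startswith(inc) and not name.upper().startswith(exc)
    ]
    return sorted(selected)


if __name__ == "__main__":
    # For example, the "name" column returned by SHOW DATABASES.
    databases = ["ANALYTICS_PROD", "SANDBOX_ALICE", "RAW_EVENTS", "TMP_LOAD", "SNOWFLAKE"]
    print(select_for_crawl(databases))
```

The result is a starting point for review, not a final decision; names that match no prefix at all (such as SNOWFLAKE here) are left out and are worth a manual look.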

Vet the list of databases, schemas, and objects to be crawled because, in the next step, you’ll grant read permissions on them to your data_catalog_role so that the data catalog can start fetching metadata from your Snowflake account.

Step 4: Assign relevant permissions to the role #

There are two levels of access you can grant to the data_catalog_role, and your choice should depend on the capabilities of your data catalog. Decide whether you want to:

- Catalog data assets and view structural metadata

- Catalog data assets, view structural metadata, preview data, and run queries against data assets.

For metadata, Snowflake offers two schemas in the shared SNOWFLAKE database, ACCOUNT_USAGE and READER_ACCOUNT_USAGE, in addition to the INFORMATION_SCHEMA that exists in every database.

Grant access for crawling metadata #

Using the ACCOUNT_USAGE schema, the data catalog reads views such as DATABASES, SCHEMATA, TABLES, VIEWS, COLUMNS, and PIPES, so you’ll need to grant the data_catalog_role access to them.

One way to do this is to grant one or more of the SNOWFLAKE database roles that Snowflake provides for ACCOUNT_USAGE:

- OBJECT_VIEWER

- USAGE_VIEWER

- GOVERNANCE_VIEWER

- SECURITY_VIEWER

Similarly, to use the READER_ACCOUNT_USAGE schema for fetching the query history, login history, and other usage data of your reader accounts, you can grant the READER_USAGE_VIEWER database role to your data_catalog_role.
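If you go the database-role route, the grant statements follow a fixed pattern. This sketch generates them and rejects any name outside the roles listed in this guide. It assumes the roles are granted as SNOWFLAKE database roles (GRANT DATABASE ROLE SNOWFLAKE.<name> TO ROLE ...), and the function name is hypothetical.

```python
from __future__ import annotations

from typing import List, Sequence

# SNOWFLAKE database roles named in this guide.
KNOWN_ROLES = {
    "OBJECT_VIEWER",
    "USAGE_VIEWER",
    "GOVERNANCE_VIEWER",
    "SECURITY_VIEWER",
    "READER_USAGE_VIEWER",
}


def snowflake_db_role_grants(target_role: str, roles: Sequence[str]) -> List[str]:
    """Return one GRANT DATABASE ROLE statement per requested SNOWFLAKE database role."""
    requested = [r.upper() for r in roles]
    unknown = sorted(set(requested) - KNOWN_ROLES)
    if unknown:
        raise ValueError(f"not a SNOWFLAKE database role from this guide: {unknown}")
    return [
        f"GRANT DATABASE ROLE SNOWFLAKE.{r} TO ROLE {target_role};"
        for r in dict.fromkeys(requested)  # de-duplicate while keeping order
    ]


if __name__ == "__main__":
    for statement in snowflake_db_role_grants("data_catalog_role", ["OBJECT_VIEWER", "USAGE_VIEWER"]):
        print(statement)
```

Granting IMPORTED PRIVILEGES on the SNOWFLAKE database is the coarser alternative: it opens all ACCOUNT_USAGE views to the role at once, whereas the database roles let you pick narrower slices.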

Given that using INFORMATION_SCHEMA is the most common and extensive method for crawling metadata, let’s look at it in more detail.

Using the INFORMATION_SCHEMA, you can use the following GRANT statements to provide crawling access for current data assets to the data_catalog_role:

GRANT USAGE ON DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT USAGE ON ALL SCHEMAS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON ALL TABLES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON ALL EXTERNAL TABLES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON ALL VIEWS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON ALL MATERIALIZED VIEWS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT SELECT ON ALL STREAMS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT MONITOR ON PIPE "<pipe_name>" TO ROLE data_catalog_role;

The GRANT statements above give the data_catalog_role the privileges to fetch metadata for all existing data assets. To also fetch metadata for assets created later, you’ll need a second set of GRANT statements that use the FUTURE keyword, as shown below:

GRANT USAGE ON FUTURE SCHEMAS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON FUTURE TABLES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON FUTURE EXTERNAL TABLES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON FUTURE VIEWS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT REFERENCES ON FUTURE MATERIALIZED VIEWS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT SELECT ON FUTURE STREAMS IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT MONITOR ON FUTURE PIPES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

If you stop here, you’ll be able to list all data assets in the <database_name> database, but you won’t be able to preview or query the data from your data catalog.
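Because the crawling grants repeat the same pattern for existing (ALL) and FUTURE objects, it can help to generate them per database. This Python sketch mirrors the statements above, including the per-pipe MONITOR grant for existing pipes. The function name is hypothetical, and pipe names are passed through unquoted so you can supply fully qualified names.

```python
from __future__ import annotations

from typing import List, Sequence

# Object types that get REFERENCES (structure visible, data not readable).
REFERENCE_TYPES = ("TABLES", "EXTERNAL TABLES", "VIEWS", "MATERIALIZED VIEWS")


def crawl_grants(database: str, role: str, pipes: Sequence[str] = ()) -> List[str]:
    """Mirror the guide's crawl grants for one database, for existing (ALL) and FUTURE objects."""
    db = '"' + database.replace('"', '""') + '"'
    stmts = [f"GRANT USAGE ON DATABASE {db} TO ROLE {role};"]
    for scope in ("ALL", "FUTURE"):
        stmts.append(f"GRANT USAGE ON {scope} SCHEMAS IN DATABASE {db} TO ROLE {role};")
        for obj in REFERENCE_TYPES:
            stmts.append(f"GRANT REFERENCES ON {scope} {obj} IN DATABASE {db} TO ROLE {role};")
        stmts.append(f"GRANT SELECT ON {scope} STREAMS IN DATABASE {db} TO ROLE {role};")
    # Existing pipes are granted one by one (pass fully qualified names);
    # new pipes are covered by the FUTURE grant.
    stmts.extend(f"GRANT MONITOR ON PIPE {pipe} TO ROLE {role};" for pipe in pipes)
    stmts.append(f"GRANT MONITOR ON FUTURE PIPES IN DATABASE {db} TO ROLE {role};")
    return stmts


if __name__ == "__main__":
    # Hypothetical database and pipe names.
    for statement in crawl_grants("ANALYTICS_PROD", "data_catalog_role", ["ANALYTICS_PROD.PUBLIC.EVENTS_PIPE"]):
        print(statement)
```

One caveat before relying on database-level FUTURE grants: if a schema already defines its own future grants for an object type, Snowflake applies the schema-level grants and ignores the database-level ones for that schema. Running SHOW FUTURE GRANTS IN SCHEMA <schema_name> helps you spot such cases.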

Grant access for previewing and querying data #

Many data catalogs, such as Atlan, provide you with an integrated IDE so that you can preview the data and even work on it by writing queries, saving them, and sharing them with your team.

You can allow access to previewing and querying data from your data catalog by running the same statements that you ran in the previous step with a minor difference. Instead of GRANT REFERENCES, you’ll need to GRANT SELECT on TABLES, EXTERNAL TABLES, VIEWS, and MATERIALIZED VIEWS.

As with the crawling grants, you need two sets of statements: one for existing data assets and one for future data assets.

Here’s an example of granting SELECT on TABLES, present and future:

GRANT SELECT ON ALL TABLES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

GRANT SELECT ON FUTURE TABLES IN DATABASE "<database_name>" TO ROLE data_catalog_role;

Now, you should be able to preview and query your Snowflake data from the data catalog.
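The preview grants follow the same ALL/FUTURE pairing, so a generator works here too. This hypothetical helper emits SELECT grants on the four object types named above, for both existing and future objects; the first two lines it produces match the TABLES example.

```python
from __future__ import annotations

from typing import List

# Object types that need SELECT for previewing and querying data.
SELECT_TYPES = ("TABLES", "EXTERNAL TABLES", "VIEWS", "MATERIALIZED VIEWS")


def preview_grants(database: str, role: str) -> List[str]:
    """SELECT grants for previewing/querying data: existing (ALL) and FUTURE objects."""
    db = '"' + database.replace('"', '""') + '"'
    return [
        f"GRANT SELECT ON {scope} {obj} IN DATABASE {db} TO ROLE {role};"
        for obj in SELECT_TYPES
        for scope in ("ALL", "FUTURE")
    ]


if __name__ == "__main__":
    # Hypothetical database name.
    for statement in preview_grants("ANALYTICS_PROD", "data_catalog_role"):
        print(statement)
```

Keeping the crawl and preview generators separate makes it easy to grant only REFERENCES to a catalog that should never read row-level data.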

Consider granting other relevant privileges #

There are many other privileges you can grant to the data_catalog_role; which ones you need depends on the capabilities of your data catalog.

For example, if your data catalog has data lineage capabilities, you might need to grant broader privileges, such as IMPORTED PRIVILEGES on the SNOWFLAKE database, which gives the role access to the ACCOUNT_USAGE views, including query history.

Step 5: Assign the role to the database user #

Once you’re done assigning all the relevant permissions to the role, assign the role to the data_catalog_user using the following GRANT statement:

GRANT ROLE data_catalog_role TO USER data_catalog_user;

You should now be ready to connect to your Snowflake account from your data catalog.

Step 6: Start crawling data using a manual or a scheduled run #

Once you’ve run the GRANT statements, you can test whether your data catalog can fetch metadata from Snowflake. To configure the Snowflake connector in your data catalog, provide it with the credentials of the data_catalog_user.

Most connectors can test the connection before you go on to the next step and start crawling the data from your Snowflake database. If you fail to connect to your Snowflake warehouse, you’ll probably need to check if any networking or security considerations were missed. You can use the SnowCD (Snowflake Connectivity Diagnostic) tool to evaluate your network connectivity.

Once you resolve any connectivity issues, you can start crawling data. Most data catalogs provide you with an option to run the crawler in three different ways:

- Ad-hoc crawl (manual crawl using a CLI command or the data catalog console)

- Scheduled crawl (E.g., based on a cron expression)

- Event-based crawl (E.g., crawl triggered from an event that the data catalog can listen to)
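A scheduled crawl does not have to reprocess everything on every run. One possible approach, sketched below under stated assumptions, is to snapshot the LAST_ALTERED column of INFORMATION_SCHEMA.TABLES on each run and compare it with the previous snapshot to find added, changed, and removed tables. The query and the diff helper are illustrative and hypothetical; they are not how any particular catalog implements crawling.

```python
from __future__ import annotations

from datetime import datetime
from typing import Dict, List, Tuple

# Query a scheduled crawl could run per database to build a snapshot
# (replace <database_name>); each row gives a fully qualified name and LAST_ALTERED.
SNAPSHOT_QUERY = """
SELECT table_catalog || '.' || table_schema || '.' || table_name AS fqn,
       last_altered
FROM <database_name>.INFORMATION_SCHEMA.TABLES
WHERE table_schema <> 'INFORMATION_SCHEMA'
"""

Snapshot = Dict[str, datetime]  # fully qualified table name -> LAST_ALTERED


def diff_snapshots(previous: Snapshot, current: Snapshot) -> Tuple[List[str], List[str], List[str]]:
    """Return (added, changed, removed) table names between two crawl snapshots."""
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    changed = sorted(
        name for name in current.keys() & previous.keys()
        if current[name] > previous[name]
    )
    return added, changed, removed


if __name__ == "__main__":
    earlier, later = datetime(2025, 1, 6), datetime(2025, 1, 7)
    before = {"DB.S.ORDERS": earlier, "DB.S.LEGACY": earlier}
    after = {"DB.S.ORDERS": later, "DB.S.CUSTOMERS": earlier}
    print(diff_snapshots(before, after))
```

LAST_ALTERED is updated by DML as well as DDL, so this approach errs toward re-crawling tables whose structure did not change rather than missing ones that did.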

Once your Snowflake metadata is in your data catalog, you can identify other data sources and connect them to the catalog as well.

Why set up an external data catalog like Atlan for Snowflake? #

While Snowflake’s built-in metadata schemas and Horizon Catalog provide visibility into Snowflake-native assets, they don’t cover upstream and downstream dependencies across your broader data stack — like dbt models, Airflow pipelines, BI dashboards in Looker, or policies in AWS.

Atlan addresses this gap by serving as a metadata-led control plane—consolidating and activating metadata from across your stack. This makes your Snowflake catalog more discoverable, trustworthy, and actionable for business and technical users alike.

How does a metadata-led control plane like Atlan enhance Snowflake data cataloging? #

A metadata-led control plane like Atlan orchestrates how metadata flows between Snowflake and every other tool in your stack. It turns passive metadata into an operational layer that enables automation, active governance, and team-wide collaboration.

Think of Atlan as the connective tissue across pipelines, tools, and teams.

For instance, Atlan auto-ingests technical, business, and usage metadata from Snowflake and other systems, connects them through lineage, and surfaces relevant context via a search-first interface. This allows data teams to understand asset freshness, usage, ownership, and compliance posture within Snowflake as well as across the entire analytics lifecycle.

Atlan also supports bidirectional tag sync with Snowflake – tags added in Atlan reflect in Snowflake and vice versa, keeping your governance aligned across teams and platforms.

How can you deploy Atlan for Snowflake? #

To set up a data catalog for Snowflake in Atlan, you can go through the following steps:

- Create a role in Snowflake

- Create a user:

  - With a password in Snowflake

  - With a public key in Snowflake

  - Managed through your identity provider (IdP)

- Grant the role to the user

- Choose the metadata fetching method:

  - Information schema (recommended)

  - Account usage (alternative)

- Grant permissions to:

  - Crawl existing assets

  - Crawl future assets

  - Mine query history for lineage

  - Preview and query existing assets

  - Preview and query future assets

- Allowlist the Atlan IP
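If your Snowflake account restricts access with network policies, the catalog’s egress IP addresses have to be allowed. The sketch below assumes a dedicated, user-level network policy for the catalog user; the policy name and the IP addresses are hypothetical (the addresses come from documentation-only ranges). It validates IPv4 addresses and CIDR ranges with Python’s ipaddress module before building the CREATE NETWORK POLICY and ALTER USER statements.

```python
from __future__ import annotations

import ipaddress
from typing import List, Sequence


def allowlist_sql(policy: str, user: str, entries: Sequence[str]) -> List[str]:
    """Build a user-level network policy that allows only the given IPv4 addresses/CIDRs."""
    rendered = []
    for entry in entries:
        # Raises ValueError on malformed input or when host bits are set (e.g. 10.0.0.5/24).
        net = ipaddress.IPv4Network(entry)
        # Write single addresses without a /32 suffix and ranges in CIDR notation.
        rendered.append(str(net.network_address) if net.num_addresses == 1 else str(net))
    ip_list = ", ".join(f"'{ip}'" for ip in rendered)
    return [
        f"CREATE NETWORK POLICY {policy} ALLOWED_IP_LIST = ({ip_list});",
        f"ALTER USER {user} SET NETWORK_POLICY = {policy};",
    ]


if __name__ == "__main__":
    # Documentation-range addresses standing in for your catalog's real egress IPs.
    for statement in allowlist_sql("catalog_policy", "data_catalog_user", ["192.0.2.10", "198.51.100.0/24"]):
        print(statement)
```

A policy attached to a user takes precedence over an account-level policy for that user, so list every address the catalog may connect from.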

How the Snowflake and Atlan integration benefits customers across industries #

The Snowflake-Atlan integration is helping organizations across industries modernize their data stack, simplify governance, and prepare for AI-driven initiatives. Named a Leader in The Forrester Wave™, Atlan acts as a unified metadata control plane, enabling teams to streamline data discovery, access, and compliance by tapping into Snowflake’s rich metadata layer.

Here’s how these leading companies have transformed their data operations using this powerful combination:

- In banking, Austin Capital Bank managed to simplify and streamline access governance. As Ian Bass, Head of Data & Analytics, put it, “Atlan gave us a simple way to see who has access to what.”

- Scripps Health used the integration to manage HIPAA-compliant healthcare data securely. “Since Atlan is virtualized on Snowflake, security is no longer a concern,” says Victor Wilson, Data Architect.

- FinTech company Tala automated dbt documentation sync via Atlan, making their data dictionary more accessible to business teams.

- Manufacturing leader Aliaxis improved cross-team data visibility, with Atlan serving as a ‘bridge’ for understanding Snowflake data. “If there’s any question you have about data in Snowflake, go to Atlan,” shares Nestor Jarquin, Global Data & Analytics Lead.

These examples reflect the real-world impact of unifying metadata, governance, and collaboration across modern data platforms.

Snowflake data catalog setup: Bottom line #

Snowflake’s native tools lay the foundation for metadata visibility. But cross-system discoverability and business-friendly metadata require extending beyond Snowflake with a metadata platform.

Setting up a Snowflake data catalog is about activating metadata for real-world outcomes.

A unified control plane like Atlan turns metadata into a living, searchable, collaborative layer for your data team, helping them gain adequate context, access, and trust.

Snowflake data catalog setup: Frequently asked questions (FAQs) #

1. Does Snowflake have a built-in data catalog? #

Yes, Snowflake offers metadata views (like ACCOUNT_USAGE and INFORMATION_SCHEMA) and Horizon Catalog for in-platform data discovery. These help track assets, query history, and governance, but don’t cover tools outside Snowflake.

2. What’s the difference between Horizon Catalog and external data catalogs? #

Horizon Catalog is Snowflake’s native catalog for searching and managing Snowflake assets. External catalogs (like Atlan) consolidate metadata across your broader stack—connecting dbt, Airflow, BI tools, and more—for lineage, governance, and collaboration beyond Snowflake.

3. What are the first steps to setting up a Snowflake data catalog? #

Start by creating a role with appropriate permissions, setting up a user, and granting access to the metadata schemas. You’ll also need to identify which databases, schemas, and objects to crawl, and configure how metadata will be ingested.

4. How do I make sure my catalog reflects newly created Snowflake assets? #

You’ll need FUTURE grants so the catalog can access new schemas, tables, and views as they’re created in the databases you’ve already granted access to. Future grants don’t cover new databases, so grant access to each new database explicitly. Most modern catalogs also support scheduled crawls to keep metadata current.

5. Can I catalog metadata from multiple Snowflake accounts or regions? #

Yes—but you’ll need to configure separate connectors or integrations for each account. Make sure your catalog tool supports multi-account visibility and can unify metadata across regions without duplicating effort.

6. What role does metadata play in your Snowflake data catalog setup? #

Metadata provides the context that makes Snowflake data discoverable and usable. It includes technical details (like schema and usage), business definitions, lineage, ownership, and policy information. Without metadata, data remains siloed, hard to govern, and difficult to trust across teams.

7. How does a unified control plane further strengthen your Snowflake data catalog setup? #

A unified control plane consolidates metadata from Snowflake and connected tools like dbt, Airflow, and BI platforms. It enriches Snowflake’s native metadata with broader lineage, classification, usage insights, and governance context, enabling more accurate discovery, trust, and control across your entire data ecosystem.

Snowflake data catalog setup: Related reads #

- Snowflake Summit 2025: How to Make the Most of This Year’s Event

- Snowflake Cortex: Everything We Know So Far and Answers to FAQs

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- Polaris Catalog from Snowflake: Everything We Know So Far

- Snowflake Cost Optimization: Typical Expenses & Strategies to Handle Them Effectively

- Snowflake Horizon for Data Governance: Here’s Everything We Know So Far

- Snowflake Data Cloud Summit 2024: Get Ready and Fit for AI

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- How to Set Up Snowflake Data Lineage: Step-by-Step Guide

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- Snowflake + AWS: A Practical Guide for Using Storage and Compute Services

- Snowflake X Azure: Practical Guide For Deployment

- Snowflake X GCP: Practical Guide For Deployment

- Snowflake + Fivetran: Data movement for the modern data platform

- Snowflake + dbt: Supercharge your transformation workloads

- Snowflake Metadata Management: Importance, Challenges, and Identifying The Right Platform

- Snowflake Data Governance: Native Features, Atlan Integration, and Best Practices

- Snowflake Data Dictionary: Documentation for Your Database

- Snowflake Data Access Control Made Easy and Scalable

- Glossary for Snowflake: Shared Understanding Across Teams

- Snowflake Data Catalog: Importance, Benefits, Native Capabilities & Evaluation

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Managing Metadata in Snowflake: A Comprehensive Guide

- How to Query Information Schema on Snowflake? Examples, Best Practices, and Tools

- Snowflake Summit 2023: Why Attend and What to Expect

- Snowflake Summit Sessions: 10 Must-Attend Sessions to Up Your Data Strategy
