Data Catalog for Databricks: A How-To Setup Guide


Databricks is an essential data lakehouse platform for projects of all stripes and sizes. That’s why it’s critical to document all Databricks objects within a data catalog so that they’re discoverable and governed.

In this article, we’ll show how to set up Databricks to work with an external data catalog. We’ll cover the prerequisites, including the differences between Databricks’ Unity Catalog and an external data catalog.

Then, we’ll show you the detailed steps required to connect to Databricks and crawl tables, views, data lineage information, and other important Databricks data objects.

Let’s start by looking into the prerequisites for setting up a data catalog for Databricks.

Table of contents

- How to set up a data catalog for Databricks: Prerequisites

- Connecting Databricks to an external data catalog

- Crawl data from Databricks

- Business outcomes for cataloging data from Databricks

- How to deploy Atlan for Databricks

- Related reads

How to set up a data catalog for Databricks: Prerequisites

You should already understand what a data catalog is and its role in data discovery and governance in an organization. For more details, see our comprehensive guide to data catalogs.

You will also need to satisfy the following prerequisites:

- Admin privileges in Databricks

- Databricks Unity Catalog setup

- Access to an external data catalog

Let’s explore each prerequisite further.

Databricks access level

You will need admin privileges in Databricks, as well as SQL access.

Databricks Unity Catalog (internal data catalog) setup

Databricks has its own data catalog, Unity Catalog. Unity Catalog defines a metastore that captures metadata and controls user access to data. Within the metastore, data is organized into catalogs (collections of schemas), which serve as the primary unit of data isolation.
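Unity Catalog addresses data objects with a three-level namespace, `catalog.schema.table`, and an external catalog typically mirrors that hierarchy when it crawls Databricks. The minimal sketch below splits a fully qualified name into its three levels. `parse_full_name` and `TableRef` are hypothetical helpers, not part of any Databricks SDK, and the sketch assumes plain identifiers (names containing backtick-quoted dots are out of scope). The example table name is made up.

```python
from typing import NamedTuple


class TableRef(NamedTuple):
    """A Unity Catalog object name split into its three namespace levels."""
    catalog: str
    schema: str
    table: str


def parse_full_name(full_name: str) -> TableRef:
    """Split 'catalog.schema.table' into its parts.

    Assumes unquoted identifiers; dots inside backtick-quoted names
    are not handled by this sketch.
    """
    parts = full_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.table, got {full_name!r}")
    return TableRef(*parts)


ref = parse_full_name("main.sales.orders")
print(ref.catalog, ref.schema, ref.table)  # main sales orders
```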

Read more → Databricks Unity Catalog: A Comprehensive Guide to Features, Capabilities, and Architecture

Unity Catalog tracks data lineage down to the column level. It gathers this metadata automatically for notebooks, workflows, and dashboards, across all Databricks-supported languages. This provides detailed insight into how data flows through Databricks-managed assets.

If you need to track data lineage information from within Databricks, you’ll need to enable Unity Catalog in your Databricks account.

Access to an external data catalog

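Unity Catalog is an essential tool for tracking metadata and data lineage within Databricks. However, its scope is limited to Databricks, so to see Databricks data in the context of the rest of your organization’s data, you also need an external data catalog.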

An external catalog acts as a “catalog of catalogs”. It aggregates metadata from independent data sources, such as data lakes and data meshes, across an organization. The end result is a single, searchable source of truth for all data within a company.

In this article, we’ll use Atlan as an example of connecting Databricks to an external data catalog. However, most of these steps remain relevant regardless of the data catalog you use.

Connecting Databricks to an external data catalog

To connect Databricks to an external data catalog, you must:

- Decide on the extraction method

- Configure Databricks for external data catalog access

Let’s see how.

Decide on the extraction method

First, find out which methods your data catalog supports for extracting metadata from Databricks. Data catalogs generally support one or both of the following options:

- JDBC extraction: The original and well-supported method for connecting to Databricks externally. You can download the latest JDBC drivers from the Databricks website.

- REST API extraction: Retrieves metadata by calling the Databricks REST API.

In both of these scenarios, you authenticate using a personal access token, which is slightly more secure than using the administrator’s username and password. Tokens have a set lifetime (90 days by default), after which they must be renewed. This helps keep tokens fresh and reduces the risk of a security compromise.
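Because a lapsed token silently breaks the catalog connection, it helps to track each token’s expiry date. The sketch below computes expiry from the creation date and flags tokens that are close to expiring. It assumes the 90-day default lifetime mentioned above; the 14-day warning window and the function names are illustrative choices, not Databricks settings.

```python
from datetime import date, timedelta


def token_expiry(created: date, lifetime_days: int = 90) -> date:
    """Date on which a token created on `created` expires."""
    return created + timedelta(days=lifetime_days)


def needs_renewal(created: date, today: date, lifetime_days: int = 90,
                  warning_days: int = 14) -> bool:
    """True once `today` falls inside the warning window before expiry."""
    expires = token_expiry(created, lifetime_days)
    return today >= expires - timedelta(days=warning_days)


created = date(2024, 1, 1)
print(token_expiry(created))                      # 2024-03-31
print(needs_renewal(created, date(2024, 3, 20)))  # True
print(needs_renewal(created, date(2024, 2, 1)))   # False
```

Renewing inside a warning window, rather than on the expiry date itself, leaves time to paste the new token into the external catalog before the old one stops working.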

For more information, see the Databricks documentation on authentication.
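With REST API extraction, each call carries the personal access token as a bearer token in the `Authorization` header. The sketch below builds, but does not send, such a request using only the Python standard library. It targets the Unity Catalog list-catalogs endpoint (`/api/2.1/unity-catalog/catalogs`) as an example; the hostname and token are placeholders, and `build_uc_request` is a hypothetical helper.

```python
import urllib.request


def build_uc_request(host: str, token: str,
                     path: str = "/api/2.1/unity-catalog/catalogs") -> urllib.request.Request:
    """Build (without sending) an authenticated GET request to a Databricks REST endpoint."""
    return urllib.request.Request(
        f"https://{host}{path}",
        # Personal access tokens are sent as bearer tokens.
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


req = build_uc_request("dbc-example.cloud.databricks.com", "dapi-EXAMPLE")
print(req.full_url)
print(req.get_header("Authorization"))
```

Passing the request to `urllib.request.urlopen` would send it; a successful response is JSON containing a `catalogs` array.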

Configure Databricks for external data catalog access

To access a Databricks cluster from an external data catalog, you will need to configure it as an all-purpose (interactive) cluster as opposed to a job cluster.

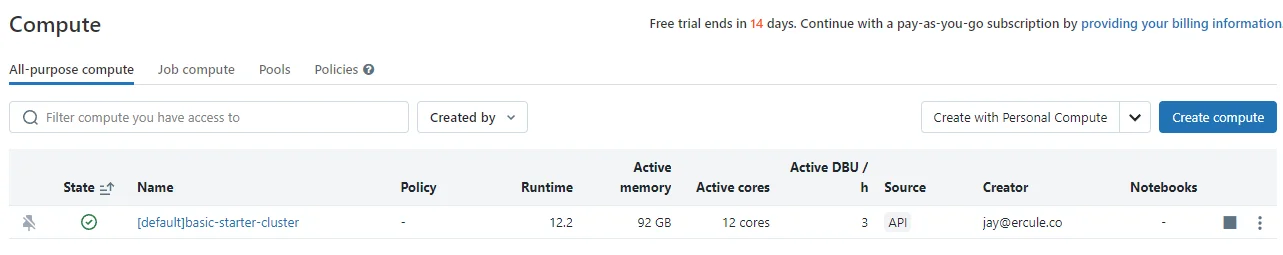

To confirm that your cluster is an all-purpose cluster, log in to your Databricks instance and select Compute from the left-hand menu. Then check that the cluster you’re connecting to is listed under All-purpose clusters.

All-purpose compute in Databricks - Image by Atlan.
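The same check can be scripted against the Clusters API (`GET /api/2.0/clusters/list`), whose response lists clusters along with a `cluster_source` field. The sketch below assumes that all-purpose clusters report `UI` or `API` as their source while job clusters report `JOB`; verify this against your own workspace. It parses a hand-written sample payload rather than calling the API, and the cluster names are made up.

```python
import json

# Assumption: all-purpose clusters report cluster_source "UI" or "API";
# job clusters report "JOB". Check these values in your workspace.
ALL_PURPOSE_SOURCES = {"UI", "API"}


def all_purpose_clusters(list_response: str) -> list[str]:
    """Return names of all-purpose clusters from a clusters/list JSON payload."""
    clusters = json.loads(list_response).get("clusters", [])
    return [c["cluster_name"] for c in clusters
            if c.get("cluster_source") in ALL_PURPOSE_SOURCES]


sample = json.dumps({"clusters": [
    {"cluster_name": "shared-analytics", "cluster_source": "UI"},
    {"cluster_name": "job-1234-run-1", "cluster_source": "JOB"},
]})
print(all_purpose_clusters(sample))  # ['shared-analytics']
```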

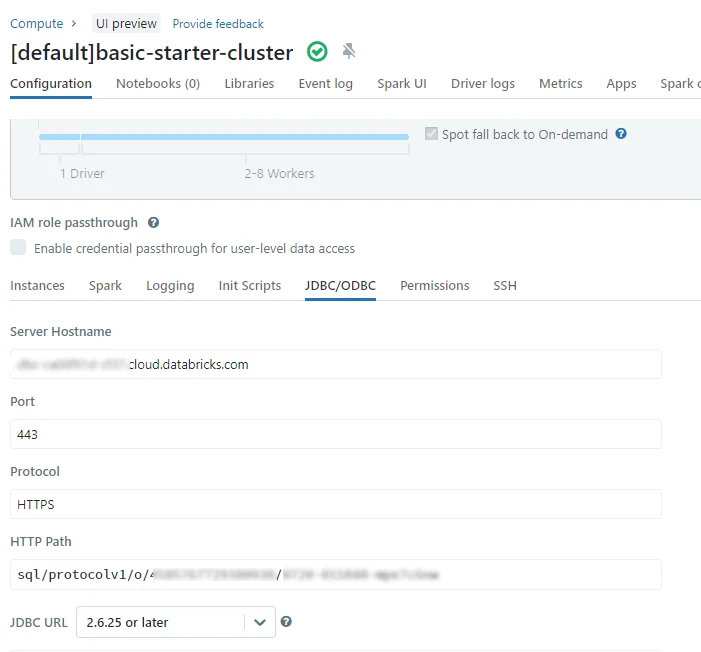

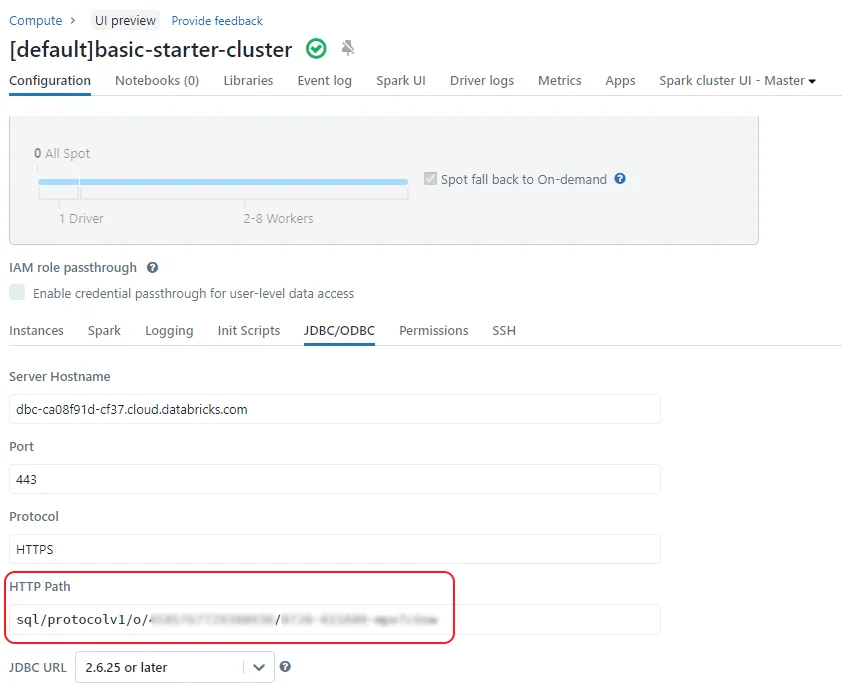

If you’re connecting via the JDBC driver, select the name of your cluster. On the Configuration tab, expand Advanced Options, select JDBC/ODBC, and copy the information you’ll need to connect to your Databricks cluster.

Configuring the JDBC driver for Databricks - Image by Atlan.

The values you need may differ depending on your data catalog. To connect Atlan as your external data catalog, copy:

- Server Hostname

- Port

- HTTP Path
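Those three values are what a JDBC client combines into a connection URL. The sketch below assembles one in the format documented for the Databricks JDBC driver 2.6.x (`jdbc:databricks://...`), where `AuthMech=3` with `UID=token` selects personal access token authentication. Treat the exact format as an assumption to check against your driver version’s documentation, since older driver releases use a `jdbc:spark://` prefix. The hostname and HTTP path are placeholders, and `databricks_jdbc_url` is a hypothetical helper.

```python
def databricks_jdbc_url(server_hostname: str, port: int, http_path: str) -> str:
    """Assemble a Databricks JDBC URL that authenticates with a personal access token.

    Assumed format for Databricks JDBC driver 2.6.x; other versions differ.
    The token itself is not embedded; it is supplied separately as the
    PWD connection property.
    """
    params = {
        "transportMode": "http",
        "ssl": "1",
        "httpPath": http_path,
        "AuthMech": "3",   # 3 = username/password, with UID=token for PATs
        "UID": "token",
    }
    query = ";".join(f"{key}={value}" for key, value in params.items())
    return f"jdbc:databricks://{server_hostname}:{port};{query}"


url = databricks_jdbc_url(
    "dbc-example.cloud.databricks.com",
    443,
    "sql/protocolv1/o/1234567890123456/0123-456789-abcdefgh",
)
print(url)
```

Keeping the token out of the URL, and passing it as the `PWD` connection property instead, prevents it from leaking into logs that print connection strings.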

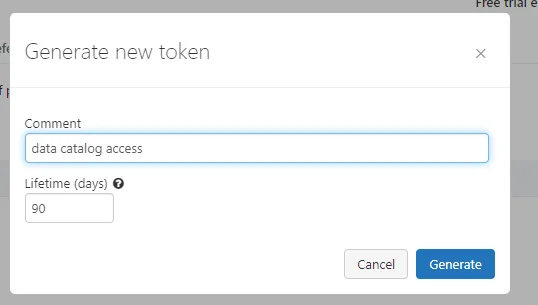

Next, create a personal access token:

- In your Databricks instance, select Settings, then select User Settings.

- Select the Access tokens tab, and then select Generate new token.

- In the Generate New Token dialog, enter a Comment about the token’s purpose and select the token’s Lifetime in number of days. (Note: You will need to regenerate and re-populate the token in your external data catalog before the expiration date or connectivity will cease to work.)

Generating a new personal access token - Image by Atlan.

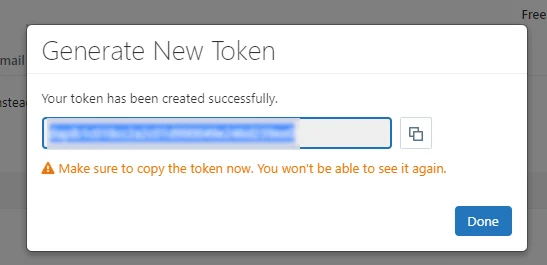

- Select Generate.

Make sure to copy your token and place it in a temporary text editor window on your local computer. You will not be able to retrieve the access token again after this. If you lose the token, delete it and create a new one.

Copy the token generated - Image by Atlan.
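The UI steps above can also be performed through the Databricks Token API (`POST /api/2.0/token/create`), which takes the lifetime in seconds rather than days. The sketch below only builds the JSON request body. Sending it requires a request that is already authenticated, and the new token is returned once, in the response’s `token_value` field, just as the UI shows it only once. The helper name and comment text are illustrative.

```python
import json


def token_create_payload(comment: str, lifetime_days: int) -> str:
    """JSON body for POST /api/2.0/token/create, converting days to seconds."""
    return json.dumps({
        "comment": comment,
        "lifetime_seconds": lifetime_days * 24 * 60 * 60,
    })


print(token_create_payload("Atlan crawler", 90))
# {"comment": "Atlan crawler", "lifetime_seconds": 7776000}
```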

Finally, if your external data catalog is hosted in a private VPC in your cloud provider, consider whether you should use a private virtual network connection to keep sensitive data from flowing through the public Internet. Options include PrivateLink on AWS and Private Link on Azure.

Note that each cloud provider has its own requirements for private connectivity to your Databricks instances. Additionally, you may need to ask your data catalog provider to initiate the private network link request from their service.

Crawl data from Databricks

With connectivity in place, you can configure your external data catalog to crawl data assets in Databricks.

Once set up, your data catalog will poll Databricks and Unity Catalog periodically for new data and metadata. Your external data catalog will then document and track these assets. This adds Databricks-managed data to your governed data catalog, giving you a holistic picture of the movement of data across your organization.

This procedure differs by data catalog vendor. Let’s look briefly at how you’d set up a crawler in Atlan, which offers first-class support for integrating with Databricks.

In Atlan, you would start by defining a new workflow using Atlan’s Databricks Assets package.

You’d then specify the following attributes for connectivity:

- Databricks instance host

- Port (usually 443, but may differ depending on setup)

- The personal access token you defined earlier in Databricks

- The HTTP path from your Databricks instance. This is a string that starts with either sql/protocolv1/ or sql/1.0/warehouses. You can copy/paste this value directly from the HTTP Path setting in the JDBC/ODBC settings for your cluster that we mentioned above.

HTTP path setting for the Databricks cluster - Image by Atlan.
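Before testing the connection, it can be worth sanity-checking the four settings locally so that copy-paste mistakes surface early. The sketch below is a hypothetical preflight check, not Atlan’s own validation; it encodes the rules stated above (a bare hostname, a valid port, a non-empty token, and an HTTP path beginning with `sql/protocolv1/` or `sql/1.0/warehouses`). All values shown are placeholders.

```python
from dataclasses import dataclass

# HTTP path prefixes quoted in this guide.
HTTP_PATH_PREFIXES = ("sql/protocolv1/", "sql/1.0/warehouses")


@dataclass
class DatabricksConnection:
    host: str
    port: int
    token: str
    http_path: str

    def problems(self) -> list[str]:
        """Return readable problems; an empty list means the settings look sane."""
        issues = []
        if not self.host or "://" in self.host:
            issues.append("host should be a bare hostname, without a scheme")
        if not 1 <= self.port <= 65535:
            issues.append("port must be between 1 and 65535")
        if not self.token:
            issues.append("personal access token is missing")
        # Tolerate a leading slash in case the path was copied with one.
        if not self.http_path.lstrip("/").startswith(HTTP_PATH_PREFIXES):
            issues.append("HTTP path should start with sql/protocolv1/ or sql/1.0/warehouses")
        return issues


good = DatabricksConnection(
    host="dbc-example.cloud.databricks.com",
    port=443,
    token="dapi-EXAMPLE",
    http_path="sql/protocolv1/o/1234567890123456/0123-456789-abcdefgh",
)
print(good.problems())  # []

bad = DatabricksConnection("https://dbc-example.cloud.databricks.com", 443, "", "/api/2.0")
print(len(bad.problems()))  # 3
```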

After this, you’d test your connection to ensure it works and then move on to configuring the connection itself. This includes giving it a name and defining permissions around who can manage it.

Finally, you’d configure the crawler itself. Crawler configuration includes defining inclusion and exclusion patterns that specify which objects should be crawled and which should not. You can also use an exclusion regular expression for more sophisticated screening criteria.

In Atlan, you also specify the extraction method to use for data. As discussed above, you can choose between JDBC extraction and REST API extraction.

JDBC is the original and recommended method for extracting metadata from Databricks. The REST API extraction method requires a Unity Catalog-enabled workspace as it uses Unity Catalog to extract metadata from your Databricks instance.

Note: If you choose REST API extraction, Atlan will still use JDBC for querying SQL data; Atlan uses the REST API primarily for metadata extraction.

Finally, you can choose whether to run the crawler once or on a schedule. You can schedule the crawler to run hourly, daily, weekly, or monthly.
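Many schedulers express these frequencies as cron expressions. The mapping below is a hypothetical illustration using standard five-field cron syntax (minute, hour, day of month, month, day of week), with the coarser frequencies running at midnight; it is not a representation of how Atlan stores schedules.

```python
# Hypothetical mapping from crawl frequency to a five-field cron expression
# (minute hour day-of-month month day-of-week).
SCHEDULES = {
    "hourly": "0 * * * *",   # at minute 0 of every hour
    "daily": "0 0 * * *",    # every day at 00:00
    "weekly": "0 0 * * 0",   # every Sunday at 00:00
    "monthly": "0 0 1 * *",  # the first day of every month at 00:00
}


def cron_for(frequency: str) -> str:
    """Return the cron expression for a frequency name, case-insensitively."""
    try:
        return SCHEDULES[frequency.lower()]
    except KeyError:
        raise ValueError(f"unsupported frequency: {frequency!r}") from None


print(cron_for("Weekly"))  # 0 0 * * 0
```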

Crawl data lineage information from Databricks

Extracting data lineage information from Databricks requires setting up a separate workflow. Fortunately, this is a straightforward process.

To extract data lineage from Databricks using Atlan, create a new workflow with the Databricks Lineage package. After selecting the Databricks connection you created earlier, run your data lineage extraction once or set it to run on a schedule.

Remember that, besides enabling Unity Catalog, you must upgrade to Unity Catalog any tables and views you created before enabling it, because lineage is captured only for objects registered in Unity Catalog. To do this, follow the detailed instructions on the Databricks website.
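Once extracted, table-level lineage is essentially a set of source-to-target edges between tables. Unity Catalog exposes data in this shape in, for example, the `system.access.table_lineage` system table (with `source_table_full_name` and `target_table_full_name` columns) when system tables are enabled. The sketch below walks such edges breadth-first to answer a typical catalog question: which tables feed a given table, directly or indirectly? The edges are made-up sample data, and `upstream` is a hypothetical helper.

```python
from collections import defaultdict, deque


def upstream(edges: list[tuple[str, str]], table: str) -> set[str]:
    """Return every table that feeds `table`, directly or indirectly.

    `edges` holds (source, target) pairs of fully qualified table names.
    """
    parents = defaultdict(set)
    for source, target in edges:
        parents[target].add(source)

    seen: set[str] = set()
    queue = deque([table])
    while queue:
        # Breadth-first: visit each parent once, even if the graph has cycles.
        for parent in parents[queue.popleft()]:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


edges = [
    ("main.raw.orders", "main.sales.orders_clean"),
    ("main.sales.orders_clean", "main.sales.daily_revenue"),
    ("main.raw.fx_rates", "main.sales.daily_revenue"),
]
print(sorted(upstream(edges, "main.sales.daily_revenue")))
```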

Business outcomes for cataloging data from Databricks

The major benefit of integrating Databricks into your external data catalog is that it ties Databricks data in with your organization’s other data assets. This gives you a full picture of how data flows over time across your organization.

A data catalog can crawl and catalog the key data assets that Databricks manages. Specifically, Atlan crawls the following assets:

- Databases

- Schemas

- Tables

- Views

- Columns

- Stored procedures

Additionally, with all of your data (Databricks and non-Databricks assets) cataloged in a single, central location, you can tag, classify, and govern all of it uniformly, regardless of where it lives.

How to deploy Atlan for Databricks

We’ve covered most of the information you’d need to deploy Atlan for Databricks above. For more details, consult our documentation:

- How to set up Databricks

- How to set up an AWS private network link for Databricks

- How to set up an Azure private network link for Databricks

- How to crawl Databricks

- How to extract lineage from Databricks

- What does Atlan crawl from Databricks?

- Troubleshooting Databricks connectivity

- Preflight checks for Databricks

Data Catalog for Databricks: Related reads

- What Is a Data Catalog? & Do You Need One?

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- 8 Ways AI-Powered Data Catalogs Save Time Spent on Documentation, Tagging, Querying & More

- Data Catalog Market: Current State and Top Trends in 2023

- 15 Essential Data Catalog Features to Look For in 2023

- What is Active Metadata? — Definition, Characteristics, Example & Use Cases

- Data catalog benefits: 5 key reasons why you need one

- Open Source Data Catalog Software: 5 Popular Tools to Consider in 2023

- Data Catalog Platform: The Key To Future-Proofing Your Data Stack

- Top Data Catalog Use Cases Intrinsic to Data-Led Enterprises

- Business Data Catalog: Users, Differentiating Features, Evolution & More
