Apache Iceberg vs Delta Lake: A Practical Guide to Data Lakehouse Architecture


Data lakehouses are the next step in data storage: they blend the flexibility of data lakes with the reliability of data warehouses. The key to making this work? The table format. It decides how your data is stored, managed, and accessed. Two standards currently lead the way: Apache Iceberg and Delta Lake.


Both formats offer similar core features, but deciding between them requires understanding their differences and best use cases. Each has its own perks that can affect performance, scalability, and efficiency, depending on how well they align with your goals. Read on to skip the basic feature lists and dive straight into the pros and cons of each format, so you can choose the one that works best for your needs, setup, and long-term plans.

Table of Contents

- What are the similarities between Apache Iceberg and Delta Lake?

- Apache Iceberg vs. Delta Lake: An overview of key features

- What are the differences between Apache Iceberg and Delta Lake?

- Implementation tips: 3 best practices to keep in mind

- How the two open table formats stack up

- Set your data architecture up for success

- Apache Iceberg vs Delta Lake: Related reads

What are the similarities between Apache Iceberg and Delta Lake?


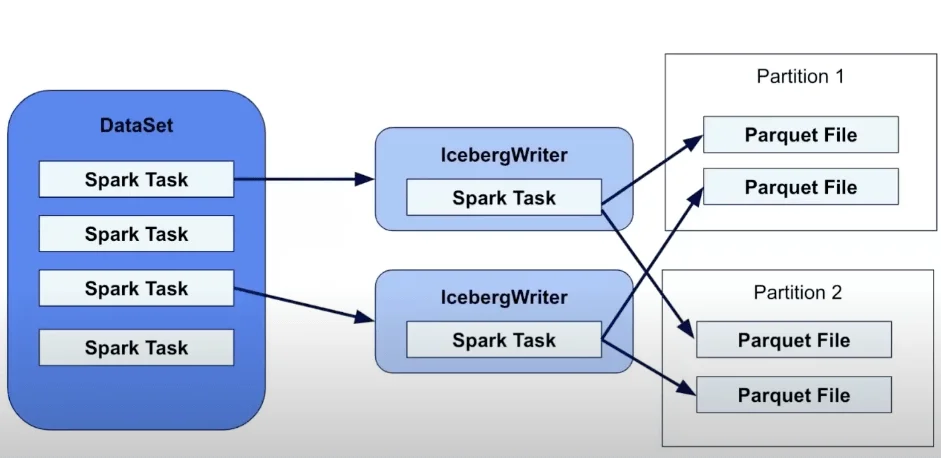

Apache Spark tasks write datasets to Apache Iceberg, which then partitions and stores the data as Parquet files for efficient querying and storage. - Source: Dremio YouTube.

Let’s start with what Apache Iceberg and Delta Lake have in common. Both formats tackle similar challenges in lakehouse architecture, making them powerful tools for managing large-scale data efficiently.

Here are some key features they share:

- ACID transaction support: Both formats handle ACID (Atomicity, Consistency, Isolation, and Durability) transactions like pros. This means that all changes to the data are consistent and reliable, avoiding issues like partial writes or data corruption, even when hardware or software fails.

- Schema evolution: You can modify your table schemas easily in either format without rewriting existing data. Whether you’re adding new columns, dropping old ones, or renaming fields (in Delta Lake, drops and renames require enabling column mapping), it’s easy to adapt your data models as your business needs evolve.

- Time travel: Want to see older versions of your data? Both formats have you covered. Time travel is helpful for reproducing analyses, debugging issues, or recovering from accidental changes. It’s a great safety net.

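- Metadata management: Iceberg and Delta Lake both track table metadata (file lists, statistics, and schema) in files stored alongside the data, which is important for scalability, reliability, and performance. Because query planning reads this metadata instead of listing storage directories, it stays fast even as your data grows into the petabyte range.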

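- Partition evolution: Changing how you partition your data? No big deal. Iceberg lets you change a table’s partition scheme without physically moving or rewriting existing data, and Delta Lake’s newer liquid clustering lets you change clustering keys the same way (classic Delta partition columns, by contrast, require a rewrite to change). Query performance remains optimized while reducing the hassle of manual updates as your data access patterns evolve.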
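ACID commits and time travel both rest on the same basic idea: every commit produces a new, immutable snapshot of the table’s file list, and older snapshots stay readable until cleanup removes them. The toy Python model below sketches that idea under simplifying assumptions. `ToyTable`, `Snapshot`, `commit`, and `as_of` are hypothetical names, not part of either format’s API, and real snapshots also carry schemas, statistics, and more.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Snapshot:
    """Immutable record of which data files make up the table at one version."""
    version: int
    files: Tuple[str, ...]


@dataclass
class ToyTable:
    """Hypothetical snapshot-based table; illustrates the idea, not a real API."""
    snapshots: List[Snapshot] = field(default_factory=lambda: [Snapshot(0, ())])

    def current(self) -> Snapshot:
        return self.snapshots[-1]

    def commit(self, add: Sequence[str] = (), remove: Sequence[str] = ()) -> Snapshot:
        # Build the new file list off to the side from the current snapshot...
        removed = set(remove)
        live = [f for f in self.current().files if f not in removed]
        new = Snapshot(self.current().version + 1, tuple(live) + tuple(add))
        # ...then publish it in a single step, so readers see either the old
        # snapshot or the new one, never a half-applied mix of both.
        self.snapshots.append(new)
        return new

    def as_of(self, version: int) -> Snapshot:
        # Time travel: any snapshot that has not been cleaned up can still be read.
        for snap in self.snapshots:
            if snap.version == version:
                return snap
        raise KeyError(f"version {version} is not retained")


table = ToyTable()
table.commit(add=["a.parquet"])
table.commit(add=["b.parquet"])
table.commit(add=["c.parquet"], remove=["a.parquet"])
print(table.current().files)  # ('b.parquet', 'c.parquet')
print(table.as_of(1).files)   # ('a.parquet',)
```

In practice, both formats expose time travel in Spark SQL through clauses such as `VERSION AS OF` and `TIMESTAMP AS OF`, although Iceberg identifies versions by snapshot ID rather than by a sequential number.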

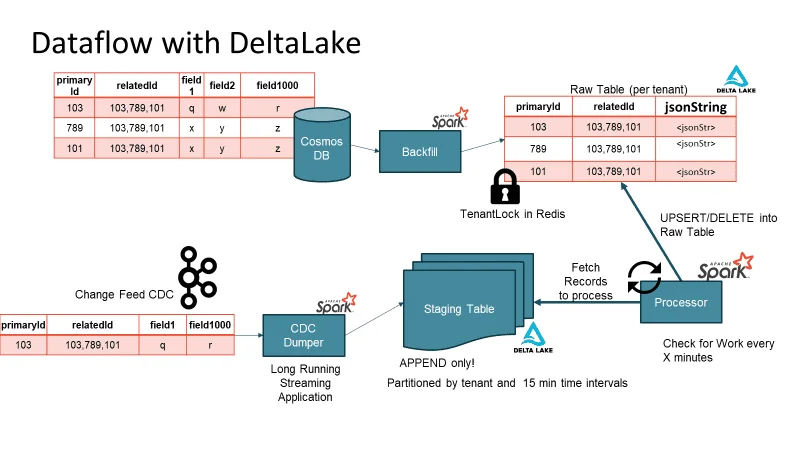

Data pipelines integrating Cosmos DB, Apache Spark, and Delta Lake process change data capture (CDC) streams, ensuring efficient ingestion, staging, and processing of raw data for tenant-based analytics. - Source: Delta Lake.

Apache Iceberg and Delta Lake share robust features for handling the complexity of different data systems. They give you the flexibility and reliability needed to build high-performing architectures that can grow with you.

Apache Iceberg vs. Delta Lake: An overview of key features

Diving into the foundations of each table format reveals some core differences between Apache Iceberg and Delta Lake, which you’ll see below:

| Feature | Apache Iceberg | Delta Lake |
|---|---|---|
| Primary maintainer | Apache Software Foundation | Linux Foundation project, primarily developed by Databricks |
| Storage format support | Parquet, ORC, Avro | Parquet |
| Schema enforcement | Yes, with type-safe evolution | Yes, with schema enforcement and constraints |
| Query engine support | Spark, Flink, Trino, Presto, Hive, Dremio, Snowflake, Google BigQuery, Athena, and DuckDB | Spark, PrestoDB, Flink, Trino, Hive, Snowflake, Google BigQuery, Athena, and DuckDB |
| Cloud support | AWS, GCP, Azure | AWS, GCP, Azure |
| File management | Metadata files, manifest lists, and manifest files | Transaction log (_delta_log) with periodic checkpoints |
| Partition evolution | Hidden partitioning; the partition scheme can change without rewriting data | Changing partition columns requires a rewrite; liquid clustering keys can change without one |
| Community adoption | Growing, multi-vendor support | Strong, with origins in the Databricks ecosystem, now broadened by open-source contributions under the Linux Foundation |

What are the differences between Apache Iceberg and Delta Lake?

Now, let’s get more into specific capabilities and declare winners for each category.

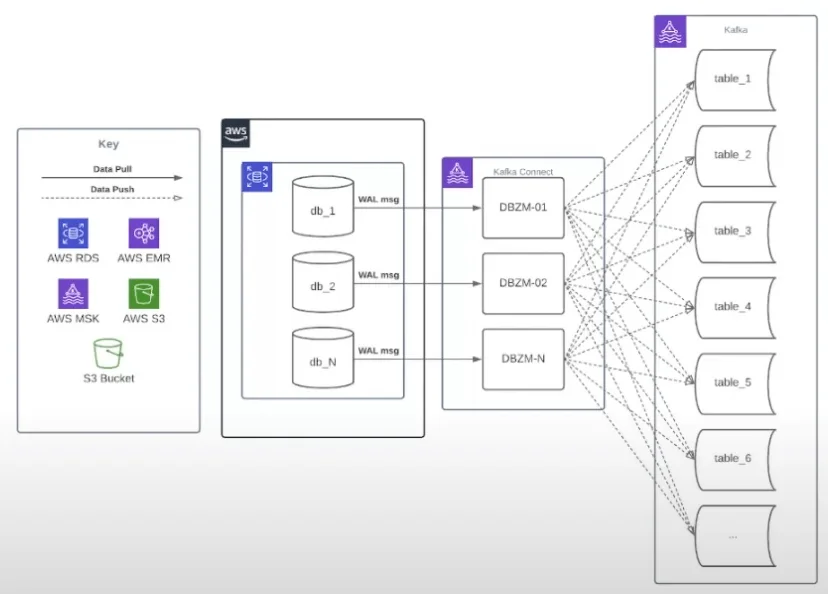

AWS and Kafka work together to stream change data capture (CDC) from databases using Kafka Connect and Debezium, enabling real-time data ingestion into multiple tables. - Source: YouTube.

Schema evolution and data type support

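Iceberg makes schema evolution easier by tracking every column with a unique field ID rather than by name or position, so adding, dropping, renaming, or reordering columns never requires rewriting data files. It also allows type-safe promotions, such as int to long, float to double, and widening a decimal’s precision. Delta Lake also supports schema enforcement and evolution but can struggle with more complex type changes: changing a column’s data type has traditionally required rewriting the table (recent releases add type widening for some cases), and renaming or dropping columns requires enabling column mapping.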
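To make the ID-based approach concrete, here is a small Python sketch of how a table format can evolve a schema without touching existing files. It is loosely modeled on Iceberg’s rules (columns identified by field ID, plus the int-to-long, float-to-double, and decimal-widening promotions). The `Schema`, `Column`, `can_promote`, and `read_row` names are hypothetical, and real implementations also handle nested types, defaults, and column ordering.

```python
from dataclasses import dataclass, replace
from typing import Dict, Iterable, Optional, Tuple

# Type changes Iceberg's spec treats as safe promotions (v1/v2 tables);
# decimal precision widening is checked separately in can_promote().
SAFE_PROMOTIONS = {("int", "long"), ("float", "double")}


@dataclass(frozen=True)
class Column:
    field_id: int  # stable identity; data files refer to columns by this ID
    name: str
    dtype: str     # e.g. "int", "long", "decimal(10,2)"


def parse_decimal(dtype: str) -> Optional[Tuple[int, int]]:
    """'decimal(10,2)' -> (10, 2); None for non-decimal types."""
    if not (dtype.startswith("decimal(") and dtype.endswith(")")):
        return None
    precision, scale = dtype[len("decimal("):-1].split(",")
    return int(precision), int(scale)


def can_promote(old: str, new: str) -> bool:
    if old == new or (old, new) in SAFE_PROMOTIONS:
        return True
    old_dec, new_dec = parse_decimal(old), parse_decimal(new)
    # Decimals may widen their precision but must keep the same scale.
    return (old_dec is not None and new_dec is not None
            and old_dec[1] == new_dec[1] and new_dec[0] > old_dec[0])


class Schema:
    def __init__(self, columns: Iterable[Column]):
        self.columns: Dict[int, Column] = {c.field_id: c for c in columns}
        self.last_id = max(self.columns, default=0)

    def add(self, name: str, dtype: str) -> Column:
        self.last_id += 1  # IDs only grow, so a dropped column's ID is never reused
        col = Column(self.last_id, name, dtype)
        self.columns[col.field_id] = col
        return col

    def rename(self, field_id: int, new_name: str) -> None:
        self.columns[field_id] = replace(self.columns[field_id], name=new_name)

    def drop(self, field_id: int) -> None:
        del self.columns[field_id]

    def update_type(self, field_id: int, new_type: str) -> None:
        col = self.columns[field_id]
        if not can_promote(col.dtype, new_type):
            raise ValueError(f"unsafe type change: {col.dtype} -> {new_type}")
        self.columns[field_id] = replace(col, dtype=new_type)


def read_row(schema: Schema, stored: Dict[int, object]) -> Dict[str, object]:
    # Old files are never rewritten: values are looked up by field ID, and
    # columns added after the file was written simply read as None (null).
    return {c.name: stored.get(c.field_id) for c in schema.columns.values()}
```

Because a rename only changes the name attached to an ID, a row written before the rename reads back under the new name with no data rewrite.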

Winner: Apache Iceberg. Iceberg supports type-safe updates and flexible schema options, making it the clear choice because data type changes are inevitable in evolving data ecosystems.

Partitioning and performance optimization

Iceberg makes partitioning simple with hidden partitioning and partition evolution, all without needing to rewrite data. For finer-grained data organization, it supports table sort orders and can apply Z-order clustering when compacting files with its rewrite_data_files procedure. Delta Lake, on the other hand, offers Z-order clustering (OPTIMIZE ... ZORDER BY) and data skipping, but keeps these features more tightly integrated with Apache Spark.
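Hidden partitioning means the table, not the user, derives partition values from a source column. The plain-Python sketch below illustrates the idea: rows are grouped by a day number computed from a timestamp, and a query that filters only on the timestamp is translated into a range of partitions to read. The function names are hypothetical. In Iceberg itself you would declare something like `PARTITIONED BY (days(ts))` in Spark SQL DDL and let the engine do this translation.

```python
from collections import defaultdict
from datetime import date, datetime, timezone
from typing import Dict, List, Tuple

EPOCH = date(1970, 1, 1)
Row = Dict[str, object]


def day_transform(ts: datetime) -> int:
    """Days since 1970-01-01 (UTC), similar in spirit to Iceberg's `days` transform.
    Expects timezone-aware datetimes."""
    return (ts.astimezone(timezone.utc).date() - EPOCH).days


def write(rows: List[Row]) -> Dict[int, List[Row]]:
    # The writer derives each row's partition from its `ts` column;
    # users never store or query a separate partition column.
    partitions: Dict[int, List[Row]] = defaultdict(list)
    for row in rows:
        partitions[day_transform(row["ts"])].append(row)
    return dict(partitions)


def scan(partitions: Dict[int, List[Row]], start: datetime,
         end: datetime) -> Tuple[List[Row], List[int]]:
    """Rows with start <= ts < end, plus the partitions that had to be read.
    The filter on `ts` is first turned into a range of partition values."""
    lo, hi = day_transform(start), day_transform(end)
    touched = sorted(p for p in partitions if lo <= p <= hi)
    rows = [r for p in touched for r in partitions[p] if start <= r["ts"] < end]
    return rows, touched
```

The query author filters on `ts` as usual; partitions outside the derived day range are never opened, and changing the transform later (say, from days to hours) would not change how queries are written.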

Winner: Tie. Both tools excel at partitioning and optimization using slightly different methods.

Metadata management and file organization

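Iceberg takes a tree-shaped approach: each snapshot points to a manifest list, which points to manifest files that track individual data files along with partition and column statistics. Commits atomically swap a single pointer to the new metadata, and query planning can prune whole manifests before looking at individual files. Delta Lake records every change as an ordered commit in its transaction log (_delta_log) and periodically compacts the log into Parquet checkpoints; readers reconstruct the table state by replaying commits from the latest checkpoint. Iceberg tends to be more efficient for large-scale operations, while Delta Lake’s log-based method is simple and works well for smaller datasets.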
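The sketch below shows, in simplified form, how a Delta-style transaction log becomes a table state. Each commit holds newline-delimited JSON actions, and a reader replays `add` and `remove` actions in order, starting after the most recent checkpoint if one exists. Real `add` actions carry more required fields (size, partition values, statistics), and real checkpoints are Parquet files, written by default every 10 commits; the function names here are hypothetical.

```python
import json
from typing import List, Optional, Set, Tuple


def commit_filename(version: int) -> str:
    # Delta Lake names commit files by zero-padded version, e.g. 00000000000000000003.json
    return f"{version:020d}.json"


def apply_commit(live: Set[str], commit_text: str) -> None:
    """Apply one commit's newline-delimited JSON actions to the set of live files."""
    for line in commit_text.splitlines():
        if not line.strip():
            continue
        action = json.loads(line)
        if "add" in action:
            live.add(action["add"]["path"])
        elif "remove" in action:
            live.discard(action["remove"]["path"])
        # metaData, protocol, commitInfo and other actions are ignored in this sketch


def replay(commits: List[str], version: Optional[int] = None,
           checkpoint: Optional[Tuple[int, Set[str]]] = None) -> Set[str]:
    """Live files at `version` (default: latest); commits[v] is the text of commit v.
    A checkpoint (v, files) lets replay skip every commit up to and including v."""
    if version is None:
        version = len(commits) - 1
    start = 0
    live: Set[str] = set()
    if checkpoint is not None and checkpoint[0] <= version:
        start, live = checkpoint[0] + 1, set(checkpoint[1])
    for v in range(start, version + 1):
        apply_commit(live, commits[v])
    return live
```

Replaying to an earlier `version` is also how this kind of log supports time travel, and checkpoints keep the replay short as the number of commits grows.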

Winner: Apache Iceberg. Iceberg’s metadata management handles large datasets and complex data engineering tasks more effectively.

Transaction support and data consistency

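Both formats stick to ACID guarantees using snapshot isolation and optimistic concurrency control: a writer prepares its changes against the table state it read, then commits atomically, and the commit is retried or rejected if a concurrent writer changed something it depends on. Delta Lake automatically checks concurrent commits for logical conflicts, so non-overlapping operations, such as concurrent appends, succeed without user intervention.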
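The optimistic pattern both formats share can be sketched in a few lines of Python. A writer computes its change against the version it read, then attempts an atomic compare-and-swap; if another writer committed first, it re-reads and re-applies the change. `TablePointer` and `commit_with_retry` are hypothetical names, and a real implementation would also check whether the concurrent commit actually conflicts (for example, both writers deleting the same file) before retrying.

```python
import threading
from typing import Callable, FrozenSet, Tuple


class CommitConflict(Exception):
    """Raised when a commit cannot be applied after retrying."""


class TablePointer:
    """Hypothetical stand-in for the atomic step each format relies on: swapping the
    catalog's metadata pointer (Iceberg) or creating the next log file (Delta Lake)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.version = 0
        self.files: FrozenSet[str] = frozenset()

    def read(self) -> Tuple[int, FrozenSet[str]]:
        with self._lock:
            return self.version, self.files

    def compare_and_swap(self, expected_version: int, new_files: FrozenSet[str]) -> bool:
        # Succeeds only if no other writer committed since `expected_version` was read.
        with self._lock:
            if self.version != expected_version:
                return False
            self.version += 1
            self.files = frozenset(new_files)
            return True


Change = Callable[[FrozenSet[str]], FrozenSet[str]]


def commit_with_retry(table: TablePointer, change: Change, max_retries: int = 3) -> int:
    """Optimistic commit: build the change against a snapshot without holding a lock,
    then try to swap it in; if another writer won the race, re-read and retry."""
    for _ in range(max_retries + 1):
        base_version, base_files = table.read()
        new_files = change(base_files)  # a real writer may raise CommitConflict here
        if table.compare_and_swap(base_version, new_files):
            return base_version + 1
    raise CommitConflict(f"gave up after {max_retries} retries")
```

No lock is held while the change is computed, which is what makes the approach cheap when writers rarely collide; the cost is redoing work on the occasional retry.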

Winner: Tie. Both are solid options for enterprise-level transaction support.

Query engine compatibility

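Iceberg was designed to be engine-agnostic and offers broader native support across query engines, including Spark, Flink, Trino, and Presto. Delta Lake shines in the Spark ecosystem; connectors exist for many other engines, but support outside Spark is less uniform, and some engines can only read Delta tables or lag behind on newer table features.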

Winner: Apache Iceberg. The wider query engine support makes it more versatile for diverse data stacks.

Scalability and resource management

When handling metadata and managing manifests, Iceberg stands out as datasets grow larger. Delta Lake performs really well within the Spark ecosystem, but it might need more resources to handle sizable tables.
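Part of why Iceberg’s planning scales is its two-level metadata tree: the manifest list summarizes the partition values in each manifest, so a query can skip whole manifests without opening them, and each manifest stores per-file column bounds for a second round of pruning. The Python sketch below models that with hypothetical classes and assumes, for simplicity, that the table is partitioned on the same integer column the query filters on.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DataFile:
    path: str
    lower: int  # column lower bound recorded for this file
    upper: int  # column upper bound recorded for this file


@dataclass
class ManifestSummary:
    # A manifest-list entry: bounds summarizing every file in one manifest.
    lower: int
    upper: int
    files: List[DataFile]  # contents of the manifest, only read if needed


def plan_scan(manifest_list: List[ManifestSummary], lo: int, hi: int) -> Tuple[List[str], int]:
    """Files that may contain values in [lo, hi], plus how many manifests were opened."""
    selected: List[str] = []
    opened = 0
    for manifest in manifest_list:
        if manifest.upper < lo or manifest.lower > hi:
            continue  # pruned from the manifest list alone; manifest never opened
        opened += 1
        for f in manifest.files:
            if f.upper < lo or f.lower > hi:
                continue  # pruned using per-file bounds stored in the manifest
            selected.append(f.path)
    return selected, opened


# 100 manifests of 10 files each; each manifest covers a range of 10 values.
manifests = [
    ManifestSummary(d * 10, d * 10 + 9,
                    [DataFile(f"m{d}-f{i}.parquet", d * 10 + i, d * 10 + i) for i in range(10)])
    for d in range(100)
]
files, opened = plan_scan(manifests, 505, 507)
print(opened, files)  # 1 ['m50-f5.parquet', 'm50-f6.parquet', 'm50-f7.parquet']
```

Only one of the 100 manifests is opened for this narrow filter, so planning cost tracks what the query needs rather than the total number of files in the table.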

Winner: Apache Iceberg. Ultimately, Iceberg is better equipped for large-scale datasets and efficient metadata management.

Implementation tips: 3 best practices to keep in mind

Choosing and setting up a table format isn’t just about comparing features. You need to think about your actual infrastructure and workloads, your team’s skills, and how well the format will scale in the future. Here are a few factors to keep in mind and steps you can follow to successfully implement Apache Iceberg or Delta Lake.

1. Match your table format to your data setup

Start by taking a good look at your current setup and figuring out how you’re using your query engines. If Spark is your go-to for data processing, Delta Lake’s tight integration can give you better performance and easier management. On the other hand, if you’re using (or planning to use) multiple query engines like Flink or Trino, Iceberg’s broader compatibility can help you avoid vendor lock-in and keep things flexible.

The size of your data operations also matters. Think about both your current and future transaction volumes and data sizes. If you’re working with billions of records daily and deploying numerous updates, Iceberg’s metadata handling could be a better fit. But if you’re dealing with smaller datasets and mostly appending data, Delta Lake’s simpler setup could work just fine.

And don’t forget about your team’s experience. Think about what your developers are most comfortable with and how they can collaborate most effectively. If they’re accustomed to working with Databricks, Delta Lake provides native integration with the platform, though Iceberg is also well-supported. Iceberg’s approach could feel more natural if your team gravitates toward Apache tools, but both formats can be equally efficient, depending on your specific workflow and use cases.

2. Streamline integration with your existing data ecosystems


Delta Lake powers the Extract, Load, and Transform (ELT) process, enabling efficient data ingestion, storage, and transformation. - Source: Delta Lake.

Smoothly integrating your high-performance data systems takes careful planning. Start by reviewing your data pipeline thoroughly. For ETL/ELT processes, check how your current tools handle each format. For instance, if you’re using Airflow for orchestration, make sure the operators for your chosen format are reliable and ready to go. Always test with small datasets before going all in.

When it comes to BI tools, compatibility is key. BI tools such as Tableau and Power BI generally don’t read either table format directly; they connect through the query engine or platform that sits in front of the tables, such as Databricks SQL, Trino, Snowflake, or Athena. Connection setups vary with your environment and configuration, but neither format has an inherent advantage for BI integration. Just be sure to document your configurations so everyone on your team is on the same page.

Pay close attention to security and governance. Map out how access controls for each format, which are usually enforced by the catalog or platform rather than by the table format itself, align with your current security policies. If you’re using AWS Lake Formation, understand how its permissions for each format tie into IAM roles. And make sure your data governance tools can track lineage and enforce policies effectively for your chosen format.

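Finally, set up strong backup and disaster recovery plans tailored to your format. For Delta Lake, this could mean replicating the transaction log (_delta_log) across regions along with the data files it references. For Iceberg, it means replicating metadata files, manifest lists, and manifests together with the data, and keeping the catalog’s pointer to the current metadata file consistent. Regularly test your recovery process to ensure everything works when it counts.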

3. Plan for scale, while still prioritizing optimal performance

Scaling effectively means staying on top of a few key areas. For metadata management, set up monitoring dashboards to keep an eye on metadata size and query performance. Make sure you have alerts in place for when metadata operations go beyond normal thresholds, like when Iceberg manifest files or Delta Lake transaction logs get bigger than expected.
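One simple way to define “beyond normal” is relative to each metric’s own history. The Python sketch below flags any metadata metric that exceeds a multiple of its historical median. The metric names and the 2x factor are hypothetical; in practice you might collect the inputs from Iceberg’s `snapshots` and `manifests` metadata tables or from Delta Lake’s `DESCRIBE HISTORY` and `DESCRIBE DETAIL` output.

```python
from statistics import median
from typing import Dict, List


def metadata_alerts(history: Dict[str, List[float]], current: Dict[str, float],
                    factor: float = 2.0, min_history: int = 3) -> List[str]:
    """Flag metrics whose current value exceeds `factor` times their historical median."""
    alerts = []
    for metric, value in current.items():
        past = history.get(metric, [])
        if len(past) < min_history:
            continue  # not enough history yet to know what "normal" looks like
        baseline = median(past)
        if baseline > 0 and value > factor * baseline:
            alerts.append(f"{metric}: {value:g} exceeds {factor:g}x baseline {baseline:g}")
    return alerts


history = {
    "iceberg_manifest_count": [120, 130, 125],
    "delta_commits_since_checkpoint": [4, 6, 5],
    "planning_ms": [800, 900],
}
current = {"iceberg_manifest_count": 400, "delta_commits_since_checkpoint": 7, "planning_ms": 5000}
for alert in metadata_alerts(history, current):
    print(alert)  # iceberg_manifest_count: 400 exceeds 2x baseline 125
```

Using the median rather than the mean keeps a single past spike from inflating the baseline, and the `min_history` guard avoids noisy alerts on newly created tables.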

Think through your partition strategy based on how you query your data. If you’re often filtering by date ranges, time-based partitioning with the right level of detail could be a good move. Keep an eye on partition sizes, and tweak your approach if you spot any major skews or too many tiny data files.
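Skew and small-file problems are easy to detect from per-partition file sizes. The Python sketch below reports partitions where most files fall under a size threshold, or whose total size is far above the median partition. The 32 MiB and 10x thresholds are hypothetical defaults to tune for your workload, and gathering the file sizes (for example, from Iceberg’s `files` metadata table) is left out.

```python
from statistics import median
from typing import Dict, List

MiB = 1024 ** 2


def partition_health(file_sizes: Dict[str, List[int]],
                     small_file_bytes: int = 32 * MiB,
                     skew_factor: float = 10.0) -> Dict[str, List[str]]:
    """Flag partitions dominated by small files or far larger than the median partition.
    `file_sizes` maps a partition value to the sizes (bytes) of its data files."""
    totals = {p: sum(sizes) for p, sizes in file_sizes.items()}
    typical = median(totals.values()) if totals else 0
    report: Dict[str, List[str]] = {}
    for p, sizes in file_sizes.items():
        issues = []
        small = sum(1 for s in sizes if s < small_file_bytes)
        if sizes and small / len(sizes) > 0.5:
            issues.append(f"{small}/{len(sizes)} files under {small_file_bytes // MiB} MiB")
        if typical and totals[p] > skew_factor * typical:
            issues.append(f"{totals[p] / typical:.1f}x the median partition size")
        if issues:
            report[p] = issues
    return report
```

A partition flagged for small files is a candidate for compaction, while one flagged for skew may call for a finer time granularity or an extra bucketing column.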

Have clear data management and retention policies that work for both your business needs and system performance. Decide how long you really need to keep historical data and set up automated processes to clean out outdated snapshots. For instance, you might keep 30 days of history for operational tables before you delete it, but hold onto compliance-related data for longer.
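A retention policy like this boils down to a simple rule per table class. The Python sketch below picks which snapshots a cleanup job should expire: anything older than the class’s retention window, except the newest snapshots, which are always kept. That mirrors the `older_than` and `retain_last` arguments of Iceberg’s `expire_snapshots` procedure. The class names and the seven-year compliance window are hypothetical placeholders.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

# Hypothetical retention classes: 30 days matches the example above; the
# compliance window is a placeholder for your actual requirement.
RETENTION = {
    "operational": timedelta(days=30),
    "compliance": timedelta(days=365 * 7),
}


def snapshots_to_expire(snapshots: List[Tuple[int, datetime]], table_class: str,
                        now: datetime, retain_last: int = 1) -> List[int]:
    """IDs of snapshots older than the table class's retention window.
    The newest `retain_last` snapshots (at least the current one) are always kept."""
    cutoff = now - RETENTION[table_class]
    keep = max(retain_last, 1)
    ordered = sorted(snapshots, key=lambda s: s[1])  # oldest first
    return [sid for sid, created in ordered[:-keep] if created < cutoff]
```

Always keeping at least one snapshot matters: a table that hasn’t changed in months still needs its current snapshot, even though it is older than the window.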

Lastly, automate cleanup tasks to run during off-peak hours. These should handle both data and metadata cleanup, such as running VACUUM in Delta Lake to delete data files that are no longer referenced, or expiring old snapshots and removing orphan files in Iceberg. Monitor these tasks to make sure they run smoothly and don’t interfere with production systems and normal business operations.
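As a starting point for such a job, the Python sketch below builds the cleanup statements for each format and checks whether the current time falls in an off-peak window. `VACUUM ... RETAIN n HOURS` is Delta Lake’s SQL command, and `expire_snapshots` and `remove_orphan_files` are Iceberg’s Spark procedures (they require Iceberg’s SQL extensions). The catalog name, table name, and window are hypothetical; a scheduler would pass each statement to `spark.sql(...)`.

```python
from datetime import datetime, time, timedelta
from typing import List


def maintenance_sql(fmt: str, table: str, retain_hours: int, now: datetime,
                    catalog: str = "my_catalog") -> List[str]:
    """Cleanup statements for one table; `catalog` is a hypothetical Iceberg catalog name."""
    if fmt == "delta":
        # Deletes data files no longer referenced by any version inside the window.
        # Delta Lake rejects windows under 168 hours unless its safety check is disabled.
        return [f"VACUUM {table} RETAIN {retain_hours} HOURS"]
    if fmt == "iceberg":
        cutoff = (now - timedelta(hours=retain_hours)).strftime("%Y-%m-%d %H:%M:%S")
        return [
            # Expire snapshots older than the cutoff (always keeping the newest one).
            f"CALL {catalog}.system.expire_snapshots(table => '{table}', "
            f"older_than => TIMESTAMP '{cutoff}', retain_last => 1)",
            # Delete files in the table location that no snapshot references.
            f"CALL {catalog}.system.remove_orphan_files(table => '{table}')",
        ]
    raise ValueError(f"unknown table format: {fmt}")


def in_off_peak_window(now: datetime, start: time = time(1, 0), end: time = time(5, 0)) -> bool:
    """True if `now` falls in [start, end); handles windows that cross midnight."""
    t = now.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end
```

Gating the job on `in_off_peak_window` keeps the heavy file deletions away from peak query traffic; the same check works for windows that span midnight, such as 22:00 to 04:00.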

How the two open table formats stack up

Let’s recap the winners in each category:

- Schema Evolution: Apache Iceberg

- Partitioning: Tie

- Metadata Management: Apache Iceberg

- Transaction Support: Tie

- Query Engine Compatibility: Apache Iceberg

- Scalability: Apache Iceberg

Apache Iceberg: Best for cloud-native architectures with diverse query engines

Choose Apache Iceberg if you:

- Need to query the same tables from multiple engines

- Work with enormous datasets

- Require flexible schema evolution

- Want a vendor-neutral solution

- Plan to scale horizontally across different platforms

Delta Lake: Best for Spark-centric environments requiring tight integration


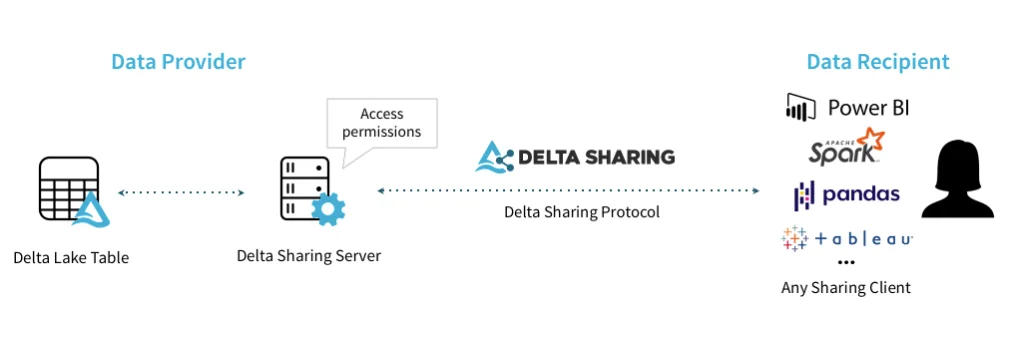

Delta Sharing enables secure data access, allowing providers to share Delta Lake tables with recipients using various analytics tools. - Source: Delta Lake.

Go with Delta Lake if you:

- Primarily use Spark for data processing

- Want deep integration with Databricks

- Need simpler setup and management

- Have smaller- to medium-sized datasets

- Require strong support for streaming operations

The choice between Apache Iceberg and Delta Lake ultimately depends on your specific use case, existing big data infrastructure, and future scalability needs.

Set your data architecture up for success

Delta Lake and Apache Iceberg are both evolving quickly, regularly releasing new features and updates. When deciding on a table format, consider your current functionality needs, your future data architecture goals, and how each format fits into your organization’s long-term data strategy.

While Iceberg wins more categories in our comparison, Delta Lake’s tight integration with Spark and the Databricks ecosystem makes it the better choice for teams already deeply invested in that stack.

No matter which table format you choose, managing your data infrastructure effectively is key to your success. Request an interactive product demo to see how Atlan can help you streamline your data infrastructure and get the most out of your chosen table format.

Apache Iceberg vs Delta Lake: Related reads

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
