Data Lineage for Databricks: Why is it Important & How to Set it Up?


💡 Automated data lineage for all workloads was one of the biggest updates announced to the Databricks Unity Catalog recently. In the same month (June 2022), Databricks also announced that the Unity Catalog was now generally available on AWS and Azure.

The Databricks Unity Catalog can now automatically track lineage down to the column level. Data lineage is key to understanding how data flows and to improving transparency across everything from ML (machine learning) model performance to regulatory compliance.

Modern data problems require modern solutions - Try Atlan, the data catalog of choice for forward-looking data teams! 👉 Book your demo today

Integrating Delta Lake with Unity Catalog can empower you to track data flow, manage metadata, and audit shared data effortlessly.

But before exploring the lineage capabilities of Unity Catalog and how it affects Databricks assets, let’s quickly understand the need for lineage.

Table of contents

- 3 Reasons why understanding the lineage of Databricks assets is important

- Databricks lineage: How does the Unity Catalog handle lineage?

- Going beyond Databricks lineage: Setting up a catalog of catalogs

- Atlan and Databricks: Better together

- Databricks data lineage: Related reads

3 Reasons why understanding the lineage of Databricks assets is important

Delta Lake, the open data storage layer of Databricks, provides the edge in scaling analytics and machine learning (ML) algorithms because of its ACID capabilities and lakehouse architecture.

However, it doesn't help data teams get complete context on their data by answering questions, such as:

- Where did a data set come from?

- How was it configured? What transformations did it undergo?

- How would changes to the original data affect the downstream applications (like dashboards)?

The key to addressing these questions lies in lineage mapping and impact analysis.
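To make lineage mapping and impact analysis concrete, here is a minimal sketch in plain Python. It is not how Unity Catalog or Atlan store lineage; it only models lineage as a directed graph, where an edge from a source to a target means the target is derived from the source. The asset names (`raw.orders`, `dashboard.revenue`, and so on) and the `LineageGraph` class are hypothetical.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Toy lineage graph: an edge (source -> target) means target is derived from source."""

    def __init__(self):
        self._downstream = defaultdict(set)
        self._upstream = defaultdict(set)

    def add_edge(self, source, target):
        self._downstream[source].add(target)
        self._upstream[target].add(source)

    def _walk(self, start, neighbors):
        # Breadth-first traversal that collects every asset reachable from start.
        seen, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            for nxt in neighbors.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def upstream_of(self, asset):
        """Answers: where did this data set come from?"""
        return self._walk(asset, self._upstream)

    def downstream_of(self, asset):
        """Answers: what is affected if this data set changes?"""
        return self._walk(asset, self._downstream)


# Hypothetical assets, for illustration only.
graph = LineageGraph()
graph.add_edge("raw.orders", "silver.orders_clean")
graph.add_edge("raw.customers", "silver.customers_clean")
graph.add_edge("silver.orders_clean", "gold.revenue_by_region")
graph.add_edge("silver.customers_clean", "gold.revenue_by_region")
graph.add_edge("gold.revenue_by_region", "dashboard.revenue")

print(sorted(graph.upstream_of("gold.revenue_by_region")))
print(sorted(graph.downstream_of("raw.orders")))
```

The upstream walk answers the "where did it come from" question, while the downstream walk is the impact analysis: it lists every asset, including the dashboard, that a change to `raw.orders` could affect.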

What is data lineage and why is it important?

Data lineage is a visualization of the journey of data across its entire lifecycle: from its origin, through its evolution, to its use.

Data lineage helps in:

- Tracking data evolution

- Supporting data compliance and audit

- Enabling observability for the data stack

Let’s see how.

Tracking data evolution

As part of multiple ML lifecycle experiments, the tables and files of the lakehouse architecture are subject to sampling, feature engineering, and training. This involves running different combinations and aggregations of variables. As a result, datasets keep evolving, along with the ML model, leading to multiple versions of data.

Let’s look at an example. A business wishes to investigate a specific version of an ML model because the prediction results are accurate. So, the data scientist must understand the features from that version and explore what drove the output for that corresponding run.

Databricks lineage traces the journey of Delta Lake assets and maintains logs of each version as and when transformations occur.

Supporting data compliance and audit

An organization becomes data-driven when it democratizes data for its teams.

However, ensuring compliance with regulations such as the GDPR, CCPA, and HIPAA requires organizations to document data permissions, storage, and use. Moreover, they must encrypt sensitive data to maintain its integrity and privacy.

This calls for end-to-end traceability of data flow and for monitoring access to tables, columns, and even rows. With Databricks lineage, data stewards can ensure traceability for all lakehouse data and conduct mock internal audits to prepare for regulatory compliance reviews.

Enabling observability for the data stack

Databricks lineage also helps you scan data flows to trace the root cause of an incident. For instance, if an ML model fails or predicts unexpected results, data scientists can easily trace data flow and transformation across tables and files to find out what went wrong.

Databricks lineage: How does the Unity Catalog handle lineage?

The features shipped with the latest update to the Databricks Unity Catalog lineage capabilities include the following:

- Automated run-time lineage: Unity Catalog automatically captures the lineage generated against all operations in Databricks

- Support for all workloads: The lineage capabilities of Unity Catalog extend beyond just SQL — to any language supported by Databricks

- Lineage at column level: Lineage is captured at a table, view, and column-level granularity

- Lineage for notebooks, workflows, and dashboards: Lineage is generated for diverse data assets, including notebooks, workflows, and dashboards
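To illustrate what column-level granularity adds over table-level lineage, here is a small sketch in plain Python. It does not reflect Unity Catalog's internal data model. The `ColumnRef` type, the table and column names, and both helper functions are hypothetical, chosen only to show that column-level edges can answer finer questions and can still be rolled up to table-level lineage.

```python
from typing import NamedTuple


class ColumnRef(NamedTuple):
    table: str
    column: str


# Hypothetical column-level edges: (source column, target column).
column_edges = [
    (ColumnRef("sales.orders", "amount"), ColumnRef("sales.daily_revenue", "revenue")),
    (ColumnRef("sales.orders", "order_date"), ColumnRef("sales.daily_revenue", "day")),
    (ColumnRef("sales.fx_rates", "rate"), ColumnRef("sales.daily_revenue", "revenue")),
]


def upstream_columns(target, edges):
    """Return the columns that feed directly into the target column."""
    return sorted(src for src, dst in edges if dst == target)


def table_level_edges(edges):
    """Roll column-level edges up to distinct (source table, target table) pairs."""
    return sorted({(src.table, dst.table) for src, dst in edges})


print(upstream_columns(ColumnRef("sales.daily_revenue", "revenue"), column_edges))
print(table_level_edges(column_edges))
```

Table-level lineage alone would only say that `sales.daily_revenue` depends on two tables. The column-level edges show that `revenue` specifically depends on `amount` and `rate`, which is the detail you need when a single column changes.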

Learn more about Unity Catalog and data lineage here.

However, there’s a catch — these capabilities are confined to just the lakehouse data assets. If the goal is to observe the lineage of the entire data ecosystem, it’s critical to have a modern data catalog solution in place.

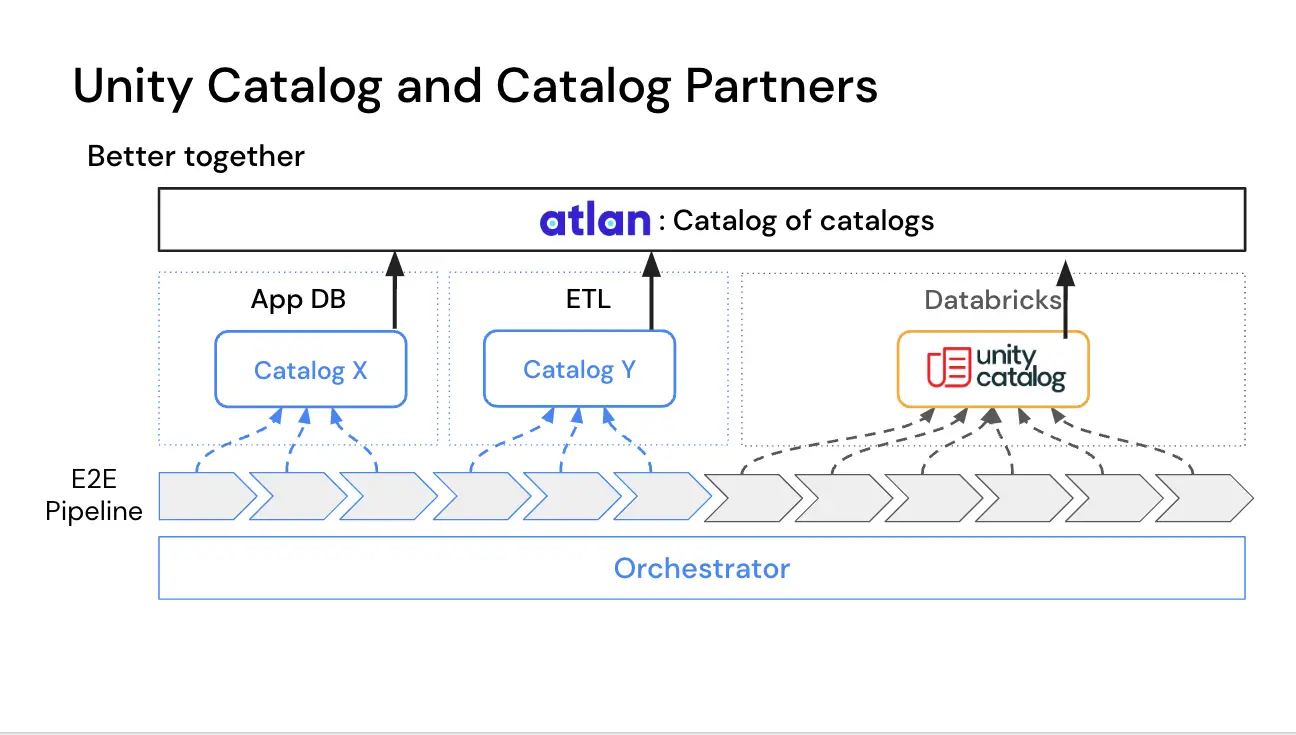

Going beyond Databricks lineage: Setting up a catalog of catalogs

Unity Catalog’s lineage capabilities map lakehouse data. However, the modern data stack has several other data sources, such as Snowflake, Amazon Redshift, Salesforce, Tableau, and more.

Each data tool comes with a dedicated data catalog, but for mapping lineage across all systems, you need a unified catalog of data catalogs.

Building a catalog of catalogs with Atlan to realize end-to-end visibility and lineage mapping.

💡 Atlan connects to Databricks Unity Catalog’s REST API to extract all relevant metadata from Databricks clusters and workspaces, powering discovery, governance, and insights inside Atlan.

This integration allows Atlan to generate column-level lineage for tables, views, and columns for all the jobs and languages that run on a Databricks cluster. By pairing this with metadata extracted from other tools in the data stack (e.g. BI, transformation, ELT), Atlan can create true cross-system lineage.
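For readers curious about what pulling lineage over a REST API might look like, here is a hedged sketch that only builds a request and does not send it. It is not Atlan's connector code. The endpoint path and the `table_name` and `include_entity_lineage` fields are assumptions based on Databricks' Data Lineage REST API documentation and should be verified there before use. The workspace URL, token, and table name are placeholders.

```python
import json
import urllib.request


def build_table_lineage_request(workspace_url, token, table_name):
    """Build (but do not send) a table-lineage request for a Databricks workspace."""
    # Assumed endpoint path; confirm it against the Databricks documentation.
    url = workspace_url.rstrip("/") + "/api/2.0/lineage-tracking/table-lineage"
    # Assumed request fields; confirm them against the Databricks documentation.
    body = json.dumps(
        {"table_name": table_name, "include_entity_lineage": True}
    ).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="GET",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values, for illustration only.
request = build_table_lineage_request(
    "https://example.cloud.databricks.com/",
    "dapi-EXAMPLE",
    "main.sales.orders",
)
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes the sketch easy to inspect without network access or real credentials. In practice, a managed integration handles authentication, paging, and retries for you.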

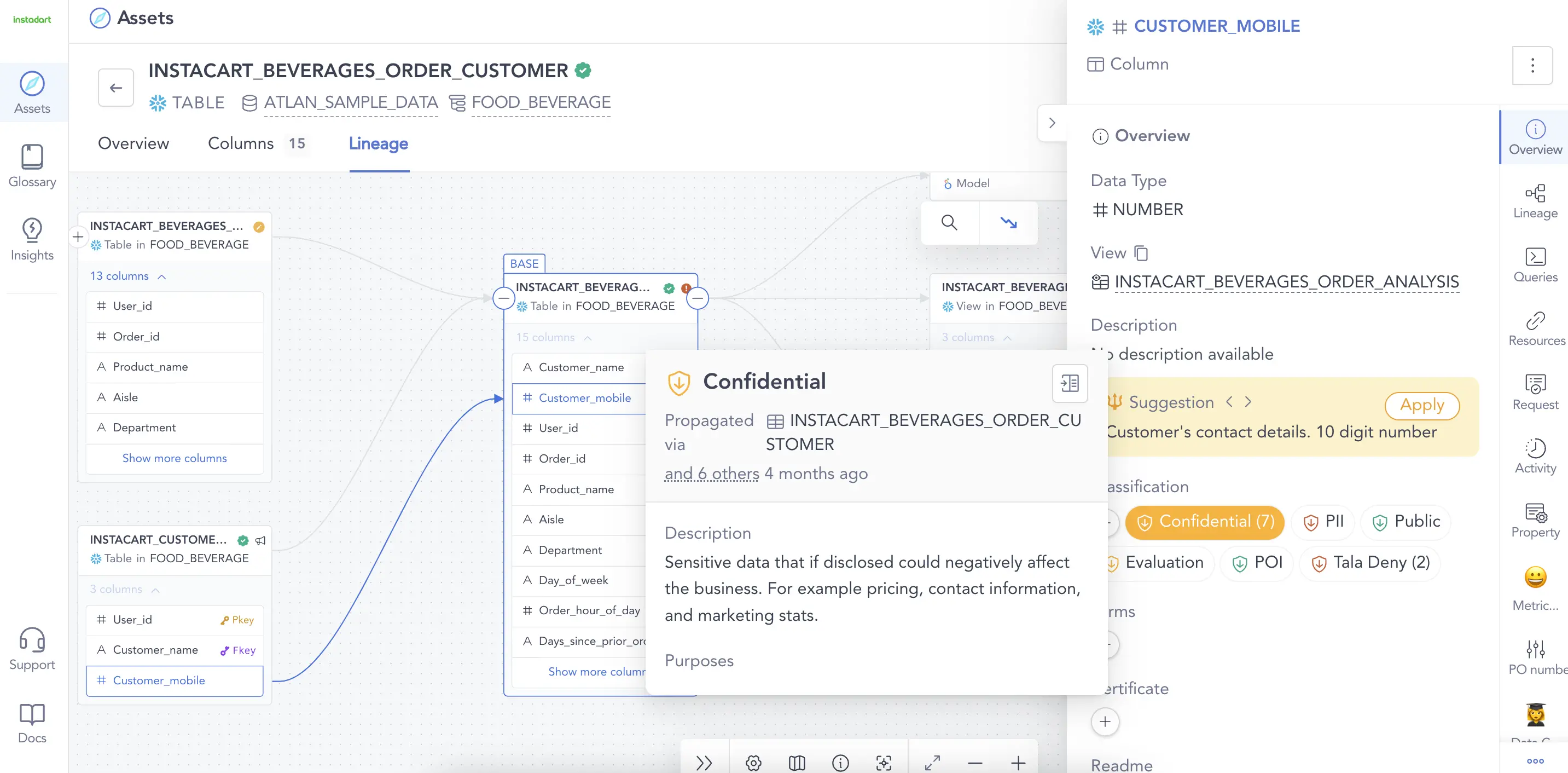

Some highlights of Atlan’s lineage capabilities include:

- Smooth, interactive, and unique lineage: Smooth navigation, interactive controls, and personalized views all build up to a pretty intuitive lineage experience in Atlan.

- Provision to act on lineage information: What happens after you’ve viewed lineage? What do you do with that information? Atlan has built-in, in-line actions alongside lineage to help you collaborate on lineage insights via Jira, Slack, and your other preferred tools.

- Automatic propagation: Atlan lets you automatically propagate sensitive tags, classifications, and descriptions along the lineage.

Atlan data lineage for Databricks data assets.
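The automatic propagation idea mentioned in the highlights above can be sketched in a few lines of plain Python. This is an illustrative model only, not Atlan's implementation. The `propagate_tag` function, the asset names, and the `PII` tag are all hypothetical.

```python
from collections import deque


def propagate_tag(edges, tagged_asset, tag, tags=None):
    """Copy a tag from one asset to every asset downstream of it."""
    # Work on a copy so the caller's tag mapping is not mutated.
    tags = {asset: set(values) for asset, values in (tags or {}).items()}

    downstream = {}
    for source, target in edges:
        downstream.setdefault(source, []).append(target)

    tags.setdefault(tagged_asset, set()).add(tag)
    queue = deque([tagged_asset])
    while queue:
        node = queue.popleft()
        for child in downstream.get(node, []):
            child_tags = tags.setdefault(child, set())
            if tag not in child_tags:  # also protects against cycles
                child_tags.add(tag)
                queue.append(child)
    return tags


# Hypothetical lineage edges: (source, target).
edges = [
    ("raw.customers", "silver.customers_clean"),
    ("silver.customers_clean", "gold.customer_360"),
    ("raw.products", "gold.product_catalog"),
]

result = propagate_tag(edges, "raw.customers", "PII")
print(result)
```

Tagging `raw.customers` as `PII` marks both tables derived from it, while `gold.product_catalog`, which has no lineage path from the tagged table, stays untagged.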

Atlan and Databricks: Better together

Ready to get started on the Databricks-Atlan integration? Awesome! The first step is to enable Unity Catalog in your Databricks account.

Step 1: Setting up Unity Catalog on Databricks

To create the catalog of the Databricks assets, you need:

- A Databricks account on the Premium plan or above

- An AWS Account — with abilities to create S3 buckets, IAM roles, IAM policies, and cross-account trust relationships

- A Databricks workspace to configure the Unity Catalog

Configuring Databricks

- Set up an S3 bucket and an IAM role to access that S3 bucket.

- Create a Metastore at the root of your S3 bucket. This is to capture the metadata generated by Unity Catalog.

- Attach the preferred workspace to your newly created Metastore.
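As a rough illustration of the first step, the sketch below generates an IAM policy document that grants read and write access to a single S3 bucket. The bucket name is hypothetical, and the action list is a common minimal set for bucket access rather than the authoritative list for Unity Catalog. The exact permissions and the cross-account trust relationship should be taken from the Databricks documentation.

```python
import json


def s3_access_policy(bucket_name):
    """Return an IAM policy document granting read/write access to one bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level actions apply to the keys inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/*",
            },
            {
                # Bucket-level actions apply to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": bucket_arn,
            },
        ],
    }


# Hypothetical bucket name, for illustration only.
policy = s3_access_policy("my-unity-catalog-bucket")
print(json.dumps(policy, indent=2))
```

Generating the policy from a function keeps the bucket name in one place, which helps if you set up separate buckets for different environments.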

To understand this aspect further, check out the Databricks documentation on getting started with Unity Catalog.

Better lineage with Atlan and Databricks Unity Catalog

Step 2: Integrating Unity Catalog with Atlan

To get a complete view of the lineage in the organization’s data ecosystem, you must bring the Databricks assets into Atlan. For this purpose, you need:

- A working Databricks workspace with Unity Catalog enabled

- An active Atlan subscription

Configuring Atlan for cross-system lineage

- Choose the extractor: From the top right corner of your screen, navigate to New and choose New Workflow → Miner. From the list of packages, select Databricks Lineage.

- Configuration: Choose the appropriate Databricks connection and save it.

- Extraction: Run the extractor by pressing the ‘Run’ button at the bottom. The runs of the extractor and the Databricks lineage should be visible once the run is complete.

To understand the integration process further, check out the Atlan documentation on extracting lineage from Databricks.

Bottom line

As mentioned earlier, the built-in catalogs of data tools such as Databricks cover only their own internal assets. If you want end-to-end lineage of your Databricks assets along with the rest of your data stack, you need a modern data catalog, such as Atlan, to work alongside Unity Catalog.

What would that look like in action?

Let’s assume that the financial transactional information comes in via an analytical processing solution. There are two different data types and sources to account for:

- Streaming data on financial transactions from various streams continuously pushed into Delta Lake (i.e., lakehouse)

- Dynamic data, such as currency exchange rates, stored in MongoDB (i.e., database) using a separate API-based connector

Creating fact and dimension tables that consider both data resources would involve building workflows that offer lineage mapping for Delta Lake and MongoDB.

If you only rely on Unity Catalog, you won’t get visibility on the data residing in MongoDB. However, when you integrate the Databricks catalog with Atlan, information from MongoDB and Delta Lake gets connected, enabling end-to-end data lineage across disparate data sources.
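The difference can be sketched with two sets of lineage edges in plain Python. This is a simplified model, not the output of Unity Catalog or Atlan. All asset names (`stream.transactions`, `mongodb.exchange_rates`, `delta.fact_transactions`, and so on) are hypothetical, and the split of edges between the two lists is an assumption made for illustration.

```python
def reachable_sources(edges, target):
    """Return every asset that the target depends on, directly or indirectly."""
    upstream = {}
    for source, dest in edges:
        upstream.setdefault(dest, set()).add(source)

    seen, stack = set(), [target]
    while stack:
        node = stack.pop()
        for parent in upstream.get(node, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


# Edges visible from the lakehouse catalog alone (hypothetical).
lakehouse_edges = [
    ("stream.transactions", "delta.bronze_transactions"),
    ("delta.bronze_transactions", "delta.fact_transactions"),
    ("delta.dim_currency", "delta.fact_transactions"),
]

# Edges contributed by metadata from outside the lakehouse (hypothetical).
external_edges = [
    ("fx_api.exchange_rates", "mongodb.exchange_rates"),
    ("mongodb.exchange_rates", "delta.dim_currency"),
]

lakehouse_only = reachable_sources(lakehouse_edges, "delta.fact_transactions")
combined = reachable_sources(lakehouse_edges + external_edges, "delta.fact_transactions")

print(sorted(combined - lakehouse_only))
```

With only the lakehouse edges, the trail for `delta.fact_transactions` stops at `delta.dim_currency`. Once the external edges are merged in, the same traversal reaches the MongoDB collection and the API feeding it.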


Databricks data lineage: Related reads

- Metadata management for Databricks data assets

- Data lineage 101: importance, use cases, and its role in governance

- Column-level lineage for Databricks

- Benefits of data lineage

- Databricks data governance: Overview, setup, and tools
