What is DataOps? - DataOps Framework & 9 Key DataOps Principles


Quick answer:

Want a quick look at the highlights of this article? We’ve got you covered with this 2-minute summary:

- DataOps is a data management approach — a set of practices, processes, and technologies — to activate data for business value. It’s built on the principles of agile methodologies, automation, and collaboration.

- The article explains the fundamental ideas behind DataOps and explores how it differs from DevOps. It also covers the 9 DataOps principles and a DataOps framework.

- Looking for a data catalog that enables DataOps? Make sure to include Atlan — a Leader in The Forrester Wave: Enterprise Data Catalogs, Q3 2024 report.

DataOps is a holistic approach to data management that goes beyond technology and aims to combine agile methodologies, automation, and collaboration across data professionals to improve the quality, speed, and business value of data-related activities.

DataOps, a term popularized by Andy Palmer, co-founder and CEO of Tamr, has quickly gained traction in data management and analytics. In a 2015 article, Palmer explained that DataOps is not just a buzzword but a critical approach to managing data in today’s complex, data-driven world.

Palmer wrote:

“I believe that it’s time for data engineers and data scientists to embrace a similar (to DevOps) new discipline — let’s call it “DataOps” — that at its core addresses the needs of data professionals on the modern internet and inside the modern enterprise.”

As businesses and organizations continue to collect and generate vast amounts of data, the need for effective data management has never been greater. DataOps, with its focus on collaboration, automation, and continuous improvement, offers a solution to this growing problem.

By bringing together data engineers, analysts, and other data professionals, DataOps aims to streamline the process of collecting, storing, and analyzing data, enabling organizations to make more informed, data-driven decisions.

Here, we will explain what exactly DataOps is, retrace its emergence, walk through the fundamental DataOps principles and a prescribed DataOps framework, and more.

Table of Contents #

- What is DataOps?

- Emergence of DataOps

- Why do we need DataOps?

- Four fundamental ideas behind DataOps

- DataOps Vs. DevOps

- 9 Principles of DataOps

- DataOps framework

- What is DataOps: Related reads

What is DataOps? #


According to Michele Goetz of Forrester, DataOps is “the ability to enable solutions, develop data products, and activate data for business value across all technology tiers, from infrastructure to experience.” This definition highlights the focus of DataOps on enabling organizations to extract value from their data by leveraging a range of technologies and approaches.

Another working definition of DataOps, proposed in a research paper by Julian Ereth, is “a set of practices, processes, and technologies that combines an integrated and process-oriented perspective on data with automation and methods from agile software engineering to improve quality, speed, and collaboration and promote a culture of continuous improvement.” This definition highlights the emphasis on collaboration and continuous improvement in DataOps, as well as the role of automation in achieving these goals.

Emergence of DataOps #

According to Wikipedia, the term DataOps was first introduced by Lenny Liebmann in a blog post on the IBM Big Data & Analytics Hub titled “3 reasons why DataOps is essential for big data success” on June 19, 2014.

The post discussed the increasing need for a more agile and efficient approach to managing big data in order to extract value from it and proposed the term DataOps as a solution to this problem.

DataOps draws on the principles of Agile, DevOps, and Lean Manufacturing and applies them to the management of data teams, processes, and people.

Since its introduction, the term DataOps has been popularized by data management experts such as Andy Palmer of Tamr and Steph Locke, who have described DataOps as a core concept that addresses the needs of data professionals in the modern enterprise.

The emergence of DataOps can be attributed to several factors:

- The growing complexity of managing data in the modern enterprise

- Shift towards agile and lean methodologies in data

- Need to break the silos between Data Engineers and Data Analysts

- Need for data teams to keep pace with rapidly changing requirements

Let’s expand on those factors in the next sections.

1. Growing complexity of managing data in the modern enterprise #

As managing data in the modern enterprise grew more complex, driven by the explosion of big data and the proliferation of data sources, it became increasingly difficult to collect, store, and analyze data efficiently, accurately, and in a timely way. Traditional data management practices, focused on data warehousing and ETL, were no longer sufficient for this new reality.

One of the main reasons for the emergence of DataOps is therefore the sheer amount of data generated by businesses and organizations, which has created a growing need for data management practices that let organizations extract value from their data and make informed, data-driven decisions.

2. Shift towards agile and lean methodologies in data #

Another factor that has contributed to the emergence of DataOps is the shift towards agile and lean methodologies in software development in general and in data projects in particular.

The Agile and Lean methodologies, which emphasize collaboration, continuous improvement, and flexibility, have been adopted by many organizations to improve their software development process. This has led to the application of similar approaches to data management and analytics, resulting in DataOps.

3. Need to break the silos between Data Engineers and Data Analysts #

DataOps also emerged as a way to bridge the gap between data engineers and data analysts, who often worked in silos and had different priorities and objectives.

DataOps aims to bring these teams together, fostering a culture of collaboration and continuous improvement. This enables data professionals to work together more effectively, improving the quality and timeliness of data-related activities.

4. Need for data teams to keep pace with rapidly changing requirements #

Finally, DataOps is also driven by the need to keep pace with the rapidly changing requirements of the business.

The ability to quickly and easily update data pipelines can be critical in today’s fast-paced, data-driven world, where organizations need to be able to quickly respond to changes in the market, customer behavior, and other factors that can impact their bottom line. DataOps enables organizations to be agile, lean, and responsive to changing business needs, which is critical for long-term success.

A research paper titled “Good practices for the adoption of DataOps in the software industry” by Manuel Rodriguez et al. explains that DataOps emerged as a natural response to the increased demand for, and complexity of, data-related activities in organizations, which require better collaboration and communication between teams, more automation, and more focus on the end user. In this context, DataOps provides a way to manage data that improves data quality and integrates it into the software development process.


Why DataOps? #

The following are the main reasons organizations are investing in DataOps:

- Data growing at an exponential rate

- Prevent duplicate reporting and analysis

- Poor data quality

- Businesses need to be more responsive to changing requirements

- Opportunity to increase revenue

- Opportunity to save costs

1. Data growing at an exponential rate #

According to a study by IDC, the global datasphere is expected to grow to 175 zettabytes by 2025. This huge amount of data can be difficult to manage, store, and analyze. DataOps can help organizations navigate this sea of data by streamlining the process of collecting, storing, and analyzing it, enabling them to extract more value from their data.

2. Prevent duplicate reporting and analysis #

Gartner estimates that 85% of big data projects fail to meet all their objectives due to a lack of collaboration and siloed data. When teams work in silos, they also often end up producing duplicate reports and analyses. DataOps promotes collaboration across data professionals, breaking down silos and enabling teams to work more effectively.

3. Poor data quality #

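Poor data quality is a significant problem for many organizations. DataOps approaches aim to improve data quality by applying continuous integration and delivery practices to data, such as automatically testing pipelines and data before changes reach production.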

4. Businesses need to be more responsive to changing requirements #

DataOps enables organizations to be more agile and responsive to changing business needs, improving the speed at which they can update data pipelines.

This can be crucial in today’s fast-paced business environment, where organizations need to quickly respond to changes in the market, customer behavior, and other factors that can impact their bottom line.

5. Opportunity to increase revenue #

DataOps can improve the quality, speed, and collaboration of data-related activities, which helps organizations make more informed, data-driven decisions and extract more value from their data.

6. Opportunity to save costs #

A properly deployed DataOps program can reduce data management costs. By streamlining the process of collecting, storing, and analyzing data, DataOps helps organizations cut wasted effort and increase efficiency.

What are the four fundamental ideas behind DataOps? #

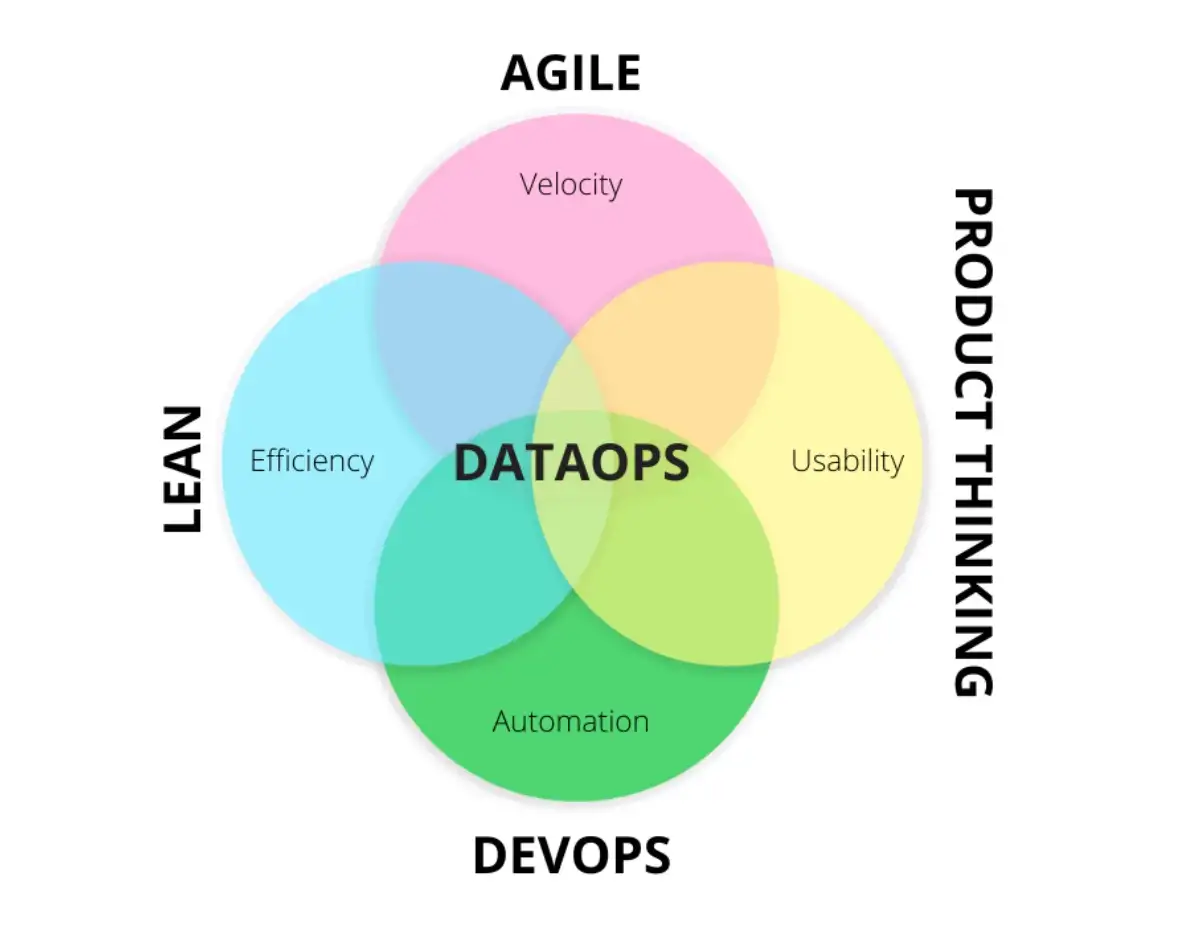

DataOps is built on four fundamental ideas: Lean, Product Thinking, Agile, and DevOps. These ideas provide the foundation for the DataOps approach, which aims to improve the quality, speed, and collaboration of data-related activities in organizations.

Four fundamental Ideas behind DataOps - Source: The Rise of DataOps.

1. Lean #

The Lean approach, which is based on the principles of Lean Manufacturing, emphasizes minimizing waste and maximizing efficiency. In the context of DataOps, this means minimizing the time and resources required to collect, store, and analyze data, while maximizing the value extracted from the data.

Lean thinking encourages data teams to focus on value and continuously improve the data management process. This can be achieved by using tools such as Value Stream Mapping, which helps to identify bottlenecks and inefficiencies in the data management process.

The Lean approach can also help organizations save costs by streamlining processes and using resources more efficiently.
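Value Stream Mapping is mainly a visual, workshop-based exercise, but the arithmetic behind it is simple. The minimal Python sketch below, with hypothetical stage names and durations, computes the total lead time for a request, the process cycle efficiency (active work time divided by lead time), and the stage with the longest wait, which is often where the bottleneck sits.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    process_hours: float  # time spent actively working on the request
    wait_hours: float     # time the request waits in a queue before this stage


def analyze(stream: list[Stage]) -> dict:
    """Summarize a value stream: lead time, process cycle efficiency, bottleneck."""
    lead_time = sum(s.process_hours + s.wait_hours for s in stream)
    process_time = sum(s.process_hours for s in stream)
    bottleneck = max(stream, key=lambda s: s.wait_hours)
    return {
        "lead_time_hours": lead_time,
        "process_cycle_efficiency": process_time / lead_time,
        "bottleneck": bottleneck.name,
    }


# Hypothetical value stream for delivering a new metric to a dashboard.
stream = [
    Stage("request intake", process_hours=1, wait_hours=16),
    Stage("source access approval", process_hours=2, wait_hours=40),
    Stage("pipeline development", process_hours=12, wait_hours=8),
    Stage("review and deploy", process_hours=3, wait_hours=24),
]
print(analyze(stream))
```

In this made-up stream, only about 17% of the 106-hour lead time is active work, and the longest wait happens before source access is approved.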

2. Product Thinking #

Product Thinking is another fundamental idea behind DataOps. This approach puts the emphasis on the customer and the value they receive. In the context of DataOps, this means considering the needs of the business and the customers when developing data products.

Treating data as a product also reduces the cost of discovering, understanding, trusting, and ultimately using quality data. This enables organizations to extract more value from their data and make more informed data-driven decisions.

3. Agile #

DataOps is an agile approach to data management, which means it emphasizes flexibility, speed, and adaptability. Agile methods, such as Scrum and Kanban, are used to manage the data management process, enabling organizations to quickly respond to changing requirements and deliver value faster.

This enables data teams to break data management work into smaller increments that can be delivered within short, fixed time boxes, called sprints in Scrum.

Working iteratively and incrementally lets data teams make progress quickly and adapt easily to changing requirements.

Adopting an Agile approach to data management also means working more collaboratively. Agile methods such as Scrum promote regular meetings and reviews, where team members can provide feedback and share information.

4. DevOps #

DevOps is the last fundamental idea behind DataOps. This is a set of practices that aim to bring together development and operations teams, enabling them to work more effectively.

DevOps in DataOps aims to increase the speed and quality of the delivery of data products. This can be achieved through a number of practices such as Continuous Integration, Continuous Deployment, and Continuous Testing. These practices help to automate and streamline the data management process, enabling data teams to quickly and easily update data pipelines.
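Continuous Testing for data often starts with ordinary unit tests on transformation logic, run by a CI server on every change. The sketch below uses Python’s built-in `unittest`; the `deduplicate_orders` transformation and its fields are hypothetical examples, not part of any specific DataOps tool.

```python
import unittest


def deduplicate_orders(rows):
    """Keep only the latest version of each order, keyed by order_id."""
    latest = {}
    for row in rows:
        current = latest.get(row["order_id"])
        # ISO-8601 date strings sort chronologically, so string comparison works here.
        if current is None or row["updated_at"] > current["updated_at"]:
            latest[row["order_id"]] = row
    return sorted(latest.values(), key=lambda r: r["order_id"])


class DeduplicateOrdersTest(unittest.TestCase):
    def test_keeps_latest_version(self):
        rows = [
            {"order_id": 1, "updated_at": "2024-01-01", "status": "created"},
            {"order_id": 1, "updated_at": "2024-01-02", "status": "shipped"},
            {"order_id": 2, "updated_at": "2024-01-01", "status": "created"},
        ]
        result = deduplicate_orders(rows)
        self.assertEqual([r["status"] for r in result], ["shipped", "created"])

    def test_empty_input(self):
        self.assertEqual(deduplicate_orders([]), [])


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests finish,
    # which is convenient when the file is run interactively.
    unittest.main(argv=["ci"], exit=False)
```

If a change breaks the deduplication rule, the test fails in CI before the pipeline is deployed.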

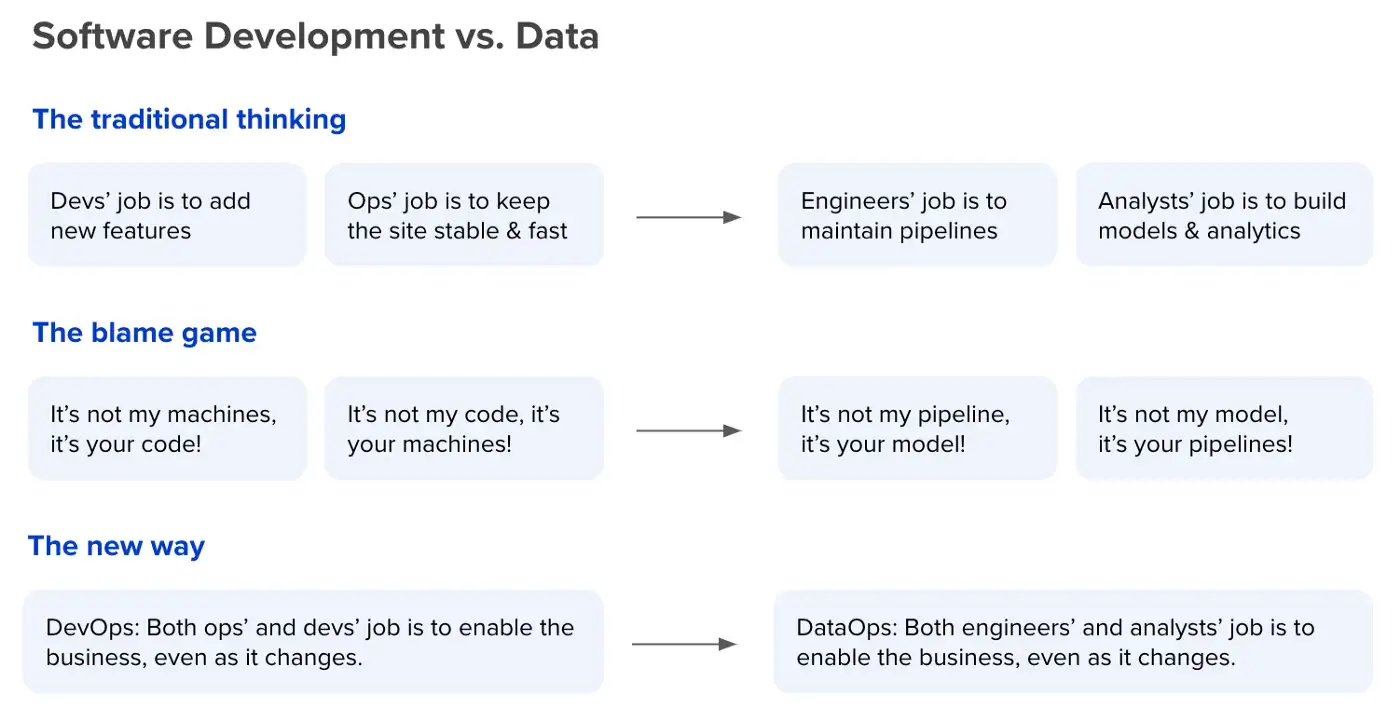

DataOps Vs. DevOps: What is the difference? #

DataOps and DevOps may sound similar, but they serve different purposes. DevOps deals with the swift release of code and software, ensuring it runs seamlessly in the production environment. With DevOps practices in place, Data Analysts and Scientists can deploy their pipelines and Machine Learning models on their own.

DataOps, on the other hand, focuses on managing and optimizing the flow and use of data within an organization. It is not just a technology improvement but a holistic view of how the company handles the lifecycle of the data it manages.

DataOps vs DevOps - Source: Rise of DataOps.

The 9 principles of DataOps #

As suggested by Andy Palmer et al. in the eBook Getting DataOps Right, the following key principles define a DataOps ecosystem:

- Highly automated

- Open

- Take advantage of best-of-breed tools

- Use Table(s) In/Table(s) Out protocols

- Have layered interfaces

- Track data lineage

- Feature deterministic, probabilistic, and humanistic data integration

- Combine both aggregated and federated methods of storage and access

- Process data in both batch and streaming modes

Here is a short summary of each principle:

1. Highly automated #

DataOps emphasizes automation as a key principle. This includes automating repetitive tasks such as running data pipelines, data quality checks, and monitoring. For example, ready-made templates for pipelines, or data quality check suites covering the most common checks, can speed up data management processes, reduce errors, and improve efficiency.
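As a rough illustration of reusable check suites, the Python sketch below builds a few generic checks and a `standard_suite` template that any table can reuse. All names and sample rows are hypothetical; in practice, teams often rely on a dedicated data quality tool rather than hand-written checks.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Check = Callable[[List[Row]], List[str]]  # a check returns a list of failure messages


def not_null(column: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        bad = sum(1 for r in rows if r.get(column) is None)
        return [f"{column}: {bad} null value(s)"] if bad else []
    return check


def unique(column: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        seen, dupes = set(), set()
        for r in rows:
            value = r.get(column)
            if value in seen:
                dupes.add(value)
            seen.add(value)
        return [f"{column}: duplicate values {sorted(dupes)}"] if dupes else []
    return check


def in_range(column: str, low: float, high: float) -> Check:
    def check(rows: List[Row]) -> List[str]:
        bad = [r[column] for r in rows
               if r.get(column) is not None and not low <= r[column] <= high]
        return [f"{column}: {len(bad)} value(s) outside [{low}, {high}]"] if bad else []
    return check


def standard_suite(key: str, required: List[str]) -> List[Check]:
    """Hypothetical template: a unique, non-null key plus non-null required columns."""
    return [unique(key)] + [not_null(c) for c in [key, *required]]


def run_suite(rows: List[Row], checks: List[Check]) -> List[str]:
    failures: List[str] = []
    for check in checks:
        failures.extend(check(rows))
    return failures


orders = [
    {"order_id": 1, "customer_id": 10, "amount": 25.0},
    {"order_id": 2, "customer_id": None, "amount": 40.0},
    {"order_id": 2, "customer_id": 11, "amount": -5.0},
]
checks = standard_suite("order_id", ["customer_id", "amount"]) + [in_range("amount", 0, 10_000)]
for failure in run_suite(orders, checks):
    print(failure)
```

New tables get the standard checks for free, and only table-specific rules such as the `amount` range need to be written by hand.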

2. Open #

DataOps embraces open-source technologies and standards, making it easier for organizations to adopt and integrate their solutions. Open source solutions allow flexibility in choosing the best-fit tool, reduce vendor lock-in, and help create a community of users who can in turn support and improve the solutions.

3. Take advantage of best-of-breed tools #

DataOps is technology agnostic: it enables organizations to pick the best tool for a specific job. For example, a batch process will use different tools than a real-time data pipeline, so that each kind of data is processed in the best way.

4. Use Table(s) In/Table(s) Out protocols #

DataOps uses Table(s) In/Table(s) Out protocols, popularized as Data Contracts by Andrew Jones, to simplify data integration: each step consumes one or more tables and produces one or more tables through a well-defined interface. This allows for faster integration and a clear separation of responsibilities between different data management tasks.
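Palmer et al. do not prescribe a particular implementation. One way to make the idea concrete, assuming tables are lists of Python dictionaries, is a step function that validates its input against a contract, transforms it, and validates its output. The `Contract` class, table names, and columns below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

Table = List[Dict[str, object]]


@dataclass(frozen=True)
class Contract:
    """Hypothetical data contract: the columns and Python types a table must have."""
    name: str
    columns: Dict[str, type]

    def validate(self, table: Table) -> None:
        for i, row in enumerate(table):
            missing = self.columns.keys() - row.keys()
            if missing:
                raise ValueError(f"{self.name} row {i}: missing columns {sorted(missing)}")
            for col, expected in self.columns.items():
                if not isinstance(row[col], expected):
                    raise TypeError(f"{self.name} row {i}: {col} should be {expected.__name__}")


RAW_ORDERS = Contract("raw_orders", {"order_id": int, "amount_cents": int})
REVENUE_SUMMARY = Contract("revenue_summary", {"total_eur": float})


def revenue_step(orders: Table) -> Table:
    """A Table In/Table Out step: validate input, transform, validate output."""
    RAW_ORDERS.validate(orders)
    result = [{"total_eur": sum(r["amount_cents"] for r in orders) / 100}]
    REVENUE_SUMMARY.validate(result)
    return result


print(revenue_step([{"order_id": 1, "amount_cents": 1250}, {"order_id": 2, "amount_cents": 750}]))
```

Because every step checks both sides of its interface, a producer that drops or retypes a column fails at the boundary instead of silently corrupting downstream tables.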

5. Have layered interfaces #

DataOps supports a layered architecture that allows for different levels of abstraction (raw data vs aggregated data as one example), providing a clear separation of concerns. This allows data professionals to work more efficiently and to more easily update and maintain data pipelines and data products.
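A minimal sketch of layered interfaces, assuming three hypothetical layers (raw, cleaned, aggregated) implemented as plain Python functions: each layer reads only from the one directly below it, and consumers such as dashboards read only from the top layer.

```python
from collections import defaultdict

# Raw layer: data exactly as it arrives from the source (hypothetical events).
RAW_EVENTS = [
    {"user": " Alice ", "country": "es", "spend": "12.50"},
    {"user": "bob", "country": "FR", "spend": "7"},
    {"user": "alice", "country": "ES", "spend": "3.50"},
]


def cleaned_layer(raw):
    """Cleaned layer: normalized user names, country codes, and numeric types."""
    return [
        {
            "user": e["user"].strip().lower(),
            "country": e["country"].upper(),
            "spend": float(e["spend"]),
        }
        for e in raw
    ]


def aggregated_layer(cleaned):
    """Aggregated layer: total spend per country, the interface dashboards read."""
    totals = defaultdict(float)
    for e in cleaned:
        totals[e["country"]] += e["spend"]
    return dict(sorted(totals.items()))


print(aggregated_layer(cleaned_layer(RAW_EVENTS)))  # {'ES': 16.0, 'FR': 7.0}
```

Because consumers depend only on the aggregated layer’s output, the cleaning rules can change without affecting them, as long as that output keeps the same shape.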

6. Track data lineage #

DataOps emphasizes the importance of tracking data lineage, which allows organizations to understand how data was collected, processed, and used. This helps organizations comply with regulations and policies.
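Lineage is usually captured by a catalog or orchestration tool, but underneath it is a directed graph. The sketch below, with hypothetical dataset names, stores lineage as a Python dictionary and walks it in both directions: upstream to see where a dataset comes from, and downstream to see which datasets a change would affect.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each dataset maps to the datasets it is derived from.
LINEAGE: Dict[str, List[str]] = {
    "revenue_dashboard": ["daily_revenue"],
    "daily_revenue": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "churn_model": ["orders_clean", "customers_raw"],
}


def upstream(dataset: str) -> Set[str]:
    """All datasets that `dataset` depends on, directly or indirectly (breadth-first)."""
    seen: Set[str] = set()
    queue = deque(LINEAGE.get(dataset, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.add(parent)
            queue.extend(LINEAGE.get(parent, []))
    return seen


def downstream(dataset: str) -> Set[str]:
    """All derived datasets affected if `dataset` changes (impact analysis)."""
    return {d for d in LINEAGE if dataset in upstream(d)}


print(sorted(upstream("revenue_dashboard")))
print(sorted(downstream("orders_raw")))
```

Upstream traversal answers "how was this number produced?", while downstream traversal answers "what breaks if this source changes?".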

7. Feature deterministic, probabilistic, and humanistic data integration #

DataOps supports multiple types of data integration, which allows organizations to choose the best approach for a specific data management task. In deterministic integration, records are matched using a set of predetermined rules; in probabilistic integration, they are matched based on a likelihood score; and in humanistic integration, a human expert decides whether they match.
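The three integration styles can be combined in a single matching step. In this hypothetical sketch for customer records, an exact email match is the deterministic rule, a name similarity ratio from Python’s `difflib.SequenceMatcher` stands in for a real probabilistic model, and pairs in an uncertain score band are routed to a human reviewer. The thresholds are illustrative assumptions.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())


def classify_pair(a: dict, b: dict, accept: float = 0.9, review: float = 0.7) -> str:
    """Decide whether two customer records describe the same customer.

    The thresholds are illustrative and would need tuning on real data.
    """
    # Deterministic: a predetermined rule, here an exact match on normalized email.
    if a["email"] and normalize(a["email"]) == normalize(b["email"]):
        return "match (rule)"
    # Probabilistic: a similarity score between 0 and 1 computed on the names.
    score = SequenceMatcher(None, normalize(a["name"]), normalize(b["name"])).ratio()
    if score >= accept:
        return "match (score)"
    # Humanistic: uncertain pairs are queued for a human expert instead of guessed.
    if score >= review:
        return "send to human review"
    return "no match"


pairs = [
    ({"name": "Ana Garcia", "email": "ANA@example.com"}, {"name": "A. Garcia", "email": "ana@example.com"}),
    ({"name": "Jon Smith", "email": ""}, {"name": "jon  smith", "email": "jsmith@example.com"}),
    ({"name": "Jane Doe", "email": "jane@a.example"}, {"name": "Janet Dow", "email": "jdoe@b.example"}),
    ({"name": "Jane Doe", "email": "jane@a.example"}, {"name": "Peter Pan", "email": "peter@c.example"}),
]
for a, b in pairs:
    print(a["name"], "|", b["name"], "->", classify_pair(a, b))
```

The rule handles the easy, certain cases cheaply, the score handles fuzzy ones, and human effort is spent only where the machine is unsure.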

8. Combine both aggregated and federated methods of storage and access #

DataOps enables organizations to choose the best method of storage and access for a specific data management task. Aggregated storage means the data is stored in one place, typically a Data Warehouse, Data Lake, or Data Lakehouse; federated storage means data is stored in multiple places and accessed through a single endpoint.
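To make the federated pattern concrete, the sketch below uses two in-memory SQLite databases as stand-ins for separate systems and a hypothetical `FederatedCustomers` class as the single access point. The same query is sent to each source and the results are merged, while the data itself stays where it is.

```python
import sqlite3
from typing import Dict, List, Tuple


def make_store(rows: List[Tuple[int, str, str]]) -> sqlite3.Connection:
    """Create an in-memory SQLite database standing in for one storage system."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, region TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


class FederatedCustomers:
    """Single access point that queries several stores and merges the results."""

    def __init__(self, stores: Dict[str, sqlite3.Connection]):
        self.stores = stores

    def by_region(self, region: str) -> List[tuple]:
        results = []
        for source, conn in self.stores.items():
            # Each source filters its own data; only matching rows come back.
            query = "SELECT id, name FROM customers WHERE region = ?"
            for row in conn.execute(query, (region,)):
                results.append((source, *row))
        return sorted(results)


crm = make_store([(1, "Ana", "EU"), (2, "Ben", "US")])
web_shop = make_store([(7, "Chloe", "EU")])
federated = FederatedCustomers({"crm": crm, "shop": web_shop})
print(federated.by_region("EU"))  # [('crm', 1, 'Ana'), ('shop', 7, 'Chloe')]
```

An aggregated approach would instead copy both `customers` tables into one warehouse table ahead of time and query that single copy.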

9. Process data in both batch and streaming modes #

DataOps is designed to process data in both batch and streaming modes, enabling organizations to choose the best approach for a specific task. Batch processing suits large volumes of data for analytics, while stream processing suits real-time data pipelines, for example, serving recommendations in an e-commerce business.
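One common way to support both modes is to write the business logic once and wrap it in a batch runner and a streaming runner. In this sketch, the `enrich` rule and its threshold are hypothetical, and a Python generator stands in for a real message-queue consumer.

```python
from typing import Dict, Iterable, Iterator, List


def enrich(order: Dict) -> Dict:
    """Shared business logic: flag high-value orders (threshold is hypothetical)."""
    return {**order, "high_value": order["amount"] >= 100}


def run_batch(orders: List[Dict]) -> List[Dict]:
    """Batch mode: process a complete, bounded dataset in one go, e.g. nightly."""
    return [enrich(o) for o in orders]


def run_stream(events: Iterable[Dict]) -> Iterator[Dict]:
    """Streaming mode: process each event as soon as it arrives."""
    for event in events:
        yield enrich(event)


def live_events() -> Iterator[Dict]:
    # Stand-in for a message-queue consumer; a real one would wait for new events.
    yield {"id": 3, "amount": 120}
    yield {"id": 4, "amount": 15}


history = [{"id": 1, "amount": 250}, {"id": 2, "amount": 40}]
print(run_batch(history))
for result in run_stream(live_events()):
    print(result)
```

Sharing `enrich` keeps batch and streaming results consistent instead of maintaining two diverging implementations.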

DataOps framework #

To build a successful DataOps program in your organization, you can follow this DataOps framework:

- Step 1: Define your goals

- Step 2: Get top management sponsorship

- Step 3: Create cross-functional product teams

- Step 4: Form a data platform team/department

- Step 5: Create a DataOps Enablement function

- Step 6: Implement Agile methodologies

- Step 7: Automate repetitive tasks

- Step 8: Implement a Data Governance program

- Step 9: Continuously measure and monitor


Step 1: Define your goals #

Before you begin implementing a DataOps framework, it’s important to define the goals and objectives of your data strategy, including which metrics you are going to use to measure success. This will help you to understand what you want to achieve and how you’re going to measure the success of the process and technologies you implement.

Step 2: Get top management sponsorship #

For your DataOps program to succeed, it is key to have alignment and buy-in from senior managers in the organization.

Step 3: Create cross-functional product teams #

DataOps is a collaborative approach, so it’s important that your Product and Technology organization works in cross-functional teams that include, at a minimum, Product Managers, Engineering Managers, UX Designers, and Data Analysts.

Depending on the complexity of the domain the cross-functional team owns, you might also need Data Scientists and Data Engineers in the team. This will help to ensure that everyone is working towards the same goals and that all perspectives are taken into account.

Step 4: Form a Data Platform team/department #

Form a Data Platform team/department that is responsible for the overall design, development, and maintenance of the platform where data will be stored and used.

Step 5: Create a DataOps Enablement function #

Creating a DataOps Enablement function is crucial for organizations that aim to make the most of their data and become more data-driven. This function focuses on the tools, processes, and culture that make data management more efficient and effective.

Improving the productivity of professionals working with data is one of the key goals of a DataOps Enablement function. With this function in place, other teams can focus on the tasks that deliver the most value to the organization.

Step 6: Implement Agile methodologies #

Implement Agile methodologies to adapt to changing requirements.

Step 7: Automate repetitive tasks #

Automate repetitive tasks to improve efficiency and reduce costs by removing duplicated efforts.

Step 8: Implement a Data Governance program #

Data Governance refers to the policies, processes, and tools that organizations use to manage and protect their data.

In the context of DataOps, this includes not only ensuring that data is used in compliance with relevant laws and regulations, but also aligning definitions across cross-functional teams and maintaining a source of truth for the most important domains of the business.

Step 9: Build a culture of continuous improvement #

Continuously measure and monitor your DataOps program against the goals and metrics defined in Step 1, and use the results to drive ongoing improvements.
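As a minimal illustration, the goals from Step 1 can be recorded as explicit targets and checked every period. The metric names, targets, and observed values below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Metric:
    name: str
    target: float
    higher_is_better: bool

    def status(self, observed: float) -> str:
        """Compare an observed value with the target in the right direction."""
        if self.higher_is_better:
            on_track = observed >= self.target
        else:
            on_track = observed <= self.target
        return "on track" if on_track else "needs attention"


# Hypothetical goals defined in Step 1, paired with this month's observed values.
GOALS: List[Tuple[Metric, float]] = [
    (Metric("pipeline success rate (%)", 99.0, True), 97.5),
    (Metric("lead time for a new dataset (days)", 5.0, False), 3.0),
    (Metric("data quality incidents per month", 2.0, False), 4.0),
]


def report(goals: List[Tuple[Metric, float]]) -> List[str]:
    return [f"{m.name}: {value} vs target {m.target} -> {m.status(value)}" for m, value in goals]


for line in report(GOALS):
    print(line)
```

Reviewing a report like this at a fixed cadence turns "continuous improvement" into a concrete list of metrics to work on next.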

Conclusion #

In this article, we have explained the concept of DataOps and how it has emerged as a requirement for modern enterprises.

By following the DataOps principles and framework introduced, organizations can establish a robust DataOps practice that enables them to effectively manage and extract value from their data.

To deepen your understanding of DataOps and find out more about how organizations can leverage it, we encourage you to download the new Forrester Wave™: Enterprise Data Catalogs, Q3 2024 Report. The report is an evaluation of top enterprise data catalogs for DataOps. It shows how each provider measures up and helps technology architecture and delivery leaders select the right one for their DataOps needs.

Written by Xavier Gumara Rigol
