AWS Glue Data Catalog: A Guide to Metadata Management


The AWS Glue Data Catalog centralizes metadata management for cloud data lakes, warehouses, and databases. This serverless solution integrates with AWS services like Athena and Redshift to support structured and semi-structured data, enabling secure governance, efficient ETL processes, and flexible querying for large datasets. Automated schema discovery helps keep the catalog accurate and up to date, enhancing accessibility, security, and compliance.


AWS Glue is one of the most popular cloud-based ETL solutions, especially if your data infrastructure is mostly hosted on AWS. The AWS Glue Data Catalog acts as a metadata store for AWS services and any services outside of AWS that are compatible with a Hive metastore.

Table of contents #

- What is AWS Glue?

- AWS Glue Use cases

- How does AWS Glue work?

- Understanding AWS Glue’s Architecture

- What is AWS Glue Data Catalog?

- AWS Glue Data Catalog key features

- AWS Data Catalog use cases

- AWS Glue Data Catalog: Benefits and limitations

- Using Crawlers to populate AWS Glue Data Catalog

- AWS Glue vs. EMR

- Conclusion

- Atlan + AWS Glue Data Catalog

- FAQs about AWS Glue Data Catalog

- AWS Glue Data Catalog: Related reads

What is AWS Glue? #

AWS Glue is a cloud-based ETL tool that stores source and target metadata in the Glue Data Catalog. Based on this metadata, you can write and orchestrate your ETL jobs using either Python or Spark.

AWS Glue offers a great alternative to traditional ETL tools, especially when your application and data infrastructure is hosted on AWS.

Glue is a fully managed ETL service that makes it easier to categorize, clean, transform, and transfer data between different data stores. These data stores could be self-managed stores running on EC2 instances, object storage, different types of databases, caches, and so on.

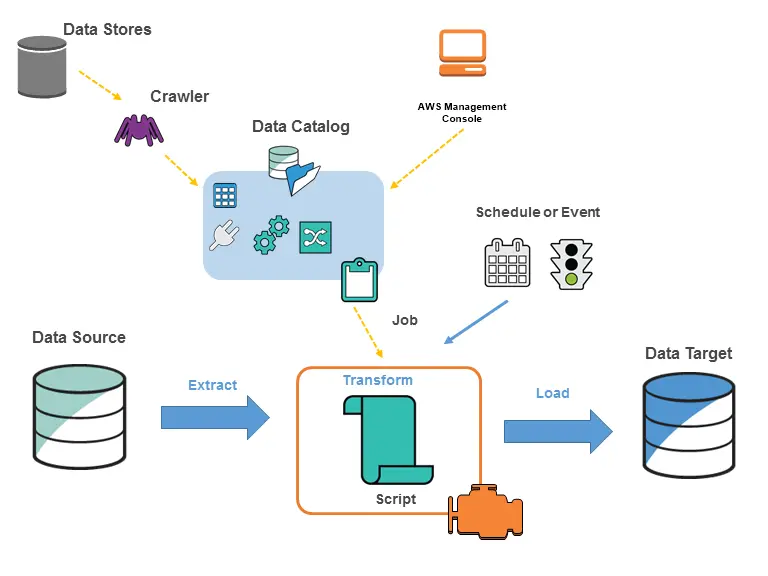

AWS Glue Environment. Source: AWS Glue Developer Guide.


AWS Glue Use cases #

AWS Glue isn’t just an ETL tool; it is a lightweight job orchestrator, a data catalog solution, and much more.

AWS Glue can help you:

- Extract, transform, and load data from various sources to various targets, either using out-of-the-box patterns or writing your own.

- Run serverless queries against your data lake in Amazon S3 using Redshift Spectrum or Amazon Athena.

- Understand your data by storing and organizing metadata in a Hive-compatible metastore using AWS Glue Data Catalog.

How does AWS Glue work? #

AWS Glue depends on the AWS Glue Data Catalog, which maintains metadata about sources and targets. Glue executes ETL jobs based on this metadata to move data from sources to targets.

Data Sources supported by AWS Glue #

Glue supports a wide variety of data sources, both batch-based and stream-based. It offers native support for AWS services such as S3, RDS, Kinesis, DynamoDB, and DocumentDB. It can also read from any database or data warehouse that is reachable through a JDBC connection. Apart from that, AWS Glue also supports MongoDB and Apache Kafka as data sources.

Data Targets supported by AWS Glue #

Most of these sources are also supported as targets. Examples include Amazon S3, RDS, MongoDB, DocumentDB, and any database that can be reached through a JDBC connection.
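To use a JDBC database as a source or target, Glue needs a connection object. The sketch below builds the keyword arguments for boto3's `glue.create_connection` call. The connection name, JDBC URL, subnet, security group, and secret ID are hypothetical placeholders. Reading credentials from AWS Secrets Manager through the `SECRET_ID` property is an assumption you should verify against the current Glue connection-property documentation. The sketch only builds a dictionary, so it runs without AWS access.

```python
from typing import Any, Dict, List


def build_jdbc_connection_request(
    name: str,
    jdbc_url: str,
    secret_id: str,
    subnet_id: str,
    security_group_ids: List[str],
    availability_zone: str,
) -> Dict[str, Any]:
    """Build kwargs for glue.create_connection(**request) for a JDBC source/target."""
    if not jdbc_url.startswith("jdbc:"):
        raise ValueError("JDBC URLs start with 'jdbc:'")
    return {
        "ConnectionInput": {
            "Name": name,
            "ConnectionType": "JDBC",
            "ConnectionProperties": {
                "JDBC_CONNECTION_URL": jdbc_url,
                # Assumption: credentials live in AWS Secrets Manager instead of
                # being inlined as USERNAME/PASSWORD properties.
                "SECRET_ID": secret_id,
            },
            # Network placement so Glue can reach a database inside a VPC.
            "PhysicalConnectionRequirements": {
                "SubnetId": subnet_id,
                "SecurityGroupIdList": security_group_ids,
                "AvailabilityZone": availability_zone,
            },
        }
    }


# Hypothetical values for illustration only.
request = build_jdbc_connection_request(
    name="orders-postgres",
    jdbc_url="jdbc:postgresql://db.example.internal:5432/orders",
    secret_id="orders-db-credentials",
    subnet_id="subnet-0123456789abcdef0",
    security_group_ids=["sg-0123456789abcdef0"],
    availability_zone="us-east-1a",
)
# With AWS credentials: boto3.client("glue").create_connection(**request)
```

The same connection name can then be referenced from crawlers and jobs, so credentials and network settings are defined in one place.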

Machine Learning-Driven Data Quality Monitoring (Glue DQ): In August 2024, AWS added ML-powered anomaly detection to AWS Glue Data Quality. Users can combine rule-based checks with ML-powered monitoring on Glue Data Catalog tables to identify unexpected data patterns. This makes data quality checks more proactive across multiple AWS Regions. More on this update is available on the AWS blog.
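Rule-based checks in Glue Data Quality are written in DQDL (Data Quality Definition Language). The sketch below assembles a small DQDL ruleset and the request for boto3's `glue.create_data_quality_ruleset`. It assumes a hypothetical `sales.orders` table with `order_id` and `quantity` columns. ML-based anomaly detection is configured separately and is not shown.

```python
from typing import Any, Dict, List


def build_ruleset_request(name: str, database: str, table: str, rules: List[str]) -> Dict[str, Any]:
    """Build kwargs for glue.create_data_quality_ruleset(**request)."""
    if not rules:
        raise ValueError("a ruleset needs at least one rule")
    # DQDL rulesets are plain text of the form: Rules = [ rule, rule, ... ]
    ruleset = "Rules = [\n    " + ",\n    ".join(rules) + "\n]"
    return {
        "Name": name,
        "Ruleset": ruleset,
        # The catalog table the ruleset is attached to.
        "TargetTable": {"DatabaseName": database, "TableName": table},
    }


request = build_ruleset_request(
    name="orders-basic-checks",
    database="sales",
    table="orders",
    rules=[
        'IsComplete "order_id"',
        'IsUnique "order_id"',
        'ColumnValues "quantity" > 0',
        "RowCount > 0",
    ],
)
print(request["Ruleset"])
```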

Understanding AWS Glue’s Architecture #

AWS Glue is made up of several individual components, such as the Glue Data Catalog, Crawlers, Scheduler, and so on.

AWS Glue uses jobs to orchestrate extract, transform, and load steps. Glue jobs utilize the metadata stored in the Glue Data Catalog.

These jobs can run based on a schedule or run on demand. You can also run Glue jobs based on an event trigger, hence laying the foundation for event-driven ETL pipelines.
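The scheduled and on-demand cases map to Glue trigger types. The sketch below builds boto3 `glue.create_trigger` requests for both. The job and trigger names are hypothetical. Event-based triggers are tied to Glue workflows and are not shown here.

```python
from typing import Any, Dict, Optional


def build_trigger_request(name: str, job_name: str, schedule: Optional[str] = None) -> Dict[str, Any]:
    """Build kwargs for glue.create_trigger(**request).

    With a schedule the trigger is SCHEDULED; without one it is ON_DEMAND and
    fires only when you call glue.start_trigger(Name=name).
    """
    request: Dict[str, Any] = {"Name": name, "Actions": [{"JobName": job_name}]}
    if schedule is not None:
        request["Type"] = "SCHEDULED"
        request["Schedule"] = schedule
        # Activate right away; Glue does not accept True here for ON_DEMAND triggers.
        request["StartOnCreation"] = True
    else:
        request["Type"] = "ON_DEMAND"
    return request


nightly = build_trigger_request("nightly-orders", "orders-etl", "cron(0 2 * * ? *)")
manual = build_trigger_request("orders-backfill", "orders-etl")
```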

AWS Glue Data Catalog #

AWS Glue uses a Hive-compatible metastore as a data catalog. The data catalog is essential to ETL operations. Before running your job, you need to catalog the source and target metadata.
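Crawlers usually do this cataloging for you, but metadata can also be registered by hand through the API. The sketch below builds boto3 requests for `glue.create_database` and `glue.create_table` that describe CSV files in S3. The SerDe and input/output format class names are the standard Hive ones for delimited text. The bucket, database, table, and columns are hypothetical.

```python
from typing import Any, Dict, List


def build_csv_table_request(
    database: str, table: str, s3_location: str, columns: List[Dict[str, str]]
) -> Dict[str, Any]:
    """Build kwargs for glue.create_table(**request) describing CSV files in S3."""
    return {
        "DatabaseName": database,
        "TableInput": {
            "Name": table,
            "TableType": "EXTERNAL_TABLE",  # data stays in S3; only metadata is cataloged
            "Parameters": {"classification": "csv", "skip.header.line.count": "1"},
            "StorageDescriptor": {
                "Columns": columns,
                "Location": s3_location,
                # Standard Hive text-file formats and SerDe for delimited data.
                "InputFormat": "org.apache.hadoop.mapred.TextInputFormat",
                "OutputFormat": "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat",
                "SerdeInfo": {
                    "SerializationLibrary": "org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe",
                    "Parameters": {"field.delim": ","},
                },
            },
        },
    }


database_request = {"DatabaseInput": {"Name": "sales"}}  # for glue.create_database
table_request = build_csv_table_request(
    database="sales",
    table="orders",
    s3_location="s3://example-bucket/raw/orders/",
    columns=[
        {"Name": "order_id", "Type": "bigint"},
        {"Name": "customer", "Type": "string"},
        {"Name": "amount", "Type": "double"},
    ],
)
```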

Because of its Hive compatibility, the AWS Glue Data Catalog can also be used as a standalone service in combination with a non-AWS ETL tool.
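Hive compatibility is what lets other engines use the catalog. On Amazon EMR, for example, Hive and Spark SQL are pointed at the Glue Data Catalog by setting a Hive metastore client factory class. The sketch below builds EMR configuration-classification entries for that setting. For self-managed Hive or Spark outside EMR, the sketch assumes the same property works only when the open-source AWS Glue Data Catalog client for Apache Hive Metastore is on the classpath.

```python
from typing import Any, Dict, List

# Hive metastore client factory that sends Hive/Spark metadata calls to the
# Glue Data Catalog (available on EMR; elsewhere it comes from the open-source client).
GLUE_METASTORE_FACTORY = (
    "com.amazonaws.glue.catalog.metastore.AWSGlueDataCatalogHiveClientFactory"
)


def glue_metastore_configurations() -> List[Dict[str, Any]]:
    """EMR 'Configurations' entries that point Hive and Spark SQL at Glue."""
    properties = {"hive.metastore.client.factory.class": GLUE_METASTORE_FACTORY}
    return [
        {"Classification": "hive-site", "Properties": dict(properties)},
        {"Classification": "spark-hive-site", "Properties": dict(properties)},
    ]


configurations = glue_metastore_configurations()
# Pass as Configurations=configurations when creating an EMR cluster.
```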

AWS Glue ETL Operations #

After ensuring that the data stores are crawled properly to get the latest metadata information into the AWS Glue Data Catalog, you are ready to use Glue to move data around within your data ecosystem.

Moving data requires a Glue job, which runs in either a Python shell environment or an Apache Spark environment. Execution speed, available resources, and costs differ depending on which you choose.
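The choice between the two environments shows up directly in the job definition. The sketch below builds boto3 `glue.create_job` requests for a Spark job (command `glueetl`, sized by worker type and count) and a Python shell job (command `pythonshell`, sized by `MaxCapacity`). The role ARN, script paths, and version strings (`"4.0"`, `"3.9"`) are hypothetical or assumed values. Check them against the current Glue documentation.

```python
from typing import Any, Dict

ROLE = "arn:aws:iam::123456789012:role/ExampleGlueJobRole"  # hypothetical role


def spark_job_request(name: str, script: str, workers: int = 2) -> Dict[str, Any]:
    """glue.create_job kwargs for an Apache Spark ETL job."""
    return {
        "Name": name,
        "Role": ROLE,
        "Command": {"Name": "glueetl", "ScriptLocation": script, "PythonVersion": "3"},
        "GlueVersion": "4.0",
        # Spark capacity = worker type x worker count (G.1X is 1 DPU per worker).
        "WorkerType": "G.1X",
        "NumberOfWorkers": workers,
    }


def python_shell_job_request(name: str, script: str) -> Dict[str, Any]:
    """glue.create_job kwargs for a lightweight Python shell job."""
    return {
        "Name": name,
        "Role": ROLE,
        "Command": {"Name": "pythonshell", "ScriptLocation": script, "PythonVersion": "3.9"},
        # Python shell jobs are sized with MaxCapacity (0.0625 or 1 DPU),
        # never together with WorkerType/NumberOfWorkers.
        "MaxCapacity": 0.0625,
    }


spark_job = spark_job_request("orders-spark-etl", "s3://example-bucket/scripts/orders.py", workers=10)
shell_job = python_shell_job_request("orders-small-fix", "s3://example-bucket/scripts/fix.py")
```

A Python shell job suits small, single-node tasks at a fraction of a DPU. A Spark job scales out across workers for large datasets.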

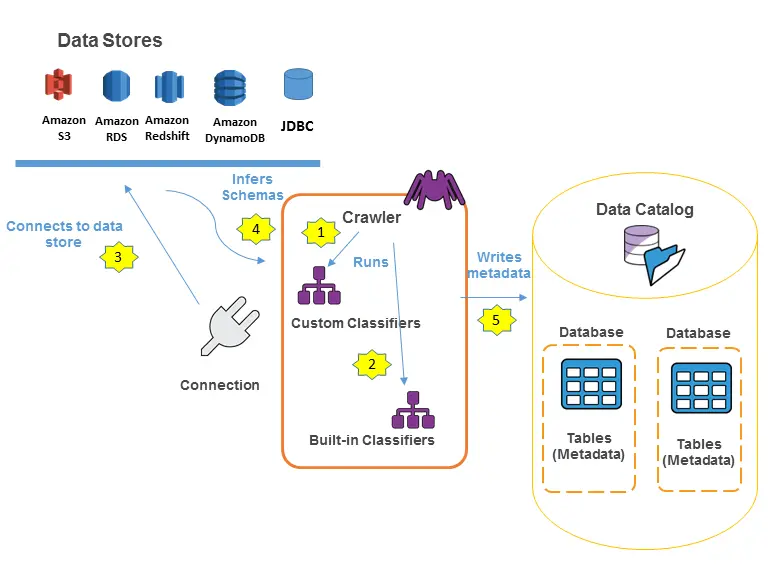

AWS Glue Crawlers #

Crawlers are programs that connect to a data store and retrieve its latest metadata. If you are using a database as a data store, think of a crawler as running a SELECT query on the information_schema. Crawlers can run either on a schedule or on demand.

Crawlers utilize predefined classifiers to determine the schema of your data. This schema is then replicated in the Glue Data Catalog as closely as possible. Crawlers can help you get the metadata from a variety of data stores.
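To make the idea of schema detection concrete, here is a toy illustration, not Glue's actual classifier logic. It samples JSON-like records and approximates a column list in the `{"Name", "Type"}` shape the Data Catalog stores. The type-widening rules are our own simplifying assumption: mixed integers and decimals become `double`, and any other conflict becomes `string`.

```python
from typing import Any, Dict, Iterable, List, Optional


def hive_type(value: Any) -> str:
    """Map one Python value to a Hive/Glue column type."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    return "string"


def widen(current: str, new: str) -> str:
    """Combine two observed types for the same column."""
    if current == new:
        return current
    if {current, new} == {"bigint", "double"}:
        return "double"
    return "string"  # any other conflict falls back to string


def infer_columns(sample: Iterable[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Approximate a table schema from a sample of records."""
    seen: Dict[str, Optional[str]] = {}
    for record in sample:
        for name, value in record.items():
            if value is None:
                seen.setdefault(name, None)  # a null reveals the column, not its type
                continue
            observed = hive_type(value)
            current = seen.get(name)
            seen[name] = observed if current is None else widen(current, observed)
    # Columns that were only ever null default to string.
    return [{"Name": name, "Type": t or "string"} for name, t in seen.items()]


sample = [
    {"order_id": 1, "amount": 9.5, "paid": True, "note": None},
    {"order_id": 2, "amount": 10, "paid": False, "note": "gift"},
]
print(infer_columns(sample))
```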

AWS Glue Management Console #

Like other AWS services, AWS Glue can be managed through multiple interfaces. The most popular and easiest to use among these is the Management Console.

Using the Management Console, you can work with jobs, crawlers, classifiers, sources, targets, etc. Alternatively, you can also use the API or the SDK specific to your programming language or development framework.

Job Scheduling and Orchestration #

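For scheduling, AWS Glue uses cron expressions in the six-field AWS format, which adds a year field to the standard five. For example, cron(15 12 * * ? *) runs every day at 12:15 UTC. Most advanced workflow management tools and orchestration engines, like Airflow and Prefect, also use variants of cron expressions to schedule jobs.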
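Because the AWS cron format differs from the Unix one used by many other schedulers, a small converter helps when you port schedules. This is a minimal sketch, not an AWS utility. It handles only the common cases. It appends the year field, puts `?` in the unrestricted day field, and rejects numeric day-of-week values, because Unix counts Sunday as 0 while AWS counts it as 1.

```python
def to_aws_cron(unix_expr: str) -> str:
    """Convert a 5-field Unix cron expression to AWS's 6-field cron(...) form."""
    fields = unix_expr.split()
    if len(fields) != 5:
        raise ValueError("expected: minute hour day-of-month month day-of-week")
    minute, hour, day_of_month, month, day_of_week = fields
    # Day numbering differs (Unix: 0 = Sunday, AWS: 1 = Sunday), so require names.
    if any(ch.isdigit() for ch in day_of_week):
        raise ValueError("use day names such as MON-FRI for day-of-week")
    # AWS requires '?' in exactly one of the two day fields.
    if day_of_week == "*":
        day_of_week = "?"
    elif day_of_month == "*":
        day_of_month = "?"
    else:
        raise ValueError("AWS cron cannot restrict both day-of-month and day-of-week")
    return f"cron({minute} {hour} {day_of_month} {month} {day_of_week} *)"


print(to_aws_cron("15 12 * * *"))      # every day at 12:15 UTC
print(to_aws_cron("0 6 * * MON-FRI"))  # weekdays at 06:00 UTC
```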

By defining several types of dependencies between jobs (for example, start a job only after another job succeeds), you can create complex ETL workflows shaped as DAGs (directed acyclic graphs), which are essential for handling real-world ETL scenarios.
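One way to express such a DAG in Glue is with conditional triggers that start a job once its upstream jobs have succeeded. The sketch below turns a `{job: {upstream jobs}}` mapping into boto3 `glue.create_trigger` requests. It uses Python's `graphlib` to order the jobs and to reject cycles. The job names and the `after-upstream-` trigger naming are hypothetical. Root jobs still need a scheduled or on-demand trigger of their own.

```python
from graphlib import TopologicalSorter
from typing import Any, Dict, List, Set


def conditional_triggers(dependencies: Dict[str, Set[str]]) -> List[Dict[str, Any]]:
    """Turn {job: {upstream jobs}} into CONDITIONAL glue.create_trigger kwargs.

    Raises graphlib.CycleError if the dependencies do not form a DAG.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    triggers: List[Dict[str, Any]] = []
    for job in order:
        upstream = sorted(dependencies.get(job, set()))
        if not upstream:
            continue  # root jobs are started by a scheduled or on-demand trigger
        triggers.append({
            "Name": f"after-upstream-{job}",
            "Type": "CONDITIONAL",
            "StartOnCreation": True,
            # Fire only when every upstream job has SUCCEEDED.
            "Predicate": {
                "Logical": "AND",
                "Conditions": [
                    {"LogicalOperator": "EQUALS", "JobName": up, "State": "SUCCEEDED"}
                    for up in upstream
                ],
            },
            "Actions": [{"JobName": job}],
        })
    return triggers


pipeline = {
    "transform_orders": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_orders"},
}
for trigger in conditional_triggers(pipeline):
    print(trigger["Name"], [c["JobName"] for c in trigger["Predicate"]["Conditions"]])
```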


What is AWS Glue Data Catalog? #

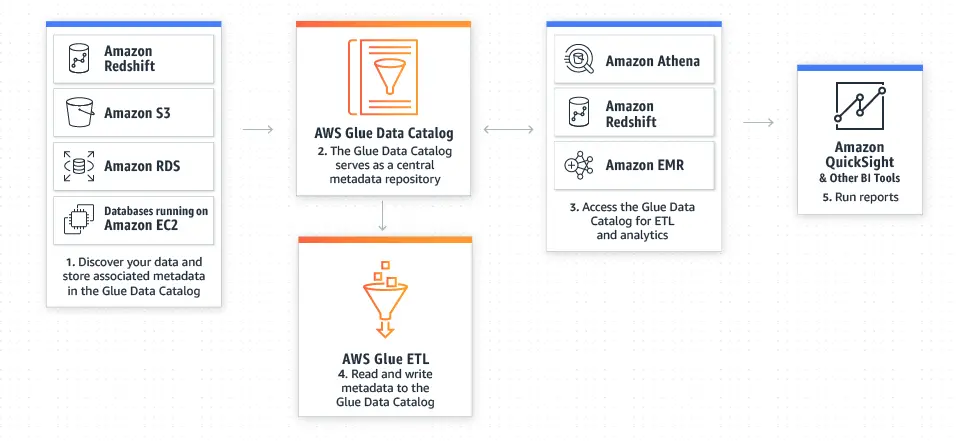

AWS Glue Data Catalog is a Hive-compatible metastore used by AWS Glue as a uniform repository of metadata coming from disparate systems.

In addition to being a data catalog, AWS Glue Data Catalog also offers audit and data governance capabilities.

AWS Glue Data Catalog key features #

- Persistent, Hive-compatible metastore for enabling ETL operations

- IAM-based fine-grained access control for enhanced data security

- Comprehensive audit and data governance for compliance

AWS Glue Data Catalog Architecture. Source: AWS Glue.
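Fine-grained access is expressed with IAM policies on catalog resources. The sketch below builds a read-only policy for one database and its tables. Glue evaluates access against the catalog, database, and table ARNs, so the policy lists all three. The Region, account ID, and database name are hypothetical. Which read actions you need depends on your engines, so treat the action list as an assumption.

```python
import json
from typing import Any, Dict


def read_only_catalog_policy(region: str, account_id: str, database: str) -> Dict[str, Any]:
    """IAM policy allowing read-only access to one Glue database and its tables."""
    arn = f"arn:aws:glue:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "glue:GetDatabase",
                    "glue:GetTable",
                    "glue:GetTables",
                    "glue:GetPartition",
                    "glue:GetPartitions",
                ],
                # Catalog, database, and table levels are all checked by Glue.
                "Resource": [
                    f"{arn}:catalog",
                    f"{arn}:database/{database}",
                    f"{arn}:table/{database}/*",
                ],
            }
        ],
    }


policy = read_only_catalog_policy("us-east-1", "123456789012", "sales")
print(json.dumps(policy, indent=2))
```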

AWS Data Catalog use cases #

You can use the AWS Glue Data Catalog with various services in the following ways:

- Creating a Data Lake using AWS Lake Formation: AWS Lake Formation is a fully managed data lake service that uses the AWS Glue Data Catalog. Lake Formation manages Glue ETL jobs, crawlers, and the Data Catalog to move data from one layer of the data lake to another.

- Querying External Data using Athena & Redshift Spectrum: Once the schema and other metadata for an external data source are stored in the catalog, you can use Athena or Redshift Spectrum to query that data source directly. The AWS Glue Data Catalog holds the schema information the query engine needs to read the data.

- Data Processing & Analysis using Amazon EMR: When you need to process large amounts of data or run complex analyses, you can use EMR. It gives you EC2 instances running the Hadoop ecosystem and an easy way to write and execute Spark or PySpark code. EMR can be configured to use the AWS Glue Data Catalog as its Hive metastore.

- Using Glue Data Catalog as a Standalone Metastore: AWS Glue Data Catalog can be used as a standalone service with any data store compatible with Apache Hive. So, even if your data infrastructure isn't entirely on AWS, you can still use AWS Glue Data Catalog as your central metadata repository and run ETL operations with a separate ETL tool.

AWS Glue Data Catalog in AWS ecosystem. Source: AWS Glue.
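For the Athena case, the query references a table by its catalog database and table name, and Athena looks the schema up in the Glue Data Catalog, which Athena exposes as `AwsDataCatalog`. The sketch below builds a request for boto3's `athena.start_query_execution`. The database, table, and results bucket are hypothetical, and the identifier check is a simple safeguard of our own rather than full SQL escaping.

```python
from typing import Any, Dict


def build_athena_query(database: str, table: str, output_location: str, limit: int = 10) -> Dict[str, Any]:
    """Build kwargs for athena.start_query_execution(**request)."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    for identifier in (database, table):
        if '"' in identifier:
            raise ValueError("identifiers must not contain double quotes")
    return {
        "QueryString": f'SELECT * FROM "{database}"."{table}" LIMIT {limit}',
        # Resolve names through the Glue Data Catalog.
        "QueryExecutionContext": {"Catalog": "AwsDataCatalog", "Database": database},
        # Athena writes query results to this S3 prefix.
        "ResultConfiguration": {"OutputLocation": output_location},
    }


query = build_athena_query("sales", "orders", "s3://example-bucket/athena-results/", limit=5)
```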

AWS Glue Data Catalog: Benefits and limitations #

Using AWS Glue Data Catalog has several benefits. It:

- Is serverless: You pay only for what you use, with no need to provision resources in advance.

- Has out-of-the-box cataloging & ETL capabilities: Built-in classifiers let it catalog common data formats without custom code, and built-in transforms cover many common ETL steps.

- Offers built-in workflow & orchestration: With Glue, you can create complex workflows that handle your ETL workloads. You can also use it with external orchestration tools, but that integration takes extra work.
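As a rough illustration of pay-as-you-go pricing for Glue ETL jobs: charges are based on DPU-hours, and job runs on Glue 2.0 and later are billed per second with a one-minute minimum. The sketch below assumes that billing model and an example rate of $0.44 per DPU-hour. Check both against the current AWS Glue pricing page for your Region. Data Catalog storage and request charges are priced separately and are not modeled.

```python
def glue_job_cost(
    dpus: float,
    runtime_seconds: float,
    usd_per_dpu_hour: float,
    minimum_billed_seconds: float = 60.0,
) -> float:
    """Estimate the charge for one Glue job run under per-second DPU billing."""
    if dpus <= 0 or runtime_seconds < 0 or usd_per_dpu_hour < 0:
        raise ValueError("dpus must be positive; runtime and rate must be non-negative")
    billed_seconds = max(runtime_seconds, minimum_billed_seconds)
    dpu_hours = dpus * billed_seconds / 3600.0
    return dpu_hours * usd_per_dpu_hour


EXAMPLE_RATE = 0.44  # illustrative USD per DPU-hour, not a quoted price
print(round(glue_job_cost(10, 15 * 60, EXAMPLE_RATE), 4))  # 10 DPUs x 0.25 h = 2.5 DPU-hours
print(round(glue_job_cost(2, 30, EXAMPLE_RATE), 4))        # a 30 s run is billed as 60 s
```

Nothing is charged between runs, which is the practical meaning of "pay for what you use" here.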

Although AWS Glue Data Catalog comes with the benefits listed above, it has a few limitations too:

- Language support: Glue jobs can be written only in Python (Python shell or PySpark) or Scala (Spark). These cover a lot of ground for ETL and data analysis, but teams that work in other languages, such as Node.js, Julia, or R, are left out.

- Closed source: Like many other AWS services, Glue remains a black box for developers, which makes it difficult to innovate on and extend Glue's capabilities.


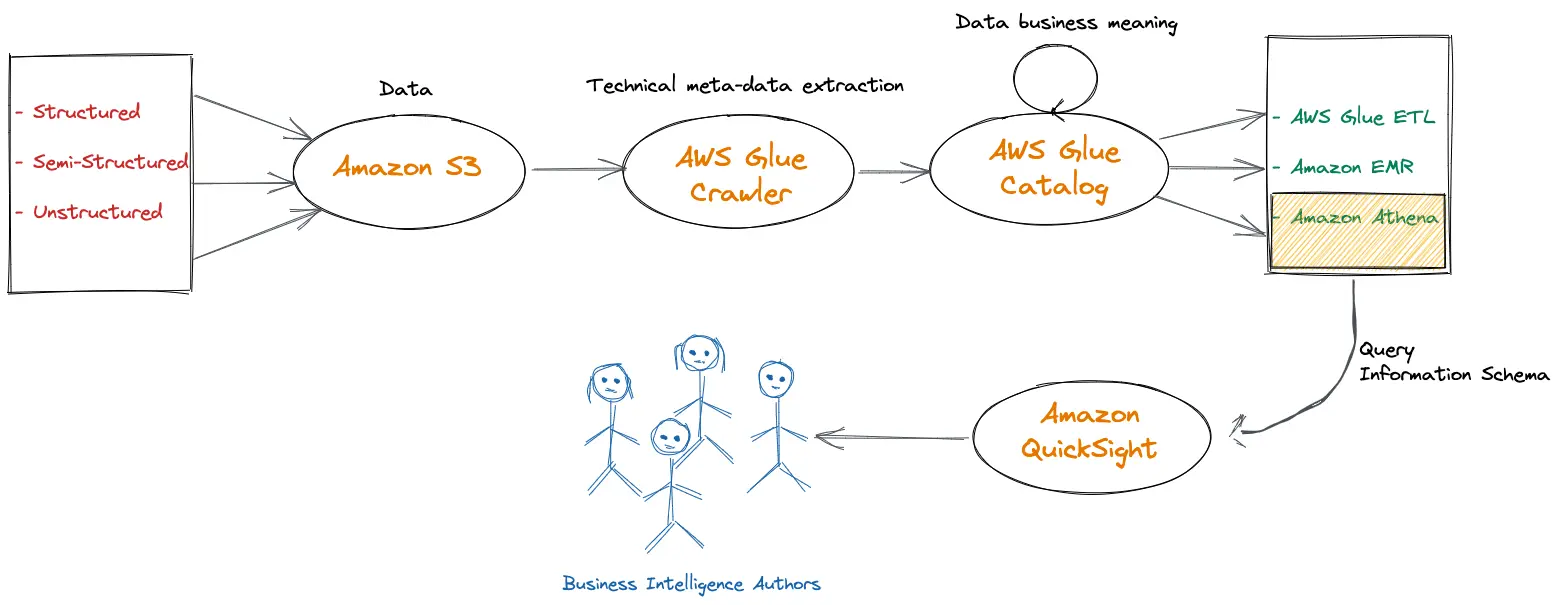

Using Crawlers to populate AWS Glue Data Catalog #

What are AWS Glue crawlers? #

Before you can perform ETL operations, the AWS Glue Data Catalog needs to hold the metadata for your source and target schemas. AWS Glue crawlers are scheduled or on-demand jobs that connect to a data store, extract schema information, and store the metadata in the AWS Glue Data Catalog. Crawlers use classifiers to recognize the format and schema of the data in the stores you point them at.
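A crawler definition ties together what to crawl, where to write the metadata, and when to run. The sketch below builds a boto3 `glue.create_crawler` request for S3 prefixes. The role ARN, bucket, and names are hypothetical. The schema-change policy shown is one reasonable choice, not a recommendation from the source: update changed schemas in place, and only log objects that disappear.

```python
from typing import Any, Dict, List, Optional


def build_crawler_request(
    name: str,
    role_arn: str,
    database: str,
    s3_paths: List[str],
    schedule: Optional[str] = None,
) -> Dict[str, Any]:
    """kwargs for glue.create_crawler(**request) that crawls S3 prefixes."""
    if not s3_paths:
        raise ValueError("at least one S3 path is required")
    request: Dict[str, Any] = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,  # discovered tables are written to this database
        "Targets": {"S3Targets": [{"Path": path} for path in s3_paths]},
        "SchemaChangePolicy": {
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            "DeleteBehavior": "LOG",
        },
    }
    if schedule is not None:
        request["Schedule"] = schedule  # omit for an on-demand crawler
    return request


crawler = build_crawler_request(
    name="orders-raw-crawler",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueCrawlerRole",
    database="sales",
    s3_paths=["s3://example-bucket/raw/orders/"],
    schedule="cron(0 1 * * ? *)",
)
# Then: glue.create_crawler(**crawler), and glue.start_crawler(Name="orders-raw-crawler") to run it now.
```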

General workflow of how crawlers populate the AWS Glue Data Catalog #

A Glue Crawler gets the metadata from a data source and writes to the AWS Glue Data Catalog in the following manner:

- Glue uses a built-in or custom classifier to determine the data's format, schema, and other properties. In SQL terms, imagine a SELECT query on a sample of the actual data, with the table's structure approximated from that sample.

- The crawler groups the data into tables or partitions based on its classification. If the crawler is reading from S3, it looks for folder-based partitions so that the data can be grouped appropriately.

- The crawler writes the metadata to the AWS Glue Data Catalog, after which the crawled data store is ready to be used in ETL operations.

Workflow diagram for populating the AWS Glue Data Catalog. Source: AWS Glue Developer Guide.
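The folder-based partition step can be pictured with a toy sketch. It is not Glue's implementation. It maps the folders between a table prefix and a file name to partition values. Hive-style `name=value` folders keep their name, and plain folders get positional names such as `partition_0`, following the naming Glue crawlers use. The object keys are hypothetical.

```python
from typing import Dict, List, Tuple


def partition_values(key: str, table_prefix: str) -> Dict[str, str]:
    """Map the folders between a table prefix and a file name to partition values."""
    if not key.startswith(table_prefix):
        raise ValueError(f"{key!r} is not under {table_prefix!r}")
    folders = key[len(table_prefix):].strip("/").split("/")[:-1]  # drop the file name
    values: Dict[str, str] = {}
    for position, folder in enumerate(folders):
        if "=" in folder:
            name, value = folder.split("=", 1)  # Hive-style folder: name=value
        else:
            name, value = f"partition_{position}", folder  # positional fallback name
        values[name] = value
    return values


def distinct_partitions(keys: List[str], table_prefix: str) -> List[Tuple[Tuple[str, str], ...]]:
    """Group object keys into the distinct partitions of one table."""
    return sorted({tuple(partition_values(k, table_prefix).items()) for k in keys})


keys = [
    "sales/year=2024/month=05/part-0000.parquet",
    "sales/year=2024/month=05/part-0001.parquet",
    "sales/year=2024/month=06/part-0000.parquet",
]
print(distinct_partitions(keys, "sales/"))
```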

Glue offers a variety of built-in classifiers that cover most popular data formats. If your data isn't covered by the built-in classifiers, you can write a custom classifier (grok, JSON, XML, or CSV) that tells the crawler how to recognize it.
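Two common custom classifiers are a CSV classifier for a non-default delimiter and a grok classifier for log lines. The sketch below builds boto3 `glue.create_classifier` requests for both. The classifier names, header, and classification label are hypothetical. The grok pattern uses standard grok building blocks and assumes your log lines look like `2024-05-01T10:00:00 INFO message text`.

```python
from typing import Any, Dict, List


def csv_classifier_request(name: str, delimiter: str, header: List[str]) -> Dict[str, Any]:
    """kwargs for glue.create_classifier(**request): CSV with a custom delimiter."""
    return {
        "CsvClassifier": {
            "Name": name,
            "Delimiter": delimiter,
            "QuoteSymbol": '"',
            "ContainsHeader": "PRESENT",  # other accepted values: ABSENT, UNKNOWN
            "Header": header,
        }
    }


def grok_classifier_request(name: str, classification: str, pattern: str) -> Dict[str, Any]:
    """kwargs for glue.create_classifier(**request): log lines parsed with grok."""
    return {
        "GrokClassifier": {
            "Name": name,
            "Classification": classification,
            "GrokPattern": pattern,
        }
    }


pipe_csv = csv_classifier_request("pipe-delimited-orders", "|", ["order_id", "customer", "amount"])
app_logs = grok_classifier_request(
    "app-log-lines",
    "app_logs",
    "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level} %{GREEDYDATA:message}",
)
# A crawler uses these by name, e.g. Classifiers=["pipe-delimited-orders", "app-log-lines"]
# in its create_crawler request; custom classifiers are tried before the built-in ones.
```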

Learn more - Simplify data discovery for business users with AWS Glue data catalog

AWS Glue vs. EMR #

AWS Glue and Amazon EMR both provide data computation and processing services. They overlap in many features, but each offers something unique because of how it is implemented. AWS Glue is a serverless ETL service. In its EC2-based deployment, Amazon EMR instead uses clusters of EC2 instances running the Hadoop ecosystem to process large amounts of data.

When you don't yet know your data processing requirements, AWS Glue is usually the better choice because of its pay-as-you-go model. With EMR on EC2, you provision and pay for cluster infrastructure that you may not fully use. Moreover, EMR focuses on computation and processing. It doesn't provide a managed data catalog or workflow orchestration service of its own, although it can use the Glue Data Catalog as its metastore. AWS Glue has the edge over EMR here too.

EMR makes sense if you have a good idea of the volume of data you'll be processing, along with the type and complexity of the transforms you'll perform on that data.

Conclusion #

All in all, AWS Glue Data Catalog is a popular tool that can be independently used, although it does come with some limitations we discussed above.

While its serverless nature gives it a pay-as-you-go pricing structure, its integration with non-AWS services is limited. With many companies going the multi-cloud route, this can be challenging.

Having said that, if you have enough technical expertise and data stores with Hive compatibility, you can make the most out of AWS Glue Data Catalog, even without using a lot of other AWS services.

On the other hand, if your data infrastructure is completely hosted on AWS, Glue can be a very powerful and time-saving integration that you can use.

Atlan + AWS Glue Data Catalog #

Atlan’s open platform enables seamless metadata ingestion from Glue and other sources. Its automated lineage capture and AI-powered suggestions help understand data relationships and enrich metadata, facilitating a smooth transition.

Furthermore, Atlan’s customizable metadata model and no-code workflows empower users to adapt the new catalog to specific needs. This minimizes disruption and maximizes the value of the migrated metadata.

Learn more about How Nasdaq Uses Active Metadata to Evangelize their Data Strategy.

If your data team uses the AWS ecosystem, give Atlan a try for your data catalog and metadata management needs. Atlan integrates with Redshift, Athena, and AWS Glue, and it helps create a collaborative workspace where data teams can work together 👉 Talk to us.


FAQs about AWS Glue Data Catalog #

1. What is the purpose of the AWS Glue Data Catalog? #

The AWS Glue Data Catalog serves as a central metadata repository for managing data across various AWS services and compatible platforms. It enables efficient data discovery, search, and schema management, making it essential for large-scale data integration and ETL processes.

2. How can I set up the AWS Glue Data Catalog? #

To set up the AWS Glue Data Catalog, configure a database in the AWS Glue console, then use crawlers to scan data sources and update the catalog with metadata. AWS provides options to integrate with various data stores and customize crawler settings based on data structure.

3. What are the benefits of using AWS Glue for data cataloging? #

AWS Glue Data Catalog offers seamless metadata management, scalability, and automated schema inference, enabling users to centralize and manage data assets effectively. Integration with AWS analytics services like Athena and Redshift enhances data usability and ETL workflows.

4. How does AWS Glue Data Catalog integrate with data lakes or warehouses? #

AWS Glue Data Catalog integrates with data lakes on Amazon S3 and data warehouses like Amazon Redshift, allowing you to create a unified view of all data assets. By cataloging metadata, it ensures consistent data access, supports query optimization, and simplifies ETL operations.

5. What security features does the AWS Glue Data Catalog offer? #

AWS Glue Data Catalog provides robust security features, including IAM policies for access control, encryption of metadata at rest and in transit, and audit logging. These features ensure compliance and secure access to data assets within the catalog.
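Encryption of catalog metadata at rest is a catalog-level setting. The sketch below builds a request for boto3's `glue.put_data_catalog_encryption_settings`. It encrypts metadata with a customer-managed KMS key and keeps connection passwords encrypted in API responses. The KMS key ARN is a hypothetical placeholder. The IAM principals that read the catalog also need permission to use that key.

```python
from typing import Any, Dict


def catalog_encryption_request(kms_key_arn: str) -> Dict[str, Any]:
    """kwargs for glue.put_data_catalog_encryption_settings(**request)."""
    return {
        "DataCatalogEncryptionSettings": {
            # Encrypt catalog metadata at rest with a customer-managed KMS key.
            "EncryptionAtRest": {
                "CatalogEncryptionMode": "SSE-KMS",
                "SseAwsKmsKeyId": kms_key_arn,
            },
            # Return passwords stored in Glue connections only in encrypted form.
            "ConnectionPasswordEncryption": {
                "ReturnConnectionPasswordEncrypted": True,
                "AwsKmsKeyId": kms_key_arn,
            },
        }
    }


request = catalog_encryption_request(
    "arn:aws:kms:us-east-1:123456789012:key/00000000-0000-0000-0000-000000000000"
)
```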

6. Can the AWS Glue Data Catalog be used with on-premises data sources? #

Yes, AWS Glue Data Catalog can be configured to work with on-premises data sources through compatible connectors and AWS Direct Connect, allowing you to manage and catalog metadata across hybrid environments.

AWS Glue Data Catalog: Related reads #

- What Is a Data Catalog? & Do You Need One?

- Data catalog benefits: 5 key reasons why you need one

- Open Source Data Catalog Software: 5 Popular Tools to Consider in 2024

- Data Catalog Platform: The Key To Future-Proofing Your Data Stack

- Top Data Catalog Use Cases Intrinsic to Data-Led Enterprises

- Enterprise Data Catalog: Definition, Importance, Use Cases & Benefits

- Airbnb Data Catalog — Democratizing Data With Dataportal

- Lexikon: Spotify’s Efficient Solution For Data Discovery And What You Can Learn From It

- Best Alation Alternative: 5 Reasons Why Customers Choose Atlan

- Metadata Management in AWS: A Detailed Look at Your Options

- How To Setup Business Glossary With AWS Glue?

- AWS Data Mesh: Essential Guide for Implementation (2024)

- Benefits of Data Governance on AWS: What’s Available and How Can You Build On It?

Photo by Olenka Sergienko on Pexels
