Data Contracts: What They Are, Why They Matter & How to Implement Them


Data contracts are formal agreements that define and enforce data schemas between producers and consumers. They help ensure data reliability by aligning expectations and automating validation.


These contracts prevent errors in data pipelines by establishing clear rules for data exchange. They streamline integration, improve compliance, and enable smooth collaboration across teams.

Data contracts also support versioning, allowing systems to adapt to changing data needs. This enhances data quality, strengthens governance, and ensures secure, consistent data sharing across systems.

A data contract outlines how data is exchanged between two parties. It defines the structure, format, and rules of exchange in a distributed data architecture. These formal agreements ensure that no uncertainties or undocumented assumptions about the data remain.

Let’s demystify data contracts and provide foundational information as well as advanced insights to help you understand and implement them effectively.

Table of contents #

- What is a data contract?

- Why are data contracts important?

- What is inside a data contract?

- Data contracts tooling: Are there any tools for creating data contracts?

- Types of data validation

- How to implement data contracts by validating various agreements

- How to handle contract validation failures

- Lifecycle of data contracts and best practices

- How organizations are making the most of their data using Atlan

- Wrapping up

- FAQs about Data Contracts

- Data contracts: Related reads

What is a data contract? #

A data contract is an agreement between the producer and the consumers of a data product.

Just as business contracts uphold obligations between suppliers and consumers of a business product, data contracts define and enforce the functionality, manageability, and reliability of data products.

According to dbt, the ability to define and validate data contracts is essential for cross-team collaboration. With data contracts:

- The producer of a data product interface can ensure that it is not accidentally causing breakages downstream which it did not anticipate.

- The consumer of the interface can ensure that the data it relies on is not and will not be broken.

Why are data contracts important? #

Data architecture remained largely monolithic for a long time, with a central data team owning all the data and its quality even though that data was produced by someone else.

Recently, there has been a shift towards distributed data ownership, where domain teams are held accountable for their own data products. This shift helps organizations reimagine quality expectations for a dataset as an agreement between data producers and consumers. Data contracts are a way of formalizing this agreement.

The benefits of data contracts are:

- Scale the distributed data architecture effectively

- Build and maintain highly reliable quality checks since data producers are the owners of the data they produce

- Shift the responsibility left as the data quality gets checked as soon as the products are created/updated

- Increase the surface area for data quality as the central data platform team can focus more on building tools and frameworks that support contract validations

- Set up a feedback culture between data producers and consumers, fostering a collaborative rather than a chaotic environment

Also, read → dbt Data Contracts: Quick Primer With Notes on How to Enforce

What is inside a data contract? #

In addition to general agreements about intended use, ownership, and provenance, data contracts include agreements about:

- Schema

- Semantics

- Service level agreements (SLA)

- Metadata (data governance)

Let’s explore each agreement further.

Schema #

Schema is a set of rules and constraints placed on data attributes and/or columns of a structured dataset. It provides useful information on processing and analyzing data.

Schemas include names and data types of attributes and whether or not they are mandatory. Sometimes they can include the format, length, and range of acceptable values of columns.

As data sources evolve, schemas undergo change. Schemas can also sometimes change because of changes in business requirements.

For example, in order to avoid an Integer Overflow error, a team may decide to replace a numeric identifier with a UUID. Alternatively, they may decide to remove some of the redundant/dormant columns.

An example of JSON schema for a business entity called ‘Person’. Image by Atlan.

Semantics #

Semantics are about capturing the rules of each business domain. Semantic rules include several aspects, such as:

- How business entities transition from one state to another within their lifecycle. For example, in a dataset of e-commerce orders, the fulfillment date can never be earlier than the order date.

- How business entities relate to one another. For example, in a dataset of users, a user can have many postal addresses but only one email id.

- Business conditions. For example, if the fraud score of a transaction is not null, the payout value must be 0.

- Deviation from the normal. For example, a threshold, expressed as a percentage above the average, that values cannot exceed.
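To make rules like these concrete, here is a minimal Python sketch of two of them written as record-level checks. The field names (`order_date`, `fulfillment_date`, `fraud_score`, `payout`) and the sample orders are assumptions made up for illustration and do not come from any real dataset.

```python
from datetime import date


def check_order_semantics(order: dict) -> list[str]:
    """Return the semantic rules that a single order record violates."""
    violations = []

    # State transition rule: fulfillment cannot precede the order itself.
    fulfilled = order.get("fulfillment_date")
    if fulfilled is not None and fulfilled < order["order_date"]:
        violations.append("fulfillment_date is earlier than order_date")

    # Business condition: a transaction with a fraud score must pay out 0.
    if order.get("fraud_score") is not None and order.get("payout", 0) != 0:
        violations.append("payout must be 0 when fraud_score is set")

    return violations


# Hypothetical sample records.
good = {"order_date": date(2024, 3, 1), "fulfillment_date": date(2024, 3, 4),
        "fraud_score": None, "payout": 120.0}
bad = {"order_date": date(2024, 3, 1), "fulfillment_date": date(2024, 2, 27),
       "fraud_score": 0.91, "payout": 120.0}

print(check_order_semantics(good))  # no violations expected
print(check_order_semantics(bad))   # both rules are violated
```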

Service level agreements (SLA) #

Service level agreements (SLAs) are commitments about the availability and freshness of data in a data product. SLAs help data practitioners design data consumption pipelines more effectively.

Since data products are continually updated with new information, SLAs can be drafted to include commitments, such as:

- The latest time by which new data is expected in the data product

- The maximum expected delay (in case of the real-time data stream) for late-arriving events

- Metrics like Mean Time Between Failures (MTBF) and Mean Time To Recovery (MTTR)

Metadata (Data governance) #

Data governance specifications in a data contract help you understand security and privacy restrictions and check whether your data products are in compliance.

For instance, pseudonymizing or masking an attribute limits the ways that attribute can be consumed. Similarly, any PII included in the product must follow data privacy and protection regulations and standards such as GDPR, HIPAA, and PCI DSS.

The most common aspects of data governance rules are:

- User roles that can access a data product

- Time limit to access a data product

- Names of the columns with restricted access/visibility

- Names of columns with sensitive information

- How sensitive data is represented within the dataset

- Other metadata — data contract version, name and contact of data owners

Data contract example: What does a data contract look like? #

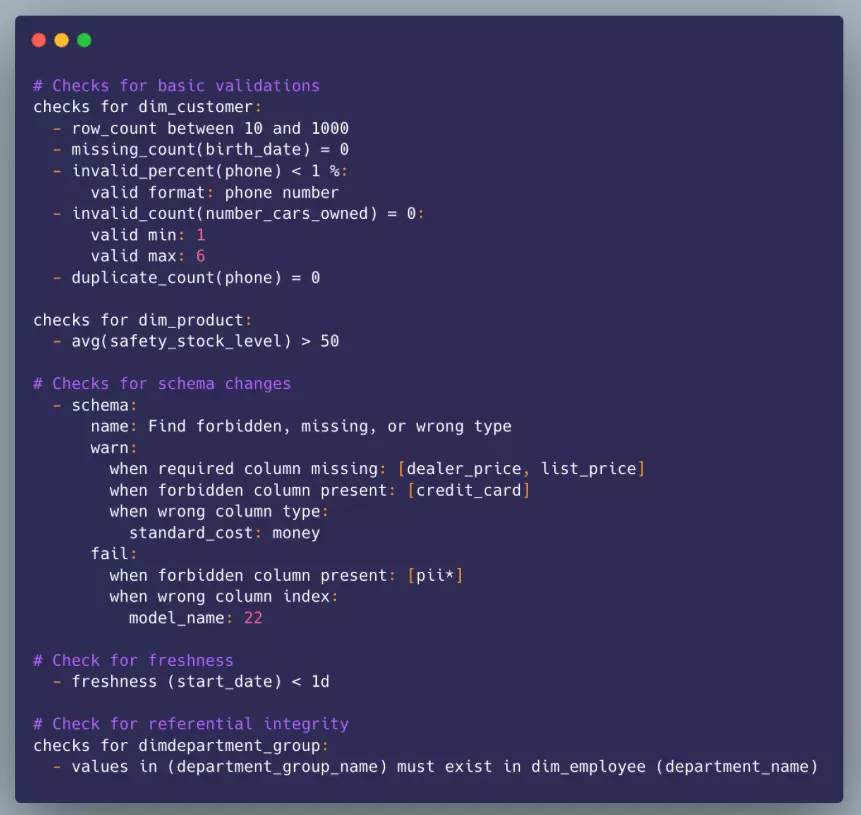

Here’s an example of a data contract defined in YAML format, covering schema, semantics, SLA and governance-related checks.

We created this contract using Soda.io, a popular data quality framework.

An example of a data contract created using Soda.io. Image by Atlan.
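For readers who think in code rather than YAML, the four groups of agreements can also be sketched as a plain Python structure. This is only an illustration: the `DataContract` class, its field names, and the sample values are hypothetical and do not mirror Soda.io's format.

```python
from dataclasses import dataclass, field


@dataclass
class DataContract:
    """Hypothetical in-memory shape of a contract (not Soda.io's format)."""
    name: str
    version: str
    owner: str
    schema: dict = field(default_factory=dict)      # column name -> type name
    semantics: list = field(default_factory=list)   # human-readable business rules
    sla: dict = field(default_factory=dict)         # freshness and recovery commitments
    governance: dict = field(default_factory=dict)  # roles and sensitive columns


# Made-up contract for an "orders" data product.
orders_contract = DataContract(
    name="orders",
    version="1.0.0",
    owner="orders-team@example.com",
    schema={"order_id": "string", "customer_id": "string", "amount": "decimal"},
    semantics=["fulfillment_date >= order_date"],
    sla={"max_staleness_hours": 24},
    governance={"allowed_roles": ["analyst"], "sensitive_columns": ["customer_id"]},
)

print(sorted(orders_contract.schema))
```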

Data contracts tooling: Are there any tools for creating data contracts? #

There are no universal frameworks for creating, publishing, or validating data contracts. Most tools in this space are in their early stages, and the data community is still warming up to the potential benefits of data contracts.

Some tools like Schemata, which is a framework that focuses on contract modeling, are a step in the right direction.

In the absence of standardized universal frameworks, the process of authoring and validating data contracts remains fragmented within the data architecture and depends on data flow (batch/real-time), choice of data serialization, storage and/or processing systems.

Types of data validation #

Data validation can address varying levels of granularity. It can be:

- Record-level validation: Some validation rules (mostly schema rules) are applied one record at a time within a structured dataset. For example, data type rules or rules about an attribute's value range are validated at record level.

- Dataset-level validation: More complex validations may need to be applied to the whole dataset or even a chunk of it. For example, complex rules aggregating over multiple records in a dataset are validated at dataset level.
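The difference between the two levels is easy to see in a small Python sketch. The rules and the sample rows here are assumptions chosen for illustration: a value range check that needs only one row, and a uniqueness check that needs the whole dataset.

```python
def validate_record(row: dict) -> bool:
    """Record-level rule: quantity must be an integer between 1 and 1000."""
    qty = row.get("quantity")
    return isinstance(qty, int) and 1 <= qty <= 1000


def validate_dataset(rows: list[dict]) -> list[str]:
    """Dataset-level rules that need to see every row at once."""
    problems = []
    if not rows:
        problems.append("dataset is empty")
    ids = [r["invoice_id"] for r in rows]
    if len(ids) != len(set(ids)):
        problems.append("invoice_id values are not unique")
    return problems


# Hypothetical sample rows.
rows = [
    {"invoice_id": "A1", "quantity": 3},
    {"invoice_id": "A2", "quantity": 0},  # fails the record-level rule
    {"invoice_id": "A1", "quantity": 5},  # duplicate id, visible only in aggregate
]

invalid_rows = [r for r in rows if not validate_record(r)]
print(invalid_rows)
print(validate_dataset(rows))
```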

Validation checks can occur at two points in a data pipeline — in motion or at rest.

In-motion validation #

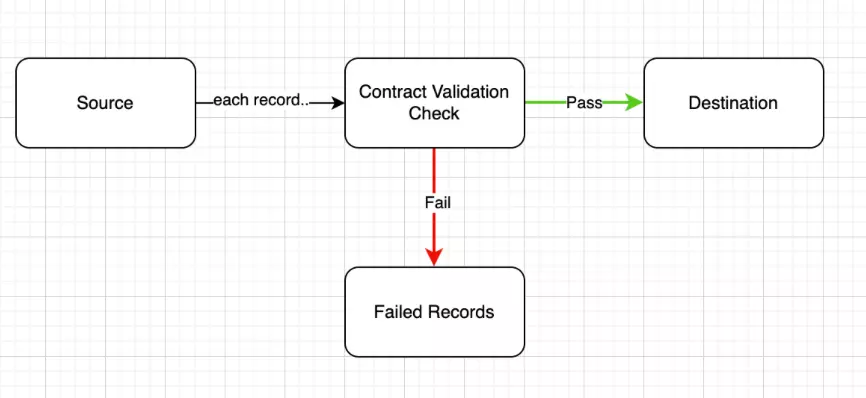

In this type of validation, some aspects of the contract get validated as data moves through the pipeline. Such checks are more common in events data or real-time data (for example, CDC events).

The advantage of this approach is that it filters out invalid records even before they land at the final destination. The limitation is that, because of its granularity, it mostly only validates schema rules and other record-level rules.

In-motion validation. Image by Atlan.

At-rest validation #

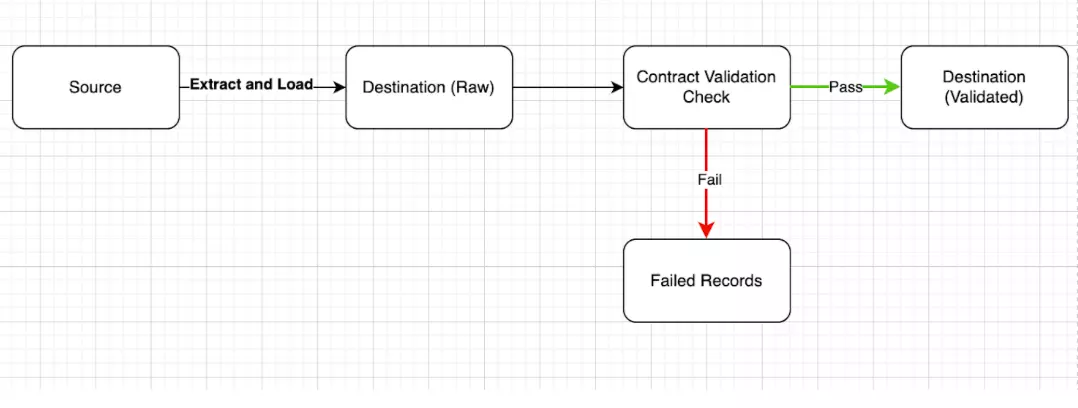

When data is ingested or transmitted as-is into a raw data lake, validation checks can be applied after the fact.

In this approach, the validation step behaves like a transformation step — it applies contract rules and filters out invalid data.

The advantage of this approach is that it can support both record-level as well as dataset-level validations.

At-rest validation. Image by Atlan.

Another use of at-rest validation is when contracts are enforced across trust boundaries, for example, when data is shared by a third-party producer or when the trust level between producers and consumers is low.

In such situations, the consumer applies contract validations after the data is ingested into the consumer’s data lake.

How to implement data contracts by validating various agreements #

Each agreement within a data contract — schema, semantics, SLA, governance — must be validated to properly implement data contracts.

Schema validations #

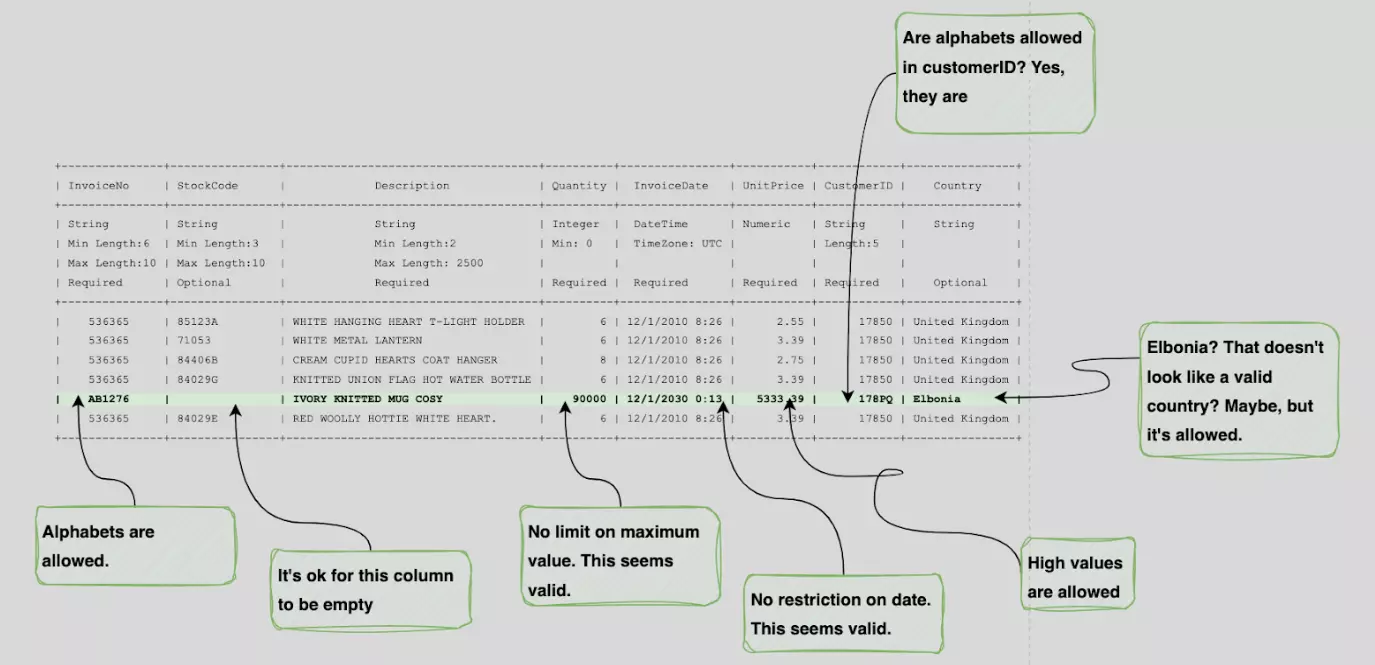

Schemas are essential to ensure the validity of each record in a dataset. Here’s an example of a dataset without schema information. This is an invoice showing orders from an online retail store.

An analyst might have several questions about the data type, format, range, and more.

The questions an analyst might have about each record in a dataset. Image by Atlan.

However, if you attach schema rules, then the analyst would come to conclusions as shown in the image below.

How schema validation rules can address the questions an analyst might have about records in a dataset. Image by Atlan.

Schema validations can be implicit or explicit.

Implicit schema validation #

Some of the data transfer and storage formats implicitly support schema definitions because they make schema specifications mandatory.

If you use such a format, there is no need for additional, external schema validators. For example, data serialization formats like Protobuf and Avro implicitly enforce schema rules.

Storage formats such as Parquet, as well as most relational database systems, also enforce a schema on data.

Explicit schema validation #

For schema-less data formats such as JSON or CSV, or in cases where schema rules are not covered by implicit formats, an external validation framework becomes necessary. Most well-known quality and observability frameworks support schema validations.
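As a rough idea of what an explicit validator does for a schema-less format, here is a small sketch using only Python's standard library. The `SCHEMA` mapping, the column names, and the CSV rows are all hypothetical; a real project would more likely lean on one of the frameworks mentioned above.

```python
import csv
import io

# Hypothetical schema: column -> (parser used to test the type, required?)
SCHEMA = {
    "invoice_no": (str, True),
    "quantity": (int, True),
    "unit_price": (float, True),
    "description": (str, False),
}


def schema_errors(row: dict) -> list[str]:
    """Check one CSV row (all values arrive as strings) against SCHEMA."""
    errors = []
    for column, (parser, required) in SCHEMA.items():
        raw = row.get(column)
        if raw is None or raw == "":
            if required:
                errors.append(f"{column}: missing required value")
            continue
        try:
            parser(raw)
        except ValueError:
            errors.append(f"{column}: {raw!r} is not a valid {parser.__name__}")
    return errors


# Made-up CSV content standing in for a file.
data = io.StringIO(
    "invoice_no,quantity,unit_price,description\n"
    "536365,6,2.55,WHITE HANGING HEART\n"
    "536366,six,1.85,\n"
    ",2,3.39,HAND WARMER\n"
)

# Map each data row number to the list of schema errors found in it.
report = {i: schema_errors(row) for i, row in enumerate(csv.DictReader(data), start=1)}
print(report)
```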

Semantic validations #

Semantic validations ensure that the data is logically valid and makes sense to the business domain.

For example, in an e-commerce business, the business logic could state that the customer-id in the Orders dataset must match the customer-id in the Customers dataset. This would be a semantic rule and would need to be validated as such.

Implementing semantic validations requires an in-depth understanding of the business domain. Unlike schema validations, semantic validations cannot be added implicitly to data formats. They need to be explicitly enforced via a validation framework.

Semantic checks can be related to:

- Value integrity

- Outliers

- Referential integrity

- Event order and state transitions

Let’s explore each aspect further.

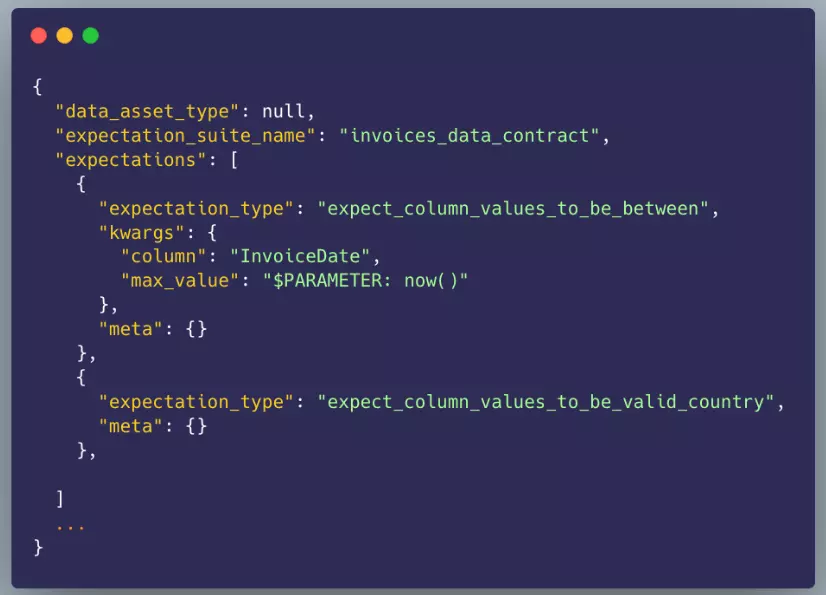

Value integrity checks #

Integrity checks validate data against business rules so as to detect unacceptable, illogical values caused by misconfigured systems, bugs in the business logic, or even test data leaking into the production environment.

For example, business rules might dictate that an invoice can never be future-dated, or that values like country and currency code must always comply with ISO and other similar standards.

You can have prebuilt checks incorporated within popular data quality frameworks and then include more specific checks with custom code.

Here’s an example from Great Expectations that includes a predefined semantic check for country names based on GeoNames.

An example of a value integrity check in Great Expectations, which includes a predefined semantic check. Image by Atlan.

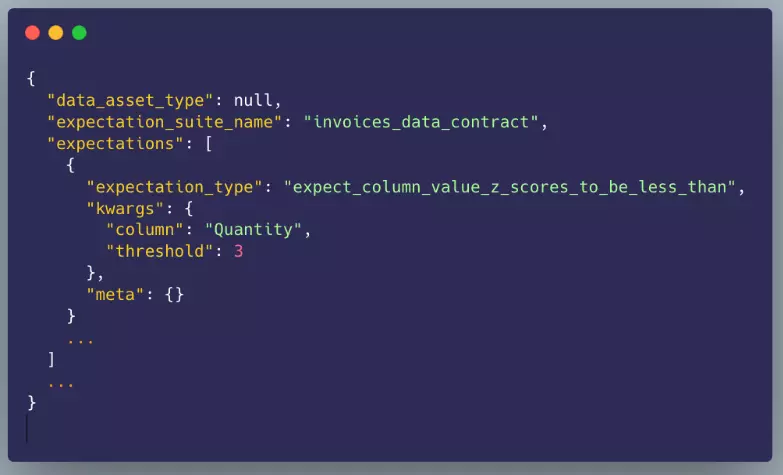

Outliers check #

A deep business context and understanding is a prerequisite to crafting rules regarding outliers. For example, how far above or below the average (arithmetic mean or median) can a value be, before being considered an outlier?

You can use statistical techniques such as IQR or Z-score to spot outliers. Here’s how a Z-score-based rule for outliers looks in Great Expectations.

An example of a Z-score-based rule in Great Expectations. Image by Atlan.
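For intuition, the Z-score rule itself fits in a few lines of plain Python. This is a generic sketch and not the Great Expectations implementation; the threshold of 3 and the sample order totals are assumptions.

```python
from statistics import mean, pstdev


def zscore_outliers(values: list[float], threshold: float = 3.0) -> list[float]:
    """Return the values whose absolute Z-score exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values are identical, so nothing can be an outlier
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Made-up order totals with one suspicious value.
order_totals = [20, 22, 19, 21, 23, 20, 18, 22, 21, 20, 19, 500]
print(zscore_outliers(order_totals))
```

On small samples, a single extreme value inflates the standard deviation and can hide itself, which is one reason an IQR-based rule is sometimes preferred.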

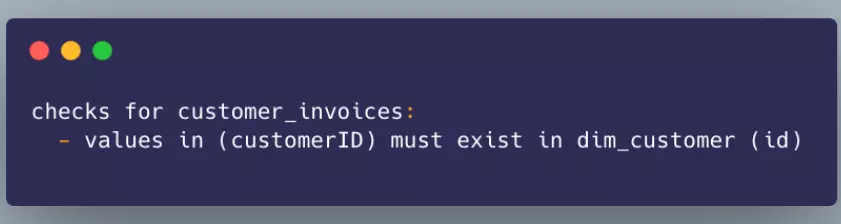

Referential integrity check #

Business entities are related to each other. For example, customers and invoices usually have a one-to-many relationship. Therefore, an invoice is valid only if it has a CustomerID that actually exists in the customers dataset. This type of constraint is called a referential integrity constraint.

Similar relationship constraints could exist across datasets or even within a dataset across multiple attributes. For example, in a dataset called Taxi Rides, a business rule could mandate that the end timestamp for a ride must always be AFTER the start timestamp.

Poor referential integrity can mean missing or incomplete data or even bugs in business logic. Data contracts must always account for such possibilities.

Here is an example referential integrity check in Soda.io.

An example of referential integrity check in Soda.io. Image by Atlan.
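Outside any framework, the same idea boils down to a set lookup. The sketch below assumes two small in-memory datasets with a shared `CustomerID` column; the rows are invented for illustration.

```python
def orphaned_invoices(invoices: list[dict], customers: list[dict]) -> list[dict]:
    """Return invoices whose CustomerID does not exist in the customers dataset."""
    known_ids = {c["CustomerID"] for c in customers}
    return [inv for inv in invoices if inv["CustomerID"] not in known_ids]


# Hypothetical sample data.
customers = [{"CustomerID": 17850}, {"CustomerID": 13047}]
invoices = [
    {"InvoiceNo": "536365", "CustomerID": 17850},
    {"InvoiceNo": "536367", "CustomerID": 13047},
    {"InvoiceNo": "536370", "CustomerID": 99999},  # no such customer
]

orphans = orphaned_invoices(invoices, customers)
print([inv["InvoiceNo"] for inv in orphans])
```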

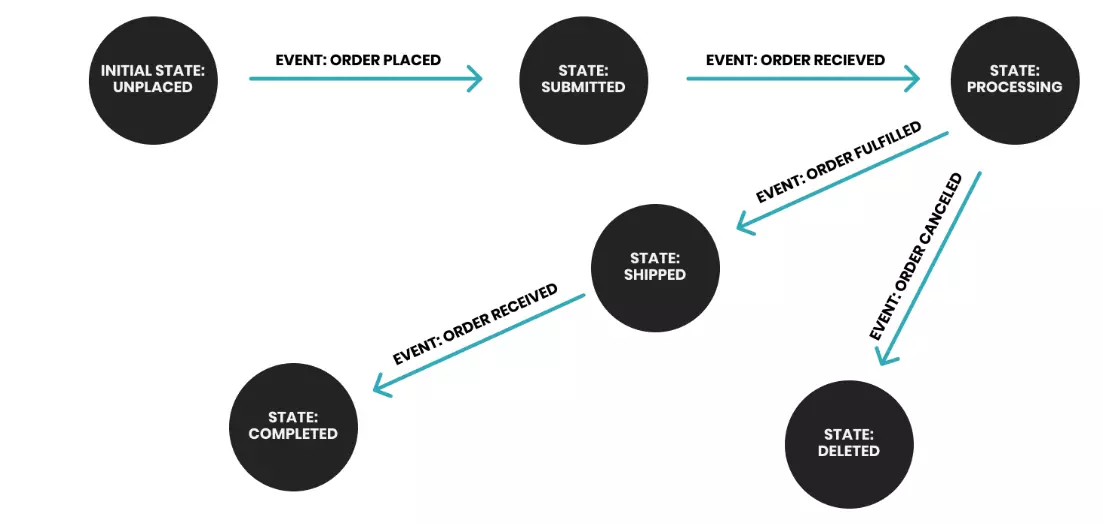

Event order and state transitions checks #

Event order and state transition checks verify whether the historical sequence of state transitions in a business process is logically valid.

Most, if not all, business processes can be modeled as finite-state machines responding to the sequence of events administered.

Visualizing the event order. Image by Headway.

So, from a business process perspective, events must depict logically valid state transitions.

For example, if the events show that an order was in the shipped state BEFORE it was in the submitted state, it clearly indicates an invalid transition.

Errors such as these tend to occur due to bugs in business logic. Sometimes they are also caused by the inherent limitations in the distributed data processing systems which may lead to synchronization problems while collecting or loading data.

In real-time data pipelines, events can often arrive out of order or appear to be missing. Contract rules must account for such events.

Existing frameworks do not provide out-of-the-box support for such semantic checks. So, you have to create custom validation checks.
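A custom check of this kind can be as simple as a lookup table of allowed transitions. The sketch below is one possible shape, not a standard: the order lifecycle in `ALLOWED_TRANSITIONS`, the `ts` and `state` fields, and the sample events are all assumptions.

```python
# Hypothetical order lifecycle: state -> states it may move to next.
ALLOWED_TRANSITIONS = {
    "submitted": {"paid", "cancelled"},
    "paid": {"shipped", "cancelled"},
    "shipped": {"delivered"},
    "delivered": set(),
    "cancelled": set(),
}


def invalid_transitions(events: list[dict]) -> list[tuple[str, str]]:
    """Sort one order's events by timestamp and return the illegal state jumps."""
    ordered = sorted(events, key=lambda e: e["ts"])
    states = [e["state"] for e in ordered]
    return [
        (prev, curr)
        for prev, curr in zip(states, states[1:])
        if curr not in ALLOWED_TRANSITIONS.get(prev, set())
    ]


# Events for a single order, listed in arrival order rather than event-time order.
events = [
    {"ts": 3, "state": "paid"},
    {"ts": 1, "state": "shipped"},    # recorded before the order was submitted
    {"ts": 2, "state": "submitted"},
]
print(invalid_transitions(events))
```

Sorting by event timestamp before checking is what lets the rule tolerate out-of-order arrival while still flagging a genuinely invalid history.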

It is also important to base the semantic rules on evidence-backed business processes that represent valid business scenarios. Basing contract rules on subjective judgments or incorrect assumptions about business invariants can lead to spurious validation failures.

SLA validations #

Service Level Agreements (SLA) in data contracts include commitments on data freshness, completeness, and failure recovery.

Data freshness #

Downstream processing and analysis depend on the parent dataset being up-to-date.

For example, reports on daily count of orders should only be run after the orders table is fully updated with the previous day’s data. And for real-time data pipelines, data is usually expected to be no older than a couple of hours.
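A freshness commitment like this reduces to comparing the latest load time against a maximum allowed age. Here is a minimal sketch; the function name, the 24-hour and 2-hour limits, and the timestamps are illustrative assumptions. The current time is passed in explicitly so the check is easy to test.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_loaded_at: datetime, max_age: timedelta, now: datetime) -> bool:
    """True if the newest data is no older than the SLA allows."""
    return now - last_loaded_at <= max_age


# Hypothetical timestamps.
now = datetime(2024, 3, 2, 6, 0, tzinfo=timezone.utc)
orders_loaded = datetime(2024, 3, 2, 1, 30, tzinfo=timezone.utc)
stream_loaded = datetime(2024, 3, 2, 2, 0, tzinfo=timezone.utc)

print(is_fresh(orders_loaded, timedelta(hours=24), now))  # daily batch SLA
print(is_fresh(stream_loaded, timedelta(hours=2), now))   # real-time SLA
```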

Failure recovery metrics #

Incident metrics such as MTTR can be added to the data contracts to capture the producers’ commitments about data availability. This requires careful tracking of incidents and extracting the data from application monitoring and incident management tools.

For example, data from tools like PagerDuty or incident management systems is extracted to measure and report incident metrics. Once this data is available in a dataset, any type of quality framework could be used to validate incident reports data against their contract.
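Once incidents are available as rows with an opened and a resolved timestamp, MTTR is just an average. The sketch below assumes that shape; the incident data and the 4-hour commitment are made up, and no PagerDuty API is involved.

```python
from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to recovery: the average of (resolved - opened)."""
    total = sum((resolved - opened for opened, resolved in incidents), timedelta())
    return total / len(incidents)


# Hypothetical (opened, resolved) pairs extracted from an incident tool.
incidents = [
    (datetime(2024, 3, 1, 10, 0), datetime(2024, 3, 1, 11, 0)),
    (datetime(2024, 3, 5, 9, 0), datetime(2024, 3, 5, 12, 0)),
    (datetime(2024, 3, 9, 14, 0), datetime(2024, 3, 9, 16, 0)),
]

committed_mttr = timedelta(hours=4)  # assumed contract commitment
print(mttr(incidents), mttr(incidents) <= committed_mttr)
```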

Metadata and data governance validations #

There isn’t much off-the-shelf support for authoring or validating governance specifications in contracts. But it is straightforward enough to develop in-house frameworks to serve this purpose.

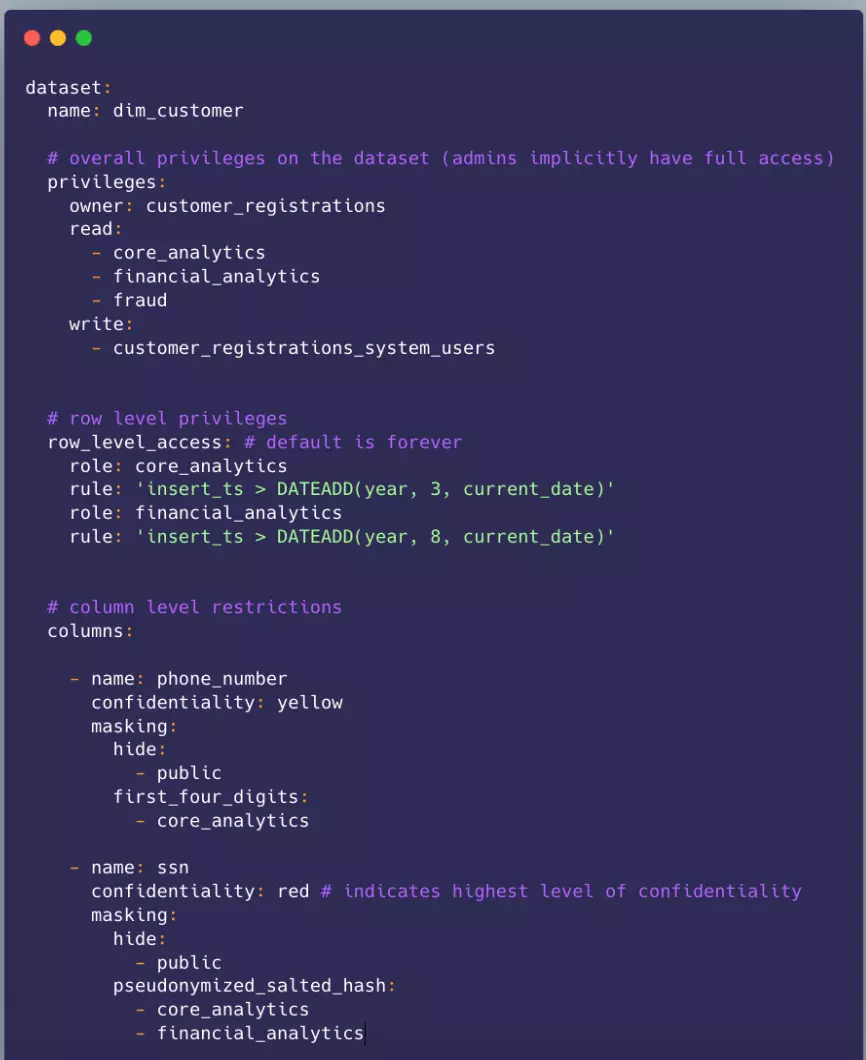

Here’s an example of the governance contract of a dataset in YAML format. This file lists the access privileges of a dataset, including table and row-level access policies. For sensitive columns, it details the masking policies applicable for each role.

An example of the data governance contract in YAML format. Image by Atlan.

Most modern data warehouses like Snowflake, Databricks, and BigQuery have extensive support for a rich information schema that can be queried (often using plain SQL) to validate the governance rules.

These rules can be implemented as custom, user-defined checks in existing frameworks like Soda.io or Great Expectations, both of which provide a well-documented process for adding such checks.
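The core of such a custom check is a comparison between what the contract allows and what the warehouse actually grants. In this sketch, the `contract` dictionary and the two sets standing in for information schema query results are hypothetical; how you fetch the real grants and masking policies depends on your warehouse.

```python
# Contract side: which roles may read the dataset, and which columns must be masked.
contract = {
    "allowed_roles": {"analyst", "finance"},
    "masked_columns": {"email", "card_number"},
}

# Stand-ins for rows queried from the warehouse's information schema.
actual_grants = {"analyst", "finance", "intern"}
actual_masked = {"email"}


def governance_violations(contract: dict, grants: set, masked: set) -> list[str]:
    """List roles with unexpected access and columns missing a masking policy."""
    violations = []
    for role in sorted(grants - contract["allowed_roles"]):
        violations.append(f"role {role!r} has access but is not in the contract")
    for column in sorted(contract["masked_columns"] - masked):
        violations.append(f"column {column!r} should be masked but is not")
    return violations


for violation in governance_violations(contract, actual_grants, actual_masked):
    print(violation)
```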

How to handle contract validation failures #

Contract validation failures are handled in different ways, depending on the severity of failure and the relative importance of the dataset. These include:

- Alerts only

- All or none

- Ignore failed records

- Dead letter queue

Alerts only #

For low-severity validation failures, the system notifies the data producers and their stakeholders (humans as well as machines). No other automatic action is taken. The data owner then decides the course of investigation and response.

When alerted in this way, the downstream pipelines can choose to continue working or pause until the upstream issue is fixed. It is possible to configure data orchestration systems to create dependencies between upstream and downstream datasets.

All or none #

For high severity failures (and critical datasets), the failures are deemed catastrophic and the system alerts the downstream pipelines just as before. But this time, the dataset is marked unavailable and all the updates to the dataset are halted until the issue is investigated/fixed.

Ignore failed records #

In some cases, typically raw event tracking, where a small fraction of data is expected to be bad because of tracking issues, failed records are simply ignored until their number reaches a set threshold.

After that, the failures are deemed catastrophic and treated the same way as in the “All or none” approach above.

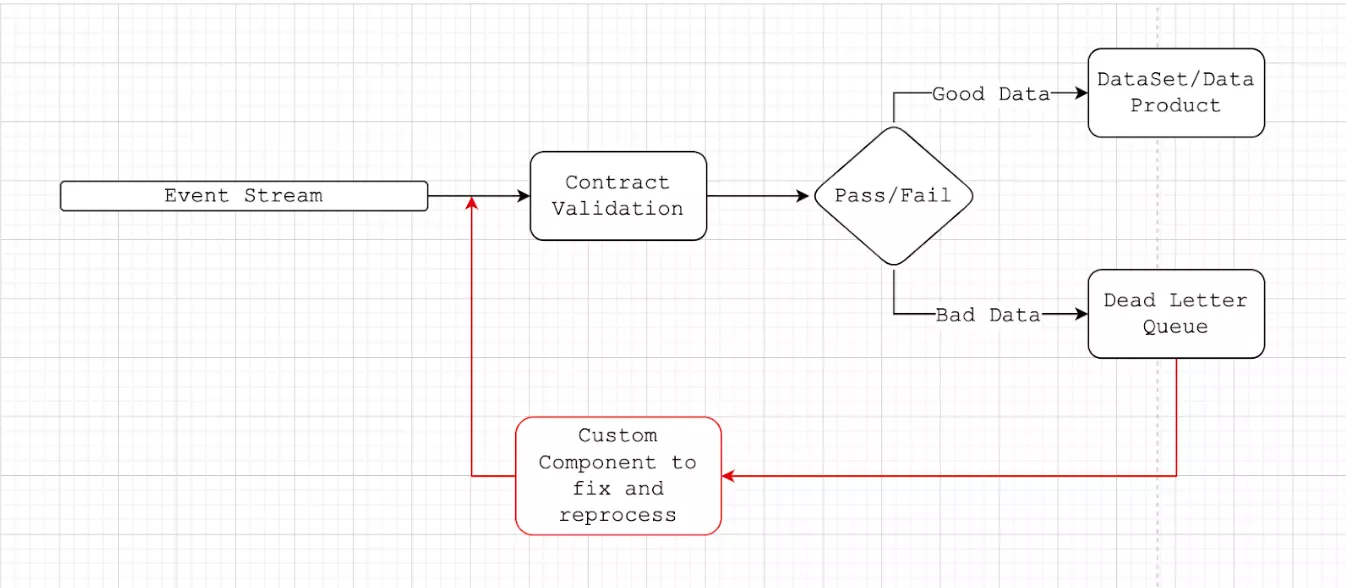

Dead letter queue #

In some cases, like in event-based data pipelines (including CDC), failed records are not ignored but stored in a separate pile. If necessary, a custom data pipeline is written to fix and reprocess the failed records.

A threshold may be used to indicate whether the failure rate is too high to continue, in which case the pipeline is stopped completely.

Dead letter queue workflow. Image by Atlan.
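Here is a rough Python sketch of that workflow: valid records pass through, invalid ones are set aside, and the run stops if too many fail. The function name, the 20% threshold, and the minimum of five records before the rate is judged are all assumptions for illustration.

```python
def process_with_dlq(records, is_valid, max_failure_rate=0.2):
    """Route invalid records to a dead letter list; stop if too many fail."""
    accepted, dead_letters = [], []
    for seen, record in enumerate(records, start=1):
        (accepted if is_valid(record) else dead_letters).append(record)
        # Judge the failure rate only once a few records have been seen.
        if seen >= 5 and len(dead_letters) / seen > max_failure_rate:
            raise RuntimeError(f"failure rate too high after {seen} records")
    return accepted, dead_letters


# Made-up events; one of them is missing its amount.
amounts = [10, 25, None, 40, 15, 30, 5, 12, 8, 20]
events = [{"id": i, "amount": a} for i, a in enumerate(amounts)]

accepted, dead_letters = process_with_dlq(events, lambda e: e["amount"] is not None)
print(len(accepted), len(dead_letters))
```

The records left in `dead_letters` are the ones a separate repair pipeline would later fix and reprocess.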

Lifecycle of data contracts and best practices #

Data products can get replaced, split or merged with one another as business rules change over time. As data products evolve and change, their contracts must be realigned to reflect these changes.

It is not unusual for data owners to modify the schema and/or semantics of their data products. Moreover, teams often go through continual reorganization (Heidi Helfand calls this Dynamic Reteaming). This could also lead to changes in data products.

Data products may even change ownership and/or get redesigned to keep up with the changed organizational structures.

Changing a data product is costly and requires significant time, effort and re-education. Data contracts help by always reflecting and tracking all such changes as and when they occur.

If the contracts don’t evolve along with the data products, it can lead to situations where:

- Data owners shy away from making important and necessary changes to their products, for fear of breaking the contract. In such situations, data products no longer reflect the new/changed business reality.

- Data owners make random, on-the-fly changes to their data products without honoring contractual commitments. In such situations, downstream systems which depend on these products could get severely impacted. This can in turn lead to depletion of trust in data contracts.

Here are some best practices to avoid such scenarios:

- Decouple data products from transactional data

- Avoid brittle contracts

- Avoid backward-incompatible changes

- Ensure versioning of your contracts

- Republish all data

Decouple data products from transactional data #

A common anti-pattern in designing the contract is simply pushing transactional data as-is into the data product. This is problematic because transactional data contains low-level details that need not be exposed in the data product. This also creates a tight coupling and leaky abstraction between the transactional store and the data product.

Even minor changes in the shape of transactional data will require the contract to change. This anti-pattern is sometimes exacerbated by CDC frameworks that convert every record from a transactional database into an event.

One recommended way to mitigate this is the Outbox Pattern, where the data contract is intentionally designed at a higher level of abstraction, so that minor implementation-level changes in the transactional data do not force the contract to change. The data product includes only those attributes that consumers require and nothing more.

Avoid brittle contracts #

Sometimes, there is a tendency to build overly strict contracts in the hope that this will improve the quality of data.

For instance, the contract may impose strict restrictions on the length of each attribute or a limitation on the possible values (or formats) that an attribute can take.

While these are well intended, they can make the contract brittle and prone to breakage even with the smallest of changes.

The contract should instead be flexible and make room for organic changes, at least in the foreseeable future.

Avoid backward-incompatible changes #

Whenever possible, it is best to allow only backward-compatible changes to data contracts.

Backward-compatible changes do not break an existing contract. For example, adding a new attribute to a data product is a safe change, but removing an existing attribute is not.
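A compatibility check along these lines can be automated by comparing the old and new schema. The sketch below is a simplified assumption of what "breaking" means: it flags removed columns and, as an extra assumption not stated above, changed types. The schemas are invented.

```python
def breaking_changes(old: dict, new: dict) -> list[str]:
    """Compare two column -> type mappings and list changes that break consumers."""
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column {column!r}")
        elif new[column] != old_type:
            problems.append(f"changed type of {column!r}: {old_type} -> {new[column]}")
    # Columns that exist only in `new` are additive and therefore treated as safe.
    return problems


# Hypothetical schema versions.
v1 = {"order_id": "int", "amount": "decimal", "coupon": "string"}
v2_safe = {**v1, "channel": "string"}                    # adds a column
v2_breaking = {"order_id": "uuid", "amount": "decimal"}  # retypes one, drops one

print(breaking_changes(v1, v2_safe))
print(breaking_changes(v1, v2_breaking))
```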

Ensure versioning of contracts (Contracts migration) #

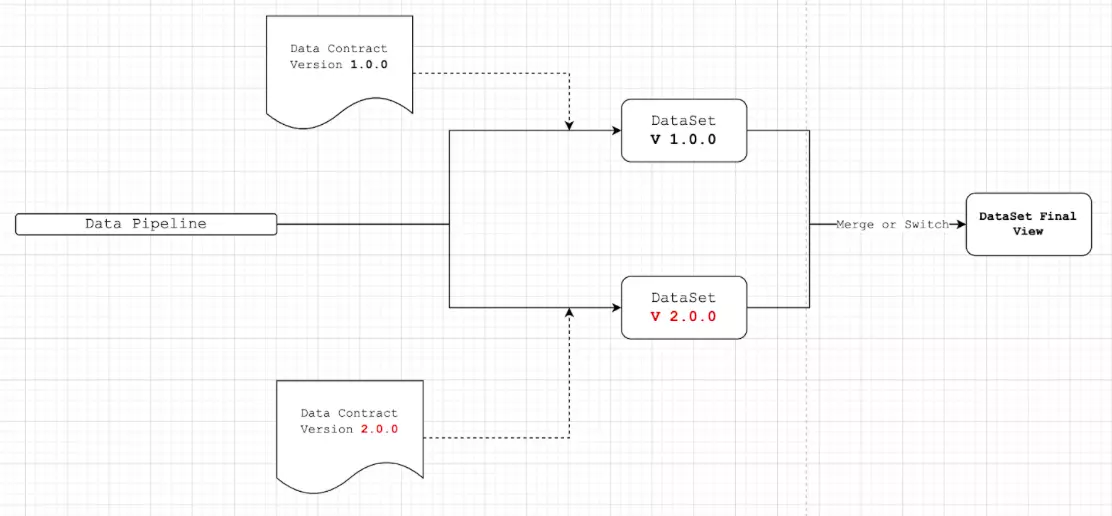

Much like APIs, data contracts (and therefore data products) must follow a versioning scheme.

Let’s consider Semantic Versioning.

In this approach, the contract has a version number, and data pipelines are designed such that the dataset storage location (table or schema) is tied to the contract’s version. When the contract version gets incremented, data-producing systems automatically push the new dataset/data product to a new location.

A view of the underlying data is often exposed to the data consumers. The advantage of using a view is that it creates a facade to encapsulate the underlying tables and version changes can be incorporated in a safe way (either by merging the versions or switching) into the final data product.

Semantic versioning. Image by Atlan.
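One way to tie storage location to the contract version is to derive the table name from the version number and repoint a view at it. The sketch below is an assumption-laden illustration: it keys the location on the major version only, the `_v<major>` naming is made up, and the generated `CREATE OR REPLACE VIEW` statement may need adjusting for your warehouse's SQL dialect.

```python
def versioned_table(dataset: str, version: str) -> str:
    """Map a semantic version to a storage location, keyed on the major version."""
    major = version.split(".")[0]
    return f"{dataset}_v{major}"


def view_ddl(dataset: str, version: str) -> str:
    """Build the statement that repoints the consumer-facing view."""
    return (
        f"CREATE OR REPLACE VIEW {dataset} AS "
        f"SELECT * FROM {versioned_table(dataset, version)}"
    )


print(versioned_table("orders", "1.4.2"))
print(versioned_table("orders", "2.0.0"))
print(view_ddl("orders", "2.0.0"))
```

Consumers keep querying `orders`, while the producer decides when the view switches from one versioned table to the next.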

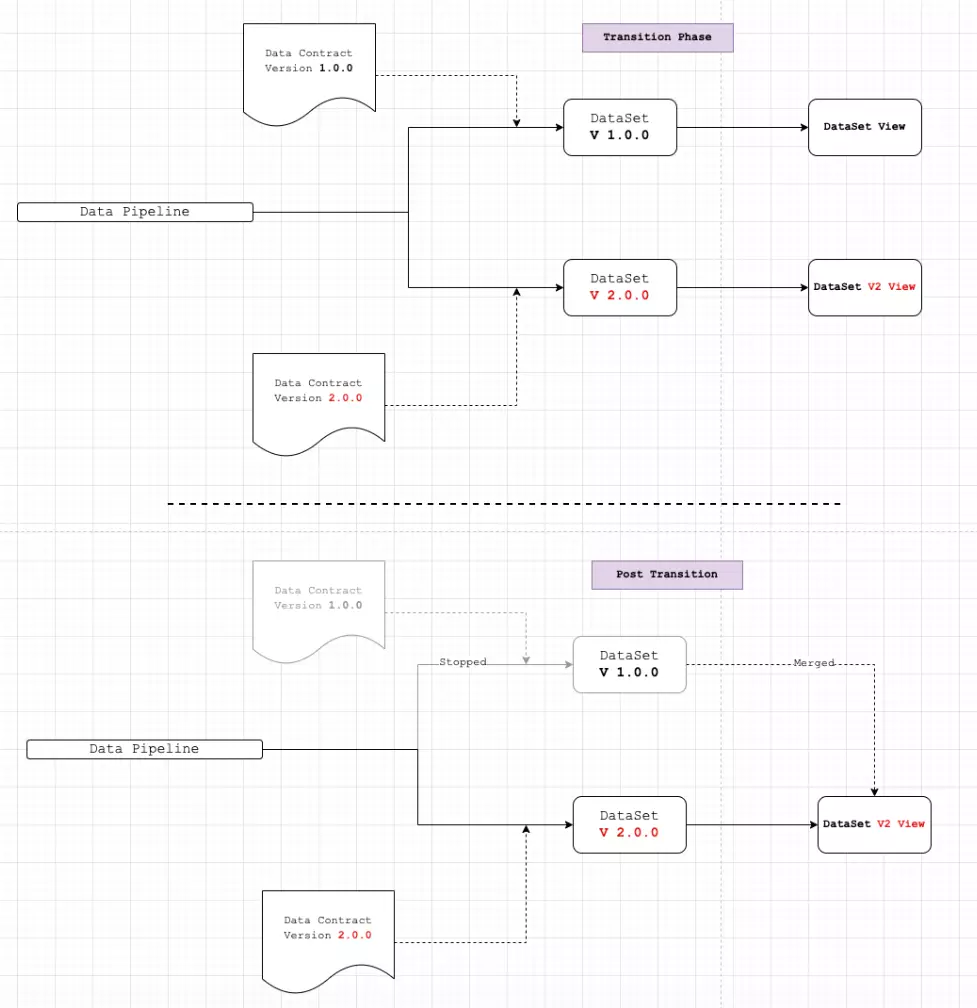

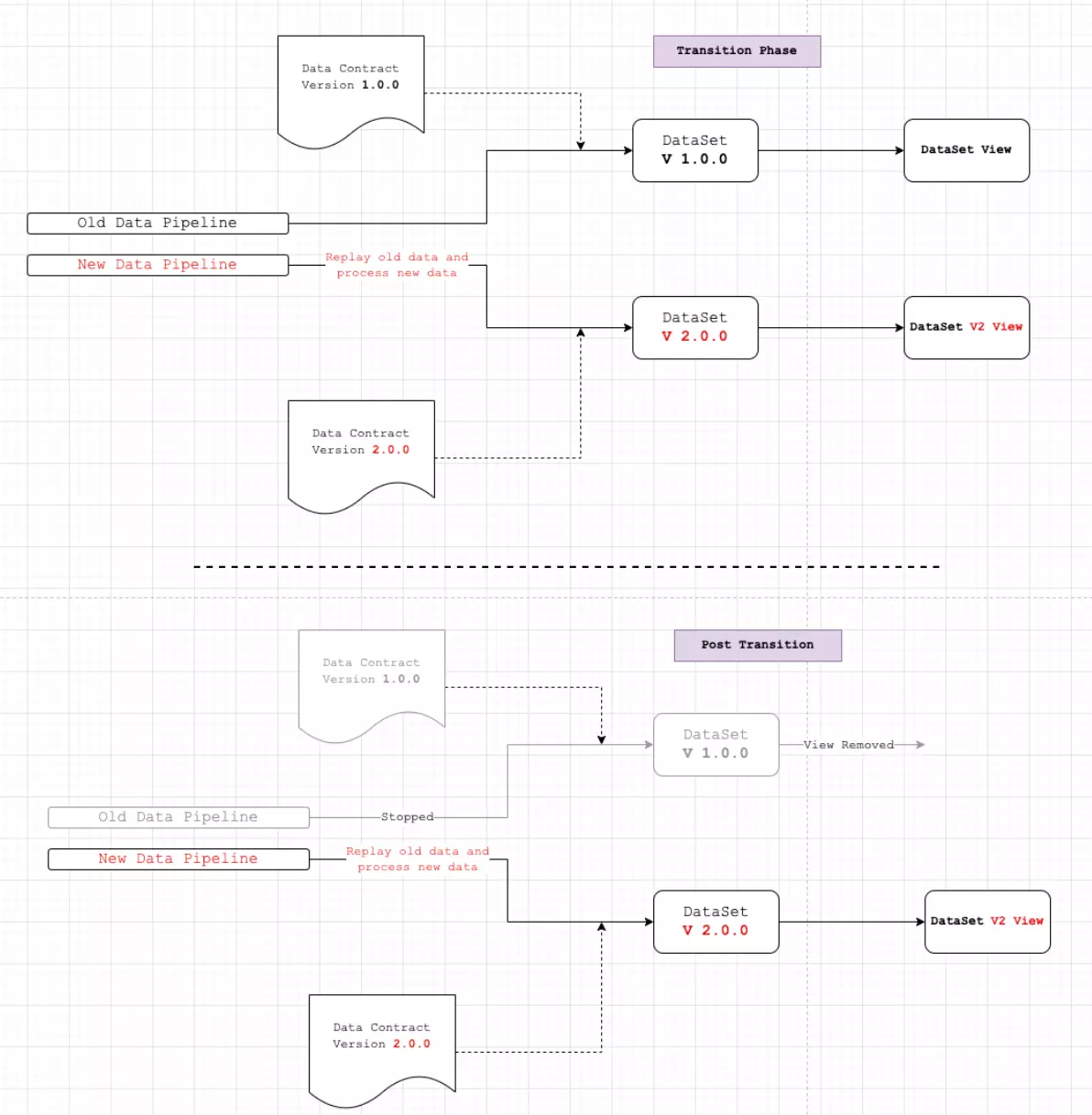

Similar to API version evolution, data contract version updates require a transition phase to be maintained while the old version is being deprecated.

During the transition, both old and new versions are supported until all the consumers have safely migrated to the newer version. Once the migration is complete, the old version of the data contract is fully removed and old data is merged into the view.

The transition phase for data contract updates. Image by Atlan.

Republish all data #

A more stringent yet costly technique is to replay all historical data and its dependent pipelines when the contract changes (at least from a certain point in time). The process looks similar to the migration technique described above, with a few key differences.

This is usually a very expensive operation but is worth considering if the new data contract looks dramatically different from the old version.

Republishing all data. Image by Atlan.

Also, read → Data contracts: The missing foundation | Driving Data Quality with Data Contracts

How organizations are making the most of their data using Atlan #

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader ahead of all others. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Enabling an AI-first and automation-first data ecosystem

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes #

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.


Wrapping up #

In the current economic context, it is all the more important to cut back on data expenses, create more ROI for data, and partner with existing tools and platforms, rather than spend more money and effort on solving data quality problems.

By left-shifting ownership to producers, data contracts are all set to assume an even more important role in ensuring data quality and promoting a collaborative culture within data-forward organizations.

It will be interesting to see how the community embraces data contracts and whether or not they get absorbed into the larger governance implementations in organizations.

FAQs about Data Contracts #

1. What are data contracts in the context of data engineering? #

Data contracts are formal agreements that define how data is exchanged between different systems or teams. They specify the structure, format, and rules for data exchange, ensuring consistency and reliability in distributed data architectures.

2. How do data contracts improve data reliability? #

By enforcing clear expectations for data structure and quality, data contracts help prevent unexpected changes or discrepancies. This ensures that all downstream systems receive consistent, reliable data, improving the overall trustworthiness of data pipelines.

3. Why are data contracts important for data pipelines? #

Data contracts act as guardrails for data pipelines, reducing the likelihood of errors or failures caused by incompatible data formats or unexpected schema changes. They streamline collaboration between teams and enhance the efficiency of data processing workflows.

4. How can teams implement data contracts effectively? #

Teams can implement data contracts by collaborating to define the necessary data schema, setting validation rules, and using automated tools to monitor compliance. It’s crucial to involve both producers and consumers of data in this process to ensure the contracts meet everyone’s needs.

5. What tools support automated data contract validation? #

Tools like Atlan, Apache Kafka, and dbt (Data Build Tool) offer features for defining and enforcing data contracts. These platforms help automate the validation of data against defined contracts, ensuring real-time compliance and reducing manual intervention.

6. How do data contracts relate to schema evolution? #

Data contracts play a crucial role in schema evolution by formalizing the process of making changes to a data schema. They ensure that any updates are backward-compatible or properly communicated to all stakeholders, minimizing disruptions in data pipelines.

Data contracts: Related reads #

- 10 Data Contract Open Questions You Need to Ask

- dbt Data Contracts: Quick Primer With Notes on How to Enforce

- Top 6 Database Schema Examples & How to Use Them!

- Data Validation: Types, Benefits, and Accuracy Process

- Data Validation vs Data Quality: 12 Key Differences

- 8 Data Governance Standards Your Data Assets Need Now

- Metadata Standards: Definition, Examples, Types & More!

- Data Governance vs Data Compliance: Ultimate Guide (2025)

- Data Compliance Management: Concept, Components, Steps (2025)

- 10 Proven Strategies to Prevent Data Pipeline Breakage
