Data Catalog Connectors: Value, Types, Setup, and More

As their name suggests, data catalog connectors connect the rest of your stack to your data catalog by extracting metadata from various data sources and tools.

They serve as a bridge between your data systems and the catalog, letting data practitioners integrate metadata from across the stack and access relevant, related information in one place.

Let’s discuss data catalog connectors: their types, the value they provide, and how to set them up.

Table of contents

- Data catalog connectors for the modern data stack

- What are data catalog connectors?

- How do data catalog connectors work?

- Data catalog connectors: A key consideration in the evaluation process

- How to set up data catalog connectors in Atlan

- Wrapping up

- Data catalog connectors: Related reads

Data catalog connectors for the modern data stack

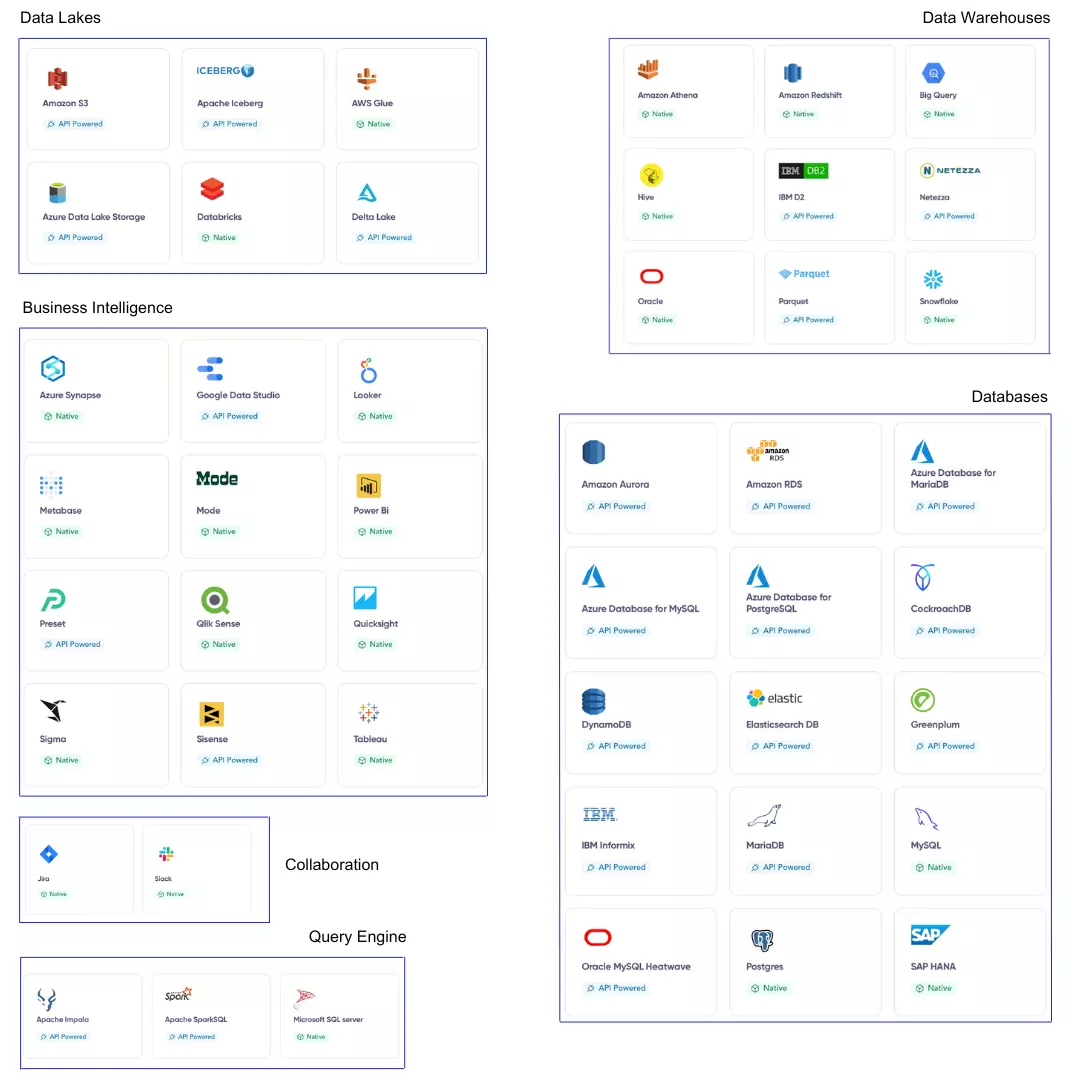

There are several types of data catalog connectors that connect various data sources and tools to your data catalog. The most commonly used connectors are for:

- Data warehouses: E.g. Amazon Redshift, BigQuery, Apache Hive

- Data lakes and lakehouses: E.g. Amazon S3, Databricks, Delta Lake

- Databases: E.g. Azure Database for MySQL, Elasticsearch, Microsoft SQL Server, PostgreSQL

- ETL/ELT tools: E.g. dbt, Fivetran

- Orchestration tools: E.g. Apache Airflow, Luigi, Dagster

- Query engines: E.g. Presto, Apache Spark SQL

- Data quality tools: E.g. Monte Carlo

- Collaboration tools: E.g. Slack, Jira

- BI tools: E.g. Looker, Power BI, Tableau

The different types of data catalog connectors - Image by Atlan.

What are data catalog connectors?

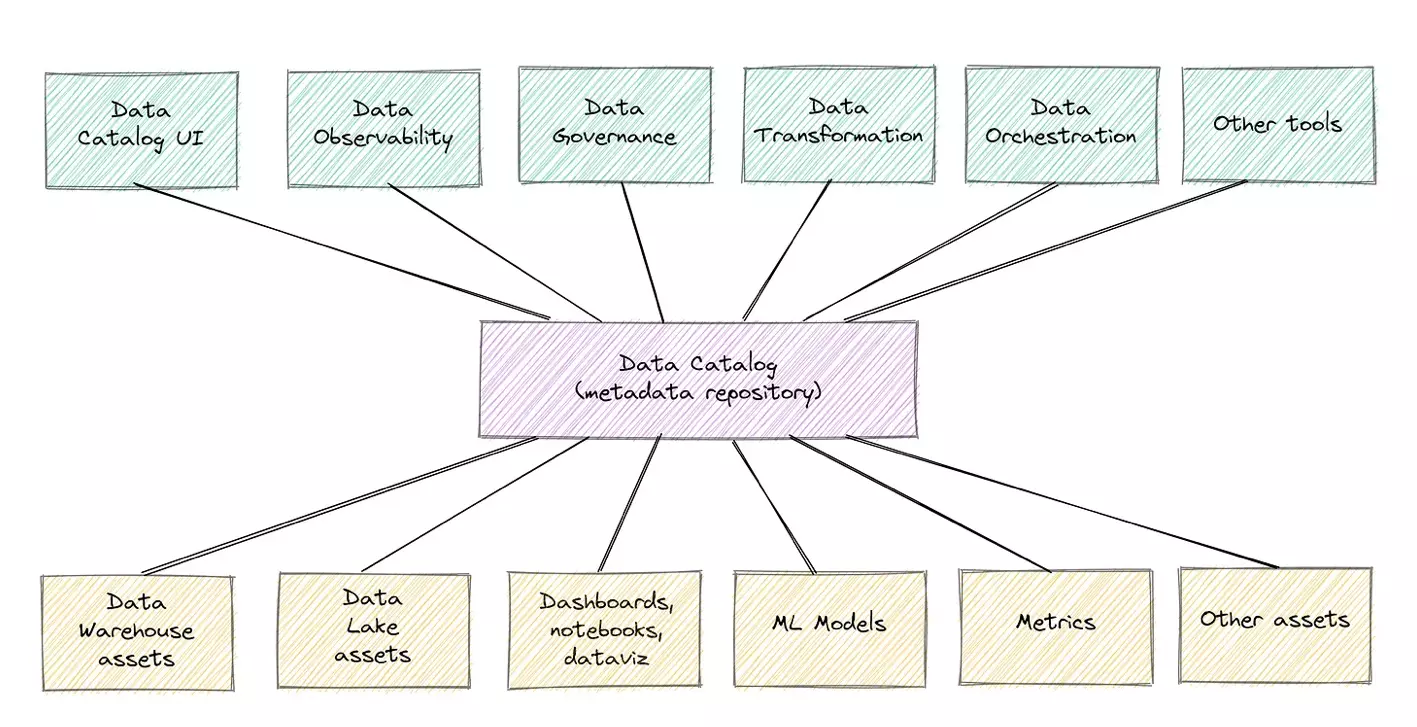

Data catalog connectors are components that help catalogs extract metadata from various data sources, platforms, and applications. They are what turn a data catalog into a central metadata repository for your data landscape.

A central metadata repository connected to the different tools (in green) and assets (in yellow) - Source: Towards Data Science.

Data catalog connectors crawl data assets to gather metadata, such as schemas, tables, columns, views, dashboards, workspaces, reports, metrics, and more.

This information helps you find the right data assets and get complete context to understand them.
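To make the crawl step concrete, here is a minimal sketch of what a connector does against a single source. It assumes a SQLite database only because Python ships with `sqlite3`; the function `crawl_sqlite_metadata` and its record layout are hypothetical illustrations, not the format any particular catalog uses.

```python
import sqlite3
from typing import Dict, List


def crawl_sqlite_metadata(conn: sqlite3.Connection) -> List[Dict[str, object]]:
    """Collect technical metadata (assets and their columns) from a SQLite database.

    Hypothetical helper: returns one record per table or view, mimicking the
    kind of output a catalog connector produces when it crawls a source.
    """
    # sqlite_master lists every schema object; skip SQLite's internal tables.
    assets = conn.execute(
        "SELECT name, type FROM sqlite_master "
        "WHERE type IN ('table', 'view') AND name NOT LIKE 'sqlite_%' "
        "ORDER BY name"
    ).fetchall()

    records: List[Dict[str, object]] = []
    for name, kind in assets:
        quoted = name.replace('"', '""')  # escape the identifier for the PRAGMA
        # Each row is (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{quoted}")').fetchall()
        records.append(
            {
                "asset": name,
                "asset_type": kind,
                "columns": [
                    {"name": col[1], "data_type": col[2], "primary_key": bool(col[5])}
                    for col in columns
                ],
            }
        )
    return records


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL);
        CREATE VIEW big_orders AS SELECT id, amount FROM orders WHERE amount > 100;
        """
    )
    for record in crawl_sqlite_metadata(conn):
        print(record)
```

A real connector would typically query each source's own system catalog, such as `information_schema` in PostgreSQL, rather than SQLite's `sqlite_master`.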

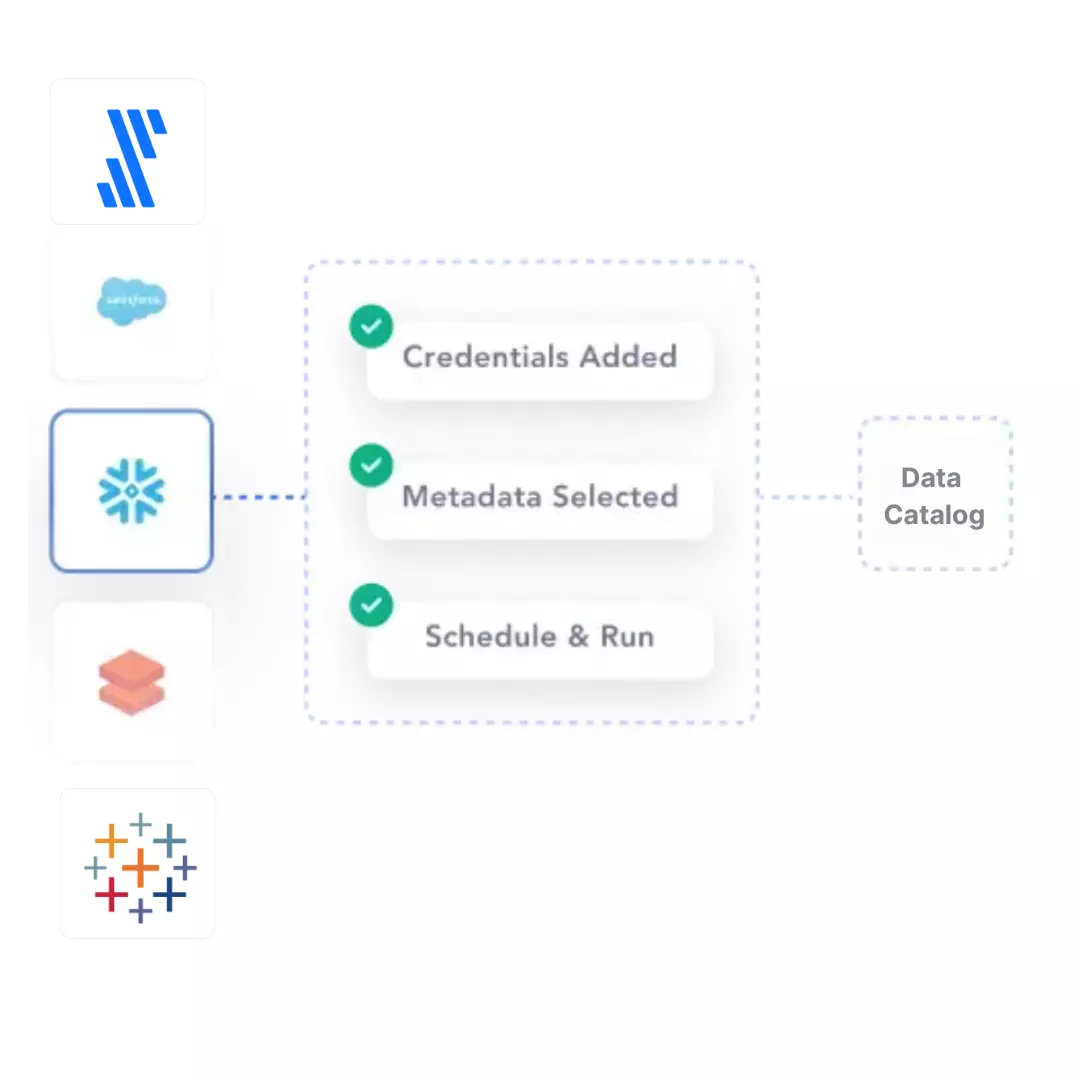

An example of a native data catalog connector - Image by Atlan.

How do data catalog connectors work?

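Each connector authenticates to a source, crawls it for metadata, and loads that metadata into the catalog, typically on a recurring schedule so the catalog stays current. In doing so, data catalog connectors help you: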

- Integrate the entire data stack — data sources, query engines, orchestration tools, BI platforms, collaboration tools, and more

- Extract all kinds of metadata — technical, operational, governance, collaboration, quality, and usage — for better context and documentation of data assets

- Ensure that metadata extraction is standardized and automated to reduce inconsistencies and improve data quality

- Build a central repository — a single source of truth — for all metadata across your data ecosystem

- Find and access the data you need more efficiently, simplifying data search and discovery

- Embed collaboration in your daily workflows through the data catalog

- Track data lineage, building trust in your data by showing where it comes from, how it has been transformed, where it is stored, and how it is used
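Lineage is the least self-explanatory item on that list, so here is a small sketch of the idea. Once connectors have recorded which assets each asset is built from, tracing where a dashboard's data comes from becomes a graph traversal. The asset names and the `UPSTREAM` mapping are hypothetical; in practice a catalog assembles this graph from crawled metadata such as transformation code and query history.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage graph: each asset maps to the assets it is built from.
UPSTREAM: Dict[str, List[str]] = {
    "looker.revenue_dashboard": ["redshift.analytics.fct_revenue"],
    "redshift.analytics.fct_revenue": [
        "redshift.staging.stg_orders",
        "redshift.staging.stg_payments",
    ],
    "redshift.staging.stg_orders": ["postgres.app.orders"],
    "redshift.staging.stg_payments": ["postgres.app.payments"],
}


def trace_upstream(asset: str, upstream: Dict[str, List[str]]) -> List[str]:
    """Return every asset that `asset` depends on, nearest first (breadth-first)."""
    seen: Set[str] = set()
    ordered: List[str] = []
    queue = deque(upstream.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in seen:  # a shared source is reported only once
            continue
        seen.add(current)
        ordered.append(current)
        queue.extend(upstream.get(current, []))
    return ordered


if __name__ == "__main__":
    for source in trace_upstream("looker.revenue_dashboard", UPSTREAM):
        print(source)
```

Reversing the edges gives the opposite question, which downstream dashboards break if a source table changes, using the same traversal.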

Data catalog connectors: A key consideration in the evaluation process when choosing a data catalog tool

The importance of native connectivity in data catalogs

A core tenet for evaluating data catalogs is the depth and breadth of connector support.

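Integrating data tools can involve either native connectors or custom-code connections. Because no catalog ships native connectors for every tool you might use, the catalog should be open by default, exposing APIs for custom integrations, rather than a closed, black-box platform.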

So, one of the core capabilities that you should look for in a data catalog is an open architecture that supports either native or API-driven integration with the tools in your data stack.

Evaluating data catalogs for their connector support

If you’re looking for a data catalog that fits seamlessly into your data stack, here are some evaluation criteria to consider:

- Compatibility: Check that the data catalog works well with the various data sources and tools you use

- Ease of setup: Check that setup and configuration are straightforward, ideally through automated, native, or API-powered connectors

- Efficiency: Look for a data catalog that allows for quick and efficient metadata extraction

- Data format support: Confirm that the connectors can extract metadata from all the data types and formats you need

How to set up data catalog connectors in Atlan

Atlan offers connector support in two ways:

- Out-of-the-box connectors

- API-powered connectivity

Let’s explore this further.

1. Out-of-the-box connectors

You can natively connect data sources and tools in just three steps, complete with activity logs and automated Slack alerts for easy monitoring.

The setup is a no-code, DIY process, and here’s how that works:

Step 1: Authenticate your connection

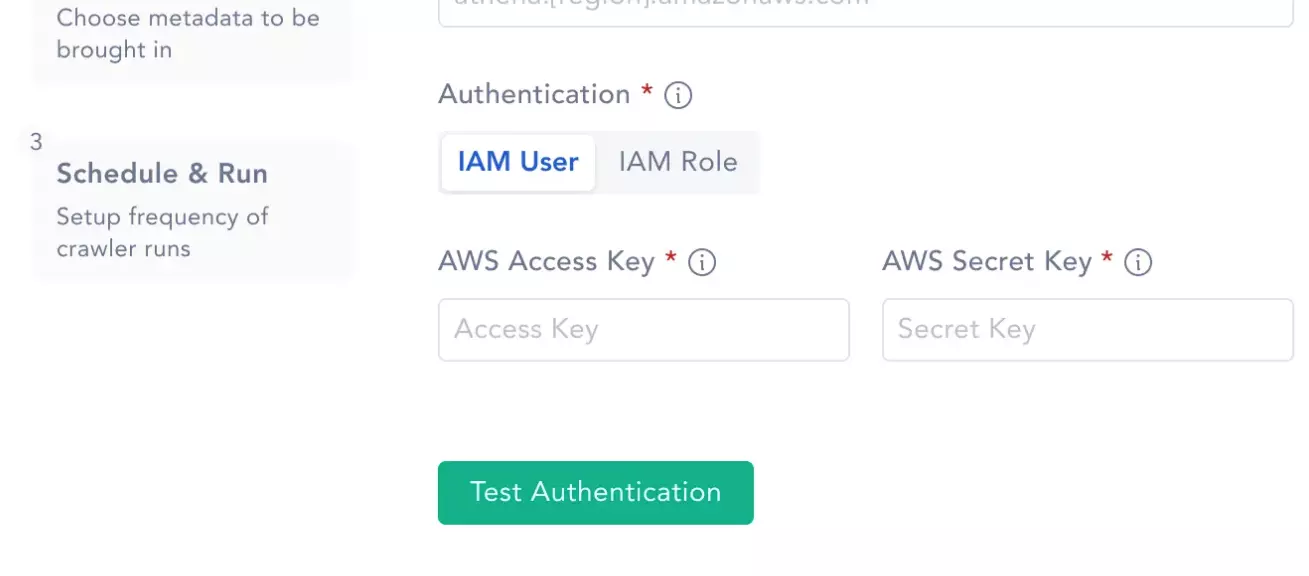

Authenticate your connection - Image by Atlan.

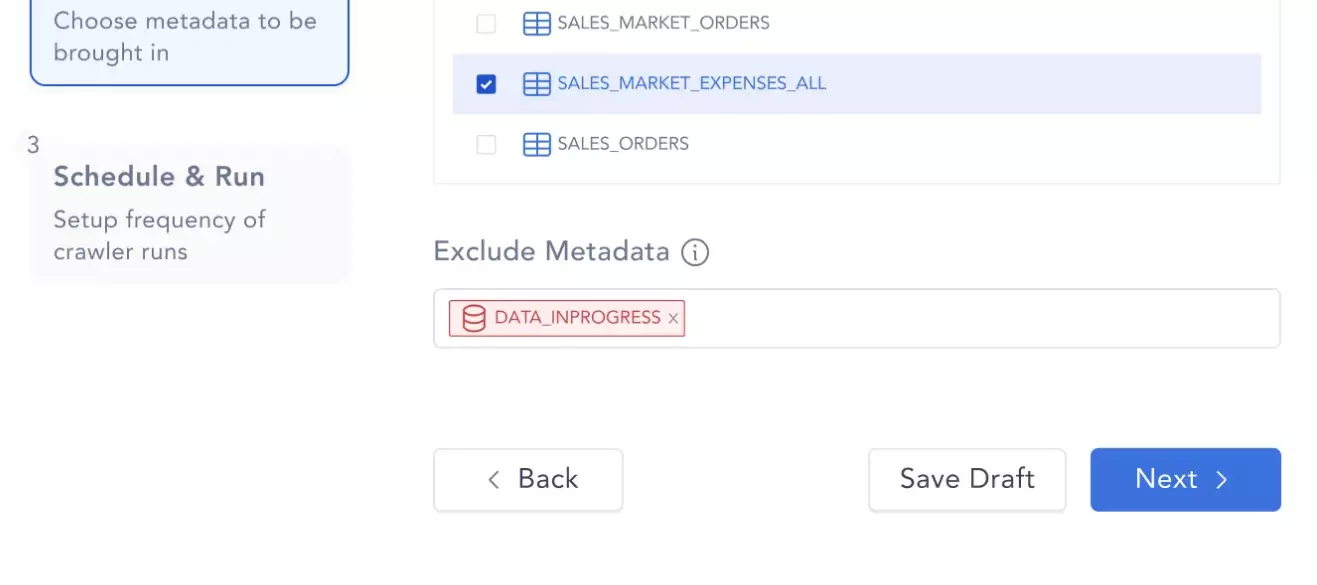

Step 2: Choose the metadata to be crawled at a database, schema, or table level

Permalink to “Step 2: Choose the metadata to be crawled at a database, schema, or table level”

Choose the metadata to be crawled - Image by Atlan.
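Atlan handles this selection in its UI. As a rough illustration of what selecting at the database, schema, or table level means, here is a sketch that filters hypothetical `database.schema.table` names with glob-style include and exclude patterns. The `select_assets` helper and the pattern syntax are assumptions, not Atlan's configuration format.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def select_assets(
    qualified_names: Iterable[str],
    include: Iterable[str],
    exclude: Iterable[str] = (),
) -> List[str]:
    """Keep names that match at least one include pattern and no exclude pattern.

    Names are assumed to look like "database.schema.table": a pattern such as
    "sales.*" selects a whole database, "sales.public.*" a schema, and
    "sales.public.orders" a single table.
    """
    include = list(include)  # materialize so patterns can be reused per name
    exclude = list(exclude)
    return [
        name
        for name in qualified_names
        if any(fnmatchcase(name, pattern) for pattern in include)
        and not any(fnmatchcase(name, pattern) for pattern in exclude)
    ]


if __name__ == "__main__":
    names = [
        "sales.public.orders",
        "sales.public.customers",
        "sales.staging.tmp_orders",
        "hr.public.employees",
    ]
    # Whole "sales" database, minus its staging schema.
    print(select_assets(names, include=["sales.*"], exclude=["sales.staging.*"]))
    # ['sales.public.orders', 'sales.public.customers']
```

Letting exclusions win over inclusions keeps the rule easy to reason about: include broadly, then carve out scratch or staging areas.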

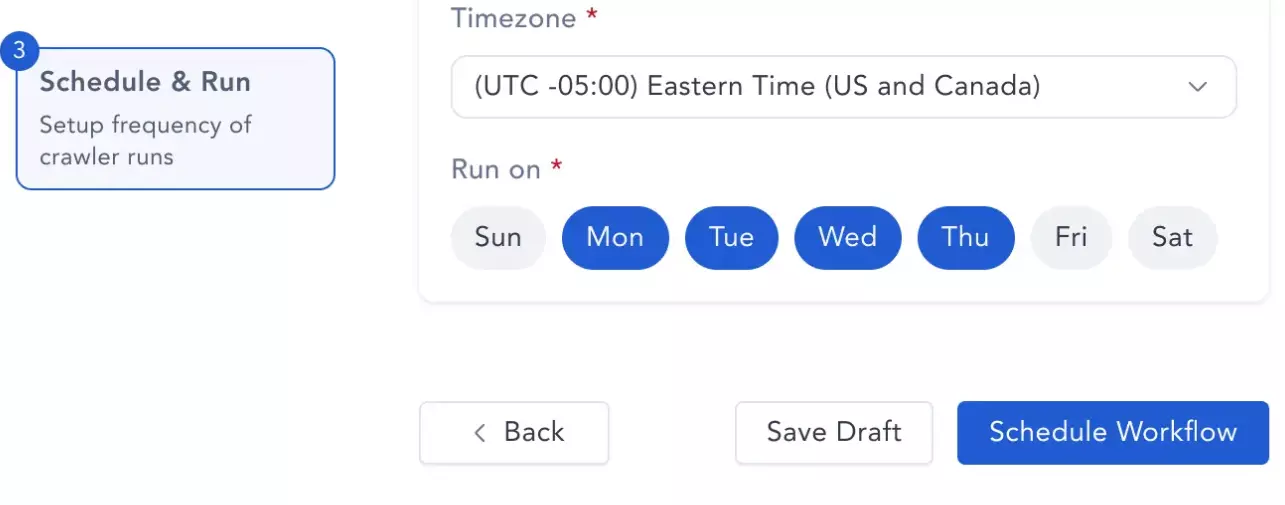

Step 3: Schedule metadata crawling to run on a daily, weekly, or monthly basis

Permalink to “Step 3: Schedule metadata crawling to run on a daily, weekly, or monthly basis”

Schedule metadata crawling to run - Image by Atlan.
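Atlan runs the schedule for you. To show why a monthly schedule needs slightly more care than fixed intervals, here is a sketch that computes when the next crawl is due. The `next_crawl` function and its frequency strings are hypothetical.

```python
import calendar
from datetime import datetime, timedelta


def next_crawl(last_run: datetime, frequency: str) -> datetime:
    """Return when the next crawl is due for an hourly/daily/weekly/monthly schedule."""
    fixed_steps = {
        "hourly": timedelta(hours=1),
        "daily": timedelta(days=1),
        "weekly": timedelta(weeks=1),
    }
    if frequency in fixed_steps:
        return last_run + fixed_steps[frequency]
    if frequency == "monthly":
        # Months differ in length, so step the calendar month and clamp the day
        # (e.g. Jan 31 -> Feb 28 or 29) instead of adding a fixed timedelta.
        year = last_run.year + last_run.month // 12
        month = last_run.month % 12 + 1
        day = min(last_run.day, calendar.monthrange(year, month)[1])
        return last_run.replace(year=year, month=month, day=day)
    raise ValueError(f"unsupported frequency: {frequency!r}")


if __name__ == "__main__":
    print(next_crawl(datetime(2024, 1, 31, 2, 0), "monthly"))
```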

2. API-powered connectivity

Atlan’s open API architecture lets you connect any data source or tool in your stack, including those without a native connector, by configuring APIs and building on Atlan’s open-by-default design.

As a result, you can find and consolidate metadata across your entire data stack. With that metadata in one place, Atlan automatically builds end-to-end, column-level lineage, gives you complete visibility, and helps you discover assets across your data estate.
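For a source without a native connector, the API route usually means extracting metadata yourself and pushing it to the catalog over HTTP. The sketch below only builds such a request with Python's standard library. The endpoint path, payload fields, and bearer-token header are assumptions for illustration, not Atlan's documented API; Atlan's API documentation defines the real calls.

```python
import json
import urllib.request
from typing import Dict, List


def build_asset_upsert_request(
    base_url: str, api_token: str, assets: List[Dict[str, str]]
) -> urllib.request.Request:
    """Build (but do not send) a POST request that registers assets with a catalog.

    The endpoint path and payload shape are hypothetical placeholders.
    """
    payload = {"assets": assets}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/example/assets",  # hypothetical path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",  # assumed token-based auth
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_asset_upsert_request(
        "https://your-tenant.example.com",  # hypothetical tenant URL
        "YOUR_API_TOKEN",
        [{"type": "table", "qualified_name": "custom_source/db/schema/orders", "name": "orders"}],
    )
    print(request.get_method(), request.full_url)
    # Sending it would be: urllib.request.urlopen(request)
```

Separating "build the request" from "send it" keeps the payload easy to inspect and test before anything reaches the catalog.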

While Atlan connects with several data sources, tools, and applications, let’s look at one setup in action — AWS Glue.

AWS Glue connector: How Atlan crawls data assets

Before crawling AWS Glue, set up the AWS Glue access permissions. After that, you should:

- Select AWS Glue as your data source: Choose New > New Workflow > Glue Assets > Setup Workflow.

- Authenticate the connection: Start by selecting the Region of your Glue deployment. After that:

  - Choose the authentication type (IAM User or IAM Role).

  - Click Test Authentication to confirm connectivity to AWS Glue.

  Once the authentication test is successful, click Next.

- Configure the connection: Provide a Connection Name and click Next.

- Set up the crawler: Select the assets you wish to include or exclude.

- Run the crawler: You can click Run to crawl once. Alternatively, you can schedule the crawler to run hourly, daily, weekly, or monthly and click the Schedule & Run button.
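If you want to preview what a Glue crawl will pick up, or sanity-check your permissions before running it, you can enumerate the same Glue Data Catalog databases and tables yourself. This is a sketch of that, not Atlan's crawler. It assumes a boto3 Glue client for the Region you selected and an IAM identity allowed to call `glue:GetDatabases` and `glue:GetTables`; check Atlan's setup guide for the exact permission set its crawler expects. The function name `list_glue_tables` and its record layout are hypothetical.

```python
from typing import Any, Dict, Iterable, List, Optional


def list_glue_tables(
    glue_client: Any, include_databases: Optional[Iterable[str]] = None
) -> List[Dict[str, Any]]:
    """List tables in the Glue Data Catalog as flat metadata records.

    `glue_client` is expected to behave like boto3.client("glue"); its
    get_databases and get_tables paginators handle NextToken paging.
    """
    wanted = set(include_databases) if include_databases is not None else None
    records: List[Dict[str, Any]] = []

    for db_page in glue_client.get_paginator("get_databases").paginate():
        for database in db_page["DatabaseList"]:
            db_name = database["Name"]
            if wanted is not None and db_name not in wanted:
                continue  # rough analogue of the include/exclude step
            table_pages = glue_client.get_paginator("get_tables").paginate(
                DatabaseName=db_name
            )
            for table_page in table_pages:
                for table in table_page["TableList"]:
                    # Column definitions live under StorageDescriptor, if present.
                    columns = table.get("StorageDescriptor", {}).get("Columns", [])
                    records.append(
                        {
                            "database": db_name,
                            "table": table["Name"],
                            "columns": [(c["Name"], c.get("Type", "")) for c in columns],
                        }
                    )
    return records
```

To run it against AWS, pass a real client, for example `list_glue_tables(boto3.client("glue", region_name="<your-region>"))`, with credentials for the IAM user or role you plan to use. Using the paginators rather than single `get_tables` calls avoids silently missing tables when a database holds more than one page of results.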

Wrapping up

Data catalog connectors provide a standardized interface to access, crawl, and document metadata. They play a vital role in integrating the entire data stack and building a central repository for all metadata.

In this article, we looked at the various types of data catalog connectors. We also outlined key evaluation criteria for choosing a data catalog with strong connector support. Lastly, we used Atlan as an example to show how easily you can establish native connectivity between a data catalog and your tech stack.

Interested in knowing how to find and implement such a catalog and drive business value? Take Atlan for a spin. Atlan is a third-generation modern data catalog built on the framework of embedded collaboration that is key in today’s modern workplace, borrowing principles from GitHub, Figma, Slack, Notion, Superhuman, and other modern tools that are commonplace today.

Data catalog connectors: Related reads

- What Is a Data Catalog? & Do You Need One?

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- 8 Ways AI-Powered Data Catalogs Save Time Spent on Documentation, Tagging, Querying & More

- 15 Essential Data Catalog Features to Look For in 2023

- What is Active Metadata? — Definition, Characteristics, Example & Use Cases

- Data catalog benefits: 5 key reasons why you need one

- Open Source Data Catalog Software: 5 Popular Tools to Consider in 2023

- Data Catalog Platform: The Key To Future-Proofing Your Data Stack

- Top Data Catalog Use Cases Intrinsic to Data-Led Enterprises

- Business Data Catalog: Users, Differentiating Features, Evolution & More
