Data Quality Management: Dimensions, Impact & Best Practices

What are the 9 dimensions of data quality?

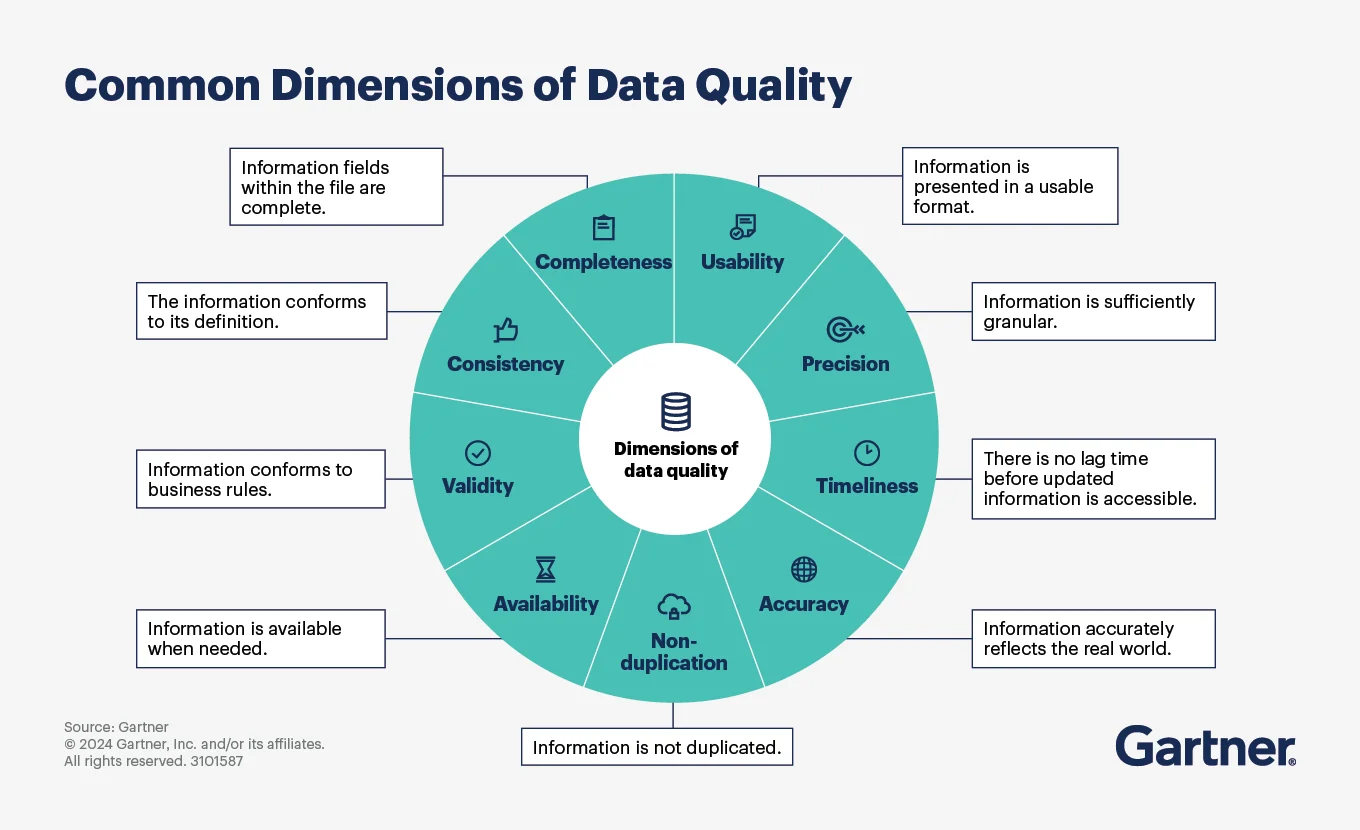

Data quality dimensions provide the framework for evaluating whether information meets business requirements. These standardized criteria help organizations assess trustworthiness and usability across different data assets.

Gartner identifies the following as the nine most common data quality dimensions for enterprises.

The most common dimensions of data quality, according to Gartner. Source: Gartner

1. Accuracy

Accuracy measures how closely data represents real-world entities and events. Inaccurate customer addresses prevent successful deliveries. Wrong pricing data leads to revenue leakage.

2. Accessibility

Accessibility measures how easily data can be discovered, retrieved, and integrated into workflows. Data that exists but is hard to find or access cannot deliver value.

3. Completeness

Completeness evaluates whether all required fields contain values. Missing phone numbers block customer outreach. Incomplete product specifications frustrate buyers. Teams track null percentages and field coverage to identify gaps that impact business processes.

4. Consistency

Consistency ensures data matches across systems and follows standard formats. Customer names appearing differently in CRM versus billing systems create confusion. Date formats varying between databases break integrations. Standardized definitions prevent these misalignments.
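A cross-system consistency check can be sketched in a few lines of Python. The record layout (a customer ID mapped to a name), the sample data, and the `normalize_name` helper are assumptions made for illustration, not features of any particular CRM or billing product.

```python
def normalize_name(name: str) -> str:
    """Lowercase and collapse whitespace so cosmetic differences are ignored."""
    return " ".join(name.lower().split())


def find_name_mismatches(crm: dict[str, str], billing: dict[str, str]) -> list[str]:
    """Return customer IDs present in both systems whose names still differ
    after normalization."""
    shared_ids = crm.keys() & billing.keys()
    return sorted(
        cid for cid in shared_ids
        if normalize_name(crm[cid]) != normalize_name(billing[cid])
    )


# Hypothetical sample data: customer_id -> name as stored in each system.
crm_names = {"c1": "Ada Lovelace", "c2": "Grace  Hopper", "c3": "Alan Turing"}
billing_names = {"c1": "ada lovelace", "c2": "Grace Hopper", "c3": "A. Turing"}

mismatches = find_name_mismatches(crm_names, billing_names)
```

Normalizing before comparing separates formatting noise (case, spacing) from genuine disagreements, so only the latter reach a reviewer.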

5. Precision

Precision reflects whether data is captured at the level of detail required by the business. Overly rounded values, coarse timestamps, or vague classifications limit analytical and operational usefulness.

6. Relevance

Relevance evaluates whether data is applicable to specific business decisions or processes. Collecting data without a clear use case increases cost and complexity without improving outcomes.

7. Timeliness

Timeliness confirms data arrives when needed for decisions. Stale inventory counts cause stockouts. Delayed financial reports miss board meetings. Real-time requirements vary by use case, from millisecond trading systems to monthly analytics refreshes.
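Because freshness requirements differ by use case, a timeliness check is usually a comparison between a dataset's last refresh and its own allowed age. The sketch below assumes each dataset exposes a last-updated timestamp; the SLA values and the `is_fresh` name are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """True when the dataset was refreshed within its allowed age (the SLA)."""
    return now - last_updated <= max_age


# Hypothetical SLAs: inventory under 15 minutes old, monthly reports under 31 days.
now = datetime(2026, 1, 10, 12, 0, tzinfo=timezone.utc)
inventory_ok = is_fresh(
    datetime(2026, 1, 10, 11, 50, tzinfo=timezone.utc), timedelta(minutes=15), now
)
report_ok = is_fresh(
    datetime(2025, 12, 1, tzinfo=timezone.utc), timedelta(days=31), now
)
```

Passing `now` explicitly keeps the check deterministic, which makes it easy to test and to replay against historical runs.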

8. Uniqueness

Uniqueness checks that each entity is recorded only once, because duplicate records inflate counts and skew analysis. Multiple customer profiles with slightly different information confuse support teams. Duplicate transactions distort financial reporting. Deduplication processes match and merge redundant entries.
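The match-and-merge idea can be illustrated with a minimal sketch. It assumes a normalized email address is a reliable match key, which is a simplification; production deduplication often relies on fuzzy matching across several fields. The field names and sample profiles are hypothetical.

```python
def dedupe_customers(records: list[dict]) -> list[dict]:
    """Group records by normalized email and merge each group into one record,
    keeping the first non-empty value seen for every field."""
    merged: dict[str, dict] = {}
    for record in records:
        key = record["email"].strip().lower()  # assumed match key
        target = merged.setdefault(key, {})
        for field, value in record.items():
            if value and not target.get(field):
                target[field] = value
    return list(merged.values())


profiles = [
    {"email": "Ada@Example.com", "name": "Ada Lovelace", "phone": ""},
    {"email": "ada@example.com ", "name": "", "phone": "555-0100"},
    {"email": "grace@example.com", "name": "Grace Hopper", "phone": ""},
]
unique_profiles = dedupe_customers(profiles)
```

Here the two Ada records collapse into one profile that carries both the name from the first record and the phone number from the second.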

9. Validity

Validity verifies data conforms to business rules and acceptable ranges. Invalid zip codes fail address verification. Out-of-range temperatures indicate sensor errors. Data quality testing enforces these constraints automatically.
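As a rough illustration of automated validity testing, the sketch below applies two rules to a record. Both rules are assumptions chosen for the example: a US-style ZIP format and a plausible sensor range in degrees Celsius. Real rules would come from the business.

```python
import re

# Assumed rules for illustration: 5-digit or ZIP+4 codes, and a plausible
# sensor range in degrees Celsius.
ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")
TEMP_RANGE_C = (-50.0, 60.0)


def validate_reading(reading: dict) -> list[str]:
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = []
    if not ZIP_PATTERN.fullmatch(reading.get("zip") or ""):
        errors.append("invalid zip")
    temp = reading.get("temp_c")
    if temp is None or not (TEMP_RANGE_C[0] <= temp <= TEMP_RANGE_C[1]):
        errors.append("temperature out of range")
    return errors
```

Returning every violation, rather than stopping at the first, gives whoever fixes the record the full picture in one pass.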

Why does data quality matter in analytics and AI?

Data quality is a prerequisite for reliable analytics, compliant operations, and effective AI. Gartner research indicates poor data quality undermines decision-making, regulatory compliance, and operational efficiency.

Better decisions start with trustworthy data.

Executives rely on analytics to guide strategy. Flawed or inconsistent data leads to misinformed decisions, while analysts lose time validating numbers instead of delivering insights. Studies show that nearly 30% of generative AI projects fail in part due to poor data quality.

Analytics and AI depend on strong foundations.

Dashboards built on unreliable data erode stakeholder confidence. Inconsistent data warehouse outputs force manual reconciliation. Machine learning models trained on low-quality data produce unstable predictions and unreliable automation.

Regulatory compliance mandates provable quality.

Regulatory compliance requires demonstrable quality controls. Industries like finance and healthcare must demonstrate accuracy, completeness, and traceability to auditors. Regulations such as GDPR explicitly require data accuracy, making quality controls a compliance necessity.

Operational efficiency declines with poor data.

Duplicate records slow customer support, inaccurate inventory disrupts supply chains, and outdated data undermines marketing performance.

Customer experience is directly affected.

Data quality issues, such as incorrect addresses, broken personalization, and inconsistent interactions, reduce satisfaction and retention.

What are the most common data quality challenges?

As data ecosystems scale, quality issues emerge from a mix of technical complexity, fragmented ownership, and operational gaps. These challenges tend to reinforce one another.

Duplicates distort reality.

Multiple records for the same customer, product, or transaction inflate counts, skew metrics, and confuse downstream teams. Deduplication becomes harder as data sources multiply.

Inconsistencies erode trust.

Conflicting values across systems, such as different addresses or revenue figures for the same entity, undermine confidence in analytics and force manual reconciliation.

Incompleteness blocks decisions.

Missing critical fields make data unusable for reporting, automation, and AI. Incomplete records disrupt customer outreach, compliance, and forecasting.

Manual processes introduce errors and don’t scale.

Manual data entry, ad hoc fixes, and copy-paste workflows create inaccuracies that propagate quickly across pipelines, even in highly automated environments.

High data volume and velocity also make human checks impractical. When platforms process billions of records daily, even small error rates can affect millions of rows, amplifying downstream risk. For example, a 0.1% error rate across one billion records is one million bad rows.

Integration increases failure points.

Data from acquisitions, legacy systems, and cloud migrations often arrives with conflicting formats, definitions, and standards. Each integration point becomes a potential source of quality drift.

Unclear ownership weakens accountability.

When data responsibility is spread across teams, quality issues persist without accountability. Customer, product, and financial data are often owned by different teams across systems.

Without clear ownership and shared standards, no one is responsible for end-to-end quality.

System failures corrupt data.

Pipeline breaks, schema changes, and software updates can invalidate data without obvious signals. Without monitoring and contracts, these issues spread before teams can react.
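One way to make silent schema changes visible is to compare incoming rows against an expected schema. The following is a minimal sketch; the `EXPECTED_SCHEMA` contract, its column names, and the `schema_drift` function are hypothetical and stand in for whatever contract format a team actually uses.

```python
# Hypothetical contract: column name -> expected Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def schema_drift(row: dict, expected: dict[str, type]) -> dict[str, list[str]]:
    """Compare one incoming row against the expected schema."""
    return {
        "missing": sorted(expected.keys() - row.keys()),
        "unexpected": sorted(row.keys() - expected.keys()),
        "wrong_type": sorted(
            col for col in expected.keys() & row.keys()
            if not isinstance(row[col], expected[col])
        ),
    }


# An upstream change renamed `amount` and started sending order_id as text.
drift = schema_drift(
    {"order_id": "1001", "amount_usd": 25.0, "currency": "USD"}, EXPECTED_SCHEMA
)
```

Running a check like this at the start of a pipeline turns a quiet upstream change into an explicit, reviewable signal.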

How to measure data quality: 3 essential indicators to track

Measuring data quality transforms abstract concepts into actionable metrics. Organizations track specific indicators tied to business outcomes rather than generic scores.

1. Profiling establishes baselines

Data profiling analyzes existing datasets to understand current state. Column-level statistics reveal null percentages, value distributions, and pattern violations. Initial profiling identifies where to focus improvement efforts based on business impact.
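To make column-level profiling concrete, here is a small sketch that computes the statistics mentioned above for a single column. It treats `None` and empty strings as nulls, which is an assumption; the `profile_column` name and the phone-number pattern are illustrative only.

```python
import re
from collections import Counter
from typing import Optional


def profile_column(values: list, pattern: Optional[str] = None) -> dict:
    """Compute simple column statistics: null percentage, distinct count,
    most common values, and (optionally) how many values break a regex."""
    non_null = [v for v in values if v is not None and v != ""]
    null_count = len(values) - len(non_null)
    stats = {
        "null_pct": round(100 * null_count / len(values), 1) if values else 0.0,
        "distinct": len(set(non_null)),
        "top_values": Counter(non_null).most_common(3),
    }
    if pattern is not None:
        stats["pattern_violations"] = sum(
            1 for v in non_null if not re.fullmatch(pattern, str(v))
        )
    return stats


phones = ["555-0100", None, "555-0101", "5550102", "", "555-0100"]
phone_profile = profile_column(phones, pattern=r"\d{3}-\d{4}")
```

In this sample, a third of the values are null and one populated value breaks the expected format, which is the kind of baseline that tells a team where to look first.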

2. Metrics quantify performance

Data quality metrics convert observations into trackable numbers. Completeness rates show the percentage of populated fields. Accuracy scores compare data against validated sources. Uniqueness metrics count duplicate records.

Key performance indicators include:

- Completeness rate: Percentage of required fields populated

- Accuracy percentage: Records matching validated sources

- Duplicate ratio: Redundant records per unique entity

- Timeliness score: Data freshness against SLA requirements

- Validity rate: Records conforming to business rules

- Data availability rate: Successful access attempts vs. total

- Precision level compliance: Values captured at required granularity

- Staleness rate: Assets unused beyond a defined period

Organizations prioritize metrics aligned with business goals. E-commerce teams focus on product data completeness affecting conversion. Financial services emphasize transaction accuracy for regulatory reporting.
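Three of the indicators listed above can be computed with very little code. The sketch below is one possible formulation, not a standard definition: the function names, the product records, and the `validated_prices` reference source are all hypothetical.

```python
def completeness_rate(records: list[dict], required: list[str]) -> float:
    """Share of required fields that are populated, across all records."""
    total = len(records) * len(required)
    filled = sum(1 for r in records for f in required if r.get(f) not in (None, ""))
    return filled / total if total else 1.0


def accuracy_pct(records: list[dict], reference: dict, key: str, field: str) -> float:
    """Share of records whose field matches a validated reference source."""
    checked = [r for r in records if r[key] in reference]
    matches = sum(1 for r in checked if r[field] == reference[r[key]])
    return matches / len(checked) if checked else 1.0


def duplicate_ratio(records: list[dict], key: str) -> float:
    """Redundant records per unique entity (0.0 means no duplicates)."""
    unique = len({r[key] for r in records})
    return (len(records) - unique) / unique if unique else 0.0


products = [
    {"sku": "A1", "price": 10.0, "description": "Mug"},
    {"sku": "A2", "price": 12.5, "description": ""},
    {"sku": "A2", "price": 12.5, "description": ""},
    {"sku": "A3", "price": 99.0, "description": "Lamp"},
]
validated_prices = {"A1": 10.0, "A2": 12.5, "A3": 89.0}  # hypothetical trusted source

completeness = completeness_rate(products, ["sku", "price", "description"])
accuracy = accuracy_pct(products, validated_prices, key="sku", field="price")
duplicates = duplicate_ratio(products, key="sku")
```

For this sample, 10 of 12 required fields are populated, 3 of 4 prices match the reference, and there is one redundant record across three unique SKUs.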

3. Monitoring detects issues

Data quality dashboards provide visibility across domains. Continuous monitoring catches quality degradation before business impact. Automated checks run after each data refresh. Data quality alerts notify teams when metrics breach thresholds.
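Threshold-based alerting reduces to comparing the latest metric values with agreed minimums after each refresh. This sketch assumes metrics arrive as a name-to-value mapping; the `THRESHOLDS` values and the `check_thresholds` function are illustrative, and delivery of the alerts (email, chat, paging) is left out.

```python
# Hypothetical thresholds: minimum acceptable value for each metric.
THRESHOLDS = {"completeness_rate": 0.95, "validity_rate": 0.99}


def check_thresholds(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return one alert message per metric that is missing or below its threshold."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no value reported")
        elif value < minimum:
            alerts.append(f"{name}: {value:.2%} is below threshold {minimum:.2%}")
    return alerts


# Run after each refresh; in practice the alerts would go to a notification channel.
alerts = check_thresholds({"completeness_rate": 0.91, "validity_rate": 0.995}, THRESHOLDS)
```

Treating a missing metric as an alert is a deliberate choice here: a check that silently stopped reporting is itself a quality problem.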

How to improve data quality: 5 best practices to follow in 2026

Improving data quality requires a systematic approach embedded into day-to-day data operations. Moving beyond reactive fixes, organizations are now focusing on proactive quality engineering to ensure data is accurate, reliable, and ready for analytics and AI/ML initiatives.

1. Establish governance foundations

A strong data governance framework sets the foundation for quality. Define clear standards and metrics for accuracy, completeness, and format. Assign ownership across teams and document procedures for issue resolution. Executive sponsorship ensures quality remains a priority, while governance policies integrate control mechanisms essential for AI/ML initiatives.

2. Implement validation at source

Prevent quality issues before they propagate. Input forms, APIs, and data contracts enforce format and structural requirements at the point of creation. Validation rules check for accuracy, completeness, and compliance with standards, reducing downstream errors and duplicates.
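Validation at the point of creation means a malformed record is rejected before it is ever stored. A minimal sketch of that idea uses a dataclass that refuses to be constructed with bad values. The `CustomerSignup` type and its two rules are hypothetical and far simpler than a real contract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerSignup:
    """Hypothetical contract for a record created by a signup form or API."""
    email: str
    country_code: str

    def __post_init__(self) -> None:
        # Reject the record at creation time instead of cleaning it up later.
        if "@" not in self.email:
            raise ValueError(f"email must contain '@': {self.email!r}")
        if len(self.country_code) != 2 or not self.country_code.isalpha():
            raise ValueError(f"country_code must be two letters: {self.country_code!r}")
```

With this in place, `CustomerSignup(email="ada@example.com", country_code="GB")` succeeds, while a value such as `country_code="GBR"` raises `ValueError` at the source rather than surfacing later in a report.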

3. Automate quality checks

Automated testing frameworks replace manual validation, ensuring continuous and consistent monitoring. Business rules engines enforce logic, machine learning detects anomalies, and deployment pipelines include quality gates. Continuous monitoring of key metrics such as accuracy, completeness, and timeliness keeps data reliable over time.

4. Enable self-service quality

Empower domain teams to take ownership of data quality. Business users can define rules in natural language templates, while SQL-savvy analysts write custom validations. Centralized platforms democratize quality management across roles, making it easier for teams across the organization to monitor, correct, and improve their own data.

5. Remediate issues systematically

Address data problems through structured workflows. Route issues to the appropriate owners, track resolutions to avoid duplication, and perform root cause analysis to fix underlying system issues. Maintain knowledge bases to capture patterns and recurring solutions. Regular cleansing to remove errors, inaccuracies, and duplicates ensures data remains accurate and trustworthy.
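Routing and duplicate tracking can be sketched as a small function. This assumes each issue carries a dataset name and a check name, and that ownership is a simple dataset-to-team map; the `OWNERS` map, the team names, and the `route_issues` function are hypothetical.

```python
from collections import defaultdict

# Hypothetical ownership map: dataset -> responsible team.
OWNERS = {"orders": "sales-data", "customers": "crm-stewards"}


def route_issues(
    issues: list[dict], owners: dict[str, str], default: str = "data-platform"
) -> dict[str, list[dict]]:
    """Assign each distinct issue to its dataset owner, skipping repeats of the
    same (dataset, check) pair so one problem is tracked once."""
    seen = set()
    queues = defaultdict(list)
    for issue in issues:
        fingerprint = (issue["dataset"], issue["check"])
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        queues[owners.get(issue["dataset"], default)].append(issue)
    return dict(queues)


open_issues = [
    {"dataset": "orders", "check": "null_amount"},
    {"dataset": "orders", "check": "null_amount"},  # repeat from a later run
    {"dataset": "customers", "check": "duplicate_email"},
    {"dataset": "payments", "check": "freshness"},  # no registered owner
]
queues = route_issues(open_issues, OWNERS)
```

Falling back to a default queue means issues on datasets without a registered owner are still seen by someone.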

How do modern platforms automate data quality?

Modern data platforms shift quality management from reactive fixes to proactive automation. Automation embeds quality directly into how data is produced, transformed, and consumed. In practice, automation is the result of active metadata, cloud-native execution, and embedded collaboration.

Automation starts with metadata as the control layer. Active metadata captures data lineage to show where data originates, how it transforms, and what depends on it. Business glossaries align teams on shared definitions, while usage analytics highlight which datasets are most critical, deserving stricter quality controls.

Execution happens natively within cloud data platforms. Quality checks run where the data lives, for example as Snowflake Data Metric Functions or inside Databricks, eliminating data movement and infrastructure overhead. Results are stored in warehouses, making them immediately queryable, while elastic compute scales automatically to support large batch validations or frequent checks.

Automation becomes effective only when quality signals are visible where teams already work. Modern platforms surface trust scores and quality badges directly in BI dashboards, data catalogs, and notebooks. Alerts flow through collaboration tools like Slack, ensuring issues are seen and acted on quickly, not buried in monitoring logs.

Leading platforms for 2026 combine these foundations into a unified experience:

- Native execution: Quality checks run in Snowflake or Databricks without data movement.

- No-code rules: Business users create validations through templates.

- Ecosystem integration: Connect with data quality tools like Monte Carlo, Soda, Great Expectations, and Anomalo.

- Real-time trust signals: Trust badges are embedded across analytics and governance surfaces.

- Collaborative workflows: Route issues to stewards with full context on lineage and ownership.

Automation also extends to rule creation and evolution. AI-assisted profiling suggests validations based on observed data patterns. Historical trends establish normal ranges for anomaly detection. Business logic extracted from code becomes reusable quality rules.
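The idea of deriving a normal range from historical trends can be illustrated with a simple z-score test. This is a basic statistical stand-in chosen for the sketch, not a description of how any particular platform detects anomalies; the `is_anomalous` function, the threshold of 3, and the row counts are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag the latest value when it falls more than z_threshold standard
    deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate a normal range
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily row counts for a table.
daily_rows = [10_120, 9_980, 10_050, 10_210, 9_940, 10_075, 10_130]
sudden_drop = is_anomalous(daily_rows, 4_300)
normal_day = is_anomalous(daily_rows, 10_100)
```

A sudden drop to 4,300 rows is flagged, while a count of 10,100 falls inside the range the history suggests. Seasonal data would need a more careful baseline than a plain mean.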

Finally, modern platforms close the loop with collaborative remediation. Quality workflows route issues to data stewards or engineers. Comments, escalation paths, and shared dashboards ensure problems are resolved systematically, not repeatedly rediscovered.

How does metadata-driven quality address governance at scale?

As data estates grow, quality breaks down across teams and tools. Metadata provides the shared context that connects quality rules, governance standards, and ownership.

Traditional quality tools create separate layers of definitions disconnected from business context. Data definitions in quality rules don’t match glossary terms. Validation logic is duplicated across systems. Metadata-driven approaches unify these fragments and apply data quality rules and standards consistently across pipelines and data products.

Automation reduces manual effort through classification, pattern detection, and rule suggestions, allowing teams to focus on exceptions. Trust signals surface quality at discovery time, showing scores, trends, and certification status before data is used.

Modern platforms deliver this through a unified control plane. A solution like Atlan’s Data Quality Studio runs automated quality checks natively and integrates the results with lineage and catalogs. It monitors data quality metrics and configures real-time alerts, helping you spot issues early, track trends over time, and maintain compliance with your data governance policies.

Real stories from real customers: How teams improve data quality with unified platforms

General Motors: Data Quality as a System of Trust

“By treating every dataset like an agreement between producers and consumers, GM is embedding trust and accountability into the fabric of its operations. Engineering and governance teams now work side by side to ensure meaning, quality, and lineage travel with every dataset — from the factory floor to the AI models shaping the future of mobility.” - Sherri Adame, Enterprise Data Governance Leader, General Motors

Workday: Data Quality for AI-Readiness

“Our beautiful governed data, while great for humans, isn’t particularly digestible for an AI. In the future, our job will not just be to govern data. It will be to teach AI how to interact with it.” - Joe DosSantos, VP of Enterprise Data and Analytics, Workday

Ready to move forward with automated data quality at scale?

Data quality ensures data is accurate, complete, and reliable for analytics, AI, and decision-making. Achieving it at scale requires metadata-driven automation connecting quality checks to business context, governance policies, and operational workflows.

The path forward needs clear quality dimensions, measurable metrics, and continuous monitoring built into data operations. Platforms like Atlan’s Data Quality Studio operationalize best practices by running checks natively, surfacing trust signals, and embedding quality into everyday workflows.

When quality is part of how data is built and used, teams move faster, trust their data, and reduce risk.

Atlan automates data quality at scale while connecting it to governance and discovery.

FAQs about data quality

1. What is the formal definition of data quality?

Data quality is the degree to which a dataset meets established criteria such as accuracy, completeness, consistency, validity, timeliness, and uniqueness. These dimensions determine whether data is fit for its intended purpose in operations, analytics, or decision-making.

2. What’s the difference between data quality and data integrity?

Data quality evaluates fitness for purpose across dimensions such as accuracy, completeness, and timeliness. Data integrity focuses specifically on accuracy and preventing unauthorized changes throughout the data lifecycle. Both work together to ensure trustworthy information.

3. What are examples of poor data quality in business?

Common examples include duplicate customer records causing billing errors, missing product information reducing e-commerce conversion, outdated contact details preventing marketing outreach, inconsistent financial data delaying reports, and inaccurate inventory counts causing stockouts.

4. What data quality metrics should organizations track?

Key metrics include completeness rate (percentage of required fields populated), accuracy percentage (records matching validated sources), duplicate ratio, timeliness score against SLAs, and validity rate. Organizations select metrics based on business priorities.

5. What are data quality management best practices?

Best practices include establishing clear governance with assigned ownership, implementing validation at data source, automating quality checks through rules engines, enabling self-service quality tools for domain teams, and creating systematic workflows for issue remediation and root cause analysis.

6. How do you build a data quality framework?

Build a framework by defining quality dimensions relevant to your use case, establishing measurable metrics and thresholds, implementing profiling and monitoring tools, assigning clear ownership and accountability, and documenting processes for issue detection and resolution. Framework components include governance, technology, and continuous improvement.

7. What data quality assessment techniques are most effective?

Effective techniques include data profiling to establish baselines, automated rule-based validation for business logic, anomaly detection using statistical methods, cross-system consistency checks, and sampling-based manual audits for critical datasets. Assessment combines automated and human review.

8. Why is data quality critical for AI and analytics?

AI models require high-quality training data to produce reliable outputs. Poor quality causes model drift, biased predictions, and operational failures. Analytics dashboards displaying incorrect metrics erode stakeholder trust and lead to flawed business decisions. Studies show that nearly 30% of generative AI projects fail in part due to poor data quality.

