Open Data Discovery: An Overview of Features, Architecture, and Resources


What is Open Data Discovery? #

Open Data Discovery is a data cataloging and discovery tool that Provectus, a California-based AI consulting firm, open-sourced in August 2021. The firm works on a wide range of problems, including intelligent document scanning, demand forecasting, and worker safety.


Because the firm has extensive experience with AI and ML systems, it put extra focus on covering the ML ecosystem in its discovery and observability solution.

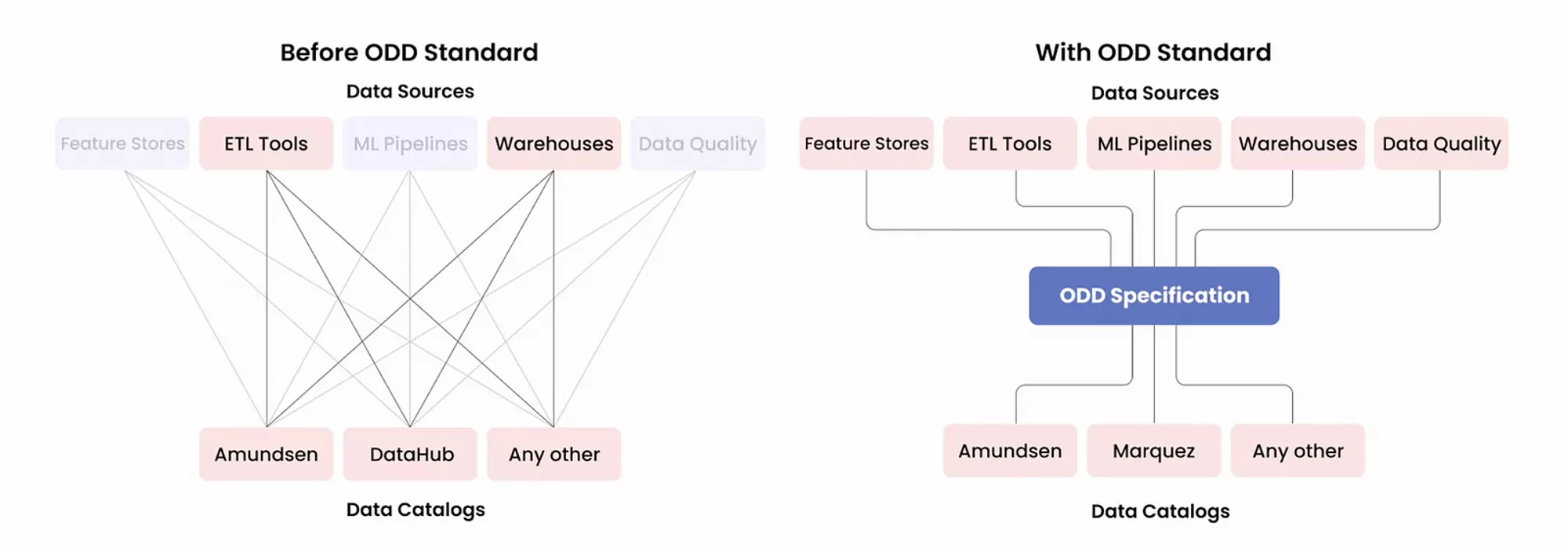

They have also designed Open Data Discovery to use open protocols to let you create a federated catalog of catalogs. Their open specification that sits between metadata sources and other data catalogs explicitly solves this problem, as shown in the image below:

OpenDataDiscovery Standard - Image from the official documentation

This article will take you through the prominent features and provide an overview of the technical architecture of Open Data Discovery.

Table of contents #

- What is Open Data Discovery?

- Open Data Discovery: Features

- Open Data Discovery: Architecture overview

- Conclusion

- OpenDataDiscovery: Resources

- Related Reads on More Open Source Tools

Open Data Discovery: Features #

Although it is still very early in its development, OpenDataDiscovery already provides the base features expected of a data cataloging and discovery engine. In this section, we’ll look at Open Data Discovery’s four main offerings: data discovery, lineage, governance, and quality. As of the v0.11.1 release, some of these features are more developed than others, and we’ll point out where. Let’s dive in.

Data discovery #

The Open Data Discovery platform takes a different approach from most data catalogs: instead of relying on a dedicated graph database such as Neo4j and a full-text search engine such as Elasticsearch, it makes full use of PostgreSQL.

OpenDataDiscovery stores the metadata in PostgreSQL and uses PostgreSQL for both graph-style and full-text search, which removes components and complexity from the architecture. Some other companies have taken a similar route with PostgreSQL.

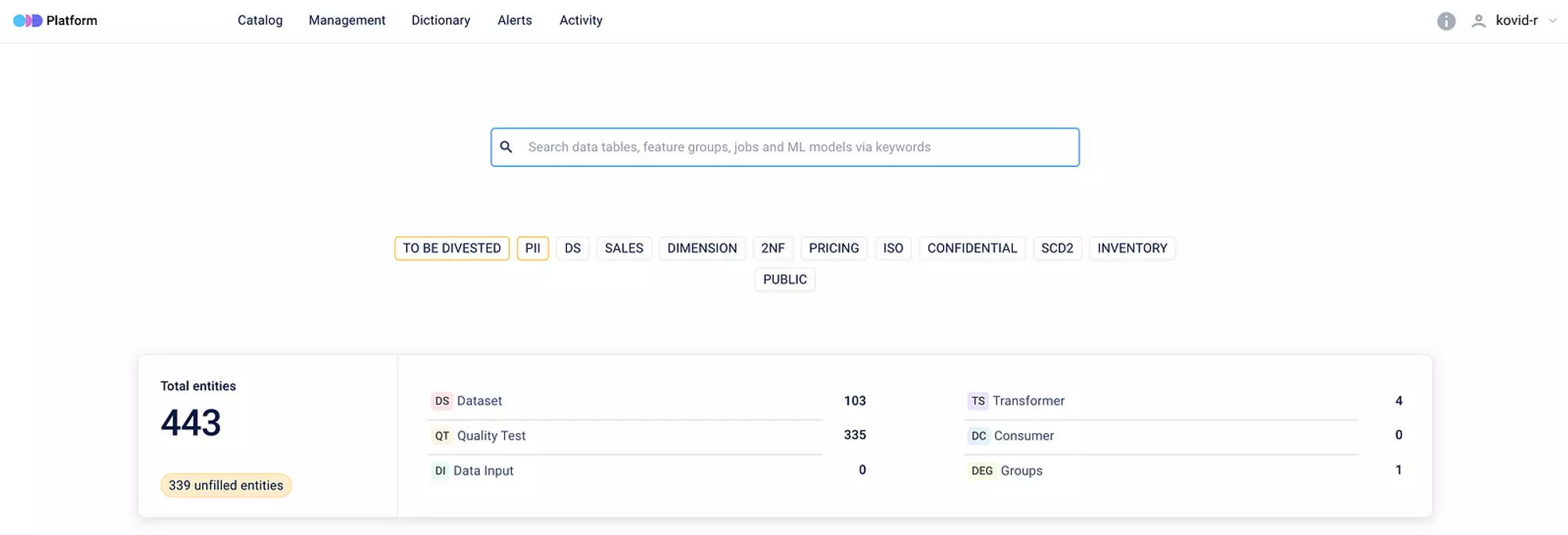

The home page of the discovery engine gives you a simple full-text search interface, an option to search data resources based on tags, and a summary of all the entities in your catalog, also called the Data Entity Report, as shown in the image below:

The OpenDataDiscovery Platform - Image from the OpenDataDiscovery demo application

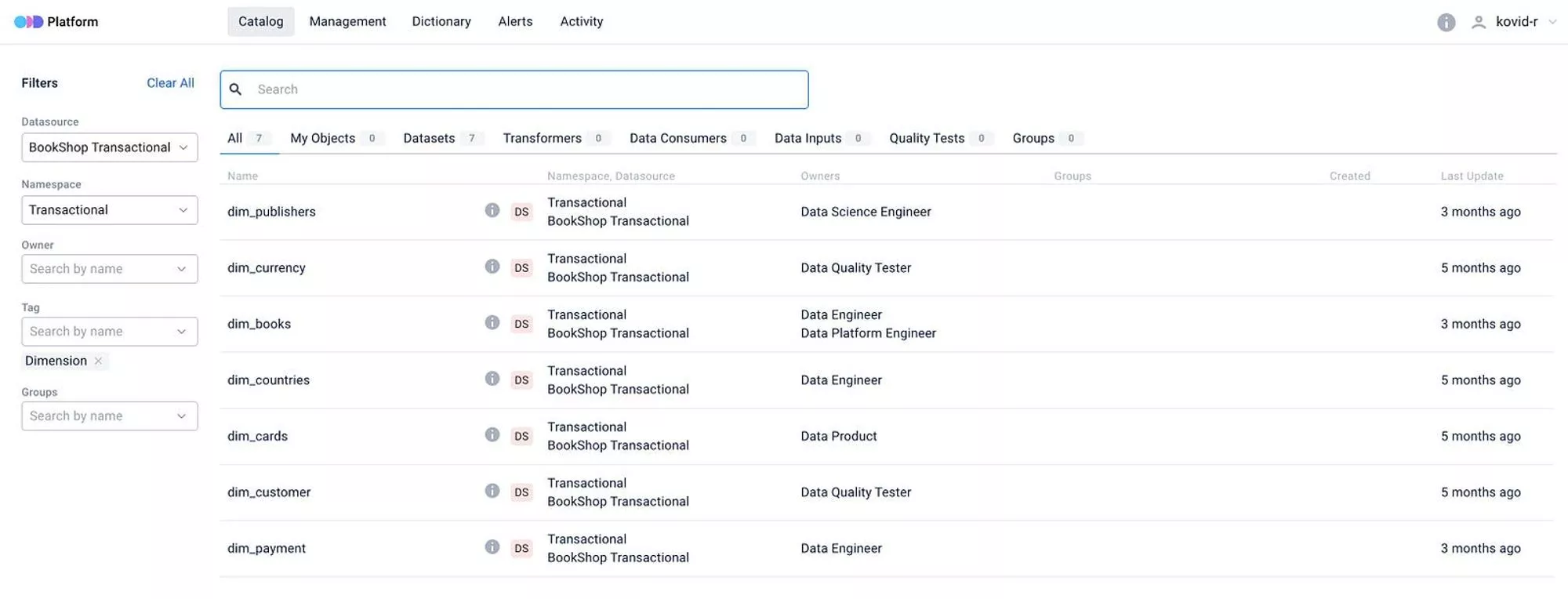

The other way to search and discover data assets is to go to the Catalog tab, which gives you a more granular search interface. Here you can limit your search area based on data sources, namespaces, owners, tags, and groups, as shown in the image below:

Home page of the discovery engine - Image from the OpenDataDiscovery demo application
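The Catalog tab’s facets behave like a filter over entity attributes. The following Python sketch illustrates that idea under stated assumptions: the `CatalogEntry` record and the `filter_catalog` helper are hypothetical, and we assume that selected facets are combined with AND. It is not how OpenDataDiscovery implements search, which runs on PostgreSQL.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class CatalogEntry:
    # Hypothetical record; these field names are illustrative, not ODD's schema.
    name: str
    data_source: str
    namespace: str
    owner: str
    tags: FrozenSet[str] = field(default_factory=frozenset)


def filter_catalog(
    entries: Iterable[CatalogEntry],
    data_source: Optional[str] = None,
    namespace: Optional[str] = None,
    owner: Optional[str] = None,
    tags: Iterable[str] = (),
) -> List[CatalogEntry]:
    """Keep entries that match every facet supplied (facets are ANDed together)."""
    required_tags = set(tags)
    matches = []
    for entry in entries:
        if data_source is not None and entry.data_source != data_source:
            continue
        if namespace is not None and entry.namespace != namespace:
            continue
        if owner is not None and entry.owner != owner:
            continue
        # Every requested tag must be present on the entry.
        if not required_tags.issubset(entry.tags):
            continue
        matches.append(entry)
    return matches


# Hypothetical catalog contents, used only for illustration.
catalog = [
    CatalogEntry("orders", "postgres-prod", "sales", "alice", frozenset({"pii", "gold"})),
    CatalogEntry("clicks", "kafka-events", "web", "bob", frozenset({"raw"})),
    CatalogEntry("refunds", "postgres-prod", "sales", "bob", frozenset({"gold"})),
]

print([e.name for e in filter_catalog(catalog, namespace="sales", tags=["gold"])])
```

Leaving a facet unset (`None` or an empty tag list) simply skips that check, which mirrors how an unselected filter in a search UI usually behaves.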

You can’t fully understand data without also looking at the relationships between the entities that store it. Data lineage helps with that. Let’s take a look at how data lineage works in Open Data Discovery.

Data lineage #

There are two ways in which Open Data Discovery works with lineage metadata. The first is the most common one: tracing lineage from one data object to another. Currently, Open Data Discovery supports only table-level lineage.

The second is modeled specifically for tracing lineage metadata across a microservices-based application, where the data from each microservice is treated as a separate data object.

Like all metadata, data lineage is stored in the PostgreSQL backend. The creators of Open Data Discovery evaluated other open-source lineage solutions but couldn’t find a satisfactory one based on open standards. They looked at Marquez’s OpenLineage but soon found it limited in supporting external data sources such as BI dashboards and ML models.

Open Data Discovery therefore went with its own data lineage solution based on the Open Data Discovery specification. The specification defines entity types such as DataInput, DataConsumer, DataQualityTest, and DataTransformer, all of which can contribute to the lineage graph.
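To make the idea concrete, here is a minimal Python sketch of how transformer-style entities can yield a lineage graph. It assumes a simplified `DataTransformer` with `inputs` and `outputs` lists of resource names, modeled loosely on the specification’s entity of the same name; the identifiers and both helper functions are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class DataTransformer:
    # Simplified stand-in for the specification's DataTransformer entity:
    # a job that reads the entities in `inputs` and writes those in `outputs`.
    oddrn: str
    inputs: List[str]
    outputs: List[str]


Edge = Tuple[str, str]


def lineage_edges(transformers: List[DataTransformer]) -> Set[Edge]:
    """Turn each transformer into input -> job and job -> output edges."""
    edges: Set[Edge] = set()
    for job in transformers:
        for source in job.inputs:
            edges.add((source, job.oddrn))
        for target in job.outputs:
            edges.add((job.oddrn, target))
    return edges


def direct_edges(transformers: List[DataTransformer]) -> Set[Edge]:
    """Collapse the job node away, leaving direct entity-to-entity edges."""
    return {
        (source, target)
        for job in transformers
        for source in job.inputs
        for target in job.outputs
    }


# Hypothetical identifiers, used only for illustration.
jobs = [
    DataTransformer("job/build_revenue", ["db/orders", "db/refunds"], ["db/revenue"]),
    DataTransformer("job/publish_kpis", ["db/revenue"], ["dashboard/kpis"]),
]

print(sorted(direct_edges(jobs)))
```

Keeping the job node (as `lineage_edges` does) preserves which transformation produced each dataset, while `direct_edges` gives the plain table-to-table view.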

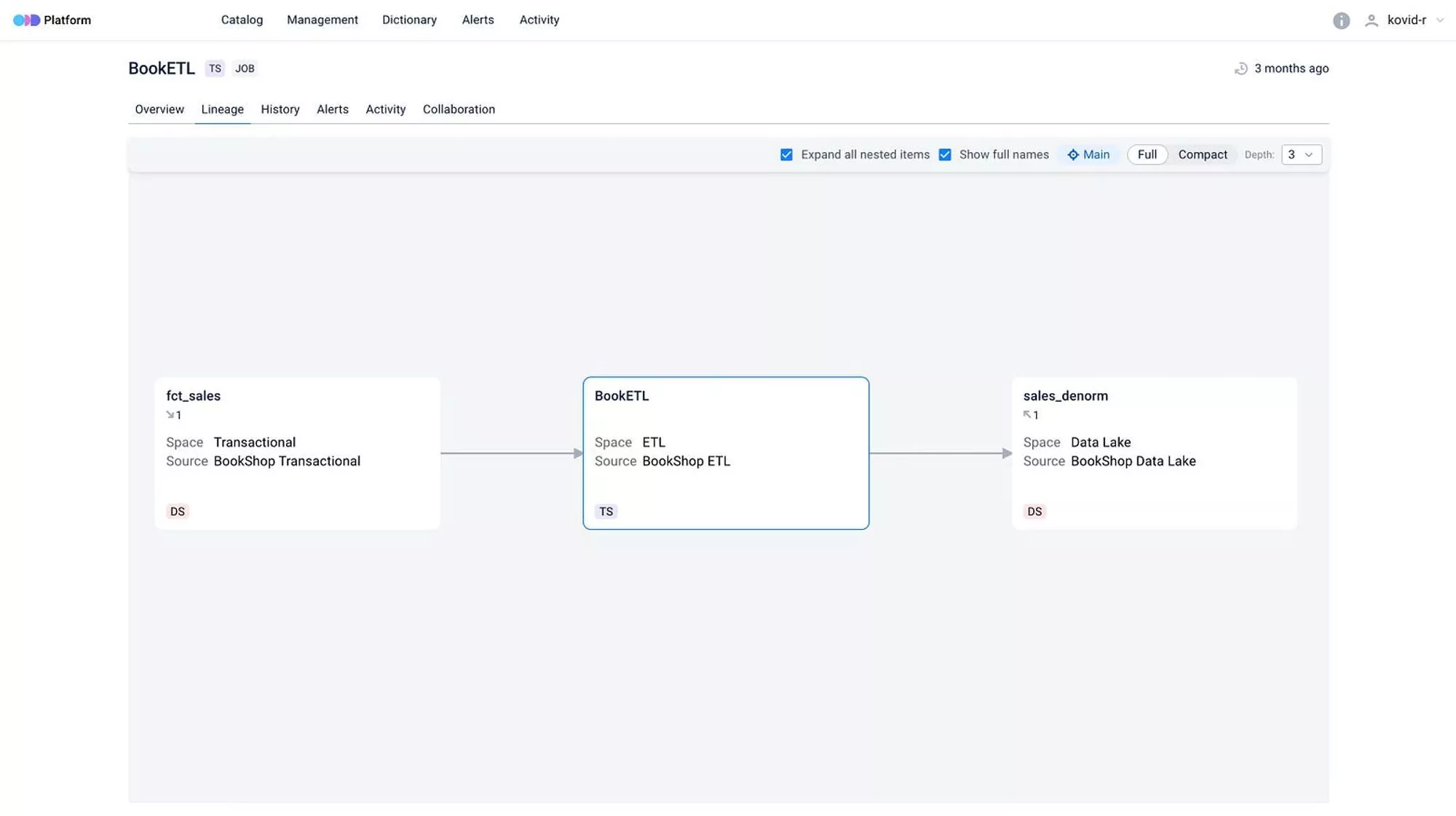

Working with data lineage is a visual process. Open Data Discovery allows you to interact with any of your entities and explore the flow of data using a lineage graph, as shown in the image below:

Data lineage example - Image from the demo application on the OpenDataDiscovery website

In addition to object names, the lineage graph shows tags, upstream/downstream indicators, namespaces, and data sources.
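Because lineage lives in a relational database, walking upstream or downstream becomes a recursive query. The sketch below uses Python’s built-in `sqlite3` as a stand-in for PostgreSQL, since both support `WITH RECURSIVE`; the single `lineage_edge` table and the sample entities are a hypothetical schema, not Open Data Discovery’s actual one.

```python
import sqlite3
from typing import List, Tuple

# SQLite stands in for PostgreSQL so the sketch runs anywhere; both support
# WITH RECURSIVE. The edge table below is a hypothetical schema.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE lineage_edge (
        source TEXT NOT NULL,  -- upstream entity
        target TEXT NOT NULL   -- downstream entity
    );
    INSERT INTO lineage_edge (source, target) VALUES
        ('raw.orders', 'staging.orders'),
        ('raw.customers', 'staging.customers'),
        ('staging.orders', 'mart.revenue'),
        ('staging.customers', 'mart.revenue'),
        ('mart.revenue', 'dashboard.finance');
    """
)

MAX_DEPTH = 20  # guards against runaway recursion if the graph has a cycle


def _walk(start: str, forward: bool) -> List[Tuple[str, int]]:
    # Column names come from this fixed pair, never from user input.
    near, far = ("source", "target") if forward else ("target", "source")
    sql = f"""
    WITH RECURSIVE walk(entity, depth) AS (
        SELECT {far}, 1 FROM lineage_edge WHERE {near} = :start
        UNION
        SELECT e.{far}, w.depth + 1
        FROM lineage_edge AS e
        JOIN walk AS w ON e.{near} = w.entity
        WHERE w.depth < :max_depth
    )
    SELECT entity, MIN(depth) AS hops FROM walk
    GROUP BY entity
    ORDER BY hops, entity
    """
    return conn.execute(sql, {"start": start, "max_depth": MAX_DEPTH}).fetchall()


def downstream_of(entity: str) -> List[Tuple[str, int]]:
    """Entities fed by `entity`, with the minimum number of hops to reach them."""
    return _walk(entity, forward=True)


def upstream_of(entity: str) -> List[Tuple[str, int]]:
    """Entities that feed `entity`, with the minimum number of hops."""
    return _walk(entity, forward=False)


print(downstream_of("raw.orders"))
print(upstream_of("mart.revenue"))
```

The same edge table answers both directions; only the join column flips, which is the kind of graph question a relational store can handle without a separate graph database.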

Data governance #

As of the v0.11.1 release, Open Data Discovery’s data governance capabilities are limited to tagging and classification. OpenDataDiscovery doesn’t provide a way to authorize and authenticate access to the underlying data. However, it can integrate with OIDC providers like GitHub, Okta, AWS Cognito, and Keycloak to sign users in to the platform. You can also write your own OIDC provider or back authentication with your LDAP server.

Although you cannot control access to data sources from Open Data Discovery, you can describe data object ownership, roles, policies, and permissions in JSON documents that follow a JSON Schema. Here’s a policy example from Open Data Discovery’s official documentation. It allows a user to update a data entity’s business name, description, and custom metadata if the user is the entity’s owner and the entity is in the TestNamespace namespace:

```json
{
  "statements": [
    {
      "resource": {
        "type": "DATA_ENTITY",
        "conditions": {
          "all": [
            { "is": "dataEntity:owner" },
            { "eq": { "dataEntity:namespace:name": "TestNamespace" } }
          ]
        }
      },
      "permissions": [
        "DATA_ENTITY_INTERNAL_NAME_UPDATE",
        "DATA_ENTITY_CUSTOM_METADATA_CREATE",
        "DATA_ENTITY_CUSTOM_METADATA_UPDATE",
        "DATA_ENTITY_CUSTOM_METADATA_DELETE",
        "DATA_ENTITY_DESCRIPTION_UPDATE"
      ]
    }
  ]
}
```
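To show how such a policy could be read, here is a small Python evaluator for just the condition forms that appear in the example (`all`, `is`, and `eq`). It is a hypothetical sketch, not Open Data Discovery’s policy engine: the policy is repeated as a Python dict so the snippet runs on its own, the entity representation (an `owners` set plus attributes keyed by the names the policy uses) is an assumption, and any unrecognized condition is denied.

```python
from typing import Any, Dict, Set

# The policy from the example, expressed as a Python dict.
POLICY: Dict[str, Any] = {
    "statements": [
        {
            "resource": {
                "type": "DATA_ENTITY",
                "conditions": {
                    "all": [
                        {"is": "dataEntity:owner"},
                        {"eq": {"dataEntity:namespace:name": "TestNamespace"}},
                    ]
                },
            },
            "permissions": [
                "DATA_ENTITY_INTERNAL_NAME_UPDATE",
                "DATA_ENTITY_CUSTOM_METADATA_CREATE",
                "DATA_ENTITY_CUSTOM_METADATA_UPDATE",
                "DATA_ENTITY_CUSTOM_METADATA_DELETE",
                "DATA_ENTITY_DESCRIPTION_UPDATE",
            ],
        }
    ]
}


def condition_holds(cond: Dict[str, Any], user: str, entity: Dict[str, Any]) -> bool:
    """Evaluate only the condition forms used in the example: all, is, eq."""
    if "all" in cond:
        return all(condition_holds(c, user, entity) for c in cond["all"])
    if "is" in cond:
        # The only 'is' check modeled here is ownership.
        return cond["is"] == "dataEntity:owner" and user in entity["owners"]
    if "eq" in cond:
        return all(entity.get(key) == value for key, value in cond["eq"].items())
    return False  # anything unrecognized is denied


def granted_permissions(policy: Dict[str, Any], user: str, entity: Dict[str, Any]) -> Set[str]:
    """Union the permissions of every statement whose conditions hold."""
    granted: Set[str] = set()
    for statement in policy["statements"]:
        resource = statement["resource"]
        if resource["type"] != "DATA_ENTITY":
            continue
        if condition_holds(resource.get("conditions", {"all": []}), user, entity):
            granted.update(statement["permissions"])
    return granted


# Hypothetical entity: attributes flattened under the keys the policy refers to.
entity = {"owners": {"alice"}, "dataEntity:namespace:name": "TestNamespace"}

print(sorted(granted_permissions(POLICY, "alice", entity)))
print(granted_permissions(POLICY, "bob", entity))
```

Denying unknown conditions by default keeps a partial evaluator like this on the safe side: a rule it cannot understand never grants anything.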

As you might have gathered from the policy example, Open Data Discovery allows you to add custom metadata to your data objects. The custom metadata can be a flag signifying whether the data object contains PII. It can also include custom tags that describe the type of table; for example, if you’re using a Data Vault schema, whether it is a PIT table, a bridge table, a link table, and so on.

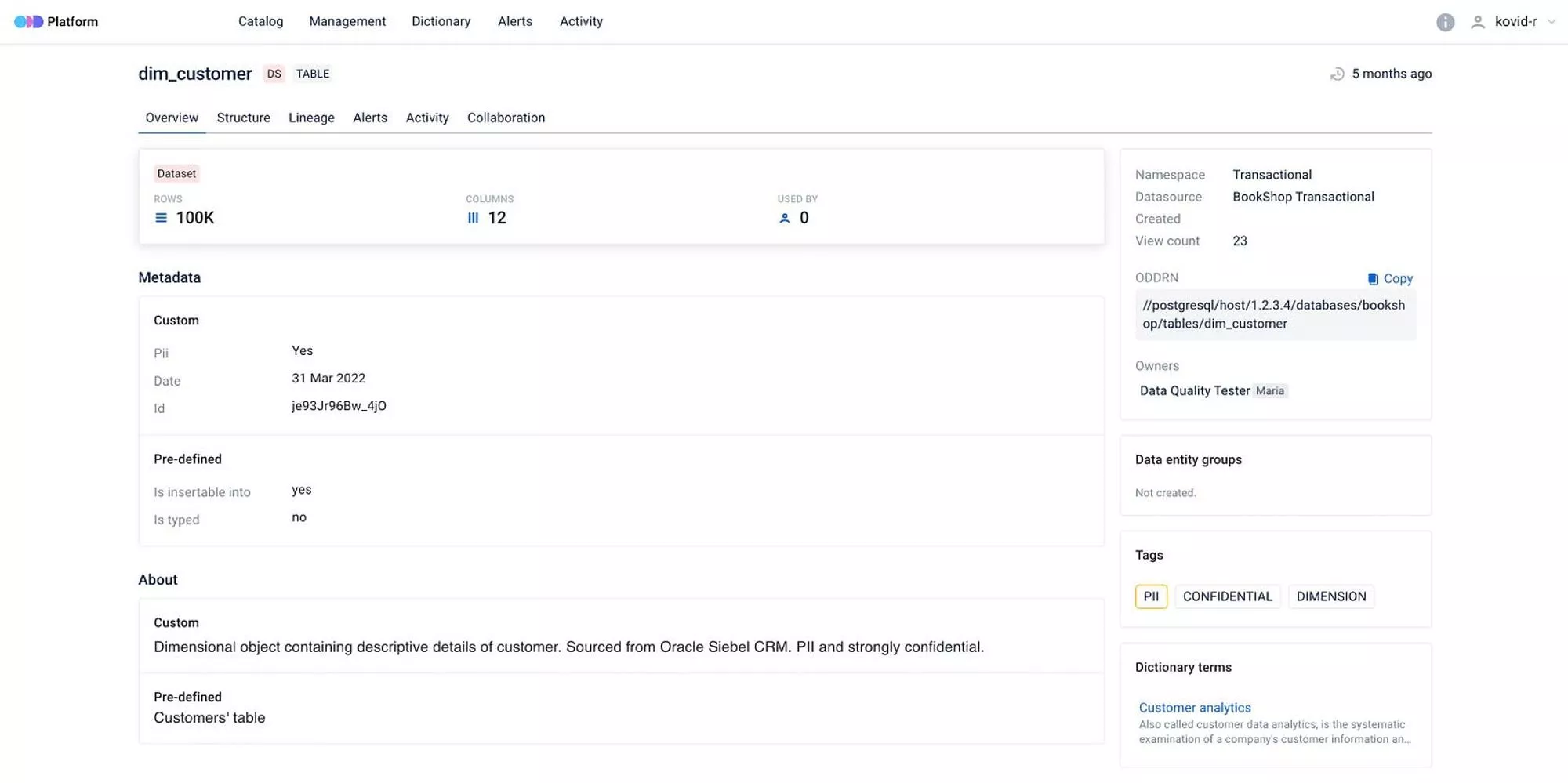

With custom tags and metadata, you’re free to use them how you like. The following image shows how tags, entity groups, owners, and custom metadata are specified on the Overview page for a data object:

How tags, entity groups, owners, and custom metadata are specified. Image from the demo application on the OpenDataDiscovery website

Let’s look at the fourth main feature on offer from OpenDataDiscovery — data quality.

Data quality #

OpenDataDiscovery integrates with popular data quality and profiling tools, such as Pandas Profiling and Great Expectations. If these tools don’t support the tests you are looking for, you can create your own SQL-based tests.
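A common convention for custom SQL checks is to write a query that selects the rows violating a rule, so an empty result means the test passes. The Python sketch below applies that convention with `sqlite3`; the convention, the `run_sql_test` helper, and the sample `orders` table are assumptions for illustration, not Open Data Discovery’s test format.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class SqlTestResult:
    name: str
    passed: bool
    failing_rows: int


def run_sql_test(conn: sqlite3.Connection, name: str, query: str) -> SqlTestResult:
    """Run a test query that selects offending rows; zero rows means it passes.

    `query` is trusted, author-written test SQL, so it is embedded directly.
    """
    (count,) = conn.execute(f"SELECT COUNT(*) FROM ({query}) AS t").fetchone()
    return SqlTestResult(name=name, passed=count == 0, failing_rows=count)


# A tiny in-memory table to check (SQLite stands in for a real warehouse).
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO orders VALUES (1, 10, 25.0), (2, NULL, 12.5), (3, 12, -4.0);
    """
)

not_null = run_sql_test(conn, "customer_id is not null",
                        "SELECT id FROM orders WHERE customer_id IS NULL")
non_negative = run_sql_test(conn, "amount is non-negative",
                            "SELECT id FROM orders WHERE amount < 0")
unique_ids = run_sql_test(conn, "id is unique",
                          "SELECT id FROM orders GROUP BY id HAVING COUNT(*) > 1")

for result in (not_null, non_negative, unique_ids):
    print(result)
```

Reporting the number of failing rows, not just pass/fail, gives a catalog something more useful to display next to the data entity.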

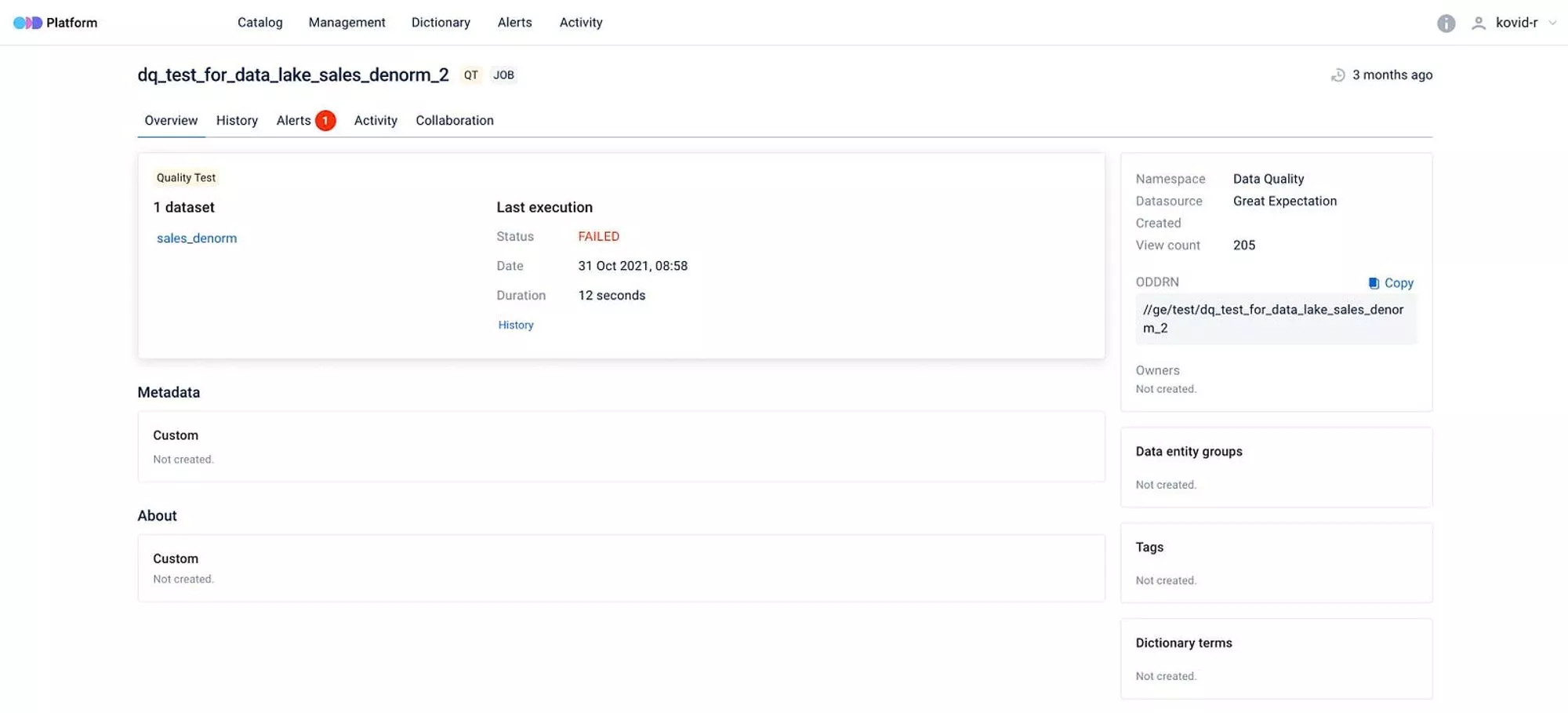

To give you a holistic view of the data quality, OpenDataDiscovery supports data quality and testing results imported from official integrations like Great Expectations and any custom test suites and frameworks you want to use. Here’s a sample test imported from Great Expectations:

Overview of a test imported from Great Expectations - Image from the OpenDataDiscovery demo page

Open Data Discovery also lets you manage data quality and profiling-related alerts from the data entity itself. Now that we’ve gone through the primary features of Open Data Discovery, let’s look at the technical architecture.

Open Data Discovery: Architecture overview #

Open Data Discovery’s architecture is built around the PostgreSQL database.

Both the component that pushes metadata from your data pipelines and sources and the component that ingests it into the database rely on PostgreSQL’s ability to handle several types of query workloads, underlying storage structures, and changing requirements.

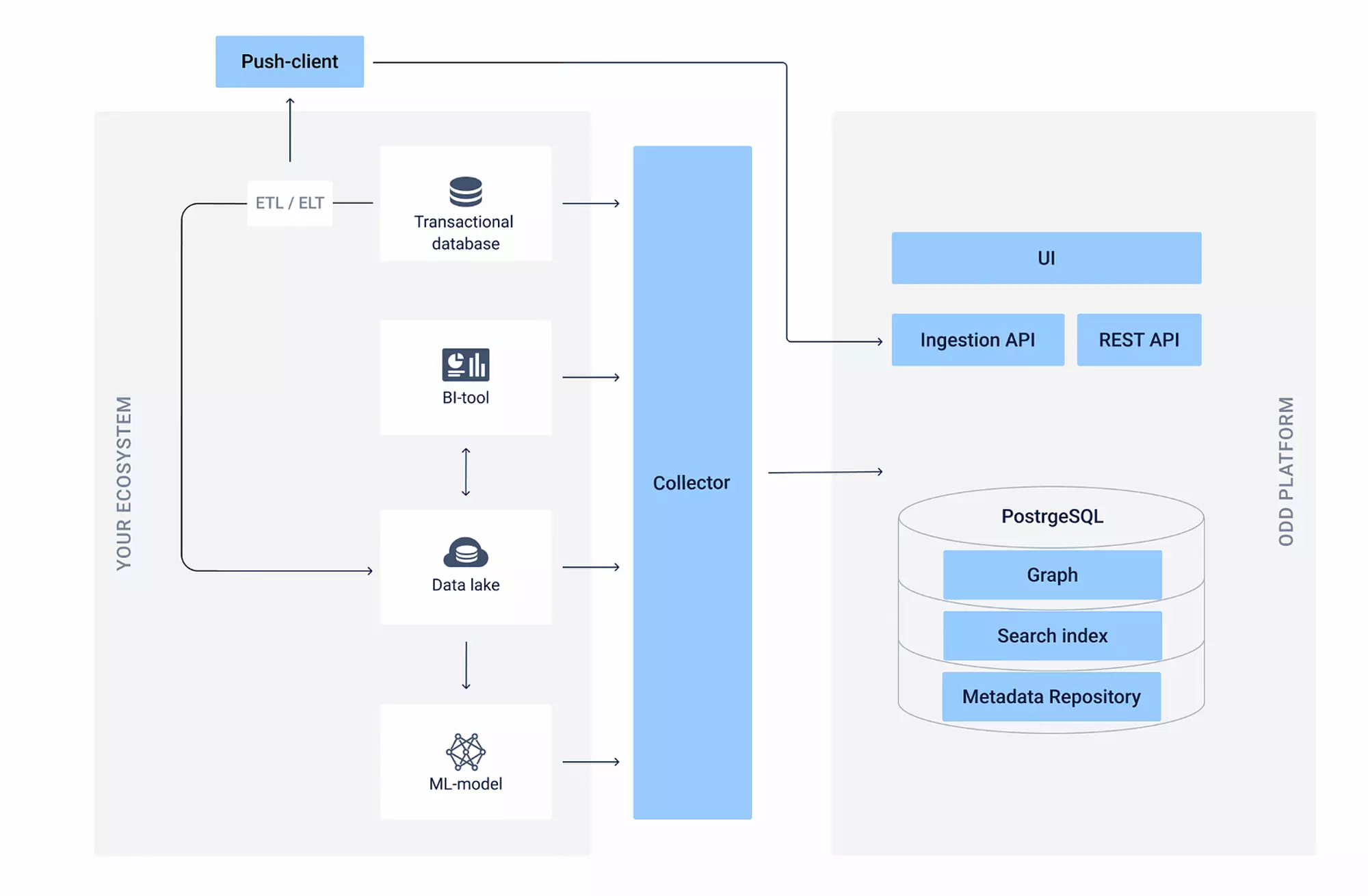

Open Data Discovery benefits from PostgreSQL becoming a graph database, a full-text search engine, and a relational database simultaneously. Let’s look at the following image to understand the technical architecture a bit more and see how different components interact with each other:

The technical architecture of OpenDataDiscovery - Image from the official documentation.

We can group the architectural components into three broad categories: the backend database, the ingestion and REST APIs, and the push client and collectors.

Backend database #

As mentioned earlier, the PostgreSQL database is the backbone of the cataloging and discovery engine. Thanks to its broad feature set and extensibility, PostgreSQL supports a wide range of use cases: full-text search is built in, and graph-shaped data can be stored and traversed with recursive queries or extensions. Those are two of the primary use cases for a tool like Open Data Discovery.

Storage in PostgreSQL follows a custom object model built on open standards such as the Open Data Discovery specification and the ODDRN (Open Data Discovery Resource Name). The goal of building on open standards is to enable federated connectivity between OpenDataDiscovery and other data catalogs like Amundsen, DataHub, and Marquez.
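ODDRNs are path-like identifiers, which is what lets separate catalogs agree on the name of the same asset. The Python helper below builds a name in that style. The `host`/`databases`/`schemas`/`tables` segments mirror the pattern commonly shown for PostgreSQL tables, but treat the exact segment names as an assumption and check the ODDRN conventions for each source; `build_oddrn` itself is hypothetical.

```python
from typing import Sequence, Tuple


def build_oddrn(source_type: str, segments: Sequence[Tuple[str, str]]) -> str:
    """Join (kind, value) pairs into a path-like ODDRN-style identifier.

    Illustrative only: the segment kinds each source must use are defined by
    the ODDRN conventions, not by this helper.
    """
    parts = [source_type]
    for kind, value in segments:
        # A slash inside a value would make the path ambiguous, so reject it.
        if not value or "/" in value:
            raise ValueError(f"invalid value for {kind!r}: {value!r}")
        parts.extend([kind, value])
    return "//" + "/".join(parts)


# Hypothetical PostgreSQL table location.
table_path = [
    ("host", "db.example.com:5432"),
    ("databases", "shop"),
    ("schemas", "public"),
    ("tables", "orders"),
]

print(build_oddrn("postgresql", table_path))
```

Any tool that follows the same convention derives the same string for the same table, so metadata from different catalogs can be joined on it without a lookup table.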

Ingestion and REST APIs #

Open Data Discovery exposes two APIs. The Ingestion API is used to ingest metadata, and the generic REST API is used by the platform’s services to interact with each other. The UI is one service that talks to the REST API, whereas the collectors use the Ingestion API.
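As a rough illustration of what travels over the Ingestion API, the Python sketch below builds a JSON batch of table entities. It only constructs the body and sends nothing. The field names (`data_source_oddrn`, `items`, `oddrn`, `name`, `type`) loosely follow the specification’s entity list and are assumptions to verify against the spec; the endpoint is deliberately left out, and `build_ingestion_payload` is hypothetical.

```python
import json
from typing import Dict, List


def build_ingestion_payload(data_source_oddrn: str, tables: List[Dict[str, str]]) -> str:
    """Serialize table metadata into a JSON batch a collector could push.

    Field names loosely follow the ODD specification's entity list; verify
    them against the spec before relying on them.
    """
    items = [
        {"oddrn": table["oddrn"], "name": table["name"], "type": "TABLE"}
        for table in tables
    ]
    return json.dumps({"data_source_oddrn": data_source_oddrn, "items": items}, indent=2)


# Hypothetical data source and table identifiers.
payload = build_ingestion_payload(
    "//postgresql/host/db.example.com:5432/databases/shop",
    [
        {
            "oddrn": "//postgresql/host/db.example.com:5432/databases/shop/schemas/public/tables/orders",
            "name": "orders",
        }
    ],
)
print(payload)
```

Grouping entities under the data source they came from lets the receiving side attribute every item in a batch to one source in a single request.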

Push-client and collector #

The push client is a component you can use directly to push metadata from your data ecosystem to Open Data Discovery through its Ingestion API and REST API. The collector is a service that fetches metadata from various data sources directly and loads it into the catalog’s PostgreSQL database.

Collectors rely on adapters, which are configured through YAML files for every data source you need. These configuration files hold the information a database connection string usually contains. This opens Open Data Discovery up to data sources like dbt, Airflow, Airbyte, CockroachDB, MongoDB, Metabase, Presto, Superset, Redshift, Vertica, and many more.
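Since an adapter’s configuration holds what a connection string would, one way to picture it is to split a connection URL into discrete fields. The Python sketch below does that with the standard library’s `urllib.parse`; the output keys and the `connection_string_to_config` helper are hypothetical, and each collector plugin defines its own configuration keys.

```python
from typing import Dict, Union
from urllib.parse import unquote, urlsplit

ConfigValue = Union[str, int, None]


def connection_string_to_config(name: str, url: str) -> Dict[str, ConfigValue]:
    """Split a database URL into the discrete fields an adapter config lists.

    The output keys are hypothetical; each collector plugin documents its own.
    """
    parts = urlsplit(url)
    return {
        "name": name,
        "type": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/") or None,
        # Credentials in URLs are percent-encoded, so decode them.
        "user": unquote(parts.username) if parts.username else None,
        "password": unquote(parts.password) if parts.password else None,
    }


# Hypothetical connection string; "%40" is an encoded "@" in the password.
config = connection_string_to_config(
    "shop_postgres", "postgresql://odd_reader:s3cr%40t@db.example.com:5432/shop"
)
print(config)
```

Splitting the URL into named fields is what makes a per-source configuration file readable and easy to template, for example by filling the password from a secret store instead of hard-coding it.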

As the product is relatively new, the architecture will keep evolving. Check the latest updates using the resources mentioned at the end of this article.

Conclusion #

This article took you through the basics of Open Data Discovery and why and how Provectus decided to build it. You also learned about the features and limitations of OpenDataDiscovery, and of open-source data cataloging tools more generally, particularly regarding data lineage. Open Data Discovery is a relatively new addition to the open-source data discovery landscape, so some of its features are still nascent. You can learn more about OpenDataDiscovery using the links in the next section. Keep an eye out for future updates.

OpenDataDiscovery: Resources #

Documentation | Medium | Slack | GitHub | Demo Application

Related Reads on More Open Source Tools #

- Open Source Data Catalog - List of 6 Popular Tools to Consider in 2023

- 7 Popular Open-Source ETL Tools

- 5 Popular Open-Source Data Lineage Tools in 2023

- 5 Popular Open-Source Data Orchestration Tools in 2023

- 7 Popular Open-Source Data Governance Tools to Consider in 2023

- 11 Top Data Masking Tools

- 9 Best Data Discovery Tools

- Amundsen Data Catalog: Understanding Architecture, Features, Ways to Setup & More

- DataHub: LinkedIn’s Open-Source Tool for Data Discovery, Catalog, and Metadata Management

- Netflix’s Metacat: Open Source Federated Metadata Service for Data Discovery
