Data Quality Dimensions: What Are They, Why They Matter & How To Implement Them In 2025

Quick Answer: What are data quality dimensions?

Data quality dimensions are standardized criteria used to evaluate whether data is fit for use. These dimensions, such as accuracy, completeness, consistency, timeliness, and validity, help organizations assess the reliability and usability of their data.

Data quality dimensions serve as the yardstick by which we measure the reliability, trustworthiness, and actionability of our data. For data teams, they act as a common language to identify gaps, track improvements, and align quality with business goals.

Up next, we’ll explore the most common data quality dimensions, understand how to choose them, and discuss how to implement them in practice.

Table of contents

- Data quality dimensions explained

- What are the 9 key data quality dimensions?

- Why do data quality dimensions matter?

- How do data quality dimensions differ from measures and metrics?

- How can you select the right data quality dimensions for your organization?

- What is an example of a data quality dimensions framework? The 6Cs of data quality

- What are the benefits of using data quality dimensions?

- What are the challenges in maintaining data quality dimensions?

- How does a unified data quality studio help with data quality dimensions?

- Summing up: Turn data quality dimensions into operational advantage

- Data quality dimensions: Frequently asked questions (FAQs)

- Data quality dimensions: Related reads

Data quality dimensions explained

Data quality dimensions are the core attributes used to evaluate how trustworthy, usable, and effective data is for a specific purpose. They provide a framework for assessing the quality of a dataset.

Various models and frameworks identify different dimensions, but some commonly cited dimensions include completeness, consistency, validity, availability, accuracy, and timeliness, among others.

What are the 9 key data quality dimensions?

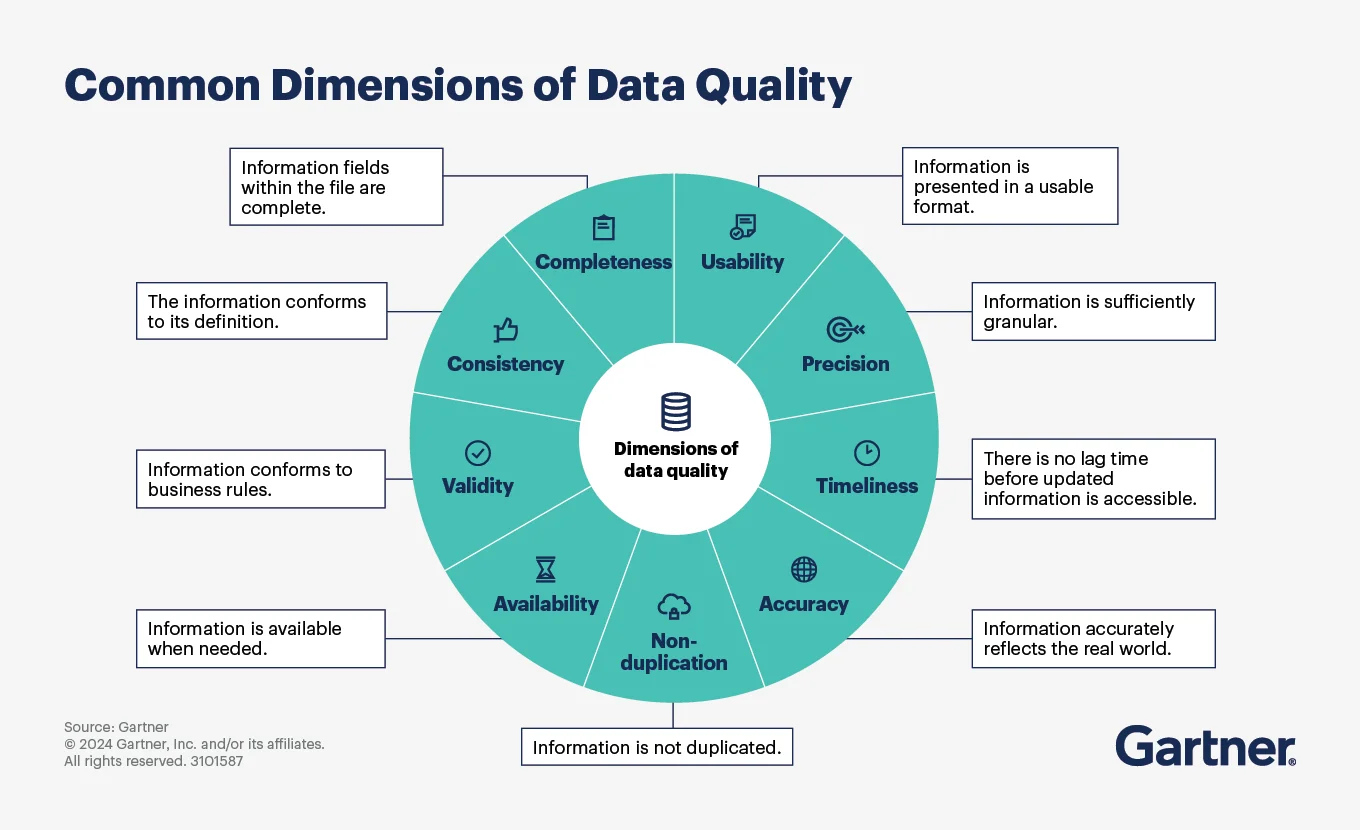

According to Gartner, here are the nine most commonly used dimensions:

- Completeness

- Consistency

- Validity

- Availability

- Non-duplication (Uniqueness)

- Accuracy

- Timeliness

- Precision

- Usability

Common data quality dimensions, according to Gartner - Source: Gartner.

Together, these dimensions provide a holistic view of data quality across the lifecycle—from ingestion and transformation to consumption and reporting.

Let’s explore each data quality dimension in detail.

1. Completeness

Missing values can break analytics and delay critical business processes. Completeness ensures that every required piece of data is available, giving a full picture and enabling confident decisions.
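
A completeness check can be sketched in a few lines of Python. This is a minimal illustration, assuming records are plain dictionaries; the field names and the `completeness` helper are hypothetical, and blank strings are treated as missing, which may not suit every field.

```python
from typing import Any

# Hypothetical required fields for a customer record.
REQUIRED_FIELDS = ("name", "email", "phone")


def is_missing(value: Any) -> bool:
    """Treat None and blank strings as missing (an assumption; adjust per field)."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def completeness(records: list[dict[str, Any]], fields: tuple[str, ...]) -> dict[str, float]:
    """Return the share of records with a non-missing value, per field."""
    total = len(records)
    if total == 0:
        # Design choice for this sketch: an empty dataset has nothing missing.
        return {field: 1.0 for field in fields}
    return {
        field: sum(not is_missing(r.get(field)) for r in records) / total
        for field in fields
    }


customers = [
    {"name": "Ada", "email": "ada@example.com", "phone": "555-0100"},
    {"name": "Lin", "email": "", "phone": None},
    {"name": "Sam", "email": "sam@example.com", "phone": None},
    {"name": "Kai", "email": "kai@example.com", "phone": "555-0101"},
]

scores = completeness(customers, REQUIRED_FIELDS)
```

Reporting the score per field, rather than one number for the whole table, makes it easier to see which required attribute is causing the gap.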

2. Consistency

Inconsistencies introduce confusion and reduce trust. For instance, a CRM system might show a client’s address differently from an invoicing system, leading to confusion and potential missed opportunities.

Consistency is the key to ensuring that your data aligns across systems and reports in your organization.
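
The CRM versus invoicing scenario can be illustrated with a small cross-system comparison. The sketch below assumes both systems share a customer ID and that differences in casing or spacing should not count as mismatches; the IDs, addresses, and function names are invented for illustration.

```python
def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so cosmetic differences are ignored."""
    return " ".join(value.lower().split())


def find_mismatches(crm: dict[str, str], invoicing: dict[str, str]) -> list[str]:
    """Return customer IDs present in both systems whose addresses differ."""
    shared_ids = crm.keys() & invoicing.keys()
    return sorted(
        cid for cid in shared_ids
        if normalize(crm[cid]) != normalize(invoicing[cid])
    )


crm_addresses = {
    "C001": "12 High Street, Leeds",
    "C002": "4 Park Lane, London",
    "C003": "9 Mill Road, York",
}
invoicing_addresses = {
    "C001": "12  high street, leeds",  # same address, different casing and spacing
    "C002": "40 Park Lane, London",    # genuinely different
    "C004": "1 Quay Side, Hull",       # not in the CRM, so not compared here
}

mismatches = find_mismatches(crm_addresses, invoicing_addresses)
```

Records that exist in only one system are deliberately left out of this comparison; they are better treated as a completeness question than a consistency one.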

3. Validity

Validity is about ensuring that data fits the intended format and constraints.

It might sound basic, but consider the complexities of date formats between countries or the varied ways products might be categorized in an e-commerce database.

Valid data is standardized and structured, ensuring it can be effectively used in analyses. Invalid data (e.g., letters in phone fields) reduces usability.
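
As a rough illustration, validity rules are often expressed as format checks. The sketch below uses Python's standard `re` and `datetime` modules; the phone rule (an optional leading "+" followed by 7 to 15 digits) and the choice of YYYY-MM-DD for dates are assumptions made for the example, not rules from any particular standard or tool.

```python
import re
from datetime import datetime

# Assumed rules for illustration only.
PHONE_PATTERN = re.compile(r"\+?\d{7,15}")
DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_valid_phone(value: str) -> bool:
    """Accept only digits with an optional leading plus sign."""
    return PHONE_PATTERN.fullmatch(value) is not None


def is_valid_iso_date(value: str) -> bool:
    """Check both the YYYY-MM-DD shape and that the date exists on the calendar."""
    if DATE_PATTERN.fullmatch(value) is None:
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True
```

The date check has two steps because the pattern alone would accept an impossible date such as 2025-02-30, while parsing alone would accept loosely formatted input such as 2025-2-3.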

4. Availability

Data should be available, easily retrieved and integrated into business processes. Even perfect data is useless if teams can’t access it in time.

5. Non-duplication (Uniqueness)

For professionals dealing with vast databases, duplicate records can be a nightmare. They skew analysis, waste resources, and can lead to conflicting insights.

Ensuring data uniqueness means that each real-world entity, such as a customer or a transaction, is represented by exactly one record.
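
A simple duplicate check might look like the following sketch. It assumes that a normalized email address identifies a contact, which is a simplification; real matching rules are usually fuzzier. The sample records and the `find_duplicates` helper are hypothetical.

```python
from collections import defaultdict


def find_duplicates(records: list[dict[str, str]], key: str) -> dict[str, list[int]]:
    """Group row indexes by a normalized key and keep groups with more than one row."""
    groups: dict[str, list[int]] = defaultdict(list)
    for index, record in enumerate(records):
        groups[record[key].strip().lower()].append(index)
    return {value: rows for value, rows in groups.items() if len(rows) > 1}


contacts = [
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "A. Lovelace", "email": " ADA@example.com "},
    {"name": "Sam Ortiz", "email": "sam@example.com"},
]

duplicates = find_duplicates(contacts, key="email")
```

Returning row indexes instead of deleting rows keeps the check non-destructive, so a data steward can decide which record survives.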

6. Accuracy

Accuracy is about ensuring that the data truly reflects reality. Professionals understand that even minor inaccuracies can have major repercussions.

Mistakes in names, IDs, or transactions degrade downstream outcomes. Imagine the implications of a financial error in a large transaction or a minor misrepresentation in a clinical trial dataset.

Accuracy ensures that decisions made based on the data will be sound.
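
Unlike the format-based checks, accuracy can only be measured against something trusted. The sketch below assumes such a reference exists (for example, a verified registry) and compares observed values against it; the data and the `accuracy_rate` helper are invented for illustration.

```python
def accuracy_rate(observed: dict[str, str], reference: dict[str, str]) -> float:
    """Share of reference-covered keys whose observed value matches the reference."""
    checked = [key for key in observed if key in reference]
    if not checked:
        raise ValueError("no overlapping keys to compare")
    correct = sum(observed[key] == reference[key] for key in checked)
    return correct / len(checked)


# Hypothetical country codes held in a warehouse vs. a verified registry.
warehouse = {"C001": "GB", "C002": "DE", "C003": "FR", "C004": "SG"}
registry = {"C001": "GB", "C002": "DE", "C003": "ES", "C004": "SG"}

rate = accuracy_rate(warehouse, registry)
```

Records with no counterpart in the reference are excluded rather than counted as wrong, since their accuracy is unknown.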

7. Timeliness

Stale data can result in outdated insights and poor decision-making. For instance, outdated stock prices can lead to financial losses, while old patient records might not reflect recent diagnoses or treatments.

Timely data means that professionals can make decisions that are relevant right now.
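
Timeliness is commonly checked as a freshness window: how old is the data compared with what the use case tolerates? The sketch below assumes each dataset exposes a last-updated timestamp; the five-minute and 30-day limits are illustrative values, not recommendations.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """Return True when the data was updated within the allowed age window."""
    return now - last_updated <= max_age


# Fixed timestamps keep the example reproducible; in practice `now` would be
# datetime.now(timezone.utc).
now = datetime(2025, 1, 15, 12, 0, tzinfo=timezone.utc)
price_feed_updated = datetime(2025, 1, 15, 11, 58, tzinfo=timezone.utc)
patient_record_updated = datetime(2024, 11, 1, 9, 0, tzinfo=timezone.utc)

price_is_fresh = is_fresh(price_feed_updated, timedelta(minutes=5), now)
record_is_fresh = is_fresh(patient_record_updated, timedelta(days=30), now)
```

The allowed age is a parameter because "timely" depends on the use case: a price feed and a patient record tolerate very different delays.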

8. Precision

Vague values (e.g., “APAC” vs. “Singapore”) reduce relevance for localized use cases. That’s why data should be recorded with the granular precision required by business processes.

9. Usability

Data should be presented in a way that makes it easy to understand and apply. Poor formatting and naming conventions slow down workflows.

Why do data quality dimensions matter?

According to Gartner surveys, 59% of organizations do not measure data quality.

Data quality dimensions matter because poor data quality directly impacts decision-making, regulatory compliance, operational efficiency, and customer experience.

When teams lack a shared definition of “good data,” inconsistencies and costly mistakes follow.

How do data quality dimensions differ from measures and metrics?

Understanding data quality involves three distinct but related concepts: dimensions, measures, and metrics. Think of them as a progression from defining what good data is, to observing its current state, to tracking its performance over time.

Data quality dimensions: What standards should good data have?

Data quality dimensions are the qualitative categories that define “good data.” They set the standards for how data should be and provide the framework for evaluating your data’s health. Completeness is one example: is all required data present?

Data quality measures: What are you observing?

Meanwhile, data quality measures are quantitative observations under each dimension (e.g., % of rows missing values under Completeness). They’re the raw counts or simple proportions that describe what you directly see in your data.

For example, let’s consider completeness to be the data quality dimension. The observation that ‘200 rows in a table have missing phone numbers’ is the measure. It tells you the absolute count of incomplete records for the phone number field.

Data quality metrics: How are you tracking performance and trends?

Data quality metrics are calculated indicators that quantify data quality performance over time. Derived from measures, metrics usually express quality as a percentage, rate, or score, providing context and enabling comparisons. They’re what you’d typically see on dashboards.

For example, the data completeness rate for phone numbers could be 80%. This provides a clear indication of the data quality level for this specific attribute and can be tracked over time to monitor improvements or degradation.

In short:

- Dimensions define quality

- Measures count observed quality issues

- Metrics track the performance of that quality over time
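
The relationship between a measure and a metric can be made concrete with the phone number example. The sketch below assumes the table has 1,000 rows in the first week, which is what makes 200 missing values correspond to an 80% completeness rate; the week labels and the second snapshot are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    week: str
    total_rows: int
    missing_phone_rows: int  # the measure: a raw count observed in the table

    @property
    def completeness_rate(self) -> float:
        """The metric: share of rows that have a phone number."""
        return (self.total_rows - self.missing_phone_rows) / self.total_rows


history = [
    Snapshot("2025-W01", total_rows=1_000, missing_phone_rows=200),
    Snapshot("2025-W02", total_rows=1_100, missing_phone_rows=165),
]

rates = [round(s.completeness_rate, 2) for s in history]
improving = rates[-1] > rates[0]
```

The raw count alone cannot show a trend, because the table size changes between snapshots; the rate normalizes for that and can be compared week over week.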

How can you select the right data quality dimensions for your organization?

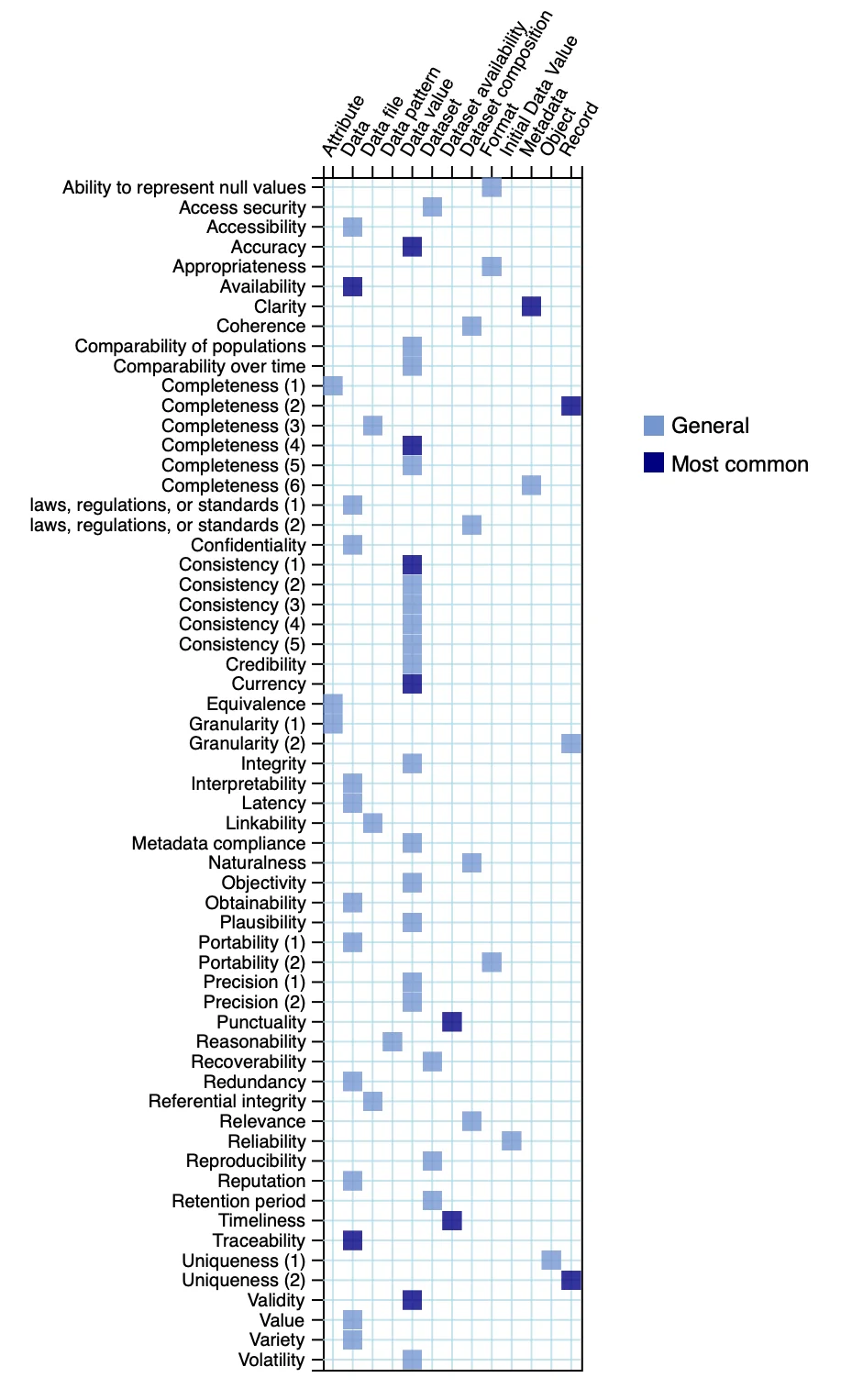

There’s no universal agreement on the right set of data quality dimensions for an organization. DAMA (Data Management Association) has famously documented 60 dimensions for data quality.

The various data quality dimensions, according to DAMA - Source: DAMA.

Gartner recommends picking the dimensions relevant to your use cases:

“Select the data quality dimensions that are useful to your use cases and identify data quality metrics for them. There is no need to apply all of them at once, nor to apply all in the same way to all data assets.”

So, the best way to choose the right data quality dimensions for your organization is by:

- Understanding which data is important

- Identifying the dimensions essential for that data

- Ensuring that the dimensions chosen can be tied to your overall business goals

- Establishing measures and metrics for the selected dimensions
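
One way to make these choices explicit is to record them as configuration: each important dataset lists only the dimensions that matter for it, with a target for the corresponding metric. The sketch below is a hypothetical illustration; the dataset names, thresholds, and the `find_breaches` helper are assumptions.

```python
# Hypothetical targets: a minimum acceptable metric value between 0 and 1
# for each dimension selected per dataset.
TARGETS = {
    "customers": {"completeness": 0.95, "uniqueness": 0.99},
    "stock_prices": {"timeliness": 0.99},
}


def find_breaches(
    targets: dict[str, dict[str, float]],
    observed: dict[str, dict[str, float]],
) -> list[tuple[str, str]]:
    """Return (dataset, dimension) pairs that are below target or have no metric."""
    breaches = []
    for dataset, dimensions in targets.items():
        for dimension, minimum in dimensions.items():
            value = observed.get(dataset, {}).get(dimension)
            if value is None or value < minimum:
                breaches.append((dataset, dimension))
    return breaches


observed_metrics = {
    "customers": {"completeness": 0.97, "uniqueness": 0.96},
    "stock_prices": {},  # no timeliness metric has been set up yet
}

breaches = find_breaches(TARGETS, observed_metrics)
```

Treating a missing metric as a breach surfaces dimensions that were selected but never instrumented.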

What is an example of a data quality dimensions framework? The 6Cs of data quality

The 6Cs of data quality is a practical framework that helps teams structure how they evaluate, maintain, and communicate about data quality. Each “C” represents a foundational dimension that must be addressed to ensure your data is trustworthy and actionable across business workflows.

Here’s a quick overview of the 6Cs:

- Correctness: Measures whether data accurately reflects the real-world object or event it describes. In practice, incorrect data can cascade into significant problems, from health hazards to financial discrepancies.

- Completeness: Ensures all necessary data is present, so that the entire narrative your data is supposed to tell stays intact. For instance, if a retailer is tracking sales transactions but fails to record all sales channels, they might miss significant insights about customer behavior.

- Consistency: Confirms data matches across systems and formats. If your marketing system and billing system show different names for the same customer, it creates confusion and erodes trust in data.

- Currency: Focuses on timeliness. Even accurate data loses value if it’s outdated. For example, stock prices from a month ago or yesterday’s weather data won’t be much help in making decisions today. Currency ensures data is up to date and relevant for current decisions.

- Conformity: Checks whether data follows predefined formats, codes, or schemas. For example, dates should be in a standard format (e.g., YYYY-MM-DD), and state names should conform to official abbreviations.

- Cardinality: Emphasizes that each record should be unique, keeping databases clean, lean, and efficient. Duplicate entries can skew analytical results, inflate figures, or cause redundant communications (like sending two copies of the same promotional email to a customer).

What are the benefits of using data quality dimensions?

Data quality dimensions offer practical ways to translate data governance policies into tangible outcomes. Here’s how they add value:

- Create a common language: Dimensions standardize how teams define and discuss data quality, eliminating ambiguity across technical and business stakeholders.

- Improve decision-making: Reliable, accurate, and complete data ensures that business decisions are grounded in fact, not assumptions or flawed inputs.

- Increase operational efficiency: Dimensions like consistency and availability help eliminate bottlenecks and rework caused by bad data—saving time, effort, and money across teams.

- Support regulatory compliance: Ensuring validity, accuracy, and completeness is essential for meeting requirements under GDPR, HIPAA, BCBS 239, and more. Dimensions help standardize how these quality checks are applied and monitored.

- Provide a competitive advantage: High-quality data is a foundational asset for analytics, AI, and innovation. By systematizing quality using dimensions, organizations can move faster and with greater confidence than competitors struggling with unreliable data.

- Support consistent measurement: Dimensions act as the foundation for building meaningful metrics that can be tracked over time, supporting continuous improvement and accountability.

- Build trust across the organization: A structured, dimension-led approach to data quality fosters transparency and accountability, giving data consumers more confidence in what they’re using.

What are the challenges in maintaining data quality dimensions?

Even with well-defined data quality dimensions, organizations often struggle to maintain consistently high-quality data. Here are some of the most common challenges:

- Volume and velocity of data: Modern organizations generate massive volumes of data at high speeds. The sheer volume can overwhelm traditional data management systems, leading to potential issues with accuracy and consistency.

- Diversity of data sources: Data often flows in from CRMs, ERPs, marketing platforms, social channels, and more. Each source may have different formats, standards, and quality levels, making it difficult to maintain consistency, completeness, and validity.

- Data decay over time: Even accurate data degrades over time, becoming outdated and irrelevant. This is called data decay. Contact details become outdated, product prices change, and business entities evolve. Without regular updates and monitoring, dimensions like accuracy and timeliness suffer.

- Human error: Manual data entry, mislabeling, and inconsistent tagging remain major sources of quality issues. These errors affect completeness, precision, and validity, often corrupting dashboards and models downstream.

- Complex data relationships: Modern data is rarely flat. It spans nested structures, joins across domains, and complex transformations. Maintaining consistency and integrity across these relationships requires sophisticated lineage tracking and metadata management.

- Evolving regulatory and compliance demands: Dimensions like completeness, accuracy, and validity are often compliance-critical. As regulations like GDPR, HIPAA, and BCBS 239 evolve, keeping quality standards aligned with legal requirements adds ongoing pressure.

- Integration of new technologies: Emerging tools (AI/ML systems, reverse ETL platforms, and new cloud services) introduce unfamiliar data types, sources, and flows. These can break existing quality checks or introduce new vulnerabilities.

- Lack of standardization: When teams across an organization define data quality differently, alignment becomes nearly impossible. Without shared definitions for dimensions like “timeliness” or “accuracy,” teams talk past each other and quality issues persist.

- Resource constraints: Data quality initiatives often compete for time, budget, and headcount. Without dedicated roles or automation, even basic quality checks and remediations can fall by the wayside.

- Low prioritization of data quality: Despite its impact, data quality is often an afterthought and only addressed when it breaks dashboards or triggers audit failures. Organizations without a data quality culture struggle to improve in a sustained way.

Addressing these challenges requires a strategic approach that includes the implementation of robust data governance policies, continuous monitoring of data quality dimensions, and the use of advanced metadata management tools.

Metadata, in particular, is vital to operationalizing data quality dimensions because it anchors them to real-world context.

Metadata tells you:

- What each data field represents (definitions, formats)

- Where the data came from (lineage)

- Who owns it (accountability)

- How it’s used downstream (business impact)

Without this context, it’s hard to understand which dimensions matter most, where to apply them, or how to act on quality issues. For example, if a dashboard is showing inconsistent values, metadata can help trace the inconsistency to an upstream pipeline transformation and identify the owner to fix it.
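
The dashboard example can be sketched as a walk over lineage metadata. The code below is a hypothetical illustration using a plain dictionary; it is not the API of Atlan or any other catalog, and the asset names and owners are invented.

```python
# Hypothetical metadata: each asset records its upstream assets (lineage) and an owner.
METADATA = {
    "revenue_dashboard": {"upstream": ["revenue_model"], "owner": "analytics-team"},
    "revenue_model": {"upstream": ["orders_raw", "fx_rates"], "owner": "data-eng"},
    "orders_raw": {"upstream": [], "owner": "orders-service"},
    "fx_rates": {"upstream": [], "owner": "finance-data"},
}


def upstream_assets(asset: str, metadata: dict[str, dict]) -> list[str]:
    """Walk lineage breadth-first and return every upstream asset, nearest first."""
    seen: list[str] = []
    queue = list(metadata[asset]["upstream"])
    while queue:
        current = queue.pop(0)
        if current in seen:
            continue
        seen.append(current)
        queue.extend(metadata[current]["upstream"])
    return seen


def owners_to_notify(asset: str, failing: set[str], metadata: dict[str, dict]) -> list[str]:
    """Return owners of upstream assets that currently fail a quality check."""
    return [metadata[a]["owner"] for a in upstream_assets(asset, metadata) if a in failing]


suspects = upstream_assets("revenue_dashboard", METADATA)
owners = owners_to_notify("revenue_dashboard", {"fx_rates"}, METADATA)
```

Lineage narrows the search to assets that can actually affect the dashboard, and the ownership field turns a finding into something a specific team can act on.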

That’s why a metadata-led data quality studio like Atlan can help.

How does a unified data quality studio help with data quality dimensions?

A modern data quality studio like Atlan takes the theory of dimensions and turns it into a living, integrated system by:

- Mapping dimensions to metadata: Atlan connects dimensions (e.g., completeness, timeliness) directly to fields and assets using metadata tags, lineage, and glossary terms—so quality rules are both visible and context-rich.

- Centralizing monitoring across tools: Atlan integrates with tools like Anomalo, Soda, and Great Expectations, providing a single control plane for one trusted view of data health. You can monitor freshness, failure rates, or duplication across dimensions and systems.

- Automating enforcement via metadata policies: Atlan allows you to automatically flag invalid data or low-quality assets based on custom dimension thresholds.

- Routing issues to the right owner: When a dimension fails (e.g., low uniqueness), Atlan notifies the responsible data steward through Slack, Jira, or other workflows.

- Tracking trust with dashboards: Atlan’s Reporting Center lets you track coverage, failures, and business impact at a glance.

By integrating metadata, lineage, quality signals, and ownership, Atlan turns data quality dimensions from static ideas into living controls embedded across your ecosystem.

Summing up: Turn data quality dimensions into operational advantage

Data quality dimensions lay the foundation for building trust in data across your organization. From improving decisions and compliance to streamlining operations and enabling AI, these dimensions give teams a shared language to define, track, and act on what “good data” really means.

But dimensions alone won’t solve your data quality challenges. You need the right context (metadata), the right workflows (automation), and the right culture (ownership). A metadata-led platform like Atlan bridges the gap between theory and execution—making your dimensions visible, measurable, and enforceable across every part of your stack.

Data quality dimensions: Frequently asked questions (FAQs)

1. What are data quality dimensions?

Data quality dimensions are standardized attributes, such as accuracy, completeness, and timeliness. They’re used to assess whether data is trustworthy, usable, and fit for a specific purpose.

2. How do data quality dimensions differ from metrics and measures?

Dimensions define what good data looks like (e.g., completeness). Measures quantify observations (e.g., 200 rows have missing values). Metrics track performance over time (e.g., 90% completeness score this month).

3. Why are data quality dimensions important?

Data quality dimensions help data teams assess and improve the fitness of data for key business tasks, enabling better decision-making, operational efficiency, compliance, and trust across the organization.

4. How many data quality dimensions are there?

There’s no universal number, but Gartner identifies nine key dimensions: completeness, consistency, validity, availability, uniqueness, accuracy, timeliness, precision, and usability.

5. Can you customize data quality dimensions for your organization?

Yes. While standard dimensions provide a starting point, organizations often tailor them based on specific data products, domains, or regulatory requirements.

6. How do metadata and data lineage support data quality dimensions?

Metadata provides context (what data means, where it comes from, who owns it), while lineage shows data’s journey. Together, they make it easier to enforce dimensions and trace quality issues back to their source.

7. How can a data quality platform like Atlan help?

Atlan connects dimensions with metadata, automates issue detection, integrates with popular DQ tools, and routes quality tasks to the right people, turning static frameworks into active, scalable workflows.

Data quality dimensions: Related reads

- Data Quality Explained: Causes, Detection, and Fixes

- Data Quality Framework: 9 Key Components & Best Practices for 2025

- Data Quality Measures: Best Practices to Implement

- Data Quality Dimensions: Do They Matter?

- Resolving Data Quality Issues in the Biggest Markets

- Data Quality Problems? 5 Ways to Fix Them

- Data Quality Metrics: Understand How to Monitor the Health of Your Data Estate

- 9 Components to Build the Best Data Quality Framework

- How To Improve Data Quality In 12 Actionable Steps

- Data Integrity vs Data Quality: Nah, They Aren’t Same!

- Gartner Magic Quadrant for Data Quality: Overview, Capabilities, Criteria

- Data Management 101: Four Things Every Human of Data Should Know

- Data Quality Testing: Examples, Techniques & Best Practices in 2025

- Atlan Launches Data Quality Studio for Snowflake, Becoming the Unified Trust Engine for AI