Pachyderm for Data Lineage: Architecture, Features, Setup


Pachyderm is an open-source tool for data lineage and automatic data versioning. It is containerized and data-agnostic, and it runs in both cloud and on-premises environments.

This guide covers Pachyderm’s origins, architecture, features, and setup, along with open-source alternatives for data lineage.

Table of contents

- What is Pachyderm?

- Pachyderm: Architecture overview

- Pachyderm features

- Pachyderm setup

- Pachyderm alternatives

- Final thoughts

- Related reads

What is Pachyderm?

Pachyderm is an open-source data lineage tool that helps you manage how data flows through your data estate, using data versioning and automated pipelines.

Pachyderm describes itself as a CI/CD engine for data. Its goal is to let data engineers automate data pipelines while versioning data and tracking its lineage.

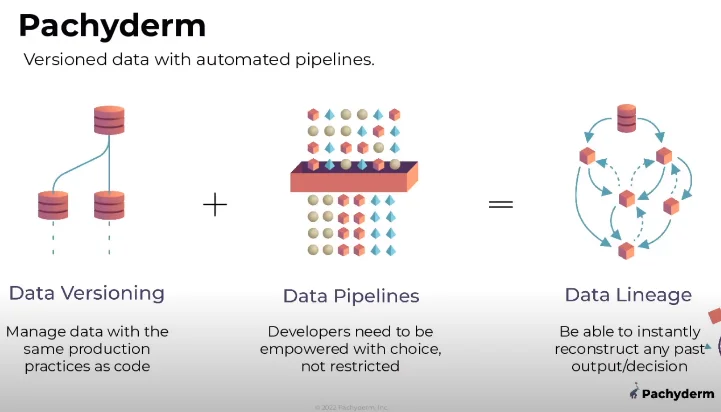

How Pachyderm enables data lineage - Source: Pachyderm.

Pachyderm uses a Git-like version-control system to capture and store changes to data assets. This creates an audit trail of events that can be used to build a data lineage graph for further analysis.

Pachyderm also enforces immutability for the data it versions and assigns Global IDs to lineage events and data objects. You can view the resulting immutable data lineage map as a DAG in Pachyderm’s UI.
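One way to picture Global IDs is with a small in-memory model. This is a hypothetical sketch and not Pachyderm’s API. The `LineageStore` class, the repo names `images` and `edges`, and the use of random UUIDs are all assumptions. For each recorded change, the model stores one commit per affected repo under a single shared ID, so looking up that ID returns every related commit.

```python
# Hypothetical in-memory model of Global IDs; not Pachyderm's real API.
import uuid
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)  # frozen: a recorded commit cannot be modified
class Commit:
    repo: str
    global_id: str
    files: Tuple[str, ...]


class LineageStore:
    def __init__(self) -> None:
        # global ID -> every commit created as part of that one change
        self._by_global_id: Dict[str, List[Commit]] = defaultdict(list)

    def record_change(self, changes: Dict[str, List[str]]) -> str:
        """Store one commit per repo in `changes`, all sharing a new global ID."""
        gid = uuid.uuid4().hex
        for repo, files in changes.items():
            self._by_global_id[gid].append(Commit(repo, gid, tuple(files)))
        return gid

    def commits_for(self, gid: str) -> List[Commit]:
        """Return every commit tied to `gid` (an empty list if the ID is unknown)."""
        return list(self._by_global_id.get(gid, []))


store = LineageStore()
gid = store.record_change({"images": ["cat.png"], "edges": ["cat_edges.png"]})
print([c.repo for c in store.commits_for(gid)])  # ['images', 'edges']
```

Because every commit from one change shares an ID, tracing "what else did this change touch?" takes a single lookup rather than a graph walk.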


Pachyderm origins

Pachyderm was founded in 2014 by Joe Doliner and Joey Zwicker to provide an enterprise-grade, open-source platform for version control in data science. It comes in two editions — Community (open-source) and Enterprise (a managed platform).

In 2023, Pachyderm was acquired by Hewlett Packard Enterprise to enhance its AI-at-scale capabilities.

Pachyderm: Architecture overview

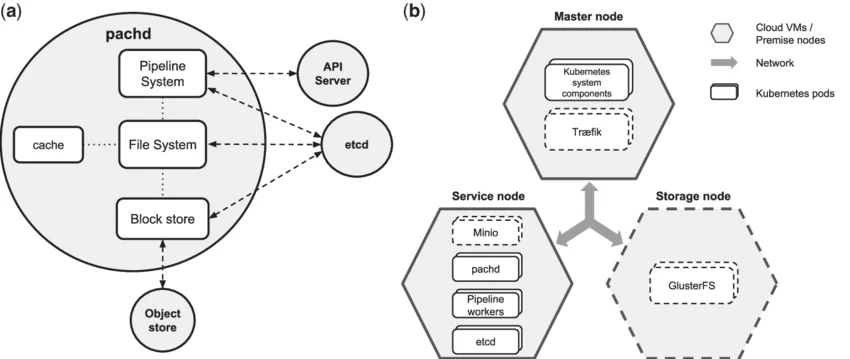

Pachyderm’s architecture consists of several components that together provide its functionality and data lineage capabilities. We’ll focus on:

- Pachyderm File System (PFS)

- Pachyderm Pipeline System (PPS)

- Pachyderm workers

- Pachyderm Daemon (PachD)

- Input and output repositories

- Authentication and authorization (RBAC) mechanisms

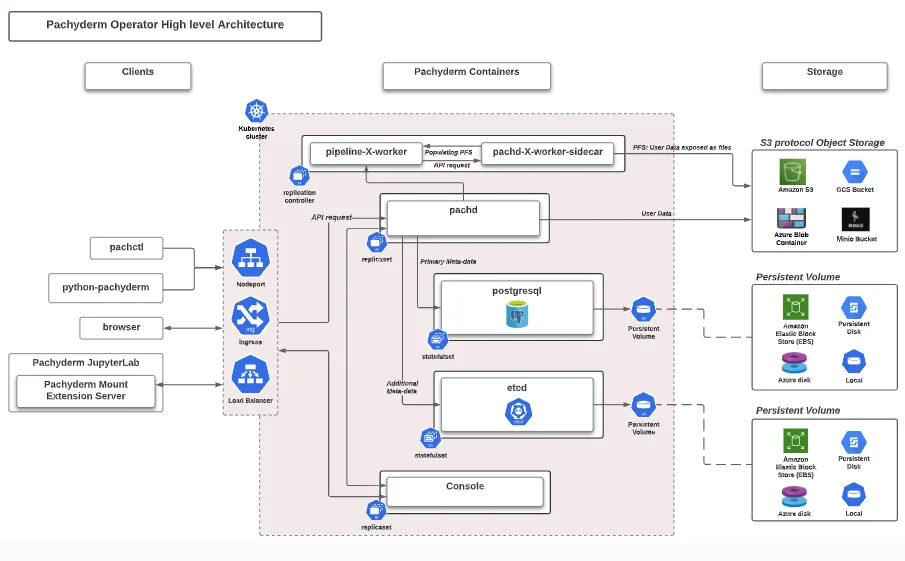

A high-level architecture diagram of Pachyderm - Source: Pachyderm.

Let’s explore each component further.

1. Pachyderm File System (PFS)

PFS is Pachyderm’s distributed, version-controlled file system. It can store data of any format or type.

It is built on top of Postgres and an object store such as AWS S3. With PFS, your object store becomes the single source of truth for your data, including its complete history.

PFS versions data through immutable commits, which are snapshots of a repository’s data at specific points in time. Because a commit cannot be altered once it is made, the lineage recorded through commits stays consistent.
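The toy, Git-like commit chain below makes the commit model concrete. It is an illustration under stated assumptions and does not show how PFS actually stores data. The `make_commit` and `history` helpers and the SHA-256 content hashing are hypothetical choices. Each commit is a frozen snapshot that points to its parent. Changing data therefore produces a new commit, and walking the parent links yields the audit trail.

```python
# Toy Git-like commit chain (illustrative only; not PFS internals).
import hashlib
import json
from dataclasses import FrozenInstanceError, dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Commit:
    id: str
    parent: Optional[str]
    files: tuple  # sorted (path, content) pairs: a read-only snapshot


def make_commit(files: Dict[str, str], parent: Optional[Commit] = None) -> Commit:
    """Create a commit whose ID is a hash of its snapshot and its parent's ID."""
    snapshot = tuple(sorted(files.items()))
    parent_id = parent.id if parent else None
    payload = json.dumps({"parent": parent_id, "files": snapshot}).encode()
    return Commit(hashlib.sha256(payload).hexdigest(), parent_id, snapshot)


def history(head: Commit, by_id: Dict[str, Commit]) -> List[Commit]:
    """Walk parent links from head back to the first commit (newest first)."""
    trail = [head]
    while trail[-1].parent is not None:
        trail.append(by_id[trail[-1].parent])
    return trail


c1 = make_commit({"data.csv": "a,b\n1,2\n"})
c2 = make_commit({"data.csv": "a,b\n1,2\n3,4\n"}, parent=c1)
store = {c.id: c for c in (c1, c2)}
print([c.id[:8] for c in history(c2, store)])  # newest first

try:
    c1.files = ()  # attempting to rewrite history
except FrozenInstanceError:
    print("commits are immutable")
```

Because the ID is derived from the content and the parent, identical snapshots on the same parent get the same ID. Any edit yields a new commit rather than overwriting an old one.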

Pachyderm also uses etcd and MinIO to store and manage data. Here, etcd is a distributed key-value store that holds metadata such as commit hashes, file sizes, and timestamps.

MinIO is an object storage service that sends and receives data through the S3 protocol.

2. Pachyderm Pipeline System (PPS)

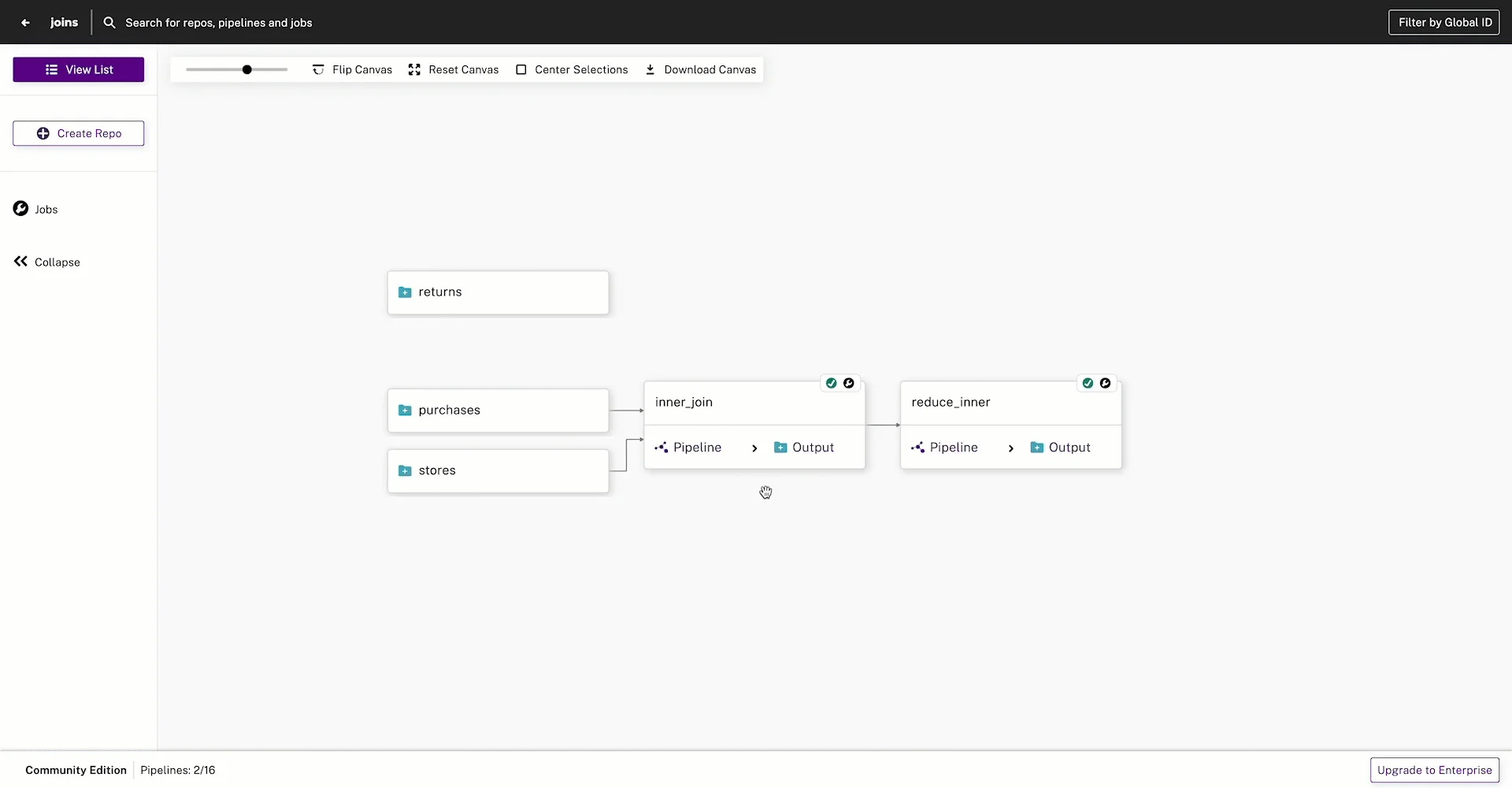

PPS automates data transformation. Whenever new data is committed to a pipeline’s input, PPS triggers that pipeline automatically.

The output of each pipeline is stored in a Pachyderm data repository. Outputs are version-controlled, so you can track the history of each data asset and the transformations it has undergone.

With PPS, you can define, execute, and monitor complex data transformations using code that is run in Docker containers.

Pipelines in Pachyderm - Source: Pachyderm.
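A pipeline is declared in a spec file. The sketch below builds a minimal spec in Python and writes it to `edges.json`. The field layout (`pipeline.name`, `transform.image` and `transform.cmd`, and `input.pfs.repo` and `input.pfs.glob`) follows the pipeline spec format in Pachyderm’s documentation. The repo name, Docker image, and script path are hypothetical placeholders.

```python
# Minimal pipeline spec sketch; image, script, and repo names are hypothetical.
import json

spec = {
    "pipeline": {"name": "edges"},  # the output repo will also be named "edges"
    "description": "Detect edges in every image committed to the images repo.",
    "transform": {
        "image": "example/edges:latest",   # hypothetical Docker image
        "cmd": ["python3", "/edges.py"],   # hypothetical script inside the image
    },
    "input": {
        "pfs": {
            "repo": "images",  # input repository the pipeline reads from
            "glob": "/*",      # each top-level file is processed as a separate unit
        }
    },
}

with open("edges.json", "w") as f:
    json.dump(spec, f, indent=2)
```

With a running cluster, you would typically register a spec like this with `pachctl create pipeline -f edges.json`. From then on, each new commit to the `images` repo triggers the pipeline.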

3. Pachyderm workers

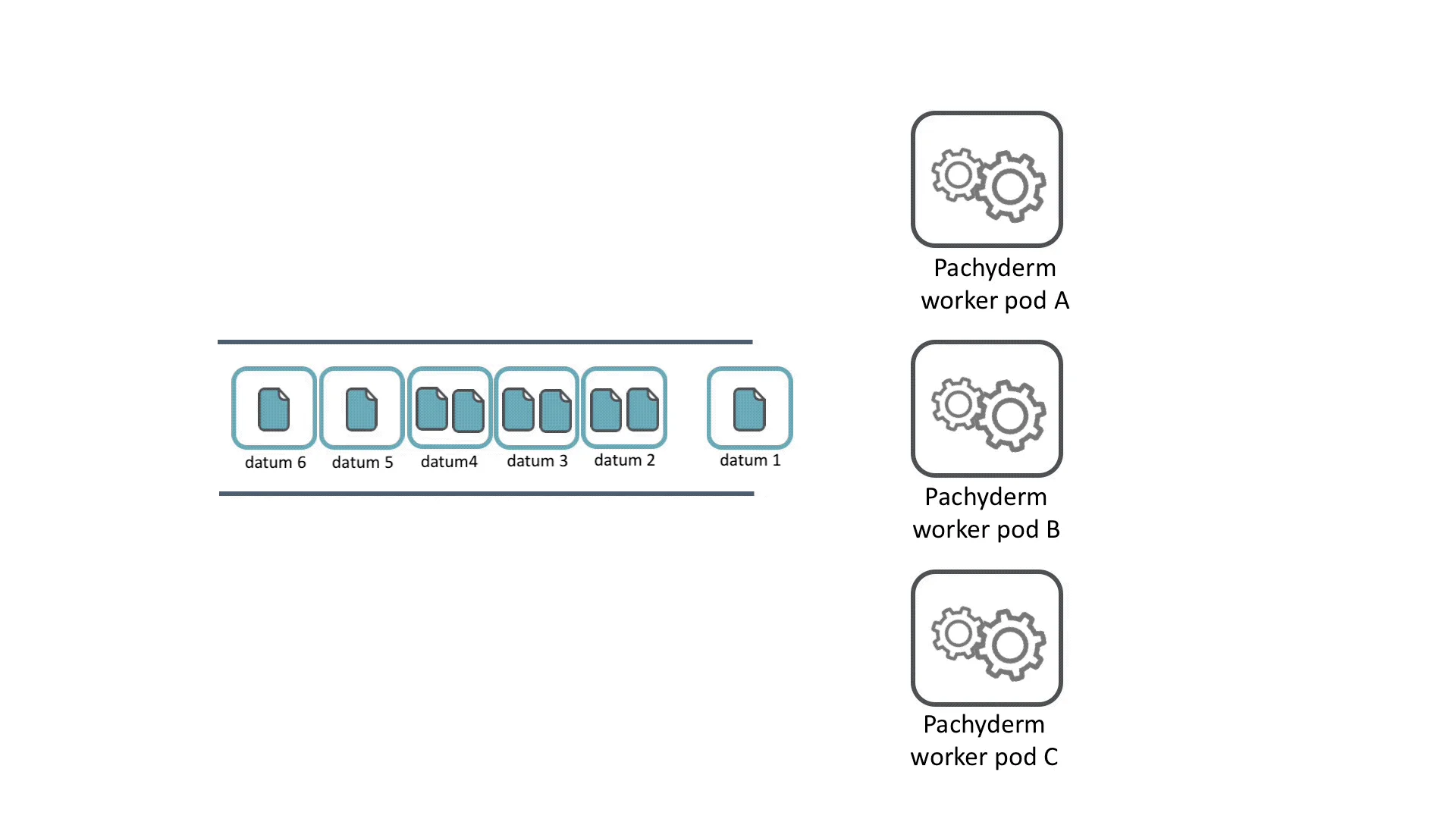

Pachyderm workers are Kubernetes pods that run the code specified in your pipelines.

When you create a pipeline, Pachyderm spins up workers that continuously run in the cluster, waiting for new data to process.

Pachyderm monitors these pods to keep them running, and if a pod fails, Kubernetes automatically replaces it.

Pachyderm worker pods in action - Source: Pachyderm.
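A thread-and-queue analogy can illustrate how workers wait for new data. It is only an analogy, under stated assumptions. Real workers are Kubernetes pods, not threads. The `worker` function, the `STOP` sentinel, and the uppercase transform are hypothetical stand-ins for your pipeline code.

```python
# Analogy only: threads stand in for worker pods, a queue for incoming data.
import queue
import threading

STOP = object()  # sentinel telling a worker to exit


def worker(name, inbox, results, lock):
    """Block until data arrives, process it, and repeat until told to stop."""
    while True:
        item = inbox.get()  # waits here until new data is available
        if item is STOP:
            break
        with lock:
            results.append((name, item.upper()))  # stand-in for user code


inbox = queue.Queue()
results = []
lock = threading.Lock()
workers = [
    threading.Thread(target=worker, args=(f"worker-{i}", inbox, results, lock))
    for i in range(2)
]
for w in workers:
    w.start()

for datum in ["a.txt", "b.txt", "c.txt"]:
    inbox.put(datum)
for _ in workers:
    inbox.put(STOP)  # one sentinel per worker so every thread exits
for w in workers:
    w.join()

print(sorted(item for _, item in results))  # ['A.TXT', 'B.TXT', 'C.TXT']
```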

4. Pachyderm daemon (PachD)

PachD houses Pachyderm’s data versioning and pipelining components. Running in Kubernetes pods, it communicates with users and system components via gRPC.

gRPC works by defining a service and the methods it exposes for remote calls (functions that one computer can call to run on another, as if they were local to the calling program), along with their parameters and return types.

PachD tracks changes, restores previous versions, and facilitates collaboration. It also schedules and orchestrates the execution of pipelines on the Kubernetes infrastructure.

Pachyderm daemon: Managing pipelining and versioning - Source: ResearchGate.

5. Input and output repositories

In Pachyderm, input repositories store the data that is fed to pipelines, and the results of each pipeline are stored in an output repository.

Every Pachyderm pipeline automatically creates an output repository with the same name as the pipeline.
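The toy model below mirrors this behavior. Committing to an input repo triggers every pipeline that reads from it, and each pipeline writes to an output repo named after itself. The `Cluster` class, the repo names `raw` and `clean`, and the cleaning transform are hypothetical. This is not Pachyderm’s API.

```python
# Toy model of data-driven pipelines; names and API are hypothetical.
from typing import Callable, Dict, List, Tuple


class Cluster:
    def __init__(self) -> None:
        self.repos: Dict[str, List[dict]] = {}  # repo name -> list of commits
        self.pipelines: Dict[str, Tuple[str, Callable[[dict], dict]]] = {}

    def create_repo(self, name: str) -> None:
        self.repos.setdefault(name, [])

    def create_pipeline(self, name: str, input_repo: str,
                        fn: Callable[[dict], dict]) -> None:
        self.pipelines[name] = (input_repo, fn)
        self.create_repo(name)  # the output repo shares the pipeline's name

    def commit(self, repo: str, files: dict) -> None:
        self.repos[repo].append(files)
        # Data-driven trigger: run every pipeline that reads from this repo.
        for name, (input_repo, fn) in self.pipelines.items():
            if input_repo == repo:
                self.commit(name, fn(files))


cluster = Cluster()
cluster.create_repo("raw")
cluster.create_pipeline(
    "clean", "raw", lambda files: {p: c.strip().lower() for p, c in files.items()}
)
cluster.commit("raw", {"names.txt": "  Alice \n"})
print(cluster.repos["clean"])  # [{'names.txt': 'alice'}]
```

Because `commit` calls itself for each output, chained pipelines (for example, one reading from `clean`) would also run in turn.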

6. Authentication and authorization mechanisms

You can authenticate users to a Pachyderm cluster through identity providers such as Auth0 or Okta, or through MockIDP for testing. Authorization capabilities become available once you enable authentication.

Pachyderm’s Role-Based Access Control (RBAC) lets you configure user roles and assign permissions for accessing data. You can configure access by resource, role, or user type.
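The next sketch shows a minimal role-based access check. The role names echo those in Pachyderm’s documentation (for example, `repoReader` and `repoWriter`). The permission sets, users, and binding layout are simplified assumptions, so this is not Pachyderm’s implementation. Access is granted only if one of the roles bound to a user on a resource includes the requested action.

```python
# Simplified RBAC sketch; permission sets and bindings are assumptions.
from typing import Dict, Set, Tuple

ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "repoReader": {"read"},
    "repoWriter": {"read", "write"},
    "repoOwner": {"read", "write", "modify_bindings"},
}

# (user, resource) -> roles granted on that resource (hypothetical users/repos)
ROLE_BINDINGS: Dict[Tuple[str, str], Set[str]] = {
    ("alice", "repo:images"): {"repoWriter"},
    ("bob", "repo:images"): {"repoReader"},
}


def is_allowed(user: str, resource: str, action: str) -> bool:
    """Return True if any role bound to `user` on `resource` permits `action`."""
    roles = ROLE_BINDINGS.get((user, resource), set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed("alice", "repo:images", "write"))  # True
print(is_allowed("bob", "repo:images", "write"))    # False
```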

Pachyderm features

Pachyderm provides the following features in relation to data lineage:

- Directed Acyclic Graph (DAG) for data lineage mapping

- Data-driven pipelines that are triggered automatically whenever data changes

- Immutable data lineage with data versioning for all data assets

- Collaboration through a Git-like structure of commits

- Support for all major cloud providers and on-premises installations

- Integration with various data stack tools, including Google BigQuery, JupyterLab, Label Studio, and Superb AI, through its RESTful API
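The DAG-based lineage mapping listed first above can be sketched with Python’s standard `graphlib` module (Python 3.9+). The pipeline and repo names are hypothetical. The sketch assumes, as described earlier, that each pipeline writes to an output repo with its own name. It computes a repo’s upstream sources and a valid execution order.

```python
# Lineage DAG sketch; pipeline and repo names are hypothetical.
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# pipeline (= output repo) -> input repos it reads from
PIPELINES: Dict[str, List[str]] = {
    "clean": ["raw_events"],
    "features": ["clean", "user_profiles"],
    "model": ["features"],
}


def upstream(repo: str) -> Set[str]:
    """Return every repo that `repo` is derived from, directly or indirectly."""
    seen: Set[str] = set()
    stack = list(PIPELINES.get(repo, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(PIPELINES.get(parent, []))
    return seen


# TopologicalSorter expects node -> predecessors, which is exactly PIPELINES.
order = list(TopologicalSorter(PIPELINES).static_order())
print(sorted(upstream("model")))  # ['clean', 'features', 'raw_events', 'user_profiles']
print(order)  # a valid order: every repo appears before the pipelines that read it
```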

Pachyderm setup

To install and configure Pachyderm locally with Docker Desktop, follow these steps:

- Install Docker Desktop and enable its built-in Kubernetes cluster

- Install the pachctl CLI

- Install and configure Helm

- Install PachD using Pachyderm’s Helm chart

- Verify the installation

- Connect pachctl to the cluster
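The helper below checks that the command-line tools used in these steps are on your PATH, then prints the install commands as a checklist. The command sequence is an assumption based on Pachyderm’s local-install documentation. Check the Helm chart flags against the docs for your Pachyderm version before running them. The `missing_tools` helper is hypothetical.

```python
# Prerequisite check for a local install; the command list is an assumption.
import shutil
from typing import List, Sequence

REQUIRED_TOOLS = ["docker", "kubectl", "helm", "pachctl"]

# Assumed sequence for a Docker Desktop install; verify flags for your version.
SETUP_COMMANDS = [
    "helm repo add pachyderm https://helm.pachyderm.com",
    "helm repo update",
    "helm install pachd pachyderm/pachyderm --set deployTarget=LOCAL",
    "kubectl get pods",  # wait until the pachd pod is Running
    "pachctl version",   # reports client (pachctl) and server (pachd) versions
]


def missing_tools(tools: Sequence[str] = REQUIRED_TOOLS) -> List[str]:
    """Return the tools that cannot be found on PATH."""
    return [t for t in tools if shutil.which(t) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Install these first:", ", ".join(missing))
    for cmd in SETUP_COMMANDS:
        print("$", cmd)
```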

Pachyderm alternatives

Besides Pachyderm, several other open-source data lineage tools can track and manage data flows across various environments, including Tokern, Egeria, OpenLineage, and Truedat.


Final thoughts

We looked at how Pachyderm supports data lineage and version control, covering its architecture, key features, and setup.

Pachyderm’s ability to automate data lineage mapping offers a comprehensive understanding of data flows within your data estate.

However, Pachyderm is a general-purpose tool and may lack some of the capabilities that an off-the-shelf alternative like Atlan provides. Deploying it on Kubernetes, including setting up your own Kubernetes cluster, can also be challenging.

Pachyderm data lineage: Related reads

- Data Lineage Explained

- How to Implement Data Lineage? - Steps, Tools & Benefits

- Automated Data Lineage: Key Benefits, Tools Evaluation Guide

- 5 Best Open Source Data Lineage Tools in 2023

- Gartner on Data Lineage

- What is Metadata Lineage & Why You Should Care About It?

- Business Lineage 101: Features, Framework and Use Cases
