What is a Data Platform? Exploring its Elements, Features & More


A data platform is a unified system designed for the efficient handling and analysis of large volumes of data. It integrates various components such as databases, data lakes, and data warehouses to store both structured and unstructured data.


The platform streamlines the process of collecting, managing, and storing data, making it accessible and usable for various applications.

Beyond storage, a data platform incorporates advanced tools for data processing and analytics, including big data processing engines and machine learning capabilities. This allows for the extraction of valuable insights from data, aiding in informed decision-making and strategic planning across diverse industries.

Essentially, a data platform is the backbone of modern data-driven initiatives, enabling organizations to fully leverage their data assets.

In this article, we will explore:

- What is a data platform and its key components?

- What is a data platform architecture?

- Different types of data platforms and their examples

- Various data platform tools and how they enhance data capabilities

- 8 Key differences between traditional and modern data platforms

- How can you create a data platform strategy?

Ready? Let’s dive in!

Table of contents #

- What is a data platform: Understanding its key components

- What is a data platform architecture?

- 12 Key features of a data platform

- Data platforms: 11 Key benefits for your organization

- 5 Different types of data platforms with examples

- Data platform tools: Enhancing data capabilities

- Traditional data platform vs. modern data platform

  - A tabular view

- Data platform vs database

- How to craft a data platform strategy?

- Bringing it all together

- Related reads

What is a data platform: Understanding its key components #

A data platform is a technology environment designed to collect, store, manage, and analyze data. It’s a place where data is brought together from multiple sources and is made available to users like data scientists, analysts, and business teams who can then extract insights from the data to inform decision-making or to create data-driven products.

Here are some key elements that define a data platform:

- Data collection and ingestion

- Data storage and management

- Data processing and transformation

- Data analysis and visualization

- Scalability and performance

- Security and compliance

- Interoperability and flexibility

Now, let us look into each of the above key elements in brief:

1. Data collection and ingestion #

A data platform should be capable of handling data from various sources, such as relational databases, NoSQL databases, log files, streaming data, and even unstructured data sources.
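To make "handling data from various sources" concrete, here is a minimal sketch that ingests a CSV export and a JSON-lines log and lands both in one uniform record shape. The inputs, the field names (`user_id`, `event`), and the `source` tag are hypothetical. A real platform would use connectors or an ingestion tool rather than in-memory strings.

```python
import csv
import io
import json

# Hypothetical raw inputs standing in for two different source systems.
CSV_EXPORT = "user_id,event\n1,signup\n2,login\n"
JSON_LINES_LOG = '{"user_id": 3, "event": "purchase"}\n{"user_id": 1, "event": "logout"}\n'


def ingest_csv(text: str, source: str) -> list[dict]:
    """Parse CSV text into records, tagging each with the system it came from."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"user_id": int(row["user_id"]), "event": row["event"], "source": source} for row in reader]


def ingest_json_lines(text: str, source: str) -> list[dict]:
    """Parse newline-delimited JSON into records, skipping blank lines."""
    records = []
    for line in text.splitlines():
        if line.strip():
            obj = json.loads(line)
            records.append({"user_id": int(obj["user_id"]), "event": obj["event"], "source": source})
    return records


# Land everything in one shape so downstream stages only deal with a single format.
records = ingest_csv(CSV_EXPORT, "crm_csv") + ingest_json_lines(JSON_LINES_LOG, "app_log")
for r in records:
    print(r)
```

Tagging each record with its `source` is a small design choice that pays off later for debugging and lineage.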

2. Data storage and management #

Data platforms are responsible for storing and managing large amounts of data in a secure and efficient manner. They should offer different storage options (like data warehouses, data lakes, or databases) suitable for different types of data and use cases.

3. Data processing and transformation #

A data platform should provide functionalities to clean, transform, and process data into a form that can be easily analyzed.
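As a rough illustration of cleaning, the sketch below trims and lower-cases emails, casts amounts to numbers, and drops unparseable, incomplete, and duplicate rows. The records and the rule "first occurrence of an id wins" are assumptions made for the example.

```python
# Hypothetical raw records with typical quality problems.
raw = [
    {"id": "1", "email": " Alice@Example.com ", "amount": "19.99"},
    {"id": "2", "email": "bob@example.com", "amount": "n/a"},      # unparseable amount
    {"id": "1", "email": "alice@example.com", "amount": "19.99"},  # duplicate id
    {"id": "3", "email": "", "amount": "5"},                       # missing email
    {"id": "4", "email": "dana@example.com ", "amount": "5"},
]


def clean(records):
    """Normalize emails, cast amounts to float, and drop invalid rows and repeated ids."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        if not email or rec["id"] in seen_ids:
            continue  # drop rows without an email, and repeated ids (first one wins)
        seen_ids.add(rec["id"])
        cleaned.append({"id": int(rec["id"]), "email": email, "amount": amount})
    return cleaned


print(clean(raw))
```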

4. Data analysis and visualization #

A robust data platform should include or integrate well with tools that allow for data analysis, such as business intelligence tools, data visualization tools, and machine learning algorithms.

5. Scalability and performance #

As data volumes and processing demands grow, a data platform should be able to scale accordingly to maintain high performance.

6. Security and compliance #

Data platforms must keep data secure and help organizations comply with data privacy regulations.

7. Interoperability and flexibility #

A data platform should be interoperable with various data tools and technologies, and flexible enough to allow for the development and integration of new functionalities.

A data platform acts as a bridge between data providers (databases, APIs, data streams, etc.) and data consumers (analysts, data scientists, business users, etc.), and that position creates a mutual dependence.

The platform provider must ensure the platform’s robustness, scalability, and security, while the developers and users rely on the platform to build, deploy, and operate their data-driven applications and services. The success of the platform provider is tied to the success of the users and developers, creating a symbiotic relationship.

What is a data platform architecture? Understanding it with a schematic representation #

A data platform architecture refers to the underlying structure and layout of a data platform, which consists of various technologies, tools, and methodologies to collect, process, store, manage, and analyze data.

A data platform architecture is generally built around the following components:

- Data ingestion

- Data storage

- Data processing

- Data management

- Data analysis and visualization

- Data science and machine learning

- Infrastructure

Let’s look at them in detail:

1. Data ingestion #

This is the process by which data is collected or imported into the data platform. It could be real-time data (streaming) or batch data. Tools like Apache Kafka, Amazon Kinesis, or Google Pub/Sub are used for real-time data ingestion, while ETL (Extract, Transform, Load) tools like Talend, Informatica, or AWS Glue are used for batch data ingestion.

2. Data storage #

This is where the data resides after it is collected. It could be in a data warehouse (like Amazon Redshift, Google BigQuery, or Snowflake), a database (like MySQL, PostgreSQL, or MongoDB), or a data lake (like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage).

3. Data processing #

This is the act of transforming and cleaning the raw data into a form that can be used for analysis. It could involve data normalization, aggregation, and other kinds of data transformation.
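Normalization and aggregation are easy to picture with a small example. The sketch below sums revenue per region (a group-by aggregation) and then min-max normalizes the totals to the [0, 1] range. The sales rows are hypothetical. In practice this logic usually runs as SQL or in an engine like Spark, not in plain Python.

```python
from collections import defaultdict

# Hypothetical processed sales rows: (region, revenue).
sales = [("north", 120.0), ("south", 80.0), ("north", 200.0), ("east", 40.0)]


def aggregate_by_region(rows):
    """Sum revenue per region (a simple group-by aggregation)."""
    totals = defaultdict(float)
    for region, revenue in rows:
        totals[region] += revenue
    return dict(totals)


def min_max_normalize(values):
    """Rescale values to [0, 1]; a constant series maps to all zeros to avoid dividing by zero."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


totals = aggregate_by_region(sales)
print(totals)  # {'north': 320.0, 'south': 80.0, 'east': 40.0}
print(dict(zip(totals, min_max_normalize(list(totals.values())))))
```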

4. Data management #

Data needs to be managed for consistency, reliability, and compliance. This involves data governance, metadata management, data lineage, and data security. Tools like Atlan can be used for data governance, metadata management, and data lineage.

5. Data analysis and visualization #

The processed data is analyzed and visualized using tools like Tableau, Looker, or Power BI, or with programming languages like Python or R for custom analysis.

6. Data science and machine learning #

The platform may include a suite of machine learning tools for predictive modeling, clustering, recommendation, etc. This could involve platforms like Databricks, Google Cloud Vertex AI (the successor to Google AI Platform), or Amazon SageMaker.

7. Infrastructure #

This could be on-premises, cloud-based (like AWS, Azure, Google Cloud), or hybrid. It defines where the actual hardware and software resources that power the platform reside.

These components are connected together in a way that allows for secure, reliable, and efficient flow and processing of data from the point of ingestion to the point of consumption (like a dashboard or report).

The architecture is also designed to be scalable to handle growing data volumes and complex workloads. The exact design and technologies used in a data platform architecture will depend on the specific needs and goals of the organization.

Data platform architecture schematic representation #

A data platform consists of several interconnected components that work together to collect, store, process, analyze, and visualize data. In this section, let us explore these components and how they support data-driven decisions.

A simplified textual schematic looks like this:

[Data Sources] --> [Data Ingestion] --> [Data Storage] --> [Data Processing] --> [Data Analysis] --> [Data Visualization]

| Stage | What it does | What it produces |
|---|---|---|
| Data ingestion | Collects data | Raw data |
| Data storage | Secures and stores data | Stored data |
| Data processing | Transforms data | Processed data |
| Data analysis | Analyzes data | Insights/patterns |
| Data visualization | Visualizes results | Reports/dashboards |

Let us understand each of the components in the above schema:

- Data sources

These are the original sources of data, which can include databases, APIs, files, streams, or even real-time data from IoT devices.

- Data ingestion

This step involves collecting or capturing data from the various data sources. It could involve processes like data extraction, data streaming, or batch processing.

- Data storage

The collected data is stored securely in databases, data lakes, or data warehouses. The choice of storage depends on the type and scale of the data, as well as the use case.

- Data processing

This step involves cleaning, transforming, and enriching the data to prepare it for analysis. This could involve data engineering tasks like ETL (Extract, Transform, Load).

- Data analysis

Here, the processed data is analyzed using various techniques and tools, such as SQL queries, data mining, or machine learning algorithms.

- Data visualization

Finally, the results of the data analysis are presented in a visual, easily digestible format. This could involve creating dashboards, charts, or graphs.

Please note that this is a simplified view, and real-world data platforms may include additional or more complex components.
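The simplified flow above can be sketched as a chain of functions, where each stage consumes the previous stage's output. The stage names mirror the schematic. The data, the parsing rule, and the text "chart" are toy placeholders, not a production design.

```python
from typing import Callable, Iterable

Stage = Callable[[object], object]


def ingest(_sources) -> list[str]:
    # Data ingestion: collect raw CSV-like lines from (hypothetical) sources.
    return ["widget,3", "gadget,5", "widget,2", "bad line"]


def store(raw: list[str]) -> list[str]:
    # Data storage: persist raw data; here we only keep an in-memory copy.
    return list(raw)


def process(stored: list[str]) -> list[tuple[str, int]]:
    # Data processing: parse lines and drop malformed ones.
    rows = []
    for line in stored:
        parts = line.split(",")
        if len(parts) == 2 and parts[1].isdigit():
            rows.append((parts[0], int(parts[1])))
    return rows


def analyze(rows: list[tuple[str, int]]) -> dict[str, int]:
    # Data analysis: total quantity per product.
    totals: dict[str, int] = {}
    for product, qty in rows:
        totals[product] = totals.get(product, 0) + qty
    return totals


def visualize(totals: dict[str, int]) -> str:
    # Data visualization: render a tiny text bar chart, one row per product.
    return "\n".join(f"{name:<8}{'#' * qty}" for name, qty in sorted(totals.items()))


def run_pipeline(stages: Iterable[Stage], sources=None):
    """Feed each stage's output into the next stage, in order."""
    data = sources
    for stage in stages:
        data = stage(data)
    return data


print(run_pipeline([ingest, store, process, analyze, visualize]))
```

Keeping stages as separate functions makes it easy to swap one out (for example, replacing the in-memory `store` with a warehouse write) without touching the others.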

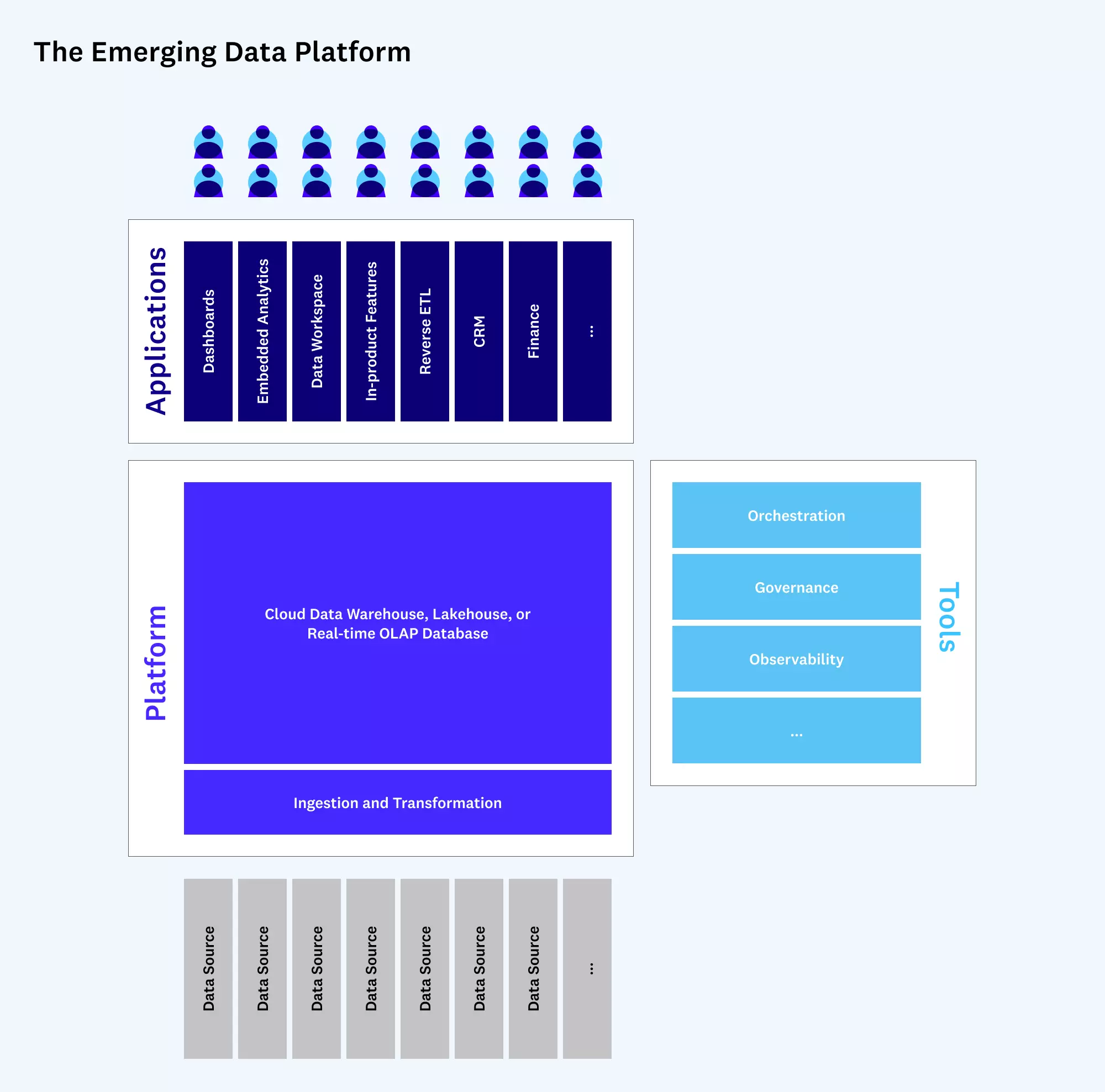

Here’s a picture that represents how data is processed in an organization:

How data is processed in an organization. Source: a16z.

12 Key features of a data platform #

A data platform, designed to manage and leverage the vast quantities of data generated in the digital age, encompasses a range of features that cater to various aspects of data handling, analysis, and utilization. Here’s an overview of the key features that define a robust data platform:

- Scalability

- Data integration and ingestion

- Real-time processing

- Security and compliance

- Data management and governance

- Advanced analytics and machine learning

- Data visualization and reporting

- Flexible storage options

- Automated workflows and orchestration

- User accessibility and collaboration

- Cloud-native and hybrid capabilities

- Customization and extensibility

Let’s look at them further:

1. Scalability #

A crucial feature, scalability allows the platform to handle increasing volumes of data efficiently. This means the platform can expand its capacity as the amount of data grows, ensuring consistent performance and data reliability.

2. Data integration and ingestion #

Data platforms are equipped to integrate data from multiple sources, including on-premises databases, cloud sources, and streaming data. Effective ingestion mechanisms ensure that data is consistently and accurately imported into the system.

3. Real-time processing #

The ability to process data in real-time is a significant feature, allowing organizations to make timely decisions based on the most current data available.

4. Security and compliance #

Strong security features are essential to protect sensitive data and ensure compliance with various data protection regulations like GDPR, HIPAA, etc. This includes encryption, access controls, and audit trails.
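Access controls and audit trails can be illustrated with a minimal role-based check that logs every decision. The roles, datasets, and user names below are hypothetical. Real platforms usually delegate this to warehouse grants or a cloud IAM service rather than application code.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping: role -> set of (action, dataset) pairs.
ROLE_PERMISSIONS = {
    "analyst": {("read", "sales")},
    "engineer": {("read", "sales"), ("write", "sales"), ("read", "raw_events")},
}

audit_log: list[dict] = []


def is_allowed(user: str, role: str, action: str, dataset: str) -> bool:
    """Check a request against the role's grants and record the decision in the audit trail."""
    allowed = (action, dataset) in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed


print(is_allowed("maya", "analyst", "read", "sales"))   # True
print(is_allowed("maya", "analyst", "write", "sales"))  # False
print(len(audit_log))                                   # 2
```

Unknown roles fall back to an empty permission set, so the default is deny.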

5. Data management and governance #

Effective data management capabilities ensure high-quality, consistent, and reliable data. This includes features for data cleansing, deduplication, and lineage tracking.

6. Advanced analytics and machine learning #

Data platforms often include tools for advanced analytics, machine learning, and AI to extract deep insights from data. These capabilities enable predictive modeling, trend analysis, and other sophisticated data analyses.

7. Data visualization and reporting #

User-friendly visualization tools are essential for interpreting complex data sets and communicating insights clearly. These tools typically include dashboards, charts, and interactive reports.

8. Flexible storage options #

A mix of storage solutions, such as data warehouses for structured data and data lakes for unstructured data, provides the flexibility to store and manage different types of data effectively.

9. Automated workflows and orchestration #

Automation features streamline data workflows, reducing the need for manual intervention and improving efficiency. This includes automated data pipelines, scheduling, and task orchestration.
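Orchestrators such as Apache Airflow model pipelines as directed acyclic graphs (DAGs) of tasks. The sketch below does not use Airflow. It uses Python's standard-library `graphlib` to run a hypothetical set of tasks in dependency order, which is the core idea behind task orchestration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
    "refresh_dashboard": {"join_orders_customers"},
}


def run_task(name: str) -> None:
    # Placeholder for real work (a SQL job, a Spark job, an API call, ...).
    print(f"running {name}")


def run_in_dependency_order(graph: dict[str, set[str]]) -> list[str]:
    """Run every task only after all of its upstream tasks; return the execution order."""
    order = list(TopologicalSorter(graph).static_order())
    for task in order:
        run_task(task)
    return order


execution_order = run_in_dependency_order(dependencies)
```

A real scheduler adds what this sketch leaves out: schedules, retries, parallel execution of independent tasks, and alerting on failure.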

10. User accessibility and collaboration #

Interfaces that are accessible to users with varying levels of technical expertise, along with features that facilitate collaboration, are important for democratizing data access and use across an organization.

11. Cloud-native and hybrid capabilities #

Many data platforms are cloud-native, offering the advantages of cloud computing like elasticity and cost-efficiency. Hybrid capabilities also allow for seamless integration between on-premises and cloud environments.

12. Customization and extensibility #

The ability to customize and extend the platform with additional tools, plugins, or APIs is important for organizations to tailor the platform to their specific needs.

These features collectively make data platforms powerful tools for organizations, enabling them to effectively harness the power of their data, gain insights, and drive informed decision-making.

Data platforms: 11 Key benefits for your organization #

The implementation of a data platform offers numerous advantages for organizations, enabling them to harness the power of data effectively and efficiently. Here are some of the key benefits:

- Improved data management

- Enhanced decision-making

- Increased efficiency

- Scalability

- Real-time processing and analytics

- Enhanced security and compliance

- Cost-effective

- Cross-departmental collaboration

- Customer insights and personalization

- Innovation and competitiveness

- Flexibility and adaptability

Let’s understand them in detail:

1. Improved data management #

Data platforms provide a centralized system for managing vast amounts of data from various sources. This centralization simplifies data governance, improves data quality, and ensures consistency, making it easier for organizations to manage and utilize their data assets.

2. Enhanced decision-making #

With integrated tools for analytics and reporting, data platforms enable organizations to derive actionable insights from their data. This leads to more informed decision-making, helping businesses to respond quickly to market changes and customer needs.

3. Increased efficiency #

Automation of data processes, from ingestion to analysis, significantly reduces manual effort and minimizes errors. This efficiency allows organizations to focus more on strategic activities rather than on managing data.

4. Scalability #

Data platforms are designed to scale with the growing data needs of an organization. This scalability ensures that businesses can handle increased data loads without a drop in performance, future-proofing their data infrastructure.

5. Real-time processing and analytics #

The ability to process and analyze data in real-time is a significant advantage, enabling organizations to react promptly to emerging trends, operational issues, or customer behaviors.

6. Enhanced security and compliance #

With built-in security features and compliance tools, data platforms help organizations protect sensitive data and meet regulatory requirements, reducing the risk of data breaches and legal penalties.

7. Cost-effective #

By optimizing data storage and processing, and reducing the need for multiple disparate tools, data platforms can be a cost-effective solution for managing an organization’s data needs.

8. Cross-departmental collaboration #

By providing a common platform for data access and analysis, these systems facilitate better collaboration across different departments, breaking down silos and fostering a more integrated approach to data.

9. Customer insights and personalization #

Data platforms enable businesses to gain deeper insights into customer behavior and preferences, leading to more effective marketing strategies and personalized customer experiences.

10. Innovation and competitiveness #

Access to advanced analytics and machine learning tools within data platforms can drive innovation, helping businesses to develop new products, services, and processes that keep them competitive in a rapidly evolving marketplace.

11. Flexibility and adaptability #

With the ability to integrate with a wide range of tools and systems, data platforms offer flexibility, allowing businesses to adapt their data strategy as their needs evolve.

In summary, data platforms are integral in enabling organizations to leverage their data more effectively, driving operational efficiency, strategic decision-making, and competitive advantage in today’s data-driven world.

5 Different types of data platforms with examples #

Data platforms can be categorized based on various factors such as the type of data they handle, the kind of processing they support, and their deployment model. Here’s a breakdown:

- Data warehouses

- Data lakes

- Cloud-based data platforms

- Hybrid data platforms

- Real-time data platforms

Now, let’s discuss each of these data platform types in more detail:

1. Data warehouses #

A data warehouse is a type of data platform primarily designed to store structured data in an organized format (often columnar in modern cloud warehouses) for fast and efficient analytical queries.

Data in a warehouse is cleaned, transformed, and structured in a specific schema (like star or snowflake) to support business intelligence and reporting. Examples include Amazon Redshift, Google BigQuery, and Snowflake.
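Here is a small star schema: one fact table of sales surrounded by product and date dimensions, plus a typical BI query that joins and aggregates them. The table names and values are hypothetical. The example uses Python's built-in `sqlite3` only to show the shape of the schema; SQLite is an embedded row-store database, not a data warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Dimension tables describe the "what" and "when"; the fact table holds measurable events.
cur.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    revenue REAL
);
INSERT INTO dim_product VALUES (1, 'hardware'), (2, 'software');
INSERT INTO dim_date VALUES (202401, 2024, 1), (202402, 2024, 2);
INSERT INTO fact_sales VALUES (1, 202401, 100.0), (2, 202401, 250.0), (1, 202402, 75.0);
""")

# A typical BI query: join the fact table to its dimensions, then aggregate.
rows = cur.execute("""
    SELECT d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_product p ON p.product_id = f.product_id
    JOIN dim_date d ON d.date_id = f.date_id
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
""").fetchall()

for row in rows:
    print(row)
conn.close()
```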

2. Data lakes #

A data lake is another type of data platform, designed to store vast amounts of raw data, whether it’s structured, semi-structured, or unstructured. Data lakes are built on technologies that do not require a predefined schema, so data can be stored in its native format.

This makes data lakes suitable for big data and machine learning use cases. Examples of data lake platforms include AWS Lake Formation and Azure Data Lake Storage.
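The "no predefined schema" idea is usually called schema-on-read. Raw records are stored as they arrive, and a schema is applied only when someone reads them for a particular use case. In this sketch a list of JSON strings stands in for files in object storage, and the schema (`user`, `amount`) is hypothetical.

```python
import json

# Raw events land in the "lake" exactly as produced; no schema is enforced on write.
raw_zone = [
    '{"user": "a", "amount": "12.5", "ts": "2024-01-01"}',
    '{"user": "b", "amount": 7}',                     # missing ts, numeric amount
    '{"user": "c", "device": "ios", "amount": "3"}',  # extra field
]

# The schema is applied only at read time, for this particular use case.
READ_SCHEMA = {"user": str, "amount": float}


def read_with_schema(lines, schema):
    """Project each raw record onto the schema: cast types, fill missing fields with None, ignore extras."""
    for line in lines:
        record = json.loads(line)
        yield {field: cast(record[field]) if field in record else None
               for field, cast in schema.items()}


for row in read_with_schema(raw_zone, READ_SCHEMA):
    print(row)
```

A different consumer could read the same raw records with a different schema (say, one that keeps `device`) without rewriting the stored data.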

3. Cloud-based data platforms #

These are data platforms that are hosted on the cloud, providing scalability, flexibility, and cost-efficiency. They usually provide a range of integrated services for data ingestion, storage, processing, and analysis. Examples include AWS, Google Cloud Platform, and Microsoft Azure.

4. Hybrid data platforms #

Hybrid data platforms combine on-premises and cloud environments. They allow organizations to leverage the advantages of the cloud while keeping certain data or applications on-premises due to regulatory requirements or other considerations. Cloudera offers solutions that can be deployed in a hybrid manner.

5. Real-time data platforms #

These data platforms are designed to handle real-time data processing and analytics. They enable businesses to react to information as it comes in, which is crucial for use cases like fraud detection, real-time recommendations, and operational analytics. Examples include Apache Kafka and AWS Kinesis for data streaming, and Apache Storm and Spark Streaming for real-time data processing.
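Stream processors such as Apache Flink and Spark Streaming provide windowed aggregations. The plain-Python sketch below shows the idea behind a tumbling (fixed, non-overlapping) window applied to a fraud-style rule: flag any card with at least three transactions in one 60-second window. The events, window size, and threshold are hypothetical. The sketch also assumes events arrive in time order, so it does not handle late data the way real engines do.

```python
from collections import Counter

# Hypothetical stream of (epoch_seconds, card_id) transactions, arriving in time order.
events = [(0, "card_1"), (10, "card_1"), (20, "card_1"), (30, "card_2"),
          (65, "card_1"), (70, "card_2"), (75, "card_2"), (80, "card_2")]

WINDOW_SECONDS = 60
THRESHOLD = 3  # flag a card with at least this many transactions in one window


def tumbling_window_alerts(stream, window, threshold):
    """Count events per key in fixed windows and emit (window_id, key) alerts as each window closes."""
    current_window, counts = None, Counter()
    for ts, key in stream:
        window_id = ts // window
        if current_window is not None and window_id != current_window:
            yield from ((current_window, k) for k, c in counts.items() if c >= threshold)
            counts = Counter()
        current_window = window_id
        counts[key] += 1
    if current_window is not None:  # flush the last window once the stream ends
        yield from ((current_window, k) for k, c in counts.items() if c >= threshold)


for window_id, card in tumbling_window_alerts(events, WINDOW_SECONDS, THRESHOLD):
    print(f"window {window_id}: possible fraud on {card}")
```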

These categories are not mutually exclusive. For instance, a data platform could be both cloud-based and support real-time processing. The choice of a data platform depends on a variety of factors, including the type of data, the use case, cost, and the skill set of the team.

Data platform tools: Enhancing data capabilities #

In this section, we will explore a range of tools that data teams can consider deploying on top of their data platforms, empowering them to maximize the value of their data assets. These tools provide additional functionalities and address specific needs related to data discovery, governance, quality, security, privacy, visualization, collaboration, and automation.

Let’s understand the different categories that they belong to:

- Data discovery tools

- Data governance tools

- Data quality tools

- Data lineage tools

- Data security tools

- Data privacy tools

- Data visualization tools

- Data storytelling tools

- Data collaboration tools

- Data automation tools

Let us look into each of the above categories of tools in brief:

1. Data discovery tools #

These tools help users find and understand the data that is available to them. They can help to identify data that is relevant to a particular project, and to understand the quality and lineage of that data.
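At its simplest, data discovery means searching metadata rather than the data itself. This sketch models a few hypothetical catalog entries (name, description, owner, tags) and runs a case-insensitive keyword search across them. Real discovery tools add ranking, usage signals, and lineage on top of this idea.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """A hypothetical catalog entry: a dataset name plus descriptive metadata."""
    name: str
    description: str
    owner: str
    tags: set[str] = field(default_factory=set)


CATALOG = [
    DatasetEntry("sales_daily", "Daily revenue by region", "finance", {"revenue", "certified"}),
    DatasetEntry("web_events_raw", "Raw clickstream events", "platform", {"clickstream"}),
    DatasetEntry("customer_churn", "Monthly churn model features", "data-science", {"ml", "revenue"}),
]


def search(catalog, keyword: str):
    """Return entries whose name, description, or tags mention the keyword (case-insensitive)."""
    kw = keyword.lower()
    return [
        e for e in catalog
        if kw in e.name.lower() or kw in e.description.lower() or any(kw in t.lower() for t in e.tags)
    ]


for entry in search(CATALOG, "revenue"):
    print(entry.name, "owned by", entry.owner)
```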

2. Data governance tools #

Data governance tools help to manage the data lifecycle, from creation to deletion. They can help to ensure that data is accurate, consistent, and secure.

3. Data quality tools #

They help to identify and fix data quality issues. They can also help to ensure that data is accurate, complete, and consistent.
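Dedicated data quality tools typically let teams declare checks such as "not null" or "unique" and report the rows that fail. The sketch below hand-rolls three such rules over hypothetical order rows. Rule names and data are illustrative, not any specific tool's API.

```python
from collections import Counter

# Hypothetical rows from an orders table.
orders = [
    {"order_id": 1, "amount": 25.0, "country": "US"},
    {"order_id": 2, "amount": -5.0, "country": "DE"},
    {"order_id": 3, "amount": 40.0, "country": None},
    {"order_id": 3, "amount": 10.0, "country": "FR"},
]


def negative_amounts(rows):
    return [r["order_id"] for r in rows if r["amount"] < 0]


def missing_country(rows):
    return [r["order_id"] for r in rows if r["country"] is None]


def duplicate_ids(rows):
    counts = Counter(r["order_id"] for r in rows)
    return sorted(i for i, c in counts.items() if c > 1)


# Each rule maps a readable name to a check that returns the offending order_ids.
RULES = {
    "amount_non_negative": negative_amounts,
    "country_not_null": missing_country,
    "order_id_unique": duplicate_ids,
}


def run_quality_checks(rows, rules):
    """Apply every rule and return {rule_name: failing_ids} for the rules that found problems."""
    report = {}
    for name, check in rules.items():
        failures = check(rows)
        if failures:
            report[name] = failures
    return report


print(run_quality_checks(orders, RULES))
```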

4. Data lineage tools #

Data lineage tools track the flow of data through an organization. They can help to identify data sources, data transformations, and data destinations.
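Lineage is essentially a graph of "derived from" edges. The sketch below stores hypothetical lineage for a dashboard and answers two common questions: which sources feed an asset (upstream), and which assets would be affected if a source changed (downstream, i.e. impact analysis).

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets it is derived from.
UPSTREAM = {
    "exec_dashboard": ["revenue_mart"],
    "revenue_mart": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "fx_rates": [],
    "orders_raw": [],
}


def all_upstream(asset: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk that collects every asset feeding into `asset`."""
    seen: set[str] = set()
    queue = deque(graph.get(asset, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(graph.get(node, []))
    return seen


def all_downstream(asset: str, graph: dict[str, list[str]]) -> set[str]:
    """Invert the edges to answer impact analysis: what is affected if `asset` changes?"""
    inverted: dict[str, list[str]] = {}
    for child, parents in graph.items():
        for parent in parents:
            inverted.setdefault(parent, []).append(child)
    return all_upstream(asset, inverted)


print(sorted(all_upstream("exec_dashboard", UPSTREAM)))
print(sorted(all_downstream("orders_raw", UPSTREAM)))
```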

5. Data security tools #

These tools help to protect data from unauthorized access, use, disclosure, disruption, modification, or destruction.

6. Data privacy tools #

Data privacy tools help organizations comply with data privacy regulations by identifying and protecting personal data. They can also give individuals more control over how their data is used.

7. Data visualization tools #

These tools help to make data easier to understand and interpret. They can help to create charts, graphs, and other visuals that can be used to communicate data insights.

8. Data storytelling tools #

These tools help to tell stories with data. They can help to create engaging and informative content that can be used to communicate data insights to a wider audience.

9. Data collaboration tools #

These tools help to facilitate collaboration between data teams. They can help to share data, insights, and ideas, and to work together on data projects.

10. Data automation tools #

These tools help to automate data tasks. They can help to save time and effort, and to improve the accuracy and consistency of data processes.

So far, we have discussed a few tool categories that you could consider deploying on top of your data platform. However, the specific tools that you need will depend on the size and complexity of your organization, the type of data you are working with, and your data needs.

Traditional data platform vs. modern data platform: Their evolution and what makes them different #

Traditional data platforms, characterized by structured and centralized approaches, relied on relational databases and data warehouses. However, as data volumes, velocity, and variety increased, these platforms faced limitations in handling big data, real-time processing, and unstructured data.

That led to the emergence of modern data platforms that offer enhanced capabilities to meet the demands of today’s data-rich environments. In this section, we will explore the differences between traditional and modern data platforms:

Traditional data platforms #

In the past, traditional data platforms typically followed a structured and centralized approach. They primarily consisted of relational databases and data warehouses. These systems were designed to handle structured data and were often on-premises, meaning they were physically located within the organization.

- Relational databases

These databases use a schema to define data relationships, and data must be structured to fit this schema. Examples include MySQL, Oracle Database, and SQL Server.

- Data warehouses

Data warehouses are used for reporting and data analysis. They are optimized to process large amounts of data and support complex queries.

However, traditional data platforms had limitations. They struggled with large volumes of data (what we now call “big data”), they weren’t built to handle real-time data processing, and they didn’t support unstructured data well, which makes up a large portion of modern data (e.g., text, images, videos).

Modern data platforms #

Modern data platforms have evolved to overcome the limitations of traditional data platforms and support the diverse needs of today’s data-rich environments. They have the ability to handle enormous volumes of data, process data in real-time, and manage both structured and unstructured data. Furthermore, they often leverage cloud technologies for scalability, flexibility, and cost-effectiveness.

- Big data technologies

Tools like Hadoop and Apache Spark allow for distributed processing of large data sets across clusters of computers.

- Data lakes

Data lakes store data in its raw format, supporting structured, semi-structured, and unstructured data. They provide flexibility as the need for pre-defined schemas is eliminated or reduced.

- NoSQL databases

NoSQL databases are designed to handle unstructured data, scale horizontally, and support real-time processing. They can store and retrieve data that is modeled in ways other than the tabular relations used in relational databases.

- Real-time processing

Tools like Apache Kafka and Apache Flink allow for real-time data ingestion and processing.

- Cloud-based services

Modern data platforms often leverage cloud-based services for storage, processing, and analysis. Examples include Snowflake, Google BigQuery, Amazon Redshift, and Azure Data Lake Storage.

- Machine learning and AI

Modern platforms often incorporate machine learning and AI capabilities, making it easier to build and deploy predictive models.

- Data governance and security

With the increasing importance of data privacy and protection, modern platforms incorporate advanced data governance and security features.

While modern data platforms provide many advantages, they also introduce complexity due to the variety of tools and technologies involved. Therefore, organizations need to carefully consider their specific needs and capabilities when designing their data platforms.

Traditional data platform vs. modern data platform: A tabular view #

Here is a comparison between traditional and modern data platforms in a tabular format:

| Feature | Traditional data platform | Modern data platform |
|---|---|---|
| Data type | Primarily structured data | Structured, semi-structured, and unstructured data |
| Data volume | Limited; struggles with large volumes ("big data") | Handles very large volumes of data ("big data") |
| Data processing | Batch processing; struggles with real-time processing | Both batch and real-time processing |
| Data storage | Relational databases and data warehouses | Mix of relational databases, NoSQL databases, data lakes, and data warehouses |
| Infrastructure | Often on-premises | Often cloud-based, taking advantage of scalability and flexibility |
| Data analytics | Supports traditional analytics and BI tools | Supports a variety of analytics tools, including advanced analytics and AI/ML capabilities |
| Flexibility | Data must fit into predefined schemas | Flexible schema (schema-on-read), particularly in data lakes and NoSQL databases |
| Data governance | Basic data governance capabilities | Advanced data governance and security features, often built-in or integrated |

The table above offers a general comparison. The specific capabilities and characteristics of any given platform depend on the tools and technologies it uses.

Data platform vs database: Uncovering their differences #

While a database and a data platform both deal with data, they serve different purposes and operate on different levels of complexity.

- Database: A database is essentially a structured set of data. It’s a place to store, manage, and retrieve data efficiently.

  - A database can hold tables of data, relationships between them, views, indexes, and stored procedures.

  - Examples include SQL databases like MySQL, PostgreSQL, or Oracle, and NoSQL databases like MongoDB or Cassandra.

  - Databases usually offer capabilities like transactions, which allow multiple operations to be treated as a single unit of work.

- Data platform: As defined above, a data platform is an architecture or environment that houses not only databases but also the other components needed to store, process, and analyze large volumes of structured, semi-structured, and unstructured data.

  - A data platform may encompass data ingestion tools, data storage (like databases, data warehouses, and data lakes), data processing engines, analytics and business intelligence tools, and often machine learning capabilities.

  - It also includes data governance and security mechanisms.

  - Examples include big data platforms like Amazon Web Services, Microsoft Azure, and Google Cloud Platform.

In simple terms, a database can be considered as one component or a building block of a data platform. A data platform not only stores data (in databases, data lakes, or data warehouses) but also provides various capabilities to process, analyze, and gain insights from this data.
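The database description above mentions transactions. Here is a minimal sketch using Python's built-in `sqlite3` module and a hypothetical `accounts` table: a transfer updates two rows as one unit of work. When a `CHECK` constraint rejects an overdraft, the whole transaction rolls back, so neither balance changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 20)])
conn.commit()


def transfer(conn, src: str, dst: str, amount: int) -> bool:
    """Move funds between accounts as one unit of work: both updates apply, or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes the block
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # the CHECK constraint rejected a negative balance; the credit was rolled back too


print(transfer(conn, "alice", "bob", 30))   # True
print(transfer(conn, "bob", "alice", 500))  # False
print(dict(conn.execute("SELECT name, balance FROM accounts")))  # {'alice': 70, 'bob': 50}
```

Note that in the failed transfer the credit to `alice` ran first and was still undone. That all-or-nothing behavior is what "a single unit of work" means.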

How to craft a data platform strategy in 8 steps #

Now, let us look at a high-level strategy for developing a data platform. Here are the key points:

- Understand business needs and goals

- Define data architecture

- Implement robust data governance

- Ensure data security and privacy

- Prioritize scalability and performance

- Facilitate real-time and batch processing

- Promote self-service capabilities

- Adopt a culture of continuous learning and improvement

Now, let’s discuss these points in more detail:

1. Understand business needs and goals #

A data platform should be designed to meet specific business needs and goals. Understand what questions your business needs to answer, the kind of insights it’s seeking, and the decisions it wants to support with data. This will guide the selection of technology and design of the data platform.

2. Define data architecture #

Design the structure of the data platform considering factors like data sources, data types (structured, semi-structured, unstructured), the volume of data, data flows, processing needs, and storage requirements. The architecture should balance cost, complexity, and capability.

3. Implement robust data governance #

Good data governance ensures data is consistent, reliable, and of high quality. This involves establishing clear policies and processes for data management, including metadata management, data lineage, and data quality management.

4. Ensure data security and privacy #

With the growing importance of data protection laws, it is critical to embed security and privacy measures in the data platform. This includes access controls, encryption, audit logs, and measures to comply with regulations like GDPR and CCPA.
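Two common privacy measures are pseudonymizing identifiers and masking personal data in free text. The sketch below uses a keyed hash (HMAC-SHA256) so that the same email always maps to the same token, which keeps joins working. Without the key, a token cannot be linked back to the email. It also redacts email addresses from a notes field. The key, the regex, and the record are assumptions for illustration. Pseudonymized data can still count as personal data under GDPR, so this supports compliance rather than guaranteeing it.

```python
import hashlib
import hmac
import re

# Assumption: in practice this key comes from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

# A deliberately simple email pattern; real PII detection is considerably more thorough.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")


def pseudonymize(value: str) -> str:
    """Replace an identifier with a deterministic keyed hash (truncated for readability)."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_emails(text: str) -> str:
    """Redact email addresses that appear in free-text fields."""
    return EMAIL_RE.sub("[EMAIL]", text)


record = {"customer_email": "jane@example.com", "note": "Call back at jane@example.com re: refund"}
safe = {
    "customer_id": pseudonymize(record["customer_email"]),
    "note": mask_emails(record["note"]),
}
print(safe)
```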

5. Prioritize scalability and performance #

The data platform should be designed to handle growth in data volume and complexity. Cloud-based solutions often offer good scalability. Performance, in terms of data processing speed and query response times, should also be a key consideration.

6. Facilitate real-time and batch processing #

The data platform should support both real-time (streaming) and batch processing of data. Real-time processing is important for operational analytics, while batch processing is often used for large-scale, complex analytical tasks.

7. Promote self-service capabilities #

Empower business users by providing self-service capabilities. This includes self-service data preparation, analytics, and reporting. This requires user-friendly interfaces and tools within the data platform.

8. Adopt a culture of continuous learning and improvement #

Technology evolves rapidly, so it’s important to stay updated and continuously improve the data platform. This might involve adopting new tools, revising data models, or refining processes. Feedback from users of the platform should be actively sought and used to drive improvements.

This strategy provides a broad roadmap for developing a data platform. However, the exact approach would vary depending on the specifics of the organization, including its size, industry, regulatory environment, and existing IT landscape.

Bringing it all together #

In summary, data platforms are central to managing and deriving value from data in today’s data-rich environments. They provide the infrastructure and tools necessary for handling, processing, and analyzing data.

They have evolved to meet the increasing demands of modern data workloads. In this blog, we delved into the world of data platforms, exploring their key components, capabilities, and evolution.

What is a data platform? Related reads #

- What is a modern data platform: Components, Capabilities, and Tools

- Modern data teams: Roles, structure and how do you build one

- Modern data catalogs: 5 essential features and evaluation guide

- What is a data lake? Definition, architecture, and solutions

- What is Data Governance? Its Importance, Principles & How to Get Started?

- What is Metadata? - Examples, Benefits, and Use Cases

- What Is a Data Catalog? & Why Do You Need One in 2023?

- Is Atlan compatible with data quality tools?
