6 Steps to Set Up OpenMetadata: A Hands-On Guide

How to set up OpenMetadata? #

To simplify setting up OpenMetadata, we’ve broken the process down into the following six steps:

Step 1: Taking stock of requirements to install OpenMetadata

Step 2: Understanding OpenMetadata architecture

Step 3: Downloading the Docker Compose YAML file

Step 4: Running the containers using Docker Compose

Step 5: Verifying if all the relevant services are operational

Step 6: Loading sample data by running Airflow DAGs

Once you’ve loaded data into OpenMetadata, you can explore its features. This article walks through one example: the data lineage of dimension tables in a sample Shopify data model.

Table of contents #

- How to set up OpenMetadata?

- Prerequisites

- Understand OpenMetadata architecture

- Download the Docker Compose YAML file

- Run the containers using Docker Compose

- Verify if all the relevant services are operational

- Load sample data by running Airflow DAGs

- Explore OpenMetadata

- Summary

- Related reads

Step 1. Prerequisites #

Although you can install OpenMetadata in several ways, for simplicity’s sake this article uses the Docker method, which requires:

- Docker Engine (>= v20.10.0): you can verify your Docker Engine version with `docker --version`

- Docker Compose (>= v2.1.1): you can verify your Docker Compose version with `docker compose version`

This tutorial was run on macOS, but you can run Docker Engine on any supported OS, and the installation process is the same.
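If you’d like to script this check, here is a minimal bash sketch that compares the reported versions against the minimums listed above. It assumes bash and a `sort` that supports `-V` (version sort, available in GNU coreutils; check your platform’s `sort`). The helper names `version_ge`, `extract_version`, `check_min_version`, and `check_docker_prereqs` are hypothetical, not part of Docker or OpenMetadata.

```shell
#!/usr/bin/env bash
# Sketch: check Docker prerequisites for the OpenMetadata Docker setup.
# Assumes bash and a `sort` supporting -V; all function names are hypothetical.

# Succeeds if version $1 >= version $2, using version-aware sorting.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Prints the first X.Y.Z number in a string such as "Docker version 24.0.6, build ed223bc".
extract_version() {
  printf '%s\n' "$1" | grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

# check_min_version LABEL MINIMUM RAW_VERSION_OUTPUT
check_min_version() {
  local label=$1 minimum=$2 actual
  actual=$(extract_version "$3")
  if [ -z "$actual" ]; then
    echo "$label: could not parse a version from '$3'"
    return 1
  fi
  if version_ge "$actual" "$minimum"; then
    echo "$label $actual OK (>= $minimum)"
  else
    echo "$label $actual is older than the required $minimum"
    return 1
  fi
}

check_docker_prereqs() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker: not found on PATH"
    return 1
  fi
  local status=0
  # Run both checks even if the first fails, then report the overall result.
  check_min_version "Docker Engine" 20.10.0 "$(docker --version)" || status=1
  check_min_version "Docker Compose" 2.1.1 "$(docker compose version 2>/dev/null)" || status=1
  return "$status"
}

# Run the checks only when this file is executed directly, not when sourced.
if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  check_docker_prereqs
fi
```

When executed directly, the script runs both checks; if you source it instead, call `check_docker_prereqs` yourself.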

Step 2. Understand OpenMetadata architecture #

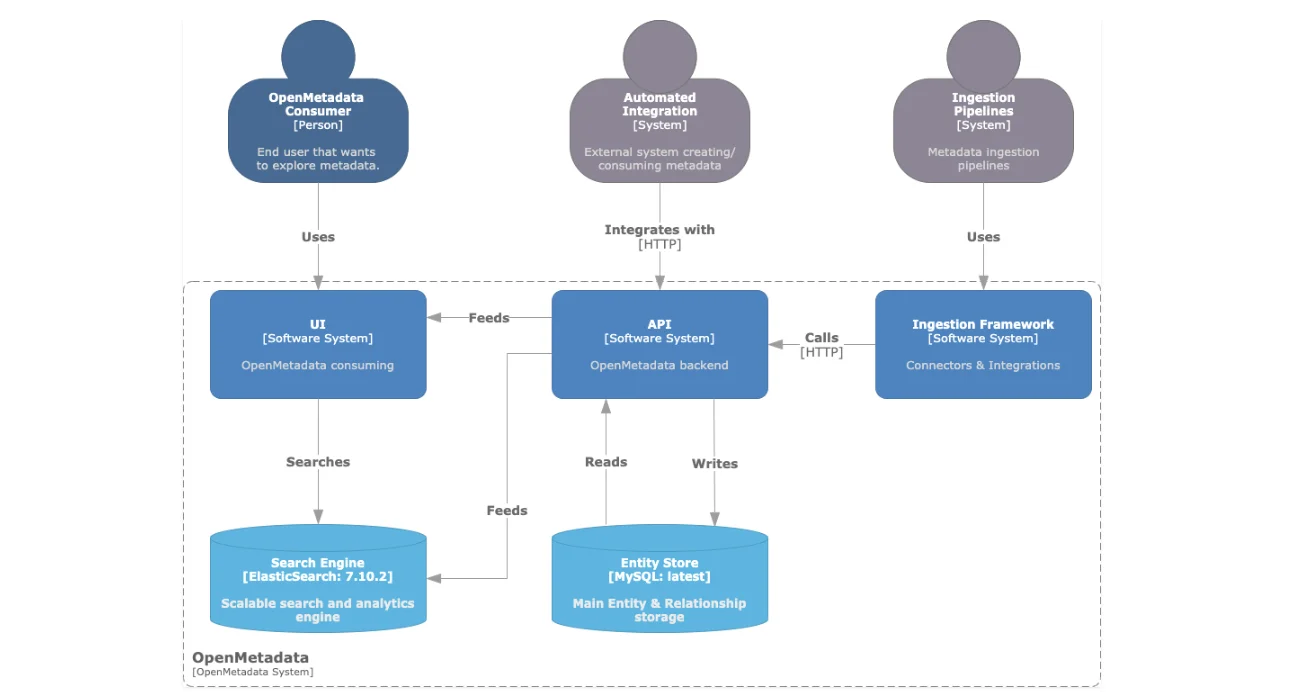

OpenMetadata is driven by a Dropwizard-powered REST API that serves as the backbone of all internal and external communication with systems like metadata sources, the ingestion framework, the UI, the backend database, and the search engine.

The OpenMetadata UI is powered by this API, which in turn relies on a MySQL database that stores all the metadata and an Elasticsearch instance that makes the metadata across the business searchable and discoverable.

OpenMetadata's architecture - Image from OpenMetadata documentation.

OpenMetadata only supports pull-based ingestion, but it supports both push- and pull-based metadata consumption. Metadata ingestion is handled by a source-agnostic ingestion framework written in Python. To learn more, see the OpenMetadata blog, where the engineering team discusses how they built the ingestion framework.

An open-source Apache Airflow container orchestrates metadata ingestion, but you can configure OpenMetadata to use a cloud-managed Airflow service instead, such as AWS MWAA or Google Cloud Composer.
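Because the UI, the ingestion framework, and other clients all go through this REST API, you can also talk to it directly once the stack from the following steps is running. The sketch below asks the server for its version. It assumes the server is reachable at `localhost:8585` (the default port used later in this guide) and that your release exposes an unauthenticated `GET /api/v1/system/version` endpoint returning JSON with a `version` field; treat both as assumptions to check against the API docs for your version. `om_server_version` is a hypothetical helper, and the `sed` parsing is deliberately naive (`jq` is a better choice for real work).

```shell
#!/usr/bin/env bash
# Sketch: query the OpenMetadata REST API directly.
# Assumptions: the server runs at localhost:8585 and exposes an unauthenticated
# GET /api/v1/system/version endpoint that returns JSON with a "version" field.
OM_API="${OM_API:-http://localhost:8585/api}"

# Prints the "version" string from the server's JSON response (hypothetical helper).
om_server_version() {
  local body
  body=$(curl --silent --show-error --fail "$OM_API/v1/system/version") || return 1
  # Naive extraction; a JSON tool such as jq is more robust.
  printf '%s\n' "$body" | sed -nE 's/.*"version"[[:space:]]*:[[:space:]]*"([^"]*)".*/\1/p'
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  om_server_version || echo "OpenMetadata API not reachable at $OM_API"
fi
```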

Step 3. Download the Docker Compose YAML file #

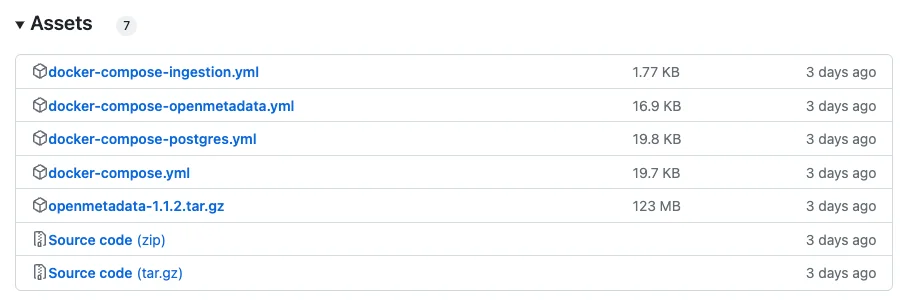

Now, download the Docker Compose file from the OpenMetadata GitHub repository using the following command:

wget https://github.com/open-metadata/OpenMetadata/releases/download/1.1.2-release/docker-compose.yml

You can locate the Docker Compose file in the project assets listed on the GitHub Releases page, as shown in the image below:

OpenMetadata releases - Image from GitHub repository.
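macOS doesn’t ship `wget` by default, so here is an equivalent sketch using `curl`. It builds the release-asset URL from a version string (defaulting to 1.1.2, the release used in this guide), downloads the file, and asks Docker Compose to parse and validate it with `docker compose config --quiet` without starting anything. The helper names `om_compose_url` and `download_compose_file` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: download the OpenMetadata Docker Compose file with curl, then validate it.
# Helper names are hypothetical; the URL pattern mirrors the wget command above.

# om_compose_url VERSION -> release-asset URL for that version's docker-compose.yml
om_compose_url() {
  printf 'https://github.com/open-metadata/OpenMetadata/releases/download/%s-release/docker-compose.yml\n' "$1"
}

# download_compose_file [VERSION]  (defaults to 1.1.2, the release used in this guide)
download_compose_file() {
  local version=${1:-1.1.2} url
  url=$(om_compose_url "$version")
  # --location follows GitHub's redirect to its asset storage;
  # --fail returns an error on HTTP failures instead of reporting success.
  curl --fail --location --silent --show-error --output docker-compose.yml "$url" || return 1
  # Parse and validate the Compose file without starting any containers.
  docker compose -f docker-compose.yml config --quiet || return 1
  echo "docker-compose.yml is valid"
}
```

Run `download_compose_file` for 1.1.2, or pass another release version as the first argument, assuming later releases keep the same tag pattern and asset name.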

Step 4. Run the containers using Docker Compose #

Spin up all the containers defined in the Docker Compose file using the command below:

docker compose -f docker-compose.yml up --detach

This can take anywhere from a few seconds to a couple of minutes. Here’s what you’ll see in your terminal while Docker Compose does its magic:

Tracking the installation process from the CLI - Image by Atlan.
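Because startup time varies, you may prefer to poll instead of guessing when the stack is ready. This sketch retries a URL with `curl` until it responds successfully or a timeout expires. `wait_for_url` is a hypothetical helper, and pointing it at `http://localhost:8585` assumes the default OpenMetadata port used later in this guide.

```shell
#!/usr/bin/env bash
# Sketch: poll a URL until it responds successfully or a timeout expires.

# wait_for_url URL [TIMEOUT_SECONDS] [INTERVAL_SECONDS]  (hypothetical helper)
wait_for_url() {
  local url=$1 timeout=${2:-300} interval=${3:-5} waited=0
  # curl --fail returns non-zero for connection errors and HTTP status >= 400.
  until curl --silent --fail --output /dev/null "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out after ${waited}s waiting for $url"
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "$url is up"
}
```

For example, `wait_for_url http://localhost:8585 300 5` checks every 5 seconds for up to about 5 minutes.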

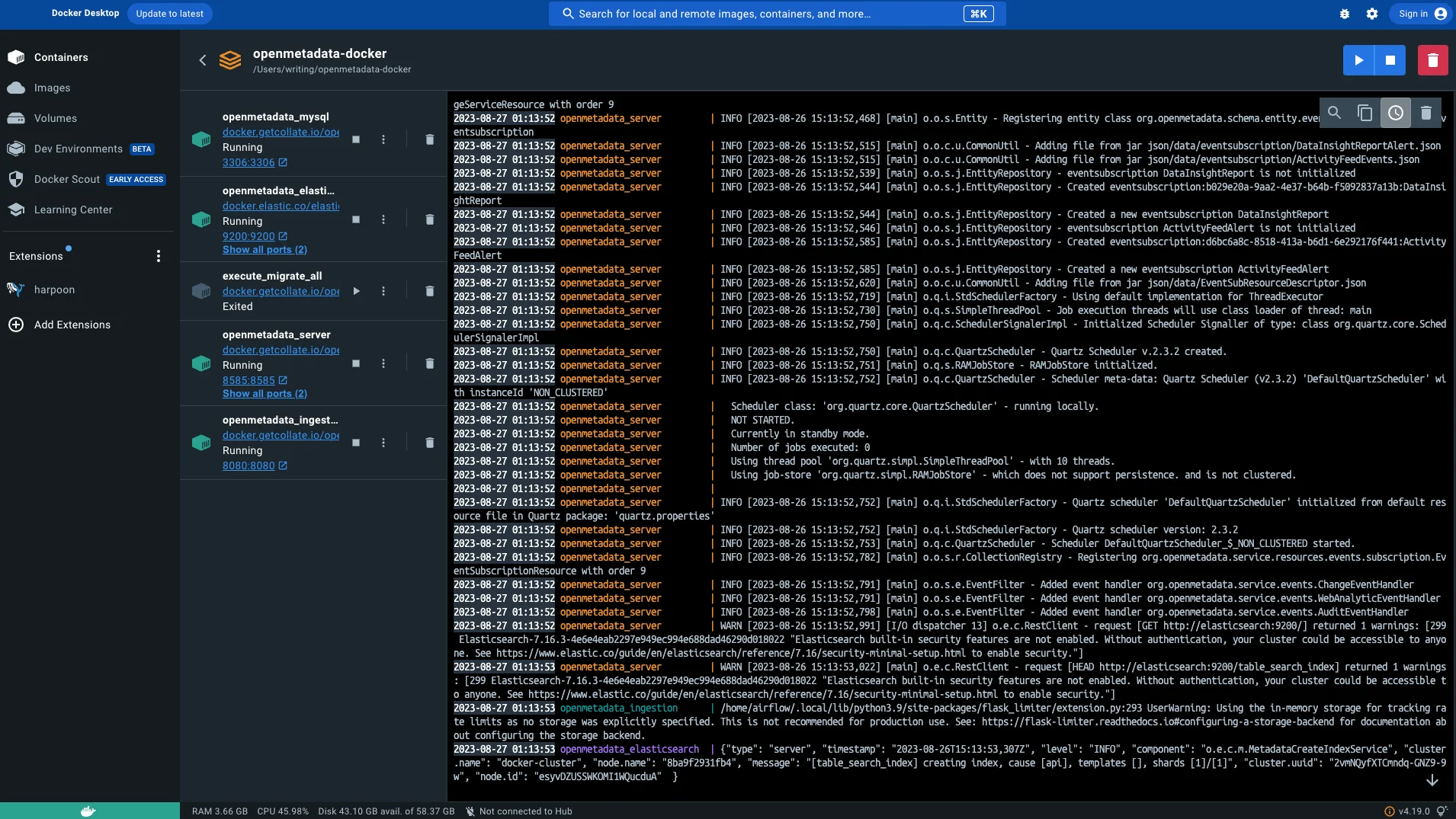

If you want to see detailed logs of what’s happening during the installation, you can go to the Docker Desktop application, as shown in the image below:

Docker Desktop application during installation - Image by Atlan.
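If you’d rather stay in the terminal, `docker compose logs` streams the same container output. The sketch below wraps two common invocations: following recent logs from every service, and a hypothetical `error_lines` filter that keeps only lines mentioning errors or exceptions. It assumes you run it from the directory containing `docker-compose.yml`.

```shell
#!/usr/bin/env bash
# Sketch: inspect container logs from the CLI instead of Docker Desktop.
# Assumes it runs from the directory that contains docker-compose.yml.

# Stream the last 100 lines from every service, then keep following (Ctrl+C to stop).
follow_logs() {
  docker compose -f docker-compose.yml logs --follow --tail 100
}

# Hypothetical filter: keep only stdin lines that mention errors or exceptions.
error_lines() {
  # grep exits with 1 when nothing matches; treat that as "no errors", not a failure.
  grep -iE 'error|exception' || true
}
```

For example: `docker compose -f docker-compose.yml logs --no-color | error_lines`.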

Step 5. Verify if all the relevant services are operational #

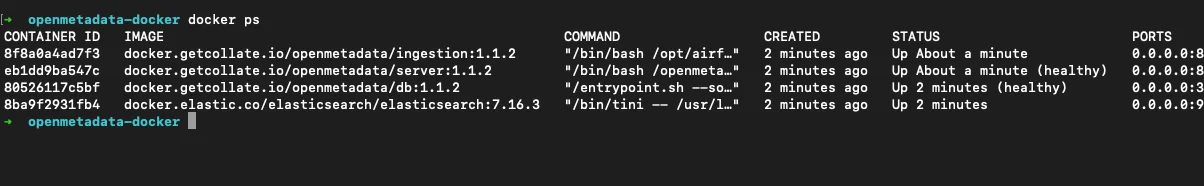

Once the installation finishes, run `docker ps` to check the status of the containers, as shown in the image below:

Verifying if all OpenMetadata services are running fine - Image by Atlan.
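To run the same check from a script, `docker ps` can print just each container’s name and status through its `--format` Go template. The sketch below flags containers that report `(unhealthy)` or aren’t running, while treating containers that exited with code 0 as fine, on the assumption that they are one-shot setup jobs. `find_unhealthy` and `check_containers` are hypothetical helpers; note that `docker ps -a` lists every container on the host, not only OpenMetadata’s.

```shell
#!/usr/bin/env bash
# Sketch: list containers whose status suggests a problem (hypothetical helpers).

# Reads "name|status" lines on stdin and prints the names of containers that are
# unhealthy or not running. Containers that exited with code 0 are assumed to be
# one-shot setup jobs and are not reported.
find_unhealthy() {
  local name status
  while IFS='|' read -r name status; do
    case "$status" in
      *"(unhealthy)"*) echo "$name" ;;
      Up*|"Exited (0)"*) ;;
      *) echo "$name" ;;
    esac
  done
}

check_containers() {
  # -a includes stopped containers, which plain `docker ps` hides.
  docker ps -a --format '{{.Names}}|{{.Status}}' | find_unhealthy
}
```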

If all the services are running correctly, you should be able to log into both the OpenMetadata UI and the Airflow UI with the same default credentials (username admin, password admin), as shown in the sections below.

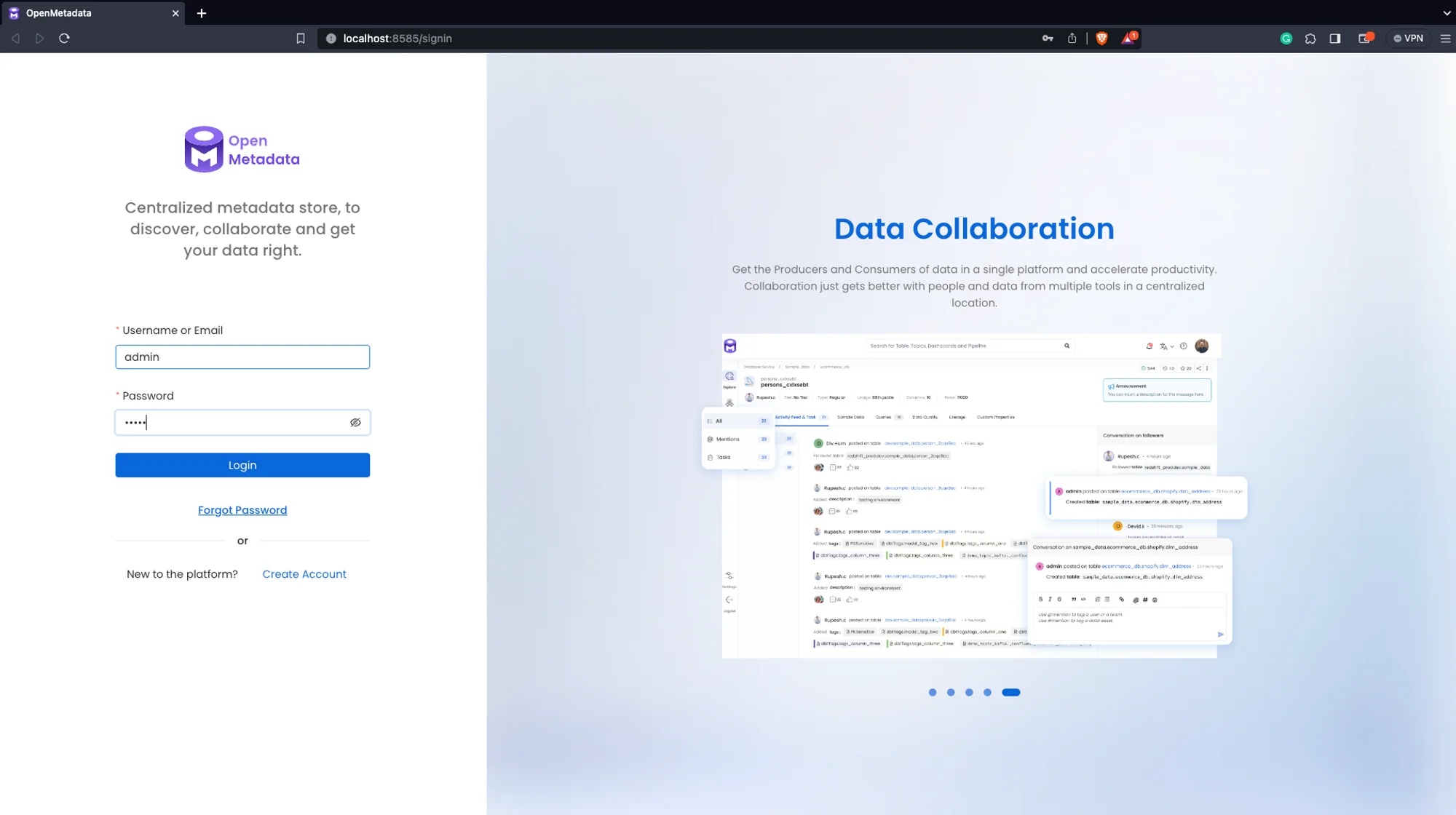

Step 5.1. Log into OpenMetadata #

The OpenMetadata frontend is hosted on port 8585 by default, so you can go to localhost:8585/login to log into OpenMetadata, as shown in the image below:

Logging into OpenMetadata - Image by Atlan.

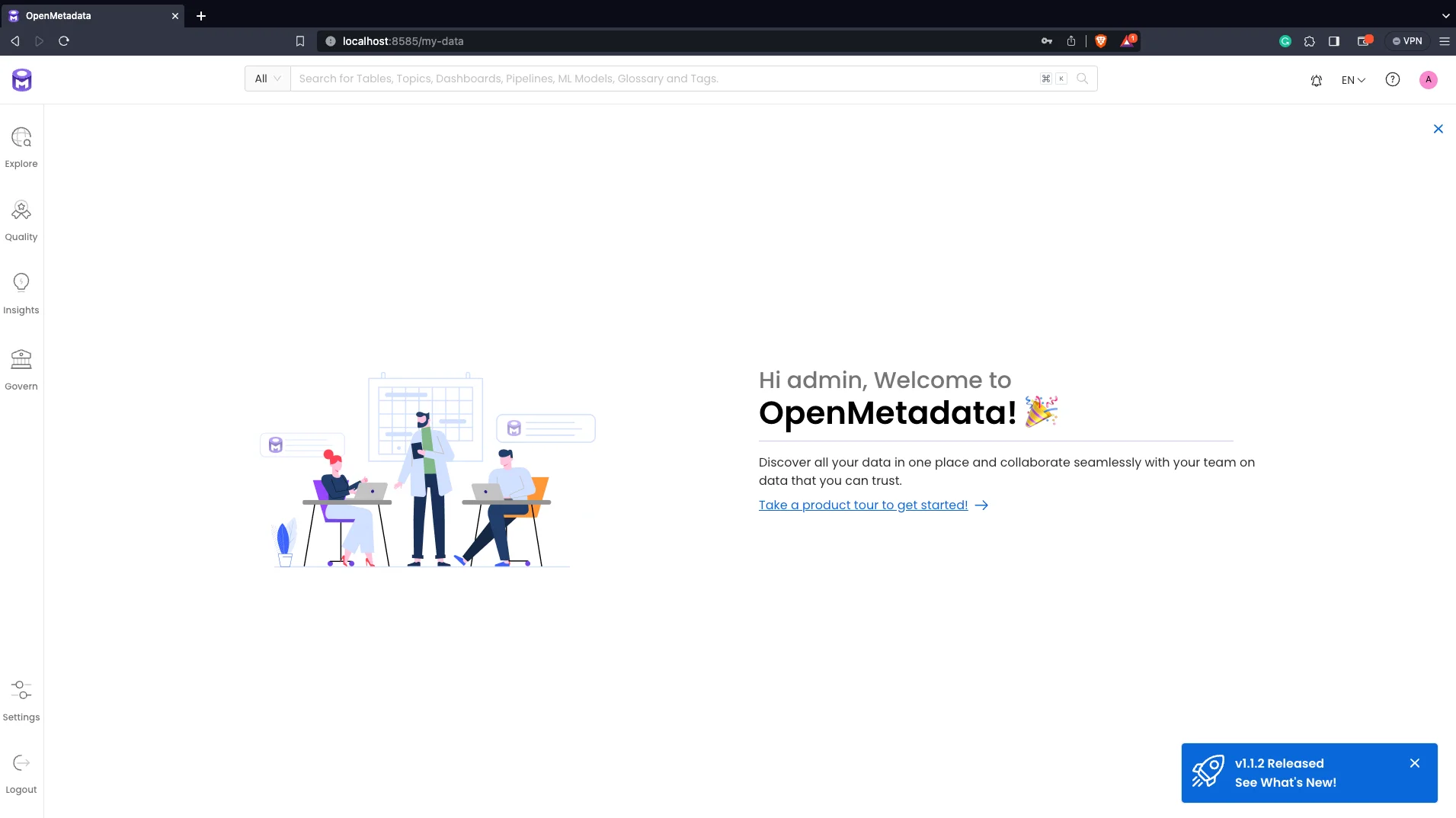

After successfully logging into OpenMetadata, you’ll land on the following page:

OpenMetadata landing page - Image by Atlan.

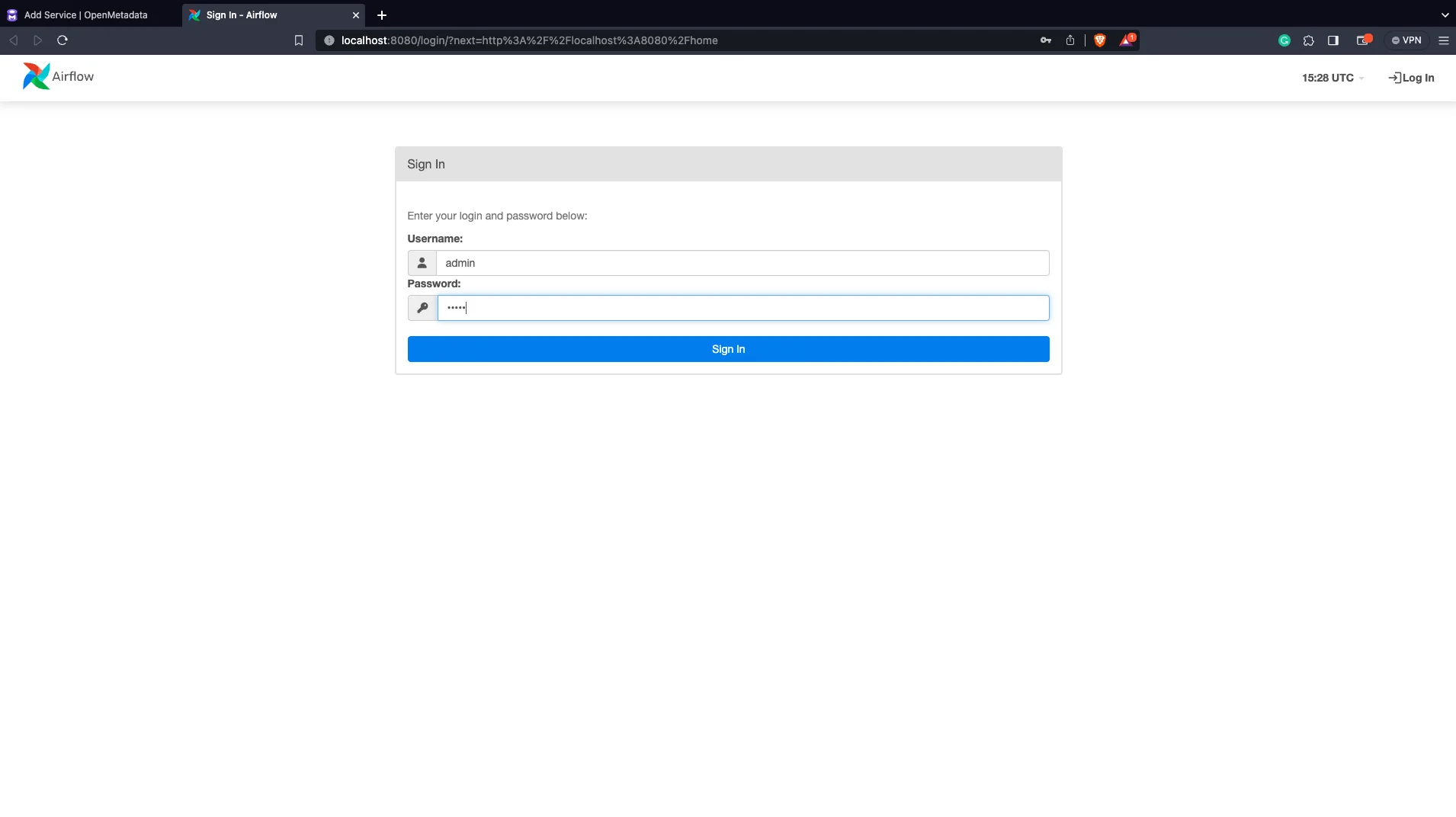

Step 5.2. Log into Airflow #

You can log into Airflow by going to localhost:8080 and entering the default username and password mentioned at the beginning of this section, as shown in the image below:

Login page in Airflow - Image by Atlan.

Once that’s done, you’ll be ready to load sample data into OpenMetadata using one of the pre-configured Airflow DAGs.
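Before triggering anything, you can confirm that the Airflow scheduler is alive. Airflow 2.x webservers expose a `/health` endpoint that reports the status of the metadata database and the scheduler as JSON. The sketch below extracts the scheduler status, assuming the bundled Airflow is 2.x and listens on the default `localhost:8080` address above; `airflow_scheduler_status` is a hypothetical helper, and the `sed` parsing is naive (prefer `jq`). It prints nothing if the endpoint is unreachable.

```shell
#!/usr/bin/env bash
# Sketch: read the Airflow scheduler status from the webserver's /health endpoint.
# Assumes Airflow 2.x at localhost:8080; the helper name is hypothetical.
AIRFLOW_URL="${AIRFLOW_URL:-http://localhost:8080}"

# Prints the scheduler's "status" value (e.g. "healthy"), or nothing if unreachable.
airflow_scheduler_status() {
  # Remove newlines so pretty-printed JSON can be matched on a single line.
  curl --silent --fail "$AIRFLOW_URL/health" \
    | tr -d '\n' \
    | sed -nE 's/.*"scheduler"[[:space:]]*:[[:space:]]*[{][^}]*"status"[[:space:]]*:[[:space:]]*"([^"]*)".*/\1/p'
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  echo "scheduler: $(airflow_scheduler_status)"
fi
```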

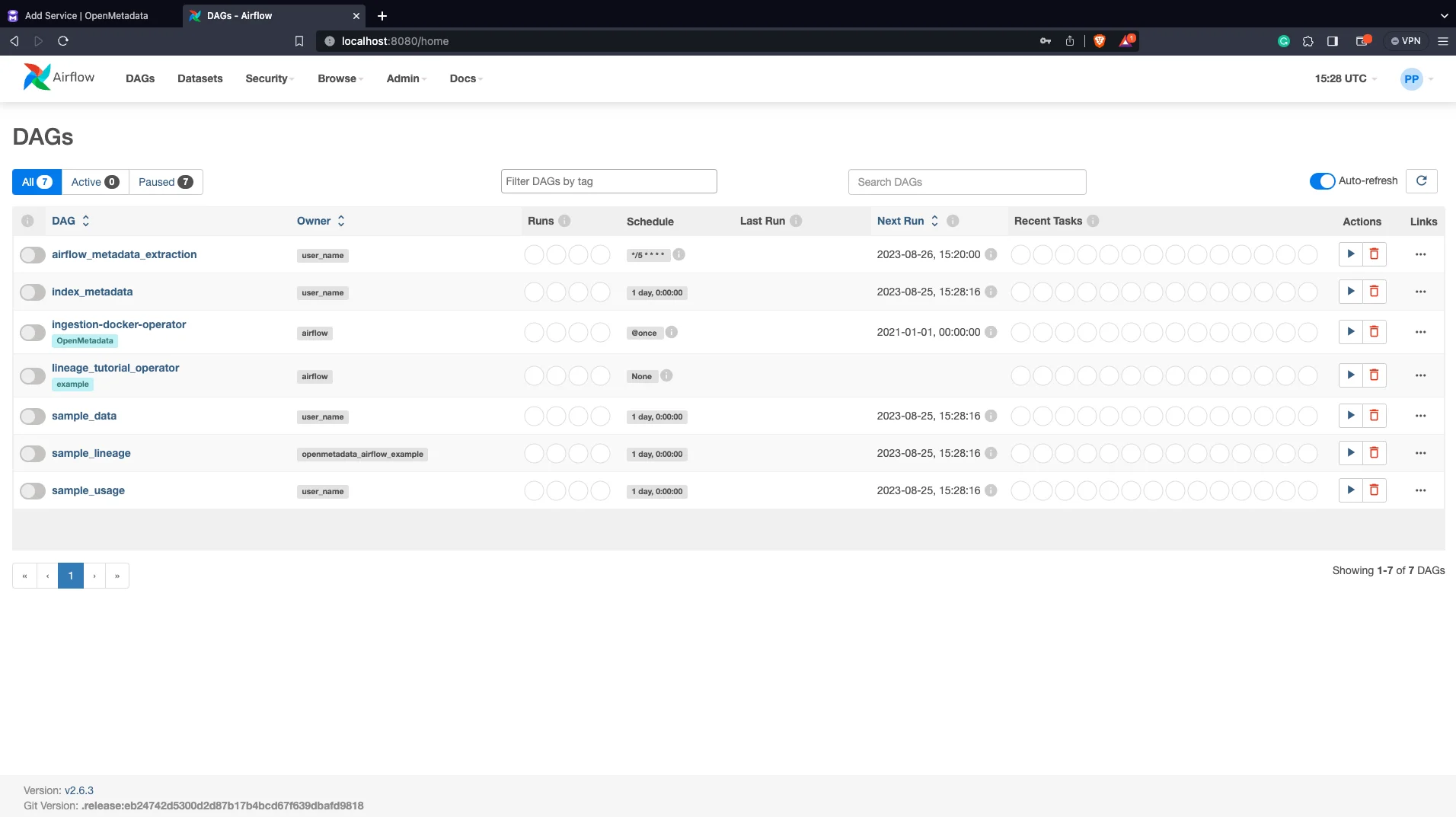

Step 6. Load sample data by running Airflow DAGs #

In this guide, we’ll load pre-packaged sample data into OpenMetadata: a dimensional model of e-commerce data from Shopify. The image below shows all the default DAGs; enable and run the sample_data DAG first:

DAGs view in Airflow - Image by Atlan.

Once the sample_data DAG run completes, run the lineage_tutorial_operator DAG, which loads lineage metadata for that dimensional model into OpenMetadata.
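You can also enable and trigger these DAGs without the UI through Airflow’s stable REST API: `PATCH /api/v1/dags/{dag_id}` with `{"is_paused": false}` unpauses a DAG, and `POST /api/v1/dags/{dag_id}/dagRuns` queues a run. This sketch assumes the Airflow container accepts HTTP basic auth with the default admin/admin credentials (that depends on the API auth backend configured in the image) and that the DAG IDs match the names shown in the Airflow UI; `trigger_dag` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: unpause and trigger an Airflow DAG through Airflow's stable REST API.
# Assumptions: Airflow at localhost:8080 with HTTP basic auth enabled for the API
# and the default admin/admin credentials. trigger_dag is a hypothetical helper.
AIRFLOW_URL="${AIRFLOW_URL:-http://localhost:8080}"
AIRFLOW_AUTH="${AIRFLOW_AUTH:-admin:admin}"

# trigger_dag DAG_ID
trigger_dag() {
  local dag_id=$1
  # Unpause the DAG; Airflow can create DAGs in a paused state.
  curl --silent --show-error --fail --user "$AIRFLOW_AUTH" \
    -X PATCH -H 'Content-Type: application/json' \
    -d '{"is_paused": false}' \
    "$AIRFLOW_URL/api/v1/dags/$dag_id" >/dev/null || return 1
  # Queue a new DAG run with an empty run configuration.
  curl --silent --show-error --fail --user "$AIRFLOW_AUTH" \
    -X POST -H 'Content-Type: application/json' \
    -d '{"conf": {}}' \
    "$AIRFLOW_URL/api/v1/dags/$dag_id/dagRuns" >/dev/null || return 1
  echo "Triggered $dag_id"
}
```

For example, run `trigger_dag sample_data`, wait for that run to succeed, and then run `trigger_dag lineage_tutorial_operator`.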

Explore OpenMetadata #

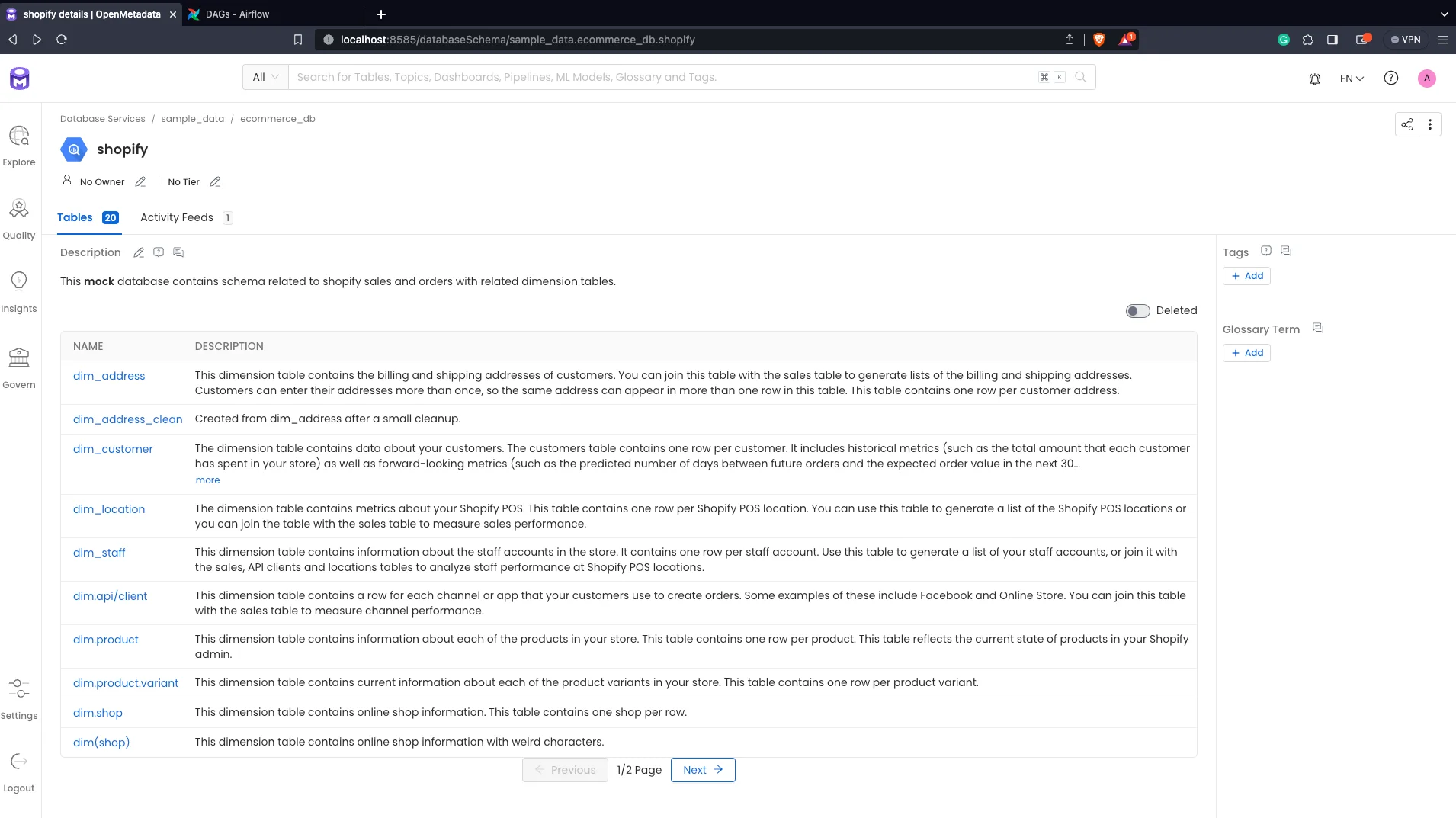

Once metadata has been ingested into the data catalog, you can browse what was loaded, i.e., the Shopify dimensional model, as shown below:

Viewing Shopify dimensional data model tables in OpenMetadata - Image by Atlan.

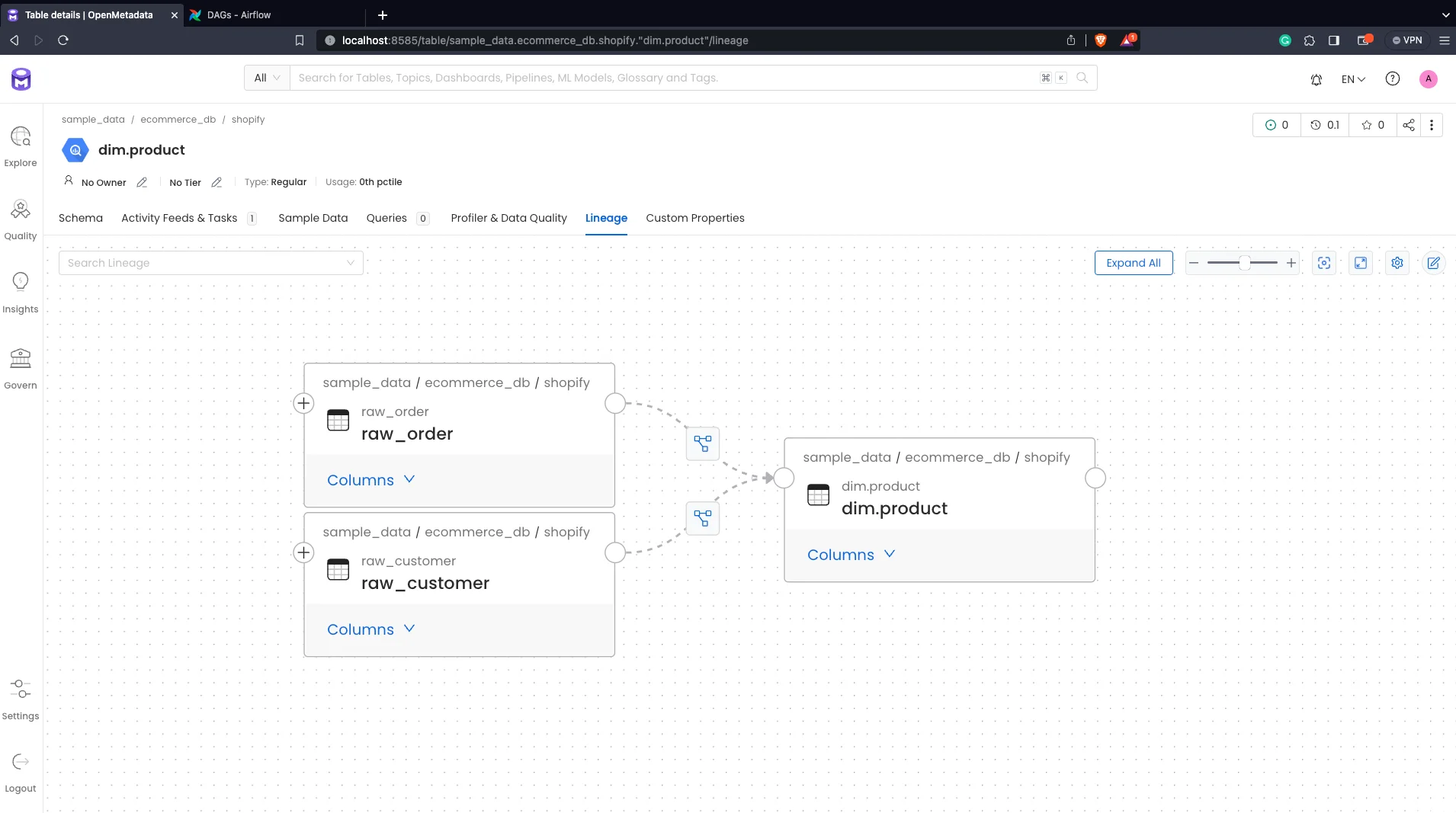

You can also explore object- and column-level lineage in the Lineage view of any table in the dimensional model:

Lineage view of dim_product table in OpenMetadata - Image by Atlan.

Summary #

This article took you through the step-by-step process of setting up OpenMetadata using Docker. It also introduced you to OpenMetadata’s architecture, ingestion framework, and the sample dimensional data used while exploring OpenMetadata.

Although the Docker-based OpenMetadata deployment is fine for experimentation and small-scale workloads, production deployments call for a more flexible and scalable setup powered by Kubernetes. Learn more about deploying OpenMetadata in production from the official deployment guides.

OpenMetadata installation: Related reads #

- OpenMetadata Ingestion: Framework, Workflows, Connectors & More

- OpenMetadata: Design Principles, Architecture, Applications & More

- OpenMetadata and dbt: For an Integrated View of Data Assets

- OpenMetadata vs. Amundsen: Compare Architecture, Capabilities, Integrations & More

- OpenMetadata vs. DataHub: Compare Architecture, Capabilities, Integrations & More

- Open Source Data Catalog - List of 6 Popular Tools to Consider in 2023
