OpenMetadata and DataHub are leading open-source data cataloging tools. They both provide essential functionalities for data management, including cataloging, search, and governance. However, they differ in architecture and capabilities.

OpenMetadata, developed by the team behind Uber’s metadata infrastructure, emphasizes a unified metadata model. In contrast, DataHub, created by LinkedIn, focuses on a generalized metadata service.

This article compares their features, integrations, and use cases to help organizations choose the right tool.

Quick answer:

Strapped for time? Here’s a snapshot of what to expect from the article:

- OpenMetadata is an open-source metadata store built by the team behind Uber’s metadata infrastructure. DataHub is an open-source data cataloging tool from LinkedIn.

- Both tools offer similar functionalities for data cataloging, search, discovery, governance, and quality.

- In this article, we’ll compare OpenMetadata and DataHub in terms of their architecture, tech stack, metadata modeling and ingestion setup, capabilities, and integrations.


OpenMetadata and DataHub are two of the most popular open-source data cataloging tools. Their features overlap significantly, but each also has its own differentiators. Here we compare the two based on their architecture, ingestion methods, capabilities, available integrations, and more.

Why open source catalogs didn’t work for Autodesk’s business goals #

We went through an entire deployment of an open source version… but it wasn’t sustainable as we continued to grow and grow. Atlan met all of our criteria, and then a lot more. — Mark Kidwell, Chief Data Architect, Autodesk.


Table of contents #

- What is OpenMetadata?

- What is DataHub?

- Differences between OpenMetadata and DataHub

- Architecture and technology stack

- Metadata modelling and ingestion

- Data governance capabilities

- Data lineage

- Data quality and data profiling

- Upstream and downstream integrations

- Comparison summary

- How organizations are making the most of their data using Atlan

- FAQs about OpenMetadata vs. DataHub

- Related Resources

What is OpenMetadata? #

OpenMetadata grew out of the lessons learned by the team that built Databook, Uber’s solution for data cataloging. After studying existing data cataloging systems, the team concluded that the missing piece of the puzzle was a unified metadata model.


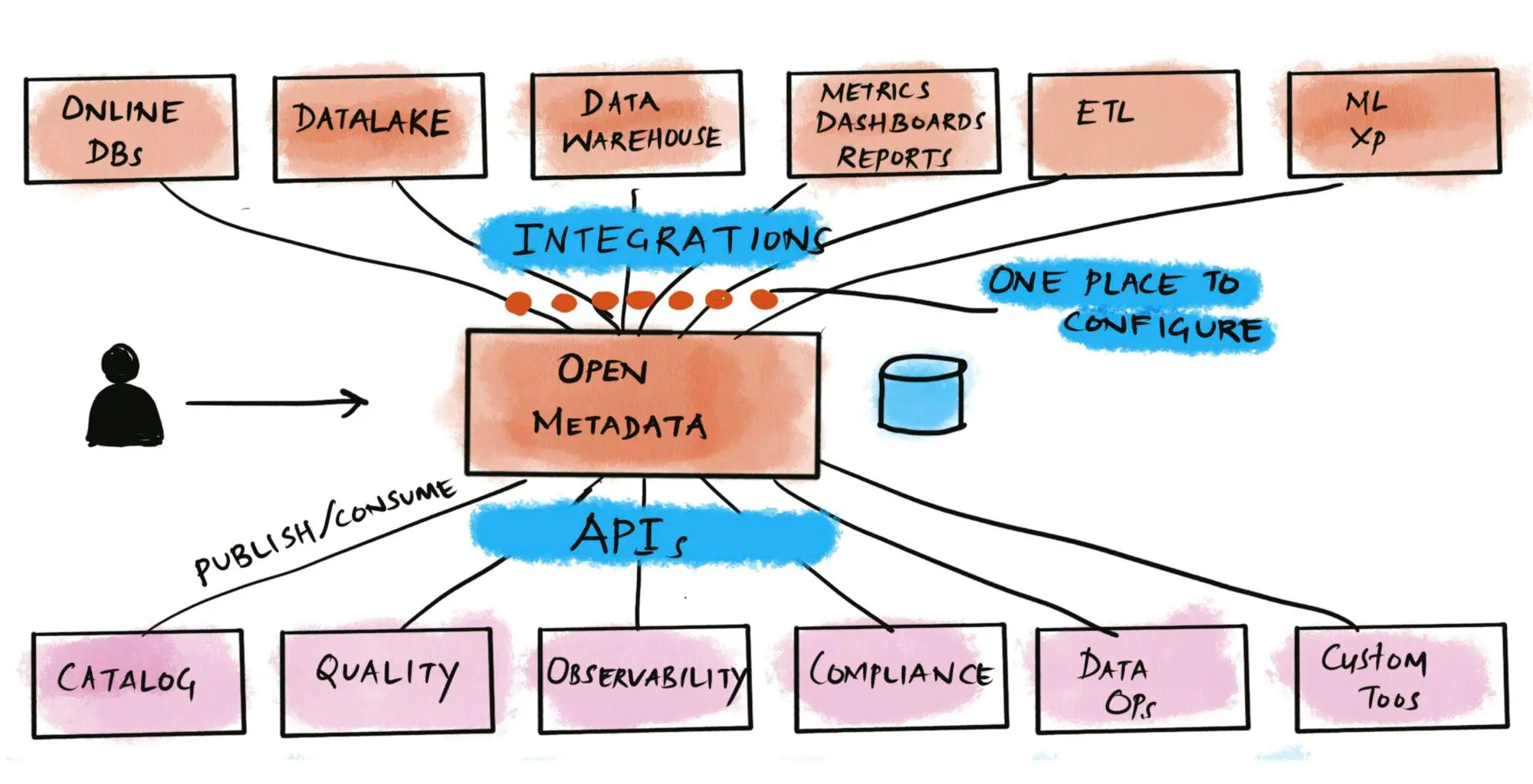

On top of that, they added metadata flexibility and extensibility. OpenMetadata has been in active development and use for a few years. A major release in November 2023 focused on creating a unified platform for discovery, observability, and governance. Besides adding support for new asset types, OpenMetadata has also added support for data mesh through domains and data products.

Read more about OpenMetadata here.

An overview of OpenMetadata

What is DataHub? #

DataHub was LinkedIn’s second attempt at solving data discovery and cataloging; the company had earlier open-sourced another tool called WhereHows. With this second iteration, LinkedIn addressed the challenge of supporting a wide variety of data systems, query languages, and access mechanisms by building a generalized metadata service that acts as the backbone of DataHub.

DataHub’s October 2023 release added column-level lineage support for dbt, Redshift, Power BI, and Airflow, and improved cross-platform lineage with Kafka and Snowflake. It also added support for Data Contracts, though at the time only through the CLI.

Read more about DataHub here.

LinkedIn DataHub Demo #

Here’s a hosted demo environment where you can try DataHub, LinkedIn’s open-source metadata platform.

An overview of LinkedIn DataHub

What are the differences between OpenMetadata and DataHub? #

Let’s compare OpenMetadata vs DataHub based on the following criteria:

- Architecture and technology stack

- Metadata modeling and ingestion

- Data governance capabilities

- Table and column-level lineage

- Data quality and data profiling

- Upstream and downstream integrations

We’ve chosen these criteria because they matter most when you are selecting one of these tools as the metadata management platform that will drive specific use cases in your organization.

Let’s look at each of these factors in detail and see how the two tools fare.

OpenMetadata vs. DataHub: Architecture and technology stack #

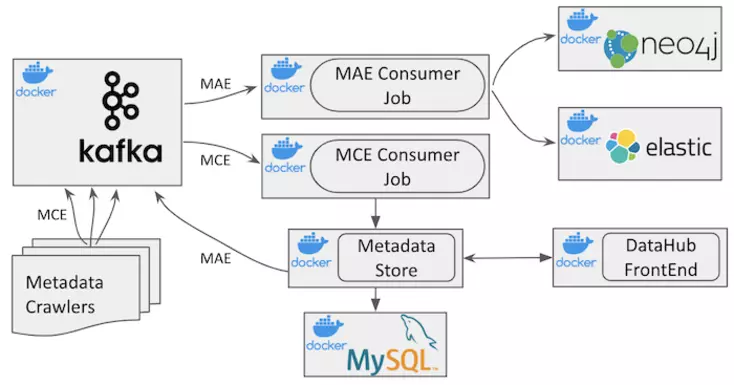

DataHub uses a Kafka-mediated ingestion engine that streams metadata into three separate storage layers: MySQL, Elasticsearch, and Neo4j.

The data in these layers is served through an API service layer. In addition to the standard REST API, DataHub supports Kafka and GraphQL for downstream consumption. DataHub defines its metadata model using the Pegasus Definition Language (PDL) with custom annotations.
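To make the Kafka-mediated fan-out more concrete, here is a minimal in-memory sketch in Python. It assumes a plain list stands in for the Kafka topic and three hypothetical consumer classes stand in for the MySQL, Elasticsearch, and Neo4j writers. It is not DataHub’s code; it only illustrates how each storage layer can read the same stream independently while tracking its own position.

```python
from dataclasses import dataclass, field


@dataclass
class Log:
    """Append-only list standing in for a Kafka topic (illustration only)."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> None:
        self.events.append(event)


@dataclass
class Consumer:
    """Reads the log from its own offset, like an independent consumer group."""
    name: str
    offset: int = 0
    store: dict = field(default_factory=dict)

    def poll(self, log: Log) -> None:
        # Process only the events this consumer has not seen yet, then advance.
        for event in log.events[self.offset:]:
            self.apply(event)
        self.offset = len(log.events)

    def apply(self, event: dict) -> None:
        raise NotImplementedError


class PrimaryStore(Consumer):
    """Hypothetical stand-in for MySQL: latest value of each aspect per entity URN."""
    def apply(self, event: dict) -> None:
        self.store.setdefault(event["urn"], {})[event["aspect"]] = event["value"]


class SearchIndex(Consumer):
    """Hypothetical stand-in for Elasticsearch: lowercase token -> set of URNs."""
    def apply(self, event: dict) -> None:
        for token in str(event["value"]).lower().split():
            self.store.setdefault(token, set()).add(event["urn"])


class GraphStore(Consumer):
    """Hypothetical stand-in for Neo4j: only 'upstream' aspects become edges."""
    def apply(self, event: dict) -> None:
        if event["aspect"] == "upstream":
            self.store.setdefault(event["urn"], set()).add(event["value"])


log = Log()
log.append({"urn": "dataset:orders", "aspect": "description", "value": "Daily orders"})
log.append({"urn": "dataset:orders", "aspect": "upstream", "value": "dataset:raw_orders"})

consumers = [PrimaryStore("mysql"), SearchIndex("search"), GraphStore("graph")]
for consumer in consumers:
    consumer.poll(log)
```

Because each consumer keeps its own offset, one layer can fall behind, or be rebuilt by replaying the log from offset 0, without affecting the others. That decoupling is the usual motivation for placing a log between ingestion and storage.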

High level understanding of DataHub architecture. Image source: LinkedIn Engineering

OpenMetadata uses MySQL to store all the metadata in its unified metadata model. As in DataHub, search is powered by Elasticsearch. OpenMetadata doesn’t use a dedicated graph database; instead, it models entities and their relationships with JSON Schema.

Systems and people interact with OpenMetadata through its REST API, either by calling it directly or through the UI. To learn more about the data model, refer to the documentation page that explains the high-level design of OpenMetadata.
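Calling the REST API directly can be as simple as an authenticated HTTP request. The sketch below builds, but does not send, a request to list tables using only the Python standard library. The server address (`http://localhost:8585`), the `/api/v1/tables` path, the bearer-token scheme, and the `data` key in the response are assumptions based on a default local deployment; check them against the API documentation for your version.

```python
import json
import urllib.parse
import urllib.request

# Assumptions: a local OpenMetadata server on port 8585 using JWT bearer auth.
BASE_URL = "http://localhost:8585/api/v1"
TOKEN = "<your-jwt-token>"  # placeholder, not a real credential


def list_tables_request(limit: int = 10) -> urllib.request.Request:
    """Build (but do not send) a GET request for one page of table entities."""
    query = urllib.parse.urlencode({"limit": limit})
    return urllib.request.Request(
        f"{BASE_URL}/tables?{query}",
        headers={"Authorization": f"Bearer {TOKEN}", "Accept": "application/json"},
        method="GET",
    )


def fetch_tables(limit: int = 10) -> list:
    """Send the request; needs a running server. Assumes results sit under "data"."""
    with urllib.request.urlopen(list_tables_request(limit)) as resp:
        return json.load(resp)["data"]
```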

From fragmented, duplicated, and inconsistent metadata to a unified metadata system. Source: OpenMetadata

OpenMetadata vs. DataHub: Metadata modelling and ingestion #

One of the major differences between the two tools is that DataHub supports both pull- and push-based metadata extraction, while OpenMetadata is designed around pull-based extraction.

By default, both tools primarily use pull-based extraction; the difference is that DataHub routes the extracted metadata through Kafka, while OpenMetadata runs its extraction workflows on Airflow.

Different services in DataHub can filter the metadata on Kafka and consume only what they need, while OpenMetadata’s Airflow workflows push the extracted metadata to the API server (built on Dropwizard), which serves it to downstream applications.
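The two extraction styles can be contrasted in a few lines. In this hypothetical sketch, a pull-based job reads what currently exists in a source and sends it to an API server (roughly the role the article assigns to Airflow and the API server in OpenMetadata), while a push-based source publishes change events to a shared topic that services filter (roughly the role of Kafka in DataHub). All class and function names are illustrative only.

```python
class ApiServer:
    """Hypothetical stand-in for the metadata API server receiving extracted entities."""

    def __init__(self):
        self.entities = {}

    def put(self, entity: dict) -> None:
        self.entities[entity["name"]] = entity


def pull_ingest(source_tables: dict, server: ApiServer) -> int:
    """Pull model: a scheduled job asks the source what exists, then sends it on."""
    for name, columns in source_tables.items():
        server.put({"name": name, "columns": list(columns)})
    return len(source_tables)


class Topic:
    """Push model: sources publish events as changes happen."""

    def __init__(self):
        self.events = []

    def publish(self, event: dict) -> None:
        self.events.append(event)

    def consume(self, event_type: str) -> list:
        # Each service filters for the event types it cares about. Events are
        # not removed, so several services can read the same stream.
        return [e for e in self.events if e["type"] == event_type]


server = ApiServer()
pull_ingest({"orders": ["id", "amount"], "customers": ["id", "email"]}, server)

topic = Topic()
topic.publish({"type": "schema_change", "table": "orders", "added": "currency"})
topic.publish({"type": "ownership", "table": "orders", "owner": "data-eng"})
schema_events = topic.consume("schema_change")
```

The practical difference is timing: a pull job only sees the state of the source at the moment it runs, while pushed events arrive as soon as the source emits them.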

The two tools also differ in how they model the metadata. As mentioned in the previous section, DataHub uses annotated PDL, while OpenMetadata uses annotated JSON Schema-based documents.

Learn more: DataHub Integrations | OpenMetadata Connectors

OpenMetadata vs. DataHub: Data governance capabilities #

In 2022, DataHub released its Actions Framework to power its data governance engine. The Actions Framework is an event-based system that lets you trigger external workflows in response to metadata changes, for purposes such as observability. The data governance engine automatically annotates new and changed entities. Head to this link for a quick walkthrough of the Actions Framework.
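The event-driven idea behind the Actions Framework can be illustrated with a tiny dispatcher. This is a hypothetical sketch, not the framework’s API: the event dictionaries, the `on` decorator, and both handlers are invented for illustration. Each handler subscribes to one event type and triggers an external action (here, appending to an `outbox` list) when a matching change arrives.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical registry: event type -> handler functions.
HANDLERS = defaultdict(list)


def on(event_type: str) -> Callable:
    """Decorator that subscribes a handler to one event type."""
    def register(fn: Callable) -> Callable:
        HANDLERS[event_type].append(fn)
        return fn
    return register


def dispatch(event: dict) -> list:
    """Run every handler subscribed to the event's type and collect the results."""
    return [handler(event) for handler in HANDLERS.get(event["type"], [])]


outbox = []  # stands in for an external system, such as a chat or ticketing tool


@on("tag_added")
def alert_on_pii(event: dict) -> str:
    # Trigger an external workflow when an asset is tagged as sensitive.
    if event["tag"] == "PII":
        outbox.append(f"Review access to {event['entity']}")
        return "notified"
    return "ignored"


@on("entity_created")
def auto_annotate(event: dict) -> str:
    # Annotate new entities automatically, e.g. flag them as needing an owner.
    event.setdefault("tags", []).append("needs-owner")
    return "annotated"


results = dispatch({"type": "tag_added", "entity": "dataset:customers", "tag": "PII"})
new_entity = {"type": "entity_created", "entity": "dataset:orders"}
dispatch(new_entity)
```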

Both OpenMetadata and DataHub offer built-in role-based access control for managing access and ownership.
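At its core, role-based access control maps roles to permitted operations and checks a user’s roles before each action. The following generic sketch uses hypothetical role and operation names plus one example ownership rule; the actual policy models in both tools are considerably richer.

```python
from dataclasses import dataclass, field

# Hypothetical roles and the operations each one grants.
ROLE_PERMISSIONS = {
    "viewer": {"view"},
    "data_steward": {"view", "edit_description", "edit_tags"},
    "admin": {"view", "edit_description", "edit_tags", "edit_owner", "delete"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)


@dataclass
class Asset:
    name: str
    owners: set = field(default_factory=set)


def can(user: User, operation: str, asset: Asset) -> bool:
    """Allow if any role grants the operation. As an example ownership rule,
    owners may also perform edit_* operations on their own assets."""
    granted = any(operation in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
    owner_edit = user.name in asset.owners and operation.startswith("edit_")
    return granted or owner_edit


orders = Asset("orders", owners={"maya"})
alice = User("alice", {"viewer"})
maya = User("maya", {"viewer"})
print(can(alice, "edit_tags", orders), can(maya, "edit_tags", orders))
# → False True
```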

OpenMetadata introduces a couple of other concepts, such as Importance, to provide a better search and discovery experience with additional context. DataHub uses a construct called Domains as a high-level tag on top of the usual tags and glossary terms to give you a better search experience.

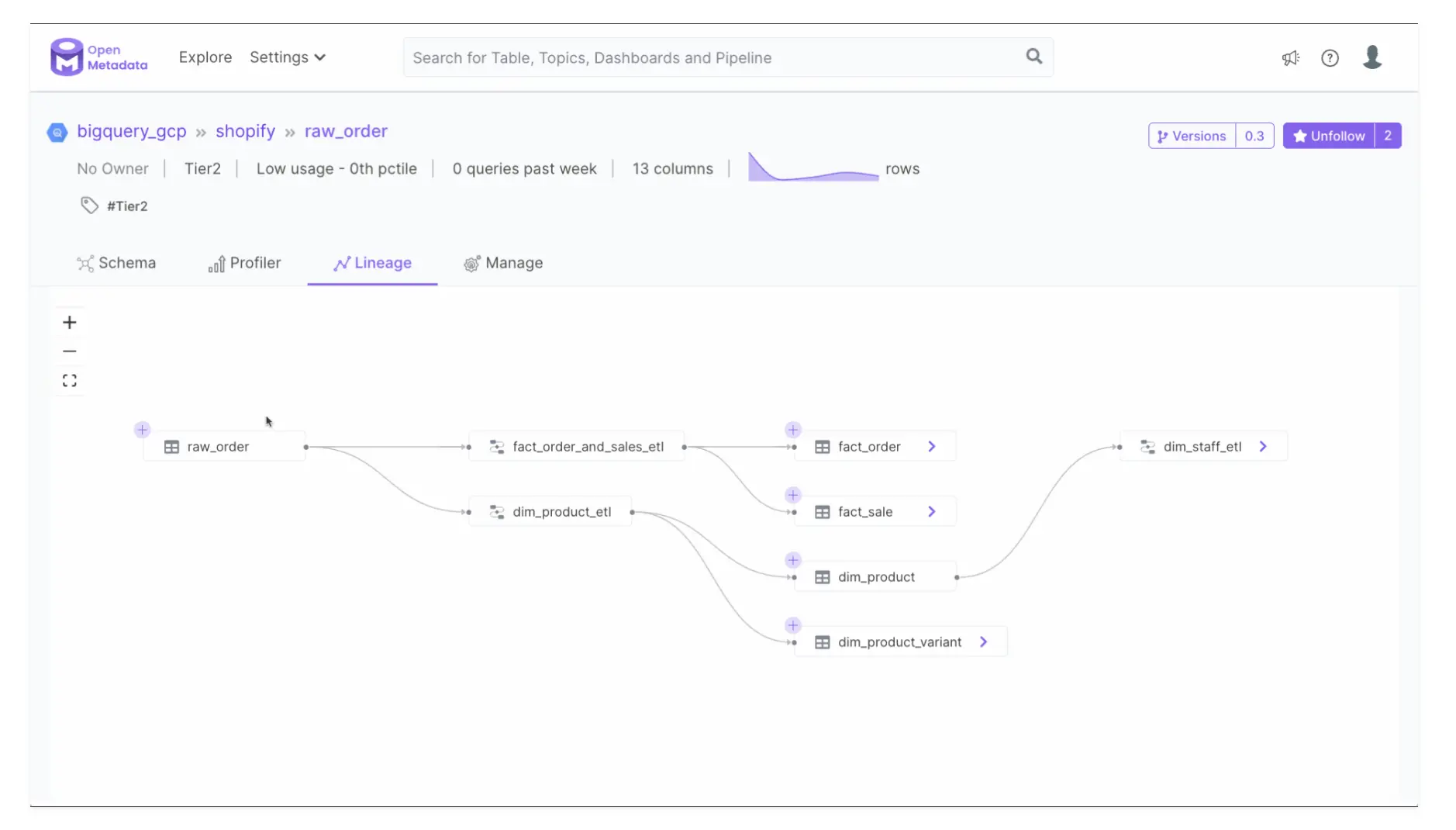

OpenMetadata vs. DataHub: Data lineage #

DataHub has supported column-level data lineage since its November 2022 release, with further improvements over the following year. You can now extract column-level lineage in three ways: automatic extraction, the DataHub API, and file-based lineage. As of June 2023, column-level lineage was supported only for Snowflake, Looker, Tableau, and Databricks Unity Catalog; the October 2023 release extended it to dbt, Redshift, Power BI, and Airflow.

OpenMetadata already offered both table-level and column-level lineage, and column-level lineage through the API has been supported since the 0.11 release in July 2022. Release 1.3 incorporated the changes made in SQLFluff and added column-level lineage for queries that don’t name their columns explicitly, such as SELECT * queries. OpenMetadata’s metadata workflow can now also ingest stored procedures and parse their execution logs to extract lineage metadata.
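`SELECT *` is tricky for column-level lineage because the query text never names the columns: they have to be resolved against the source table’s schema. The deliberately simplified sketch below shows only that resolution step, assuming a single source table and a known schema. It does not parse SQL (real lineage extraction relies on a full SQL parser), and `column_lineage` is a hypothetical helper.

```python
import re


def column_lineage(select_list: list, source_table: str, schema: dict) -> dict:
    """Map each output column of `SELECT <select_list> FROM <source_table>` to its
    source column. Handles only bare names, `col AS alias`, and `*`."""
    lineage = {}
    for item in (s.strip() for s in select_list):
        if item == "*":
            # The query never names these columns; resolve them from the schema.
            for col in schema[source_table]:
                lineage[col] = f"{source_table}.{col}"
            continue
        parts = re.split(r"\s+as\s+", item, flags=re.IGNORECASE)
        source_col, output_col = parts[0], parts[-1]
        if source_col not in schema[source_table]:
            raise KeyError(f"{source_col} not found in {source_table}")
        lineage[output_col] = f"{source_table}.{source_col}"
    return lineage


schema = {"raw_orders": ["id", "customer_id", "amount"]}
print(column_lineage(["*"], "raw_orders", schema))
# → {'id': 'raw_orders.id', 'customer_id': 'raw_orders.customer_id', 'amount': 'raw_orders.amount'}
print(column_lineage(["id", "amount AS total"], "raw_orders", schema))
# → {'id': 'raw_orders.id', 'total': 'raw_orders.amount'}
```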

OpenMetadata’s Python SDK for lineage lets you fetch custom lineage data for your data source entities; the lineage data is stored following the entityLineage schema specification.

View upstream and downstream dependencies for data assets with lineage. Source: OpenMetadata
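Once lineage edges are stored, showing upstream and downstream dependencies amounts to a graph traversal. Here is a generic breadth-first search over a hypothetical in-memory edge list, with column-level nodes written as `schema.table.column`. It is not either tool’s implementation, and the asset names are made up.

```python
from collections import defaultdict, deque

# Hypothetical column-level lineage edges: (upstream, downstream).
EDGES = [
    ("raw.orders.amount", "staging.orders.amount"),
    ("staging.orders.amount", "mart.revenue.total"),
    ("raw.fx.rate", "mart.revenue.total"),
    ("mart.revenue.total", "dashboard.revenue.total"),
]


def build_index(edges):
    """Index edges in both directions so either traversal is a dict lookup."""
    downstream, upstream = defaultdict(set), defaultdict(set)
    for src, dst in edges:
        downstream[src].add(dst)
        upstream[dst].add(src)
    return downstream, upstream


def traverse(start: str, neighbors: dict, max_depth: int = 10) -> dict:
    """Breadth-first search: return {node: hops from start} within max_depth."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if hops[node] >= max_depth:
            continue
        for nxt in sorted(neighbors.get(node, ())):
            if nxt not in hops:  # also protects against cycles
                hops[nxt] = hops[node] + 1
                queue.append(nxt)
    del hops[start]
    return hops


down, up = build_index(EDGES)
upstream_of_total = traverse("mart.revenue.total", up)
downstream_of_amount = traverse("raw.orders.amount", down)
```

A depth limit such as `max_depth` keeps the result manageable on large lineage graphs.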

OpenMetadata vs. DataHub: Data quality and data profiling #

Although DataHub had roadmap items for certain data quality features a while back, they haven’t materialized yet. However, DataHub does integrate with tools like Great Expectations and dbt, so you can bring their metadata, along with their test and validation results, into DataHub.

With version 0.12.0, DataHub has also started supporting data contracts, which provide another way of enforcing data quality at the source and target layers. DataHub currently supports various contract types that help assert schema structure, freshness of data, and overall data quality. Keep an eye out for the latest updates in the release documentation.
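The three assertion types mentioned above (schema structure, freshness, and data quality) can be sketched as a single contract check. This is a hypothetical illustration: the `Contract` fields and the check logic are invented here and do not follow DataHub’s data contract specification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Contract:
    """Hypothetical contract: expected schema, freshness, and non-null columns."""
    columns: dict              # column name -> expected type name
    max_staleness: timedelta   # freshness: maximum allowed age of the last update
    not_null: tuple = ()       # quality: columns that must never contain nulls


def check_contract(contract, actual_columns, last_updated, rows, now=None):
    """Return a list of violations; an empty list means the contract holds."""
    now = now or datetime.now(timezone.utc)
    violations = []
    # Schema assertion: each expected column exists with the expected type.
    for name, expected in contract.columns.items():
        actual = actual_columns.get(name)
        if actual != expected:
            violations.append(f"schema: {name} expected {expected}, got {actual}")
    # Freshness assertion: the data was updated recently enough.
    age = now - last_updated
    if age > contract.max_staleness:
        violations.append(f"freshness: last update was {age} ago")
    # Quality assertion: no nulls in the listed columns.
    for col in contract.not_null:
        nulls = sum(1 for row in rows if row.get(col) is None)
        if nulls:
            violations.append(f"quality: {nulls} null value(s) in {col}")
    return violations


contract = Contract({"id": "int", "amount": "decimal"}, timedelta(hours=24), ("id",))
now = datetime(2024, 1, 2, 12, tzinfo=timezone.utc)
passing = check_contract(contract, {"id": "int", "amount": "decimal"},
                         now - timedelta(hours=1), [{"id": 1}], now=now)
failing = check_contract(contract, {"id": "int", "amount": "float"},
                         now - timedelta(days=2), [{"id": None}], now=now)
```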

Check out this demo of Great Expectations in action on DataHub.

OpenMetadata takes a different approach to quality: data quality and profiling are built into the tool. Because there are many open-source data quality tools, there are many ways to define tests; as its metadata standard for defining tests, OpenMetadata has chosen to support Great Expectations.

If Great Expectations is already integrated with your other workflows and you’d rather have it as your central data quality tool, you can have that with OpenMetadata’s Great Expectations integration.

More recently, OpenMetadata has also built its own OpenMetadata Data Quality Framework. Like other DQ tools, it gives you some boilerplate code but still requires you to write a bit of Python to create and run tests. To make this easier, OpenMetadata ships with around 30 predefined tests.
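To give a feel for what built-in profiling and predefined tests do, here is a small self-contained sketch. The profile metrics and the three test functions are hypothetical, loosely modeled on common check types (null checks, value ranges, and row counts); they are not the OpenMetadata Data Quality Framework’s API.

```python
from statistics import mean


def profile_column(values: list) -> dict:
    """Compute basic profile metrics for one column (None counts as null)."""
    present = [v for v in values if v is not None]
    return {
        "row_count": len(values),
        "null_count": len(values) - len(present),
        "distinct_count": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": mean(present) if present else None,
    }


# Hypothetical predefined tests; each one only compares profile metrics to thresholds.
def values_not_null(profile: dict) -> bool:
    return profile["null_count"] == 0


def values_between(profile: dict, low, high) -> bool:
    return profile["min"] is not None and low <= profile["min"] and profile["max"] <= high


def row_count_between(profile: dict, low: int, high: int) -> bool:
    return low <= profile["row_count"] <= high


amounts = [12.5, 40.0, None, 7.25, 40.0]
profile = profile_column(amounts)
results = {
    "values_not_null": values_not_null(profile),
    "values_between_0_100": values_between(profile, 0, 100),
    "row_count_between_1_1000": row_count_between(profile, 1, 1000),
}
```

Computing the profile once and evaluating tests against it keeps each test cheap, since it only compares precomputed metrics with thresholds.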

OpenMetadata vs. DataHub: Upstream and downstream integrations #

Both DataHub and OpenMetadata use a plugin-based architecture for metadata ingestion, which lets both integrate smoothly with a wide range of tools in your data stack.
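A plugin-based ingestion architecture usually means the core framework only knows a common source interface, while each connector registers itself under a name that configuration can refer to. The sketch below shows that pattern with hypothetical names (`register_source`, `run_ingestion`, and a toy `csv` source); it is not the plugin mechanism of either tool.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical registry: plugin name -> function that yields metadata records.
SOURCES: Dict[str, Callable[[dict], Iterable[dict]]] = {}


def register_source(name: str):
    """Decorator that adds a connector to the registry under `name`."""
    def wrap(factory):
        SOURCES[name] = factory
        return factory
    return wrap


@register_source("csv")
def csv_source(config: dict) -> Iterable[dict]:
    # Toy connector: reads the header row of each in-memory CSV as its schema.
    for table, text in config["files"].items():
        header = text.splitlines()[0]
        yield {"table": table, "columns": header.split(",")}


def run_ingestion(config: dict) -> List[dict]:
    """Look up the configured plugin by name and collect the records it emits."""
    source_type = config["source"]["type"]
    if source_type not in SOURCES:
        raise ValueError(f"no plugin registered for {source_type!r}")
    return list(SOURCES[source_type](config["source"]["config"]))


records = run_ingestion(
    {"source": {"type": "csv", "config": {"files": {"orders": "id,amount\n1,9.99"}}}}
)
```

Adding a connector in this pattern means registering one more function; the core ingestion loop does not change.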

DataHub offers a GraphQL API, an OpenAPI, and a couple of SDKs that your applications and tools can use to interact with DataHub. You can also use the CLI to install the plugins you need. Together, these interfaces let you both ingest metadata into DataHub and take it out for further consumption.
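As an example of the GraphQL route, the sketch below builds, but does not send, a dataset search request using only the Python standard library. The endpoint (`http://localhost:8080/api/graphql`), the bearer token, and the exact query and input fields are assumptions based on a default local deployment; verify them against the GraphQL API reference for your DataHub version.

```python
import json
import urllib.request

# Assumptions: local DataHub endpoint and a placeholder personal access token.
GRAPHQL_URL = "http://localhost:8080/api/graphql"
TOKEN = "<personal-access-token>"

# Assumed query shape; check field names against your version's GraphQL schema.
SEARCH_QUERY = """
query search($input: SearchInput!) {
  search(input: $input) {
    total
    searchResults { entity { urn type } }
  }
}
"""


def build_search_request(text: str, count: int = 10) -> urllib.request.Request:
    """Build (but do not send) a POST request searching datasets for `text`."""
    body = {
        "query": SEARCH_QUERY,
        "variables": {"input": {"type": "DATASET", "query": text, "start": 0, "count": count}},
    }
    return urllib.request.Request(
        GRAPHQL_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {TOKEN}"},
        method="POST",
    )


def search(text: str) -> dict:
    """Send the request (requires a running DataHub instance) and parse the JSON reply."""
    with urllib.request.urlopen(build_search_request(text)) as resp:
        return json.load(resp)
```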

OpenMetadata supports over fifty connectors for ingesting metadata, ranging from databases and BI tools to message queues and data pipelines, including other metadata cataloging tools such as Apache Atlas and Amundsen. Beyond these connectors, OpenMetadata currently offers two integrations: Great Expectations and Prefect.

OpenMetadata vs. DataHub: Comparison summary #

Both DataHub and OpenMetadata try to address the same problems around data cataloging, search, discovery, governance, and quality. Both tools were born out of the need to solve these problems for big organizations with lots of data sources, teams, and use cases to support.

Although the two tools differ somewhat in release history and maturity, their features overlap significantly. Here’s a quick summary of some of those features:

| Feature | OpenMetadata | DataHub |
|---|---|---|
| Search & discovery | Elasticsearch | Elasticsearch |
| Metadata backend | MySQL | MySQL |
| Metadata model specification | JSON Schema | Pegasus Definition Language (PDL) |
| Metadata extraction | Pull | Pull and push |
| Metadata ingestion | Pull | Pull |
| Data governance | RBAC, glossary, tags, importance, owners, and the capability to extend entity metadata | RBAC, tags, glossary terms, domains, and the Actions Framework |
| Data lineage | Table-level and column-level | Table-level and column-level |
| Data profiling | Built-in, with the possibility of using external tools | Via third-party integrations, such as dbt and Great Expectations |
| Data quality | In-house OpenMetadata Data Quality Framework, with the possibility of using external tools like Great Expectations | Native data contract enforcement; DQ via third-party integrations, such as dbt and Great Expectations |

How organizations are making the most of their data using Atlan #

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader ahead of all others. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Enabling an AI- and automation-first data ecosystem

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes #

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.

Book your personalized demo today to find out how Atlan can help your organization establish and scale data governance programs.

FAQs about OpenMetadata vs. DataHub #

1. What is OpenMetadata and how does it differ from DataHub? #

OpenMetadata is an open-source metadata management tool designed to provide a unified metadata model. It differs from DataHub, which is also open-source but focuses on a generalized metadata service. Both tools offer features for data cataloging, governance, and lineage tracking, but their architectures and approaches to metadata management vary.

2. What are the key features of OpenMetadata? #

OpenMetadata offers several key features, including a unified metadata model, flexible metadata ingestion, and robust data governance capabilities. It supports role-based access control, data lineage tracking, and integration with various data tools, making it suitable for organizations looking to enhance their data management practices.

3. How can OpenMetadata improve data governance in an organization? #

OpenMetadata enhances data governance by providing a centralized metadata repository that supports role-based access control and data lineage tracking. This allows organizations to manage data ownership, ensure compliance, and improve data quality through better visibility and control over their metadata assets.

4. What are the advantages of using DataHub for data management? #

DataHub offers advantages such as a flexible architecture that supports various data systems, robust data lineage tracking, and integration capabilities with tools like dbt and Great Expectations. Its event-based governance framework allows organizations to automate workflows and improve data observability.

5. How does DataHub facilitate data discovery and collaboration? #

DataHub facilitates data discovery through its powerful search capabilities and user-friendly interface. It allows users to explore data assets, understand their relationships, and collaborate effectively by providing insights into data lineage and quality. This enhances data accessibility and promotes a collaborative data culture.