AWS Data Mesh: Essential Guide for Implementation (2024)


Data mesh has become a popular choice of data architecture for organizations of reasonable complexity that need agility. In this article, we’ll take you through the whys and hows of building a data mesh for your organization on AWS under the following themes:

- The need for data mesh

- AWS data mesh patterns & blueprints

- Leveraging metadata in a data mesh

Data mesh is a conceptual framework that moves away from the traditional, silo-inducing model of data management toward one driven by data ownership across the organization. Rather than concentrating the power to create and use data in a central team, it empowers every business unit and team to take ownership of, and responsibility for, their data assets.

Let’s look at the need to implement data mesh and how you can leverage metadata in a data mesh using the right tool!

Table of contents

- The need for data mesh

- AWS data mesh patterns & blueprints

- Leveraging Metadata in a Data Mesh

- Summary

- Related reads

The need for data mesh

In traditional data warehousing setups, and even in more recent data lake and lakehouse setups, most of the time is spent moving data across sources, systems, and layers. Since their inception, these processes have remained highly centralized; they weren’t built to be governed in a distributed manner.

Data mesh, on the other hand, is a framework built explicitly around integrating disparate data sources, minimizing data movement, and sharing and managing data access consistently so that it can be consumed more freely than ever before.

The four core principles of the data mesh framework, as interpreted by AWS, are as follows:

- Distributed domain-driven architecture — responsibility for data should be driven by business units, teams, or domains.

- Data as a product — apply product thinking to data sets. Make your products discoverable, addressable, trustworthy, and self-describing.

- Self-serve data infrastructure — use DevOps principles to create self-serve infrastructure to prevent duplication of effort and enforce infrastructure standards.

- Federated data governance — use a shared responsibility model for implementing data security and governance, where the responsibility lies both with the data product owners and the data consumers.
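To make the “data as a product” principle more concrete, here is a minimal Python sketch of a data product descriptor. The class, its fields, and the rule used for each property are illustrative assumptions, not an AWS or Atlan schema; the idea is simply that each of the four product properties can be checked against metadata the owning domain publishes.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor for one data product owned by a domain."""
    name: str
    domain: str                                          # distributed domain ownership
    owner: str                                           # accountable product owner
    address: str = ""                                    # addressable: e.g. an S3 URI or table name
    description: str = ""                                # self-describing (with schema)
    schema: dict = field(default_factory=dict)           # column name -> type
    quality_checks: list = field(default_factory=list)   # trustworthy
    tags: list = field(default_factory=list)             # discoverable

    def missing_properties(self) -> list:
        """Return which 'data as a product' properties are not yet satisfied."""
        gaps = []
        if not self.tags:
            gaps.append("discoverable")
        if not self.address:
            gaps.append("addressable")
        if not self.quality_checks:
            gaps.append("trustworthy")
        if not (self.description and self.schema):
            gaps.append("self-describing")
        return gaps


orders = DataProduct(
    name="orders_daily",
    domain="sales",
    owner="sales-data-team",
    address="s3://example-sales-bucket/orders_daily/",
    schema={"order_id": "string", "amount": "decimal(10,2)"},
)
print(orders.missing_properties())  # ['discoverable', 'trustworthy', 'self-describing']
```

In practice, a check like this could gate publication: a domain only lists a product in the shared catalog once the list of gaps is empty.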

Now, let’s look at how some of these principles materialize in the patterns and blueprints AWS recommends.

AWS data mesh patterns & blueprints

With a broad range of data services, AWS is well placed to host a data mesh implementation. It has everything you need: storage, compute, governance, visualization, and more. Beyond individual services, you can also use higher-level offerings such as AWS Lake Formation, Amazon HealthLake, and Amazon DataZone.

Whether it is AWS’s data mesh reference architecture for the healthcare and life sciences industry or the one for a customer 360 data product, a common set of services and patterns emerges in which AWS:

- Stores the data in a service like RDS, Redshift, or S3;

- Lets you use the compute you want, such as EMR, Lambda, Glue, or Redshift; and

- Manages infrastructure and governance using services like the Glue Data Catalog and DataZone.
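The producer side of this pattern can be sketched as follows, assuming one Glue Data Catalog database per domain and one S3 prefix for its data products (both naming conventions are assumptions for illustration). The helper `producer_onboarding_requests` is hypothetical and only builds request parameters as plain dictionaries; with boto3 you could pass them to `glue.create_database(...)` and `lakeformation.register_resource(...)`, the latter registering the S3 location so Lake Formation can govern access to it.

```python
def producer_onboarding_requests(domain: str, bucket: str, prefix: str) -> dict:
    """Build request parameters for onboarding one domain's S3 data (hypothetical helper)."""
    key_prefix = prefix.strip("/")
    return {
        # Assumed convention: one Glue Data Catalog database per domain.
        # boto3 (not run here): glue.create_database(**onboarding["glue_create_database"])
        "glue_create_database": {
            "DatabaseInput": {
                "Name": f"{domain}_domain",
                "Description": f"Data products owned by the {domain} domain",
                "LocationUri": f"s3://{bucket}/{key_prefix}/",
            }
        },
        # Registering the S3 location lets Lake Formation manage access to it.
        # boto3 (not run here): lakeformation.register_resource(**onboarding["lf_register_resource"])
        "lf_register_resource": {
            "ResourceArn": f"arn:aws:s3:::{bucket}/{key_prefix}",
            "UseServiceLinkedRole": True,
        },
    }


onboarding = producer_onboarding_requests("sales", "example-sales-bucket", "/data-products/")
print(onboarding["glue_create_database"]["DatabaseInput"]["Name"])  # sales_domain
print(onboarding["lf_register_resource"]["ResourceArn"])  # arn:aws:s3:::example-sales-bucket/data-products
```

Keeping the request-building separate from the API calls makes the convention easy to review and to reuse from infrastructure-as-code tooling.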

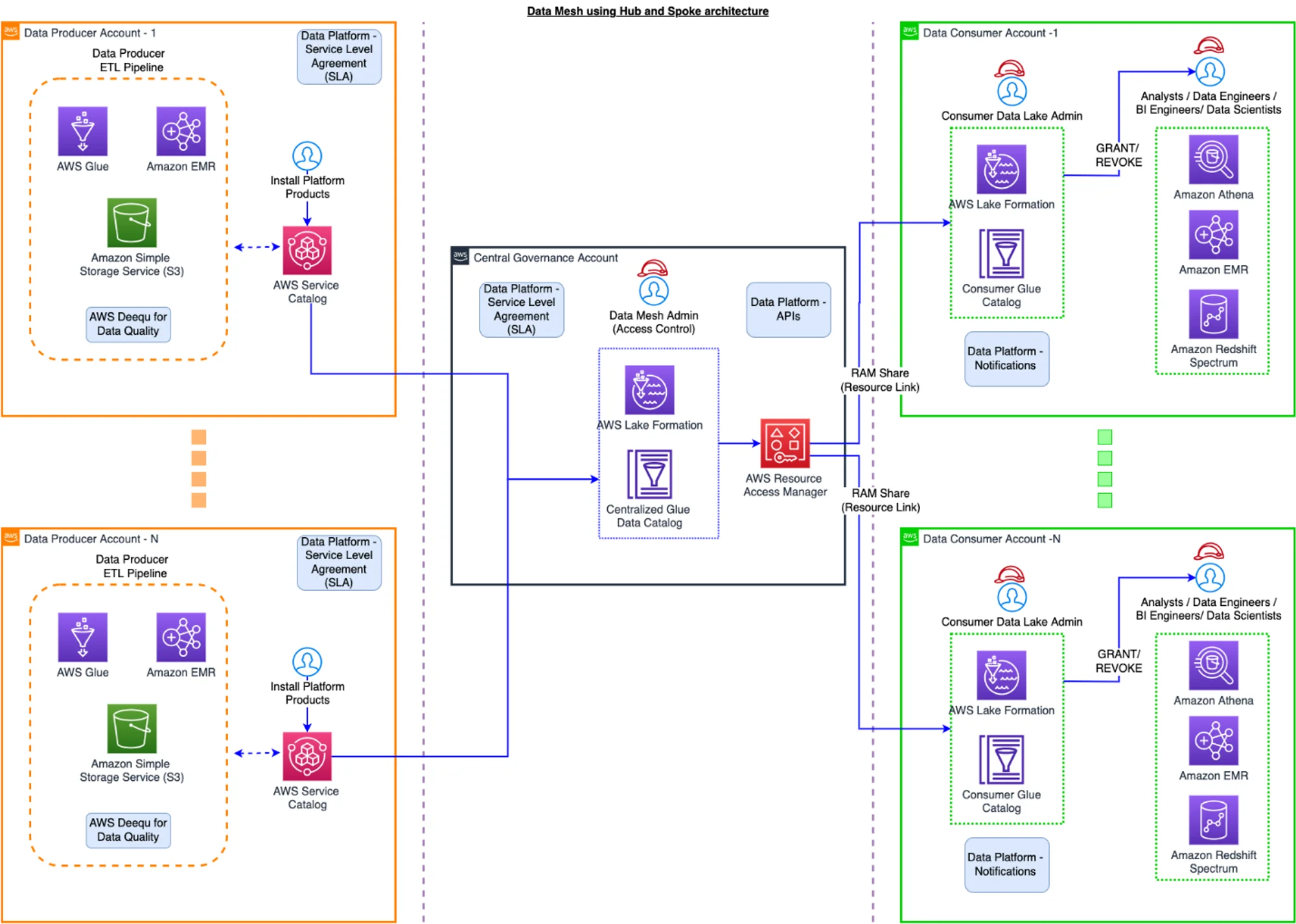

You can see an example of this in the following architecture that comes from GoDaddy’s data mesh implementation on AWS:

Governance using the Hub-and-Spoke model for GoDaddy - Source: AWS Blog.

The above solution at GoDaddy does the following things:

- It uses separate producer, central governance, and consumer AWS accounts to separate concerns, with AWS Lake Formation managing data sharing and permissions across them.

- It uses various storage and compute services like S3, Redshift, Athena, Redshift Spectrum, and EMR.

- It defines a central metadata repository in the Glue Data Catalog, where you can register data producers, define access control policies, and define and enforce SLO contracts using EventBridge and Lambda.

- It lets various business units consume data using the Glue Data Catalog.
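In a hub-and-spoke setup like this, sharing a table from a producer account with a consumer account comes down to a Lake Formation permission grant. The sketch below builds the parameters for the `grant_permissions` API call (with boto3, `lakeformation.grant_permissions(**params)`); the helper name, account IDs, database, and table names are placeholders, and this is not GoDaddy’s actual code.

```python
def cross_account_table_grant(producer_account: str, consumer_account: str,
                              database: str, table: str) -> dict:
    """Parameters for Lake Formation grant_permissions sharing one table cross-account."""
    for account in (producer_account, consumer_account):
        if not (len(account) == 12 and account.isdigit()):
            raise ValueError(f"not a 12-digit AWS account ID: {account!r}")
    return {
        # Granting to an account ID (rather than an IAM role ARN) shares cross-account.
        "Principal": {"DataLakePrincipalIdentifier": consumer_account},
        "Resource": {
            "Table": {
                "CatalogId": producer_account,  # the catalog that owns the table
                "DatabaseName": database,
                "Name": table,
            }
        },
        "Permissions": ["SELECT", "DESCRIBE"],
        # Grant option lets the consumer account's data lake admin re-grant internally.
        "PermissionsWithGrantOption": ["SELECT", "DESCRIBE"],
    }


params = cross_account_table_grant("111122223333", "444455556666",
                                   "sales_domain", "orders_daily")
# With boto3 (not run here): boto3.client("lakeformation").grant_permissions(**params)
print(params["Principal"])  # {'DataLakePrincipalIdentifier': '444455556666'}
```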

It is worth noting that GoDaddy no longer uses this implementation. According to the company’s latest update on the evolution of its data platform, “the team encountered fragmented experiences, disconnected systems, and a large amount of technical debt.”

Note: Instead of fragmented experiences, Atlan is designed to provide a consistent experience for everyone on your team who uses data products, which makes it the right tool if you’re implementing a data mesh in your organization. It does so by embedding data mesh fundamentals in both its backend architecture and its UI.

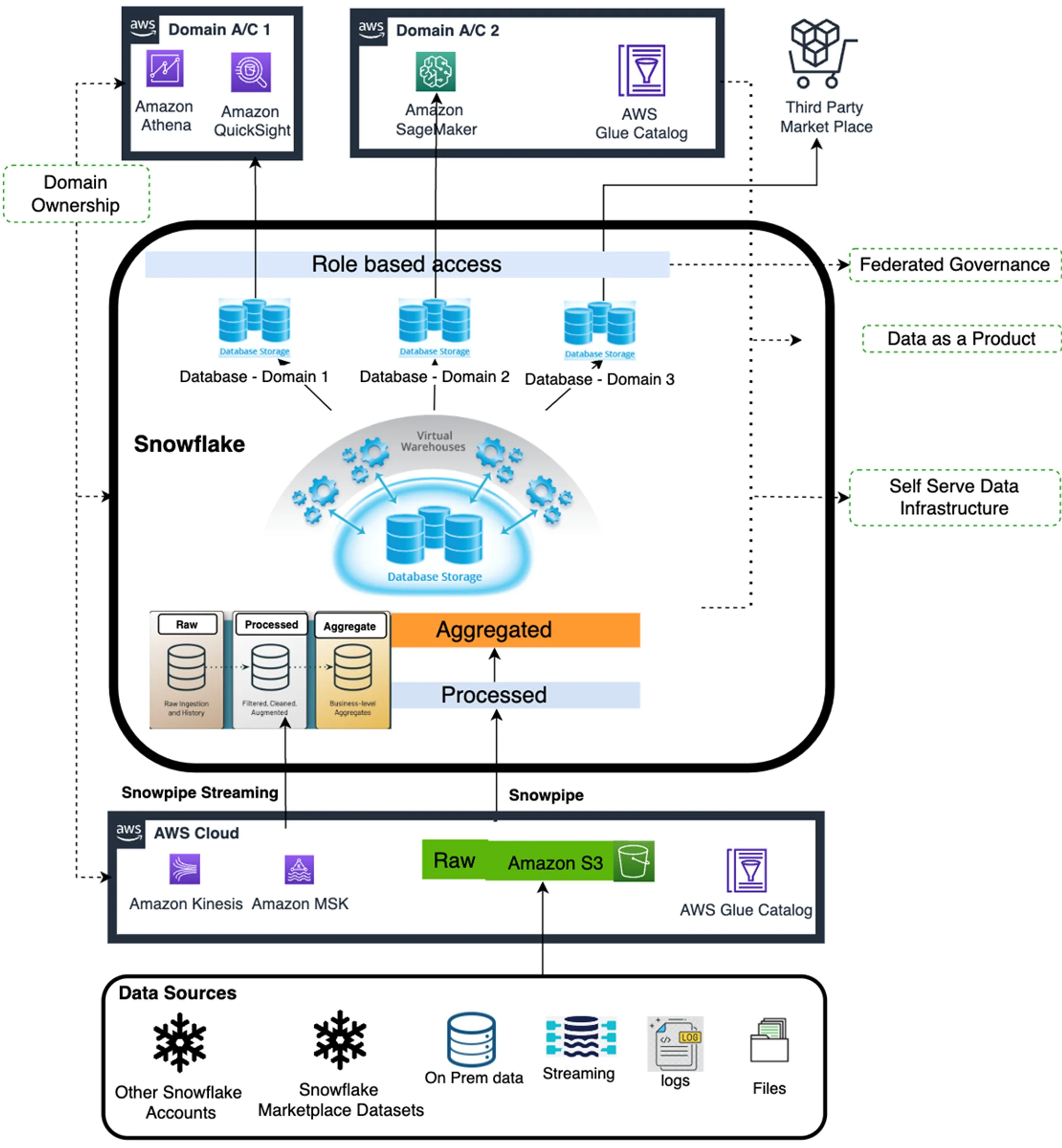

Another data mesh reference architecture uses Snowflake as the storage and compute layer for the data products. The data pipeline populates three layers: raw, processed, and aggregated. In Snowflake, domains are separated at the database level; in AWS, they are separated at the account level. So, a team working in Account 1 might use Athena and QuickSight to consume data, while a team in Account 2 might use SageMaker to do the same. Federated data governance across the AWS and Snowflake accounts combines AWS IAM with Snowflake’s role-based access control (RBAC).

Reference architecture of Snowflake-centric data mesh implementation on AWS - Source: AWS Blog.
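As an illustration of the Snowflake side of this federated governance, the sketch below generates RBAC `GRANT` statements that give a consumer role read access to one domain database. It assumes, hypothetically, that each layer (raw, processed, aggregated) is a schema in the domain database and that only the aggregated layer is exposed to consumers. Identifiers are interpolated without quoting, so they must come from a trusted source.

```python
def domain_consumer_grants(domain_db: str, consumer_role: str,
                           exposed_schemas=("AGGREGATED",)) -> list:
    """Snowflake GRANT statements giving a consumer role read access to a domain database."""
    stmts = [f"GRANT USAGE ON DATABASE {domain_db} TO ROLE {consumer_role};"]
    for schema in exposed_schemas:
        fq = f"{domain_db}.{schema}"
        stmts += [
            f"GRANT USAGE ON SCHEMA {fq} TO ROLE {consumer_role};",
            f"GRANT SELECT ON ALL TABLES IN SCHEMA {fq} TO ROLE {consumer_role};",
            # Also covers tables the producer pipeline creates later.
            f"GRANT SELECT ON FUTURE TABLES IN SCHEMA {fq} TO ROLE {consumer_role};",
        ]
    return stmts


for stmt in domain_consumer_grants("SALES_DB", "MARKETING_ANALYST"):
    print(stmt)
```

Raw and processed schemas stay private to the producing domain, which keeps the aggregated layer as the product’s public interface.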

In this architecture, data products are discovered by searching the domain databases in Snowflake. Technical metadata helps here, but there is scope for making data products more “discoverable.” This is where a metadata-driven approach comes into play, which we’ll discuss in the next section.

Leveraging Metadata in a Data Mesh

As you may have gathered, a data mesh rests on a solid metadata foundation that must be both technical and business-oriented.

Metadata is at the heart of making data “discoverable, addressable, trustworthy, and self-describing.” If the implementation of a data mesh leads to fragmented experiences and disconnected systems, the core purpose is not attained.

Even if technical aspects such as a schema registry, technical metadata alignment, and data type normalization are taken care of, much else still needs to be in place: active and seamless collaboration, a consistent experience for discovering data products, easy-to-use and easy-to-implement data access controls, and so on. Doing this requires a system that activates your metadata and puts it to work for you. Atlan’s metadata activation platform already does that for you.

The Atlan Mesh home page is designed with data mesh principles embedded in the foundation. Whether you want to search, discover, and use your data products by domains, domain owners, criticality, sensitivity, freshness, recent activity, etc., Atlan allows you to do that easily with the mesh hierarchy built in. You can also navigate to the underlying assets and the lineage graph for any data product.
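To show what faceted discovery means mechanically, here is a toy in-memory version. It is not Atlan’s API: the `ProductRecord` fields simply mirror the facets listed above (domain, owner, criticality, sensitivity, freshness), and the sample products are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ProductRecord:
    name: str
    domain: str
    owner: str
    criticality: str        # e.g. "high", "medium", "low"
    sensitivity: str        # e.g. "pii", "internal", "public"
    last_updated: datetime


def search(products, now, max_age=None, **facets):
    """Return names of products matching every facet exactly and, optionally, a freshness bound."""
    hits = []
    for p in products:
        if any(getattr(p, key) != value for key, value in facets.items()):
            continue
        if max_age is not None and now - p.last_updated > max_age:
            continue
        hits.append(p.name)
    return hits


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
catalog = [
    ProductRecord("orders_daily", "sales", "ana", "high", "internal", now - timedelta(hours=3)),
    ProductRecord("customer_360", "marketing", "raj", "high", "pii", now - timedelta(days=2)),
    ProductRecord("web_sessions", "marketing", "raj", "low", "internal", now - timedelta(hours=1)),
]
print(search(catalog, now, domain="marketing"))  # ['customer_360', 'web_sessions']
print(search(catalog, now, max_age=timedelta(days=1), criticality="high"))  # ['orders_daily']
```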

One key element holding the mesh together is the data contract. Atlan ensures that data contracts defined in YAML, JSON, or any other file format flow into the system and are linked to the relevant data assets and products for you to investigate. Atlan Mesh also lets you work with data product metadata stored in Excel; your edits in Excel are automatically carried forward to Atlan. Lastly, Atlan Mesh integrates with Microsoft Teams to provide a fully collaborative experience for your team. There are many more features, such as contract enforcement as part of CI/CD and bringing your own contract.
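The following sketch shows the kind of check a CI/CD step could run to enforce a data contract. The JSON contract format, the `check_contract` helper, and the sample values are hypothetical (real contract specifications, including Atlan’s, may look different). The same freshness check could also run on a schedule, for example in a Lambda function triggered by EventBridge, as in the GoDaddy design described earlier.

```python
import json
from datetime import datetime, timedelta, timezone

# Hypothetical contract format for illustration only.
CONTRACT_JSON = """
{
  "dataset": "orders_daily",
  "columns": {"order_id": "string", "amount": "decimal", "order_ts": "timestamp"},
  "freshness_hours": 24
}
"""


def check_contract(contract: dict, observed_columns: dict,
                   last_loaded: datetime, now: datetime) -> list:
    """Return a list of violations; an empty list means the contract holds."""
    violations = []
    for col, expected_type in contract["columns"].items():
        actual = observed_columns.get(col)
        if actual is None:
            violations.append(f"missing column: {col}")
        elif actual != expected_type:
            violations.append(f"type mismatch on {col}: expected {expected_type}, got {actual}")
    if now - last_loaded > timedelta(hours=contract["freshness_hours"]):
        violations.append("freshness SLO breached")
    return violations


contract = json.loads(CONTRACT_JSON)
now = datetime(2024, 6, 1, 12, tzinfo=timezone.utc)
problems = check_contract(
    contract,
    observed_columns={"order_id": "string", "amount": "float"},
    last_loaded=now - timedelta(hours=30),
    now=now,
)
for problem in problems:
    print(problem)
# In a CI job, a non-empty list would typically fail the build (e.g. exit with status 1).
```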


Summary

In this article, we discussed storage, compute, and governance patterns for producers and consumers of data products in a data mesh on AWS. We also covered key lessons from some of the early data mesh implementations on AWS. Finally, we discussed why leveraging metadata is crucial to a successful data mesh implementation.

Use the following resources to learn more about implementing data mesh on AWS:

- Laboratory data mesh guidance on AWS

- Building a customer 360 data product in a data mesh on AWS

- Data mesh architecture for healthcare & life sciences on AWS

- Implementing a Snowflake-centric data mesh on AWS for scalability and autonomy

- Building data mesh architectures on AWS

AWS Data Mesh: Related reads

- What is Data Mesh?: Examples, Case Studies, and Use Cases

- Gartner on Data Mesh: The Debate, Challenges, and the Future of Data Architecture

- Forrester on Data Mesh: Approach, Characteristics, Tooling Capabilities, Use Cases, and the Future

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Data Mesh Catalog: Manage Federated Domains, Curate Data Products, and Unlock Your Data Mesh

- Data Mesh Architecture: Core Principles, Components, and Why You Need It?

- Data Mesh Principles — 4 Core Pillars & Logical Architecture

- Data Mesh Setup and Implementation - An Ultimate Guide

- How to implement data mesh from a data governance perspective

- Atlan Activate: Bringing Data Mesh to Life

- How Autodesk Activates Their Data Mesh with Snowflake and Atlan

- Journey to Data Mesh: How Brainly Transformed its Data & Analytics Strategy
