Data Contracts Explained: Key characteristics, tools, implementation & best practices for 2026

Why are data contracts important?

According to dbt, the ability to define and validate data contracts is essential for cross-team collaboration. Gartner calls them an “increasingly popular way to manage, deliver, and govern data products.”

Just as business contracts formalize expectations between suppliers and consumers of a business product, data contracts set clear, enforceable rules for data exchange.

With data contracts, you can:

- Prevent downstream breakages by ensuring that schema modifications follow rules and expectations

- Shift responsibility left, since data quality is checked as soon as data products are created or updated

- Improve data reliability and trust through clearly defined structures, semantics, ownership, and quality checks

- Enable and scale a distributed data architecture

- Facilitate governance and automation with embedded validation rules, SLAs, and metadata feeding into automated pipelines

- Eliminate ambiguity and misinterpretations by documenting expectations upfront

- Set up a feedback culture between data producers and consumers to foster a collaborative, rather than a chaotic, environment

Also, read → dbt Data Contracts: Quick Primer With Notes on How to Enforce

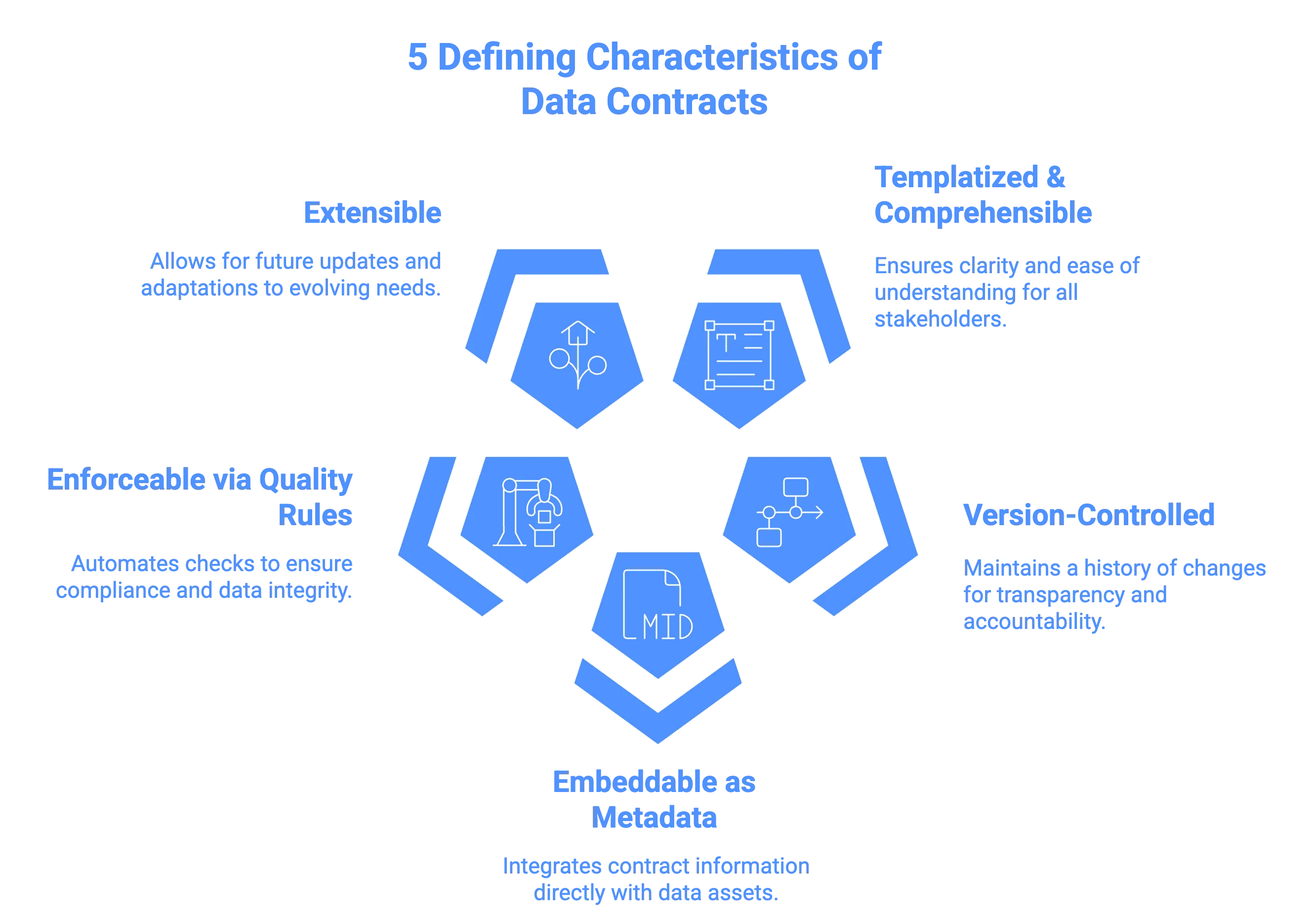

What are the 5 defining characteristics of a trustworthy data contract?

For a data contract to help build trust in your data, it should be:

- Templatized & comprehensible: Use a consistent, easy‑to‑understand format that all stakeholders can quickly interpret.

- Version‑controlled: Maintain histories and changes so that producers and consumers always know the active agreement.

- Embeddable as metadata: Include contract information within the asset’s metadata so rules, ownership, and usage context travel with the data.

- Enforceable via quality rules: Automate checks and validations (schema conformity, freshness, completeness) that enforce the contract.

- Extensible: Define processes for adding new specifications, releasing new versions, and comparing changes over time.

Essential characteristics of a trustworthy data contract. Source: Atlan.

These characteristics help ensure data contracts deliver clarity, governance, and actionable controls in distributed data ecosystems.

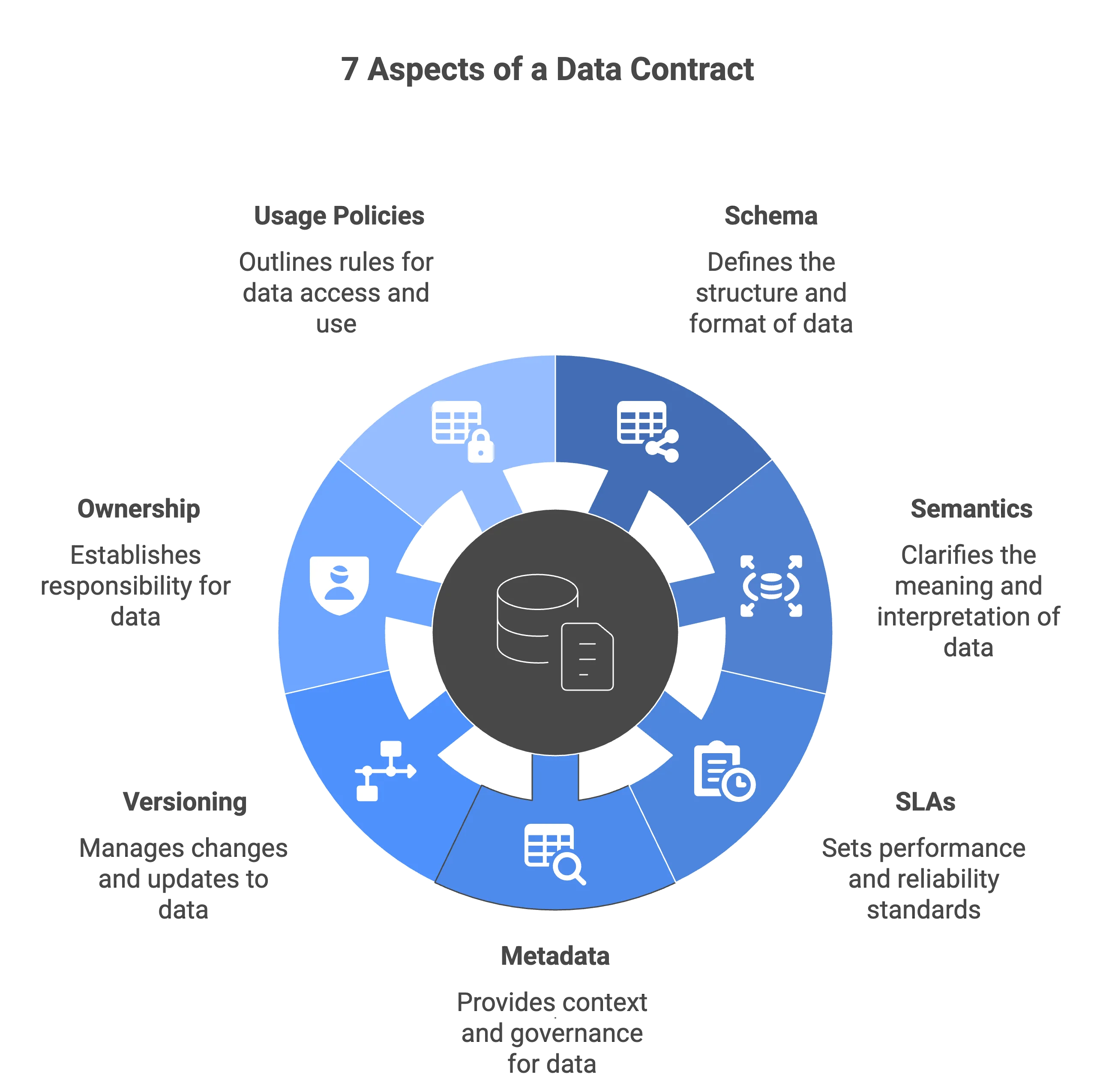

What are the key aspects of a data contract?

In addition to general agreements about intended use, ownership, and provenance, data contracts include agreements about:

- Schema

- Semantics

- Service level agreements (SLAs)

- Metadata (data governance)

- Versioning and change management

- Ownership and stewardship details

- Usage policies and access protocols

Key aspects of a data contract. Source: Atlan.

1. Schema

Schema defines the explicit field names, data types, formats, and structure of the data product.

Because data sources and business requirements evolve, schemas may change, for example by replacing a numeric identifier with a UUID or removing dormant columns.

An example of JSON schema for a business entity called ‘Person’. Source: Atlan.
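As a minimal sketch of how such a schema can be expressed and checked in code, the Python below declares a hypothetical 'Person' schema and validates records against it. The field names, the `PERSON_SCHEMA` structure, and the `validate_record` helper are all assumptions made for illustration; they are not taken from the original figure or from any particular library.

```python
# Hypothetical 'Person' schema: field name -> (expected Python type, required?)
PERSON_SCHEMA = {
    "id": (str, True),
    "first_name": (str, True),
    "last_name": (str, True),
    "age": (int, False),
}

def validate_record(record: dict, schema: dict) -> list[str]:
    """Return a list of human-readable schema violations (empty if none)."""
    errors = []
    for field, (expected_type, required) in schema.items():
        if field not in record:
            if required:
                errors.append(f"missing required field: {field}")
            continue
        if not isinstance(record[field], expected_type):
            errors.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Fields the schema does not declare are reported rather than silently accepted.
    for field in record:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors

good = {"id": "7f3c", "first_name": "Ada", "last_name": "Lovelace", "age": 36}
bad = {"id": 42, "first_name": "Ada", "nickname": "AL"}

print(validate_record(good, PERSON_SCHEMA))  # []
for problem in validate_record(bad, PERSON_SCHEMA):
    print(problem)
```

In a real pipeline the same idea is usually delegated to a schema language such as JSON Schema, Avro, or Protobuf instead of hand-written checks.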

2. Semantics

Semantics capture the business rules and meaning behind the data, such as:

- How entities transition between states

- How entities relate to one another

- How entities meet conditional business logic (e.g., a “fulfillment date” must not precede an “order date”)

- How entities deviate from normal
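The conditional rule mentioned above (a fulfillment date must not precede an order date) can be sketched as a plain Python check. The record layout and the `fulfillment_before_order` helper are hypothetical and only illustrate the shape of such a rule.

```python
from datetime import date

def fulfillment_before_order(orders):
    """Return the ids of orders whose fulfillment date precedes the order date."""
    violations = []
    for order in orders:
        fulfilled = order.get("fulfillment_date")
        # Unfulfilled orders have no fulfillment date yet and are skipped.
        if fulfilled is not None and fulfilled < order["order_date"]:
            violations.append(order["order_id"])
    return violations

orders = [
    {"order_id": "A1", "order_date": date(2024, 5, 1), "fulfillment_date": date(2024, 5, 3)},
    {"order_id": "A2", "order_date": date(2024, 5, 2), "fulfillment_date": date(2024, 4, 30)},
    {"order_id": "A3", "order_date": date(2024, 5, 4), "fulfillment_date": None},
]
print(fulfillment_before_order(orders))  # ['A2']
```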

3. Service Level Agreements (SLAs)

Service level agreements (SLAs) set commitments around data availability, latency, completeness, and performance.

They can specify when new data should appear, maximum delay in real‑time streams, or metrics like MTBF (Mean Time Between Failures) and MTTR (Mean Time To Recovery).
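To make the two metrics concrete, here is a small sketch that derives MTTR and MTBF from a list of incidents. It assumes MTTR is total downtime divided by the number of incidents and MTBF is total uptime divided by the number of incidents over a fixed observation window; the `mttr_and_mtbf` helper and the sample incidents are invented for illustration.

```python
from datetime import datetime, timedelta

def mttr_and_mtbf(incidents, window_start, window_end):
    """incidents: (started_at, resolved_at) pairs inside the observation window."""
    if not incidents:
        return None, None
    downtime = sum((resolved - started for started, resolved in incidents), timedelta())
    mttr = downtime / len(incidents)
    uptime = (window_end - window_start) - downtime
    mtbf = uptime / len(incidents)
    return mttr, mtbf

incidents = [
    (datetime(2024, 1, 5, 8, 0), datetime(2024, 1, 5, 10, 0)),    # 2 hours
    (datetime(2024, 1, 20, 22, 0), datetime(2024, 1, 21, 2, 0)),  # 4 hours
]
mttr, mtbf = mttr_and_mtbf(incidents, datetime(2024, 1, 1), datetime(2024, 1, 31))
print(mttr)  # 3:00:00
print(mtbf)  # 14 days, 21:00:00
```

A contract could then state thresholds for these values, and a scheduled check could compare the computed numbers against them.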

4. Metadata (data governance)

Governance metadata defines access rights, classification, lineage, taxonomy, version information, and contact points. It enables users to understand:

- User roles that can access a data product

- How long access to a data product remains valid

- Names of the columns with restricted access/visibility

- Names of columns with sensitive information

- How sensitive data is represented within the dataset

- Other metadata — data contract version, name and contact of data owners

5. Versioning and change management

This element ensures that changes to schema, semantics, or SLAs are tracked, communicated, and managed to avoid breaking dependencies and maintain backward compatibility.

6. Ownership and stewardship

Ownership and stewardship assign responsible parties for both producing and consuming the data product. The data contract can define roles, responsibilities, support contacts, and escalation paths.

7. Usage policies and access protocols

Usage rules specify how data may be consumed, shared, or transformed. The data contract can also define permissions and restrictions (e.g., masking, anonymization).

It can also outline internal and external regulatory compliance requirements.

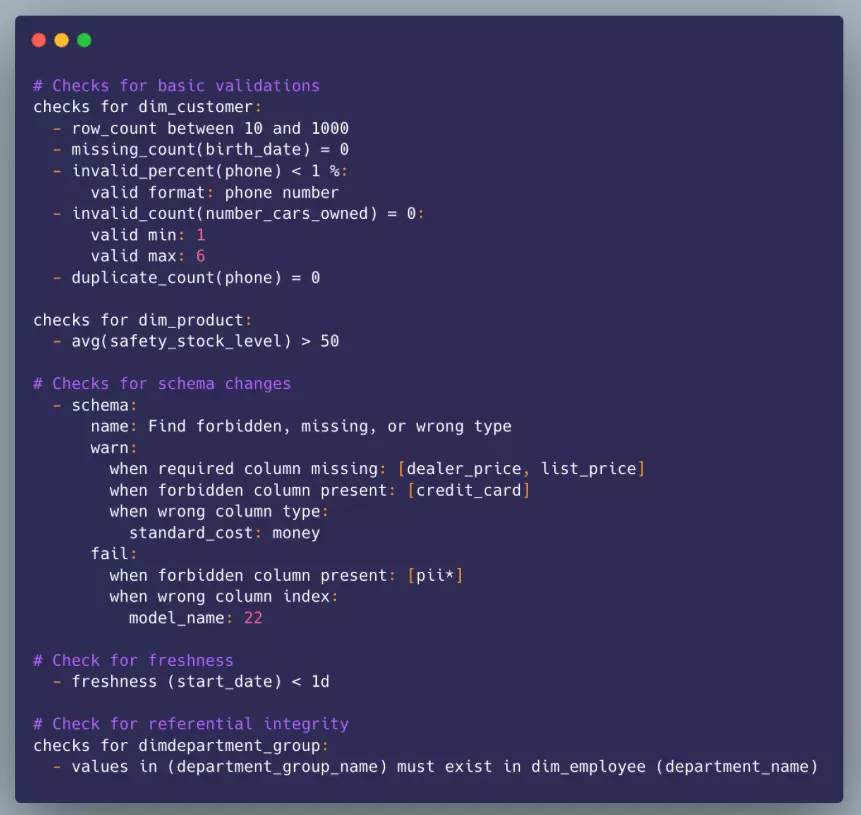

What does a data contract look like?

Here’s an example of a data contract defined in YAML format, covering schema, semantics, SLA and governance-related checks.

We created this contract using Soda.io, a popular data quality framework.

An example of a data contract created using Soda.io. Source: Atlan.

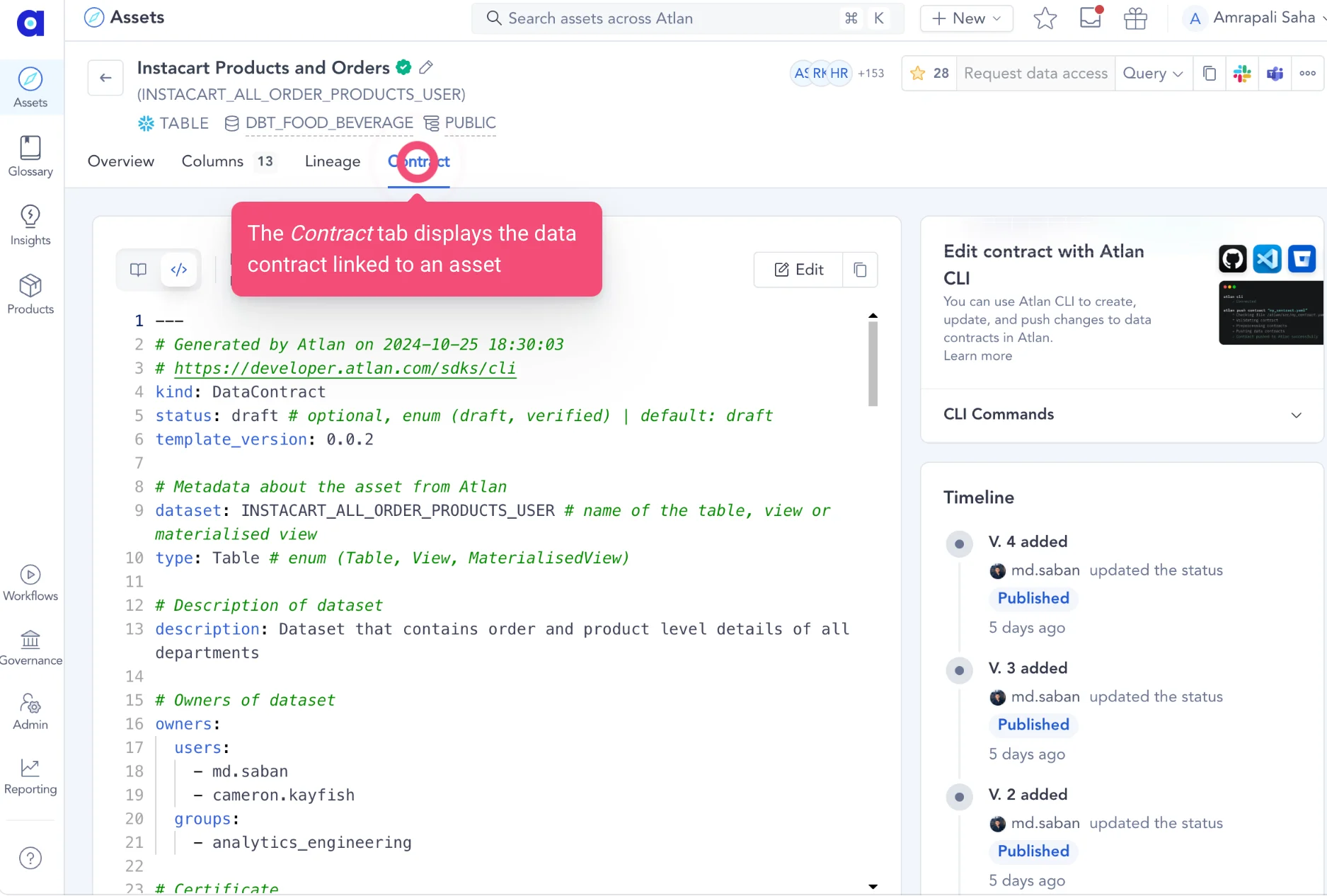

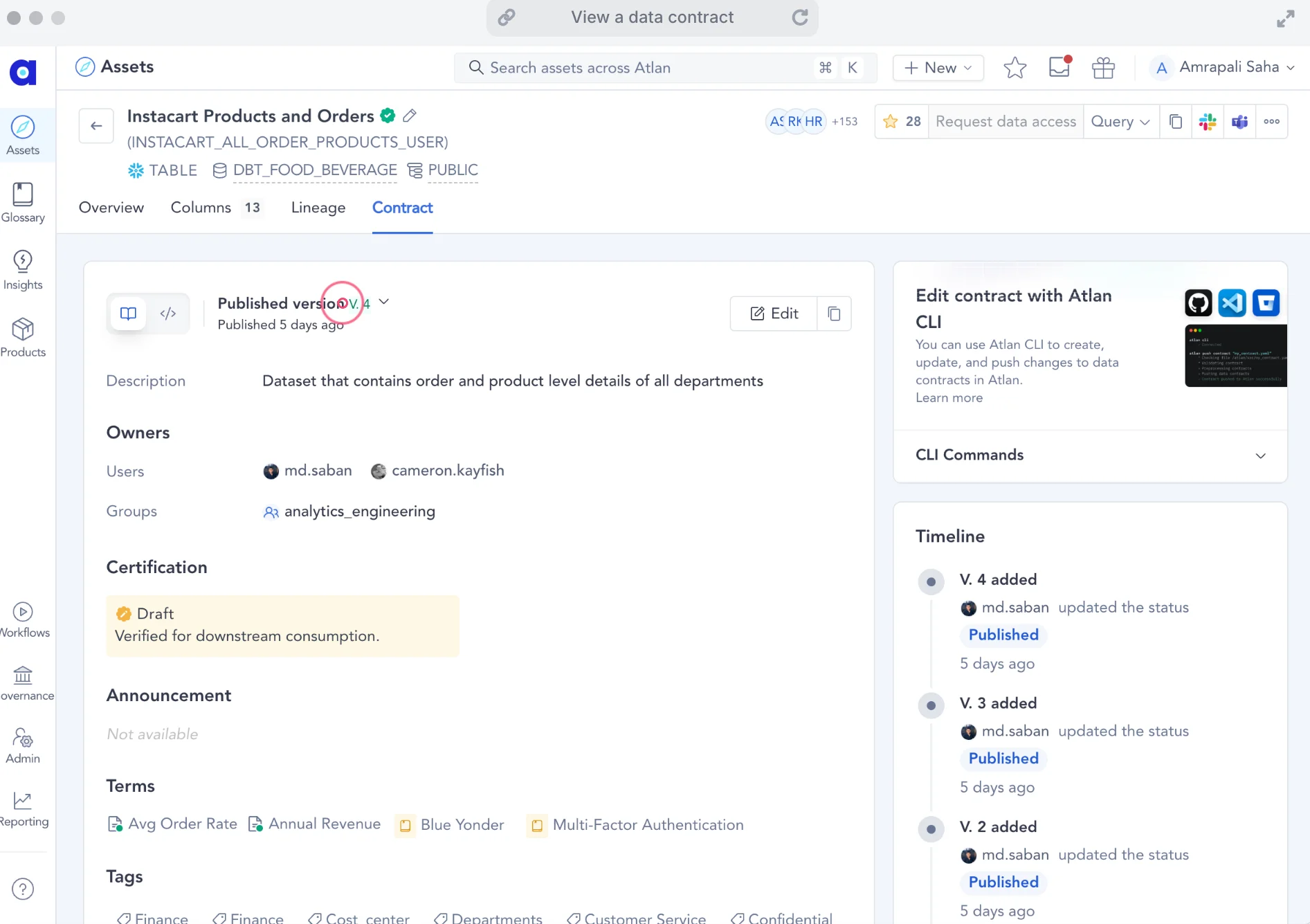

If you use Atlan to create your data contracts, here’s what that would look like. It displays essential information such as contract status, version history, ownership, description, and tags, among others.

Data contract example in Atlan. Source: Atlan.

In Atlan, you can also go through the contract details in a simplified, read-only view.

A simplified, read-only view of data contracts in Atlan. Source: Atlan.

What are the top use cases for data contracts?

- Real‑time data sharing: Data contracts ensure schema consistency, latency bounds, and secure access so that you can make rapid, accurate decisions.

- Cross‑team data sharing (across geographies): Contracts define quality checks, privacy controls, interoperability rules, and compliance requirements so that global teams can trust shared information.

- Supplier telemetry in manufacturing: Contracts standardize supplier‑provided inventory and logistics data, enabling end‑to‑end supply‑chain automation and traceability.

- Training datasets for GenAI applications: Data contracts enforce schema integrity, labeling standards, and lineage tracking, ensuring reliable, unbiased inputs for generative AI models.

How can you implement data contracts in 9 steps?

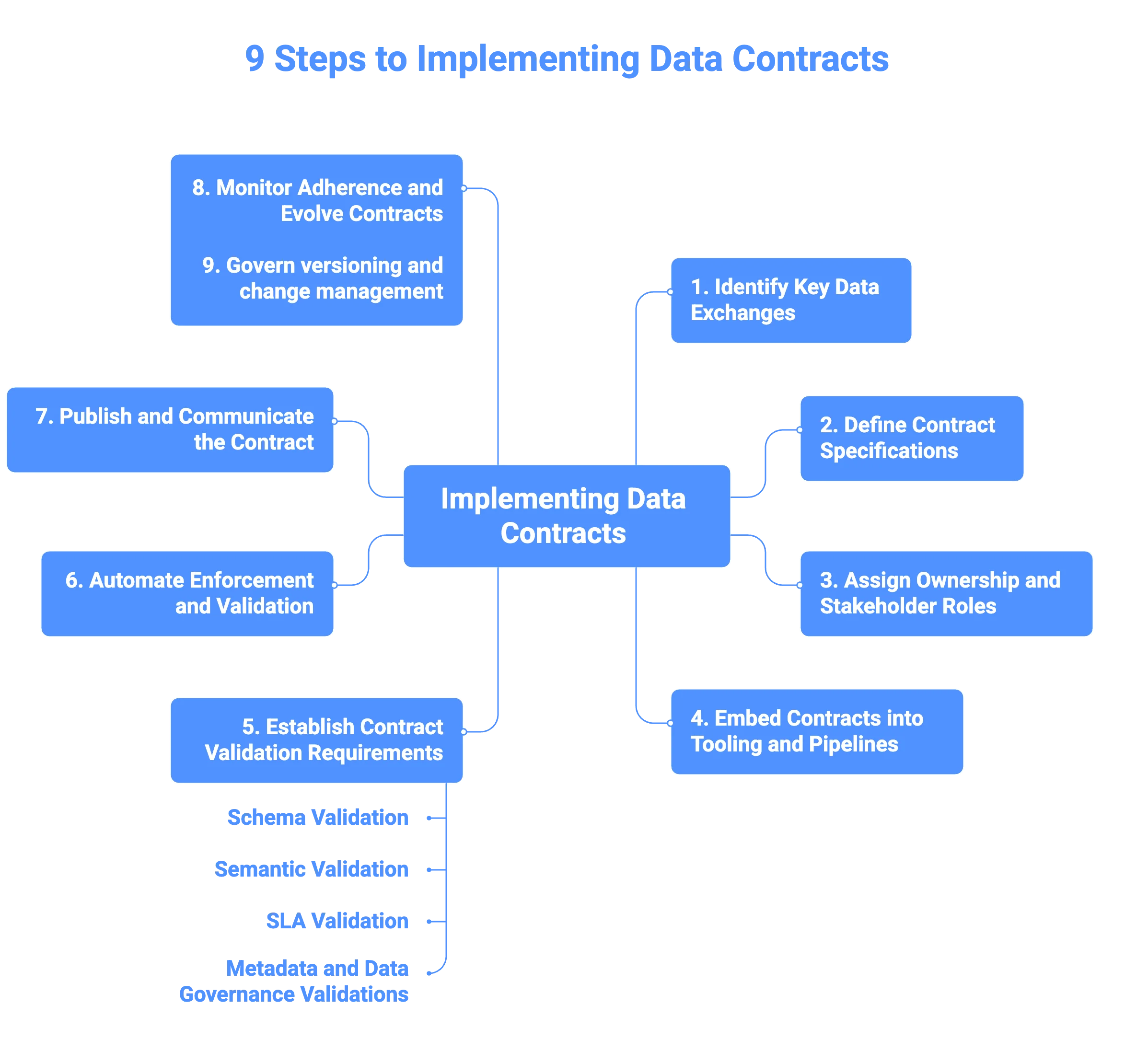

To roll out effective data contracts, follow these structured steps to establish clarity, automate enforcement, and align teams:

9 steps to implement data contracts effectively. Source: Atlan.

Step 1: Identify key data exchanges

Map critical data flows between producers and consumers. Focus on high‑value datasets, domains, or systems, and look for high‑impact use cases where unexpected schema changes disrupt key data pipelines.

Step 2: Define the contract specifications

Specify:

- Schema (fields, data types, formats)

- Semantics (business logic)

- SLAs (freshness, completeness, availability)

- Metadata (status, classification, tags)

- Versioning

- Usage rules

Step 3: Assign ownership and stakeholder roles

Establish clear ownership: who produces the data, who consumes it, and who monitors the contract.

Step 4: Embed contracts into tooling and pipelines

Store contracts in version‑controlled formats (e.g., YAML, JSON Schema), tie them into CI/CD workflows, and integrate them into data tooling.
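One way to picture the CI/CD tie-in is a small lint step that runs on every change to a contract file. The sketch below assumes contracts are stored as JSON and that each must contain the sections listed in Step 2; the key names in `REQUIRED_SECTIONS` and the `missing_sections` helper are hypothetical.

```python
import json

# Sections taken from the specification list in Step 2; the key names are assumptions.
REQUIRED_SECTIONS = ("schema", "semantics", "sla", "metadata", "version", "usage")

def missing_sections(contract_text: str) -> list[str]:
    """Parse a JSON contract and return the required sections it lacks."""
    contract = json.loads(contract_text)
    return [name for name in REQUIRED_SECTIONS if name not in contract]

draft = json.dumps({
    "schema": {"order_id": "string"},
    "sla": {"freshness_hours": 24},
    "version": "1.0.0",
})
problems = missing_sections(draft)
print(problems)  # ['semantics', 'metadata', 'usage']
```

A CI job could read the contract file from the repository, call a function like this, and fail the build when the returned list is non-empty.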

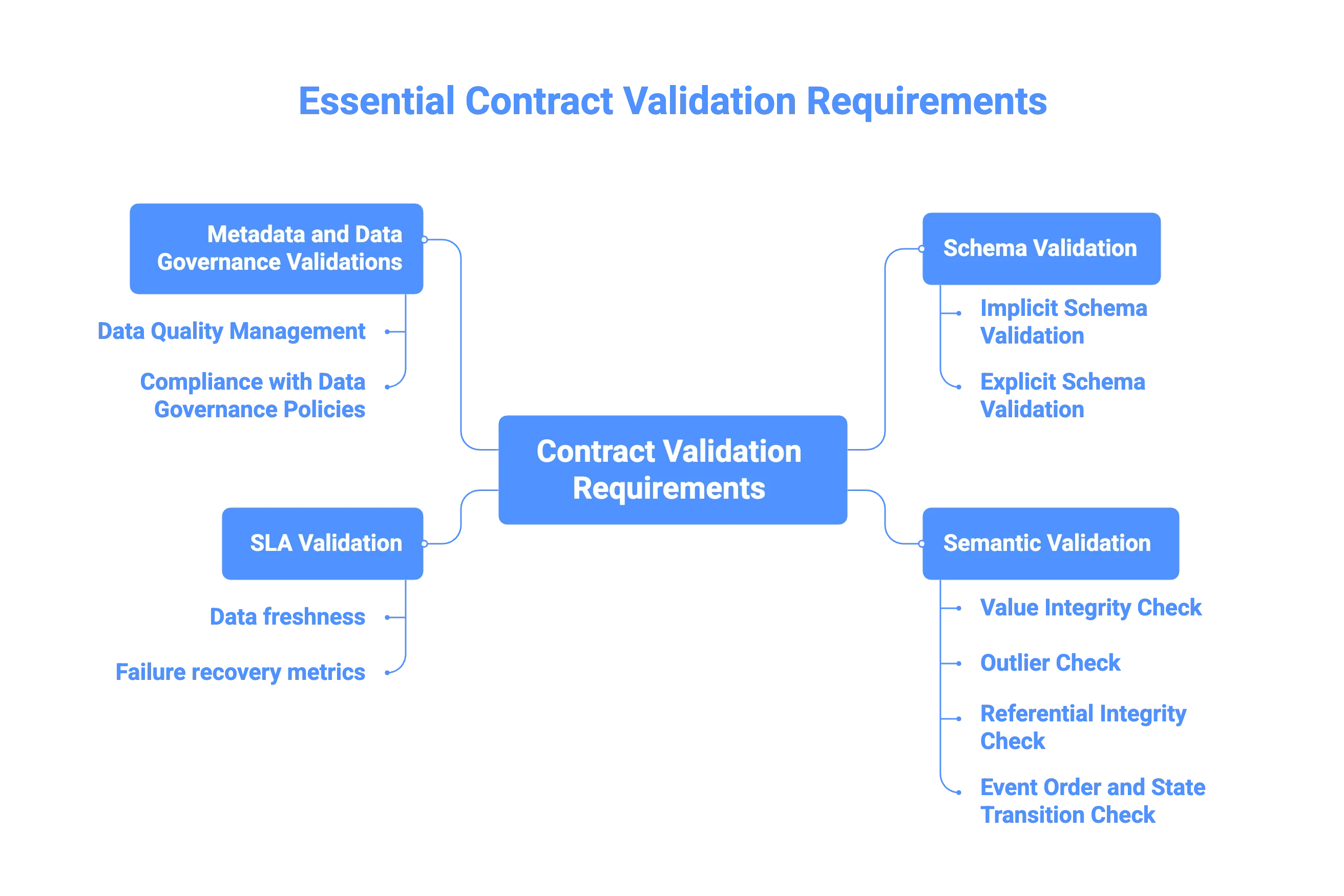

Step 5: Establish contract validation requirements

Each agreement within a data contract (schema, semantics, SLA, governance) must be validated for the contract to be properly implemented.

Validating schema, semantics, SLA and governance for proper data contract implementation. Source: Atlan.

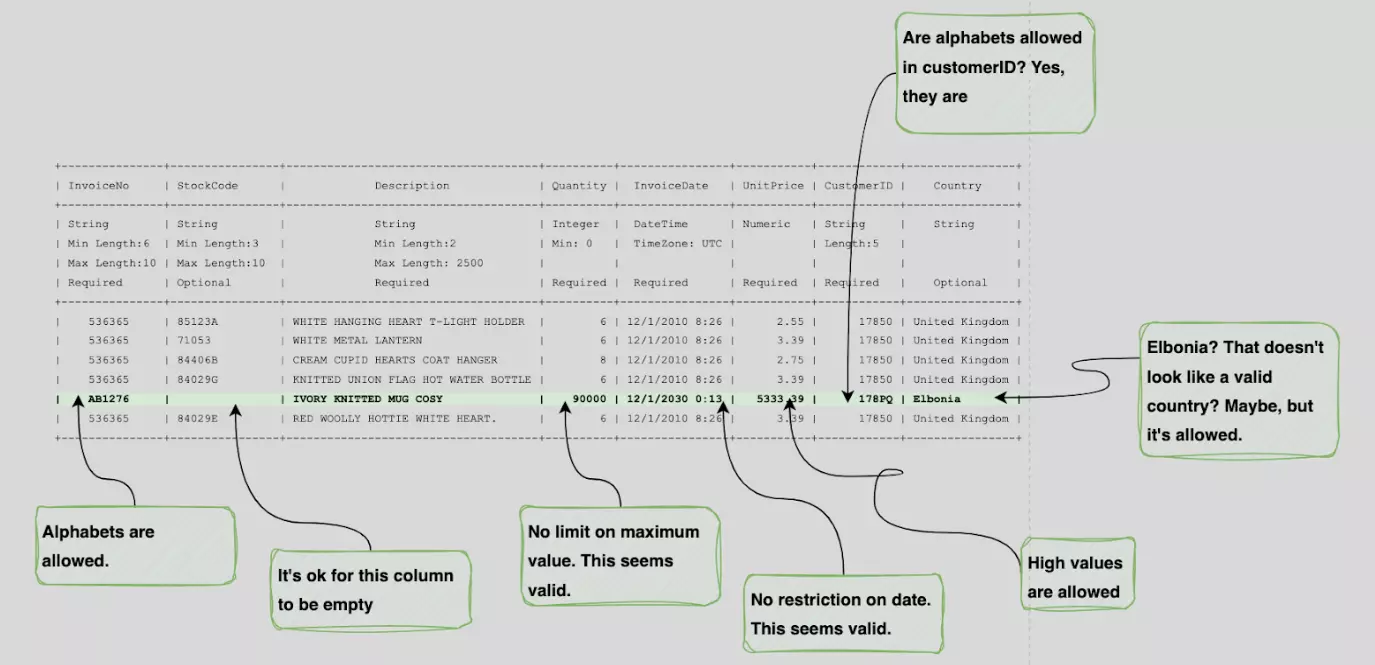

Schema validation

Here’s an example. A raw invoice dataset from an online store without schema info can leave analysts guessing about data types, formats, and valid ranges.

The questions an analyst might have about each record in a dataset. Source: Atlan.

However, once schema rules are attached, the analyst can answer those questions directly, as shown in the image below.

How schema validation rules can address the questions an analyst might have about records in a dataset. Source: Atlan.

Schema validations can be implicit or explicit:

- Implicit validation: Formats such as Protobuf, Avro, and Parquet, as well as relational databases, enforce schema rules by default.

- Explicit validation: Schema-less formats like JSON or CSV require external validation tools. Most modern data quality and observability frameworks support this.
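For the explicit case, the following sketch validates a CSV extract with the Python standard library only. The invoice column names, the date format, and the `validate_csv` helper are assumptions chosen to echo the invoice example above; a data quality framework would normally take the place of this hand-written code.

```python
import csv
import io
from datetime import datetime

# Hypothetical invoice columns and the parser each value must pass.
COLUMN_PARSERS = {
    "invoice_id": str,
    "quantity": int,
    "unit_price": float,
    "invoice_date": lambda s: datetime.strptime(s, "%Y-%m-%d").date(),
}

def validate_csv(text: str) -> list[str]:
    """Return one message per cell that fails its column's parser."""
    reader = csv.DictReader(io.StringIO(text))
    missing = set(COLUMN_PARSERS) - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]
    errors = []
    # Data rows start on line 2 because line 1 is the header.
    for line_no, row in enumerate(reader, start=2):
        for column, parse in COLUMN_PARSERS.items():
            try:
                parse(row[column])
            except (ValueError, TypeError):
                errors.append(f"line {line_no}: bad {column} value {row[column]!r}")
    return errors

sample = (
    "invoice_id,quantity,unit_price,invoice_date\n"
    "INV-1,2,9.99,2024-03-01\n"
    "INV-2,two,4.50,2024-03-02\n"
    "INV-3,1,3.00,03/04/2024\n"
)
for message in validate_csv(sample):
    print(message)
```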

Semantic validation

Semantic validations ensure that the data is logically valid and makes sense in the business domain.

Unlike schema validations, semantic validations cannot be added implicitly to data formats. They need to be explicitly enforced via a validation framework.

Semantic checks can be related to the following:

Value integrity check

Validate data against business rules to detect unacceptable, illogical values caused by misconfigured systems, bugs in the business logic, or even test data leaking into the production environment.

You can start with the prebuilt checks in popular data quality frameworks and then add more specific checks with custom code.

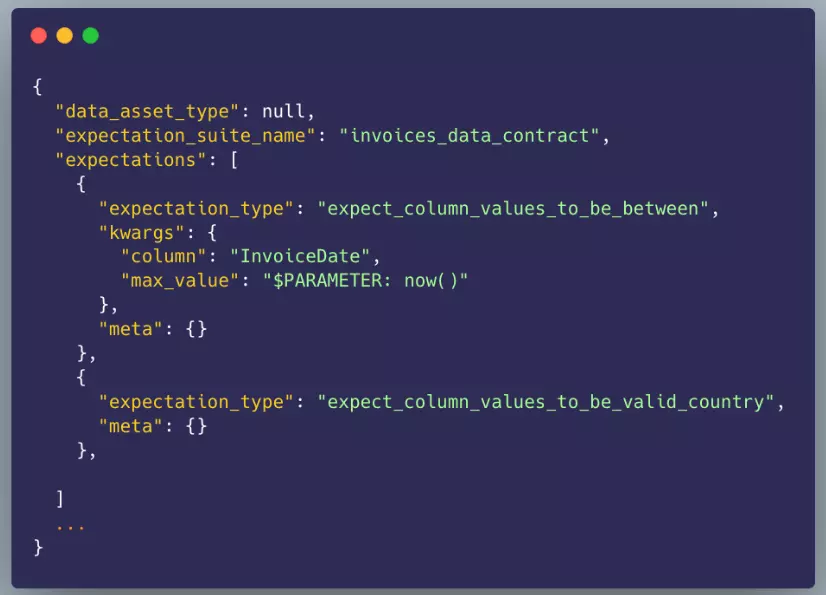

Here’s an example from Great Expectations that includes a predefined semantic check for country names based on GeoNames.

An example of a value integrity check in Great Expectations, which includes a predefined semantic check. Source: Atlan.

Outlier check

Crafting rules for outliers requires deep business context and understanding.

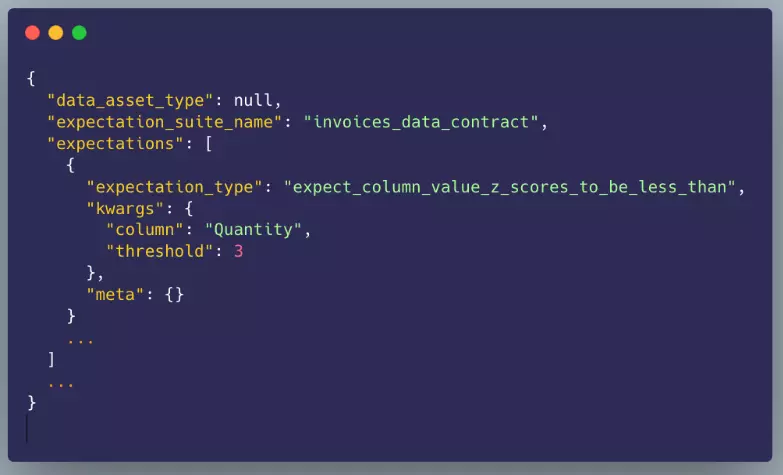

You can use statistical techniques such as IQR or Z-score to spot outliers. Here’s how a Z-score-based rule for outliers looks in Great Expectations.

An example of a Z-score-based rule in Great Expectations. Source: Atlan.
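Independently of any framework, the underlying Z-score rule is short enough to sketch in plain Python. This is not the Great Expectations API; the `zscore_outliers` helper, the sample data, and the threshold of 2.0 (chosen because the sample is small) are illustrative assumptions.

```python
from statistics import mean, pstdev

def zscore_outliers(values, threshold=3.0):
    """Return the values whose absolute Z-score exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical, so nothing can be an outlier
    return [v for v in values if abs(v - mu) / sigma > threshold]

daily_orders = [10, 12, 11, 13, 12, 11, 10, 95]
print(zscore_outliers(daily_orders, threshold=2.0))  # [95]
```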

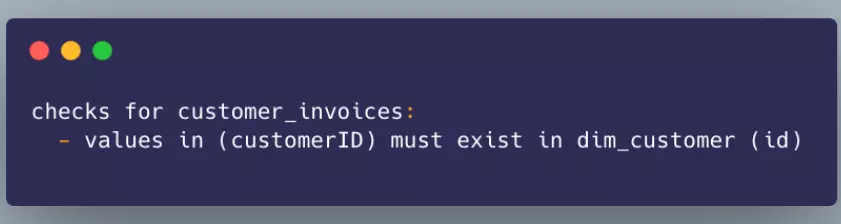

Referential integrity check

Poor referential integrity can mean missing or incomplete data or even bugs in business logic. Here is an example referential integrity check in Soda.io.

An example of a referential integrity check in Soda.io. Source: Atlan.
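The logic behind a referential integrity check can also be sketched without a framework: every foreign key in the child dataset should match a key in the parent dataset. The table contents and the `orphaned_keys` helper below are hypothetical, and this is plain Python, not Soda.io syntax.

```python
def orphaned_keys(child_rows, parent_rows, child_key, parent_key):
    """Return child key values that have no matching parent row."""
    parent_ids = {row[parent_key] for row in parent_rows}
    return sorted({row[child_key] for row in child_rows} - parent_ids)

customers = [{"id": 1}, {"id": 2}]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 3},  # no customer with id 3
    {"order_id": 12, "customer_id": 2},
]
print(orphaned_keys(orders, customers, "customer_id", "id"))  # [3]
```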

Event order and state transition check

Event order and state transition checks verify that the historical sequence of state transitions in a business process is logically valid.

Existing frameworks do not provide out-of-the-box support for such semantic checks. So, you have to create custom validation checks.
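A custom check of this kind can be fairly small. The sketch below assumes a hypothetical order lifecycle described by the `ALLOWED_NEXT` map and flags any consecutive pair of states that the map does not permit; the states, timestamps, and helper name are invented for illustration.

```python
# Hypothetical order lifecycle: each state maps to the states allowed to follow it.
ALLOWED_NEXT = {
    "created": {"paid", "cancelled"},
    "paid": {"shipped", "cancelled"},
    "shipped": {"delivered"},
    "delivered": set(),
    "cancelled": set(),
}

def invalid_transitions(events):
    """events: (timestamp, state) pairs for one entity; returns illegal (from, to) pairs."""
    ordered = sorted(events, key=lambda event: event[0])
    states = [state for _, state in ordered]
    return [
        (prev, curr)
        for prev, curr in zip(states, states[1:])
        if curr not in ALLOWED_NEXT.get(prev, set())
    ]

history = [
    ("2024-05-01T09:00", "created"),
    ("2024-05-01T09:05", "shipped"),    # skipped "paid"
    ("2024-05-02T14:00", "delivered"),
]
print(invalid_transitions(history))  # [('created', 'shipped')]
```

Such a function could be registered as a user-defined check in whichever validation framework the team already uses.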

SLA validation

Service level agreements (SLAs) in data contracts include:

- Data freshness: Downstream processing and analysis depend on the parent dataset being up-to-date. For example, reports on daily count of orders should only be run after the orders table is fully updated with the previous day’s data.

- Failure recovery metrics: Capture the producers’ commitments about data availability with metrics like MTTR. You can extract data from tools like PagerDuty or other incident management systems to measure and report incident metrics. Once this data is available in a dataset, any data quality framework can validate it against the contract.
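A freshness SLA can be sketched as a comparison between the last load time and a maximum allowed age. The `is_fresh` helper, the 24-hour limit, and the timestamps are assumptions for illustration; in practice the last load time would come from warehouse metadata or a pipeline log.

```python
from datetime import datetime, timedelta, timezone

def is_fresh(last_loaded_at, max_age, now):
    """True if the dataset was loaded within max_age of now."""
    return now - last_loaded_at <= max_age

# `now` is passed in explicitly so the check is deterministic and easy to test.
now = datetime(2024, 5, 2, 6, 0, tzinfo=timezone.utc)
on_time = datetime(2024, 5, 2, 1, 30, tzinfo=timezone.utc)
stale = datetime(2024, 4, 30, 23, 0, tzinfo=timezone.utc)

print(is_fresh(on_time, timedelta(hours=24), now))  # True
print(is_fresh(stale, timedelta(hours=24), now))    # False
```

A daily report job could call a check like this on the orders table and postpone its run while the result is False.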

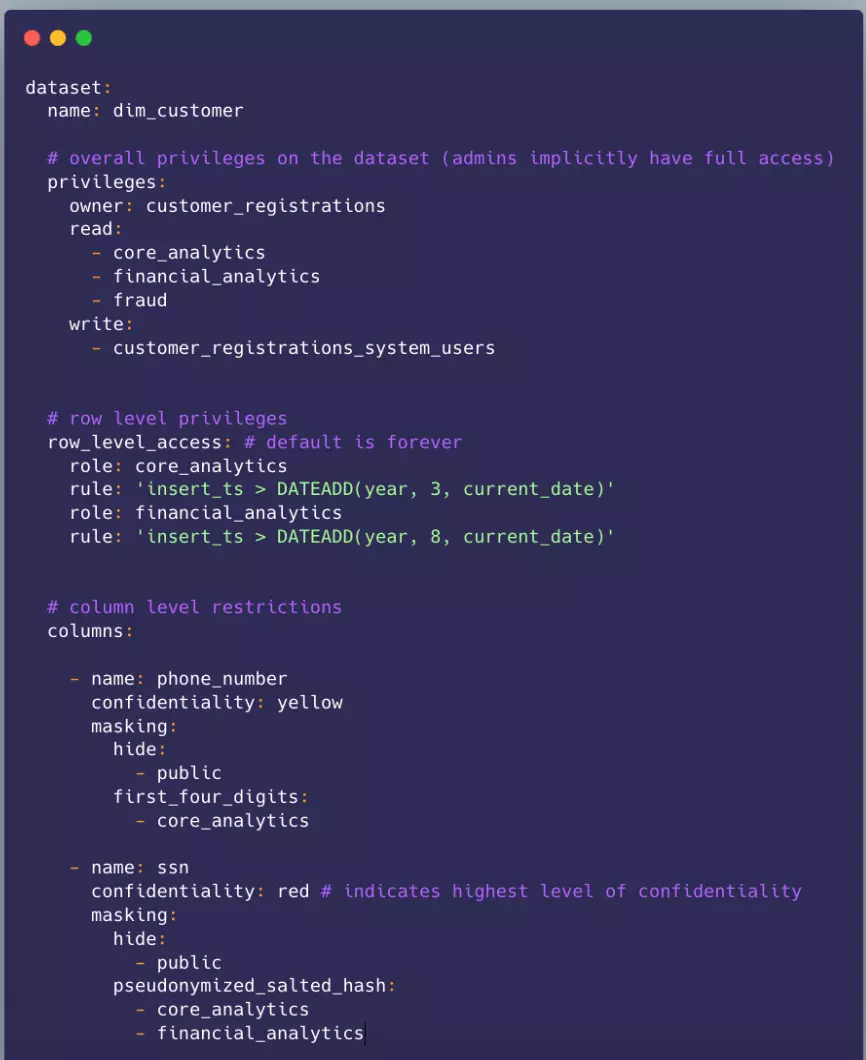

Metadata and data governance validations

There isn’t much off-the-shelf support for authoring or validating governance specifications in contracts. But it is straightforward enough to develop in-house frameworks to serve this purpose.

Here’s an example of the governance contract of a dataset in YAML format. This file lists the access privileges of a dataset, including table and row-level access policies. For sensitive columns, it details the masking policies applicable for each role.

An example of the data governance contract in YAML format. Source: Atlan.

Most modern data warehouses like Snowflake, Databricks, and BigQuery have extensive support for a rich information schema that can be queried (often using plain SQL) to validate the governance rules.

These rules can be implemented as custom, user-defined checks in existing frameworks like Soda.io or Great Expectations, both of which provide a well-documented process for adding such checks.
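As a rough sketch of such an in-house check, the code below compares the grants a contract expects with the grants actually observed. The role names, privileges, and the `grant_violations` helper are hypothetical, and the observed grants are hard-coded where a real check would query the warehouse's information schema.

```python
# Hypothetical contract excerpt: role -> privileges expected on the dataset.
EXPECTED_GRANTS = {
    "analyst": {"SELECT"},
    "engineer": {"SELECT", "INSERT"},
}

def grant_violations(expected, actual):
    """Compare expected and actual grants; return (role, privilege, problem) tuples."""
    problems = []
    for role in sorted(set(expected) | set(actual)):
        want = expected.get(role, set())
        have = actual.get(role, set())
        problems += [(role, p, "missing") for p in sorted(want - have)]
        problems += [(role, p, "unexpected") for p in sorted(have - want)]
    return problems

# In practice these rows would come from a query against the warehouse's
# information schema; they are hard-coded here to keep the sketch runnable.
actual_grants = {
    "analyst": {"SELECT", "DELETE"},
    "engineer": {"SELECT"},
}
for violation in grant_violations(EXPECTED_GRANTS, actual_grants):
    print(violation)
```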

Step 6: Automate enforcement and validation

Incorporate contract checks (schema validation, freshness tests, data‑quality rules) into your pipeline.

Step 7: Publish and communicate the contract

Make the contract discoverable to users via catalogs or portals, and educate teams to align expectations.

Step 8: Monitor adherence and evolve contracts

Track metrics: schema changes, SLA violations, access patterns, downstream failures. Use telemetry and feedback loops to evolve the contract through version upgrades, deprecations, and extensions.

Step 9: Govern versioning and change management

Apply version control to contracts, maintain backward‑compatibility rules, notify consumers of changes, and retire older versions when needed. Failure to manage change leads to downstream breakages.

What tools can you use to create data contracts?

Data contract tooling is still developing, and there is no universal standard for authoring, publishing, or validating contracts across all data systems.

Most organizations today stitch together schemas, CI/CD checks, and metadata tooling to operationalize contracts.

Several categories of tooling have emerged:

- Schema-based frameworks (JSON Schema, Protobuf, Avro, Schemata): Formal structures for defining field types, constraints, and evolution rules.

- Contract-centric libraries (Schemata, emerging Python/TypeScript libraries): Provide modeling, schema evolution support, and contract validation inside engineering workflows.

- Event streaming platforms (Kafka Schema Registry): Enforce schema compatibility and shape real-time data contracts across producers and consumers.

- CI/CD validation pipelines: GitHub/GitLab workflows increasingly act as contract “gatekeepers,” running automated checks before schema changes reach production.

- Metadata control planes like Atlan: Atlan strengthens contract workflows by linking schemas and contract metadata to owners, domains, lineage, policies, and downstream consumers.

Atlan for data contracts

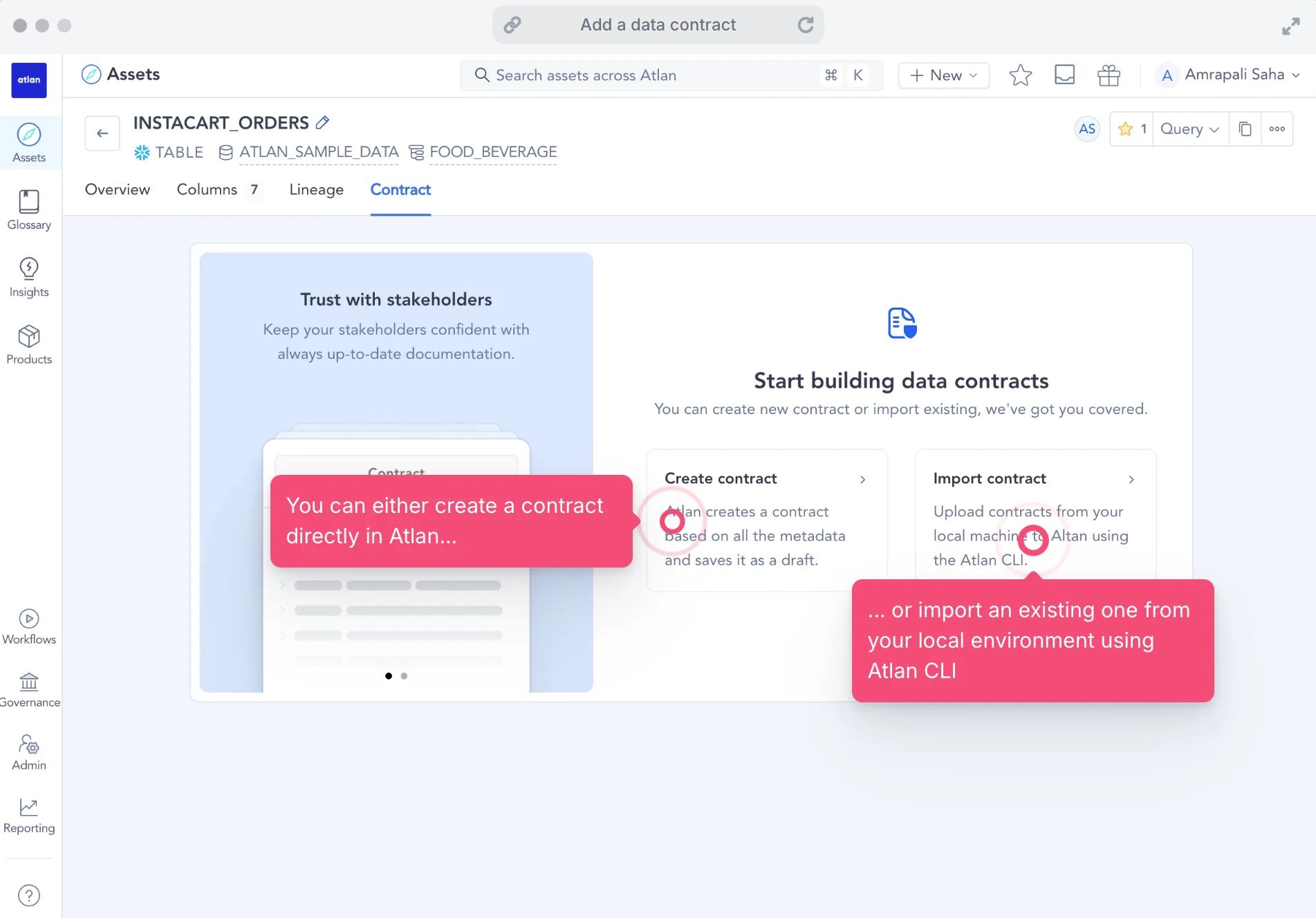

In a landscape where no universal framework exists, platforms like Atlan help unify and operationalize data contracts across diverse systems.

In Atlan, you can directly add a data contract to supported assets, such as:

- Tables

- Views

- Materialized views

- Output port assets of data products

- Custom metadata

Data contracts in Atlan. Source: Atlan.

If you have ever changed a data contract only to find out later that it broke a downstream table or dashboard, Atlan provides a GitHub Action that can help.

This gives teams helpful context on downstream applications, while ensuring that definitions, changes, and validations flow consistently across data and AI pipelines.

What are seven best practices to follow for developing and enforcing effective data contracts?

Below are the most important best practices to ensure your data contracts stay stable, flexible, and enforceable across your ecosystem.

1. Update data contracts as data products evolve

Data contracts only work when they evolve in lockstep with the data products they describe. As business logic shifts, teams reorganize, schemas change, and pipelines get re-architected, contracts must keep pace.

Otherwise, contracts become blockers.

2. Decouple data products from transactional data

Avoid the anti-pattern of exposing raw transactional schemas directly as data products. Doing so creates a fragile, leaky abstraction where even minor upstream schema tweaks break downstream guarantees.

Use higher-level, stable schemas—often implemented via the Outbox Pattern—so the contract exposes only what consumers need, not low-level operational details.

3. Avoid brittle contracts

Strict attribute lengths, tightly constrained enums, or hyper-specific formats may seem like good data quality controls, but they make schemas extremely fragile.

So, design contracts that balance quality and evolution. Focus on semantic guarantees and expected behaviors rather than rigid implementation details.

4. Avoid backward-incompatible changes

Removing fields, renaming attributes, or changing data types breaks downstream pipelines and violates consumer expectations.

Prefer additive, backward-compatible changes. When breaking changes are unavoidable, follow structured versioning and migration patterns.
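A simple compatibility check makes the distinction between additive and breaking changes concrete. The sketch below compares two hypothetical schema versions, each a mapping of field name to type name, and reports removed fields and type changes while ignoring added fields. The `breaking_changes` helper is an assumption for illustration; note that a rename shows up as a removal plus an addition.

```python
def breaking_changes(old_schema, new_schema):
    """Compare field -> type mappings and list changes that break consumers."""
    problems = []
    for field, old_type in old_schema.items():
        if field not in new_schema:
            problems.append(f"removed field: {field}")
        elif new_schema[field] != old_type:
            problems.append(f"type change on {field}: {old_type} -> {new_schema[field]}")
    # Fields that exist only in new_schema are additive and therefore not reported.
    return problems

v1 = {"order_id": "int", "amount": "decimal", "note": "string"}
v2 = {"order_id": "string", "amount": "decimal", "coupon_code": "string"}
for problem in breaking_changes(v1, v2):
    print(problem)
```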

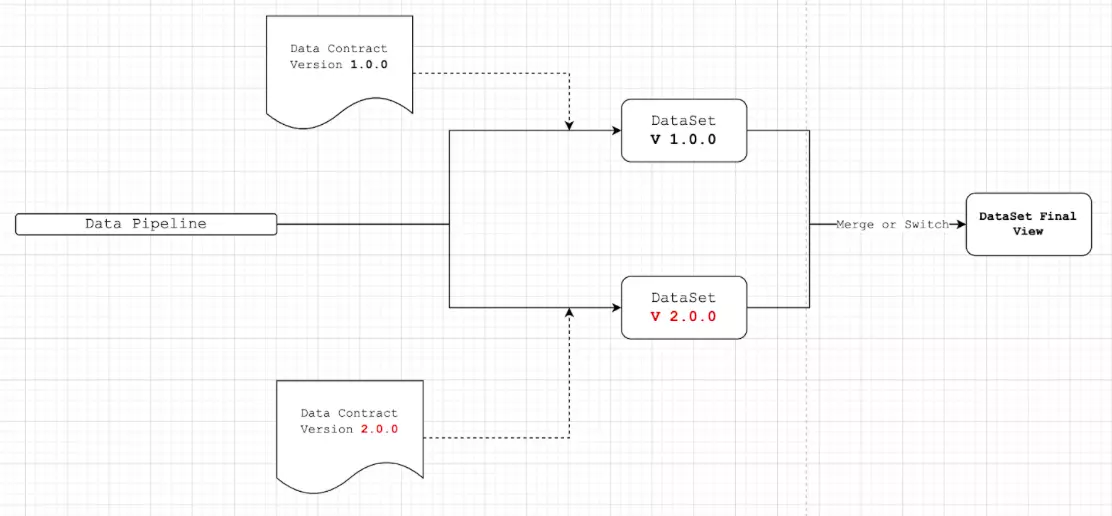

5. Ensure versioning of your contracts

Like APIs, data contracts must have clear version numbers and a predictable upgrade path. Semantic Versioning works well here.

Semantic versioning in action. Source: Atlan.

Make sure you:

- Tie contract versions to storage locations (tables, schemas, or directories).

- Use views to shield consumers from underlying physical changes.

- Support a transition period where both old and new versions coexist until all consumers migrate.
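Under Semantic Versioning, breaking changes raise the major version, backward-compatible additions raise the minor version, and fixes raise the patch version. The sketch below applies that convention to a contract version string; the `bump` helper and the change labels ("breaking", "additive", "fix") are hypothetical names chosen for this example.

```python
def bump(version: str, change: str) -> str:
    """Return the next MAJOR.MINOR.PATCH version for the given kind of change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "breaking":   # e.g. a removed field or changed type
        return f"{major + 1}.0.0"
    if change == "additive":   # e.g. a new optional field
        return f"{major}.{minor + 1}.0"
    if change == "fix":        # e.g. a corrected description
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change}")

print(bump("1.4.2", "breaking"))  # 2.0.0
print(bump("1.4.2", "additive"))  # 1.5.0
print(bump("1.4.2", "fix"))       # 1.4.3
```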

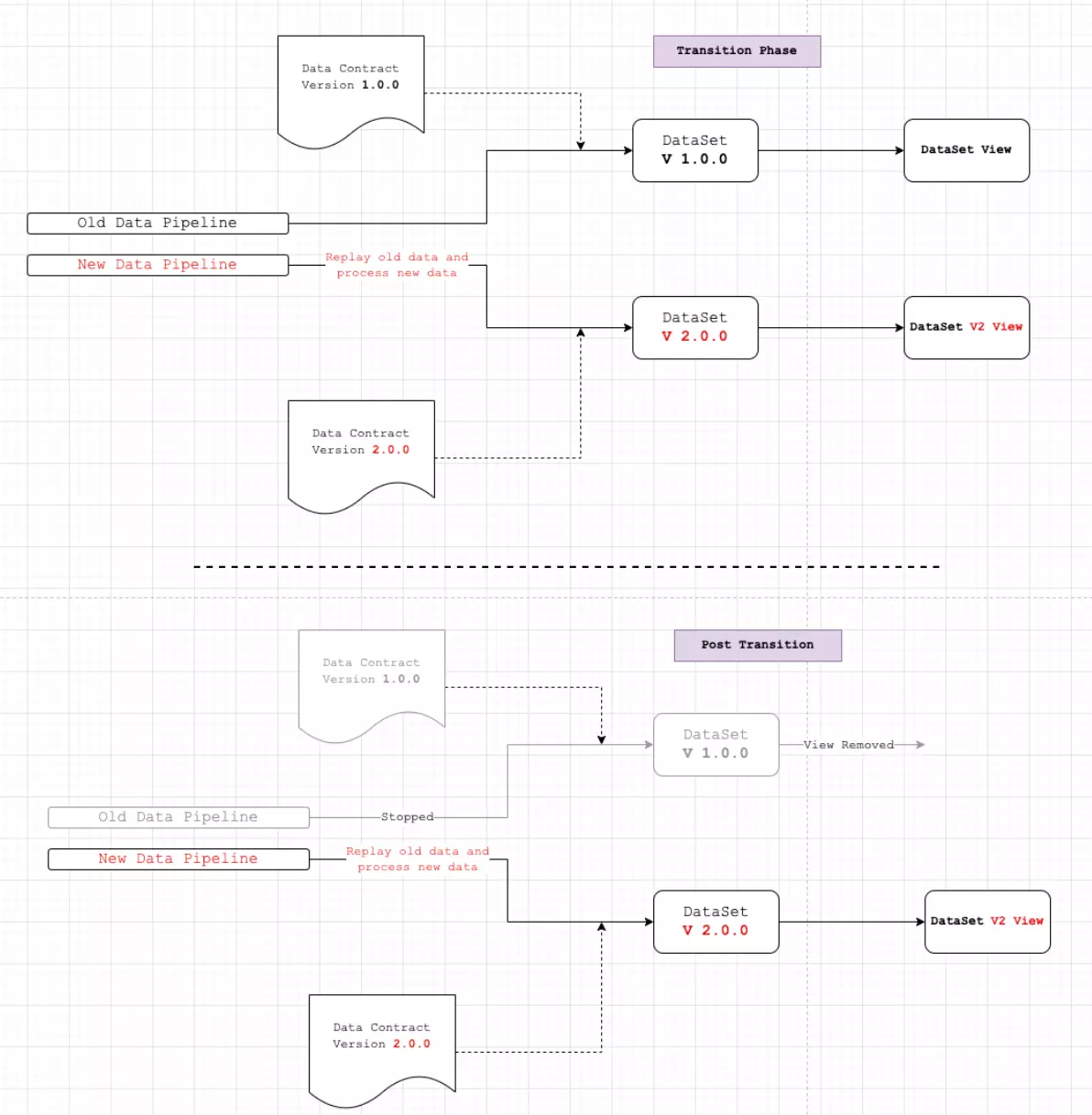

6. Republish all data

When a contract evolves dramatically, teams may need to reprocess historical data or replay segments of the pipeline to align past datasets with the new contract.

Republishing all data. Source: Atlan.

This method ensures consistency for regulated domains or critical analytics, but it is expensive, so use it sparingly.

7. Operationalize contract enforcement through metadata platforms

Manual contract enforcement breaks down quickly at scale. Modern platforms like Atlan strengthen contract workflows by:

- Tying contract metadata to lineage, ownership, trust signals, and downstream consumers

- Flagging breaking changes through automated impact analysis

- Propagating classifications and rules across connected assets

- Providing an auditable context layer that unifies producers, consumers, and contract terms

This shifts contracts from static documents to living, operational, continuously updated guardrails.

Real stories from real customers: Activating metadata and scaling data governance with Atlan

Modernized data stack and launched new products faster while safeguarding sensitive data

“Austin Capital Bank has embraced Atlan as their Active Metadata Management solution to modernize their data stack and enhance data governance. Ian Bass, Head of Data & Analytics, highlighted, ‘We needed a tool for data governance… an interface built on top of Snowflake to easily see who has access to what.’ With Atlan, they launched new products with unprecedented speed while ensuring sensitive data is protected through advanced masking policies.”

Ian Bass, Head of Data & Analytics

Austin Capital Bank

🎧 Listen to podcast: Austin Capital Bank From Data Chaos to Data Confidence


53 % less engineering workload and 20 % higher data-user satisfaction

“Kiwi.com has transformed its data governance by consolidating thousands of data assets into 58 discoverable data products using Atlan. ‘Atlan reduced our central engineering workload by 53 % and improved data user satisfaction by 20 %,’ Kiwi.com shared. Atlan’s intuitive interface streamlines access to essential information like ownership, contracts, and data quality issues, driving efficient governance across teams.”

Data Team

Kiwi.com

🎧 Listen to podcast: How Kiwi.com Unified Its Stack with Atlan

Ready to formalize data quality, reduce costs, and scale trust with data contracts?

In today’s economic environment, organizations must reduce waste, maximize ROI, and strengthen data quality without adding new tools or headcount. Data contracts create this leverage. By left-shifting ownership to producers, they ensure data quality at the source, prevent costly downstream failures, and foster tighter collaboration between engineering, analysts, and business teams.

As more organizations adopt metadata-driven governance, data contracts are poised to become a core part of enterprise data strategy—either as a standalone discipline or absorbed into broader governance frameworks.

With metadata control planes like Atlan operationalizing contract metadata, lineage, and validation across the stack, teams finally have a scalable path to trustworthy, predictable data in every workflow.

FAQs about data contracts

1. What is the purpose of a data contract?

A data contract defines the agreed-upon structure, semantics, quality expectations, and delivery guarantees of a data product.

Its purpose is to ensure reliability, prevent breaking changes, align producers and consumers, and make data trustworthy by design, not after the fact.

2. What is the difference between a data contract and an SLA?

A data contract governs the content and structure of the data itself: schema, semantics, constraints, quality rules, versioning, and compatibility.

An SLA governs service performance—uptime, latency, delivery windows, and support expectations.

In short: Contracts protect data integrity; SLAs protect service reliability.

3. What is the difference between a data contract and an API contract?

An API contract defines how systems interact: endpoints, request/response formats, authentication, and protocol behavior.

A data contract defines what the data must look like—its fields, meanings, constraints, lineage, and quality requirements.

While API contracts prevent breaking integrations, data contracts prevent breaking analytics, models, and downstream systems.

4. What should be included in the data contract?

A strong data contract typically includes:

- Schema definition

- Semantic definitions

- SLAs (service level agreements)

- Quality and compatibility rules

- Operational and compliance metadata

- Versioning and change management

- Ownership & stewardship

- Usage policies and access protocols

5. How do data contracts relate to schema evolution?

Data contracts play a crucial role in schema evolution by formalizing the process of making changes to a data schema. They ensure that any updates are backward-compatible or properly communicated to all stakeholders, minimizing disruptions in data pipelines.

6. Who is responsible for data contracts?

Primary responsibility lies with data producers (the teams creating and publishing the data) because they control schema, semantics, and data generation logic.

However, data consumers, data stewards, and platform teams all share responsibility:

- Producers define and maintain the contract.

- Consumers validate requirements and notify producers of needed changes.

- Governance/platform teams operationalize contracts, automate validation, and manage versioning (often using tools like Atlan).

In mature organizations, data contracts become a joint accountability framework aligning producers, consumers, and governance teams around reliable, high-quality data.

7. How can teams implement data contracts effectively?

Teams can implement data contracts by collaborating to define the necessary data schema, setting validation rules, and using automated tools to monitor compliance. It’s crucial to involve both producers and consumers of data in this process to ensure the contracts meet everyone’s needs.

8. What tools support automated data contract validation?

Automated data contract validation is supported by a growing ecosystem of tools that check schema consistency, enforce rules, and detect breaking changes before they hit production. Common categories include:

- Schema validation frameworks (e.g., Schemata, JSON Schema, Protobuf, Avro) for enforcing structural and semantic rules.

- Pipeline-integrated validators in tools like dbt tests, Great Expectations, and Soda for applying quality and constraint checks at runtime.

- Event-driven contract validators such as OpenAPI/AsyncAPI–based systems for streaming and message-oriented data flows.

- Metadata control planes like Atlan to operationalize data contracts

