Mage: A Comprehensive Guide to This Data Orchestration Tool

Mage is an open-source hybrid framework that integrates and transforms data. It helps you run, monitor, and orchestrate data pipelines with instant feedback.

This article will look at Mage’s capabilities and how it fits into your data stack. We’ll also go through the installation requirements and setup process to help you get started quickly.

Table of Contents #

- What is Mage?

- Mage’s architecture

- Mage’s core features

- Mage: Getting started

- Wrapping up

- Related Reads

What is Mage? #

Mage is an open-source data pipeline tool for building real-time and batch pipelines. Each element of your pipeline is a standalone file with modular code, so you can reuse and test it as needed.

Mage offers a custom notebook UI for transforming data using Python, SQL, R, and PySpark.

Yes! Orchestration is just the simplest way to describe @mage_ai. But data engineers use Mage to build data and run pipelines that integrate data and transform data. The IDE makes it really easy and fast to write code to build each step in your pipeline. Thanks for sharing 😍

— Tommy DANGerous (@TommyDANGerouss) May 22, 2023

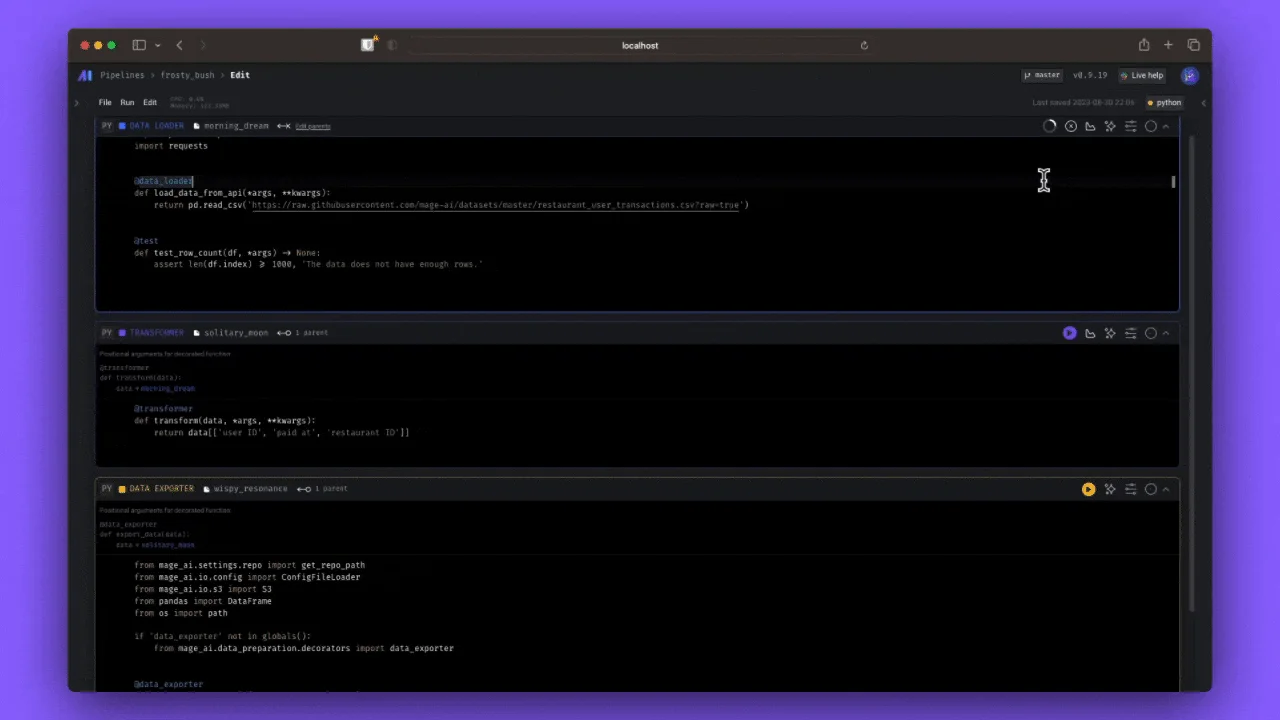

Mage's IDE simplifies coding each step. Source: Twitter.

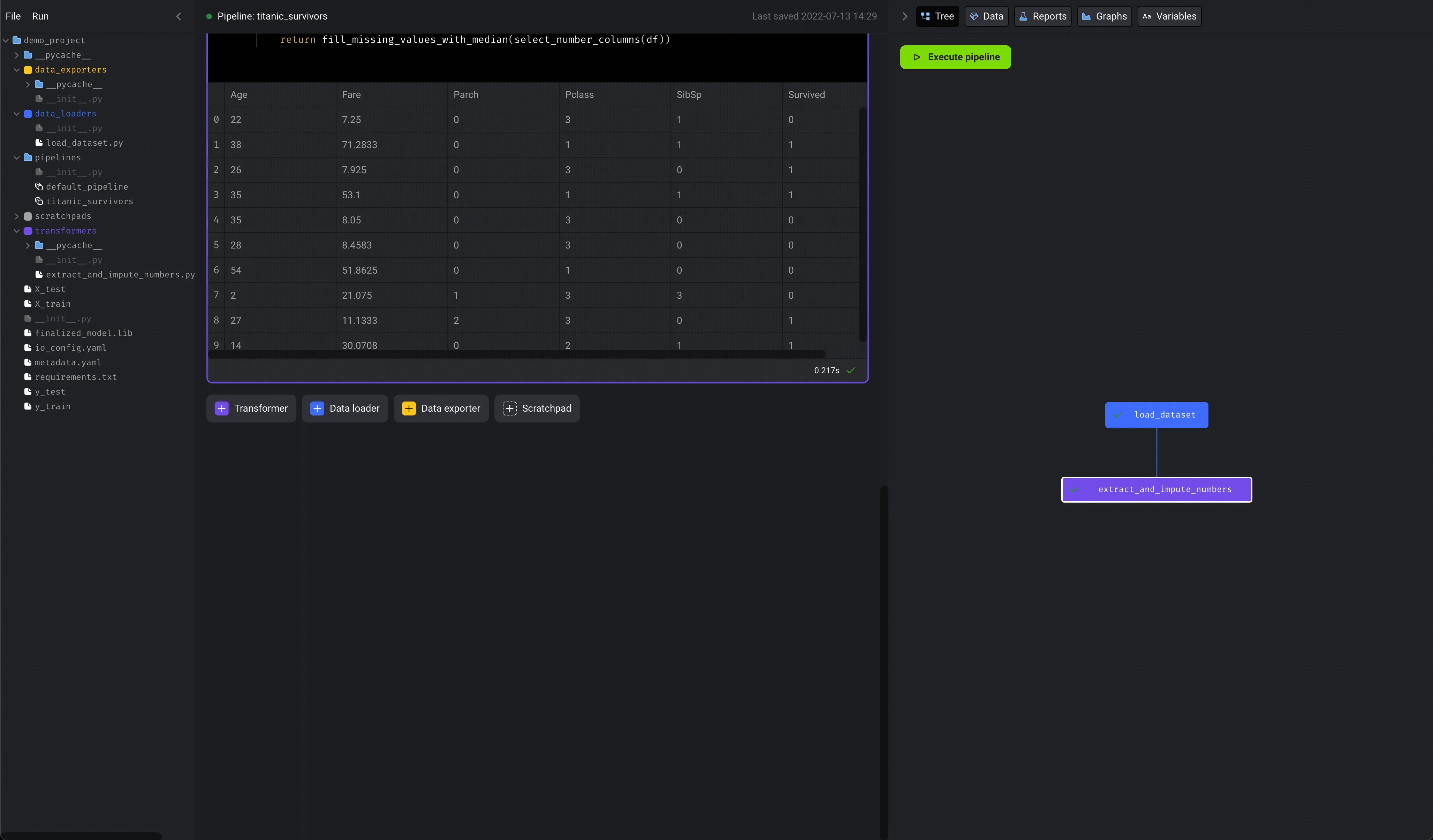

Mage’s UI also lets you preview the pipelines you build before you deploy them.

Previewing pipelines on Mage’s UI. Source: Mage.

Mage integrates with modern data stack tools, such as Amazon S3, BigQuery, Redshift, PowerBI, Tableau, Salesforce, Snowflake, and more.

Read more → Available data sources and destinations in Mage

Mage is used by data engineers and architects at companies such as Airbnb, Miniclip, Red Alpha, and ZebPay.

Mage is under active development, and more integrations are planned.

Mage: Key concepts #

Before proceeding, let’s look at the key concepts and terminology used by Mage:

- Project: A Mage project contains the code for your data pipelines, blocks, and other project-specific data.

- Block: A block is a file with code that can be executed independently or within a pipeline. Blocks can be data loaders, transformers, data exporters, scratchpads, sensors, or charts. Together, blocks form a Directed Acyclic Graph (DAG), which is called a pipeline.

- Data products: Each block produces data once it has been executed. This data is called a data product. Data products can be datasets, images, videos, text files, audio files, etc.

- Pipeline: A pipeline contains references to all the blocks of code and charts for visualizing data. It helps organize the dependency between each block of code.

- Trigger: A trigger is a set of instructions for executing a pipeline. Triggers can be schedules, events, or APIs.

- Run: A run record stores information about the run’s start time, status, completion time, and any runtime variables used in the execution of the pipeline or block, among others. Runs can be for blocks or pipelines.

- Backfill: A backfill creates one or more runs for a pipeline.
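To make the block and pipeline concepts above concrete, here is a minimal conceptual sketch in plain Python. It does not use Mage's own API: the block names, the `execution_order` and `run_pipeline` helpers, and the sample data are all hypothetical. It only illustrates how blocks that declare their upstream dependencies form a DAG, and how each block's output (its data product) is passed downstream.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each block name maps to the set of blocks it depends on.
pipeline = {
    "load_orders": set(),               # data loader
    "clean_orders": {"load_orders"},    # transformer
    "export_orders": {"clean_orders"},  # data exporter
}

# Hypothetical block bodies; in Mage each would live in its own file.
functions = {
    "load_orders": lambda: [3, None, 5],
    "clean_orders": lambda rows: [r for r in rows if r is not None],
    "export_orders": lambda rows: len(rows),  # pretend to write rows, return the count
}


def execution_order(blocks):
    """Return block names so that every block comes after its dependencies."""
    return list(TopologicalSorter(blocks).static_order())


def run_pipeline(blocks, block_functions):
    """Run blocks in dependency order, feeding upstream outputs downstream."""
    outputs = {}
    for name in execution_order(blocks):
        upstream = [outputs[dep] for dep in sorted(blocks[name])]
        outputs[name] = block_functions[name](*upstream)
    return outputs


outputs = run_pipeline(pipeline, functions)
print(execution_order(pipeline))
print(outputs["export_orders"])
```

Because the graph is acyclic, a topological sort always yields a valid run order. A cycle between blocks would make `TopologicalSorter` raise an error, which is why a pipeline has to be a DAG.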

What does it do? #

Using Mage, you can:

- Build pipelines using your language of choice (Python, SQL, R)

- Preview your code’s output using interactive notebook UI

- Deploy Mage to AWS, GCP, or Azure

- Observe your pipelines with built-in monitoring, alerting, and observability via the Mage UI

Mage’s architecture: An overview #

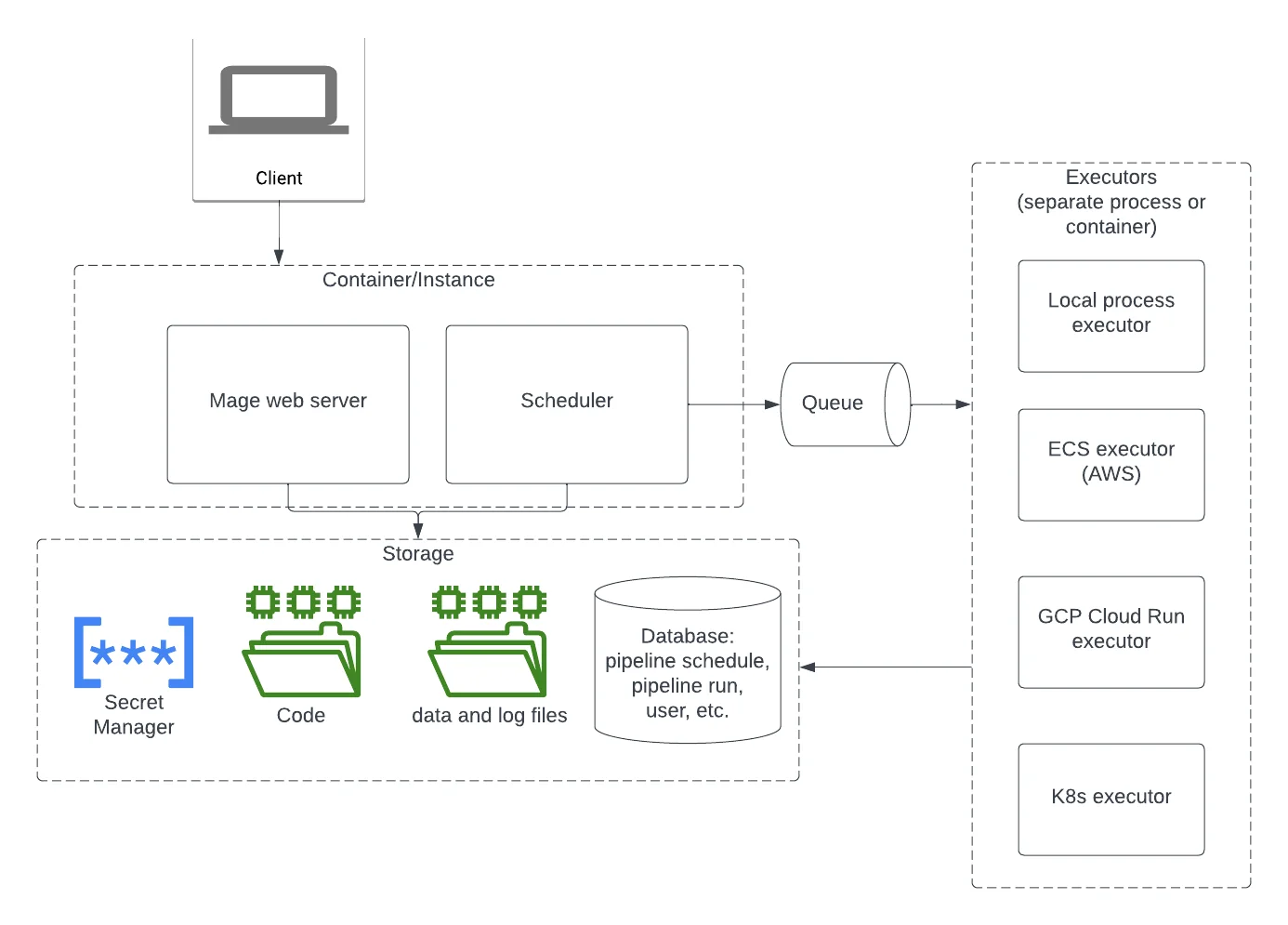

Here are the key components of Mage’s architecture:

- Mage server

- Storage

- Executors

- Schedulers

The core components of Mage’s architecture. Source: Mage.

Mage server #

According to Mage, its server is responsible for:

- Handling all the API requests

- Interacting with the storage to read and write data

- Running the websocket server to handle websocket requests

- Executing the pipeline code in the kernel

- Handling user authentication

Storage #

Mage stores code files, data and log files, objects related to orchestration and authentication, and secrets in the storage layer.

By default, Mage uses a local SQLite database. You can configure Mage to use PostgreSQL or Microsoft SQL Server instead.

To use a different database, you can set the MAGE_DATABASE_CONNECTION_URL environment variable.
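As a rough illustration of the environment variable above, the following sketch builds a PostgreSQL connection URL and exports it. The `postgres_url` helper, the credentials, and the database name are hypothetical, and the SQLAlchemy-style `postgresql+psycopg2://` scheme is an assumption about the format Mage expects, so check it against Mage's documentation before relying on it.

```python
import os
from urllib.parse import quote_plus


def postgres_url(user, password, host, port, database):
    """Build a SQLAlchemy-style PostgreSQL URL (assumed format, see lead-in)."""
    # Percent-encode the credentials so characters like "@" or "/" cannot break the URL.
    return (
        f"postgresql+psycopg2://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


# Hypothetical credentials, for illustration only.
os.environ["MAGE_DATABASE_CONNECTION_URL"] = postgres_url(
    "mage", "p@ss/word", "localhost", 5432, "mage_db"
)
print(os.environ["MAGE_DATABASE_CONNECTION_URL"])
```

Setting the variable this way only affects the current Python process and its children. In practice you would more likely set it in the shell or container environment that starts Mage.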

Your secrets (passwords, API keys, etc.) are encrypted before being stored in the Mage database. Secrets cannot be edited, only created, deleted, or shared.

Executors #

Executors are responsible for executing pipeline code. Mage supports different types of executors, such as the local process, ECS, GCP Cloud Run, and Kubernetes executors.

Schedulers #

In Mage, the scheduler keeps an organized list of pipelines, tracking their current status, dependencies, and scheduled run times.

The scheduler ensures that pipelines run on time and in sequence with their dependencies. Source: Mage.

By default, Mage runs the web server and scheduler in the same container. You can separate them by overriding the container command.
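The scheduling behavior described above can be sketched conceptually as follows. This is not Mage's scheduler: the `ScheduledPipeline` class, the helper functions, and the pipeline names are hypothetical. The sketch also ignores dependencies between pipelines and only shows the basic idea of tracking status and scheduled run times.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ScheduledPipeline:
    name: str
    next_run: datetime
    interval: timedelta
    status: str = "idle"  # "idle" or "running"


def due_pipelines(pipelines, now):
    """Return idle pipelines whose scheduled run time has arrived."""
    return [p for p in pipelines if p.status == "idle" and p.next_run <= now]


def mark_started(pipeline):
    """Record that a run began and move the schedule forward by one interval."""
    pipeline.status = "running"
    pipeline.next_run = pipeline.next_run + pipeline.interval


now = datetime(2023, 6, 1, 12, 0)
pipelines = [
    ScheduledPipeline("hourly_sync", datetime(2023, 6, 1, 11, 0), timedelta(hours=1)),
    ScheduledPipeline("nightly_report", datetime(2023, 6, 2, 0, 0), timedelta(days=1)),
]

due = due_pipelines(pipelines, now)
for p in due:
    mark_started(p)
print([p.name for p in due])
```

Checking the status before starting a run prevents the same pipeline from being launched twice while an earlier run is still in progress.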

Mage’s core features #

Mage is equipped with data orchestration features, such as:

- Data pipeline management: scheduling and managing data pipelines with built-in observability

- An interactive Python, SQL, and R notebook editor for coding data pipelines

- Data integration: synchronizing data from third-party sources to your internal destinations

- Ingesting and transforming streaming data

- Building, running, and managing your dbt models

- Auto-versioning and partitioning each block that produces a data product

- Backfilling

Mage: Getting started #

Follow this step-by-step guide to get started with Mage:

Installation #

- Mage can be installed using Docker or pip.

- If you’re using Docker, you can create a new project and launch the tool with the following command:

docker run -it -p 6789:6789 -v $(pwd):/home/src mageai/mageai mage start [project_name]

- If you’re using pip, you can install Mage with the following command:

pip install mage-ai

Building a pipeline #

After installation, open http://localhost:6789 in your browser to start building your pipeline.

Running a pipeline #

- Once you’ve built your pipeline in the tool, you can run it with the following command:

docker run -it -p 6789:6789 -v $(pwd):/home/src mageai/mageai mage run [project_name] [pipeline]

- If you’re using pip, replace the docker run ... mageai/mageai mage prefix with mage, for example: mage run [project_name] [pipeline]

Creating a new project #

- If you want to create a different project with a different name, use the following command:

docker run -it -p 6789:6789 -v $(pwd):/home/src mageai/mageai mage init [project_name]

- If you’re using pip, replace the docker run ... mageai/mageai mage prefix with mage, for example: mage init [project_name]
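The three Docker commands in this guide differ only in the Mage subcommand (start, run, or init) and its arguments. The sketch below assembles them from one hypothetical helper, `mage_docker_command`, which is not part of Mage. It uses the same port, volume mount, and image as the commands above, with a hypothetical working directory, project name, and pipeline name.

```python
import os
import shlex


def mage_docker_command(subcommand, project_name, pipeline=None, workdir=None):
    """Build the docker argument list for a Mage subcommand (start, run, or init)."""
    workdir = workdir or os.getcwd()  # stands in for $(pwd) in the shell commands
    cmd = [
        "docker", "run", "-it",
        "-p", "6789:6789",
        "-v", f"{workdir}:/home/src",
        "mageai/mageai",
        "mage", subcommand, project_name,
    ]
    if pipeline is not None:
        cmd.append(pipeline)  # only the run subcommand takes a pipeline name
    return cmd


cmd = mage_docker_command("run", "demo_project", "example_pipeline", workdir="/tmp/demo")
print(shlex.join(cmd))
```

To actually run the command, you could pass the list to `subprocess.run(cmd)`. With a pip installation, only the last elements starting at `mage` would be needed.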

Wrapping up #

Mage is an open-source data orchestrator with a custom notebook UI for building data pipelines. It offers multi-language support and integrates with essential tools in your modern data stack.

You can build and deploy data pipelines using modular code and preview the output of your pipelines right away.

Alternatives to Mage include Apache Airflow, Dagster, and Prefect. Choosing the right tool depends on your specific needs, resources, technical expertise, and data stack setup.

Mage: Related Reads #

- What is data orchestration: Definition, uses, examples, and tools

- Open source ETL tools: 7 popular tools to consider in 2023

- 5 open-source data orchestration tools to consider in 2023

- ETL vs. ELT: Exploring definitions, origins, strengths, and weaknesses

- 10 popular transformation tools in 2023

- Dagster 101: Everything you need to know

- Airflow for data orchestration

- Luigi: Spotify’s open-source data orchestration tool for batch processing
