Airflow: DAGs, Workflows as Python Code, Orchestration for Batch Workflows, and More


Airflow is an open-source data orchestrator that popularized the directed acyclic graph (DAG) as a way to define data pipelines. It was created at Airbnb in 2014 for authoring, scheduling, and monitoring workflows as DAGs.

In this article, we’ll explore Airflow’s architecture, core capabilities, and setup—equipping you with insights into this data orchestration tool.


Table of contents #

- What is Airflow?

- Airflow: Origins

- Architecture

- Capabilities

- Getting started with Airflow

- Summing up

- Airflow: Related Reads

What is Airflow? #

Apache Airflow is an open-source, Python-based platform to programmatically develop, schedule, and monitor batch workflows.

“To oversimplify, you can think of Airflow as cron, but on steroids.” Vinayak Mehta, Former Data Engineer at Atlan

A workflow is represented as a DAG (a Directed Acyclic Graph) and the basic unit of work is called a task.

DAGs and tasks in Airflow #

According to Airflow, each DAG is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies. DAG files should be placed in Airflow's DAG folder (the dags_folder setting in the Airflow configuration).

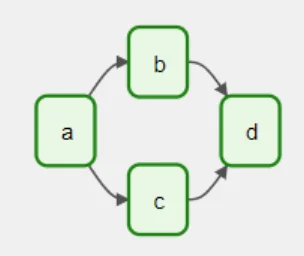

A basic DAG in Airflow with four tasks - Source: Airflow.

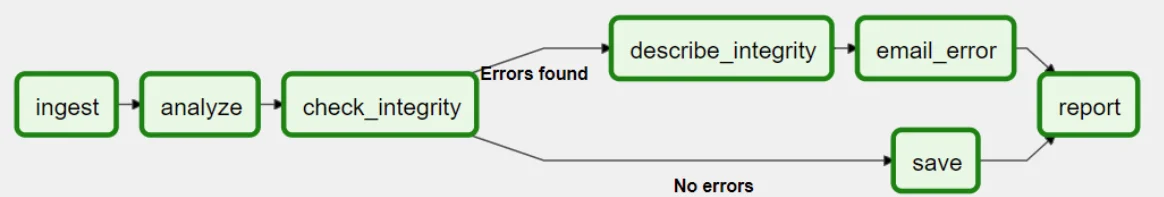

An example of a DAG - Source: Airflow.

These tasks are organized with their dependencies and data flows in mind.

“A DAG specifies the dependencies between Tasks, and the order in which to execute them and run retries; the Tasks themselves describe what to do, be it fetching data, running analysis, triggering other systems, or more.”

The three common types of tasks in Airflow are:

- Operators: Predefined tasks that you can string together quickly to build most parts of your DAGs; Operators define what gets done by a task

- Sensors: A special subclass of Operators that are entirely about waiting for an external event to happen

- TaskFlow: A custom Python function to author clean DAGs without extra boilerplate, using the @task decorator

Each task is an implementation of an Operator. For instance, a PythonOperator will execute some Python code, a BashOperator will run a Bash command, and an EmailOperator will send an email.

All workflows are defined in Python code. As a result, workflows can be version-controlled, developed by multiple people simultaneously, and tested to validate functionality.

Because workflows are plain Python, Airflow components are also extensible, so you can connect Airflow to the other technologies in your modern data stack.

While a DAG is responsible for orchestrating task sequences, dependencies, and run frequencies, it doesn’t dictate the specifics of what actually happens within each task.
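To make the dependency idea concrete, here is a minimal plain-Python sketch. It is not Airflow code: it uses the standard library's graphlib module to work out a valid run order for four hypothetical tasks (extract, clean, aggregate, and report are names invented for illustration). Airflow resolves dependencies with its own machinery, so treat this only as a model of what "directed" and "acyclic" mean in practice.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a task, and each value is the set of
# upstream tasks that must finish before that task may start.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "report": {"clean", "aggregate"},
}


def execution_order(deps):
    """Return one valid run order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)  # ['extract', 'clean', 'aggregate', 'report']
```

Because the graph has no cycles, a valid order always exists. If extract were also made to depend on report, the same call would raise graphlib.CycleError, which is why a workflow has to be acyclic before it can be scheduled.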

Airflow: Origins #

Maxime Beauchemin created Airflow at Airbnb in October 2014 to manage the company’s increasingly complex workflows. In 2015, Airflow was open-sourced and it has since been adopted by a wide range of organizations, including Adobe, Bosch, ContaAzul, Etsy, Grab, HBO, Instacart, Lyft, Nielsen, Shopify, and more.

According to Maxime, Airbnb used Airflow for:

- Data warehousing: Cleanse, organize, data quality check, and publish data into our growing data warehouse

- Growth analytics: Compute metrics around guest and host engagement as well as growth accounting

- Experimentation: Compute our A/B testing experimentation frameworks logic and aggregates

- Email targeting: Apply rules to target and engage our users through email campaigns

- Sessionization: Compute clickstream and time spent datasets

- Search: Compute search ranking-related metrics

- Data infrastructure maintenance: Database scrapes, folder cleanup, applying data retention policies, etc.

Airflow’s architecture: An overview #

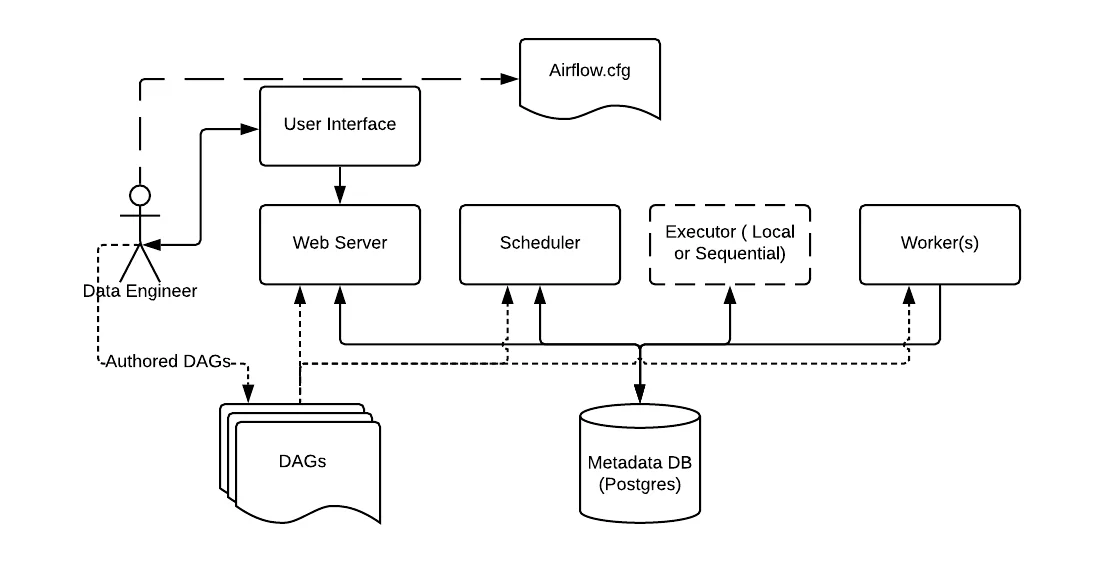

Airflow has several components that work together to orchestrate data workflows, such as:

- Metadata database

- Web server

- Scheduler

- Executor

- Worker

- DAGs

Basic Airflow architecture - Source: Airflow.

Let’s delve into the specifics of each component.

Metadata database #

In Airflow, the metadata database refers to a SQL database that stores metadata on DAGs, task runs, login information, permissions, etc.

Popular database choices include Postgres and MySQL. The Airflow community recommends Postgres for most use cases.

Web server #

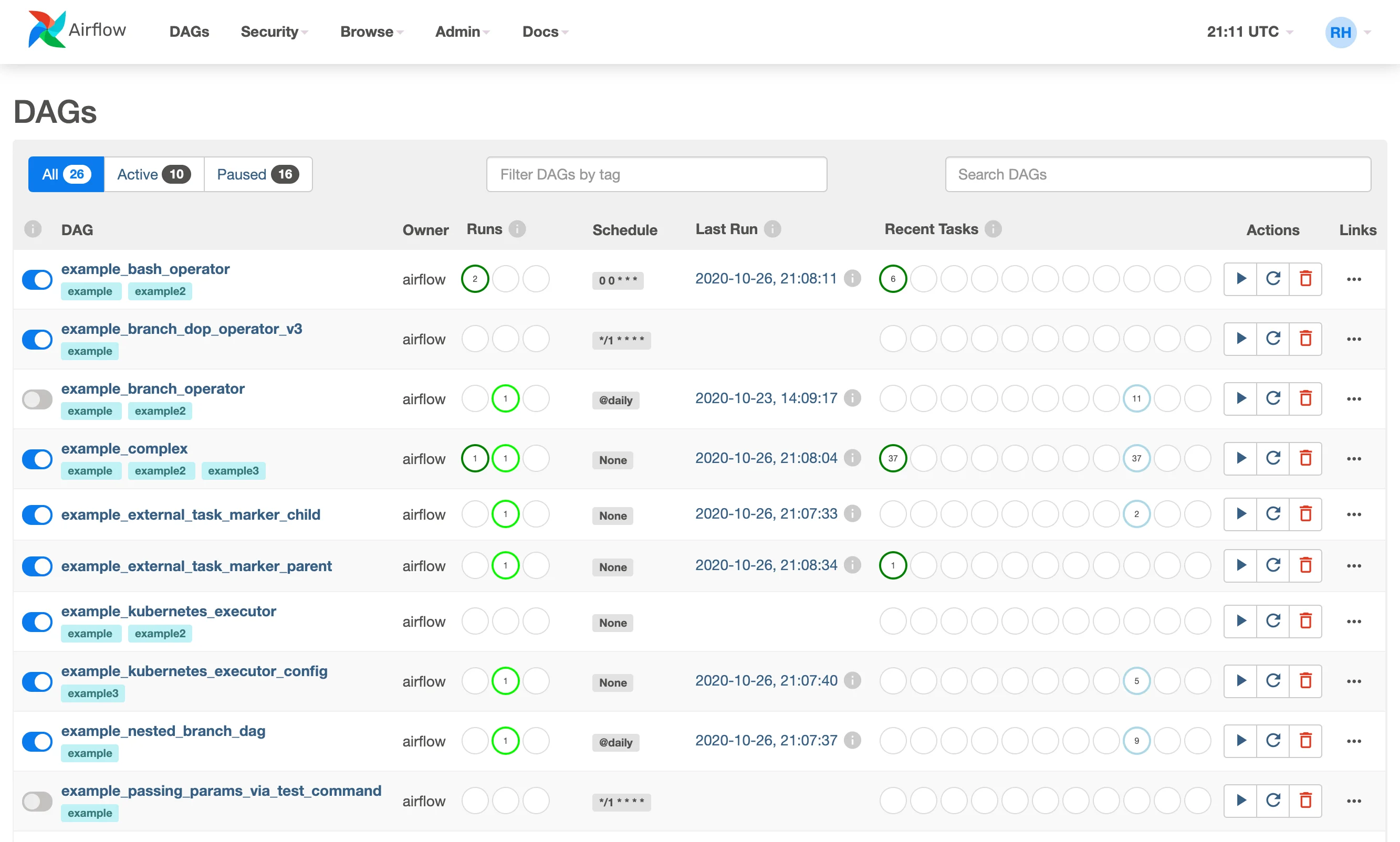

Airflow’s web server is the main interface for you to inspect, trigger, and debug DAGs and tasks.

You can view logs to monitor and troubleshoot your data pipelines, run, pause, or delete DAGs, share DAG links, and more.

Airflow’s user interface offers a comprehensive view of DAGs and their tasks - Source: Airflow.

According to Maxime, Airflow’s UI helps you:

- Visualize your pipeline dependencies

- See how your pipelines progress

- Get easy access to logs

- View the related code

- Trigger tasks

- Fix false positives/negatives

- Analyze where time is spent

- Understand at what time of the day different tasks usually finish

Scheduler #

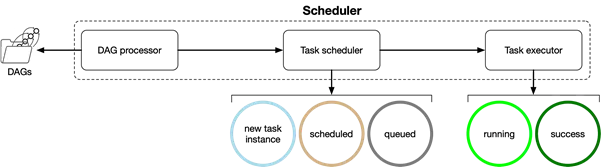

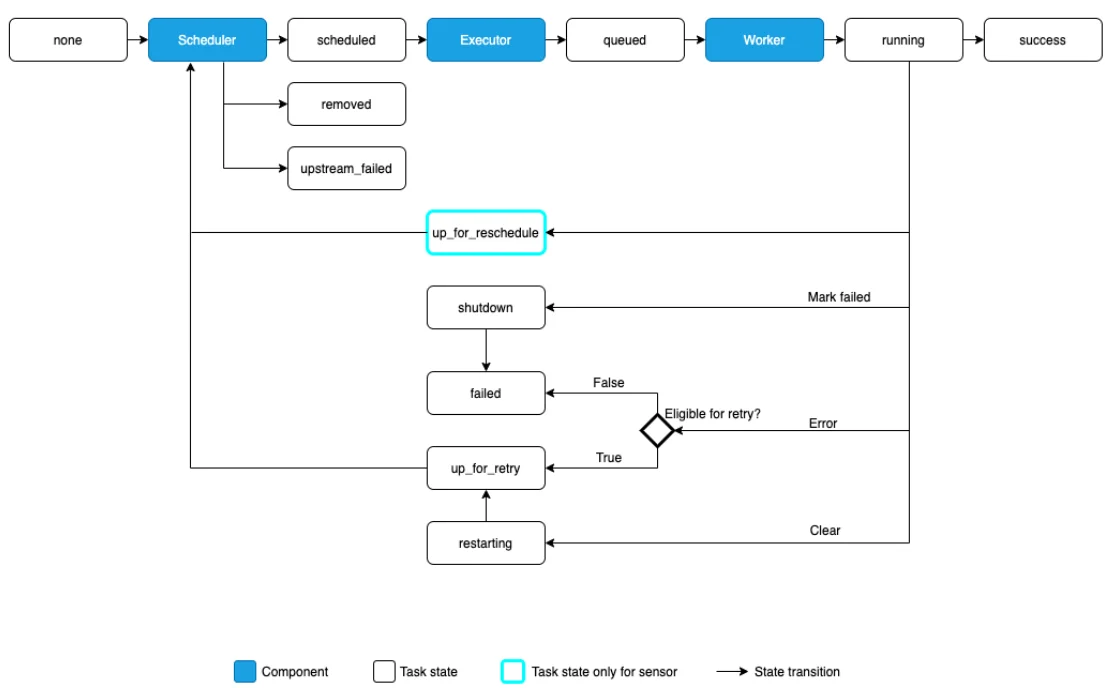

The scheduler is a daemon that monitors all DAGs and tasks. It uses the Executor to initiate tasks that are ready to run. The scheduler also determines when and where tasks should run.

“Once per minute, by default, the scheduler collects DAG parsing results and checks whether any active tasks can be triggered.”

Airflow’s scheduler - Source: Livebook.Manning

Executor #

The executor runs tasks. It isn’t a separate process. Instead, it runs within the Scheduler.

You can only have one configured executor at a time. Executors can be built-in or custom.

Airflow executors can either run the task locally (inside the scheduler process) or remotely (via a pool of workers):

- Local executors (ideal for small, single-machine installations):

  - Testing DAGs with dag.test()

  - Debugging Airflow DAGs on the command line

  - Debug Executor (deprecated)

  - Local Executor

  - Sequential Executor (the default Airflow executor)

- Remote executors (ideal for multi-cloud/multi-machine installations):

  - Celery Executor

  - CeleryKubernetes Executor

  - Dask Executor

  - Kubernetes Executor

  - LocalKubernetes Executor

Worker #

Workers are the processes that execute tasks, as assigned by the executor. Workers also interact with the other components of the Airflow architecture and with the metadata database.

How the various components of Airflow work together to run tasks - Source: Airflow.
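The hand-off between scheduler, executor, and workers can be sketched as a toy model in plain Python. This is an illustration under simplifying assumptions, not how Airflow is implemented: a thread pool stands in for workers, graphlib tracks which tasks are unblocked, and nothing is persisted to a metadata database. The function name run_pipeline and the task names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_pipeline(dependencies, actions, max_workers=2):
    """Run every task after its upstream tasks; return names as they finish."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    completed = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:  # stand-in for workers
        while sorter.is_active():
            # "Scheduler" step: find tasks whose upstream tasks are all done.
            ready = sorter.get_ready()
            # "Executor" step: hand each ready task to the worker pool.
            futures = {name: pool.submit(actions[name]) for name in ready}
            for name, future in futures.items():
                future.result()    # wait for the worker; re-raises task errors
                sorter.done(name)  # unblocks downstream tasks
                completed.append(name)
    return completed


# Hypothetical pipeline: two loads fan out from one extract, then join.
dependencies = {
    "extract": set(),
    "load_users": {"extract"},
    "load_orders": {"extract"},
    "report": {"load_users", "load_orders"},
}
actions = {name: (lambda name=name: f"ran {name}") for name in dependencies}

print(run_pipeline(dependencies, actions))
```

The two load tasks become ready at the same time, so they are submitted to the pool together, while report waits until both are marked done. A real executor adds what this sketch leaves out, such as queues, retries, and task state stored in the metadata database.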

DAGs #

As mentioned earlier, DAGs are data pipelines in Airflow written using Python. DAG files are read by the scheduler and executor from the DAG directory.

Upon startup, Airflow scans the DAG directory to load these definitions into memory for the scheduler and executor to access. The scheduler then uses them to line up tasks for execution according to their set schedules.

Airflow’s capabilities: An overview #

The core principles of Airflow are dynamic pipeline generation, extensibility, lean and explicit pipelines, and scalability. Let’s delve further into its core capabilities:

- Workflows as code: As mentioned earlier, all workflows are defined in Python code, making Airflow pipelines dynamic, extensible, and flexible. You can use standard Python features to create your workflows, including datetime formats for scheduling and loops to dynamically generate tasks.

- Integrations with other data tools: You can use Airflow's ready-made operators to execute your tasks on Google Cloud Platform, Amazon Web Services, Microsoft Azure, and other third-party services.

- Rich set of operators and hooks: Airflow orchestrates data workflows through its operators, hooks, and connections:

  - Hooks are a high-level interface to an external platform. Hooks are the building blocks of operators.

  - Operators leverage hooks to define tasks.

  - A connection is a set of parameters (username, password, and hostname) along with the type of system that it connects to. Each connection has a unique conn_id.

  You can create custom operators, hooks, and connection types to manage your use cases.

  See Managing Connections in the Airflow documentation for more details on how to create, edit, and manage connections. The documentation also includes the full list of Airflow hooks.

- Scalable and reliable: Since Airflow has a modular architecture, you can scale your Airflow pipelines by adding more workers. Airflow also offers built-in fault tolerance and task retries.

- Templating with Jinja: Airflow uses the Jinja templating engine to provide built-in parameters and macros. Airflow also provides hooks to define your own parameters, macros, and templates.

- Web-based UI: Airflow’s UI lets you monitor, schedule, and manage your workflows and check logs of completed and ongoing tasks.
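The relationship between connections, hooks, and operators described in the list above can be modeled in a few lines of plain Python. This is a sketch of the layering only, not Airflow's classes: Connection, CONNECTIONS, WarehouseHook, RunQueryOperator, and the host db.example.com are all hypothetical names, and the hook returns a string instead of talking to a real database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """Parameters for reaching one external system, looked up by conn_id."""
    conn_id: str
    conn_type: str
    host: str
    login: str
    password: str


# Hypothetical in-memory registry, standing in for wherever connections are stored.
CONNECTIONS = {
    "warehouse_db": Connection(
        conn_id="warehouse_db",
        conn_type="postgres",
        host="db.example.com",
        login="etl_user",
        password="not-a-real-password",
    )
}


class WarehouseHook:
    """Hook: the interface to the external platform, built on a connection."""

    def __init__(self, conn_id):
        self.conn = CONNECTIONS[conn_id]

    def run(self, sql):
        # A real hook would open a database session; this one only describes the call.
        return f"[{self.conn.conn_type}://{self.conn.host}] {sql}"


class RunQueryOperator:
    """Operator: defines what a task does by delegating to a hook."""

    def __init__(self, task_id, conn_id, sql):
        self.task_id = task_id
        self.conn_id = conn_id
        self.sql = sql

    def execute(self):
        return WarehouseHook(self.conn_id).run(self.sql)


task = RunQueryOperator(task_id="count_rows", conn_id="warehouse_db", sql="SELECT 1")
print(task.execute())  # [postgres://db.example.com] SELECT 1
```

The operator only knows a conn_id, so credentials stay out of the pipeline code, and the same hook can back several operators.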

Getting started with Airflow #

To get started with data orchestration using Airflow, here are some steps you need to follow:

- Install pip first, if you have not already done so, and upgrade it to the latest version.

- Set the location of the Airflow home directory by exporting the AIRFLOW_HOME environment variable, if you want to use a different location than the default ~/airflow.

- Install Airflow using pip together with a constraints file. The constraints file is selected by the URL you pass, which depends on the Airflow version and the Python version you are installing for.

- Run the airflow standalone command, which initializes the database, creates a user, and starts all components.

- Access the Airflow UI by visiting localhost:8080 in your browser and log in with the admin account details shown in the terminal. Enable the example_bash_operator DAG on the home page.
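The constraints URL in the install step follows a fixed pattern in the Airflow project's GitHub repository, built from the Airflow version and the Python major.minor version. The helper below is a small sketch that assembles that URL and the matching pip command. The function names are hypothetical, and the version 2.7.3 is only an example, so substitute the release you actually want.

```python
import sys

# URL pattern for the constraints files published in the Airflow repository.
CONSTRAINTS_TEMPLATE = (
    "https://raw.githubusercontent.com/apache/airflow/"
    "constraints-{airflow}/constraints-{python}.txt"
)


def constraints_url(airflow_version, python_version=None):
    """Build the constraints-file URL; defaults to the running interpreter's version."""
    if python_version is None:
        python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    return CONSTRAINTS_TEMPLATE.format(airflow=airflow_version, python=python_version)


def pip_install_command(airflow_version, python_version=None):
    """Return the pip command string for a constrained Airflow install."""
    url = constraints_url(airflow_version, python_version)
    return f'pip install "apache-airflow=={airflow_version}" --constraint "{url}"'


# Example only: 2.7.3 and 3.8 are illustrative version numbers.
print(pip_install_command("2.7.3", "3.8"))
```

Pinning against the constraints file keeps pip from pulling in dependency versions that the chosen Airflow release was not tested with.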

Summing up #

Airflow is a battle-tested open-source data orchestration tool that uses DAGs to author, schedule, and manage complex workflows. Its modular architecture and workflow-as-code paradigm let you work with a wide range of tools and technologies.

Airflow is ideal for workflows that are mostly static and slowly changing. It is not a streaming solution, but it can process real-time data by pulling data off streams in batches.

Open-source alternatives to Airflow include Dagster, Luigi, Prefect, and Argo. Choosing the right data orchestration tool depends largely on your current data ecosystem and use cases.

Airflow: Related Reads #

- What is data orchestration: Definition, uses, examples, and tools

- Open source ETL tools: 7 popular tools to consider in 2023

- Open-source data observability tools: 7 popular picks in 2023

- ETL vs. ELT: Exploring definitions, origins, strengths, and weaknesses

- 10 popular transformation tools in 2023

- Dagster 101: Everything you need to know

- Atlan + Airflow: Better pipeline monitoring and data lineage

- Creating workflows in Apache Airflow to track disease outbreaks in India

- Airflow, metadata engineering, and a data platform for the world’s largest democracy
