Prefect: Here’s Everything You Need to Know About This Open-Source Data Orchestrator


Prefect automates and optimizes data workflows, addressing common orchestration challenges like failure handling, dependency management, and data quality assurance.

In this article, we’ll look at Prefect’s role in data orchestration, including its architecture, features, setup, and place in the modern data stack.


Table of Contents

- What is Prefect OSS?

- Prefect’s architecture

- Prefect’s capabilities

- Prefect setup: Getting started

- Summing up

- Related Reads

What is Prefect OSS?

Prefect OSS is an open-source data orchestration tool for building, observing, and reacting to data pipelines written in Python.

Prefect helps with scheduling, caching, retries, logging, event-based orchestration, observability, and more.

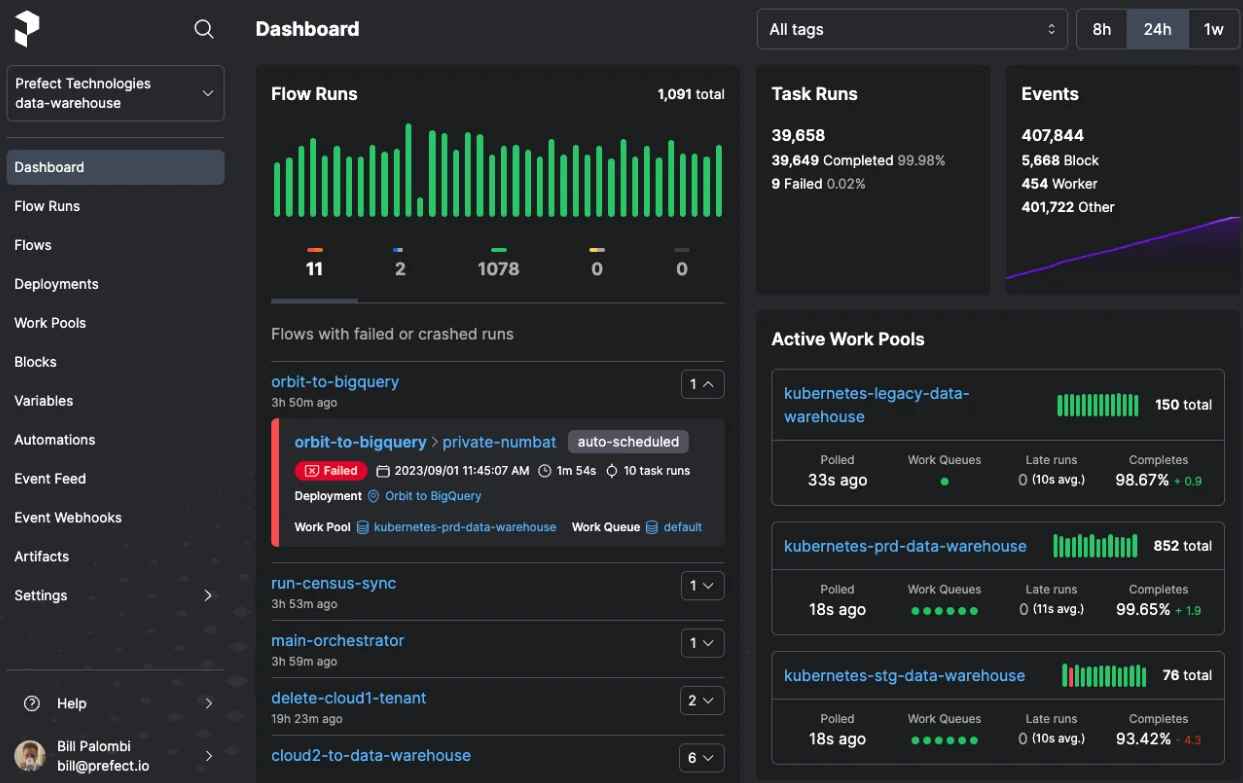

The Prefect dashboard - Source: Prefect.

In 2022, Prefect 2.0 brought new capabilities such as customizable queue settings for specific flow runs and global limits on concurrency and rate.

Here, “concurrency” is a system’s ability to run multiple tasks simultaneously, and “rate” is the number of tasks it can complete per unit of time.
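To make the distinction concrete, here is a minimal, in-process sketch of a concurrency limit in plain Python. It is an illustration under stated assumptions, not Prefect's implementation: Prefect enforces its global limits through its API so they hold across processes and machines, whereas this toy assumes a single process and uses a `threading.Semaphore`. The `ConcurrencyLimit` class and the `query` function are hypothetical names.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class ConcurrencyLimit:
    """Hypothetical toy (not Prefect's API): at most `limit` callers hold a slot at once."""

    def __init__(self, limit):
        self._slots = threading.Semaphore(limit)
        self._lock = threading.Lock()
        self.active = 0  # slots currently held
        self.peak = 0    # highest number of slots held at the same time

    def __enter__(self):
        self._slots.acquire()  # blocks while all slots are taken
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        return self

    def __exit__(self, *exc_info):
        with self._lock:
            self.active -= 1
        self._slots.release()
        return False  # do not swallow exceptions


db_slots = ConcurrencyLimit(limit=2)


def query(i):
    with db_slots:
        time.sleep(0.05)  # stand-in for real work
        return i


# Eight threads compete for slots, but only two queries ever run at once.
with ThreadPoolExecutor(max_workers=8) as pool:
    results = list(pool.map(query, range(6)))

print(results)        # [0, 1, 2, 3, 4, 5]
print(db_slots.peak)  # never more than 2
```

With two slots and about 50 ms per call, throughput tops out near 2 / 0.05 = 40 calls per second, which shows how a concurrency cap indirectly bounds the rate as well.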


Prefect Cloud is the managed version with enterprise-grade features for access management (RBAC, SCIM, etc.), logging, alerting, and support.

Prefect: Origins

In 2018, Jeremiah Lowin (who had worked on Apache Airflow) founded Prefect Technologies, Inc. The goal was to build a more flexible, customizable, and modular tool suited to the needs of the modern data stack.

Lowin created Prefect to respond to “a need for more automation and data engineering capabilities than Airflow provides.”

In 2019, the Prefect engine was open-sourced under the Apache 2.0 license.

In 2020, the company open-sourced an orchestration layer for Prefect (including the Prefect Cloud UI).

“Our major motivation is to ensure that individuals and businesses can start taking advantage of our software with the least friction possible. As an open-source community, this furthers our objective of delivering a best-in-class workflow management experience without compromise.” Jeremiah Lowin, Founder and CEO

Alongside the open-source project, the company offers Prefect Cloud, a managed service for dataflow automation.

Many companies, such as Slate, Kaggle, Microsoft, PositiveSum, and ClearCover, use Prefect to handle their data pipelining and orchestration workloads.

Concepts in Prefect: An overview

Prefect uses specific terminology, such as:

- Flows: Python functions that run a basic workflow

- Tasks: The basic units of work that are organized into flows; all tasks must be called from within a flow, and tasks may not call other tasks directly

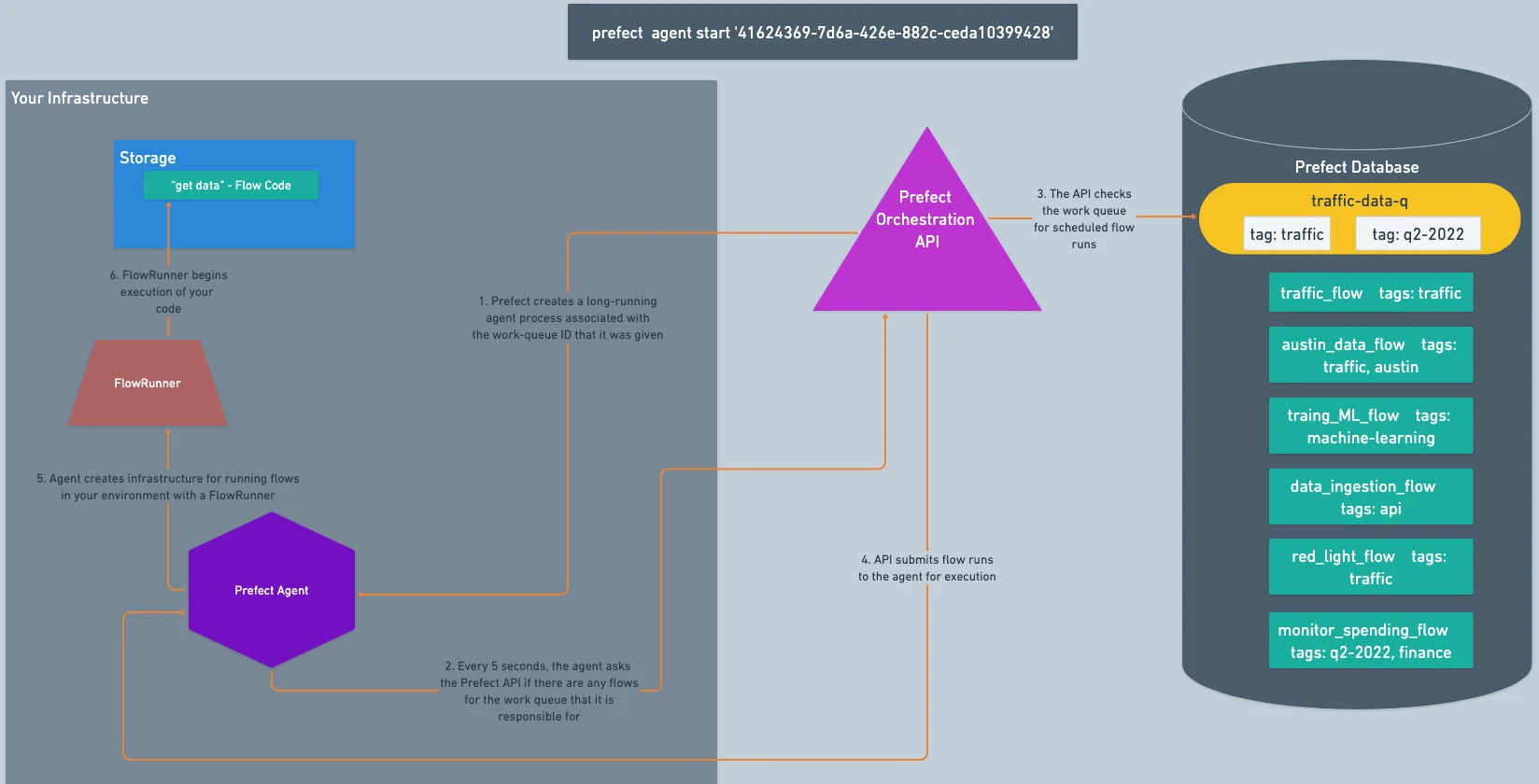

- Work pools and workers: Work pools organize work for execution, whereas a worker retrieves scheduled runs from a work pool and executes them

- Schedules: Services that tell the Prefect API how to create new flow runs automatically

- Events: A notification of a change

- Results: Represent the data returned by a flow or a task

- Artifacts: Persisted outputs — tables, links, markdown — stored on Prefect Cloud or a Prefect server instance and rendered in the Prefect UI

- States: Rich objects with information about the status of a particular task run or flow run

- Blocks: Store configuration information, such as credentials for authenticating with AWS, GitHub, Slack, etc.

- Deployments: Server-side representations of flows that store metadata on when, where, and how a workflow should run; deployments are essential for remote orchestration

- Automations: Actions that Prefect executes automatically based on trigger conditions

Flows and tasks are the two Prefect concepts to learn first.

“You can think of a flow as a recipe for connecting a known sequence of tasks together. Tasks, and the dependencies between them, are displayed in the flow run graph, enabling you to break down a complex flow into something you can observe, understand and control at a more granular level.”
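In Prefect itself you decorate plain functions with `@flow` and `@task` (imported from the `prefect` package), and Prefect records the flow run graph for you. The sketch below is a hypothetical, dependency-free toy rather than Prefect's API: its `task` decorator and `TaskResult` wrapper assume that passing one task's output into another is what creates a dependency edge, which is the idea behind the graph described in the quote above.

```python
import functools
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class RunGraph:
    """Toy record of task runs and the data dependencies between them."""
    runs: list = field(default_factory=list)
    edges: list = field(default_factory=list)


GRAPH = RunGraph()


@dataclass
class TaskResult:
    """Wraps a task's return value so downstream tasks know where it came from."""
    producer: str
    value: Any


def task(fn: Callable) -> Callable:
    """Hypothetical toy decorator (not prefect.task): records runs and upstream edges."""
    @functools.wraps(fn)
    def wrapper(*args):
        name = f"{fn.__name__}-{len(GRAPH.runs)}"
        plain_args = []
        for arg in args:
            if isinstance(arg, TaskResult):
                GRAPH.edges.append((arg.producer, name))  # upstream run -> this run
                plain_args.append(arg.value)
            else:
                plain_args.append(arg)
        GRAPH.runs.append(name)
        return TaskResult(name, fn(*plain_args))
    return wrapper


@task
def extract(n):
    return list(range(n))


@task
def transform(rows):
    return [row * 10 for row in rows]


@task
def load(rows):
    return sum(rows)


def etl_flow(n):
    """The 'flow' is plain Python that calls tasks in order."""
    raw = extract(n)
    clean = transform(raw)
    return load(clean).value


total = etl_flow(4)
print(total)        # 60
print(GRAPH.edges)  # [('extract-0', 'transform-1'), ('transform-1', 'load-2')]
```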

Prefect for data orchestration - Source: GitHub.

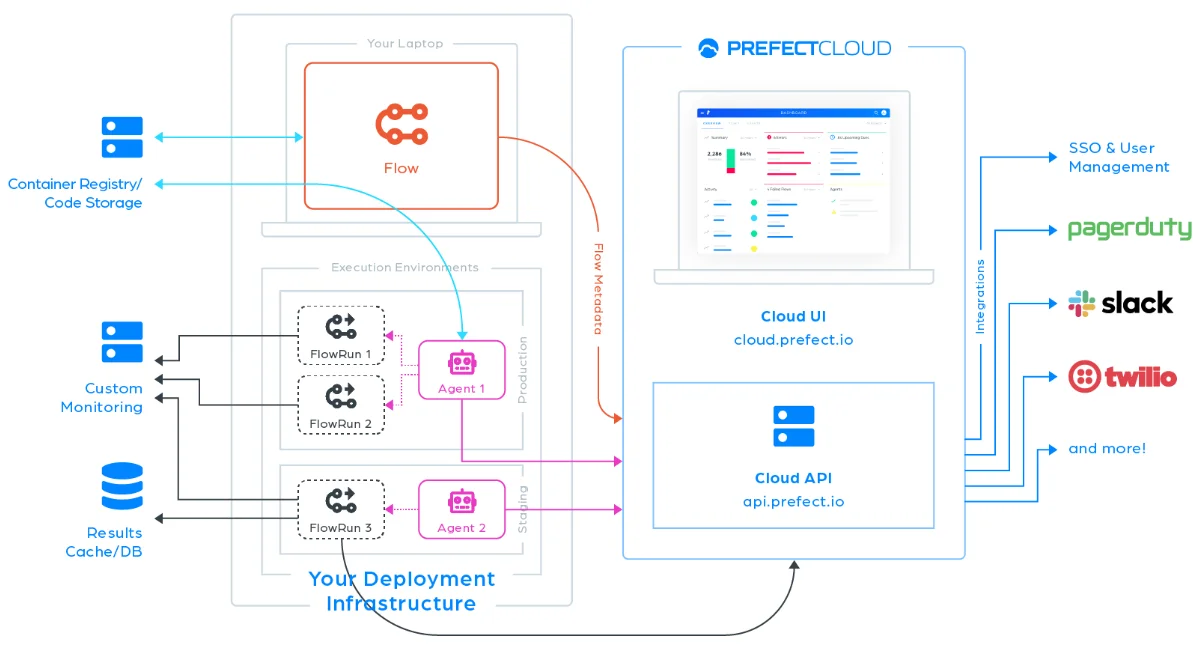

Prefect’s architecture: An overview

The main components (and sub-components) of Prefect’s architecture are:

- Execution layer: Responsible for running flows and their tasks

  - Agents: Polling services that pick up scheduled flow runs from a work pool and submit them for execution (later Prefect 2.x releases deprecated agents in favor of workers)

  - Flows: Functions that define the tasks to be executed and establish task dependencies and triggers

- Orchestration layer: Responsible for managing and tracking data workflows through the Orion API server, the REST API services used for orchestration, and Prefect’s UI
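The polling pattern behind agents and workers can be sketched in a few lines of plain Python. This is a toy model under simplifying assumptions (an in-memory priority queue stands in for a work pool, and a dictionary of callables stands in for deployed flows); `WorkPool`, `Worker`, and `ScheduledRun` are hypothetical names, not Prefect classes.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass(order=True)
class ScheduledRun:
    """A flow run scheduled for a given time (ordering compares the time only)."""
    scheduled_time: datetime
    flow_name: str = field(compare=False)


class WorkPool:
    """Toy work pool: a priority queue of scheduled flow runs."""

    def __init__(self):
        self._runs = []

    def submit(self, run):
        heapq.heappush(self._runs, run)

    def poll(self, now):
        """Remove and return every run whose scheduled time has arrived."""
        due = []
        while self._runs and self._runs[0].scheduled_time <= now:
            due.append(heapq.heappop(self._runs))
        return due


class Worker:
    """Toy worker: polls a work pool and executes whatever is due.

    A real worker would call poll_once in a loop on a fixed interval.
    """

    def __init__(self, pool, registry):
        self.pool = pool
        self.registry = registry  # flow name -> callable
        self.completed = []

    def poll_once(self, now):
        for run in self.pool.poll(now):
            result = self.registry[run.flow_name]()
            self.completed.append((run.flow_name, result))


registry = {"ingest": lambda: "ingested", "report": lambda: "report sent"}
pool = WorkPool()
worker = Worker(pool, registry)

t0 = datetime(2024, 1, 1, 9, 0)
pool.submit(ScheduledRun(t0 + timedelta(minutes=5), "report"))
pool.submit(ScheduledRun(t0, "ingest"))

worker.poll_once(now=t0)                          # only "ingest" is due
worker.poll_once(now=t0 + timedelta(minutes=10))  # now "report" is due too
print(worker.completed)  # [('ingest', 'ingested'), ('report', 'report sent')]
```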

Overview of Prefect 2.0’s architecture - Source: Prefect.

Prefect’s capabilities: An overview

Prefect is lightweight (you can start a local development server with a single command, prefect server start) and works directly with plain Python functions. Its capabilities include:

- Dynamic workflows

- Stateful execution

- Hybrid orchestration

- Caching and mapping

- Parameterized flows

- User-friendly UI

Dynamic workflows

Prefect allows you to define workflows that can adapt to runtime conditions and data dependencies through dynamic task execution.

With Prefect, you build workflows in plain Python, using ordinary control flow such as if statements and loops, without having to declare a DAG up front.
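Because a flow is ordinary Python, regular if statements and loops decide what runs, and how many times, based on data seen at runtime. The hypothetical `daily_flow` below illustrates this with plain functions standing in for tasks; in Prefect you would decorate them with `@flow` and `@task`, but the control flow would read the same way.

```python
def validate(rows):
    """Stand-in task: drop missing values."""
    return [row for row in rows if row is not None]


def backfill(day):
    """Stand-in task: reprocess one day."""
    return f"backfilled {day}"


def daily_flow(rows, missing_days):
    """Hypothetical flow whose shape is decided at runtime by its inputs."""
    clean = validate(rows)
    if not clean:  # branch chosen from data, not declared up front
        return ["nothing to load"]
    actions = [f"loaded {len(clean)} rows"]
    for day in missing_days:  # the number of backfill steps depends on the input
        actions.append(backfill(day))
    return actions


print(daily_flow([1, None, 3], ["2024-01-01", "2024-01-02"]))
# ['loaded 2 rows', 'backfilled 2024-01-01', 'backfilled 2024-01-02']
print(daily_flow([None], ["2024-01-01"]))
# ['nothing to load']
```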

In my quest to add a pipe operator to every language and framework, I've now implemented it for the Prefect workflow engine! You can now play with task.pipe() in the latest release. Prefect is a great framework that has been working well for me for #Bioinformatics, check it out! https://t.co/ROBggaRmf8 pic.twitter.com/0zudLTxFZo

— Michael Milton (@multimeric) March 11, 2022

Integrating the pipe operator into Prefect’s workflow engine. Source: Twitter.

Stateful execution

In Prefect, each task’s state is tracked. These states include scheduled, pending, running, failed, crashed, and more. Each of these states represents a different stage in the lifecycle of a task run.

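When a task’s state changes, Prefect can react to it. Prefect 1.x did this through state handlers; Prefect 2.x offers state change hooks such as on_failure and on_completion, which can, for example, send a notification when a run fails.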
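The lifecycle described above can be modeled as a small state machine. The toy runner below is a sketch under stated assumptions, not Prefect's engine: it records each state transition, retries failures, and calls an `on_state_change` callback in the role of a state handler. The state names loosely follow Prefect's (Pending, Running, AwaitingRetry, Completed, Failed), and `run_with_retries`, `make_flaky`, and `notify` are hypothetical. In Prefect 2 you would configure retries declaratively instead, for example with `@task(retries=2, retry_delay_seconds=5)`.

```python
from enum import Enum


class State(Enum):
    """Toy subset of run states; names loosely follow Prefect's."""
    PENDING = "Pending"
    RUNNING = "Running"
    AWAITING_RETRY = "AwaitingRetry"
    COMPLETED = "Completed"
    FAILED = "Failed"


def run_with_retries(fn, retries=0, on_state_change=None):
    """Toy task runner: records every state transition and retries failures.

    `on_state_change` plays the role of a state handler: it is called
    with each new state as the run moves through its lifecycle.
    """
    history = []

    def set_state(state):
        history.append(state)
        if on_state_change is not None:
            on_state_change(state)

    set_state(State.PENDING)
    for attempt in range(retries + 1):
        set_state(State.RUNNING)
        try:
            result = fn()
        except Exception:
            # Retry if attempts remain; otherwise the run ends as Failed.
            set_state(State.AWAITING_RETRY if attempt < retries else State.FAILED)
            continue
        set_state(State.COMPLETED)
        return result, history
    return None, history


def make_flaky(failures):
    """Hypothetical task body that raises `failures` times, then succeeds."""
    calls = {"n": 0}

    def flaky():
        calls["n"] += 1
        if calls["n"] <= failures:
            raise ConnectionError("transient failure")
        return "ok"

    return flaky


alerts = []


def notify(state):
    """Hypothetical failure hook: collect an alert when a run fails for good."""
    if state is State.FAILED:
        alerts.append(state.value)


result, history = run_with_retries(make_flaky(2), retries=2, on_state_change=notify)
print(result, [s.value for s in history])
# ok ['Pending', 'Running', 'AwaitingRetry', 'Running', 'AwaitingRetry', 'Running', 'Completed']

result, history = run_with_retries(make_flaky(2), retries=1, on_state_change=notify)
print(result, alerts)  # None ['Failed']
```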

As a newcomer in #Prefect, it makes it easy to add retries, dynamic mapping, caching and failure notifications to data pipelines, sometimes web platform renders slow processing flows in the radar. #Prefect in #Python, an efficient data workflow management tool. #mlopszoomcamp pic.twitter.com/dnzwECCEej

— Daniel H. VEDIA-JEREZ (@DHVediaJerez) June 9, 2022

Experiencing easy retries and dynamic mapping in Prefect. Source: Twitter.


Hybrid orchestration

In Prefect, you can run your workflows in various environments: locally on your machine, in the cloud, or in a hybrid setup, where Prefect Cloud orchestrates runs while your code and data stay on your own infrastructure.

Caching and mapping

Prefect allows you to cache the results of your tasks and reuse them in subsequent runs.
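Prefect's caching works by computing a cache key for each task run and reusing the stored result while it has not expired; in Prefect 2 a common setup is `@task(cache_key_fn=task_input_hash, cache_expiration=timedelta(hours=1))`, with `task_input_hash` imported from `prefect.tasks`. The decorator below is a hypothetical, in-memory toy of the same idea, keyed on a hash of the function name and its inputs; the injectable `clock` exists only so expiry can be demonstrated without waiting.

```python
import hashlib
import json
import time

_CACHE = {}  # cache key -> (value, expires_at)


def input_hash(fn_name, args, kwargs):
    """Stable key from the function name and its inputs (toy version)."""
    payload = json.dumps([fn_name, list(args), kwargs], sort_keys=True, default=repr)
    return hashlib.sha256(payload.encode()).hexdigest()


def cached_task(expiration_seconds, clock=time.monotonic):
    """Hypothetical decorator: reuse a result until it expires."""
    def decorator(fn):
        def wrapper(*args, **kwargs):
            key = input_hash(fn.__name__, args, kwargs)
            now = clock()
            hit = _CACHE.get(key)
            if hit is not None and now < hit[1]:
                return hit[0]  # cache hit: skip the work
            value = fn(*args, **kwargs)
            _CACHE[key] = (value, now + expiration_seconds)
            return value
        return wrapper
    return decorator


fake_now = [0.0]  # controllable clock for the demo
calls = []


@cached_task(expiration_seconds=60, clock=lambda: fake_now[0])
def fetch_weather(city):
    calls.append(city)  # records real (uncached) executions
    return f"weather for {city}"


fetch_weather("Oslo")
fetch_weather("Oslo")  # served from the cache
fake_now[0] = 61.0
fetch_weather("Oslo")  # expired, so it runs again
print(calls)  # ['Oslo', 'Oslo']
```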

It also allows you to apply the same task logic to multiple inputs in parallel.
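Mapping can be pictured as submitting one run of the same function per input and collecting the results in input order. The `map_task` helper below is a hypothetical thread-pool toy, not Prefect's API; in Prefect 2 it roughly corresponds to calling `my_task.map(items)`, where arguments that should not be mapped over can be wrapped with `unmapped`.

```python
from concurrent.futures import ThreadPoolExecutor


def map_task(fn, items, *, max_workers=4, **static_kwargs):
    """Toy mapping: one call of `fn` per item, run concurrently.

    `static_kwargs` are passed unchanged to every call, similar in spirit
    to marking an argument as not mapped.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(fn, item, **static_kwargs) for item in items]
        # Collect results in input order, not completion order.
        return [future.result() for future in futures]


def convert(amount, rate):
    """Hypothetical task body: convert one amount at a fixed rate."""
    return round(amount * rate, 2)


print(map_task(convert, [10, 25, 40], rate=0.5))  # [5.0, 12.5, 20.0]
```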

Parameterized flows

Prefect lets you parameterize flows at runtime: parameters are passed as positional or keyword arguments, and Prefect validates them against the flow function’s type hints.
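Prefect validates flow parameters against the function's type hints before a run starts. The `bind_parameters` helper below is a hypothetical, standard-library-only sketch of that idea: it binds positional and keyword arguments, fills in defaults, and coerces values to simple annotated types (`int`, `float`, `str`). Prefect itself relies on pydantic for this, so its coercion rules are richer than this toy's.

```python
import inspect

COERCIBLE = (int, float, str)  # bool deliberately excluded: bool("False") is True


def bind_parameters(fn, *args, **kwargs):
    """Bind arguments to fn's signature, apply defaults, coerce simple annotated types."""
    sig = inspect.signature(fn)
    bound = sig.bind(*args, **kwargs)  # raises TypeError for unknown or missing args
    bound.apply_defaults()
    params = {}
    for name, value in bound.arguments.items():
        annotation = sig.parameters[name].annotation
        if annotation in COERCIBLE and not isinstance(value, annotation):
            value = annotation(value)  # e.g. "20" -> 20; raises ValueError if impossible
        params[name] = value
    return params


def train_model(learning_rate: float = 0.01, epochs: int = 10, run_name: str = "baseline"):
    """Hypothetical flow body used only for this demo."""
    return f"{run_name}: lr={learning_rate}, epochs={epochs}"


params = bind_parameters(train_model, 0.1, epochs="20")
print(params)                 # {'learning_rate': 0.1, 'epochs': 20, 'run_name': 'baseline'}
print(train_model(**params))  # baseline: lr=0.1, epochs=20
```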

Refactored code. Introduced #prefect. Going to parameterize and get experiments streamlined in #mlfow. How is your day going?#ML #MachineLearning #mlops

— Venkat Ramakrishnan (@flyvenkat) August 24, 2022

Streamlining experiments through parameterization in Prefect. Source: Twitter.

User-friendly UI

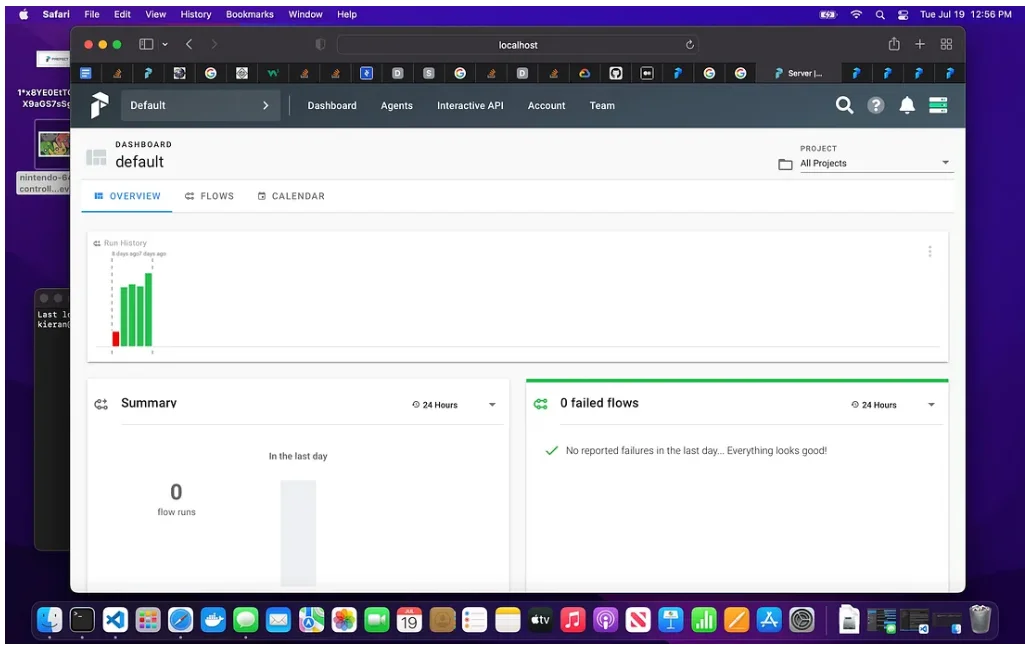

The Prefect UI helps you track and manage your flows, runs, and deployments.

The Prefect UI - Source: Medium.

It displays many useful insights about your flow runs, such as flow run summaries, deployed flow details, scheduled flow runs, task dependencies, logs, warnings, and more.

Prefect setup: Getting started

To get started with Prefect, follow these steps:

- Install Prefect: Use pip or conda to install Prefect on your local machine. Make sure that you have Python 3.8 or newer.

- Connect to API: Use the Prefect CLI or web UI to connect to Prefect’s API. Choose between Prefect Cloud for a hosted experience (prefect cloud login) and self-hosting your own server (prefect server start).

- Write a flow: Use the @flow decorator, together with @task-decorated functions, to assemble your flow.

- Deploy: Use your flow’s serve method to create a deployment, a remote entity with its own API that triggers or schedules flow runs.

- Monitor: Once your flow is running, use Prefect Cloud or your Prefect server to keep tabs on its state and results. Use the web UI or CLI for real-time logs, artifacts, and metrics.
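Deployments created with serve can run on a schedule; recent Prefect 2 releases accept schedule arguments such as `interval` or `cron` on `serve`. The function below is a hypothetical plain-Python sketch, not Prefect's scheduler, of how an interval schedule anchored at a start time yields its upcoming run times.

```python
from datetime import datetime, timedelta


def next_run_times(anchor, interval, after, count):
    """Next `count` run times strictly after `after`, on the grid anchor + k * interval."""
    if after < anchor:
        k = 0  # the anchor itself is the first run
    else:
        k = (after - anchor) // interval + 1  # first grid point past `after`
    return [anchor + (k + i) * interval for i in range(count)]


anchor = datetime(2024, 1, 1, 0, 0)
runs = next_run_times(anchor, timedelta(hours=6), after=datetime(2024, 1, 1, 7, 30), count=3)
for run in runs:
    print(run.isoformat())
# 2024-01-01T12:00:00
# 2024-01-01T18:00:00
# 2024-01-02T00:00:00
```

Anchoring the grid, rather than counting from "now", keeps run times stable no matter when the schedule is evaluated.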

Summing up

Prefect is an API-first data orchestration tool that’s lightweight and comes with a real-time UI.

Open-source alternatives to Prefect include Airflow, Dagster, Luigi, and Argo.

The right data orchestration tool for you will largely depend on your current data ecosystem, use cases, workflow complexity, and engineering skills.

Prefect: Related Reads

- What is data orchestration: Definition, uses, examples, and tools

- Open source ETL tools: 7 popular tools to consider in 2023

- Open-source data observability tools: 7 popular picks in 2023

- ETL vs. ELT: Exploring definitions, origins, strengths, and weaknesses

- 10 popular transformation tools in 2023

- Dagster 101: Everything you need to know

- Airflow 101: Everything you need to know
