dbt Data Quality: Here’s Everything You Need to Know in 2025


dbt is a widely used data transformation tool that offers an optional orchestration layer in its cloud version. Moving and transforming data, whether at the source or through pipelines, can introduce quality issues, which makes data quality in dbt crucial for extracting value from your data.


Initially, dbt supported only data tests, which validate entire datasets after they are built and offer little flexibility for model-specific use cases. With the release of v1.8, dbt introduced a built-in unit testing framework that lets users run isolated, customizable tests at the model level.

This article examines how dbt’s data quality features, including unit testing, improve data reliability and trust. It also discusses how a control plane for data can further optimize your data quality management.

Table of contents

- dbt data quality: Native features

- Holistic data quality with dbt and Atlan

- Summing up

- dbt data quality: Related reads

dbt data quality: Native features

dbt offers three broad categories of native features for managing data quality:

- Data tests

- Unit tests

- Model contracts

Let’s explore the specifics of each category further.

1. Data tests

Previously known simply as “tests” in dbt, data tests are commonly associated with the dbt test command, which reports in the command line whether each test passed or failed. These tests validate your data by asserting specific conditions and checking whether those assertions hold true.

“dbt test quickly became a beloved command, allowing me to run our full suite of data quality tests in production each day. And these same tests would run in CI and development.” - Doug Beatty, Senior Developer Experience Advocate, dbt Labs

For instance, an assertion might check whether a specific field in your dataset contains any null values. This ensures that critical fields, such as customer IDs or transaction dates, are not left empty, which could otherwise compromise downstream processes like reporting or analytics.
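dbt data tests follow a simple convention: each test is a SELECT query that returns the rows violating the assertion, and the test passes when that query returns zero rows. The minimal Python sketch below mimics that convention for the null check just described, using the standard-library sqlite3 module as a stand-in warehouse. The orders table, its columns, and the run_data_test helper are hypothetical illustrations, not dbt internals.

```python
import sqlite3

# Hypothetical, already-built "orders" model to test against.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, ordered_at TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 101, "2025-01-05"),
        (2, None, "2025-01-06"),  # missing customer ID
        (3, 103, None),           # missing transaction date
        (4, 104, "2025-01-07"),
    ],
)

# The assertion, expressed as a query that selects the rows violating it.
FAILING_ROWS_SQL = """
    SELECT order_id
    FROM orders
    WHERE customer_id IS NULL OR ordered_at IS NULL
    ORDER BY order_id
"""


def run_data_test(connection, sql):
    """Run a failing-rows query; the test passes only if no rows come back."""
    failures = connection.execute(sql).fetchall()
    status = "PASS" if not failures else f"FAIL {len(failures)}"
    return status, failures


status, failures = run_data_test(conn, FAILING_ROWS_SQL)
print(status, failures)  # FAIL 2 [(2,), (3,)]
```

Because the test only needs the failing rows, the same query doubles as a debugging aid: the rows it returns are exactly the records you need to investigate.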

Data tests can be of two types:

- Singular: Written as standalone SQL queries (saved as .sql files in your project’s tests directory), singular data tests are one-off assertions used for a single purpose.

- Generic: Defined once as parameterized queries in special Jinja test blocks and then applied to models and columns in YAML files, generic tests are reusable. dbt comes with four generic tests out of the box:

  - unique
  - not_null
  - accepted_values
  - relationships

You can also write custom generic tests and add them to your own reusable test library.
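Generic tests are essentially parameterized failing-rows queries: write the query once, then apply it to any model and column. The Python sketch below imitates the four built-in generic tests with plain functions that take a model and column name and return SQL selecting the failing rows. In dbt this parameterization is done with Jinja, and the SQL dbt actually compiles differs in detail; the function bodies, the customers and orders tables, and the sqlite3 setup are illustrative assumptions. The extra argument names (values, to, field) mirror the keys these tests take in dbt YAML.

```python
import sqlite3


# Each function returns SQL selecting failing rows, a simplified imitation of
# what dbt's built-in generic tests check (not dbt's compiled SQL).
# Identifiers and values are inlined, so only use trusted, hard-coded config.
def unique(model, column):
    return (
        f"SELECT {column}, COUNT(*) AS n FROM {model} "
        f"WHERE {column} IS NOT NULL GROUP BY {column} HAVING COUNT(*) > 1"
    )


def not_null(model, column):
    return f"SELECT * FROM {model} WHERE {column} IS NULL"


def accepted_values(model, column, values):
    in_list = ", ".join(f"'{v}'" for v in values)
    return f"SELECT * FROM {model} WHERE {column} NOT IN ({in_list})"


def relationships(model, column, to, field):
    # Child rows whose key has no matching row in the parent model.
    return (
        f"SELECT c.* FROM {model} AS c LEFT JOIN {to} AS p "
        f"ON c.{column} = p.{field} "
        f"WHERE c.{column} IS NOT NULL AND p.{field} IS NULL"
    )


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER);
    INSERT INTO customers VALUES (1), (2);
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, status TEXT);
    INSERT INTO orders VALUES (10, 1, 'placed'), (11, 2, 'shipped'), (11, 3, 'lost');
""")

# Roughly analogous to listing tests under a model's columns in YAML.
checks = {
    "unique_order_id": unique("orders", "order_id"),
    "not_null_customer_id": not_null("orders", "customer_id"),
    "accepted_values_status": accepted_values(
        "orders", "status", ["placed", "shipped", "returned"]
    ),
    "relationships_customer_id": relationships(
        "orders", "customer_id", to="customers", field="customer_id"
    ),
}

results = {name: len(conn.execute(sql).fetchall()) for name, sql in checks.items()}
for name, n_failures in results.items():
    print(name, "PASS" if n_failures == 0 else f"FAIL {n_failures}")
```

Here the duplicated order_id, the unexpected 'lost' status, and the orphaned customer_id 3 each fail one test, while not_null passes.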

2. Unit tests

A major limitation of data tests is that they can run only after a model has been fully materialized, so you spend significant compute moving and transforming data, only to discover quality issues afterward.

dbt addressed this with unit tests, which validate a model’s SQL logic against static mock inputs before the model is materialized. Like unit tests in application development, these checks verify that a model works correctly in isolation, which reduces compute usage and surfaces issues early.

Unit tests streamline the development cycle, letting data engineers verify business logic efficiently before running it against real data.
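A unit test has three parts: static mock input rows, the model’s SQL logic, and the expected output rows. dbt declares these in YAML (mock inputs under “given” and expected rows under “expect”); the Python sketch below reproduces only the idea, with an in-memory SQLite database standing in for the warehouse. The model SQL, the stg_orders table, and the run_unit_test helper are hypothetical.

```python
import sqlite3

# Hypothetical model logic under test (in dbt, this would be the model's SQL).
MODEL_SQL = """
    SELECT order_id,
           CASE WHEN amount >= 100 THEN 'large' ELSE 'small' END AS order_size
    FROM stg_orders
    ORDER BY order_id
"""


def run_unit_test(model_sql, mock_rows, expected_rows):
    """Load static mock rows into a throwaway database, run the model SQL,
    and compare its output with the expected rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE stg_orders (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO stg_orders VALUES (?, ?)", mock_rows)
    actual = conn.execute(model_sql).fetchall()
    conn.close()
    return actual == expected_rows, actual


# Static mock input, deliberately including the boundary value 100.
given = [(1, 20.0), (2, 100.0), (3, 250.0)]
expect = [(1, "small"), (2, "large"), (3, "large")]

passed, actual = run_unit_test(MODEL_SQL, given, expect)
print("PASS" if passed else f"FAIL: got {actual}")  # PASS
```

Because the inputs are a handful of fixed rows, the test runs in milliseconds and never needs production data.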

dbt recommends adding unit tests to a model if:

- Your SQL contains complex logic (Regex, date math, window functions, etc.)

- You’re writing logic with a history of reported bugs

- You must handle edge cases not yet seen in your data

- You have to refactor transformation logic

- You have models with high ‘criticality’ (public, contracted models, or those upstream of key exposures)
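To illustrate the date-math and unseen-edge-case criteria, suppose a model computes days to ship, and production data has never contained an order spanning a leap day or an order that hasn’t shipped yet. A unit test can cover both with hand-written rows. The Python sketch below uses SQLite’s julianday() function as a stand-in for your warehouse’s date functions; the table, columns, and expected values are illustrative assumptions.

```python
import sqlite3

# Hypothetical model: days between ordering and shipping, NULL if not shipped.
MODEL_SQL = """
    SELECT order_id,
           CAST(julianday(shipped_at) - julianday(ordered_at) AS INTEGER)
               AS days_to_ship
    FROM stg_orders
    ORDER BY order_id
"""

# Edge cases that may not exist in real data yet.
given = [
    (1, "2024-02-28", "2024-03-01"),  # spans a leap day: 2 days, not 1
    (2, "2025-02-28", "2025-03-01"),  # non-leap year: 1 day
    (3, "2025-03-10", None),          # not shipped yet: expect NULL
]
expect = [(1, 2), (2, 1), (3, None)]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE stg_orders (order_id INTEGER, ordered_at TEXT, shipped_at TEXT)"
)
conn.executemany("INSERT INTO stg_orders VALUES (?, ?, ?)", given)
actual = conn.execute(MODEL_SQL).fetchall()

assert actual == expect, f"unit test failed: {actual}"
print("PASS")
```

If someone later refactors the model (another criterion above), the same fixed rows immediately show whether the leap-day and NULL behavior survived.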

dbt also recommends running unit tests in development and in CI (continuous integration) to catch issues before deployment, rather than in production environments.

“Since the inputs of the unit tests are static, there’s no need to use additional compute cycles running them in production. Use them in development for a test-driven approach and CI to ensure changes don’t break them.” - dbt documentation

3. Model contracts

Unit tests and data tests provide coverage before and after model materialization, respectively. Model contracts, by contrast, apply while the model is being built: they define guarantees about the model’s shape that must hold before its data is processed downstream. A contract enforces the model’s schema, meaning its column names and data types (including precision), and can declare constraints such as primary and foreign keys.

Also, read → dbt data contracts

When a model’s contract is enforced, dbt runs preflight checks before building the model to confirm that its SQL returns exactly the columns and data types defined in the contract. However, constraint support depends on the data platform hosting your data: depending on the platform, a given constraint may be enforced, recorded only as metadata, or not supported at all, so what a contract can guarantee varies from platform to platform.
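Conceptually, the preflight check compares the contract’s declared columns and types with what the model’s SQL would actually return, and stops the build on any mismatch. The Python sketch below shows that comparison on plain dictionaries. The contract contents and the check_contract helper are hypothetical; dbt performs its real check against the column types reported by your data platform.

```python
# Hypothetical contract for a model: column name -> declared data type.
CONTRACT = {"customer_id": "integer", "email": "text", "signup_date": "date"}


def check_contract(contract, actual_columns):
    """Return a list of violations; an empty list means the check passes.

    actual_columns maps column name -> data type the model's SQL produces.
    Type names are compared case-insensitively.
    """
    violations = []
    for name, declared in contract.items():
        if name not in actual_columns:
            violations.append(f"missing column: {name}")
        elif actual_columns[name].lower() != declared.lower():
            violations.append(
                f"type mismatch on {name}: contract says {declared}, "
                f"model returns {actual_columns[name]}"
            )
    for name in actual_columns:
        if name not in contract:
            violations.append(f"unexpected column: {name}")
    return violations


# The model's SQL returns the date as text and adds an undeclared column.
actual = {"customer_id": "integer", "email": "text",
          "signup_date": "text", "is_test_account": "boolean"}

for problem in check_contract(CONTRACT, actual):
    print(problem)
```

Checking for unexpected columns as well as missing ones matters: a contract is a promise to downstream consumers about the exact shape of the model, not just a minimum set of fields.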

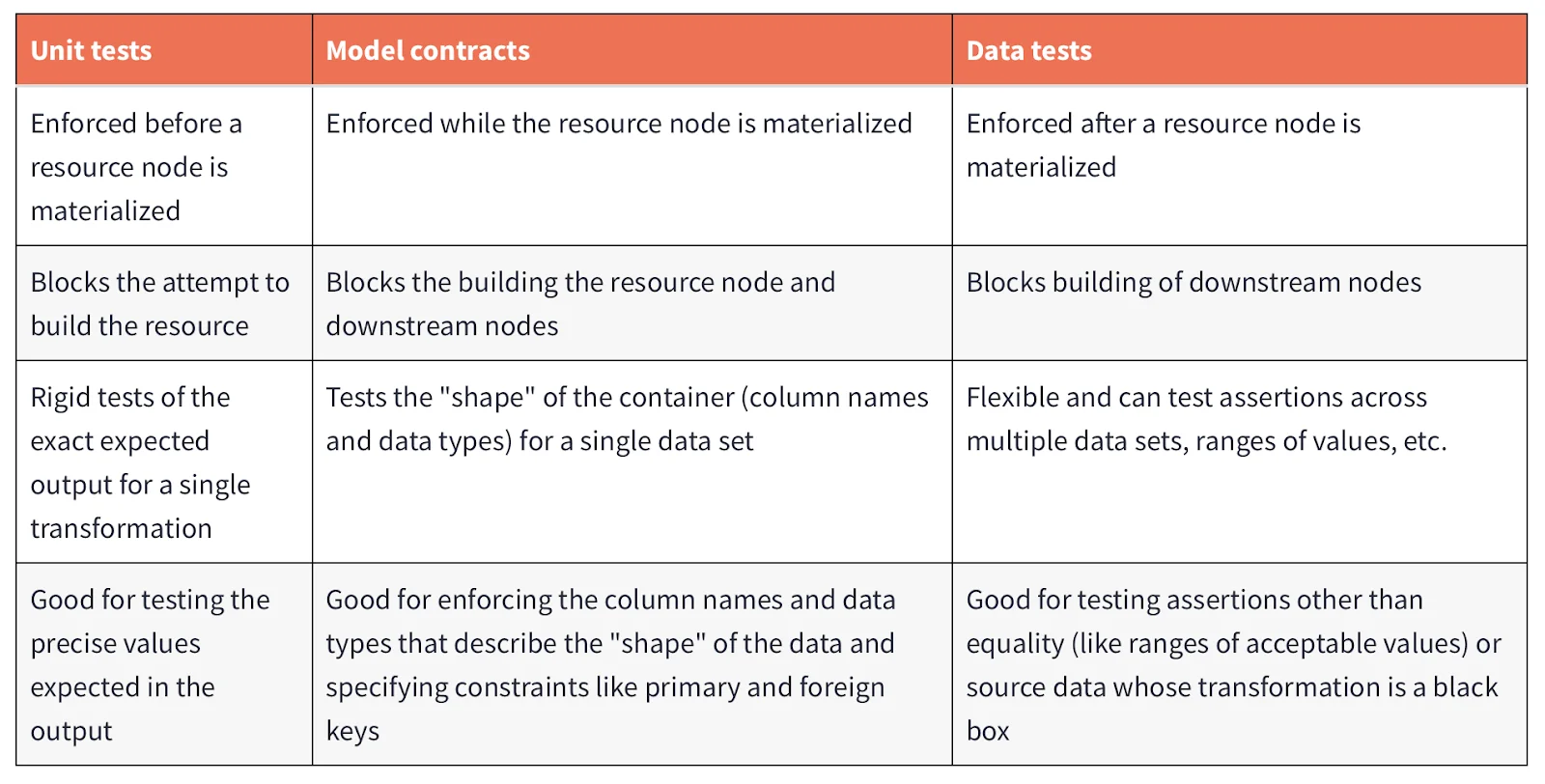

Here’s a handy table that summarizes and compares the three native dbt data quality options available.

An overview of unit tests, model contracts, and data tests in dbt - Source: dbt Blog.

While dbt is often the central transformation tool, in practice business logic is frequently split across stored procedures, standalone SQL queries, and even the ingestion layer, so contracts and tests need to work together across the whole stack.

A control plane for data can help you manage data quality across the broader data ecosystem, beyond dbt. That’s where Atlan comes in, with its native data quality features and close integrations with dbt and other data quality tools.

Holistic data quality with dbt and Atlan

Atlan integrates with a wide range of tools to bring metadata into a unified control plane, enabling centralized management of data access, governance, lineage, and quality. Atlan’s partnership with dbt extends this capability by making dbt-specific metadata (such as metrics, asset ownership, and lineage) accessible within Atlan, giving data teams a 360° view.

In addition to cataloging and lineage, Atlan offers features focused on data quality, policy management, and contract enforcement:

- Data contracts: Define data contracts in YAML specs to validate agreements between producers and consumers of data. Using the contract metadata, you can enforce data quality rules as part of your workflows. Currently, data contracts are supported for tables, materialized views, views, and output ports of data products in Atlan.

- Data profiling: Continuously profile existing data with profiling playbooks that track metrics such as missing and unique values and minimum, maximum, and average length, among others. You can run these playbooks on demand or on a schedule.

- Data policy compliance: Define six types of policies in the Policy Center, including those for data quality, which ensure data accuracy, reliability, and consistency within your system.
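As a rough illustration of what a profiling run computes, the Python sketch below derives the profiling metrics mentioned above for a single column. It is not Atlan’s implementation; the profile_column function and the sample values are hypothetical.

```python
def profile_column(values):
    """Compute simple profile metrics for one column of string values.

    None counts as missing; length metrics use only the non-missing values.
    An empty string is present (not missing) but has length 0.
    """
    present = [v for v in values if v is not None]
    lengths = [len(v) for v in present]
    return {
        "row_count": len(values),
        "missing": len(values) - len(present),
        "unique": len(set(present)),
        "min_length": min(lengths) if lengths else None,
        "max_length": max(lengths) if lengths else None,
        "avg_length": round(sum(lengths) / len(lengths), 2) if lengths else None,
    }


emails = ["ana@example.com", "bo@example.com", None, "ana@example.com", ""]
print(profile_column(emails))
```

Tracking these numbers over time is what makes profiling useful for quality: a sudden rise in missing values, or a min_length of 0 on a field that should never be blank, signals a problem even when no explicit test exists for it.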

While Atlan supports data profiling and natively integrates with dedicated tools like Anomalo, Soda, and Monte Carlo, it does not currently ingest testing or data quality metadata directly from dbt. By combining cataloging, lineage, governance, and integrations with third-party tools, Atlan provides a comprehensive solution for managing data quality alongside metadata.

Summing up

This article introduced you to dbt’s native data quality features, which include data tests, unit tests, and model contracts.

It also introduced the concept of a single control plane for data, not just for access control and governance, but also for data quality. We explored how Atlan can serve as that control plane, especially through its integrations with data quality tools and its native data quality and profiling features. We also saw how closely Atlan integrates with dbt; you can learn more about this on Atlan’s official blog.

dbt data quality: Related reads

- dbt Data Catalog: Discussing Native Features Plus Potential to Level Up Collaboration and Governance with Atlan

- dbt Data Governance: How to Enable Active Data Governance for Your dbt Assets

- dbt Data Lineage: How It Works and How to Leverage It

- dbt Metadata Management: How to Bring Active Metadata to dbt

- Data contracts: What Are They? & How To Implement One?

- Data Contracts Open Questions You Need to Ask

- Snowflake + dbt: Supercharge your transformation workloads

- dbt Data Contracts: Quick Primer With Notes on How to Enforce
