Data Classification Tools: What Evaluation Qualities & Criteria Matter in 2025

Last Updated on: April 29th, 2025 | 10 min read


Data classification tools help identify and categorize your data assets based on business domains, protection requirements, security standards, and regulations. They form the foundation for applying security controls, enforcing governance policies, and ensuring responsible data use.

As data volumes surge, systems fragment, and regulations tighten (GDPR, CCPA, DORA), enterprises need classification tools that operate in real time, scale with growth, and adapt to change.

In this article, we’ll explore:

- Core capabilities of modern data classification tools

- Evaluation criteria, benchmarks and scoring frameworks

- Common pitfalls to avoid

- Atlan’s approach to data classification to find, trust, and govern AI-ready data

Table of Contents

- What qualities should you look for in data classification tools?

- How to evaluate data classification tools for your enterprise

- What are some of the most common pitfalls when choosing a data classification tool?

- How does Atlan approach data classification?

- Wrapping up

- Data classification tools: Frequently asked questions (FAQs)

What qualities should you look for in data classification tools?

According to Gartner’s Magic Quadrant for Data Governance, data classification organizes data by relevant categories to make data easier to locate and retrieve, while supporting risk management, compliance, and data security.

So, while evaluating options, you should focus on the following essential qualities:

- Core classification capabilities: Look for tagging, labeling, and metadata-driven classification features. Automation should be built-in to reduce manual effort and keep pace with dynamic data environments.

- Support for all data types: Your tool must handle structured, semi-structured, and unstructured data—across files, databases, cloud storage, applications, and streams—without needing separate systems or processes.

- Enterprise scalability: Ensure the tool can operate across petabyte-scale datasets, diverse business domains, and global regions, while maintaining performance, policy consistency, and governance traceability.

- Integration across cloud and on-prem systems: Native connectors to cloud platforms like Snowflake, Databricks, AWS, Azure, and on-premise systems are essential to apply classification directly where the data lives.

- Bi-directional sync with source metadata: Prioritize tools that automatically sync classifications with your source system metadata. This ensures consistency, reduces manual overhead, and keeps metadata aligned as your data evolves.

Also, read → Marie Kondo your data catalog and spark joy with data classification & tagging

How to look beyond features: Six critical dimensions to evaluate

Once you know which capabilities matter, it’s time to dig deeper into how a tool actually performs. A strong data classification tool must do well across six critical dimensions:

- Accuracy: Can the tool identify sensitive data reliably, without flooding teams with false positives or missing critical assets?

- Flexibility: Can it adapt to your organization’s custom data models, business rules, and evolving definitions—rather than forcing rigid, predefined templates?

- Automation: What rule-based or AI-based classification options are available to scale classification without heavy manual effort?

- Governance integration: Does it link classification to data access, masking, and compliance policies?

- Lineage awareness: Can classifications propagate through lineage to downstream assets, maintaining compliance and context across upstream and downstream systems?

- User experience: Is it no-code friendly for data stewards and intuitive for analysts?
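To make the lineage-awareness dimension concrete, here is a minimal sketch of tag propagation, assuming lineage is available as a simple upstream-to-downstream adjacency map. The asset names, the map, and the `propagate_tags` helper are hypothetical; real tools expose lineage and propagation through their own interfaces.

```python
from collections import deque


def propagate_tags(
    lineage: dict[str, list[str]],
    source_tags: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Copy each tagged asset's tags to every asset downstream of it (breadth-first)."""
    tags = {asset: set(asset_tags) for asset, asset_tags in source_tags.items()}
    for origin, origin_tags in source_tags.items():
        queue = deque([origin])
        visited = {origin}
        while queue:
            current = queue.popleft()
            for child in lineage.get(current, []):
                if child in visited:
                    continue  # guards against cycles and repeated paths
                visited.add(child)
                tags.setdefault(child, set()).update(origin_tags)
                queue.append(child)
    return tags


# Hypothetical lineage: upstream asset -> list of direct downstream assets.
lineage = {
    "raw.customers": ["staging.customers"],
    "staging.customers": ["marts.customer_360", "marts.churn_features"],
    "raw.orders": ["marts.customer_360"],
}
# Hypothetical tags applied at the source.
source_tags = {"raw.customers": {"PII"}, "raw.orders": {"Financial"}}

propagated = propagate_tags(lineage, source_tags)
```

In this example, `marts.customer_360` inherits both `PII` and `Financial` because it sits downstream of both sources. During an evaluation, it is worth checking whether a tool handles this kind of multi-parent inheritance.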

How to evaluate data classification tools for your enterprise

Choosing the right data classification tool often comes down to how well it performs in a real environment. Here’s how you can structure your evaluation:

- Run a proof-of-concept

- Track key metrics

- Ask targeted questions during evaluation

- Set up a scoring framework to compare data classification tools

1. Run a proof-of-concept (PoC)

Test the tool against real datasets representative of your production environment, across structured, unstructured, and semi-structured formats. Validate its ability to classify at scale, handle edge cases, and surface insights with minimal tuning.

2. Track key metrics

Key metrics to measure the effectiveness of a data classification tool include (but aren’t limited to):

- Classification precision and recall: How accurately does the tool identify sensitive, critical, or governed data?

- Coverage percentage: What percentage of your total data estate is classified after the first pass?

- Time to classify: How long does the tool take to classify a new dataset at enterprise scale?

- Metadata enrichment rate: How effectively does the tool enrich existing assets with context (like business terms, tags, sensitivity labels)?

- Rule hit rate: How often are automated rules or AI models correctly applied without manual intervention?

- Auto-suggestion accuracy: For tools using AI, how accurate are their classification suggestions before human review?
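As a rough illustration of the first two metrics, the sketch below computes precision, recall, and coverage from a hand-labeled PoC sample. The column names, the asset counts, and the helper functions are hypothetical and not tied to any specific tool.

```python
def precision_recall(predicted: set[str], actual: set[str]) -> tuple[float, float]:
    """Precision and recall for one label, given sets of asset identifiers."""
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall


def coverage_pct(classified_assets: int, total_assets: int) -> float:
    """Percentage of the data estate that carries at least one classification."""
    return 100.0 * classified_assets / total_assets if total_assets else 0.0


# Hypothetical PoC sample: columns the tool tagged as PII
# versus columns a data steward confirmed as PII.
tool_tagged_pii = {"customers.email", "customers.ssn", "orders.notes", "users.phone"}
steward_confirmed_pii = {"customers.email", "customers.ssn", "users.phone", "users.dob"}

precision, recall = precision_recall(tool_tagged_pii, steward_confirmed_pii)
coverage = coverage_pct(classified_assets=820, total_assets=1000)
```

Here the tool produced one false positive (`orders.notes`) and missed one true PII column (`users.dob`), so both precision and recall come out at 0.75. Tracking the two separately shows whether a tool tends to over-tag or under-tag.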

3. Ask targeted questions during evaluation

During the evaluation, ask vendors targeted questions about setup and implementation, use cases, success stories, and more:

- How much custom configuration is required to align the tool with our data models and governance standards?

- Can the tool handle hybrid cloud, multi-cloud, and on-premise environments?

- How does classification integrate with lineage, access control, and compliance tools?

- How is classification performance monitored post-deployment (dashboards, alerts, metrics)?

- How are conflicts handled if different systems tag the same asset differently?

- What real-world precision/recall benchmarks can you share based on client deployments?

- How customizable are the AI models or rule engines—can we tune them to our data domains without vendor dependency?

4. Set up a scoring framework to compare data classification tools

Set up a simple matrix to score shortlisted tools across dimensions like classification accuracy, scalability, ease of integration, user experience, governance features, and total cost of ownership.

Use the following table to score and compare different data classification tools based on weighted priorities that matter the most to your organization.

| Evaluation Criteria | Weight (%) | Tool A Score (1–5) | Tool B Score (1–5) | Tool C Score (1–5) |
|---|---|---|---|---|
| Classification precision and recall | 20% | | | |
| Extensibility across your tech and data stack | 20% | | | |
| Bi-directional sync with source metadata | 15% | | | |
| Governance integration (lineage, data access) | 15% | | | |
| Automation and AI | 10% | | | |
| Ease of custom classification setup | 10% | | | |
| User experience (no-code, UI intuitiveness) | 5% | | | |
| Vendor responsiveness | 5% | | | |
| Weighted Total | 100% | | | |
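The weighted total in the last row is a weighted average of the 1–5 scores. A minimal sketch of the arithmetic follows, using the weights from the table; the scores for the example tool are made up purely for illustration.

```python
# Weights from the table above, expressed as fractions of 1.0.
WEIGHTS = {
    "Classification precision and recall": 0.20,
    "Extensibility across your tech and data stack": 0.20,
    "Bi-directional sync with source metadata": 0.15,
    "Governance integration (lineage, data access)": 0.15,
    "Automation and AI": 0.10,
    "Ease of custom classification setup": 0.10,
    "User experience (no-code, UI intuitiveness)": 0.05,
    "Vendor responsiveness": 0.05,
}


def weighted_total(scores: dict[str, int]) -> float:
    """Weighted average on the same 1-5 scale as the individual scores."""
    if set(scores) != set(WEIGHTS):
        raise ValueError("scores must cover exactly the criteria in WEIGHTS")
    return sum(WEIGHTS[criterion] * score for criterion, score in scores.items())


# Hypothetical scores for one shortlisted tool (illustrative only).
tool_a_scores = {
    "Classification precision and recall": 4,
    "Extensibility across your tech and data stack": 5,
    "Bi-directional sync with source metadata": 3,
    "Governance integration (lineage, data access)": 4,
    "Automation and AI": 4,
    "Ease of custom classification setup": 3,
    "User experience (no-code, UI intuitiveness)": 5,
    "Vendor responsiveness": 4,
}

tool_a_total = round(weighted_total(tool_a_scores), 2)
```

Because the weights sum to 100%, the result stays on the 1–5 scale, which makes totals directly comparable across tools. Adjust the weights to match your own priorities before scoring.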

Evaluating features and product dimensions alone isn’t enough—organizations often stumble into predictable traps when adopting classification solutions. Let’s look at the most common pitfalls that enterprises should bear in mind.

What are some of the most common pitfalls when choosing a data classification tool?

The most common pitfalls when evaluating data classification tools include:

- Over-focusing on fingerprinting without metadata context: Tools that only scan content without capturing metadata (ownership, domain, sensitivity) miss the bigger picture. Metadata context is essential for accurate governance, AI-readiness, and scalable compliance.

- Ignoring long-term operational overhead: Tools that require heavy manual tagging or constant rule-tuning won’t scale. Prioritize automation, AI assistance, and bulk-editing capabilities to minimize operational drag over time.

- Overlooking downstream governance use cases: Classification should flow directly into access control, data masking, retention policies, and compliance workflows. Choose tools that integrate classification with actionable governance.

- Choosing tools that don’t integrate with your stack: Lack of deep integrations with cloud data warehouses, BI tools, catalogs, and governance platforms will lead to silos and friction. Ensure your classification tool can bi-directionally sync with your broader data and tech environment. Pick tools built on an open, extensible architecture.

Also, read → Role of metadata management in enterprise AI

How does Atlan approach data classification?

One of the biggest hurdles to data classification is fragmentation: inconsistent tag definitions across tools create confusion for data producers and consumers alike. A modern, proactive approach shifts classification closer to the source, ensuring consistency without sacrificing flexibility.

Atlan acts as a metadata control plane that allows enterprises to define, manage, and sync classification tags across systems like Snowflake, dbt, BigQuery, and more. Key classification and governance capabilities include (but aren’t limited to):

- Tag-based classification: Create and apply visual tags like PII, Confidential

- Automation via playbooks: Use metadata patterns to auto-classify assets with rule-based workflows

- Integration with external classifiers: Connect with external classifiers (like AWS Macie), import classifications from source systems

- Granular tag values: Add detail to classifications with tag values (e.g., Email, SSN)

- Lineage-aware propagation: Automatically extend tags across related assets

By making classification scalable, flexible, and consistent across platforms, Atlan helps enterprises embed trust into every layer of their data and analytics environment.

Also, read → Tags in Atlan | Setting up playbooks in Atlan | Tag propagation in Atlan

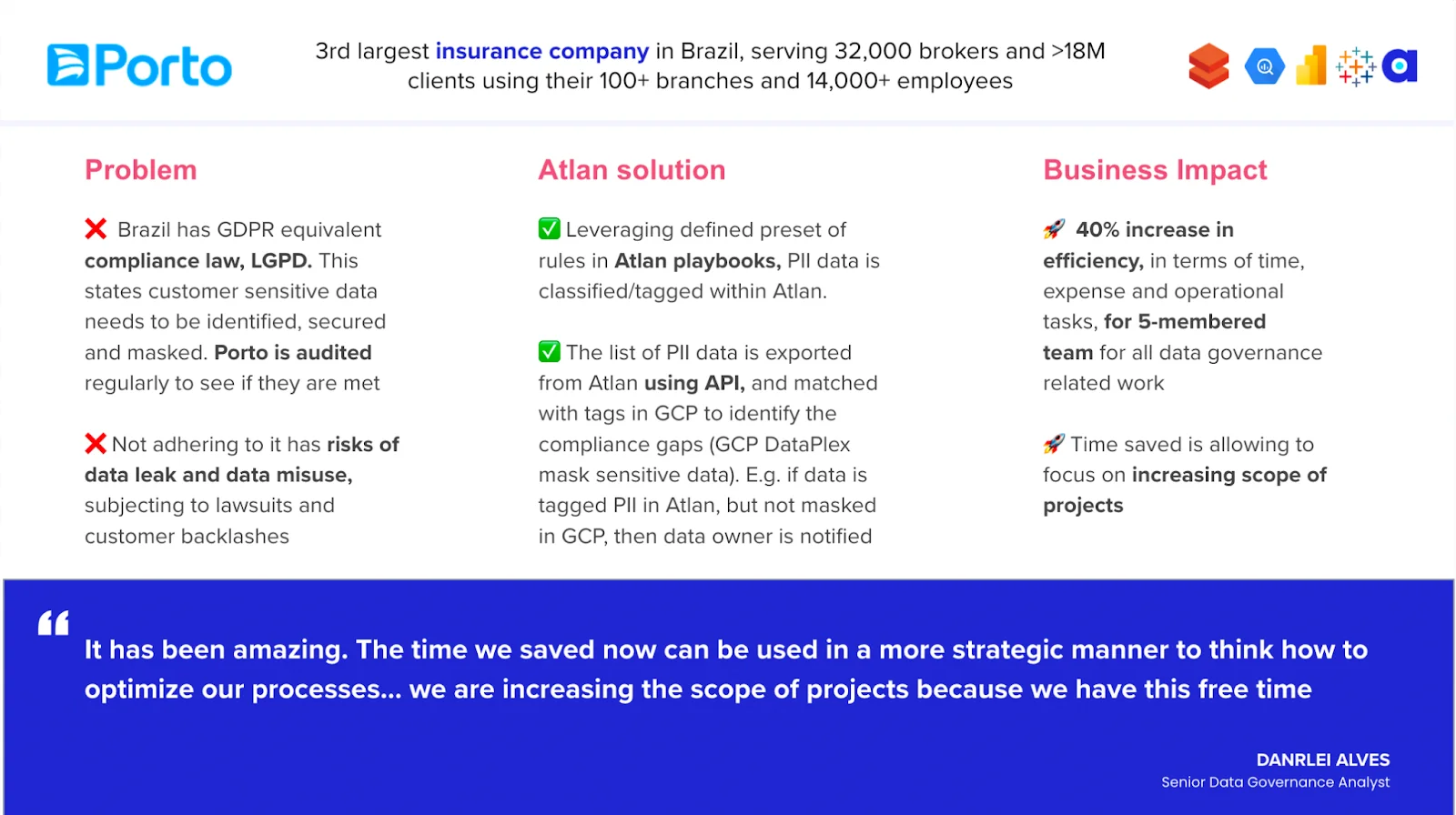

How Porto scaled data classification and compliance with automation

With 13 million customers across a diverse set of insurance and banking products and services, Porto is Brazil’s third-largest insurance company.

Protecting sensitive information is critical for Porto to comply with LGPD, Brazil’s GDPR-equivalent law, which requires organizations to identify, secure, and mask sensitive customer data.

Porto’s 5-member data governance team manages a vast data estate of over 1 million assets. To extend their reach across such a large environment, Porto uses Atlan Playbooks—rule-based automation that scales classification, tagging, and compliance workflows.

Here’s how it works:

- Porto configured preset rules in Atlan Playbooks to detect patterns in asset names and descriptions for PII classification.

- These classifications were cross-referenced with GCP Dataplex logs to verify that sensitive data was properly masked.

- If a data asset was classified as PII but not masked appropriately, asset owners were automatically alerted for corrective action.
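The three steps above can be sketched generically. This is not Atlan’s Playbooks API or Porto’s actual configuration: the regex patterns, the asset records, and the `masked_assets` set standing in for masking logs are all hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical name/description patterns that suggest PII.
PII_PATTERNS = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"cpf", r"e[-_]?mail", r"phone", r"birth")
]


@dataclass
class Asset:
    name: str
    description: str
    owner: str


def looks_like_pii(asset: Asset) -> bool:
    """Step 1: rule-based detection on asset names and descriptions."""
    text = f"{asset.name} {asset.description}"
    return any(pattern.search(text) for pattern in PII_PATTERNS)


def unmasked_pii_alerts(assets: list[Asset], masked_assets: set[str]) -> list[str]:
    """Steps 2 and 3: cross-check against masking records and build owner alerts."""
    return [
        f"Notify {asset.owner}: {asset.name} is classified as PII but is not masked"
        for asset in assets
        if looks_like_pii(asset) and asset.name not in masked_assets
    ]


# Hypothetical inventory and masking records.
assets = [
    Asset("customer_email", "Primary contact e-mail", "ana"),
    Asset("customer_cpf", "Brazilian taxpayer ID", "bruno"),
    Asset("order_total", "Order amount in BRL", "carla"),
]
masked_assets = {"customer_email"}

alerts = unmasked_pii_alerts(assets, masked_assets)
```

In this toy inventory, only `customer_cpf` triggers an alert: it matches a PII pattern and has no masking record. Name-based rules like these are cheap to run across a large estate, but they depend on consistent naming conventions.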

This automation-driven workflow led to a 40% increase in operational efficiency for the governance team, freeing up time for higher-value governance initiatives.

“This is a 40% reduction of five people’s time. We’re using the time savings to focus on optimizing our processes and upleveling the type of work we are doing.” - Danrlei Alves, Senior Data Governance Analyst

How Porto scaled LGPD compliance with automation - Image by Atlan.

Wrapping up

Choosing the right data classification tool is about scaling trust, governance, and compliance across a fragmented, fast-moving data landscape. Enterprises need tools that can handle real-world complexity: hybrid systems, regulatory shifts, business growth, and AI acceleration.

Modern data catalogs like Atlan centralize and automate classification, while connecting it seamlessly to broader governance and compliance ecosystems.

Data classification tools: Frequently asked questions (FAQs)

1. What is a data classification tool?

A data classification tool identifies, labels, and categorizes data assets based on business, security, and regulatory requirements, enabling secure access, governance, and compliance.

2. What are the benefits of using data classification tools?

Data classification tools bring clarity to complex data estates, strengthen regulatory compliance, improve data security, streamline audits, enable responsible data use, and lay the foundation for AI-readiness across the enterprise.

3. What are the key capabilities a modern data classification tool should offer?

Essential capabilities include:

- Tagging and labeling automation

- Support for multiple data types

- Lineage-aware propagation

- Bi-directional sync based on source metadata

- Governance integration

- Extensibility and interoperability across hybrid environments

4. How does metadata-based classification differ from content scanning?

Metadata-based classification uses asset context (e.g., owners, domains, tags), whereas content scanning inspects the data itself. The metadata-based approach is more scalable and governance-aligned, and it supplies the broader business context that content scanning alone misses.

5. Why is bi-directional sync with source systems important?

Bi-directional sync ensures that changes to classification or metadata in source systems (like Snowflake or dbt) are automatically reflected in the governance platform, maintaining consistency and reducing manual effort.

6. How can automation improve data classification workflows?

Automation minimizes manual tagging, speeds up classification across millions of assets, ensures compliance, and frees up governance teams to focus on optimization and strategic initiatives.

7. What are some examples of data classification tools?

Atlan is an example of a modern data catalog that simplifies and scales data classification. It offers features such as:

- Rule-based automation through Playbooks

- Integration with external classifiers

- Granular tag-based classification

- Lineage-aware propagation of classifications

These features enable enterprises to classify and govern data efficiently across cloud and on-premise systems.



Data classification tools: Related reads

- Data Classification: Definition, Types, Examples, Tools

- Data Classification and Tagging: Marie Kondo Your Data Catalog

- What is Data Governance? Its Importance, Principles & How to Get Started?

- Data Governance and Compliance: An Act of Checks & Balances

- Data Governance and GDPR: A Comprehensive Guide to Achieving Regulatory Compliance

- Data Compliance Management in 2025

- Enterprise Data Governance Basics, Strategy, Key Challenges, Benefits & Best Practices

- Data Governance in Banking: Benefits, Challenges, Capabilities

- Financial Data Governance: Strategies, Trends, Best Practices

- BCBS 239 Data Governance: What Banks Need to Know in 2025

- Data Governance & Business Intelligence: Why Their Integration Matters and How It is Crucial for Business Success?

- Unified Control Plane for Data: The Future of Data Cataloging