Apache Iceberg: All You Need to Know About This Open Table Format in 2025


Apache Iceberg is an open table format for managing and organizing data in data lakes and data lakehouses at scale.


Table formats are critical to data lakehouse implementations as they aim to provide key relational database-like features, such as ACID transactions, consistent reads, and concurrency control.

This article explores the key ideas behind Apache Iceberg, its architecture, use cases, and alternatives.

Table of Contents

- What is Apache Iceberg?

- Why did Netflix develop Apache Iceberg?

- Apache Iceberg architecture: 5 key components

- 6 essential Apache Iceberg use cases

- Apache Iceberg alternatives

- Apache Iceberg data management: The need for a metadata control plane

- Atlan + Iceberg integration via Polaris

- Summary

- Apache Iceberg: Related reads

What is Apache Iceberg?

Apache Iceberg is a table format that provides an open specification for managing a “large, slow-changing collection of files in a distributed file system or key-value store as a table.”

“[Iceberg] is designed to improve on the de-facto standard table layout built into Apache Hive, Presto, and Apache Spark.” - Iceberg proposal to the ASF (Apache Software Foundation)

It’s important to note that Iceberg doesn’t define a new file format. All data is stored in Apache Avro, Apache ORC, or Apache Parquet files.

A quick note regarding file and table formats

Before looking at how Apache Iceberg works, it helps to understand the difference between file formats and table formats:

- A file is an object that stores data. A file format specification describes how data is stored and managed on disk or in memory.

- A table is an object that abstracts, manages, and organizes many files to give you the impression of a single, easily accessible data object. A table format specification describes how files (that contain data) are managed and organized on disk and in memory.

Apache Iceberg: Versions and compatibility

There have been two versions of the spec, v1 and v2, and both are widely used. The next version, v3, was still under active development and not yet widely adopted at the time of writing.

The Apache Iceberg specification supports three immutable file formats (Parquet, Avro, and ORC) to different degrees, depending on the version of the spec. So, if your data resides in any of these file formats, you can use Apache Iceberg to build a data lake or a data lakehouse at scale.

Iceberg then handles the metadata layer, including schema evolution, partitioning, transaction support, point-in-time querying, and query optimization.
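To make the schema-evolution part of this concrete: Iceberg assigns every column a unique field ID and resolves columns by ID rather than by name or position. The sketch below is a hypothetical, stdlib-only model of that idea; `Field`, `project`, and the sample row are illustrative names, not Iceberg's API, and real readers apply the same ID-based matching to columns inside Parquet, ORC, or Avro files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    field_id: int  # stable ID, assigned once and never reused
    name: str      # display name, free to change


def project(row_by_id: dict[int, object], schema: list[Field]) -> dict[str, object]:
    """Read a stored row through the *current* schema.

    Columns are matched by field ID, so a rename needs no data rewrite,
    and columns added after the file was written read as None.
    """
    return {f.name: row_by_id.get(f.field_id) for f in schema}


# Hypothetical row from a data file written under the original schema.
old_file_row = {1: 42, 2: "Ada"}

# v1: the schema the file was written with.
schema_v1 = [Field(1, "id"), Field(2, "name")]
# v2: rename "name" -> "full_name" and add "email" with a fresh ID.
schema_v2 = [Field(1, "id"), Field(2, "full_name"), Field(3, "email")]
# v3: drop field 2, then add a *new* "name" column with ID 4.
schema_v3 = [Field(1, "id"), Field(3, "email"), Field(4, "name")]

print(project(old_file_row, schema_v1))  # {'id': 42, 'name': 'Ada'}
print(project(old_file_row, schema_v2))  # {'id': 42, 'full_name': 'Ada', 'email': None}
print(project(old_file_row, schema_v3))  # {'id': 42, 'email': None, 'name': None}
```

The v3 case shows why ID-based tracking prevents "zombie data": the re-added `name` column gets a new ID, so values from the dropped column do not reappear.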

Why did Netflix develop Apache Iceberg?

Apache Iceberg was originally developed by Netflix to solve issues related to transaction guarantees, read and write optimizations, schema evolution, and point-in-time lookups, among other things.

Netflix began developing Iceberg in 2017 and, seeing its potential for wide adoption, donated the project to the Apache Software Foundation in 2018. Iceberg became a top-level project in 2020 and is now supported by and integrated with data platforms and query engines like ClickHouse, Snowflake, Databricks, Dremio, Starburst, Tabular, and VeloDB.

Before using Apache Iceberg for your workloads, you should also understand the reasons behind its inception and some of the problems it solves.

Before Apache Iceberg, the most widely used alternative was Apache Hive, which became a core part of the Hadoop ecosystem after Facebook created it in 2007 to overcome the challenges of querying data stored in HDFS using MapReduce.

With the evolution of data engineering in the 2010s and the increasing variety of workloads that were expected to be supported by data lakes (and later data lakehouses), a better approach was needed to manage files and overcome the following challenges:

- Lack of support for ACID transactions

- Difficult schema and partition evolution

- Inefficient partition management and pruning

- Missing point-in-time querying capabilities

“Without atomic commits, every change to a Hive table risks correctness errors elsewhere, and so automation to fix problems was a pipe dream and maintenance was left to data engineers. Iceberg tackled atomicity to make automation possible, even in cloud object stores.” - Ryan Blue, co-creator of Iceberg

With that in mind, let’s look at an overview of Apache Iceberg’s architecture that allows it to overcome the challenges above.

Apache Iceberg architecture: 5 key components

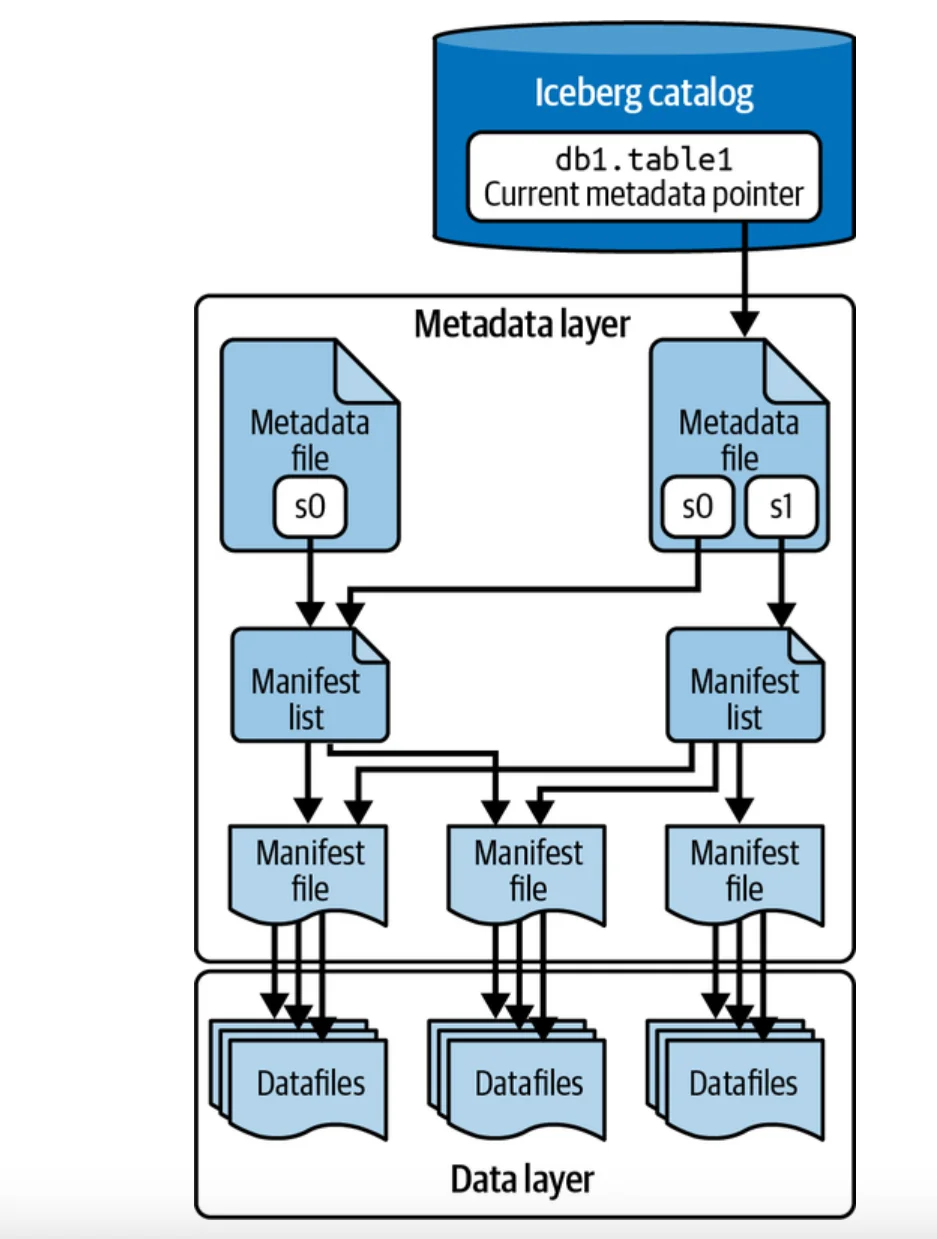

Apache Iceberg uses the following five components to manage and organize files efficiently:

- Iceberg catalog: The Iceberg catalog is the “library” that keeps track of tables and views. For each table, it stores a pointer to the table’s current metadata file, and committing a change means atomically swapping that pointer to a new metadata file.

- Metadata file: The state of a table is maintained in metadata files. Any change to the table state creates a new metadata file, which tracks the table’s schema, partitioning, and snapshots.

- Manifest list: Each snapshot has one manifest list, which lists all the manifest files that make up that snapshot. For each manifest, it also stores summary information, such as partition value ranges and the number of added, existing, and deleted data files.

- Manifest file: A manifest file lists data files (stored in formats like Parquet, ORC, and Avro) along with metadata about each one, such as column-level statistics and partition information.

- Data file: Data files store the actual rows and are tracked by manifest files. The data in a snapshot is the union of all the data files listed in its manifests.

The architecture of an Apache Iceberg table - Source: O’Reilly Media.
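To see how these pieces fit together on the read path, here is a heavily simplified, stdlib-only model of the hierarchy. It is a hypothetical illustration rather than Iceberg's API: real tables store table metadata as JSON files and manifest lists and manifests as Avro files, and every class and function name below is made up for this sketch.

```python
from dataclasses import dataclass


@dataclass
class DataFile:
    path: str          # e.g. a Parquet, ORC, or Avro file
    record_count: int


@dataclass
class Manifest:
    path: str
    data_files: list[DataFile]


@dataclass
class Snapshot:
    snapshot_id: int
    manifests: list[Manifest]  # stands in for this snapshot's manifest list


@dataclass
class TableMetadata:
    current_snapshot_id: int
    snapshots: dict[int, Snapshot]


# Hypothetical catalog: table name -> current table metadata
# (a real catalog stores the *location* of the current metadata file).
catalog: dict[str, TableMetadata] = {}


def data_files_for(table: str) -> list[str]:
    """Resolve catalog -> metadata file -> snapshot -> manifests -> data files."""
    metadata = catalog[table]
    snapshot = metadata.snapshots[metadata.current_snapshot_id]
    # A snapshot's data is the union of the data files in all of its manifests.
    return [f.path for m in snapshot.manifests for f in m.data_files]


m1 = Manifest("m1.avro", [DataFile("a.parquet", 100), DataFile("b.parquet", 50)])
m2 = Manifest("m2.avro", [DataFile("c.parquet", 75)])
catalog["db.events"] = TableMetadata(
    current_snapshot_id=2,
    # Snapshot 2 reuses manifest m1 and adds m2 for newly appended data.
    snapshots={1: Snapshot(1, [m1]), 2: Snapshot(2, [m1, m2])},
)

print(data_files_for("db.events"))  # ['a.parquet', 'b.parquet', 'c.parquet']
```

Snapshot 2 reuses manifest `m1` instead of rewriting it. Iceberg appends can likewise add a new manifest while keeping existing ones, so a commit only writes new metadata, and older snapshots stay readable.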

Note that Iceberg lets you use the catalog implementation of your choice. You can build a custom catalog or connect to an existing one through the REST catalog API or the JDBC catalog.

You’ll likely end up using one of the more common options, such as the Hive Metastore or Apache Nessie. For cloud platform-specific implementations, you can also choose something like the AWS Glue Data Catalog as the base technical catalog for Iceberg.
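Whichever catalog you choose, its essential job during a write is the same: atomically swap the table's pointer from the old metadata file to the new one, and reject the swap if another writer committed first. The sketch below models that optimistic-concurrency loop with the standard library only. `InMemoryCatalog`, `commit`, and the `vN.metadata.json` naming are hypothetical simplifications: real metadata file names typically also include a UUID, and real writers validate and re-apply their changes against the new base before retrying.

```python
import threading
from typing import Callable


class InMemoryCatalog:
    """Hypothetical catalog mapping a table name to its current metadata file.

    Real catalogs (Hive Metastore, Nessie, AWS Glue, REST) each implement the
    atomic swap with their own locking or conditional-update mechanism.
    """

    def __init__(self) -> None:
        self._pointers: dict[str, str] = {}
        self._lock = threading.Lock()

    def create(self, table: str, metadata_location: str) -> None:
        with self._lock:
            self._pointers[table] = metadata_location

    def current(self, table: str) -> str:
        with self._lock:
            return self._pointers[table]

    def swap(self, table: str, expected: str, new: str) -> bool:
        """Point `table` at `new` only if it still points at `expected`."""
        with self._lock:
            if self._pointers.get(table) != expected:
                return False  # another writer committed first
            self._pointers[table] = new
            return True


def next_version(location: str) -> str:
    # Simplified naming: "v1.metadata.json" -> "v2.metadata.json".
    n = int(location.split(".")[0].lstrip("v"))
    return f"v{n + 1}.metadata.json"


def commit(catalog: InMemoryCatalog, table: str,
           write_metadata: Callable[[str], str], max_retries: int = 3) -> str:
    """Optimistic commit: read the base, write new metadata, then try to swap.

    On conflict, re-read the latest base and try again.
    """
    for _ in range(max_retries + 1):
        base = catalog.current(table)
        new = write_metadata(base)  # writes a new metadata file derived from `base`
        if catalog.swap(table, base, new):
            return new
    raise RuntimeError(f"commit to {table} failed after {max_retries} retries")


catalog = InMemoryCatalog()
catalog.create("db.events", "v1.metadata.json")
state = {"raced": False}


def racing_writer(base: str) -> str:
    # Simulate another writer committing between our read and our swap,
    # once, so that our first swap attempt fails and we retry.
    if not state["raced"]:
        state["raced"] = True
        catalog.swap("db.events", base, next_version(base))
    return next_version(base)


result = commit(catalog, "db.events", racing_writer)
print(result)  # v3.metadata.json
```

Because readers only ever follow the pointer, they see either the old metadata file or the new one, never a half-finished commit. This is what gives Iceberg atomic commits even on object stores that lack atomic renames.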

6 essential Apache Iceberg use cases

“If you’re looking at Iceberg from a data lake background, its features are impressive: queries can time travel, transactions are safe so queries never lie, partitioning (data layout) is automatic and can be updated, schema evolution is reliable – no more zombie data!” - Ryan Blue, co-creator of Iceberg

Most use cases of traditional data lakes that evolved from the Hadoop ecosystem still apply to Apache Iceberg, but with greater flexibility, scale, and efficiency thanks to its architecture.

Here are some key use cases where Apache Iceberg improves substantially on older alternatives like Apache Hive:

- Heavy analytics workloads: Iceberg removes directory listing, the key bottleneck that limited Hive for read-heavy workloads. Because Iceberg tracks individual data files in manifests and prunes them using metadata, it can serve analytics and read-heavy workloads at scale.

- Point-in-time querying: When you want to query your data as it existed at a given point in time, Iceberg lets you read an earlier snapshot. In engines such as Spark SQL and Trino, you can use AS OF syntax (for example, TIMESTAMP AS OF or VERSION AS OF) to access a point-in-time snapshot.

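- Schema evolution: While schema evolution was possible in Hive, it took a huge amount of effort and overhead. Iceberg supports adding, dropping, renaming, and reordering columns, as well as widening column types, as metadata-only changes that don’t rewrite existing data files, because it tracks columns by unique IDs rather than by name or position.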

- Support for transactions: Before Iceberg, full transactional support wasn’t possible without significant customization and overhead. The need for ACID properties arose as data lakes saw more concurrent read and write workloads, and providing consistent reads to users and systems became increasingly important. Iceberg achieves this with snapshot isolation: every read sees a single committed snapshot, and writers commit by atomically swapping the table’s metadata pointer.

- Query engine flexibility: With the move toward open data lake architectures, data engineers and analysts needed the freedom to choose query engines, such as Trino, Presto, Spark, and Dremio, based on their use cases. Because Iceberg is an open specification, these engines can all work with the same tables.

- Advanced filtering and pruning: Reporting, analytics, and business intelligence use cases involve a lot of on-demand data filtering. Iceberg supports this with efficient partition pruning and with file-level column statistics (such as minimum and maximum values) stored in manifests, which let engines skip files that can’t match a filter.
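The point-in-time and filtering use cases both come down to planning a scan from metadata alone, before any data file is opened. The following stdlib-only sketch is a hypothetical simplification, not Iceberg's API: `snapshot_as_of` picks the latest snapshot committed at or before a timestamp (real engines resolve `TIMESTAMP AS OF` using the table's snapshot history), and `prune` skips data files whose per-column min/max range, as recorded in manifests, cannot overlap the filter.

```python
from dataclasses import dataclass


@dataclass
class DataFile:
    path: str
    lower: dict[str, int]  # per-column minimum value in this file
    upper: dict[str, int]  # per-column maximum value in this file


@dataclass
class Snapshot:
    snapshot_id: int
    timestamp_ms: int
    files: list[DataFile]


def snapshot_as_of(snapshots: list[Snapshot], ts_ms: int) -> Snapshot:
    """Time travel: the latest snapshot committed at or before `ts_ms`."""
    candidates = [s for s in snapshots if s.timestamp_ms <= ts_ms]
    if not candidates:
        raise ValueError("no snapshot exists at or before the requested time")
    return max(candidates, key=lambda s: s.timestamp_ms)


def prune(files: list[DataFile], column: str, lo: int, hi: int) -> list[str]:
    """Keep only files whose [min, max] range for `column` overlaps [lo, hi]."""
    return [f.path for f in files if f.upper[column] >= lo and f.lower[column] <= hi]


# Hypothetical table: one file per month, with a day-of-year column.
jan = DataFile("jan.parquet", {"day": 1}, {"day": 31})
feb = DataFile("feb.parquet", {"day": 32}, {"day": 59})
mar = DataFile("mar.parquet", {"day": 60}, {"day": 90})
snapshots = [
    Snapshot(1, 1_000, [jan]),
    Snapshot(2, 2_000, [jan, feb]),
    Snapshot(3, 3_000, [jan, feb, mar]),
]

snap = snapshot_as_of(snapshots, 2_500)
print(snap.snapshot_id)                  # 2
print(prune(snap.files, "day", 40, 45))  # ['feb.parquet']
```

Only `feb.parquet` survives, so an engine would read one file instead of two. In real tables the same check also runs earlier, against the partition ranges in the manifest list, so entire manifests can be skipped.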

Apache Iceberg alternatives

Alongside Apache Iceberg’s development and adoption, other open table formats such as Apache Hudi and Apache Paimon were also developed.

Here’s a quick summary of all the major table formats for implementing data lakes and data lakehouses:

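- Apache Hive: Created at Facebook in 2007 and an Apache top-level project since 2010, Hive brought SQL querying to data stored in HDFS, and its directory-based table layout became the de facto table format of the Hadoop ecosystem. The Hive Metastore component of Apache Hive is still widely used by various projects, including Apache Iceberg, which can use it as a catalog.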

- Apache Hudi: Hudi was initially developed at Uber in 2017 to solve scalability issues with real-time and batch processing workloads. Later, the project was donated to the ASF and is now a top-level project.

- Delta Lake: Delta Lake was developed by Databricks in 2019 and is tightly coupled with Apache Parquet as its underlying file format. It also offers interoperability with other table formats like Hudi and Iceberg via UniForm.

- Apache Paimon: Paimon was initially developed at Alibaba Cloud to address the challenges of streaming data lakes that teams faced when using Hive, Hudi, and even Iceberg.

Data platforms like Databricks and Snowflake have also implemented their proprietary table formats.

Still, because of the rising popularity of open table formats, they have started supporting interoperability between proprietary formats and formats like Apache Iceberg and Apache Hudi, even creating technical catalogs that support these table formats.

Snowflake’s Polaris (built around Iceberg), which is now an incubating Apache project, and Databricks’s UniForm (which lets Delta Lake tables be read as Iceberg or Hudi tables) are both examples of this shift.

Apache Iceberg data management: The need for a metadata control plane

While Iceberg has a technical catalog that serves as the metadata layer in its architecture, it doesn’t interact with other non-Iceberg components of your data lakehouse stack.

In reality, one data source, file format, or table format is often insufficient to serve an enterprise’s broad set of use cases. This is why a control plane for your whole data stack is needed, where you can plug in all your data systems, including Iceberg, and manage your complete data estate from one place.

Such a control plane makes it easier for you to bring in features like data lineage, business glossary, data policies, and contracts, among others, to your stack.


In the next section, let’s see how Atlan, a control plane for data and AI, can integrate with Iceberg and help you unlock the full potential of your data assets.

Atlan + Iceberg integration via Polaris

As mentioned earlier in the article, Iceberg gives you the flexibility of picking the technical catalog of your choice. This catalog decides whether your implementation supports ACID transactions, version control, etc., and to what degree.

The most flexible catalog option that Iceberg provides is the REST catalog, which is defined by an open API specification.

Snowflake’s Polaris is one of the first fully-featured implementations of the Iceberg REST API. It provides multi-engine interoperability across various platforms, including Apache Doris, Dremio, Trino, Apache Spark, Apache Flink, etc.
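Because a REST catalog is plain HTTP, any client can talk to it. As a hedged sketch based on the Iceberg REST catalog OpenAPI specification, the code below builds the URL for the load-table endpoint (`/v1/{prefix}/namespaces/{namespace}/tables/{table}`) and fetches it with the Python standard library. The base URI, the `my_catalog` prefix, and the bearer-token handling are hypothetical; check your catalog's documentation for its actual endpoint, prefix, and authentication flow.

```python
import json
from typing import Optional
from urllib.parse import quote
from urllib.request import Request, urlopen

# The REST spec separates multipart namespace levels with the unit
# separator byte (0x1F), which is URL-encoded as %1F.
NAMESPACE_SEPARATOR = "\x1f"


def load_table_url(base_uri: str, namespace: list[str], table: str,
                   prefix: Optional[str] = None) -> str:
    """Build {base}/v1/[{prefix}/]namespaces/{namespace}/tables/{table}."""
    parts = [base_uri.rstrip("/"), "v1"]
    if prefix:  # optional, usually supplied by the catalog's config endpoint
        parts.append(quote(prefix, safe=""))
    parts += [
        "namespaces",
        quote(NAMESPACE_SEPARATOR.join(namespace), safe=""),
        "tables",
        quote(table, safe=""),
    ]
    return "/".join(parts)


def load_table(base_uri: str, namespace: list[str], table: str,
               token: Optional[str] = None, prefix: Optional[str] = None) -> dict:
    """GET the table; the JSON body includes "metadata-location" and "metadata"."""
    request = Request(load_table_url(base_uri, namespace, table, prefix),
                      headers={"Accept": "application/json"})
    if token:  # auth is catalog-specific; a bearer token is one common option
        request.add_header("Authorization", f"Bearer {token}")
    with urlopen(request) as response:
        return json.load(response)


print(load_table_url("https://catalog.example.com/api/catalog",
                     ["analytics", "sales"], "orders", prefix="my_catalog"))
# https://catalog.example.com/api/catalog/v1/my_catalog/namespaces/analytics%1Fsales/tables/orders
```

Engines such as Spark, Trino, and Flink do the equivalent internally, which is why a single REST catalog can serve all of them.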

Atlan integrates with Iceberg via Polaris. This helps you bring in all tables stored in the Iceberg table format, irrespective of location and storage engine.

Bringing together the Polaris catalog for Iceberg and Atlan’s metadata control plane enables data and AI governance across your data ecosystem.

Summary

A table format is an important component of a data lakehouse. Apache Iceberg is one of the key open table formats you can use in your data lakehouse to manage and organize the underlying files more efficiently.

This article took you through the basics of Apache Iceberg, its brief history, and why it was created. You also got an overview of Apache Iceberg’s architecture and use cases, along with a look at alternatives such as Apache Hive, Apache Hudi, Delta Lake, and Apache Paimon. Finally, you learned how Atlan integrates with Iceberg via the Polaris catalog.

To learn more, see Atlan’s official documentation.

Apache Iceberg: Related reads

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
