Simplify your Airflow data lineage with OpenLineage


Apache Airflow is an open-source batch job orchestration tool for data pipelines. It works on the foundation of DAGs (Directed Acyclic Graphs) to define upstream dependencies for workflows.

Airflow is extremely popular because of its extensible Python framework that allows you to manage your data pipelines in code. It also has a well-developed UI, which you can use to start, stop, pause, debug, monitor, and observe your workflows.

Airflow also supports tracking data lineage for the objects in your workflows. However, you shouldn’t rely entirely on Airflow’s out-of-the-box lineage solution; as the Airflow documentation says, “lineage support is very experimental and subject to change.”

OpenLineage is an open standard for metadata and lineage collection from various components of your data stack. With a common standard that all tools can use, OpenLineage reduces the effort you need to collect, maintain, and action lineage in your data ecosystem.

It integrates with some of the most widely used open-source projects, such as Apache Spark, Apache Airflow, dbt, Apache Flink, Dagster, and more. OpenLineage was originally implemented with Marquez. Both OpenLineage and Marquez are now maintained under the Linux Foundation AI & Data project.


This article will take you through how OpenLineage and Airflow can be integrated to provide you with a detailed view of the data lineage across your data ecosystem under the following themes:

- Understanding Airflow data lineage

- Enhancing data lineage in Airflow using OpenLineage

- How the OpenLineage Airflow provider works

- Atlan’s Integration with OpenLineage & Airflow

Let’s get to it!

Table of contents #

- Understanding Airflow data lineage

- Enhancing data lineage in Airflow using OpenLineage

- How the OpenLineage Airflow Provider works

- Summary

- OpenLineage + Airflow data lineage integration: Related reads

Understanding Airflow data lineage #

As hinted in the introduction, Airflow’s lineage capabilities are limited, but understanding how they work helps you appreciate why an external tool like OpenLineage might be required. Airflow allows you to define inlets and outlets for your tasks. Inlets can be upstream tasks or attr-annotated objects, while outlets can only be attr-annotated objects.

Airflow allows you to send the lineage metadata to a custom lineage backend by providing an instance of LineageBackend. Using this, you can integrate with external tools, such as data cataloging, governance, quality, and monitoring tools.
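As a rough illustration of the custom backend idea, here is a minimal sketch of a LineageBackend subclass that just records what Airflow hands it. The class name LoggingLineageBackend and the in-memory records list are hypothetical, chosen for illustration; a real backend would forward the data to a catalog or governance tool instead. The fallback base class exists only so the sketch runs on a machine without Airflow installed.

```python
try:
    from airflow.lineage.backend import LineageBackend
except ImportError:
    # Fallback so the sketch runs without Airflow installed (assumption:
    # it mirrors the send_lineage signature of Airflow's base class).
    class LineageBackend:
        def send_lineage(self, operator, inlets=None, outlets=None, context=None):
            raise NotImplementedError


class LoggingLineageBackend(LineageBackend):
    """Hypothetical backend that keeps lineage records in memory."""

    def __init__(self):
        self.records = []

    def send_lineage(self, operator, inlets=None, outlets=None, context=None):
        # Store the task id together with the inlets and outlets it declared.
        self.records.append(
            {
                "task_id": getattr(operator, "task_id", None),
                "inlets": list(inlets or []),
                "outlets": list(outlets or []),
            }
        )
```

In an Airflow deployment, a class like this would be registered through the backend option in the [lineage] section of the configuration, using its full import path.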

In a typical setup, Airflow is the producer of the data lineage, as it handles all the actual data movement and transformation workflows. OpenLineage sits between Airflow and the backend where the lineage metadata is temporarily or permanently stored. This metadata is then consumed by a tool, usually one with a frontend, for lineage editing, debugging, and visualization. Let’s see how it works in the next section.

Enhancing data lineage in Airflow using OpenLineage #

If you’re using Airflow < 2.7.0, you need the openlineage-airflow package and some manual steps to set up and enable the OpenLineage + Airflow integration. On Airflow >= 2.7.0, the integration ships as an official Airflow provider, the OpenLineage Airflow Provider, rather than as an external integration, so most of that manual setup is no longer needed.

This new provider has not only removed the need to set up an integration but has also been rewritten to avoid repeated work, such as writing Extractors for unit tests. The provider is baked into the base Airflow Docker image. You can use the following command to confirm that the OpenLineage packages are present in the image:

docker run -it --entrypoint /bin/bash apache/airflow:2.7.0-python3.10 -c "pip freeze | grep openlineage"

You also need to tell OpenLineage where to send lineage and metadata events, for instance to Marquez, Amundsen, Apache Atlas, or Atlan. In Airflow, you do this in the [openlineage] section of airflow.cfg:

[openlineage]

namespace = airflow

transport = {"type": "http", "url": "http://marquez:5000"}

disabled = False

extractors =

disable_source_code = False

config_path =
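To make the shape of these settings concrete, here is a minimal sketch that parses a trimmed copy of the section with Python's standard configparser and json modules. This is not how Airflow loads its configuration internally; it only illustrates that the transport value is a JSON object with a type and a URL. The values are written unquoted because configparser keeps quote characters as part of the value, which would make the transport string invalid JSON.

```python
import configparser
import json

# Trimmed copy of the [openlineage] section, embedded as a string so the
# sketch is self-contained. The Marquez URL is the one used in the article.
CONFIG_TEXT = """
[openlineage]
namespace = airflow
transport = {"type": "http", "url": "http://marquez:5000"}
disabled = False
"""

parser = configparser.ConfigParser()
parser.read_string(CONFIG_TEXT)
section = parser["openlineage"]

namespace = section.get("namespace")
# The transport option is a JSON document stored as a single string value.
transport = json.loads(section.get("transport"))
disabled = section.getboolean("disabled")

print(namespace, transport["type"], transport["url"], disabled)
```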

Once that’s done, you’ll be ready to consume lineage metadata using the new provider. With it, you can also add OpenLineage support to your custom operators in Airflow without calling separate methods before task execution, after success, or after completion. The provider also opens up avenues for supporting integration with XCom datasets, Airflow hooks, and the TaskFlow API. To learn more, let’s dive deeper into how the OpenLineage Airflow provider works.

How the OpenLineage Airflow Provider works #

The OpenLineage + Airflow provider has a data model built around three core entities: datasets, jobs, and runs. Metadata for all these entities is represented in OpenLineage as facets. A facet is an atomic piece of metadata attached to an entity, such as information about the type of lineage event, who created the event, and what occurrence the event covered. On top of that, OpenLineage also collects and provides information about the job, the run, the inputs, and the outputs. You can also create your own custom facets by extending the base facets.
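To make the data model more tangible, here is a simplified sketch of a run event built as a plain Python dictionary. It is an illustration of the job, run, inputs, outputs, and facets described above, not output captured from the provider, and a full OpenLineage event carries more fields than shown. The helper names, the DAG and table names, the producer URL, and the hypothetical_owner facet are all made up for this example.

```python
import json
import uuid
from datetime import datetime, timezone


def dataset(namespace, name, facets=None):
    """Describe a dataset; facets hold optional metadata attached to it."""
    return {"namespace": namespace, "name": name, "facets": facets or {}}


def build_run_event(event_type, job_name, inputs, outputs, run_id=None):
    """Assemble a simplified, OpenLineage-style run event."""
    return {
        "eventType": event_type,  # for example START, COMPLETE, or FAIL
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "producer": "https://example.com/hypothetical-producer",
        "run": {"runId": run_id or str(uuid.uuid4()), "facets": {}},
        "job": {"namespace": "airflow", "name": job_name, "facets": {}},
        "inputs": inputs,
        "outputs": outputs,
    }


event = build_run_event(
    "COMPLETE",
    "hypothetical_dag.load_orders",
    inputs=[dataset("postgres://hypothetical-host:5432", "public.raw_orders")],
    outputs=[
        dataset(
            "postgres://hypothetical-host:5432",
            "public.orders",
            # Hypothetical custom facet attached to the output dataset.
            facets={"hypothetical_owner": {"team": "data-eng"}},
        )
    ],
)

print(json.dumps(event, indent=2))
```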

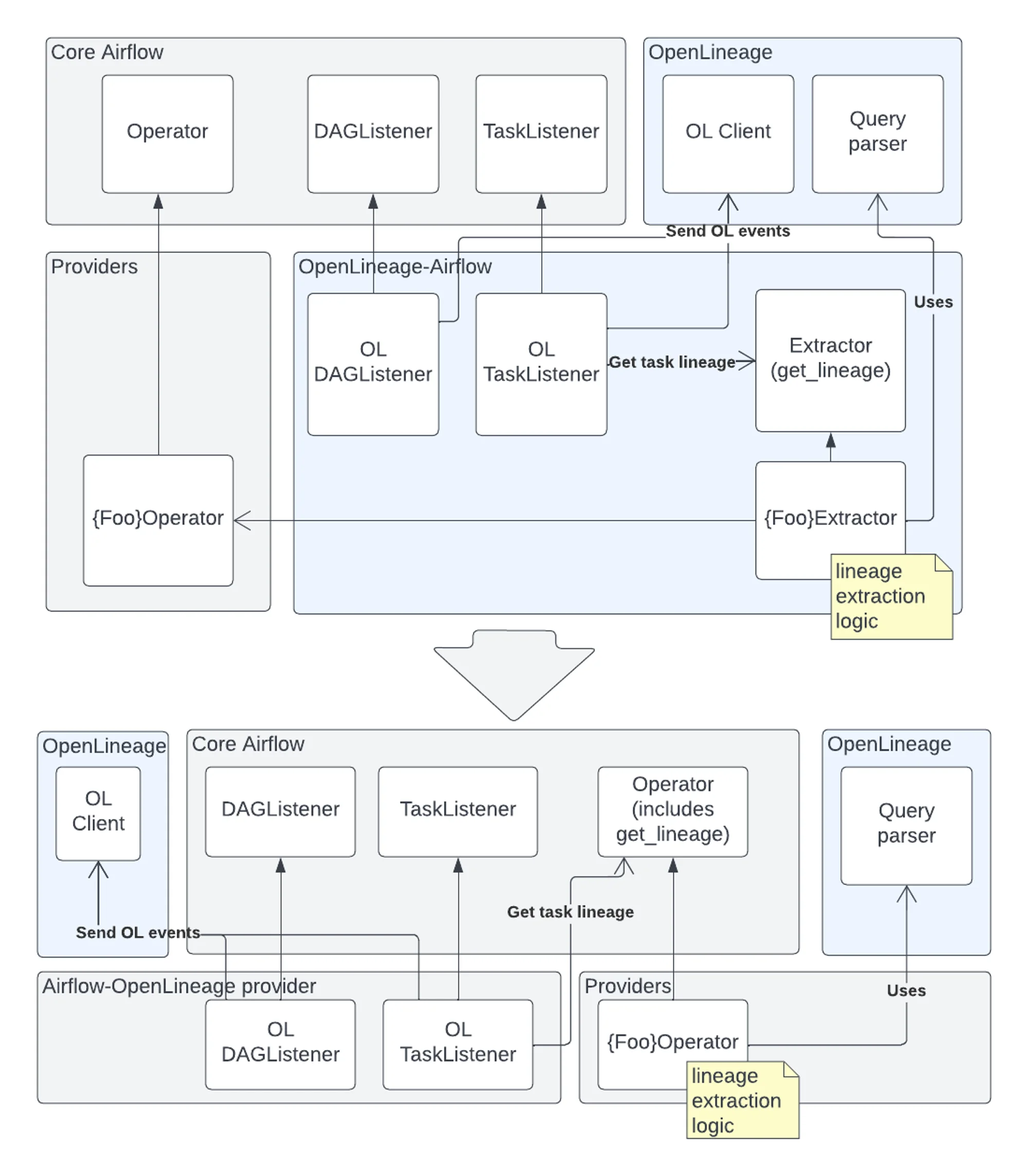

This process materializes in the lineage extraction lifecycle, which involves calling the operator’s get_openlineage_facets_on_start method, followed by executing the operator’s execute method. Finally, the lifecycle is completed by calling either the get_openlineage_facets_on_complete or the get_openlineage_facets_on_failure method. A detailed view of how the Core Airflow and OpenLineage subsystems work with the OpenLineage + Airflow provider is shown in the image below:

State Diagram for Core Airflow, OpenLineage, and OpenLineage + Airflow Provider - Image from the Airflow Improvement Proposal.
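The call order of the extraction lifecycle can be sketched in plain Python. The following is a simulation only: HypotheticalCopyOperator and run_with_lineage are stand-ins invented for this example, there are no Airflow imports, and the methods return simple dictionaries rather than the lineage objects the real provider works with. Only the three get_openlineage_facets_* method names and execute come from the description above.

```python
class HypotheticalCopyOperator:
    """Stand-in for an Airflow operator; not a real Airflow class."""

    def __init__(self, source, target, should_fail=False):
        self.source = source
        self.target = target
        self.should_fail = should_fail

    def get_openlineage_facets_on_start(self):
        # Lineage that is already known before the task runs.
        return {"inputs": [self.source], "outputs": [self.target]}

    def execute(self, context):
        if self.should_fail:
            raise RuntimeError("copy failed")
        return "copied"

    def get_openlineage_facets_on_complete(self, task_instance):
        # Lineage reported once the task has finished successfully.
        return {"inputs": [self.source], "outputs": [self.target], "status": "ok"}

    def get_openlineage_facets_on_failure(self, task_instance):
        # Lineage reported when the task raised an exception.
        return {"inputs": [self.source], "outputs": [], "status": "failed"}


def run_with_lineage(operator):
    """Call the hooks in lifecycle order and collect the resulting events."""
    events = [("START", operator.get_openlineage_facets_on_start())]
    try:
        operator.execute(context={})
    except Exception:
        events.append(
            ("FAIL", operator.get_openlineage_facets_on_failure(task_instance=None))
        )
    else:
        events.append(
            ("COMPLETE", operator.get_openlineage_facets_on_complete(task_instance=None))
        )
    return events


for label, facets in run_with_lineage(
    HypotheticalCopyOperator("raw.orders", "clean.orders")
):
    print(label, facets)
```

The try/except/else structure mirrors the either-or ending of the lifecycle: exactly one of the completion or failure hooks is called after execute.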

Support for various operators, including the most popular PythonOperator, is coming soon for the OpenLineage + Airflow provider. Meanwhile, you can still use the out-of-the-box features of this provider with data cataloging, discovery, and lineage tools that offer integration capabilities with OpenLineage and Airflow and have UI functionality to display lineage metadata.

All in all, this integration, in the form of an Airflow provider for Airflow >= 2.7.0 and a regular integration for Airflow < 2.7.0, is a great way to enhance the minimal capabilities of Airflow to track and maintain lineage metadata. The open standard from OpenLineage makes it easier to collect lineage metadata from heterogeneous data sources and get a unified view of the data pipeline.

Atlan’s Integration with OpenLineage & Airflow #

Lineage metadata, like all metadata, needs actioning. You must integrate your lineage workflows with the right tool to enable that. Atlan is a metadata activation platform that helps you make the most out of your lineage metadata. Atlan’s integration with OpenLineage and Airflow allows it to track DAGs and Tasks with status and duration filters.

The integration enables operational metadata from Airflow DAGs and from Airflow objects like Tasks, Runs, and Pods to flow seamlessly into Atlan, offering an easy interface for search, discovery, and visualization through end-to-end data lineage. All of this is powered by bringing in Airflow objects as native assets in Atlan.

Having an accurate visual representation of the lineage also helps data engineers do an impact analysis before making any code changes to a DAG, avoiding breaking changes and surprises downstream. Once Atlan brings in data assets and reconstructs the lineage graph, you can use that metadata to send notifications, raise alerts, and create Jira tickets, among other things. In essence, you are activating the metadata that you’ve captured.

Summary #

This article walked you through the latest on the OpenLineage + Airflow integration, how it works, and its benefits. You also learned about the type of metadata this integration lets you track and ingest into a third-party tool of your choice.

Finally, you learned about Atlan’s integration with OpenLineage and Airflow, which allows you to bring in Airflow objects as native assets, build lineage on top of them, and activate operational metadata to monitor, automate, and analyse the downstream impact of code changes. There’s much more to the integration. To learn more, head over to the official documentation.

OpenLineage + Airflow data lineage integration: Related reads #

- OpenLineage documentation

- OpenLineage GitHub repository

- OpenLineage: Understanding the Origins, Architecture, Features, Integrations & More

- OpenMetadata vs. OpenLineage: Primary Capabilities, Architecture & More

- How to integrate Airflow/OpenLineage

- Airflow: DAGs, Workflows as Python Code, Orchestration for Batch Workflows, and More

- Dagster vs Airflow: Data Orchestration Capabilities, UX, Setup, and Community Support

- Airflow/OpenLineage Connectivity
