Databricks Data Catalog: Native Capabilities, Benefits of Integration with Atlan, and More


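Unity Catalog is Databricks’ data catalog and built-in governance solution for data and AI. Every catalog automatically gets its own INFORMATION_SCHEMA, a SQL-standard set of views describing that catalog’s schemas, tables, columns, and other objects, which serves as a built-in technical data catalog.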

Additionally, a central catalog named system holds system tables with metadata from across your Databricks workspaces, such as audit logs, table and column lineage, job history, and more. This metadata forms the foundation of a Databricks metadata layer, accessible to all Unity Catalog-enabled workspaces.
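As a rough illustration, the helpers below build the kind of SQL you might run in a Databricks notebook to browse this metadata. The function names are hypothetical, a catalog named main is assumed in the usage example, and the three system table names used here (system.access.audit, system.access.table_lineage, system.access.column_lineage) should be checked against the system tables enabled in your account.

```python
from typing import Dict


def quote_identifier(name: str) -> str:
    """Backtick-quote an identifier, doubling embedded backticks (Spark SQL style)."""
    return "`" + name.replace("`", "``") + "`"


def list_tables_query(catalog: str) -> str:
    """Build a query against the INFORMATION_SCHEMA that every catalog carries."""
    return (
        "SELECT table_schema, table_name, table_type "
        f"FROM {quote_identifier(catalog)}.information_schema.tables "
        "ORDER BY table_schema, table_name"
    )


# A few tables in the shared system catalog (assumed to be enabled in your account).
SYSTEM_TABLES: Dict[str, str] = {
    "audit": "system.access.audit",
    "table_lineage": "system.access.table_lineage",
    "column_lineage": "system.access.column_lineage",
}


def preview_query(kind: str, limit: int = 10) -> str:
    """Build a simple preview query for one of the system tables above."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return f"SELECT * FROM {SYSTEM_TABLES[kind]} LIMIT {limit}"
```

In a Databricks notebook, where spark and display are predefined, you could then run something like display(spark.sql(list_tables_query("main"))).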


This article provides an overview of the Databricks data catalog and explores how to set up a unified control plane for metadata across your entire data stack—not just Databricks.

Table of contents

- Databricks data catalog: Native cataloging features

- Atlan as the unified metadata control plane for Databricks

- Bringing cataloging, discovery, and governance together

- How organizations are making the most of their data using Atlan

- Summary

- Related reads

Databricks data catalog: Native cataloging features

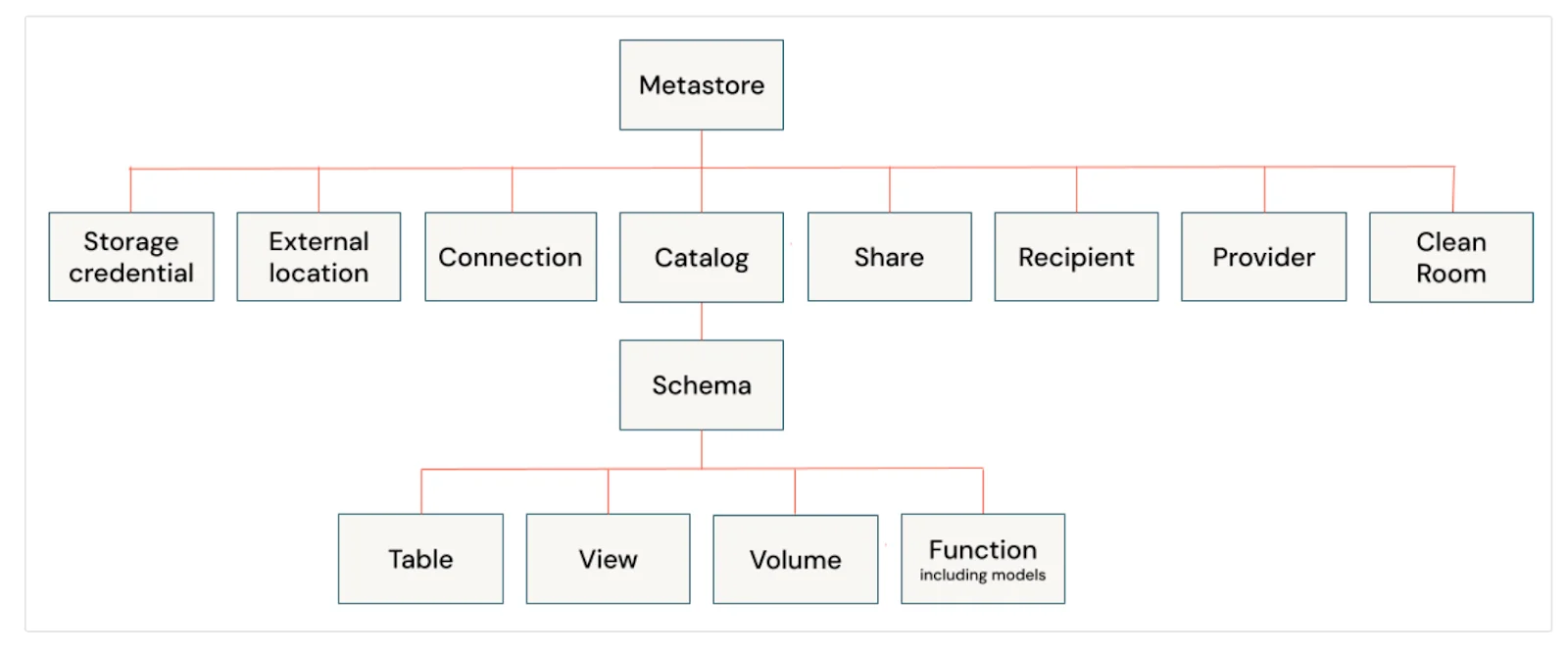

Without Unity Catalog, Databricks manages technical and operational metadata separately within each workspace. Unity Catalog brings this metadata together in a single metastore that can be shared by multiple workspaces, storing all securable data objects, such as catalogs, schemas, tables, and views, in a three-level namespace (catalog.schema.table).

Securable objects in Unity Catalog - Source: Databricks.
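To make the hierarchy concrete, here is a minimal, hypothetical Python model of the three-level namespace: it parses fully qualified names and groups them into the catalog, schema, and object tree that a metastore holds. It is a simplification that ignores backtick-quoted names containing dots, as well as other securables such as volumes and functions.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class QualifiedName:
    """A fully qualified Unity Catalog name: catalog.schema.object."""
    catalog: str
    schema: str
    name: str

    @classmethod
    def parse(cls, full_name: str) -> "QualifiedName":
        parts = full_name.split(".")
        # Exactly three non-empty parts are required.
        if len(parts) != 3 or not all(parts):
            raise ValueError(f"expected catalog.schema.object, got {full_name!r}")
        return cls(*parts)

    def __str__(self) -> str:
        return f"{self.catalog}.{self.schema}.{self.name}"


def build_hierarchy(full_names: Iterable[str]) -> Dict[str, Dict[str, List[str]]]:
    """Group fully qualified names into a {catalog: {schema: [objects]}} tree."""
    tree: Dict[str, Dict[str, List[str]]] = {}
    for qn in map(QualifiedName.parse, full_names):
        tree.setdefault(qn.catalog, {}).setdefault(qn.schema, []).append(qn.name)
    return tree
```

For example, build_hierarchy(["main.sales.orders", "dev.scratch.tmp"]) yields one entry per catalog, each mapping schema names to the objects inside them.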

Databricks offers the following native features for search, discovery, and governance (via Unity Catalog):

- Data access control: Grant and revoke access to any securable data object.

- Data isolation: Control where your data is stored, down to specific cloud storage accounts or buckets (for example, by assigning managed storage locations to individual catalogs or schemas).

- Auditing: Create audit trails for all metastore actions, capturing query history across Unity Catalog-enabled workspaces.

- Lineage: Capture and query table- and column-level lineage for workloads run in notebooks, jobs, dashboards, and queries, across compute types.

- Lakehouse Federation: Query external data sources as part of your data processing without first migrating the data, while Unity Catalog keeps governance and lineage consistent across all sources.

- Data sharing: Use the open-source Delta Sharing protocol to securely share data internally and externally, with full control over permissions.
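Access control in Unity Catalog is hierarchical. The sketch below is a deliberately simplified, hypothetical model of how a SELECT is authorized: the principal needs USE CATALOG on the catalog, USE SCHEMA on the schema (or on the catalog, which its schemas inherit), and SELECT on the table or on one of its parents, since privileges granted on a parent are inherited by the objects inside it. It ignores ownership, group membership, admin roles, row filters, and column masks; in practice, Databricks evaluates privileges itself and you manage them with SQL GRANT and REVOKE statements.

```python
from typing import Dict, Set, Tuple

# (principal, securable full name) -> privileges granted directly on that securable.
Grants = Dict[Tuple[str, str], Set[str]]


def grant(grants: Grants, principal: str, securable: str, privilege: str) -> None:
    grants.setdefault((principal, securable), set()).add(privilege)


def revoke(grants: Grants, principal: str, securable: str, privilege: str) -> None:
    grants.get((principal, securable), set()).discard(privilege)


def _held(grants: Grants, principal: str, securables: Tuple[str, ...], privilege: str) -> bool:
    """True if the privilege is granted on any of the given securables."""
    return any(privilege in grants.get((principal, s), set()) for s in securables)


def can_select(grants: Grants, principal: str, table_full_name: str) -> bool:
    """Simplified check: may `principal` read catalog.schema.table?"""
    catalog, schema, _table = table_full_name.split(".")
    schema_full = f"{catalog}.{schema}"
    return (
        _held(grants, principal, (catalog,), "USE CATALOG")
        # USE SCHEMA granted on the catalog is inherited by its schemas.
        and _held(grants, principal, (schema_full, catalog), "USE SCHEMA")
        # SELECT granted on the table, its schema, or its catalog is inherited downward.
        and _held(grants, principal, (table_full_name, schema_full, catalog), "SELECT")
    )
```

Granting SELECT at the schema level, as in grant(g, "ana", "main.sales", "SELECT"), covers every table in main.sales, which is why coarse-grained grants are convenient but deserve careful review.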

While these features enable technical cataloging within Databricks, they don’t provide a unified control plane for metadata that also spans non-Databricks systems. That’s where Atlan can help, by creating a unified metadata control plane for your entire data stack. Let’s see how that works.

Atlan as the unified metadata control plane for Databricks

As the number of data tools and technologies grows, the data ecosystem becomes more complex, and metadata that once fit in a plain-old information_schema turns into big data in its own right. It is big not only because of its volume, but because of its impact on the security, quality, discoverability, and governance of everything in your data ecosystem.

Atlan’s lakehouse approach to metadata ensures:

- End-to-end data enablement of your data ecosystem

- Automation workflows for both technical and operational workloads

Atlan builds on the native features of Unity Catalog, Databricks’ data catalog, offering an intuitive interface for everyday data tasks, such as:

- Finding, previewing, and accessing data assets

- Controlling data permissions

- Classifying data assets

- Sharing data assets internally or outside your organization in a secure manner

A Databricks + Atlan setup can help you lay the foundation for AI-ready data ecosystems. Let’s explore the specifics in the next section.

Bringing cataloging, discovery, and governance together

Built on top of the metadata crawled from the Databricks data catalog, Atlan provides the following capabilities:

- Discovery: An intuitive user interface to search and discover data assets across all the source and target systems (including Databricks).

- Governance: Governance customized to your organization’s operational model.

- Classification: Enhanced data search through certification and verification tags; Atlan uses a two-way syncing mechanism for Databricks Unity Catalog tags.

- Ownership: Support for the Unity Catalog object ownership model, helping you design and implement a data asset ownership model across your enterprise (especially useful when adopting a data mesh architecture).

- Freshness: Use Databricks’ usage metadata to automatically signal how fresh and trustworthy each asset is, building trust in your data.

- Cost optimization: Leverage the usage pattern metadata to understand the popularity of data assets while also identifying and deprecating dormant or stale assets, reducing storage and compute costs.

- Lineage: Create a journey map of all the data flowing in and out of your data platform ecosystem, allowing you to visualize end-to-end lineage (from ingestion to consumption).
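End-to-end lineage is ultimately a graph problem. As a minimal sketch, assuming lineage rows shaped like those in Databricks’ system.access.table_lineage table (with source_table_full_name and target_table_full_name columns; check the schema in your workspace), the hypothetical function below collects every table downstream of a given one, which is the core of an impact analysis. It only illustrates the idea and is not how Atlan computes lineage.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Optional, Set


def downstream_tables(rows: Iterable[Dict[str, Optional[str]]], start: str) -> Set[str]:
    """Breadth-first search over lineage edges, returning every table fed by `start`."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for row in rows:
        source = row.get("source_table_full_name")
        target = row.get("target_table_full_name")
        # Skip rows where either end of the edge is missing.
        if source and target:
            graph[source].add(target)

    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), set()):
            if nxt not in seen:  # the seen set also guards against cycles
                seen.add(nxt)
                queue.append(nxt)
    return seen
```

Running the same search on a reversed graph would give upstream dependencies instead, which is useful when tracing where a bad value came from.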

The above capabilities offer a glimpse of how Atlan can enhance your existing Databricks setup. There are other use cases centered around automation, business glossary, metadata activation, personalization, and more.
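For the cost-optimization capability listed above, the underlying idea fits in a few lines: given the last time each asset was read (which you might derive from usage or audit metadata), flag those idle for longer than a threshold as candidates for review or deprecation. The function name, input shape, and 90-day default are assumptions for illustration.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional


def find_dormant_assets(
    last_access: Dict[str, Optional[datetime]],
    now: datetime,
    max_idle_days: int = 90,
) -> List[str]:
    """Return assets not accessed within `max_idle_days`, sorted by name.

    An asset with no recorded access (None) is treated as dormant.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        name
        for name, accessed in last_access.items()
        if accessed is None or accessed < cutoff
    )
```

Treat the result as a review list rather than an automatic deletion list: some rarely read tables, such as yearly reports, may still matter.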

How organizations are making the most of their data using Atlan

Forrester recently published a Forrester Wave™ report on enterprise data catalogs, ranking Atlan as a Leader for its ability to:

- Automatically catalog the entire technology, data, and AI ecosystem

- Make the data ecosystem AI- and automation-first

- Prioritize data democratization and self-service

Atlan has helped numerous enterprises unlock the full potential of their data and leverage it for their AI use cases. Let’s look at one such instance.

Yape, the payment app built by Peru’s largest bank, uses Databricks on Azure as its core data platform. Yape wanted an easy-to-use data cataloging tool that would make data accessible to everyone on the team, not just a small group of engineers.

They chose Atlan, as it “[had] the best UI in the market right now.”

Summary

As data ecosystems become more diverse, the need for a unified control plane grows. Such a control plane becomes the one-stop destination for all of your organization’s data needs, whatever the role of the data consumer.

Atlan provides this control plane, managing governance and compliance across all systems, not just Databricks. With Atlan, your organization is better equipped to succeed in data and AI use cases.

Databricks data catalog: Related reads

- Databricks Unity Catalog: A Comprehensive Guide to Features, Capabilities, Architecture

- Data Catalog for Databricks: How To Setup Guide

- Databricks Lineage: Why is it Important & How to Set it Up?

- Databricks Governance: What To Expect, Setup Guide, Tools

- Databricks Metadata Management: FAQs, Tools, Getting Started

- Databricks Cost Optimization: Top Challenges & Strategies

- Databricks Data Mesh: Native Capabilities, Benefits of Integration with Atlan, and More

- Data Catalog: What It Is & How It Drives Business Value
